- AI & NLP

- Churn & Loyalty

- Customer Experience

- Customer Journeys

- Customer Metrics

- Feedback Analysis

- Product Experience

- Product Updates

- Sentiment Analysis

- Surveys & Feedback Collection

- Try Thematic

Welcome to the community

Qualitative Data Analysis: Step-by-Step Guide (Manual vs. Automatic)

When we conduct qualitative methods of research, need to explain changes in metrics or understand people's opinions, we always turn to qualitative data. Qualitative data is typically generated through:

- Interview transcripts

- Surveys with open-ended questions

- Contact center transcripts

- Texts and documents

- Audio and video recordings

- Observational notes

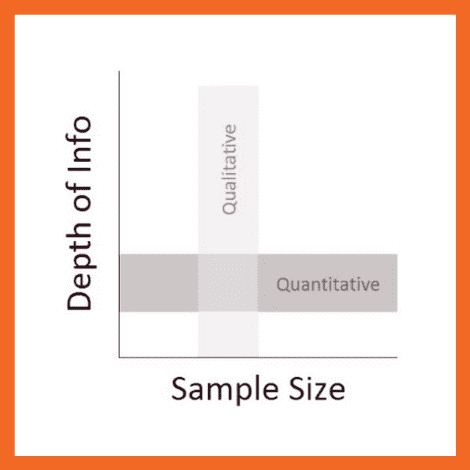

Compared to quantitative data, which captures structured information, qualitative data is unstructured and has more depth. It can answer our questions, can help formulate hypotheses and build understanding.

It's important to understand the differences between quantitative data & qualitative data . But unfortunately, analyzing qualitative data is difficult. While tools like Excel, Tableau and PowerBI crunch and visualize quantitative data with ease, there are a limited number of mainstream tools for analyzing qualitative data . The majority of qualitative data analysis still happens manually.

That said, there are two new trends that are changing this. First, there are advances in natural language processing (NLP) which is focused on understanding human language. Second, there is an explosion of user-friendly software designed for both researchers and businesses. Both help automate the qualitative data analysis process.

In this post we want to teach you how to conduct a successful qualitative data analysis. There are two primary qualitative data analysis methods; manual & automatic. We will teach you how to conduct the analysis manually, and also, automatically using software solutions powered by NLP. We’ll guide you through the steps to conduct a manual analysis, and look at what is involved and the role technology can play in automating this process.

More businesses are switching to fully-automated analysis of qualitative customer data because it is cheaper, faster, and just as accurate. Primarily, businesses purchase subscriptions to feedback analytics platforms so that they can understand customer pain points and sentiment.

We’ll take you through 5 steps to conduct a successful qualitative data analysis. Within each step we will highlight the key difference between the manual, and automated approach of qualitative researchers. Here's an overview of the steps:

The 5 steps to doing qualitative data analysis

- Gathering and collecting your qualitative data

- Organizing and connecting into your qualitative data

- Coding your qualitative data

- Analyzing the qualitative data for insights

- Reporting on the insights derived from your analysis

What is Qualitative Data Analysis?

Qualitative data analysis is a process of gathering, structuring and interpreting qualitative data to understand what it represents.

Qualitative data is non-numerical and unstructured. Qualitative data generally refers to text, such as open-ended responses to survey questions or user interviews, but also includes audio, photos and video.

Businesses often perform qualitative data analysis on customer feedback. And within this context, qualitative data generally refers to verbatim text data collected from sources such as reviews, complaints, chat messages, support centre interactions, customer interviews, case notes or social media comments.

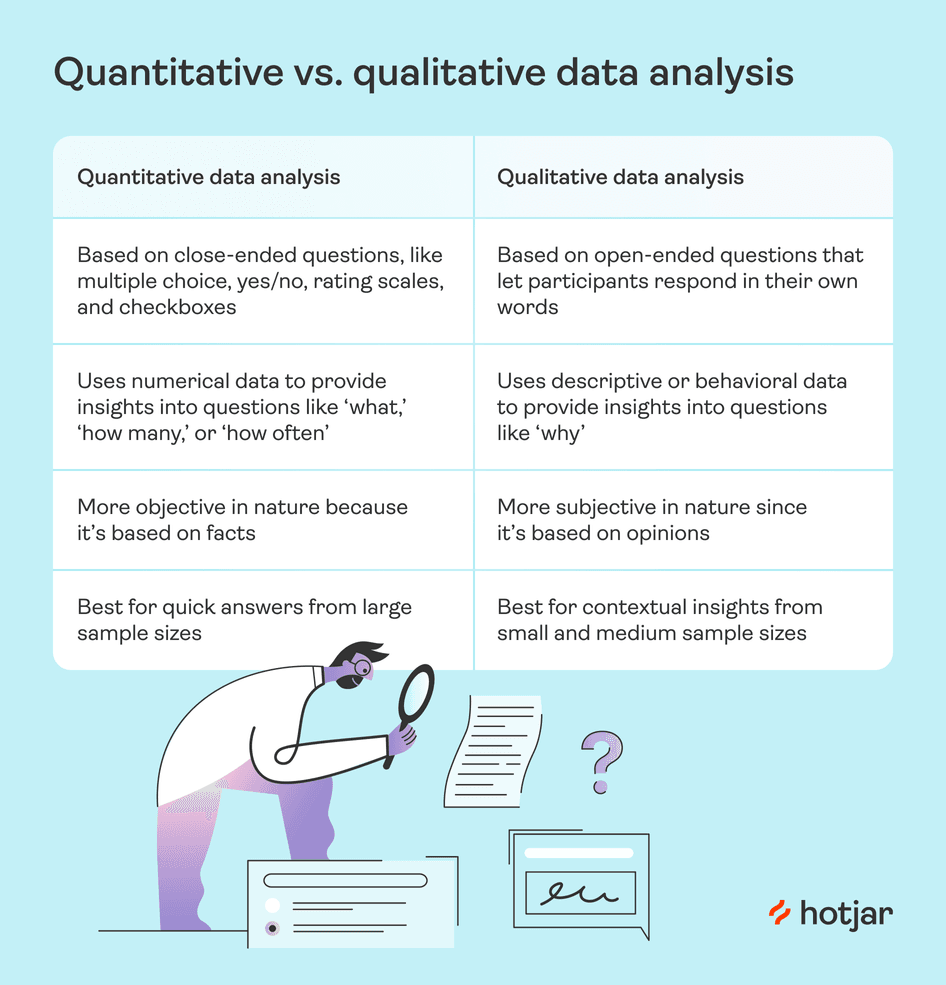

How is qualitative data analysis different from quantitative data analysis?

Understanding the differences between quantitative & qualitative data is important. When it comes to analyzing data, Qualitative Data Analysis serves a very different role to Quantitative Data Analysis. But what sets them apart?

Qualitative Data Analysis dives into the stories hidden in non-numerical data such as interviews, open-ended survey answers, or notes from observations. It uncovers the ‘whys’ and ‘hows’ giving a deep understanding of people’s experiences and emotions.

Quantitative Data Analysis on the other hand deals with numerical data, using statistics to measure differences, identify preferred options, and pinpoint root causes of issues. It steps back to address questions like "how many" or "what percentage" to offer broad insights we can apply to larger groups.

In short, Qualitative Data Analysis is like a microscope, helping us understand specific detail. Quantitative Data Analysis is like the telescope, giving us a broader perspective. Both are important, working together to decode data for different objectives.

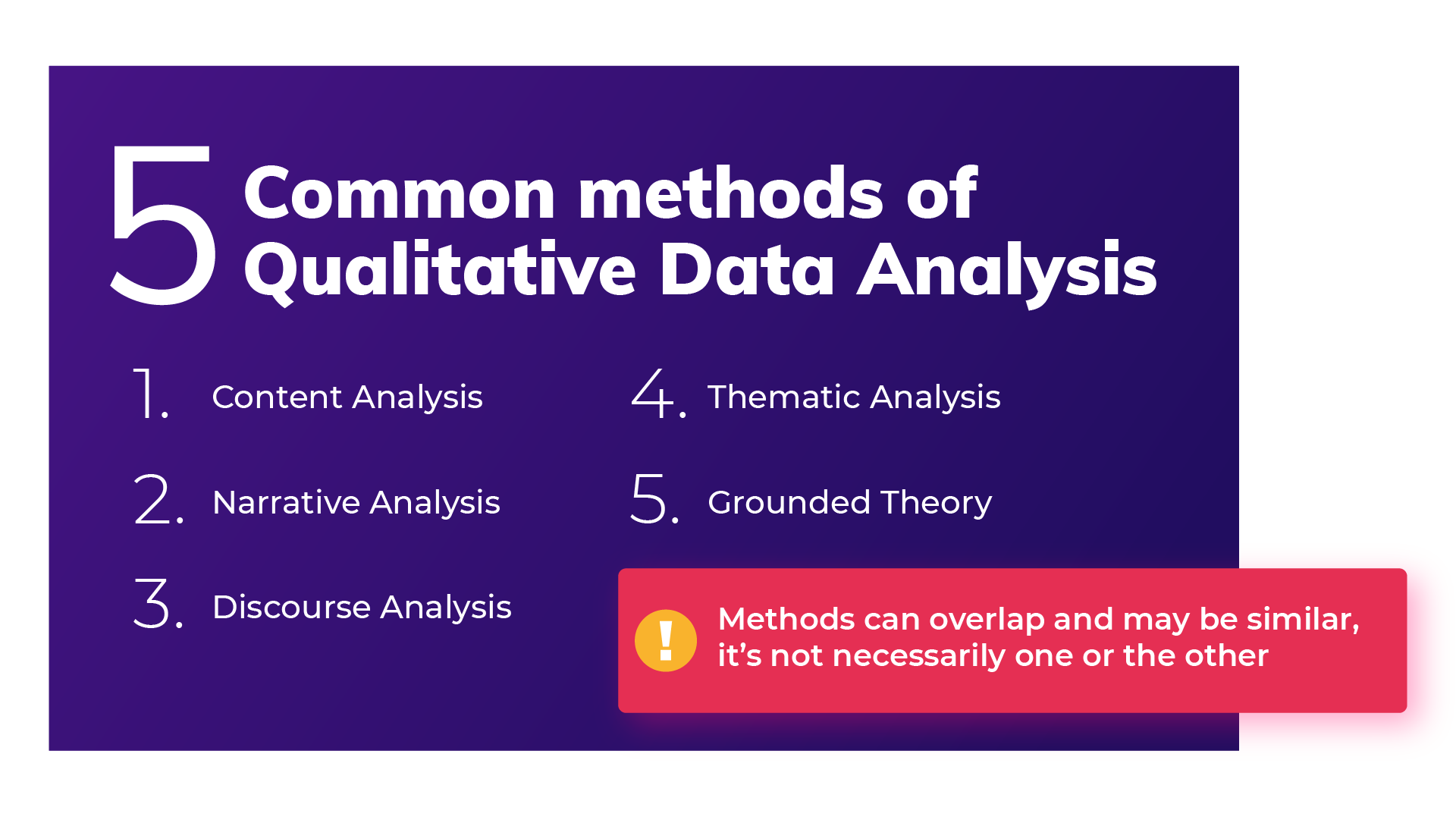

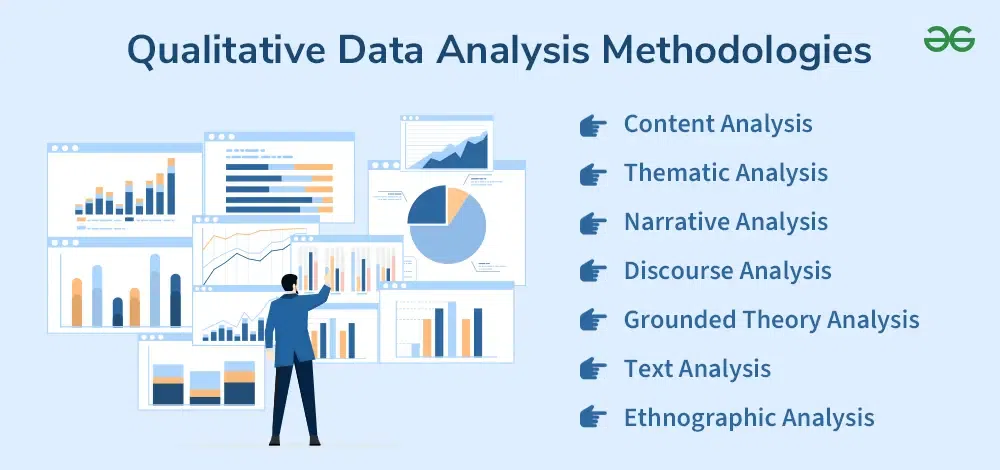

Qualitative Data Analysis methods

Once all the data has been captured, there are a variety of analysis techniques available and the choice is determined by your specific research objectives and the kind of data you’ve gathered. Common qualitative data analysis methods include:

Content Analysis

This is a popular approach to qualitative data analysis. Other qualitative analysis techniques may fit within the broad scope of content analysis. Thematic analysis is a part of the content analysis. Content analysis is used to identify the patterns that emerge from text, by grouping content into words, concepts, and themes. Content analysis is useful to quantify the relationship between all of the grouped content. The Columbia School of Public Health has a detailed breakdown of content analysis .

Narrative Analysis

Narrative analysis focuses on the stories people tell and the language they use to make sense of them. It is particularly useful in qualitative research methods where customer stories are used to get a deep understanding of customers’ perspectives on a specific issue. A narrative analysis might enable us to summarize the outcomes of a focused case study.

Discourse Analysis

Discourse analysis is used to get a thorough understanding of the political, cultural and power dynamics that exist in specific situations. The focus of discourse analysis here is on the way people express themselves in different social contexts. Discourse analysis is commonly used by brand strategists who hope to understand why a group of people feel the way they do about a brand or product.

Thematic Analysis

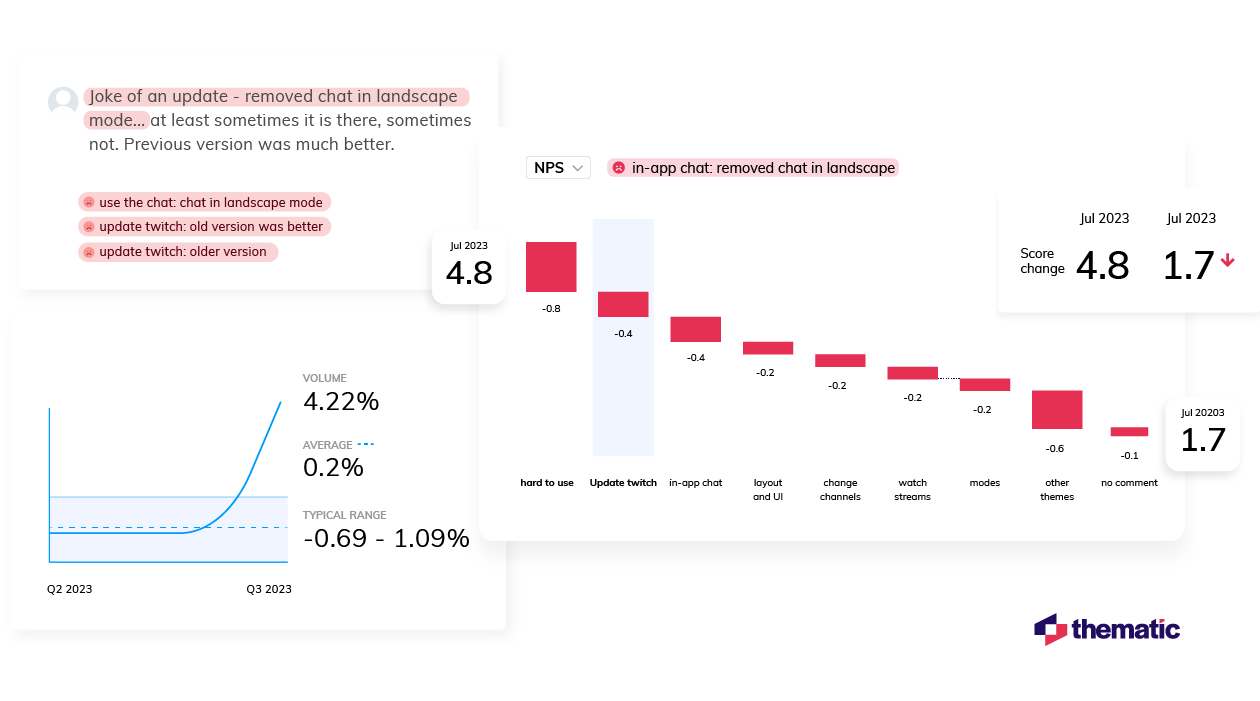

Thematic analysis is used to deduce the meaning behind the words people use. This is accomplished by discovering repeating themes in text. These meaningful themes reveal key insights into data and can be quantified, particularly when paired with sentiment analysis . Often, the outcome of thematic analysis is a code frame that captures themes in terms of codes, also called categories. So the process of thematic analysis is also referred to as “coding”. A common use-case for thematic analysis in companies is analysis of customer feedback.

Grounded Theory

Grounded theory is a useful approach when little is known about a subject. Grounded theory starts by formulating a theory around a single data case. This means that the theory is “grounded”. Grounded theory analysis is based on actual data, and not entirely speculative. Then additional cases can be examined to see if they are relevant and can add to the original grounded theory.

Challenges of Qualitative Data Analysis

While Qualitative Data Analysis offers rich insights, it comes with its challenges. Each unique QDA method has its unique hurdles. Let’s take a look at the challenges researchers and analysts might face, depending on the chosen method.

- Time and Effort (Narrative Analysis): Narrative analysis, which focuses on personal stories, demands patience. Sifting through lengthy narratives to find meaningful insights can be time-consuming, requires dedicated effort.

- Being Objective (Grounded Theory): Grounded theory, building theories from data, faces the challenges of personal biases. Staying objective while interpreting data is crucial, ensuring conclusions are rooted in the data itself.

- Complexity (Thematic Analysis): Thematic analysis involves identifying themes within data, a process that can be intricate. Categorizing and understanding themes can be complex, especially when each piece of data varies in context and structure. Thematic Analysis software can simplify this process.

- Generalizing Findings (Narrative Analysis): Narrative analysis, dealing with individual stories, makes drawing broad challenging. Extending findings from a single narrative to a broader context requires careful consideration.

- Managing Data (Thematic Analysis): Thematic analysis involves organizing and managing vast amounts of unstructured data, like interview transcripts. Managing this can be a hefty task, requiring effective data management strategies.

- Skill Level (Grounded Theory): Grounded theory demands specific skills to build theories from the ground up. Finding or training analysts with these skills poses a challenge, requiring investment in building expertise.

Benefits of qualitative data analysis

Qualitative Data Analysis (QDA) is like a versatile toolkit, offering a tailored approach to understanding your data. The benefits it offers are as diverse as the methods. Let’s explore why choosing the right method matters.

- Tailored Methods for Specific Needs: QDA isn't one-size-fits-all. Depending on your research objectives and the type of data at hand, different methods offer unique benefits. If you want emotive customer stories, narrative analysis paints a strong picture. When you want to explain a score, thematic analysis reveals insightful patterns

- Flexibility with Thematic Analysis: thematic analysis is like a chameleon in the toolkit of QDA. It adapts well to different types of data and research objectives, making it a top choice for any qualitative analysis.

- Deeper Understanding, Better Products: QDA helps you dive into people's thoughts and feelings. This deep understanding helps you build products and services that truly matches what people want, ensuring satisfied customers

- Finding the Unexpected: Qualitative data often reveals surprises that we miss in quantitative data. QDA offers us new ideas and perspectives, for insights we might otherwise miss.

- Building Effective Strategies: Insights from QDA are like strategic guides. They help businesses in crafting plans that match people’s desires.

- Creating Genuine Connections: Understanding people’s experiences lets businesses connect on a real level. This genuine connection helps build trust and loyalty, priceless for any business.

How to do Qualitative Data Analysis: 5 steps

Now we are going to show how you can do your own qualitative data analysis. We will guide you through this process step by step. As mentioned earlier, you will learn how to do qualitative data analysis manually , and also automatically using modern qualitative data and thematic analysis software.

To get best value from the analysis process and research process, it’s important to be super clear about the nature and scope of the question that’s being researched. This will help you select the research collection channels that are most likely to help you answer your question.

Depending on if you are a business looking to understand customer sentiment, or an academic surveying a school, your approach to qualitative data analysis will be unique.

Once you’re clear, there’s a sequence to follow. And, though there are differences in the manual and automatic approaches, the process steps are mostly the same.

The use case for our step-by-step guide is a company looking to collect data (customer feedback data), and analyze the customer feedback - in order to improve customer experience. By analyzing the customer feedback the company derives insights about their business and their customers. You can follow these same steps regardless of the nature of your research. Let’s get started.

Step 1: Gather your qualitative data and conduct research (Conduct qualitative research)

The first step of qualitative research is to do data collection. Put simply, data collection is gathering all of your data for analysis. A common situation is when qualitative data is spread across various sources.

Classic methods of gathering qualitative data

Most companies use traditional methods for gathering qualitative data: conducting interviews with research participants, running surveys, and running focus groups. This data is typically stored in documents, CRMs, databases and knowledge bases. It’s important to examine which data is available and needs to be included in your research project, based on its scope.

Using your existing qualitative feedback

As it becomes easier for customers to engage across a range of different channels, companies are gathering increasingly large amounts of both solicited and unsolicited qualitative feedback.

Most organizations have now invested in Voice of Customer programs , support ticketing systems, chatbot and support conversations, emails and even customer Slack chats.

These new channels provide companies with new ways of getting feedback, and also allow the collection of unstructured feedback data at scale.

The great thing about this data is that it contains a wealth of valubale insights and that it’s already there! When you have a new question about user behavior or your customers, you don’t need to create a new research study or set up a focus group. You can find most answers in the data you already have.

Typically, this data is stored in third-party solutions or a central database, but there are ways to export it or connect to a feedback analysis solution through integrations or an API.

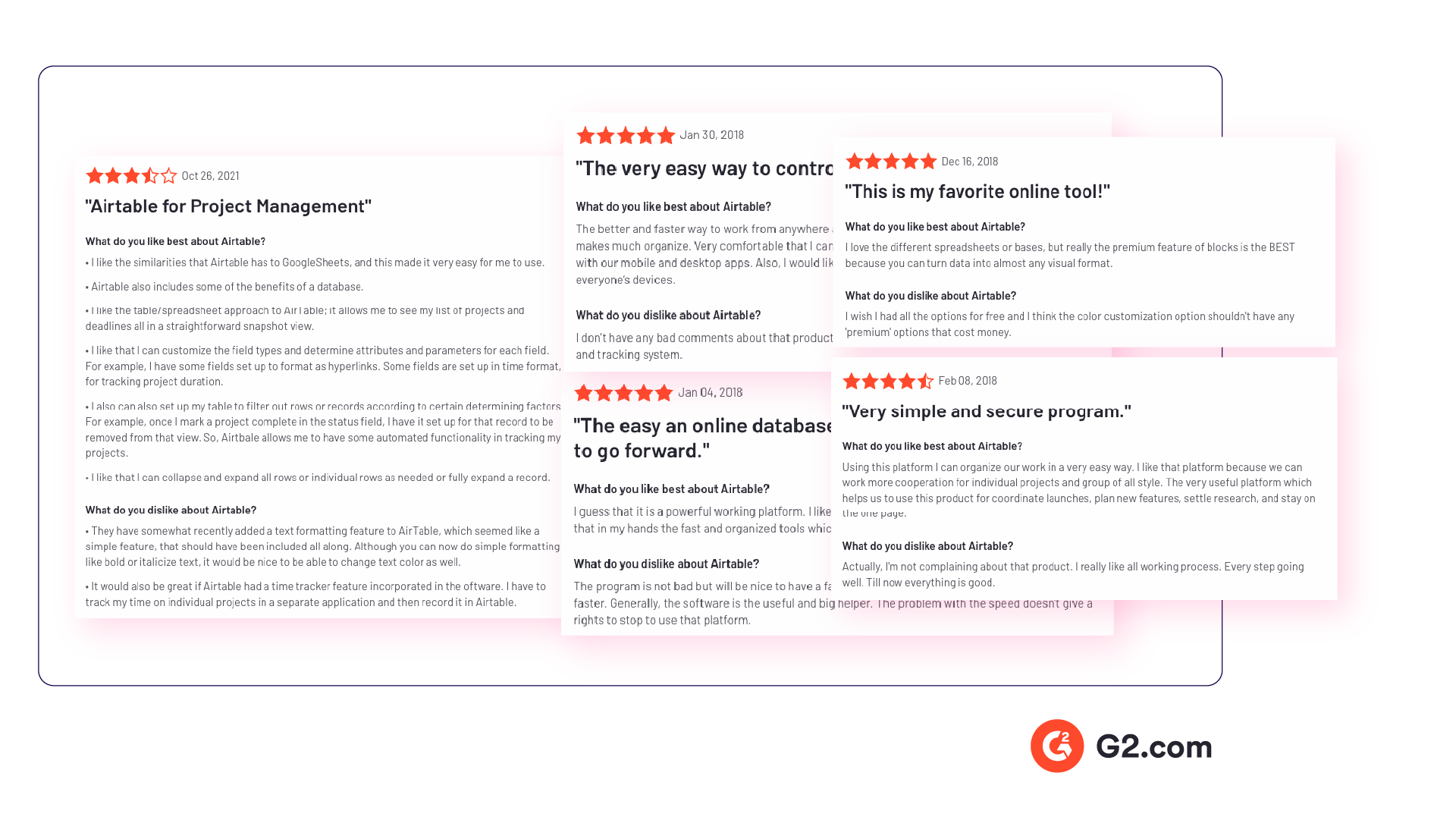

Utilize untapped qualitative data channels

There are many online qualitative data sources you may not have considered. For example, you can find useful qualitative data in social media channels like Twitter or Facebook. Online forums, review sites, and online communities such as Discourse or Reddit also contain valuable data about your customers, or research questions.

If you are considering performing a qualitative benchmark analysis against competitors - the internet is your best friend. Gathering feedback in competitor reviews on sites like Trustpilot, G2, Capterra, Better Business Bureau or on app stores is a great way to perform a competitor benchmark analysis.

Customer feedback analysis software often has integrations into social media and review sites, or you could use a solution like DataMiner to scrape the reviews.

Step 2: Connect & organize all your qualitative data

Now you all have this qualitative data but there’s a problem, the data is unstructured. Before feedback can be analyzed and assigned any value, it needs to be organized in a single place. Why is this important? Consistency!

If all data is easily accessible in one place and analyzed in a consistent manner, you will have an easier time summarizing and making decisions based on this data.

The manual approach to organizing your data

The classic method of structuring qualitative data is to plot all the raw data you’ve gathered into a spreadsheet.

Typically, research and support teams would share large Excel sheets and different business units would make sense of the qualitative feedback data on their own. Each team collects and organizes the data in a way that best suits them, which means the feedback tends to be kept in separate silos.

An alternative and a more robust solution is to store feedback in a central database, like Snowflake or Amazon Redshift .

Keep in mind that when you organize your data in this way, you are often preparing it to be imported into another software. If you go the route of a database, you would need to use an API to push the feedback into a third-party software.

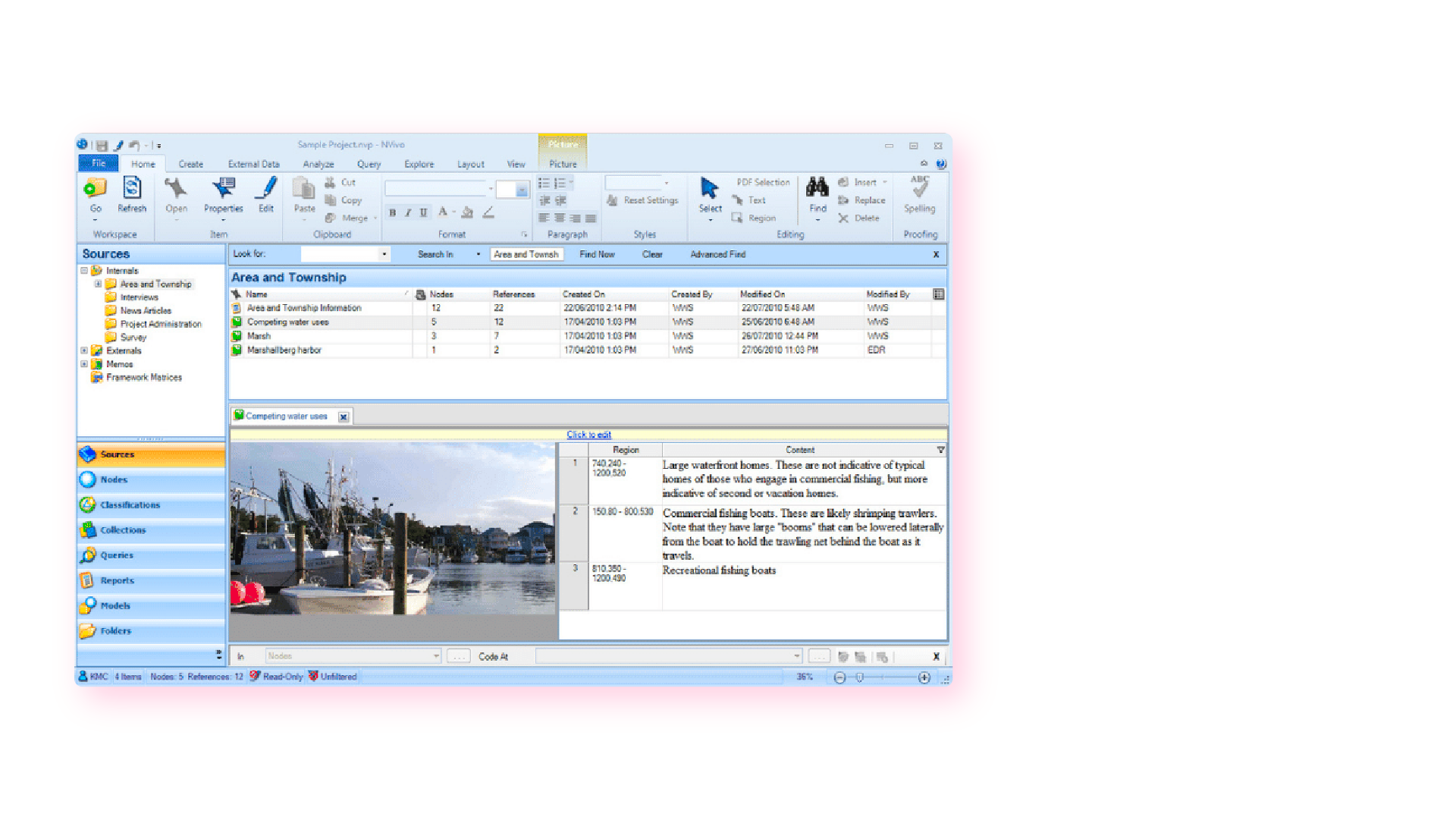

Computer-assisted qualitative data analysis software (CAQDAS)

Traditionally within the manual analysis approach (but not always), qualitative data is imported into CAQDAS software for coding.

In the early 2000s, CAQDAS software was popularised by developers such as ATLAS.ti, NVivo and MAXQDA and eagerly adopted by researchers to assist with the organizing and coding of data.

The benefits of using computer-assisted qualitative data analysis software:

- Assists in the organizing of your data

- Opens you up to exploring different interpretations of your data analysis

- Allows you to share your dataset easier and allows group collaboration (allows for secondary analysis)

However you still need to code the data, uncover the themes and do the analysis yourself. Therefore it is still a manual approach.

Organizing your qualitative data in a feedback repository

Another solution to organizing your qualitative data is to upload it into a feedback repository where it can be unified with your other data , and easily searchable and taggable. There are a number of software solutions that act as a central repository for your qualitative research data. Here are a couple solutions that you could investigate:

- Dovetail: Dovetail is a research repository with a focus on video and audio transcriptions. You can tag your transcriptions within the platform for theme analysis. You can also upload your other qualitative data such as research reports, survey responses, support conversations, and customer interviews. Dovetail acts as a single, searchable repository. And makes it easier to collaborate with other people around your qualitative research.

- EnjoyHQ: EnjoyHQ is another research repository with similar functionality to Dovetail. It boasts a more sophisticated search engine, but it has a higher starting subscription cost.

Organizing your qualitative data in a feedback analytics platform

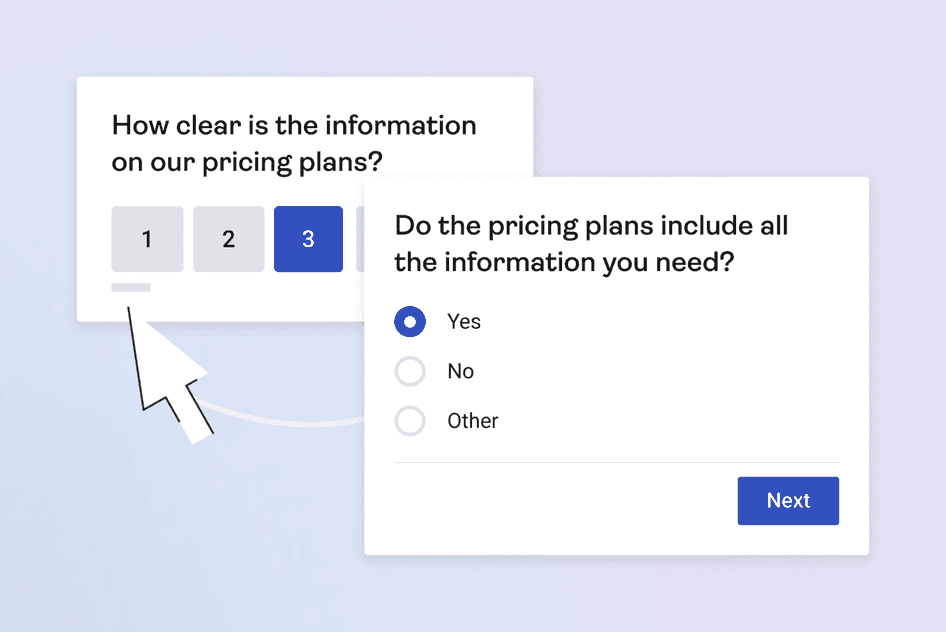

If you have a lot of qualitative customer or employee feedback, from the likes of customer surveys or employee surveys, you will benefit from a feedback analytics platform. A feedback analytics platform is a software that automates the process of both sentiment analysis and thematic analysis . Companies use the integrations offered by these platforms to directly tap into their qualitative data sources (review sites, social media, survey responses, etc.). The data collected is then organized and analyzed consistently within the platform.

If you have data prepared in a spreadsheet, it can also be imported into feedback analytics platforms.

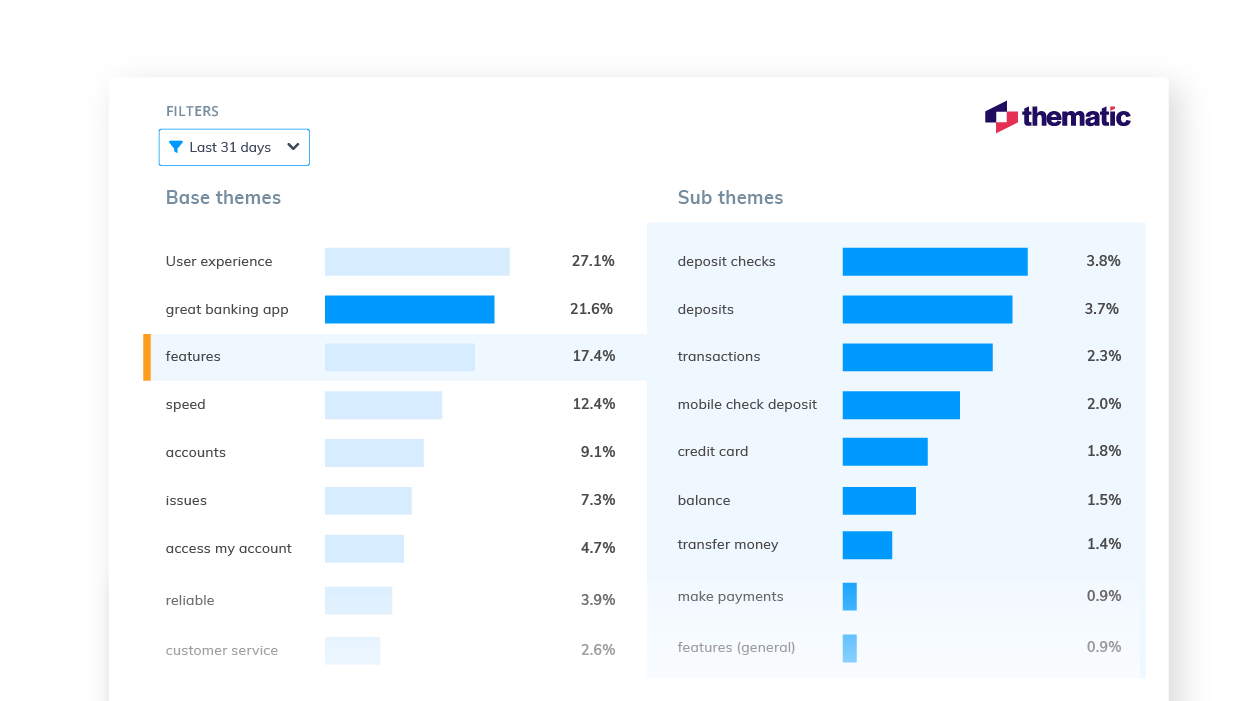

Once all this rich data has been organized within the feedback analytics platform, it is ready to be coded and themed, within the same platform. Thematic is a feedback analytics platform that offers one of the largest libraries of integrations with qualitative data sources.

Step 3: Coding your qualitative data

Your feedback data is now organized in one place. Either within your spreadsheet, CAQDAS, feedback repository or within your feedback analytics platform. The next step is to code your feedback data so we can extract meaningful insights in the next step.

Coding is the process of labelling and organizing your data in such a way that you can then identify themes in the data, and the relationships between these themes.

To simplify the coding process, you will take small samples of your customer feedback data, come up with a set of codes, or categories capturing themes, and label each piece of feedback, systematically, for patterns and meaning. Then you will take a larger sample of data, revising and refining the codes for greater accuracy and consistency as you go.

If you choose to use a feedback analytics platform, much of this process will be automated and accomplished for you.

The terms to describe different categories of meaning (‘theme’, ‘code’, ‘tag’, ‘category’ etc) can be confusing as they are often used interchangeably. For clarity, this article will use the term ‘code’.

To code means to identify key words or phrases and assign them to a category of meaning. “I really hate the customer service of this computer software company” would be coded as “poor customer service”.

How to manually code your qualitative data

- Decide whether you will use deductive or inductive coding. Deductive coding is when you create a list of predefined codes, and then assign them to the qualitative data. Inductive coding is the opposite of this, you create codes based on the data itself. Codes arise directly from the data and you label them as you go. You need to weigh up the pros and cons of each coding method and select the most appropriate.

- Read through the feedback data to get a broad sense of what it reveals. Now it’s time to start assigning your first set of codes to statements and sections of text.

- Keep repeating step 2, adding new codes and revising the code description as often as necessary. Once it has all been coded, go through everything again, to be sure there are no inconsistencies and that nothing has been overlooked.

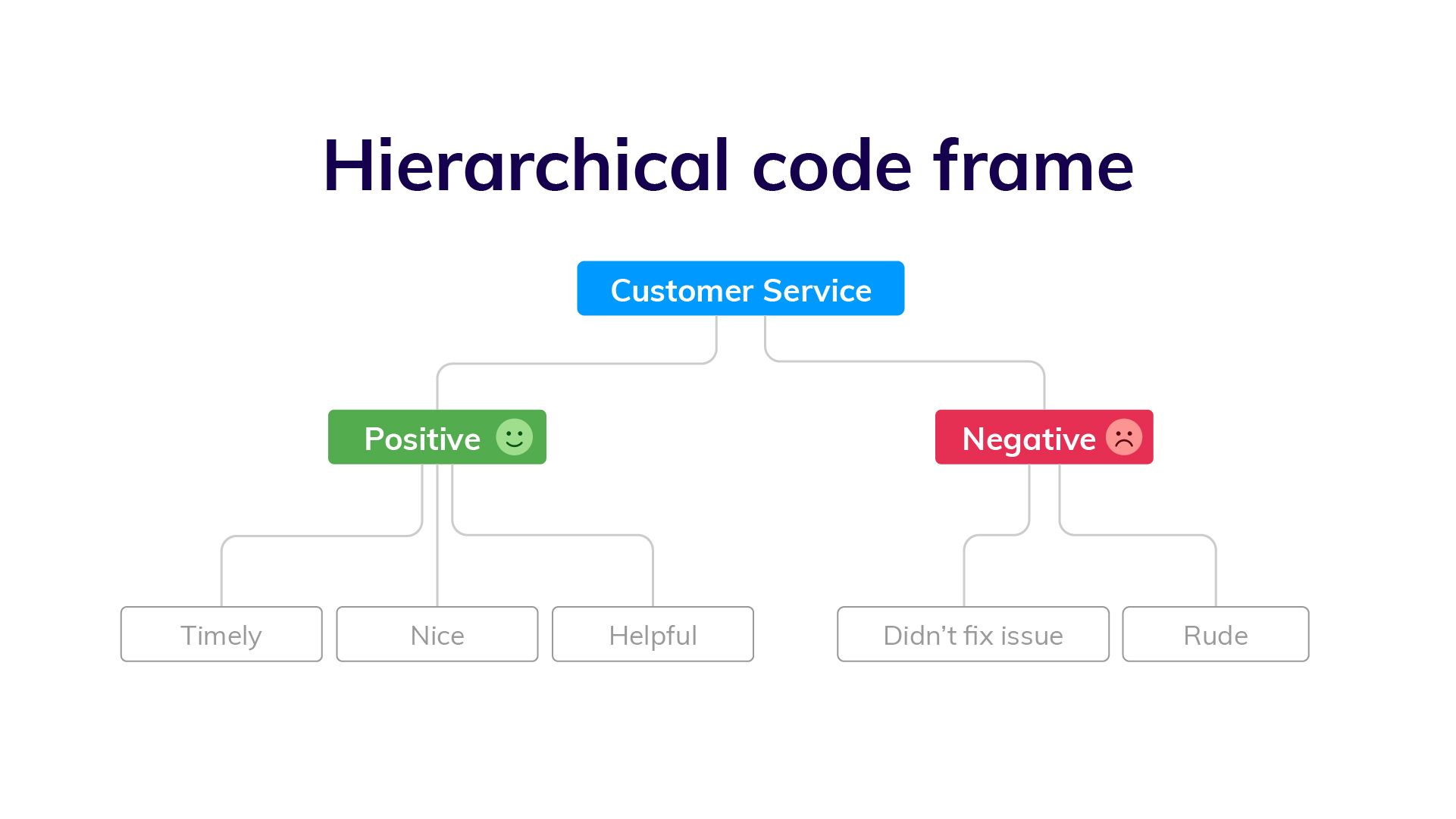

- Create a code frame to group your codes. The coding frame is the organizational structure of all your codes. And there are two commonly used types of coding frames, flat, or hierarchical. A hierarchical code frame will make it easier for you to derive insights from your analysis.

- Based on the number of times a particular code occurs, you can now see the common themes in your feedback data. This is insightful! If ‘bad customer service’ is a common code, it’s time to take action.

We have a detailed guide dedicated to manually coding your qualitative data .

Using software to speed up manual coding of qualitative data

An Excel spreadsheet is still a popular method for coding. But various software solutions can help speed up this process. Here are some examples.

- CAQDAS / NVivo - CAQDAS software has built-in functionality that allows you to code text within their software. You may find the interface the software offers easier for managing codes than a spreadsheet.

- Dovetail/EnjoyHQ - You can tag transcripts and other textual data within these solutions. As they are also repositories you may find it simpler to keep the coding in one platform.

- IBM SPSS - SPSS is a statistical analysis software that may make coding easier than in a spreadsheet.

- Ascribe - Ascribe’s ‘Coder’ is a coding management system. Its user interface will make it easier for you to manage your codes.

Automating the qualitative coding process using thematic analysis software

In solutions which speed up the manual coding process, you still have to come up with valid codes and often apply codes manually to pieces of feedback. But there are also solutions that automate both the discovery and the application of codes.

Advances in machine learning have now made it possible to read, code and structure qualitative data automatically. This type of automated coding is offered by thematic analysis software .

Automation makes it far simpler and faster to code the feedback and group it into themes. By incorporating natural language processing (NLP) into the software, the AI looks across sentences and phrases to identify common themes meaningful statements. Some automated solutions detect repeating patterns and assign codes to them, others make you train the AI by providing examples. You could say that the AI learns the meaning of the feedback on its own.

Thematic automates the coding of qualitative feedback regardless of source. There’s no need to set up themes or categories in advance. Simply upload your data and wait a few minutes. You can also manually edit the codes to further refine their accuracy. Experiments conducted indicate that Thematic’s automated coding is just as accurate as manual coding .

Paired with sentiment analysis and advanced text analytics - these automated solutions become powerful for deriving quality business or research insights.

You could also build your own , if you have the resources!

The key benefits of using an automated coding solution

Automated analysis can often be set up fast and there’s the potential to uncover things that would never have been revealed if you had given the software a prescribed list of themes to look for.

Because the model applies a consistent rule to the data, it captures phrases or statements that a human eye might have missed.

Complete and consistent analysis of customer feedback enables more meaningful findings. Leading us into step 4.

Step 4: Analyze your data: Find meaningful insights

Now we are going to analyze our data to find insights. This is where we start to answer our research questions. Keep in mind that step 4 and step 5 (tell the story) have some overlap . This is because creating visualizations is both part of analysis process and reporting.

The task of uncovering insights is to scour through the codes that emerge from the data and draw meaningful correlations from them. It is also about making sure each insight is distinct and has enough data to support it.

Part of the analysis is to establish how much each code relates to different demographics and customer profiles, and identify whether there’s any relationship between these data points.

Manually create sub-codes to improve the quality of insights

If your code frame only has one level, you may find that your codes are too broad to be able to extract meaningful insights. This is where it is valuable to create sub-codes to your primary codes. This process is sometimes referred to as meta coding.

Note: If you take an inductive coding approach, you can create sub-codes as you are reading through your feedback data and coding it.

While time-consuming, this exercise will improve the quality of your analysis. Here is an example of what sub-codes could look like.

You need to carefully read your qualitative data to create quality sub-codes. But as you can see, the depth of analysis is greatly improved. By calculating the frequency of these sub-codes you can get insight into which customer service problems you can immediately address.

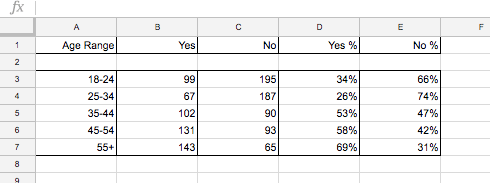

Correlate the frequency of codes to customer segments

Many businesses use customer segmentation . And you may have your own respondent segments that you can apply to your qualitative analysis. Segmentation is the practise of dividing customers or research respondents into subgroups.

Segments can be based on:

- Demographic

- And any other data type that you care to segment by

It is particularly useful to see the occurrence of codes within your segments. If one of your customer segments is considered unimportant to your business, but they are the cause of nearly all customer service complaints, it may be in your best interest to focus attention elsewhere. This is a useful insight!

Manually visualizing coded qualitative data

There are formulas you can use to visualize key insights in your data. The formulas we will suggest are imperative if you are measuring a score alongside your feedback.

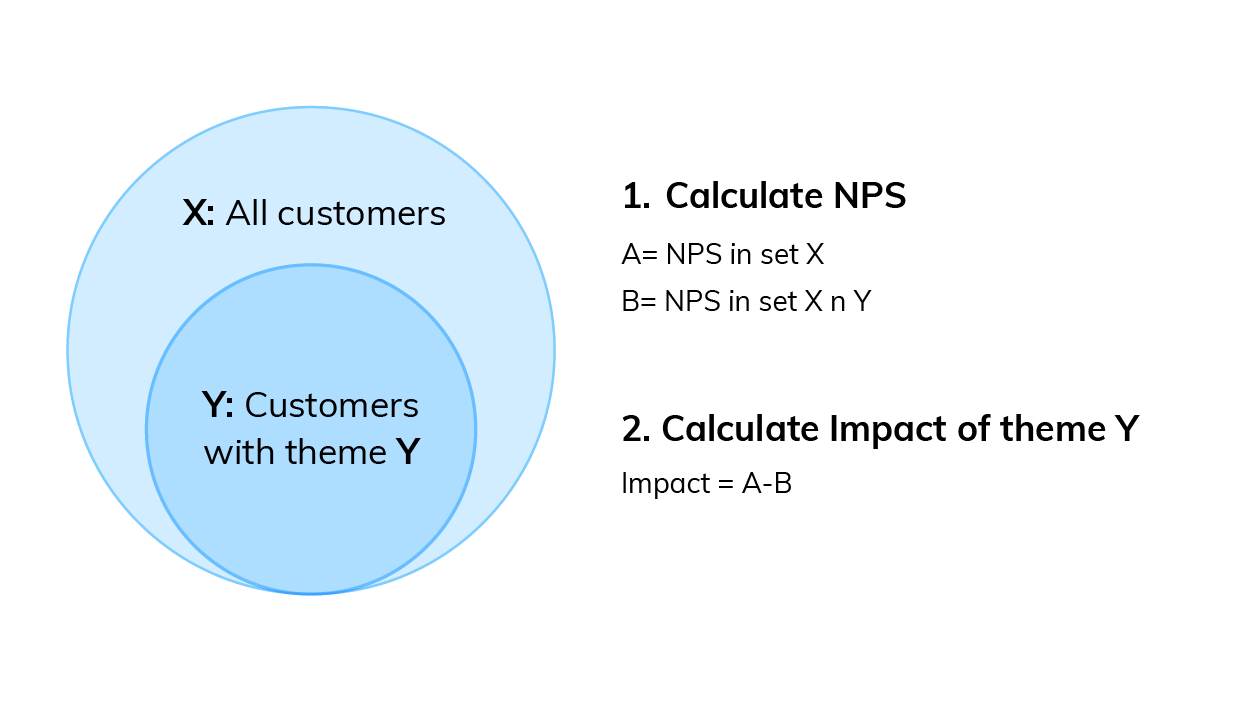

If you are collecting a metric alongside your qualitative data this is a key visualization. Impact answers the question: “What’s the impact of a code on my overall score?”. Using Net Promoter Score (NPS) as an example, first you need to:

- Calculate overall NPS

- Calculate NPS in the subset of responses that do not contain that theme

- Subtract B from A

Then you can use this simple formula to calculate code impact on NPS .

You can then visualize this data using a bar chart.

You can download our CX toolkit - it includes a template to recreate this.

Trends over time

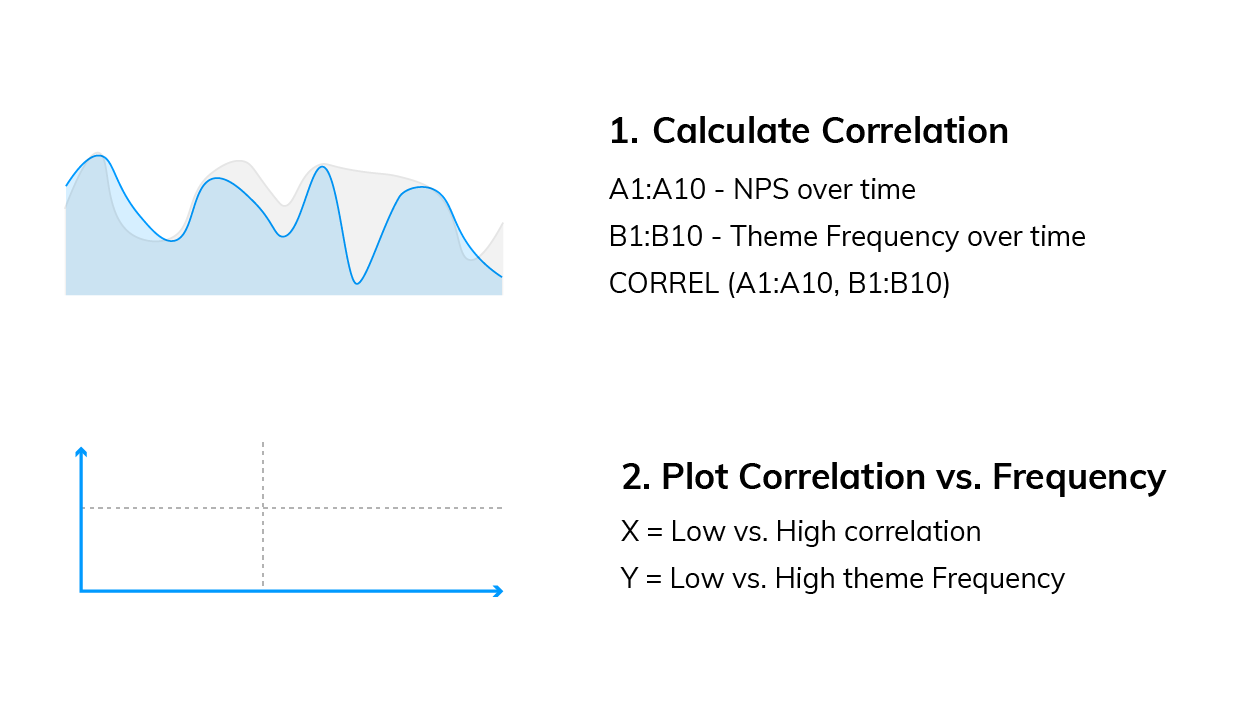

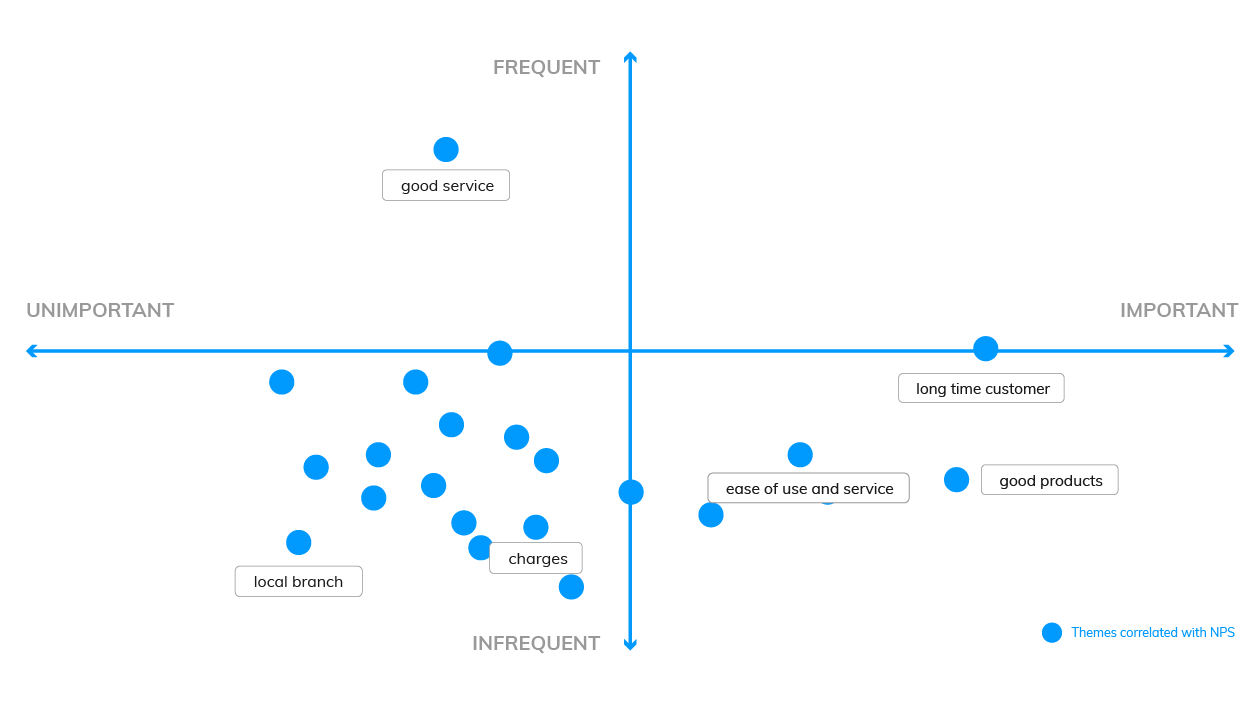

This analysis can help you answer questions like: “Which codes are linked to decreases or increases in my score over time?”

We need to compare two sequences of numbers: NPS over time and code frequency over time . Using Excel, calculate the correlation between the two sequences, which can be either positive (the more codes the higher the NPS, see picture below), or negative (the more codes the lower the NPS).

Now you need to plot code frequency against the absolute value of code correlation with NPS. Here is the formula:

The visualization could look like this:

These are two examples, but there are more. For a third manual formula, and to learn why word clouds are not an insightful form of analysis, read our visualizations article .

Using a text analytics solution to automate analysis

Automated text analytics solutions enable codes and sub-codes to be pulled out of the data automatically. This makes it far faster and easier to identify what’s driving negative or positive results. And to pick up emerging trends and find all manner of rich insights in the data.

Another benefit of AI-driven text analytics software is its built-in capability for sentiment analysis, which provides the emotive context behind your feedback and other qualitative textual data therein.

Thematic provides text analytics that goes further by allowing users to apply their expertise on business context to edit or augment the AI-generated outputs.

Since the move away from manual research is generally about reducing the human element, adding human input to the technology might sound counter-intuitive. However, this is mostly to make sure important business nuances in the feedback aren’t missed during coding. The result is a higher accuracy of analysis. This is sometimes referred to as augmented intelligence .

Step 5: Report on your data: Tell the story

The last step of analyzing your qualitative data is to report on it, to tell the story. At this point, the codes are fully developed and the focus is on communicating the narrative to the audience.

A coherent outline of the qualitative research, the findings and the insights is vital for stakeholders to discuss and debate before they can devise a meaningful course of action.

Creating graphs and reporting in Powerpoint

Typically, qualitative researchers take the tried and tested approach of distilling their report into a series of charts, tables and other visuals which are woven into a narrative for presentation in Powerpoint.

Using visualization software for reporting

With data transformation and APIs, the analyzed data can be shared with data visualisation software, such as Power BI or Tableau , Google Studio or Looker. Power BI and Tableau are among the most preferred options.

Visualizing your insights inside a feedback analytics platform

Feedback analytics platforms, like Thematic, incorporate visualisation tools that intuitively turn key data and insights into graphs. This removes the time consuming work of constructing charts to visually identify patterns and creates more time to focus on building a compelling narrative that highlights the insights, in bite-size chunks, for executive teams to review.

Using a feedback analytics platform with visualization tools means you don’t have to use a separate product for visualizations. You can export graphs into Powerpoints straight from the platforms.

Conclusion - Manual or Automated?

There are those who remain deeply invested in the manual approach - because it’s familiar, because they’re reluctant to spend money and time learning new software, or because they’ve been burned by the overpromises of AI.

For projects that involve small datasets, manual analysis makes sense. For example, if the objective is simply to quantify a simple question like “Do customers prefer X concepts to Y?”. If the findings are being extracted from a small set of focus groups and interviews, sometimes it’s easier to just read them

However, as new generations come into the workplace, it’s technology-driven solutions that feel more comfortable and practical. And the merits are undeniable. Especially if the objective is to go deeper and understand the ‘why’ behind customers’ preference for X or Y. And even more especially if time and money are considerations.

The ability to collect a free flow of qualitative feedback data at the same time as the metric means AI can cost-effectively scan, crunch, score and analyze a ton of feedback from one system in one go. And time-intensive processes like focus groups, or coding, that used to take weeks, can now be completed in a matter of hours or days.

But aside from the ever-present business case to speed things up and keep costs down, there are also powerful research imperatives for automated analysis of qualitative data: namely, accuracy and consistency.

Finding insights hidden in feedback requires consistency, especially in coding. Not to mention catching all the ‘unknown unknowns’ that can skew research findings and steering clear of cognitive bias.

Some say without manual data analysis researchers won’t get an accurate “feel” for the insights. However, the larger data sets are, the harder it is to sort through the feedback and organize feedback that has been pulled from different places. And, the more difficult it is to stay on course, the greater the risk of drawing incorrect, or incomplete, conclusions grows.

Though the process steps for qualitative data analysis have remained pretty much unchanged since psychologist Paul Felix Lazarsfeld paved the path a hundred years ago, the impact digital technology has had on types of qualitative feedback data and the approach to the analysis are profound.

If you want to try an automated feedback analysis solution on your own qualitative data, you can get started with Thematic .

Community & Marketing

Tyler manages our community of CX, insights & analytics professionals. Tyler's goal is to help unite insights professionals around common challenges.

We make it easy to discover the customer and product issues that matter.

Unlock the value of feedback at scale, in one platform. Try it for free now!

- Questions to ask your Feedback Analytics vendor

- How to end customer churn for good

- Scalable analysis of NPS verbatims

- 5 Text analytics approaches

- How to calculate the ROI of CX

Our experts will show you how Thematic works, how to discover pain points and track the ROI of decisions. To access your free trial, book a personal demo today.

Recent posts

Watercare is New Zealand's largest water and wastewater service provider. They are responsible for bringing clean water to 1.7 million people in Tamaki Makaurau (Auckland) and safeguarding the wastewater network to minimize impact on the environment. Water is a sector that often gets taken for granted, with drainage and

Become a qualitative theming pro! Creating a perfect code frame is hard, but thematic analysis software makes the process much easier.

Qualtrics is one of the most well-known and powerful Customer Feedback Management platforms. But even so, it has limitations. We recently hosted a live panel where data analysts from two well-known brands shared their experiences with Qualtrics, and how they extended this platform’s capabilities. Below, we’ll share the

Data & Finance for Work & Life

Data Analysis for Qualitative Research: 6 Step Guide

Data analysis for qualitative research is not intuitive. This is because qualitative data stands in opposition to traditional data analysis methodologies: while data analysis is concerned with quantities, qualitative data is by definition unquantified . But there is an easy, methodical approach that anyone can take use to get reliable results when performing data analysis for qualitative research. The process consists of 6 steps that I’ll break down in this article:

- Perform interviews(if necessary )

- Gather all documents and transcribe any non-paper records

- Decide whether to either code analytical data, analyze word frequencies, or both

- Decide what interpretive angle you want to take: content analysis , narrative analysis, discourse analysis, framework analysis, and/or grounded theory

- Compile your data in a spreadsheet using document saving techniques (windows and mac)

- Identify trends in words, themes, metaphors, natural patterns, and more

To complete these steps, you will need:

- Microsoft word

- Microsoft excel

- Internet access

You can get the free Intro to Data Analysis eBook to cover the fundamentals and ensure strong progression in all your data endeavors.

What is qualitative research?

Qualitative research is not the same as quantitative research. In short, qualitative research is the interpretation of non-numeric data. It usually aims at drawing conclusions that explain why a phenomenon occurs, rather than that one does occur. Here’s a great quote from a nursing magazine about quantitative vs qualitative research:

“A traditional quantitative study… uses a predetermined (and auditable) set of steps to confirm or refute [a] hypothesis. “In contrast, qualitative research often takes the position that an interpretive understanding is only possible by way of uncovering or deconstructing the meanings of a phenomenon. Thus, a distinction between explaining how something operates (explanation) and why it operates in the manner that it does (interpretation) may be [an] effective way to distinguish quantitative from qualitative analytic processes involved in any particular study.” (bold added) (( EBN ))

Learn to Interpret Your Qualitative Data

This article explain what data analysis is and how to do it. To learn how to interpret the results, visualize, and write an insightful report, sign up for our handbook below.

Step 1a: Data collection methods and techniques in qualitative research: interviews and focus groups

Step 1 is collecting the data that you will need for the analysis. If you are not performing any interviews or focus groups to gather data, then you can skip this step. It’s for people who need to go into the field and collect raw information as part of their qualitative analysis.

Since the whole point of an interview and of qualitative analysis in general is to understand a research question better, you should start by making sure you have a specific, refined research question . Whether you’re a researcher by trade or a data analyst working on one-time project, you must know specifically what you want to understand in order to get results.

Good research questions are specific enough to guide action but open enough to leave room for insight and growth. Examples of good research questions include:

- Good : To what degree does living in a city impact the quality of a person’s life? (open-ended, complex)

- Bad : Does living in a city impact the quality of a person’s life? (closed, simple)

Once you understand the research question, you need to develop a list of interview questions. These questions should likewise be open-ended and provide liberty of expression to the responder. They should support the research question in an active way without prejudicing the response. Examples of good interview questions include:

- Good : Tell me what it’s like to live in a city versus in the country. (open, not leading)

- Bad : Don’t you prefer the city to the country because there are more people? (closed, leading)

Some additional helpful tips include:

- Begin each interview with a neutral question to get the person relaxed

- Limit each question to a single idea

- If you don’t understand, ask for clarity

- Do not pass any judgements

- Do not spend more than 15m on an interview, lest the quality of responses drop

Focus groups

The alternative to interviews is focus groups. Focus groups are a great way for you to get an idea for how people communicate their opinions in a group setting, rather than a one-on-one setting as in interviews.

In short, focus groups are gatherings of small groups of people from representative backgrounds who receive instruction, or “facilitation,” from a focus group leader. Typically, the leader will ask questions to stimulate conversation, reformulate questions to bring the discussion back to focus, and prevent the discussion from turning sour or giving way to bad faith.

Focus group questions should be open-ended like their interview neighbors, and they should stimulate some degree of disagreement. Disagreement often leads to valuable information about differing opinions, as people tend to say what they mean if contradicted.

However, focus group leaders must be careful not to let disagreements escalate, as anger can make people lie to be hurtful or simply to win an argument. And lies are not helpful in data analysis for qualitative research.

Step 1b: Tools for qualitative data collection

When it comes to data analysis for qualitative analysis, the tools you use to collect data should align to some degree with the tools you will use to analyze the data.

As mentioned in the intro, you will be focusing on analysis techniques that only require the traditional Microsoft suite programs: Microsoft Excel and Microsoft Word . At the same time, you can source supplementary tools from various websites, like Text Analyzer and WordCounter.

In short, the tools for qualitative data collection that you need are Excel and Word , as well as web-based free tools like Text Analyzer and WordCounter . These online tools are helpful in the quantitative part of your qualitative research.

Step 2: Gather all documents & transcribe non-written docs

Once you have your interviews and/or focus group transcripts, it’s time to decide if you need other documentation. If you do, you’ll need to gather it all into one place first, then develop a strategy for how to transcribe any non-written documents.

When do you need documentation other than interviews and focus groups? Two situations usually call for documentation. First , if you have little funding , then you can’t afford to run expensive interviews and focus groups.

Second , social science researchers typically focus on documents since their research questions are less concerned with subject-oriented data, while hard science and business researchers typically focus on interviews and focus groups because they want to know what people think, and they want to know today.

Non-written records

Other factors at play include the type of research, the field, and specific research goal. For those who need documentation and to describe non-written records, there are some steps to follow:

- Put all hard copy source documents into a sealed binder (I use plastic paper holders with elastic seals ).

- If you are sourcing directly from printed books or journals, then you will need to digitalize them by scanning them and making them text readable by the computer. To do so, turn all PDFs into Word documents using online tools such as PDF to Word Converter . This process is never full-proof, and it may be a source of error in the data collection, but it’s part of the process.

- If you are sourcing online documents, try as often as possible to get computer-readable PDF documents that you can easily copy/paste or convert. Locked PDFs are essentially a lost cause .

- Transcribe any audio files into written documents. There are free online tools available to help with this, such as 360converter . If you run a test through the system, you’ll see that the output is not 100%. The best way to use this tool is as a first draft generator. You can then correct and complete it with old fashioned, direct transcription.

Step 3: Decide on the type of qualitative research

Before step 3 you should have collected your data, transcribed it all into written-word documents, and compiled it in one place. Now comes the interesting part. You need to decide what you want to get out of your research by choosing an analytic angle, or type of qualitative research.

The available types of qualitative research are as follows. Each of them takes a unique angle that you must choose to get what information you want from the analysis . In addition, each of them has a different impact on the data analysis for qualitative research (coding vs word frequency) that we use.

Content analysis

Narrative analysis, discourse analysis.

- Framework analysis, and/or

Grounded theory

From a high level, content, narrative, and discourse analysis are actionable independent tactics, whereas framework analysis and grounded theory are ways of honing and applying the first three.

- Definition : Content analysis is identify and labelling themes of any kind within a text.

- Focus : Identifying any kind of pattern in written text, transcribed audio, or transcribed video. This could be thematic, word repetition, idea repetition. Most often, the patterns we find are idea that make up an argument.

- Goal : To simplify, standardize, and quickly reference ideas from any given text. Content analysis is a way to pull the main ideas from huge documents for comparison. In this way, it’s more a means to an end.

- Pros : The huge advantage of doing content analysis is that you can quickly process huge amounts of texts using simple coding and word frequency techniques we will look at below. To use a metaphore, it is to qualitative analysis documents what Spark notes are to books.

- Cons : The downside to content analysis is that it’s quite general. If you have a very specific, narrative research question, then tracing “any and all ideas” will not be very helpful to you.

- Definition : Narrative analysis is the reformulation and simplification of interview answers or documentation into small narrative components to identify story-like patterns.

- Focus : Understanding the text based on its narrative components as opposed to themes or other qualities.

- Goal : To reference the text from an angle closer to the nature of texts in order to obtain further insights.

- Pros : Narrative analysis is very useful for getting perspective on a topic in which you’re extremely limited. It can be easy to get tunnel vision when you’re digging for themes and ideas from a reason-centric perspective. Turning to a narrative approach will help you stay grounded. More importantly, it helps reveal different kinds of trends.

- Cons : Narrative analysis adds another layer of subjectivity to the instinctive nature of qualitative research. Many see it as too dependent on the researcher to hold any critical value.

- Definition : Discourse analysis is the textual analysis of naturally occurring speech. Any oral expression must be transcribed before undergoing legitimate discourse analysis.

- Focus : Understanding ideas and themes through language communicated orally rather than pre-processed on paper.

- Goal : To obtain insights from an angle outside the traditional content analysis on text.

- Pros : Provides a considerable advantage in some areas of study in order to understand how people communicate an idea, versus the idea itself. For example, discourse analysis is important in political campaigning. People rarely vote for the candidate who most closely corresponds to his/her beliefs, but rather for the person they like the most.

- Cons : As with narrative analysis, discourse analysis is more subjective in nature than content analysis, which focuses on ideas and patterns. Some do not consider it rigorous enough to be considered a legitimate subset of qualitative analysis, but these people are few.

Framework analysis

- Definition : Framework analysis is a kind of qualitative analysis that includes 5 ordered steps: coding, indexing, charting, mapping, and interpreting . In most ways, framework analysis is a synonym for qualitative analysis — the same thing. The significant difference is the importance it places on the perspective used in the analysis.

- Focus : Understanding patterns in themes and ideas.

- Goal : Creating one specific framework for looking at a text.

- Pros : Framework analysis is helpful when the researcher clearly understands what he/she wants from the project, as it’s a limitation approach. Since each of its step has defined parameters, framework analysis is very useful for teamwork.

- Cons : It can lead to tunnel vision.

- Definition : The use of content, narrative, and discourse analysis to examine a single case, in the hopes that discoveries from that case will lead to a foundational theory used to examine other like cases.

- Focus : A vast approach using multiple techniques in order to establish patterns.

- Goal : To develop a foundational theory.

- Pros : When successful, grounded theories can revolutionize entire fields of study.

- Cons : It’s very difficult to establish ground theories, and there’s an enormous amount of risk involved.

Step 4: Coding, word frequency, or both

Coding in data analysis for qualitative research is the process of writing 2-5 word codes that summarize at least 1 paragraphs of text (not writing computer code). This allows researchers to keep track of and analyze those codes. On the other hand, word frequency is the process of counting the presence and orientation of words within a text, which makes it the quantitative element in qualitative data analysis.

Video example of coding for data analysis in qualitative research

In short, coding in the context of data analysis for qualitative research follows 2 steps (video below):

- Reading through the text one time

- Adding 2-5 word summaries each time a significant theme or idea appears

Let’s look at a brief example of how to code for qualitative research in this video:

Click here for a link to the source text. 1

Example of word frequency processing

And word frequency is the process of finding a specific word or identifying the most common words through 3 steps:

- Decide if you want to find 1 word or identify the most common ones

- Use word’s “Replace” function to find a word or phrase

- Use Text Analyzer to find the most common terms

Here’s another look at word frequency processing and how you to do it. Let’s look at the same example above, but from a quantitative perspective.

Imagine we are already familiar with melanoma and KITs , and we want to analyze the text based on these keywords. One thing we can do is look for these words using the Replace function in word

- Locate the search bar

- Click replace

- Type in the word

- See the total results

Here’s a brief video example:

Another option is to use an online Text Analyzer. This methodology won’t help us find a specific word, but it will help us discover the top performing phrases and words. All you need to do it put in a link to a target page or paste a text. I pasted the abstract from our source text, and what turns up is as expected. Here’s a picture:

Step 5: Compile your data in a spreadsheet

After you have some coded data in the word document, you need to get it into excel for analysis. This process requires saving the word doc as an .htm extension, which makes it a website. Once you have the website, it’s as simple as opening that page, scrolling to the bottom, and copying/pasting the comments, or codes, into an excel document.

You will need to wrangle the data slightly in order to make it readable in excel. I’ve made a video to explain this process and places it below.

Step 6: Identify trends & analyze!

There are literally thousands of different ways to analyze qualitative data, and in most situations, the best technique depends on the information you want to get out of the research.

Nevertheless, there are a few go-to techniques. The most important of this is occurrences . In this short video, we finish the example from above by counting the number of times our codes appear. In this way, it’s very similar to word frequency (discussed above).

A few other options include:

- Ranking each code on a set of relevant criteria and clustering

- Pure cluster analysis

- Causal analysis

We cover different types of analysis like this on the website, so be sure to check out other articles on the home page .

How to analyze qualitative data from an interview

To analyze qualitative data from an interview , follow the same 6 steps for quantitative data analysis:

- Perform the interviews

- Transcribe the interviews onto paper

- Decide whether to either code analytical data (open, axial, selective), analyze word frequencies, or both

- Compile your data in a spreadsheet using document saving techniques (for windows and mac)

- Source text [ ↩ ]

About the Author

Noah is the founder & Editor-in-Chief at AnalystAnswers. He is a transatlantic professional and entrepreneur with 5+ years of corporate finance and data analytics experience, as well as 3+ years in consumer financial products and business software. He started AnalystAnswers to provide aspiring professionals with accessible explanations of otherwise dense finance and data concepts. Noah believes everyone can benefit from an analytical mindset in growing digital world. When he's not busy at work, Noah likes to explore new European cities, exercise, and spend time with friends and family.

File available immediately.

Notice: JavaScript is required for this content.

Qualitative Data Analysis Methods 101:

The “big 6” methods + examples.

By: Kerryn Warren (PhD) | Reviewed By: Eunice Rautenbach (D.Tech) | May 2020 (Updated April 2023)

Qualitative data analysis methods. Wow, that’s a mouthful.

If you’re new to the world of research, qualitative data analysis can look rather intimidating. So much bulky terminology and so many abstract, fluffy concepts. It certainly can be a minefield!

Don’t worry – in this post, we’ll unpack the most popular analysis methods , one at a time, so that you can approach your analysis with confidence and competence – whether that’s for a dissertation, thesis or really any kind of research project.

What (exactly) is qualitative data analysis?

To understand qualitative data analysis, we need to first understand qualitative data – so let’s step back and ask the question, “what exactly is qualitative data?”.

Qualitative data refers to pretty much any data that’s “not numbers” . In other words, it’s not the stuff you measure using a fixed scale or complex equipment, nor do you analyse it using complex statistics or mathematics.

So, if it’s not numbers, what is it?

Words, you guessed? Well… sometimes , yes. Qualitative data can, and often does, take the form of interview transcripts, documents and open-ended survey responses – but it can also involve the interpretation of images and videos. In other words, qualitative isn’t just limited to text-based data.

So, how’s that different from quantitative data, you ask?

Simply put, qualitative research focuses on words, descriptions, concepts or ideas – while quantitative research focuses on numbers and statistics . Qualitative research investigates the “softer side” of things to explore and describe , while quantitative research focuses on the “hard numbers”, to measure differences between variables and the relationships between them. If you’re keen to learn more about the differences between qual and quant, we’ve got a detailed post over here .

So, qualitative analysis is easier than quantitative, right?

Not quite. In many ways, qualitative data can be challenging and time-consuming to analyse and interpret. At the end of your data collection phase (which itself takes a lot of time), you’ll likely have many pages of text-based data or hours upon hours of audio to work through. You might also have subtle nuances of interactions or discussions that have danced around in your mind, or that you scribbled down in messy field notes. All of this needs to work its way into your analysis.

Making sense of all of this is no small task and you shouldn’t underestimate it. Long story short – qualitative analysis can be a lot of work! Of course, quantitative analysis is no piece of cake either, but it’s important to recognise that qualitative analysis still requires a significant investment in terms of time and effort.

Need a helping hand?

In this post, we’ll explore qualitative data analysis by looking at some of the most common analysis methods we encounter. We’re not going to cover every possible qualitative method and we’re not going to go into heavy detail – we’re just going to give you the big picture. That said, we will of course includes links to loads of extra resources so that you can learn more about whichever analysis method interests you.

Without further delay, let’s get into it.

The “Big 6” Qualitative Analysis Methods

There are many different types of qualitative data analysis, all of which serve different purposes and have unique strengths and weaknesses . We’ll start by outlining the analysis methods and then we’ll dive into the details for each.

The 6 most popular methods (or at least the ones we see at Grad Coach) are:

- Content analysis

- Narrative analysis

- Discourse analysis

- Thematic analysis

- Grounded theory (GT)

- Interpretive phenomenological analysis (IPA)

Let’s take a look at each of them…

QDA Method #1: Qualitative Content Analysis

Content analysis is possibly the most common and straightforward QDA method. At the simplest level, content analysis is used to evaluate patterns within a piece of content (for example, words, phrases or images) or across multiple pieces of content or sources of communication. For example, a collection of newspaper articles or political speeches.

With content analysis, you could, for instance, identify the frequency with which an idea is shared or spoken about – like the number of times a Kardashian is mentioned on Twitter. Or you could identify patterns of deeper underlying interpretations – for instance, by identifying phrases or words in tourist pamphlets that highlight India as an ancient country.

Because content analysis can be used in such a wide variety of ways, it’s important to go into your analysis with a very specific question and goal, or you’ll get lost in the fog. With content analysis, you’ll group large amounts of text into codes , summarise these into categories, and possibly even tabulate the data to calculate the frequency of certain concepts or variables. Because of this, content analysis provides a small splash of quantitative thinking within a qualitative method.

Naturally, while content analysis is widely useful, it’s not without its drawbacks . One of the main issues with content analysis is that it can be very time-consuming , as it requires lots of reading and re-reading of the texts. Also, because of its multidimensional focus on both qualitative and quantitative aspects, it is sometimes accused of losing important nuances in communication.

Content analysis also tends to concentrate on a very specific timeline and doesn’t take into account what happened before or after that timeline. This isn’t necessarily a bad thing though – just something to be aware of. So, keep these factors in mind if you’re considering content analysis. Every analysis method has its limitations , so don’t be put off by these – just be aware of them ! If you’re interested in learning more about content analysis, the video below provides a good starting point.

QDA Method #2: Narrative Analysis

As the name suggests, narrative analysis is all about listening to people telling stories and analysing what that means . Since stories serve a functional purpose of helping us make sense of the world, we can gain insights into the ways that people deal with and make sense of reality by analysing their stories and the ways they’re told.

You could, for example, use narrative analysis to explore whether how something is being said is important. For instance, the narrative of a prisoner trying to justify their crime could provide insight into their view of the world and the justice system. Similarly, analysing the ways entrepreneurs talk about the struggles in their careers or cancer patients telling stories of hope could provide powerful insights into their mindsets and perspectives . Simply put, narrative analysis is about paying attention to the stories that people tell – and more importantly, the way they tell them.

Of course, the narrative approach has its weaknesses , too. Sample sizes are generally quite small due to the time-consuming process of capturing narratives. Because of this, along with the multitude of social and lifestyle factors which can influence a subject, narrative analysis can be quite difficult to reproduce in subsequent research. This means that it’s difficult to test the findings of some of this research.

Similarly, researcher bias can have a strong influence on the results here, so you need to be particularly careful about the potential biases you can bring into your analysis when using this method. Nevertheless, narrative analysis is still a very useful qualitative analysis method – just keep these limitations in mind and be careful not to draw broad conclusions . If you’re keen to learn more about narrative analysis, the video below provides a great introduction to this qualitative analysis method.

QDA Method #3: Discourse Analysis

Discourse is simply a fancy word for written or spoken language or debate . So, discourse analysis is all about analysing language within its social context. In other words, analysing language – such as a conversation, a speech, etc – within the culture and society it takes place. For example, you could analyse how a janitor speaks to a CEO, or how politicians speak about terrorism.

To truly understand these conversations or speeches, the culture and history of those involved in the communication are important factors to consider. For example, a janitor might speak more casually with a CEO in a company that emphasises equality among workers. Similarly, a politician might speak more about terrorism if there was a recent terrorist incident in the country.

So, as you can see, by using discourse analysis, you can identify how culture , history or power dynamics (to name a few) have an effect on the way concepts are spoken about. So, if your research aims and objectives involve understanding culture or power dynamics, discourse analysis can be a powerful method.

Because there are many social influences in terms of how we speak to each other, the potential use of discourse analysis is vast . Of course, this also means it’s important to have a very specific research question (or questions) in mind when analysing your data and looking for patterns and themes, or you might land up going down a winding rabbit hole.

Discourse analysis can also be very time-consuming as you need to sample the data to the point of saturation – in other words, until no new information and insights emerge. But this is, of course, part of what makes discourse analysis such a powerful technique. So, keep these factors in mind when considering this QDA method. Again, if you’re keen to learn more, the video below presents a good starting point.

QDA Method #4: Thematic Analysis

Thematic analysis looks at patterns of meaning in a data set – for example, a set of interviews or focus group transcripts. But what exactly does that… mean? Well, a thematic analysis takes bodies of data (which are often quite large) and groups them according to similarities – in other words, themes . These themes help us make sense of the content and derive meaning from it.

Let’s take a look at an example.

With thematic analysis, you could analyse 100 online reviews of a popular sushi restaurant to find out what patrons think about the place. By reviewing the data, you would then identify the themes that crop up repeatedly within the data – for example, “fresh ingredients” or “friendly wait staff”.

So, as you can see, thematic analysis can be pretty useful for finding out about people’s experiences , views, and opinions . Therefore, if your research aims and objectives involve understanding people’s experience or view of something, thematic analysis can be a great choice.

Since thematic analysis is a bit of an exploratory process, it’s not unusual for your research questions to develop , or even change as you progress through the analysis. While this is somewhat natural in exploratory research, it can also be seen as a disadvantage as it means that data needs to be re-reviewed each time a research question is adjusted. In other words, thematic analysis can be quite time-consuming – but for a good reason. So, keep this in mind if you choose to use thematic analysis for your project and budget extra time for unexpected adjustments.

QDA Method #5: Grounded theory (GT)

Grounded theory is a powerful qualitative analysis method where the intention is to create a new theory (or theories) using the data at hand, through a series of “ tests ” and “ revisions ”. Strictly speaking, GT is more a research design type than an analysis method, but we’ve included it here as it’s often referred to as a method.

What’s most important with grounded theory is that you go into the analysis with an open mind and let the data speak for itself – rather than dragging existing hypotheses or theories into your analysis. In other words, your analysis must develop from the ground up (hence the name).

Let’s look at an example of GT in action.

Assume you’re interested in developing a theory about what factors influence students to watch a YouTube video about qualitative analysis. Using Grounded theory , you’d start with this general overarching question about the given population (i.e., graduate students). First, you’d approach a small sample – for example, five graduate students in a department at a university. Ideally, this sample would be reasonably representative of the broader population. You’d interview these students to identify what factors lead them to watch the video.

After analysing the interview data, a general pattern could emerge. For example, you might notice that graduate students are more likely to read a post about qualitative methods if they are just starting on their dissertation journey, or if they have an upcoming test about research methods.

From here, you’ll look for another small sample – for example, five more graduate students in a different department – and see whether this pattern holds true for them. If not, you’ll look for commonalities and adapt your theory accordingly. As this process continues, the theory would develop . As we mentioned earlier, what’s important with grounded theory is that the theory develops from the data – not from some preconceived idea.

So, what are the drawbacks of grounded theory? Well, some argue that there’s a tricky circularity to grounded theory. For it to work, in principle, you should know as little as possible regarding the research question and population, so that you reduce the bias in your interpretation. However, in many circumstances, it’s also thought to be unwise to approach a research question without knowledge of the current literature . In other words, it’s a bit of a “chicken or the egg” situation.

Regardless, grounded theory remains a popular (and powerful) option. Naturally, it’s a very useful method when you’re researching a topic that is completely new or has very little existing research about it, as it allows you to start from scratch and work your way from the ground up .

QDA Method #6: Interpretive Phenomenological Analysis (IPA)

Interpretive. Phenomenological. Analysis. IPA . Try saying that three times fast…

Let’s just stick with IPA, okay?

IPA is designed to help you understand the personal experiences of a subject (for example, a person or group of people) concerning a major life event, an experience or a situation . This event or experience is the “phenomenon” that makes up the “P” in IPA. Such phenomena may range from relatively common events – such as motherhood, or being involved in a car accident – to those which are extremely rare – for example, someone’s personal experience in a refugee camp. So, IPA is a great choice if your research involves analysing people’s personal experiences of something that happened to them.

It’s important to remember that IPA is subject – centred . In other words, it’s focused on the experiencer . This means that, while you’ll likely use a coding system to identify commonalities, it’s important not to lose the depth of experience or meaning by trying to reduce everything to codes. Also, keep in mind that since your sample size will generally be very small with IPA, you often won’t be able to draw broad conclusions about the generalisability of your findings. But that’s okay as long as it aligns with your research aims and objectives.

Another thing to be aware of with IPA is personal bias . While researcher bias can creep into all forms of research, self-awareness is critically important with IPA, as it can have a major impact on the results. For example, a researcher who was a victim of a crime himself could insert his own feelings of frustration and anger into the way he interprets the experience of someone who was kidnapped. So, if you’re going to undertake IPA, you need to be very self-aware or you could muddy the analysis.

How to choose the right analysis method

In light of all of the qualitative analysis methods we’ve covered so far, you’re probably asking yourself the question, “ How do I choose the right one? ”

Much like all the other methodological decisions you’ll need to make, selecting the right qualitative analysis method largely depends on your research aims, objectives and questions . In other words, the best tool for the job depends on what you’re trying to build. For example:

- Perhaps your research aims to analyse the use of words and what they reveal about the intention of the storyteller and the cultural context of the time.

- Perhaps your research aims to develop an understanding of the unique personal experiences of people that have experienced a certain event, or

- Perhaps your research aims to develop insight regarding the influence of a certain culture on its members.

As you can probably see, each of these research aims are distinctly different , and therefore different analysis methods would be suitable for each one. For example, narrative analysis would likely be a good option for the first aim, while grounded theory wouldn’t be as relevant.

It’s also important to remember that each method has its own set of strengths, weaknesses and general limitations. No single analysis method is perfect . So, depending on the nature of your research, it may make sense to adopt more than one method (this is called triangulation ). Keep in mind though that this will of course be quite time-consuming.

As we’ve seen, all of the qualitative analysis methods we’ve discussed make use of coding and theme-generating techniques, but the intent and approach of each analysis method differ quite substantially. So, it’s very important to come into your research with a clear intention before you decide which analysis method (or methods) to use.

Start by reviewing your research aims , objectives and research questions to assess what exactly you’re trying to find out – then select a qualitative analysis method that fits. Never pick a method just because you like it or have experience using it – your analysis method (or methods) must align with your broader research aims and objectives.

Let’s recap on QDA methods…

In this post, we looked at six popular qualitative data analysis methods:

- First, we looked at content analysis , a straightforward method that blends a little bit of quant into a primarily qualitative analysis.

- Then we looked at narrative analysis , which is about analysing how stories are told.

- Next up was discourse analysis – which is about analysing conversations and interactions.

- Then we moved on to thematic analysis – which is about identifying themes and patterns.

- From there, we went south with grounded theory – which is about starting from scratch with a specific question and using the data alone to build a theory in response to that question.

- And finally, we looked at IPA – which is about understanding people’s unique experiences of a phenomenon.

Of course, these aren’t the only options when it comes to qualitative data analysis, but they’re a great starting point if you’re dipping your toes into qualitative research for the first time.

If you’re still feeling a bit confused, consider our private coaching service , where we hold your hand through the research process to help you develop your best work.

Psst... there’s more!

This post was based on one of our popular Research Bootcamps . If you're working on a research project, you'll definitely want to check this out ...

You Might Also Like:

84 Comments

This has been very helpful. Thank you.

Thank you madam,

Thank you so much for this information

I wonder it so clear for understand and good for me. can I ask additional query?

Very insightful and useful

Good work done with clear explanations. Thank you.

Thanks so much for the write-up, it’s really good.

Thanks madam . It is very important .

thank you very good

This has been very well explained in simple language . It is useful even for a new researcher.

Great to hear that. Good luck with your qualitative data analysis, Pramod!

This is very useful information. And it was very a clear language structured presentation. Thanks a lot.

Thank you so much.

very informative sequential presentation

Precise explanation of method.

Hi, may we use 2 data analysis methods in our qualitative research?

Thanks for your comment. Most commonly, one would use one type of analysis method, but it depends on your research aims and objectives.

You explained it in very simple language, everyone can understand it. Thanks so much.

Thank you very much, this is very helpful. It has been explained in a very simple manner that even a layman understands

Thank nicely explained can I ask is Qualitative content analysis the same as thematic analysis?

Thanks for your comment. No, QCA and thematic are two different types of analysis. This article might help clarify – https://onlinelibrary.wiley.com/doi/10.1111/nhs.12048

This is my first time to come across a well explained data analysis. so helpful.

I have thoroughly enjoyed your explanation of the six qualitative analysis methods. This is very helpful. Thank you!

Thank you very much, this is well explained and useful

i need a citation of your book.

Thanks a lot , remarkable indeed, enlighting to the best

Hi Derek, What other theories/methods would you recommend when the data is a whole speech?

Keep writing useful artikel.

It is important concept about QDA and also the way to express is easily understandable, so thanks for all.

Thank you, this is well explained and very useful.

Very helpful .Thanks.

Hi there! Very well explained. Simple but very useful style of writing. Please provide the citation of the text. warm regards

The session was very helpful and insightful. Thank you

This was very helpful and insightful. Easy to read and understand

As a professional academic writer, this has been so informative and educative. Keep up the good work Grad Coach you are unmatched with quality content for sure.

Keep up the good work Grad Coach you are unmatched with quality content for sure.

Its Great and help me the most. A Million Thanks you Dr.

It is a very nice work

Very insightful. Please, which of this approach could be used for a research that one is trying to elicit students’ misconceptions in a particular concept ?

This is Amazing and well explained, thanks

great overview

What do we call a research data analysis method that one use to advise or determining the best accounting tool or techniques that should be adopted in a company.

Informative video, explained in a clear and simple way. Kudos

Waoo! I have chosen method wrong for my data analysis. But I can revise my work according to this guide. Thank you so much for this helpful lecture.

This has been very helpful. It gave me a good view of my research objectives and how to choose the best method. Thematic analysis it is.

Very helpful indeed. Thanku so much for the insight.

This was incredibly helpful.

Very helpful.

very educative

Nicely written especially for novice academic researchers like me! Thank you.

choosing a right method for a paper is always a hard job for a student, this is a useful information, but it would be more useful personally for me, if the author provide me with a little bit more information about the data analysis techniques in type of explanatory research. Can we use qualitative content analysis technique for explanatory research ? or what is the suitable data analysis method for explanatory research in social studies?

that was very helpful for me. because these details are so important to my research. thank you very much

I learnt a lot. Thank you

Relevant and Informative, thanks !

Well-planned and organized, thanks much! 🙂

I have reviewed qualitative data analysis in a simplest way possible. The content will highly be useful for developing my book on qualitative data analysis methods. Cheers!

Clear explanation on qualitative and how about Case study

This was helpful. Thank you

This was really of great assistance, it was just the right information needed. Explanation very clear and follow.

Wow, Thanks for making my life easy

This was helpful thanks .

Very helpful…. clear and written in an easily understandable manner. Thank you.

This was so helpful as it was easy to understand. I’m a new to research thank you so much.

so educative…. but Ijust want to know which method is coding of the qualitative or tallying done?

Thank you for the great content, I have learnt a lot. So helpful

precise and clear presentation with simple language and thank you for that.

very informative content, thank you.

You guys are amazing on YouTube on this platform. Your teachings are great, educative, and informative. kudos!

Brilliant Delivery. You made a complex subject seem so easy. Well done.

Beautifully explained.

Thanks a lot