Summarize any text in a click.

TLDR This helps you summarize any piece of text into concise, easy-to-digest content so you can free yourself from information overload.

Enter an Article URL or paste your Text

Browser extensions.

Use the TLDR This browser extensions to summarize any webpage in a click.

Single platform, endless summaries

Transforming information overload into manageable insights — consistently striving for clarity.

100% Automatic Article Summarization with just a click

In the sheer amount of information that bombards Internet users from all sides, hardly anyone wants to devote their valuable time to reading long texts. TLDR This's clever AI analyzes any piece of text and summarizes it automatically, in a way that makes it easy for you to read, understand and act on.

Article Metadata Extraction

TLDR This, the online article summarizer tool, not only condenses lengthy articles into shorter, digestible content, but it also automatically extracts essential metadata such as author and date information, related images, and the title. Additionally, it estimates the reading time for news articles and blog posts, ensuring you have all the necessary information consolidated in one place for efficient reading.

- Automated author-date extraction

- Related images consolidation

- Instant reading time estimation
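As a rough illustration of how a reading-time estimate can be computed, here is a minimal sketch that divides the word count by an assumed reading speed. The 200 words-per-minute figure and the function name `estimate_reading_time` are assumptions made for this example; the source does not say how TLDR This actually derives its estimate.

```python
import math
import re

# Assumed average reading speed; not a value published by TLDR This.
WORDS_PER_MINUTE = 200


def estimate_reading_time(text: str, wpm: int = WORDS_PER_MINUTE) -> int:
    """Return an estimated reading time in whole minutes.

    Empty text yields 0; any non-empty text yields at least 1 minute.
    """
    # Count whitespace-separated tokens as words.
    word_count = len(re.findall(r"\S+", text))
    if word_count == 0:
        return 0
    # Round up so a 4.5-minute read is reported as 5 minutes.
    return max(1, math.ceil(word_count / wpm))


if __name__ == "__main__":
    article = "word " * 900  # a 900-word stand-in article
    print(estimate_reading_time(article))  # 900 / 200 = 4.5, rounded up to 5
```

Rounding up is a design choice here: it avoids telling a reader that a short but non-empty article takes zero minutes.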

Distraction and ad-free reading

As an efficient article summarizer tool, TLDR This meticulously eliminates ads, popups, graphics, and other online distractions, providing you with a clean, uncluttered reading experience. Moreover, it enhances your focus and comprehension by presenting the essential content in a concise and straightforward manner, thus transforming the way you consume information online.

Avoid the Clickbait Trap

TLDR This smartly selects the most relevant points from a text, filtering out weak arguments and baseless speculation. It allows for quick comprehension of the essence, without needing to sift through all paragraphs. By focusing on core substance and disregarding fluff, it enhances efficiency in consuming information, freeing more time for valuable content.

- Filters weak arguments and speculation

- Highlights most relevant points

- Saves time by eliminating fluff

Who is TLDR This for?

TLDR This is a summarizing tool designed for students, writers, teachers, institutions, journalists, and any internet user who needs to quickly understand the essence of lengthy content.

Anyone with access to the Internet

TLDR This is for anyone who just needs to get the gist of a long article. You can read this summary, then go read the original article if you want to.

Students

TLDR This is for students studying for exams who are overwhelmed by information overload. This tool will help them summarize information into a concise, easy-to-digest piece of text.

Writers

TLDR This is for anyone who writes frequently and wants to quickly summarize their articles for easier writing and easier reading.

Teachers

TLDR This is for teachers who want to summarize a long document or chapter for their students.

Institutions

TLDR This is for corporations and institutions who want to condense a piece of content into a summary that is easy to digest for their employees/students.

Journalists

TLDR This is for journalists who need to summarize a long article for their newspaper or magazine.

Featured by the world's best websites

Our platform has been recognized and utilized by top-tier websites across the globe, solidifying our reputation for excellence and reliability in the digital world.

Focus on the Value, Not the Noise.

Use AI to summarize scientific articles in seconds

Send a document, get a summary. It's that easy.

If GPT had a PhD

- 50,000 words summarized

- First article summarized per month can be up to 200,000 words

- 50 documents indexed for semantic search

- 100 Chat Messages

- Unlimited article searches

- Import and summarize references with the click of a button

- 1,000,000 words summarized per month

- Maximum document length of 200,000 words

- Unlimited bulk summaries

- 10,000 chat messages per month

- 1,000 documents indexed for semantic search

Summarize any text or PDF in seconds

Check your email for the summary.

Try PRO Upsum if you:

- Need to summarize more than 2 pages

- Need more accurate summaries

- Want no limits on PDF summarisation

Chat with your PDF documents

Why choose UpSum?

Simple and adaptable plans for your needs.

Who is UpSum for?

Research Papers

Analyze and understand large amounts of text, get insights, speed up research, communicate findings efficiently and create concise notes, abstracts, literature reviews.

Lengthy Reports

Stay up-to-date with the latest developments. Improve the efficiency of your research and analysis, present findings in a clear and concise manner.

Marketing Reports

Generate summaries of large amounts of text data and extract important information for analysis and understanding of trends and key insights.

UpSum to save hours

Unlock the core of any document with the UpSum algorithm. Experience the luxury of having the most vital information at your fingertips, whether it be a complex research paper, a pressing news article, or a critical business report. Save precious time and elevate your productivity.

Upload your documents

Set the length and the style of the summary


Download your summary

Full text input.

Research Papers and Research Articles

Business Reports and Legal Documents

News Reports and Blog Articles

Books and Novels

“UpSum.io is saving me hundreds of hours that I would have wasted on reading lengthy reports. With this tool I feel like I have developed a superpower.”

Robert Jiménez

The latest from our blog.


UPDATE: Chat with your documents

Summarise research paper tools: A valuable resource for academics and researchers.

Introduction to online summarizing tools: What are they and how do they work?

Asking the Right Questions: How to Extract Specific Information from Your PDFs with Upsum.io

Frequently asked questions.

We use state-of-the-art technology to summarize any text. Our core AI is based on the ChatGPT algorithm. ChatGPT uses a technique called extractive summarization to summarize text. Extractive summarization involves identifying and selecting the most important and relevant sentences or phrases from the original text and assembling them to create a summary. This is done by analyzing the text and determining the key concepts, entities, and the relationships between them, then using this information to rank and select the most informative sentences. This technique relies on machine learning models, such as transformer-based models like GPT-2 and GPT-3, which are trained on large amounts of text data and are able to understand the meaning and context of the text. This enables the model to identify the most important and relevant information and generate a summary that accurately represents the main ideas and key points of the original text. We aren't just a summary tool: we use the latest AI models to make sure the summary is not just a shorter version of the text, but an actual summarization that captures its most important takeaways.
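To make the rank-and-select idea behind extractive summarization concrete, here is a minimal sketch that scores each sentence by the average frequency of its words and keeps the top-scoring sentences in their original order. It is a classic word-frequency heuristic swapped in for illustration, not the transformer-based approach described above and not UpSum's implementation; the names `split_sentences` and `extractive_summary` and the small stop-word list are assumptions of this example.

```python
import re
from collections import Counter

# Tiny illustrative stop-word list (an assumption); real systems use fuller lists.
STOP_WORDS = {
    "a", "an", "and", "are", "as", "at", "be", "by", "for", "from", "in",
    "is", "it", "of", "on", "or", "that", "the", "this", "to", "was", "with",
}


def split_sentences(text: str) -> list[str]:
    """Naive splitter: break after '.', '!' or '?' followed by whitespace."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [p for p in parts if p]


def content_words(text: str) -> list[str]:
    """Lowercase the text and keep alphabetic tokens that are not stop words."""
    return [w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOP_WORDS]


def extractive_summary(text: str, num_sentences: int = 2) -> str:
    """Select the highest-scoring sentences and return them in document order."""
    sentences = split_sentences(text)
    freq = Counter(content_words(text))

    def score(sentence: str) -> float:
        tokens = content_words(sentence)
        if not tokens:
            return 0.0
        # Average word frequency, so long sentences are not favored automatically.
        return sum(freq[t] for t in tokens) / len(tokens)

    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    chosen = sorted(ranked[:num_sentences])  # restore original order
    return " ".join(sentences[i] for i in chosen)


if __name__ == "__main__":
    sample = (
        "Solar panels convert sunlight into electricity. "
        "My neighbor owns a red bicycle. "
        "Solar electricity reduces household energy bills. "
        "Panels need sunlight to produce electricity."
    )
    print(extractive_summary(sample, num_sentences=2))
```

Because every output sentence is copied verbatim from the input, this kind of summary cannot introduce new wording; the off-topic bicycle sentence is dropped simply because its words are rare in the sample.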

Absolutely! Our tool is available for anyone to use, free of charge. With the free version, you may be limited in the amount of text you can input at once. If you require more flexibility and advanced features, we offer a premium subscription option. This will give you the ability to input longer text, access additional formats, and customize the summary length to suit your needs.

At our company, we are committed to conducting our business with the highest level of integrity and ethical standards. We understand that trust is a fundamental element of any relationship, and we take great care to earn and maintain the trust of our customers. We are dedicated to providing the best service and the most advanced features to meet your needs. We are constantly working to improve our tool and stay ahead of the latest trends and technologies to ensure that our customers have the most effective and efficient solution available.


Our free tool allows you to easily upload and condense texts of up to 3000 words, which is roughly equivalent to four standard pages. The resulting summary will be a concise 200-300-word summary, or roughly half a page. Upgrade to a premium account to enjoy even more flexibility, such as the ability to upload longer texts and customize your summary length to your exact needs.

Research Paper Summarizer by AcademicHelp

Free and easy-to-use summarization.

Different Summary Options

Clear and To-The-Point Paragraphs

Customizable Text Length

Free research paper summarizer.

Remember Me

What is your profession ? Student Teacher Writer Other

Forgotten Password?

Username or Email

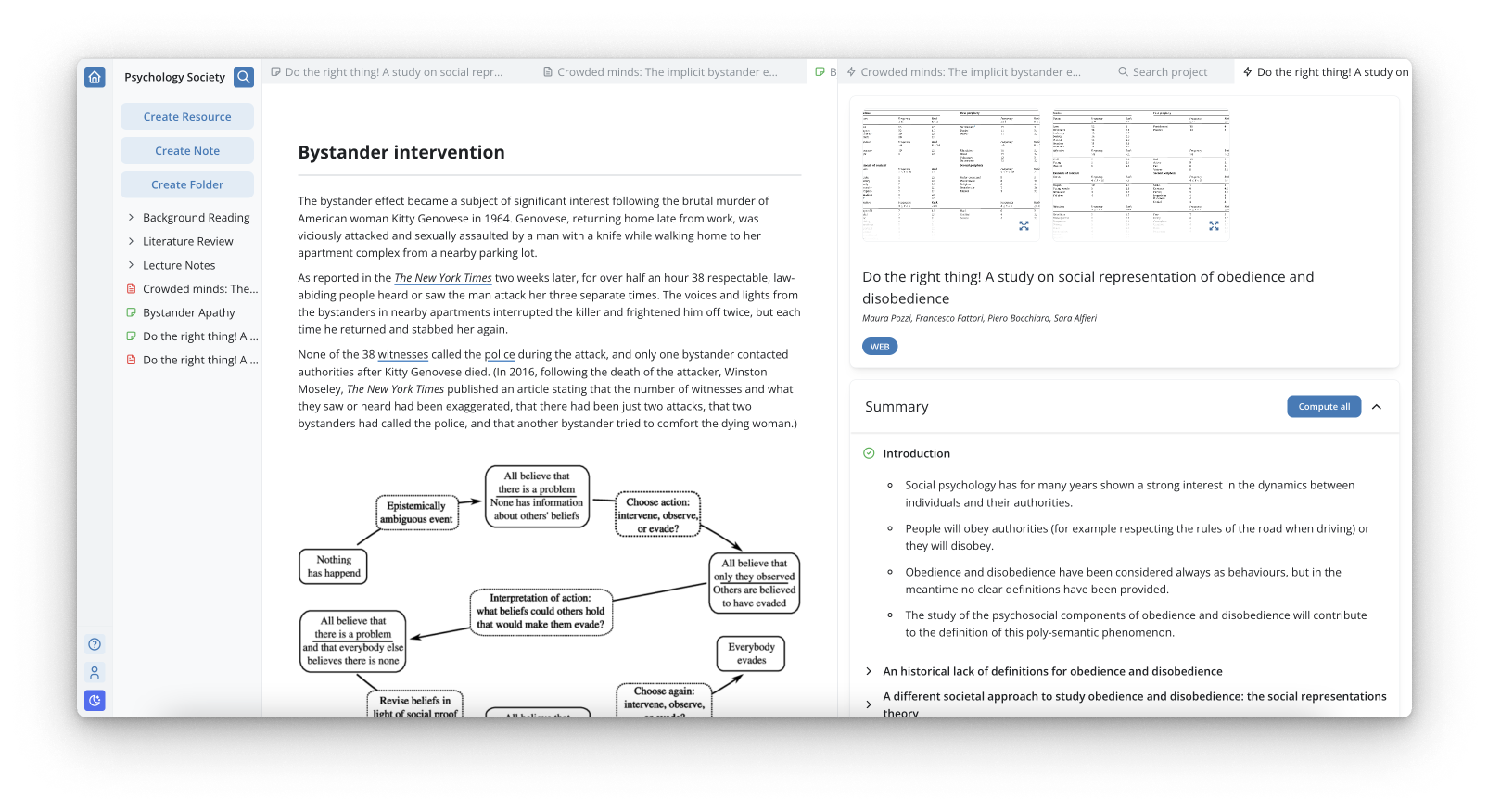

Research faster with genei

Automatically summarise background reading and produce blogs, articles, and reports faster.

"I could totally see this startup playing the same role as a Grammarly: a helpful extension of workflows that optimizes the way people who write for a living, write." Natasha Mascarenhas Senior Reporter at TechCrunch

Y Combinator Summer 2021

Genei is part of Y Combinator, a US startup accelerator with over 2000 companies including Stripe, Airbnb, Reddit and Twitch.

TechCrunch favourite startups 2021

Genei was recently named among TechCrunch's favourite startups of summer 2021.

Oxford University All Innovate 2020

Prize winning company in Oxford University's prestigious "All Innovate" startup competition.

Trusted by thought leaders and experts

"genei is a company that excites me a lot. Their AI has the potential to offer massive productivity boosts in research and writing."

"We can perform research using genei's keyword extraction tool to optimize our article content better than before."

"Genei’s summarisation provides a whole new dimension to our research and reporting, and helps contribute towards the clarity and conciseness of our work."

Add, organise, and manage information with ease.

95% of users say genei enables them to work more productively. Documents can be stored in customisable projects and folders, whilst content can be linked to any part of a document to generate automatic references.

Ask questions and our AI will find answers.

95% of users say they find greater answers and insights from their work when using genei.

Finish your reading list faster.

AI-powered summarisation and keyword extraction for any group of PDFs or webpages. 98% of users say genei saves them time by paraphrasing complex ideas and enabling them to find crucial information faster.


Improve the quality & efficiency of your research today

Never miss important reading again.

Our Chrome extension means you can summarize webpages or save them for later reading as you browse.

- Import, view, summarise & analyse PDFs and webpages

- Document management and file storage system

- Full notepad & annotation capabilities

- In-built citation management and reference generator

- Export functionality

- Everything in basic

- 70% higher quality AI

- Access to GPT-3 - the world's most advanced language based AI

- Multi-document summarisation, search, and question answering

- Rephrasing and Paraphrasing functionality

Loved by thousands of users worldwide

Find out how genei can benefit you.

Empower Your Academic Journey

AI Summarizer & Summary Generator

Jenni AI stands as a comprehensive academic writing assistant, encompassing an AI summarizer and summary generator among its key features. This specialized functionality is meticulously crafted to facilitate the creation of concise summaries, effectively condensing extensive research papers, articles, or essays. Jenni AI simplifies the process, enabling you to focus more on your analysis and less on summarization. Our tool is built with the ethos of promoting authentic academic endeavors, not replacing them.

Loved by over 3 million academics

Trusted By Academics Worldwide

Academics from leading institutions rely on Jenni AI for efficient summary generation

Crafting Quality Academic Writing Solutions with Our Text Summarizer

Discover how Jenni AI stands out as the solution for your summarization needs

Effortless Summarization

Jenni AI takes the hassle out of summarization. Just paste your text, and watch as Jenni AI distills the core ideas into a clear, concise summary.

Get started

Interactive Editing

Don’t just settle for the first draft. Interact with the summary, tweak, and refine it to meet your specific requirements, ensuring that every summary is precisely what you need.

Learning and Improvement

Jenni AI is not just a tool, but a companion in your academic journey. Learn from the summarization process and improve your writing skills with every interaction.

Our Commitment to Academic Integrity

At Jenni AI, we uphold the principle of academic integrity with the utmost regard. Our tool is devised to assist, not to replace your original work.

How Does Jenni AI Summarizing Tool Work?

Navigating the Realm of Academic Writing Has Never Been Easier

Create Your Account

Sign up for a free Jenni AI account to embark on a simplified summarization journey.

Paste Your Text

Copy and paste the text you wish to summarize. Whether it's a research article, essay, or a complex thesis, Jenni AI is here to assist.

Generate Your Summary

Ask Jenni to summarize and watch as it employs advanced algorithms to distill the core essence of your text, presenting a coherent and concise summary.

Review and edit your summary. Jenni AI's interactive platform allows you to tweak and refine the summary to align perfectly with your academic objectives.

What Scholars Are Saying

Hear from our satisfied users and elevate your writing to the next level

· Aug 26

I thought AI writing was useless. Then I found Jenni AI, the AI-powered assistant for academic writing. It turned out to be much more advanced than I ever could have imagined. Jenni AI = ChatGPT x 10.

Charlie Cuddy

@sonofgorkhali

· 23 Aug

Love this use of AI to assist with, not replace, writing! Keep crushing it @Davidjpark96 💪

Waqar Younas, PhD

@waqaryofficial

· 6 Apr

4/9 Jenni AI's Outline Builder is a game-changer for organizing your thoughts and structuring your content. Create detailed outlines effortlessly, ensuring your writing is clear and coherent. #OutlineBuilder #WritingTools #JenniAI

I started with Jenni-who & Jenni-what. But now I can't write without Jenni. I love Jenni AI and am amazed to see how far Jenni has come. Kudos to http://Jenni.AI team.

· 28 Jul

Jenni is perfect for writing research docs, SOPs, study projects presentations 👌🏽

Stéphane Prud'homme

http://jenni.ai is awesome and super useful! thanks to @Davidjpark96 and @whoisjenniai fyi @Phd_jeu @DoctoralStories @WriteThatPhD

Frequently asked questions

How does Jenni AI generate summaries?

Is Jenni AI suitable for all academic fields?

How does the citation helper work?

Can I use Jenni AI for professional or non-academic writing?

How does Jenni AI help with writer’s block?

How does Jenni AI compare to other summarization tools?

Choosing the Right Academic Writing Companion

Get ready to make an informed decision and uncover the key reasons why Jenni AI is your ultimate tool for academic excellence.

Feature

COMPETITORS

Academic Orientation

Designed with academic rigor in mind, ensuring your summaries uphold scholarly standards.

Often lack academic focus, potentially diluting the essence of scholarly texts.

Contextual Understanding

Employs advanced AI to grasp the context, ensuring summaries are meaningful and coherent.

May struggle with contextual understanding, leading to disjointed or misleading summaries.

Customization

Offers customization options to tailor summaries according to your specific needs and preferences.

Generic summarization often with limited customization, risking loss of critical information.

User-Friendly Interface

Intuitive interface makes summarization a breeze, enhancing the user experience.

Clunky interfaces can hinder the summarization process, making it less user-friendly.

Promotes an interactive learning environment, aiding in improving your summarization skills over time.

Merely provide summarization with no added value in terms of learning or skill enhancement.

Ready to Elevate Your Academic Writing?

Create your free Jenni AI account today and discover a new horizon of academic excellence!

Research Paper Summary Generator – Online & Free Tool for Students

Use our research paper summarizer to shorten any text in 3 easy steps:

- Enter the text you want to reduce.

- Choose how long you want the summary to be.

- Press the “summarize” button and get the new text.

Number of sentences in the summary:

Original ratio

100 % in your summary

There's no doubt that recapping is an essential skill for students. Most academic writings require you to summarize literature sources for background information, or you need to condense tons of materials a night before an exam. All this might take a lot of time and effort.

We created a research paper summarizer to help you sum up any academic text in a few clicks. Continue reading to learn more about the free summary writer, and don’t miss excellent tips on how to cut down words manually.

- 🔧 When to Apply the Summarizer?

- 🤔 How to Summarize?

- 📝 Summary Examples

- 🤩 5 Extra Tips

- 🔗 References

🔧 Summary of Research Paper Online – Application

High school and college students often need to deal with long texts. Consider the most common situations in which our research paper summary generator might be helpful.

- You don't have time for home reading. The summary generator can narrow the texts down to the main points and eliminate irrelevant details.

- You need to summarize articles for literature analysis. The tool can help you identify the key ideas from literary sources and compare them easily.

- You want to reword someone's ideas and create an original text. The summarizing tool helps narrow down the text without plagiarism.

🤔 How Do You Summarize a Research Paper?

Our tool is a great solution to summarize a research paper online. However, you should know the general summing-up rules to get the best results from the tool.

📝 Research Paper Summary Generator Examples

We used an online research paper summarizer to cut down two articles in a few easy clicks. Have a look at these examples!

Source: What Affects Rural Ecological Environment Governance Efficiency? Evidence from China

The research paper focuses on the influencing factors that impact the sustainable economic and social development of vast rural areas of China. According to the author, protecting the ecological environment has become crucial with rapid economic growth. This paper separately examines the influencing factors in the eastern, central, and western regions. The results show that the main factors that positively affect the efficiency of rural ecological environment governance are: the level of rural economic development, rural public participation, and the size of village committees. Conversely, environmental protection social organizations have a negative influence, preventing the productivity of rural ecological environment governance. In conclusion, the key issue in improving rural ecological environment governance in China is to create differentiated regional coordinated governance mechanisms.

Source: Is artificial intelligence better at assessing heart health?

The article discusses the use of AI in assessing and diagnosing cardiac function. The author believes this technology will be beneficial when deployed across the clinical system nationwide. The study provides the results of the experiment conducted in 2020 by Smidt Heart Institute and Stanford University. They developed one of the first AI technologies to assess cardiac function, specifically, left ventricular ejection fraction. The key findings are: Cardiologists agreed with the AI initial assessment more frequently. The physicians could not tell which evaluations were made by AI and which were made by professionals. The AI assistance saved cardiologists a lot of time. In conclusion, the author believes that this level of evidence offers clinicians extra assurance to adopt artificial intelligence more broadly to increase efficiency and quality.

🤩 5 Extra Tips to Summarize a Research Paper

Summarizing a research paper might be incredibly challenging. We recommend using our free research paper summary generator to save time on other tasks. Here are some additional tips to help you create an accurate summary:

- Ensure you include the main ideas from all parts of the research paper: introduction, literature review, methods, and results.

- To identify the main idea of the research paper, look for a hypothesis or a thesis statement.

- Don't try to fit all the experiment's results and statistics into the summary.

- Always mention the author of the original paper to avoid plagiarism.

- Don't add your personal opinion on the research paper you summarize.

❓ Research Paper Summarizer FAQ

❓ How to Use the Research Paper Summarizer?

Research paper summarizer is a free online tool that can summarize any paper in almost no time. The summarizer is available online and has a simple interface. You only need to copy and paste the original passage, choose the length of your summary, and click the "summarize" button.

❓ How to Write a Summary of a Research Paper?

You can summarize a research paper manually or with the help of a free research paper summary generator. Pay attention to the text's central idea expressed in the thesis statement to make an accurate summary. Ensure you carefully reflect on the author's main point and eliminate the less important details.

❓ How Long Is a Summary?

The length of a summary might vary depending on your goal. For example, if you summarize several sources for your literature review, it's better to keep them short. However, if you write an essay based on one article or book, you might want to provide a more extended summary.

- Summary For Research Paper: How To Write - eLearning Industry

- Summarizing a Research Article 1997-2006, University of Washington

- How To Write a Summary in 8 Steps (With Examples) | Indeed.com

- Thesis Statement - Writing Your Research Papers - SJSU Research Guides at San José State University Library

- How to reduce word count without reducing content

Research Assistant

AI-Powered Research Summarizer

- Summarize academic papers: Quickly understand the main points of research papers without reading the entire document.

- Extract insights from reports: Identify key findings and trends from industry reports, surveys, or reviews.

- Prepare for presentations: Create concise summaries of research materials to include in your presentations or talking points.

- Enhance your understanding: Improve your comprehension of complex subjects by summarizing the main ideas and insights.

- Save time: Reduce the time spent on reading lengthy research documents by focusing on the most important points.


Best Summarizing Tool for Academic Texts

Copy and paste your text

Number of sentences in results:

- ⚙️ 11 Best Summarizing Tools

- 🤔 How to Summarize an Article without Plagiarizing?

- 📝 How to Proofread Your Summary?

- ⭐ Best Summarizing Tool: the Benefits

- 🔗 References

✅ 11 Best Summary Generators to Consider

In this article, we offer a full list of text summarizers. Every tool has a strong algorithm, so you won’t have to proofread a lot to make the summary look hand-written. Using such websites can make your studying more productive, because you can focus on more important tasks and leave the routine work to online tools.

In this blog post, you’ll also find tips on successful summarizing and proofreading. These are basic skills that you will need for many assignments. To summarize text better, you’ll need to read it critically, spot the main idea, underline the essential points, and so on. As for proofreading, this skill is useful not only to students but also to professional writers.

To summarize a text, a paragraph, or even an essay, you can find a lot of tools online. Here we’ll list some of these, including those that allow you to choose the percentage of similarity and define the length of the text you’ll get.

If you’re asked to summarize some article or paragraph in your own words, one of these summary makers can become significant for getting fast results. Their user-friendly design and accurate algorithms play an important role in the summary development.

1. Summarize Bot

Summarize Bot is an easy-to-use, ad-free tool for fast and accurate summary creation. With its help, you can save research time by compressing texts. The summary maker shows the reading time it saves you, along with other useful statistics. To summarize any text, you only need to send a message on Facebook or add the bot to Slack. The app works with various file types, including PDF, MP3, DOC, TXT, JPG, and others, and supports almost every language.

The only drawback is the absence of a web version. If you don’t have a Facebook account and don’t want to install Slack, you won’t be able to enjoy this app’s features.

2. SMMRY

SMMRY has everything you need for a perfect summary: an easy-to-use design, lots of features, and advanced settings (URL usage). If you are looking for a web service that changes the wording, this one will not disappoint you.

SMMRY allows you to summarize text not only by copy-pasting but also by uploading a file or inserting a URL. The last option is especially interesting. With it, you don’t have to edit an article in any way. Just put the URL into the field and get the result. The tool is ad-free and doesn’t require registration.

3. Jasper

Jasper is an AI-powered summary generator. It creates unique, plagiarism-free summaries, so it’s a perfect option for those who don’t want to change the wording on their own.

When using this tool, you can summarize a text of up to 12,000 characters (roughly 2,000-3,000 words) in more than 30 languages. Although Jasper doesn’t have a free plan, it offers a 7-day free trial to let you see whether this tool meets your needs.

4. Quillbot

Quillbot offers many tools for students and writers, and summarizer is one of them. With this tool, you can customize your summary length and choose between two modes: paragraph and key sentences. The former presents a summary as a coherent paragraph, while the latter gives you key ideas of the text in the form of bullet points.

What is great about Quillbot is that you can use it for free. However, there’s a limitation: you can only summarize a text of up to 1,200 words on a free plan. A premium plan extends this limit to 6,000 words. In addition, you don’t need to register to use Quillbot summarizer; just input your text and get the result.

5. TLDR This

TLDR This is a summary generator that can help you quickly summarize long text. You can paste your paper directly into the tool or provide it with a URL of the article you want to shorten.

With a free plan, you have unlimited attempts to summarize texts in the form of key sentences. TLDR This also provides advanced AI summaries, but you have only 10 of these on a free plan. To get more of them, you have to go premium, which starts at $4 per month.

6. HIX.AI

HIX.AI summarizer is an AI-powered tool that can help you summarize texts of up to 10,000 characters. If you use Google Chrome or Microsoft Edge, HIX.AI has a convenient extension for you.

You can use the tool for free to check 1,000 words per week. Along with this, you get access to over 120 other AI-powered tools to help you with your writing tasks. These include essay checker, essay rewriter, essay topic generator, and many others. With a premium plan, which starts at $19.99 a month billed yearly, you also get access to GPT-4 and other advanced features.

7. Scholarcy

Scholarcy is one of the best tools for summarizing academic articles. It presents summaries in the form of flashcards, which can be downloaded as Word, PowerPoint, or Markdown files.

This tool has some outstanding features for students and researchers. For example, it creates a referenced summary, which makes it easier for you to cite the information correctly in your paper. In addition, it can find the references from the summarized article and provide you with open-access links to them. The tool can also extract tables and figures from the text and let you download them as Excel files.

Unregistered users can summarize one article per day. With a free registered account, you can make 3 summary flashcards a day. Moreover, Scholarcy offers free Chrome, Edge, and Firefox extensions that allow you to summarize short and medium-sized articles.

8. Frase

Frase is an AI-powered summary generator that is available for free. The tool can summarize texts of up to 600-700 words. Therefore, it’s good if you want to, say, summarize the main points of your short essay or blog post to write a conclusion. However, if you need summaries of long research articles, you should choose another option.

This summary generator allows you to adjust the level of creativity, meaning that you can generate original, plagiarism-free summaries. Frase also has lots of extra features for SEO and project management, which makes it a good option for website content creators.

9. Resoomer

Resoomer is another paraphrasing and summarizing tool that works with several languages. You’re free to use the app in English, French, German, Italian, and Spanish.

This online tool may be considered one of the best text summarizers in the IvyPanda ranking because it offers many custom settings. For example, you can click Manual and set the size of the summary (as a percentage or in words). You can also set the number of keywords for the tool to focus on.

Among its drawbacks, we would mention that the software works only with argumentative texts and won’t reword other types correctly. Also, the free version contains lots of ads and does not allow users to import files. The premium subscription costs 4.90€ per month or 39.90€ per year.

10. Summarizer

Summarizer is another good way to summarize any article you read online. This simple Chrome extension will provide you with a summary within a couple of clicks. Install the add-on, open the article or select the piece of text you want to summarize and click the button “Summarize”.

The software processes various texts in your browser, including long PDF articles. The resulting summary is only 7% of the length of the original article. This app is great for anyone who doesn’t want to read long publications. However, it doesn’t allow you to import files or download the result.

11. Summary Generator

Summary Generator is the last article and essay summarizer on our list, and it can be helpful during your time in college or university. It is free, open software that everyone can use.

The tool has only two buttons—one to summarize the document and the other to clear the field. With this software, you’ll get a brief summary based on your text. You don’t have to register there to get your document shortened.

As for the website’s drawbacks, it has too many ads and offers no options to summarize a URL or document, set the length of the result, or export it to popular file types.

These were the best online summarizing tools to deal with the task effectively. We hope some of them became your favorite summarizers, and you’ll use them often in the future.

Not sure if a summarizer will work for your paper? Check out this short tutorial on how the text summarizing tool can come in handy for essay writing.

🤔 Techniques & Tools to Summarize without Plagiarizing

Of course, there are times when you can’t depend on online tools. For example, you may not be allowed to use them in a class, or you may need to highlight specific paragraphs, and customizing the tool’s settings would take more time and effort than writing the summary yourself.

In this chapter, you’ll learn to summarize a long article, essay, research paper, report, or book chapter with the help of practical tips, a logical approach, and a little bit of creativity.

Here are some methods to let you create a fantastic summary.

- Know your goal. To choose the right route to your goal, you need to understand it perfectly. Why should you summarize the text? What is its style: scientific or journalistic? Who is the author? Where was the article published? Many such questions can help you adapt your text better. Prepare a short list of questions to answer while writing the summary. Include all the important information on where you need to post the text and for what purpose.

- Thorough reading. To organize your thoughts about the text, it’s important to study it in detail. Read the text two or more times to grasp the basic ideas of the article and understand its goals and motives. Give yourself all the time you need to process the text. It often takes a couple of hours to extract the right results from a study or to learn to paraphrase the text properly.

- Highlight the main idea. When writing a summary, you bear a responsibility to the author. You have to not only extract the main idea of the text but also paraphrase it correctly. It’s important not to misrepresent any of the author’s conclusions in your summary. That’s why you should find the main idea and make sure you can paraphrase it without a loss of meaning. If possible, read a couple of professional reviews of the book chapter or article in question. This can help you analyze the text better.

- Mark the arguments. The process of summarizing is always easier if you have a marker to highlight important details in the text. If you don’t have a printed text, you can use the highlight tool in Microsoft Word. Try to mark all arguments, statistics, and facts in the text to represent them in your summary. This information will turn into key elements of the summary you’ll create, so pay attention to exactly what you highlight.

- Take care of plagiarism. Before you start writing, find out what percentage of originality you should aim for. Different projects have different requirements, and they determine how much effort you should put into writing to get a summary your teacher will like. Depending on the required percentage of originality, build a plan for your short text. To save time, reuse only as much of the original wording as the requirements allow.

- Build a structure. With the help of the key elements you’ve highlighted in the text, it’s possible to create a powerful structure that includes all the interesting facts and arguments. Develop an outline according to a basic structure: introduction, body, and conclusion. Even if your summary is extremely short, the main idea should appear in both the first and last sentences.

- Write a draft. If you’re not a professional writer, it can be extremely difficult to produce a text with the correct word count on the first try. We advise you to write a general draft first: include all the information without controlling the number of sentences.

- Cut out the unnecessary parts. At this step, you should edit the draft and eliminate the unnecessary parts. Keep in mind the number of sentences your summary must contain. Make sure the main point is fully represented in the text. You can cut out any sentence except those that conclude the significant arguments.

- Wordiness – you should delete unnecessary words, which make it difficult to understand the text

- Common mistakes – mistakes made in academic papers are basically the same, so it’s helpful to have an article like this one when you’re proofreading

- Appropriate terminology – for each topic, there’s a list of the terminology you can use

- Facts and statistics – you can accidentally write a wrong year or percentage, so make sure to avoid these mistakes

- Quotes – every quote should be written correctly and have a link to its source.

📝 How to Proofread the Summarized Text?

Now that you know how to summarize an article, it’s time to edit your text, whether it’s your own writing or a summary generator’s results.

In this chapter, you’ll see the basic ways to proofread any type of text: academic paper (essay, research paper, etc.), article, letter, book chapter, and so on.

- Proofread your summary. Are there times when you can’t remember an appropriate synonym? Then you should use Thesaurus and similar services from time to time. They can expand your vocabulary a lot and help you find the right words even in the most challenging situations.

- Pay attention to easily confused words. This is especially important if you edit a nonfiction text: there are a number of words people often confuse without even realizing it. English Oxford Living Dictionaries has a list of these word pairs so you won’t miss any.

- Proofread one type of mistake at a time. To edit a paper properly, don’t split your attention between grammar and punctuation, because this way you can miss dozens of mistakes. To get more accurate results, read the first time to edit the style, the second to eliminate grammar mistakes, and the third to proofread punctuation. Make as many passes as you need to concentrate on each type.

- Take a rest from your paper. If you use an online summarizing tool, you can skip this step. But if you’ve been writing a paper for several hours and are now trying to edit it without taking a break, it may be a bad idea. Why? Because without a fresh pair of eyes, you are likely to miss even obvious mistakes. Give yourself some time to slightly forget the text: go for a walk or call a friend, and then return to work with a fresh mind.

- Hire a proofreader. If you need perfect results, think about hiring a professional. Their skills and qualifications help ensure a text without mistakes or style issues. Once you find a proofreader, you can streamline your work. Search for specialists on freelance websites like UpWork, which is convenient and safe to use. Of course, there’s one drawback to consider: hiring a pro is expensive. So, everyone should decide on their own whether they need to spend this money or not.

- Switch your paper with a friend. If you can’t afford a professional editor, there’s a less expensive option: ask a friend to look through your paper and proofread theirs in return. Make sure you both edit manually, not just check the text with Microsoft Office or similar software. Although there are great grammar tools, they still can’t spot many mistakes that are obvious to a human.

- Use grammar checking tools. We recommend that you not depend solely on grammar tools, but the assistance they offer is valuable. Start your proofreading by scanning your text with Grammarly or a similar tool. The service detects many types of errors, including commonly confused word pairs, punctuation, misspellings, wordiness, incorrect word order, unfinished sentences, and so on. Of course, you should never accept a correction without thinking about the specific issue. Tools not only miss a lot of mistakes but can also be wrong about your errors.

- Read aloud. It’s amazing how different a written text can sound when read aloud. If you practice this proofreading method, you know that many mistakes can be spotted when you actually pronounce the text. Why does this happen? People understand information better if they perceive it with the help of different senses. You can use this trick even in learning: memorize the materials with the help of reading, listening, and speaking.

These tips are developed to help students proofread their papers easily. We hope this chapter and the post itself create a helpful guide on how to summarize an article.

Here you found the best summarizing tools, which are accessible online and completely free, and learned to summarize various texts and articles on your own.

Updated: Dec 19th, 2023



This page is for anyone interested in creating a summary for an essay or any other written work. It lists the best online summarizing tools and gives advice on how to summarize an article well. Finally, you'll find tips on how to properly proofread your summary.


Go to the main ideas in your texts, summarize them « relevantly » in 1 Click


Identify the important ideas and facts

To help you summarize and analyze your argumentative texts, articles, scientific texts, history texts, and well-structured analyses of works of art, Resoomer provides a "summary text tool": an educational tool that identifies and summarizes the important ideas and facts in your documents. Summarize in one click, go to the main idea, or skim through so that you can interpret your texts quickly and develop your syntheses.

Who is Resoomer for?

College students.

With Resoomer, summarize your Wikipedia pages in a matter of seconds for your productivity.

Identify the most important ideas and arguments of your texts so that you can prepare your lessons.

JOURNALISTS

If you prefer simplified information that summarizes the major events, then Resoomer is for you!

Identify and understand very fast the facts and the ideas of your texts that are part of the current news and events.

PRESS RELEASES

With the help of Resoomer, go to the main idea of your articles to write your arguments and critiques.

Save time, summarize your digital documents for a relevant and fast uptake of information.

Need to summarize your books' presentations? Identify the arguments in a matter of seconds.

Too many documents? Simplify your readings with Resoomer like a desktop tool.

Need to summarize your chapters? With Resoomer, go to the heart of your ideas.

Identify your books' or your authors' ideas quickly. Summarize the most important main points.

From now on, create quick summaries of your artists' presentations and their artworks.

INSTITUTIONS

Identify the most important passages in texts that contain a lot of words for detailed analyses.


SUMMARIZE YOUR ONLINE ARTICLES IN 1-CLICK

Download the extension for your browser

Surf online and save time when reading on the internet! Resoomer summarizes your articles in 500 words so that you can go to the main idea of your text.


10 Powerful AI Tools for Academic Research

- Serra Ardem

AI is no longer science fiction, but a powerful ally in the academic realm. With AI by their side, researchers can free themselves from the burden of tedious tasks, and push the boundaries of knowledge. However, they must use AI carefully and ethically, as these practices introduce new considerations regarding data integrity, bias mitigation, and the preservation of academic rigor.

In this blog, we will:

- Highlight the increasing role of AI in academic research

- List 10 best AI tools for academic research, with a focus on each one’s strengths

- Share 5 best practices on how to use AI tools for academic research

Let’s dig in…

The Role of AI in Academic Research

AI tools for academic research hold immense potential, as they can analyze massive datasets and identify complex patterns. These tools can assist in generating new research questions and hypotheses, navigate mountains of academic literature to find relevant information, and automate tedious tasks like data entry.

Let’s take a look at the benefits AI tools offer for academic research:

- Supercharged literature reviews: AI can sift through vast amounts of academic literature, and pinpoint relevant studies with far greater speed and accuracy than manual searches.

- Accelerated data analysis: AI tools can rapidly analyze large datasets and uncover intricate insights that might otherwise be overlooked, or time-consuming to identify manually.

- Enhanced research quality: Helping with grammar checking, citation formatting, and data visualization, AI tools can lead to a more polished and impactful final product.

- Automation of repetitive tasks: By automating routine tasks, AI can save researchers time and effort, allowing them to focus on more intellectually demanding tasks of their research.

- Predictive modeling and forecasting: AI algorithms can develop predictive models and forecasts, aiding researchers in making informed decisions and projections in various fields.

- Cross-disciplinary collaboration: AI fosters collaboration between researchers from different disciplines by facilitating communication through shared data analysis and interpretation.

Now let’s move on to our list of 10 powerful AI tools for academic research, which you can refer to for a streamlined, refined workflow. From formulating research questions to organizing findings, these tools can offer solutions for every step of your research.

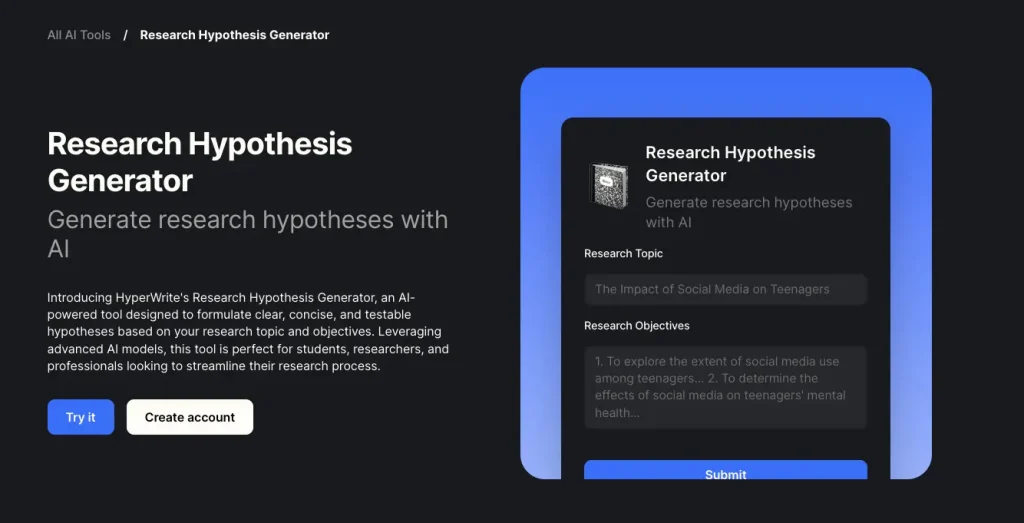

1. HyperWrite

For: hypothesis generation

HyperWrite’s Research Hypothesis Generator is perfect for students and academic researchers who want to formulate clear and concise hypotheses. All you have to do is enter your research topic and objectives into the provided fields, and then the tool will let its AI generate a testable hypothesis. You can review the generated hypothesis, make any necessary edits, and use it to guide your research process.

Pricing: You can have a limited free trial, but need to choose at least the Premium Plan for additional access. See more on pricing here .

2. Semantic Scholar

For: literature review and management

With over 200 million academic papers sourced, Semantic Scholar is one of the best AI tools for literature review. Mainly, it helps researchers to understand a paper at a glance. You can scan papers faster with the TLDRs (Too Long; Didn’t Read), or generate your own questions about the paper for the AI to answer. You can also organize papers in your own library, and get AI-powered paper recommendations for further research.

Pricing: free

3. Elicit

For: summarizing papers

Elicit can be a big time-saver: its users reportedly save up to 5 hours per week. With a database of 125 million papers, the tool will enable you to get one-sentence, abstract AI summaries, and extract details from a paper into an organized table. You can also find common themes and concepts across many papers. Keep in mind that Elicit works best with empirical domains that involve experiments and concrete results, like biomedicine and machine learning.

Pricing: Free plan offers 5,000 credits one time. See more on pricing here .

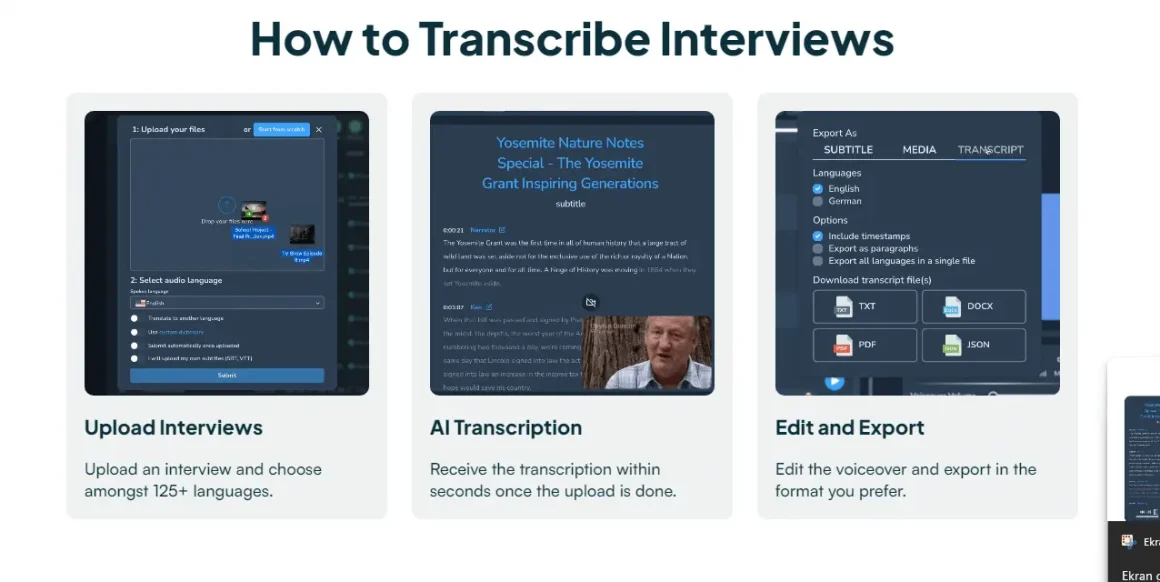

4. Maestra

For: transcribing interviews

Supporting 125+ languages, Maestra’s interview transcription software will save you from the tedious task of manual transcription so you can dedicate more time to analyzing and interpreting your research data. Just upload your audio or video file to the tool, select the audio language, and click “Submit”. Maestra will convert your interview into text instantly, and with very high accuracy. You can always use the tool’s built-in text editor to make changes, and Maestra Teams to collaborate with fellow researchers on the transcript.

Pricing: With the “Pay As You Go” plan, you can pay for the amount of work done. See more on pricing here .

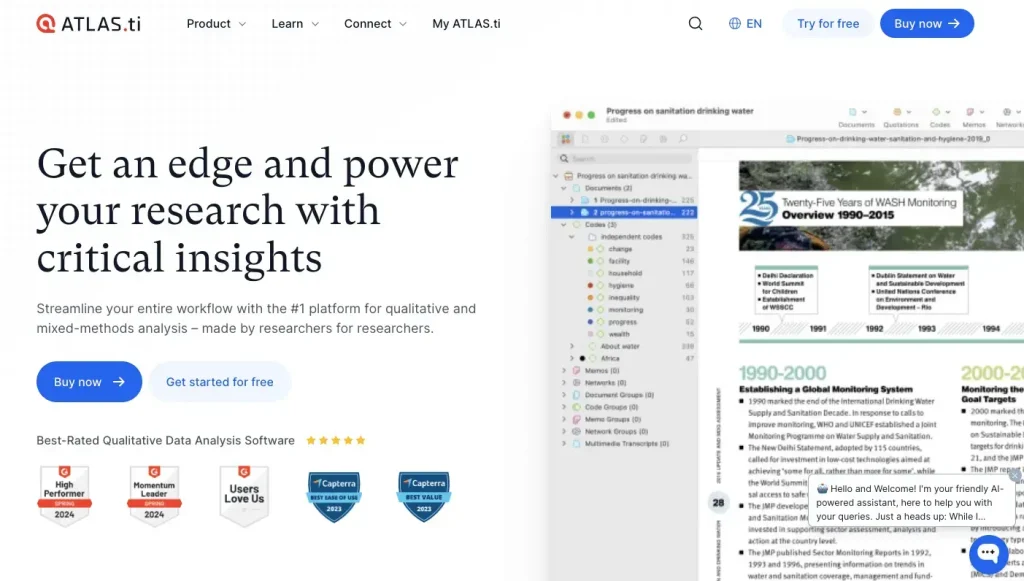

5. ATLAS.ti

For: qualitative data analysis

Whether you’re working with interview transcripts, focus group discussions, or open-ended surveys, ATLAS.ti provides a set of tools to help you extract meaningful insights from your data. You can analyze texts to uncover hidden patterns embedded in responses, or create a visualization of terms that appear most often in your research. Plus, features like sentiment analysis can identify emotional undercurrents within your data.

Pricing: Offers a variety of licenses for different purposes. See more on pricing here .

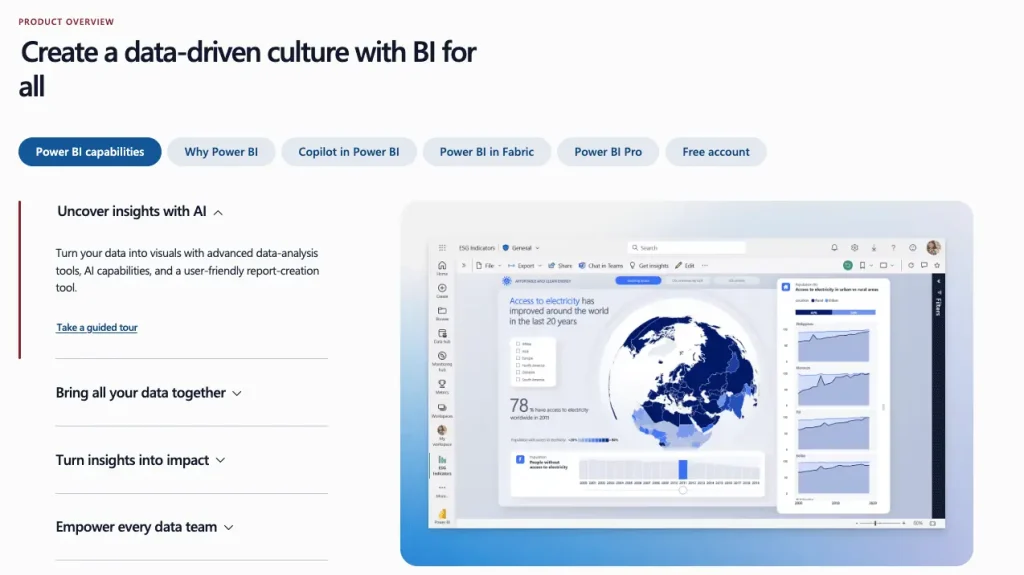

6. Power BI

For: quantitative data analysis

Microsoft’s Power BI offers AI Insights to consolidate data from various sources, analyze trends, and create interactive dashboards. One feature is “Natural Language Query”, where you can directly type your question and get quick insights about your data. Two other important features are “Anomaly Detection”, which can detect unexpected patterns, and “Decomposition Tree”, which can be utilized for root cause analysis.

Pricing: Included in a free account for Microsoft Fabric Preview. See more on pricing here .

7. Paperpal

For: writing research papers

As a popular AI writing assistant for academic papers, Paperpal is trained and built on 20+ years of scholarly knowledge. You can generate outlines, titles, abstracts, and keywords to kickstart your writing and structure your research effectively. With its ability to understand academic context, the tool can also come up with subject-specific language suggestions, and trim your paper to meet journal limits.

Pricing: Free plan offers 5 uses of AI features per day. See more on pricing here .

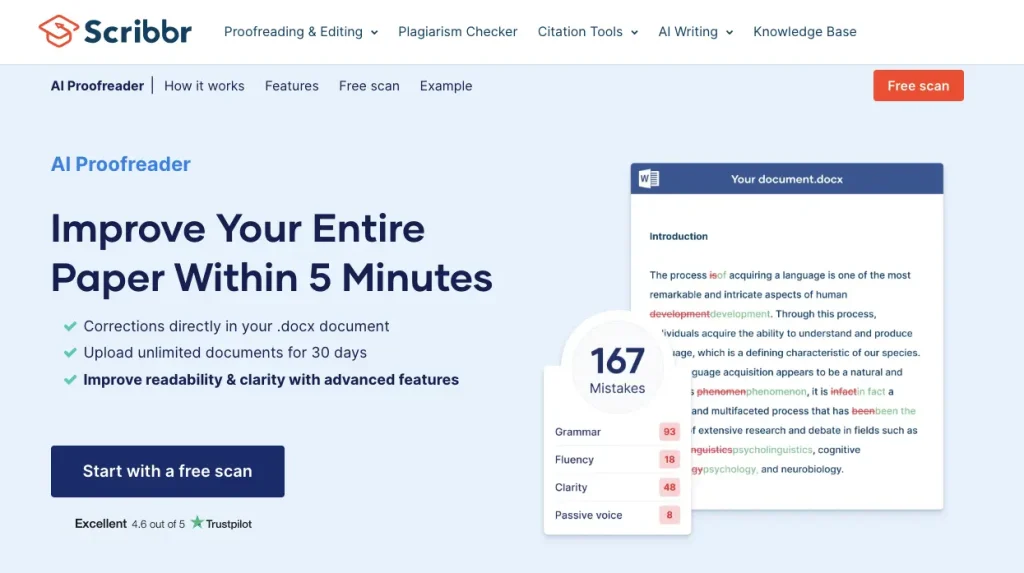

8. Scribbr

For: proofreading

With Scribbr’s AI Proofreader by your side, you can make your academic writing clearer and easier to read. The tool will first scan your document to catch mistakes. Then it will fix grammatical, spelling and punctuation errors while also suggesting fluency corrections. It is really easy to use (you can apply or reject corrections with one click), and works directly in a DOCX file.

Pricing: The free version gives a report of your issues but does not correct them. See more on pricing here .

9. Quillbot

For: detecting AI-generated content

Want to make sure your research paper does not include AI-generated content? Quillbot’s AI Detector can identify certain indicators like repetitive words, awkward phrases, and an unnatural flow. It’ll then show a percentage representing the amount of AI-generated content within your text. The tool has a very user-friendly interface, and you can have an unlimited number of checks.

10. Lateral

For: organizing documents

Lateral will help you keep everything in one place and easily find what you’re looking for.

With auto-generated tables, you can keep track of all your findings and never lose a reference. Plus, Lateral uses its own machine learning technology (LIP API) to make content suggestions. With its “AI-Powered Concepts” feature, you can name a Concept, and the tool will recommend relevant text across all your papers.

Pricing: Free version offers 500 Page Credits one-time. See more on pricing here .

How to Use AI Tools for Research: 5 Best Practices

Before we conclude our blog, we want to list 5 best practices to adopt when using AI tools for academic research. They will ensure you’re getting the most out of AI technology in your academic pursuits while maintaining ethical standards in your work.

- Always remember that AI is an enhancer, not a replacement. While it can excel at tasks like literature review and data analysis, it cannot replicate the critical thinking and creativity that define strong research. Researchers should leverage AI for repetitive tasks, but dedicate their own expertise to interpret results and draw conclusions.

- Verify results. Don’t take AI for granted. Yes, it can be incredibly efficient, but results still require validation to prevent misleading or inaccurate results. Review them thoroughly to ensure they align with your research goals and existing knowledge in the field.

- Guard yourself against bias. AI tools for academic research are trained on existing data, which can contain social biases. You must critically evaluate the underlying assumptions used by the AI model, and ask if they are valid or relevant to your research question. You can also minimize bias by incorporating data from various sources that represent diverse perspectives and demographics.

- Embrace open science. Sharing your AI workflow and findings can inspire others, leading to innovative applications of AI tools. Open science also promotes responsible AI development in research, as it fosters transparency and collaboration among scholars.

- Stay informed about the developments in the field. AI tools for academic research are constantly evolving, and your work can benefit from the recent advancements. You can follow numerous blogs and newsletters in the area (The Rundown AI is a great one), join online communities, or participate in workshops and training programs. Moreover, you can connect with AI researchers whose work aligns with your research interests.

Frequently Asked Questions

Is ChatGPT good for academic research?

ChatGPT can be a valuable tool for supporting your academic research, but it has limitations. You can use it for brainstorming and idea generation, identifying relevant resources, or drafting text. However, ChatGPT can’t guarantee the information it provides is entirely accurate or unbiased. In short, you can use it as a starting point, but never rely solely on its output.

Can I use AI for my thesis?

Yes, but it shouldn’t replace your own work. It can help you identify research gaps, formulate a strong thesis statement, and synthesize existing knowledge to support your argument. You can always reach out to your advisor and discuss how you plan to use AI tools for academic research.

Can AI write review articles?

AI can analyze vast amounts of information and summarize research papers much faster than humans, which can be a big time-saver in the literature review stage. Yet it can struggle with critical thinking and adding its own analysis to the review. Plus, AI-generated text can lack the originality and unique voice that a human writer brings to a review.

Can professors detect AI writing?

Yes, they can detect AI writing in several ways. Software programs like Turnitin’s AI Writing Detection can analyze text for signs of AI generation. Furthermore, experienced professors who have read many student papers can often develop a gut feeling about whether a paper was written by a human or machine. However, highly sophisticated AI may be harder to detect than more basic versions.

Can I do a PhD in artificial intelligence?

Yes, you can pursue a PhD in artificial intelligence or a related field such as computer science, machine learning, or data science. Many universities worldwide offer programs where you can delve deep into specific areas like natural language processing, computer vision, and AI ethics. Overall, pursuing a PhD in AI can lead to exciting opportunities in academia, industry research labs, and tech companies.

This blog shared 10 powerful AI tools for academic research, and highlighted each tool’s specific function and strengths. It also explained the increasing role of AI in academia, and listed 5 best practices on how to adopt AI research tools ethically.

AI tools hold potential for even greater integration and impact on research. They are likely to become more interconnected, which can lead to groundbreaking discoveries at the intersection of seemingly disparate fields. Yet, as AI becomes more powerful, ethical concerns like bias and fairness will need to be addressed. In short, AI tools for academic research should be utilized carefully, with a keen awareness of their capabilities and limitations.

About Serra Ardem

Serra Ardem is a freelance writer and editor based in Istanbul. For the last 8 years, she has been collaborating with brands and businesses to tell their unique story and develop their verbal identity.

- Methodology

- Open access

- Published: 20 May 2024

Fuzzy cognitive mapping in participatory research and decision making: a practice review

- Iván Sarmiento 1,2
- Anne Cockcroft 1
- Anna Dion 1
- Loubna Belaid 1
- Hilah Silver 1
- Katherine Pizarro 1
- Juan Pimentel 1,3
- Elyse Tratt 4
- Lashanda Skerritt 1
- Mona Z. Ghadirian 1
- Marie-Catherine Gagnon-Dufresne 1,5
- Neil Andersson 1,6

Archives of Public Health volume 82, Article number: 76 (2024)


Fuzzy cognitive mapping (FCM) is a graphic technique to describe causal understanding in a wide range of applications. This practice review summarises the experience of a group of participatory research specialists and trainees who used FCM to include stakeholder views in addressing health challenges. From a meeting of the research group, this practice review reports 25 experiences with FCM in nine countries between 2016 and 2023.

The methods, challenges and adjustments focus on participatory research practice. FCM portrayed multiple sources of knowledge: stakeholder knowledge, systematic reviews of literature, and survey data. Methodological advances included techniques to contrast and combine maps from different sources using Bayesian procedures, protocols to enhance the quality of data collection, and tools to facilitate analysis. Summary graphs communicating FCM findings sacrificed detail but facilitated stakeholder discussion of the most important relationships. We used maps not as predictive models but to surface and share perspectives of how change could happen and to inform dialogue. Analysis included simple manual techniques and sophisticated computer-based solutions. A wide range of experience in initiating, drawing, analysing, and communicating the maps illustrates FCM flexibility for different contexts and skill bases.

Conclusions

A strong core procedure can contribute to more robust applications of the technique while adapting FCM for different research settings. Decision-making often involves choices between plausible interventions in a context of uncertainty and multiple possible answers to the same question. FCM offers systematic and traceable ways to document, contrast and sometimes to combine perspectives, incorporating stakeholder experience and causal models to inform decision-making. Different depths of FCM analysis open opportunities for applying the technique in skill-limited settings.


Collaborative generation of knowledge recognises people’s right to be involved in decisions that shape their lives [ 1 ]. Their participation makes research and interventions more relevant to local context and priorities and, thus, more likely to be effective [ 2 ]. A commitment to the co-creation of knowledge proposes that people make better decisions when they have the benefit of both scientific and other forms of knowledge. These include context-specific understanding, knowledge claims based on local settings, experience and practice, and organisational know-how [ 3 ]. Participatory research expands the idea of what counts as evidence, opening space for the experience and knowledge of stakeholders [ 4 , 5 ]. The challenge is how to create a level playing field where diverse knowledges can contribute equally. We present fuzzy cognitive mapping (FCM) as a rigorous and transparent tool to combine different perspectives into composite theories to guide shared decision-making [ 6 , 7 , 8 ].

In the early 1980s, the combination of fuzzy logic [ 9 ] with concept mapping of decision making [ 10 , 11 ] led to FCM [ 12 ]. Fuzzy cognitive maps are directed graphs [ 13 ] where nodes correspond to factors or concepts, and arrows describe directed influences. Using this basic structure for causal relationships, users can represent their knowledge of complex systems, including many interacting concepts. Many variables are not easily measured or estimated with precision or are hard to circumscribe within a formal definition, for example, wellbeing, cultural safety, or racism [ 14 , 15 ]. Nevertheless, their causes and effects are important to capture for decision-making. Fuzzy cognitive maps offer a formal structure to include these kinds of variables in the analysis of complex health issues.

The flexibility of the technique allows for systematic mapping of knowledge from multiple sources to identify influences on a particular outcome while supporting collective learning and decision making [ 16 ]. FCM has been used across multiple fields with applications that include modelling, prediction, monitoring, decision-making, and management [ 17 , 18 , 19 , 20 ]. FCM has been applied in medicine to aid diagnosis and treatment decision-making [ 21 , 22 ]. FCM has also supported community and stakeholder engagement in environmental sciences [ 23 , 24 ] and health by examining conventional and Indigenous understanding of causes of diabetes [ 25 ].

Many implementation details contribute to interpretability of FCM, a common concern for researchers new to the technique. This review addresses these practical details when we used FCM to include local stakeholder understanding of causes of health issues in co-design of actions to tackle those issues. The focus is on transparent mapping of stakeholder experience and how it meets requirements for trustworthy data collection and initial analysis. The methods section describes what fuzzy cognitive maps are and how we documented our experience using them. We describe tools and procedures for researchers using FCM to incorporate different knowledges in health research. The results summarize experience in four stages of mapping: framing the outcome of concern, drawing the maps, performing basic analyses, and using the resulting maps. The discussion contrasts our practices with those described in the literature, identifying potential limitations and suggesting future directions.

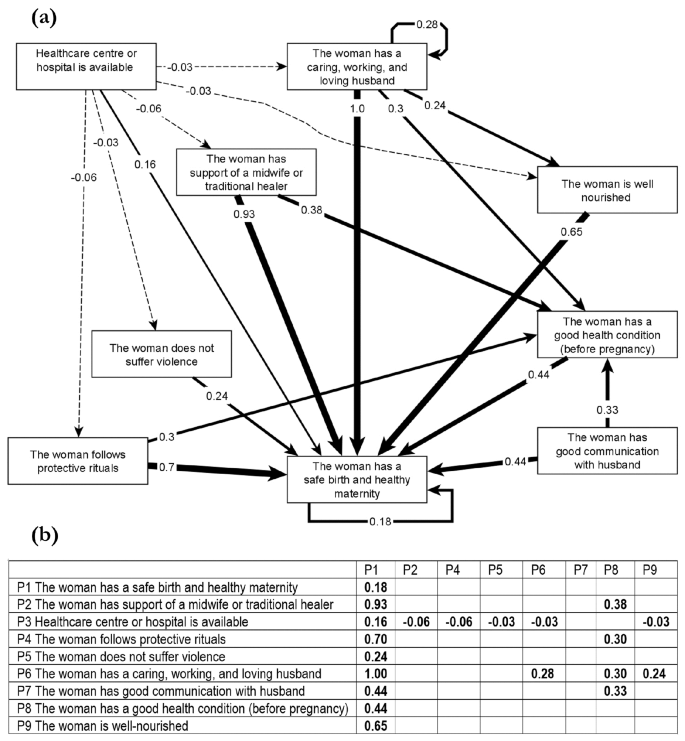

Methods of the practice review

Fuzzy cognitive maps are graphs of causal understanding [ 6 ]. The unit of meaning in fuzzy cognitive mapping is a relationship, which corresponds to two nodes (concepts) linked by an arrow. Arrows originate in the causes and point to their outcomes. A cause can lead to an outcome directly or through multiple pathways (succession of arrows). Figure 1 shows a fuzzy cognitive map of causes of healthy maternity according to indigenous traditional midwives in the South of Mexico [ 26 ].

Fig. 1. Fuzzy cognitive map of causes of a healthy maternity according to indigenous traditional midwives in Guerrero, Mexico. (a) Graphical display of a fuzzy cognitive map. The boxes are nodes, and the arrows are directed edges. Strong lines indicate positive influences, and dashed lines indicate negative influences. Thicker lines correspond to stronger effects. (b) Adjacency matrix with the same content as the map. Rows and columns correspond to the nodes. The value in each cell indicates the strength of the influence of one node (row) on another (column). Reproduced without changes with permission from the authors of [ 26 ]

The “fuzzy” appellation refers to weights that indicate the strength of relationships between concepts. For example, a numeric scale with values between one and five might correspond to very low, low, medium, high or very high influence. If the value is 0, there is no causal relationship, and the concepts are independent. Negative weights indicate a causal decrease in the outcome, and positive weights indicate a causal increase in the outcome. A tabular display of the map, an adjacency matrix, has the concepts in columns and rows. The value in a cell indicates the weight of the influence of the row concept on the column concept (Fig. 1). A map can also be represented as an edge list. This shows relationships across three columns: causes (originating node), outcomes (landing node) and weights. Some maps use ranges of variability for the weights (grey fuzzy cognitive maps) [ 27 ] or fuzzy scales to indicate changing states of factors [ 21 ].
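The two representations described above can be illustrated with a minimal Python sketch. It is not the tooling used by the group: the concept names, the `SCALE` coding of the five-point labels, and the helper names `to_adjacency` and `to_edge_list` are assumptions made only for illustration.

```python
# Assumed coding of a five-point linguistic scale (illustrative only).
SCALE = {"very low": 1, "low": 2, "medium": 3, "high": 4, "very high": 5}

# Hypothetical edge list: (cause, outcome, strength label, sign of influence).
EDGES = [
    ("quality of health care", "healthy maternity", "very high", +1),
    ("traditional midwife support", "healthy maternity", "high", +1),
    ("violence", "healthy maternity", "medium", -1),
    ("violence", "quality of health care", "low", -1),
]


def to_adjacency(edges):
    """Build an adjacency matrix: rows are causes, columns are outcomes."""
    concepts = sorted({c for cause, outcome, _, _ in edges for c in (cause, outcome)})
    index = {name: i for i, name in enumerate(concepts)}
    # A weight of 0 means no causal relationship between the two concepts.
    matrix = [[0] * len(concepts) for _ in concepts]
    for cause, outcome, label, sign in edges:
        matrix[index[cause]][index[outcome]] = sign * SCALE[label]
    return concepts, matrix


def to_edge_list(concepts, matrix):
    """Recover the three-column edge list (cause, outcome, weight)."""
    return [
        (concepts[i], concepts[j], weight)
        for i, row in enumerate(matrix)
        for j, weight in enumerate(row)
        if weight != 0
    ]


concepts, matrix = to_adjacency(EDGES)
edge_list = to_edge_list(concepts, matrix)
```

Both forms carry the same information; the edge list is usually easier to type from a photographed map, while the matrix makes it easy to see at a glance which pairs of concepts have no relationship.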

Following rules of logical inference, the relationships between concepts can suggest potential explanations for how they work together to influence a specific outcome [ 28 , 29 ]. One might interpret a cognitive map as a series of if-then rules [ 9 ] describing causal relationships between concepts [ 12 ]. For example, if the quality of health care increases, then the population’s health should also improve. Maps can incorporate feedback loops [ 30 ], such as: if violence increases, then more violence happens.
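As a rough illustration of reading a map as if-then rules and of spotting feedback loops, the following Python sketch works on a small edge list. The weights, the edge from population health back to quality of health care, and the function names `as_rules` and `feedback_loops` are hypothetical and are not taken from the reviewed cases.

```python
# Hypothetical weighted edge list: (cause, outcome, weight).
EDGES = [
    ("quality of health care", "population health", 4),
    ("violence", "violence", 3),            # self-reinforcing loop from the text
    ("violence", "population health", -2),  # assumed for illustration
    ("population health", "quality of health care", 1),  # assumed for illustration
]


def as_rules(edges):
    """Render each non-zero edge as an if-then statement."""
    rules = []
    for cause, outcome, weight in edges:
        if weight == 0:
            continue  # weight 0 means the concepts are independent
        direction = "increases" if weight > 0 else "decreases"
        rules.append(f"If {cause} increases, then {outcome} {direction}.")
    return rules


def feedback_loops(edges):
    """Return each directed cycle once, as a list of concepts."""
    graph = {}
    for cause, outcome, weight in edges:
        if weight != 0:
            graph.setdefault(cause, []).append(outcome)

    loops = []

    def walk(start, node, path):
        for nxt in graph.get(node, []):
            if nxt == start:
                loops.append(path[:])
            # Only visit concepts that sort after the start, so that each
            # cycle is reported once, from its alphabetically first concept.
            elif nxt not in path and nxt > start:
                walk(start, nxt, path + [nxt])

    for start in sorted(graph):
        walk(start, start, [start])
    return loops


rules = as_rules(EDGES)
loops = feedback_loops(EDGES)
```

The exhaustive search is adequate for maps with a few dozen concepts; for larger maps a graph library would be a more practical choice.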

An international participatory research group met in Montreal, Canada, to share FCM experience and discuss its application. FCM implementation in all cases shared a common ten-step protocol [ 6 ], with results of almost all exercises published in peer-reviewed journals. The lead author of each publication presented their work and corroborated that the synthesis reflected the most important aspects of their experiences. A webpage details the methods, materials, and tools members of the group have used in practice ( https://ciet.org/fcm ).

As a multilevel training exercise, the meeting included graduate students, emerging researchers with their first research projects and experienced FCM researchers. Nine researchers presented their experience, challenges and lessons learned. The senior co-author (NA) led a four-round nominal group discussion covering consecutive mapping stages: (1) who defined the research issue and how, (2) procedures for building maps and the role of participants at each point, (3) analysis tools and methods and (4) use of the maps. Before the session, participants received the published papers concerning the FCM projects under discussion and the guiding questions about the four themes. After the meeting, the first author (IS) transcribed the session recording and drew on it to draft the manuscript. All authors subsequently contributed to the manuscript, which follows the approach used to describe our work with narrative evaluations [ 31 ]. The summary of FCM methods used, which constitutes the results of the practice review, follows the categories used in the nominal group to inquire about FCM implementation.

Researchers reported their practice in three different FCM applications. Most cases mapped stakeholder knowledge in the context of participatory research [ 26 , 32 , 33 , 34 , 35 , 36 , 37 , 38 , 39 ]. They also described using FCM to contextualise mixed-methods literature reviews in stakeholder perspectives [ 5 , 40 , 41 , 42 ] and to conduct secondary quantitative analysis of surveys [ 43 , 44 , 45 ]. A fourth FCM application, not discussed in detail in this paper, is in graduate teaching. A master’s program in Colombia and a PhD course in Canada incorporated the creation of cognitive maps as a learning tool, with each student building a map to describe how their research project could contribute to promoting change.

Table 1 summarises the characteristics of the 25 FCM practices reviewed. The number of maps varied from a handful to dozens. Table 2 summarises the processes of defining the issue, drawing, analysing, and using the three different kinds of maps: stakeholder knowledge, mixed-methods literature reviews, and questionnaire data. Table 3 summarises the FCM processes in each of the four mapping stages. Of 23 FCM publications from the group since 2017 (see Additional File 1 ), four describe methodological contributions [ 4 , 5 , 6 , 35 ], and the rest describe the use of FCM in specific contexts.

Stage 1. Who defined the issue and how

Focus group discussions or conversations with partners were the most common methods for defining the issue to be mapped. Cases #6 (pregnant and parenting adolescents) and #20 (women’s satisfaction with HIV care) used literature maps to identify priorities with participants in Canada, while cases #5 (immigrants’ unmet postpartum care needs) and #7 (child protection involvement) contextualised literature-based maps with stakeholder knowledge. In cases #15 and #16 on violence against women and suicide among men in Botswana, community members involved in another project raised these issues as concerns. Two cases used FCM in the secondary analysis of survey data to answer questions defined by the research teams (#1 Mexico dengue) and academic groups (#2 Colombia medical education).

All cases used a participatory research framework [ 46 ]. FCM worked both in well-established partnerships (#8 and #9 with Indigenous communities in Mexico, and #20 with women living with HIV) and in the early stages of trust building (#6 adolescent parents in Canada).

Almost all cases reported two levels of ethical review: institutional boards linked with universities and local entities (health ministries and authorities, advisory boards, community organisations or leaders). Most review boards were unfamiliar with FCM, and some requested additional descriptions and protocols to help them understand the method. In Guatemala (#17) and Nunavik (#18), Indigenous authorities and a steering committee requested a mapping session themselves before approving the project. Most projects used oral consent, mainly due to the involvement of participants with a wide range of literacy levels and in contexts of mistrust about potential misuse of signed documents (Indigenous groups in #8) or during virtual mapping sessions (women living with HIV in #20).

Strengths-based or problem-focused

Most cases followed a strengths-based approach, focusing on what influences a positive outcome (for example, what causes good maternal health instead of what causes maternal morbidity or mortality). Some cases created two maps: one about causes of a positive outcome and one about causes of the corresponding negative outcome (#8 causes and risks for safe birth in Indigenous communities, and #10 causes and protectors of short birth interval). Building two maps helped to unearth additional actionable concepts but was time-consuming and tiring for the stakeholders creating the maps.

Broad concepts or tight questions

A recurring issue was how broad the question or focus should be. A broad question about ‘what influences wellbeing’ fitted well with the holistic perspectives of Mayan communities but posed challenges for drawing, analysing, and communicating maps with many concepts and interactions (#17, Guatemala). A very narrowly defined outcome, on the other hand, might miss potentially actionable causes.

Stage 2. Drawing maps

In the group’s experience, most people readily understand how to make maps, given their basic structure (cause, arrow and consequence). Based on their collective experience, the research group developed a protocol to increase replicability and data quality in FCM, particularly for stakeholder maps, which often involve multiple facilitators and different languages. Creating maps from literature reviews and questionnaire data did not have some of the complications of creating maps with stakeholders but also benefitted from detailed protocols.

Stakeholder maps

The mapping cases reviewed here included mappers ranging from highly trained university researchers (#9 on safe birth) to people without formal schooling and speaking only their local language (#8 in Mexico, #10 and #21 in Nigeria, #11 and #12 in Uganda). Meeting participants discussed the advantages and disadvantages of group and individual maps. Groups stimulate the emergence of ideas but pose the challenge of ensuring all participants are heard. Careful training of facilitators and managing the mapping sessions as nominal groups helped to increase the participation of quieter people. Groups of not more than five mappers were much easier to facilitate without losing the creative turbulence of a group. Most cases relied on small homogeneous groups, run separately by age and gender, to avoid power imbalances among the map authors. Individual sessions worked well for sensitive topics. They accommodated schedules of busy participants and worked for mappers not linked to a specific community.

Basic equipment for mapping is inexpensive and almost universally available. Most researchers in our group used either sticky notes on a large sheet of paper or magnetic tiles on a metal whiteboard (Fig. 2). Some researchers worked directly with free software to draw the electronic maps ( www.mentalmodeler.com or www.yworks.com/products/yed ), while others digitised the physical maps, often from a photograph. Three cases conducted FCM over the internet or telephone, with individual mappers (#9, #20 and #25) constructing their maps online in real time.

Fig. 2. Fuzzy cognitive maps from group sessions in Uganda and Nigeria. (a) A group of women in Uganda discusses what contributes to increasing institutional childbirths in rural communities. They used sticky notes and markers on white paper to draw the maps. (b) A group of men in Northern Nigeria uses a whiteboard and magnetic tiles to draw a map on causes of short birth intervals.

Group mapping sessions typically had a facilitator and a reporter to take notes on the discussions. Reporters are crucial in recording explanations about the meaning of concepts and links. Experienced researchers stressed that careful training of facilitators and reporters, including several rounds of field practice, is essential to ensure quality. We developed materials to support training and quality control of mapping sessions (#21 Nigeria), available at www.ciet.org/fcm . In Nigeria (#21), the research team successfully field-tested Zoom on mobile handsets connected to the internet through the cellular network, allowing international researchers to participate virtually in FCM sessions in the classroom and in communities.

Many mappers in community groups had limited or no schooling and only verbal use of their local language. It worked well in these cases for the facilitators to write the concepts on the labels in English or Spanish, while the discussion was in the local language. Facilitators frequently reminded the groups about the labels of the concepts in the local language. In case #16 in Botswana, more literate groups wrote the concepts in Setswana, and the facilitators later translated them into English. Most researchers found that the FCM graphical format helped to overcome language barriers, and it seems to have worked equally well with literate and illiterate groups. Additional file 2 lists common pitfalls and potential solutions during group mapping sessions.

Identifying causes of the issue

Some mapping sessions started by asking participants what the central issue of the map meant to them. This was useful for comparing participant views about the main topic (#8 and #9 maternal health in Indigenous communities and #20 satisfaction with HIV care) and in understanding local concepts of broad topics (#17 Indigenous wellbeing). In Nigeria (#21), group discussions defined elements of adolescent sexual and reproductive health before undertaking FCM, and facilitators shared the list of elements with participants in mapping sessions. In Nunavik (#13 Canada, Inuit women on HPV self-sampling), participating women received an initial presentation to create a common understanding to discuss HPV self-sampling, an unfamiliar technique in Inuit communities.

Some cases created stakeholder maps from scratch, asking participants what they thought would cause the main outcome (#8 to 10, 14 to 19, 21, and 23 to 25). Other cases reviewed the literature first and presented the findings to participants (#5, 7 and 20). In these cases, the facilitators reminded participants that literature maps might not represent their experiences. They encouraged them to add, remove and reorganise concepts, relationships, and weights until they felt the map represented their knowledge.