Textual Analysis: Definition, Types & 10 Examples

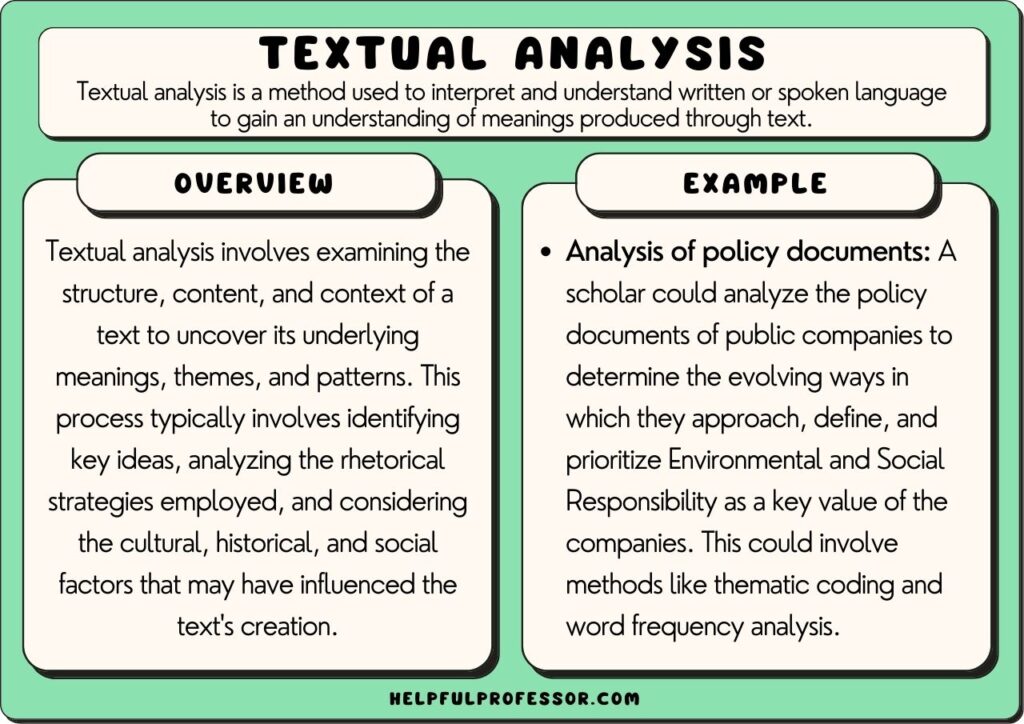

Textual analysis is a research methodology that involves exploring written text as empirical data. Scholars explore both the content and structure of texts, and attempt to discern key themes and statistics emergent from them.

This method of research is used in various academic disciplines, including cultural studies, literature, biblical studies, anthropology, sociology, and others (Dearing, 2022; McKee, 2003).

This method of analysis involves breaking down a text into its constituent parts for close reading and making inferences about its context, underlying themes, and the intentions of its author.

Textual Analysis Definition

Alan McKee is one of the preeminent scholars of textual analysis. He provides a clear and approachable definition in his book Textual Analysis: A Beginner’s Guide (2003) where he writes:

“When we perform textual analysis on a text we make an educated guess at some of the most likely interpretations that might be made of the text […] in order to try and obtain a sense of the ways in which, in particular cultures at particular times, people make sense of the world around them.”

A key insight worth extracting from this definition is that textual analysis can reveal what cultural groups value, how they create meaning, and how they interpret reality.

This is invaluable in situations where scholars are seeking to more deeply understand cultural groups and civilizations – both past and present (Metoyer et al., 2018).

As such, it may be beneficial for a range of different types of studies, such as:

- Studies of Historical Texts: A study of how certain concepts are framed, described, and approached in historical texts, such as the Bible.

- Studies of Industry Reports: A study of how industry reports frame and discuss concepts such as environmental and social responsibility.

- Studies of Literature: A study of how a particular text or group of texts within a genre define and frame concepts. For example, you could explore how great American literature mythologizes the concept of ‘The American Dream’.

- Studies of Speeches: A study of how certain politicians position national identities in their appeals for votes.

- Studies of Newspapers: A study of the biases within newspapers toward or against certain groups of people.

- Etc. (For more, see: Dearing, 2022)

McKee also uses the term ‘textual analysis’ to refer to texts that are not only written but multimodal. For a deeper dive into the analysis of multimodal texts, I recommend my article on content analysis, where I explore the study of texts like television advertisements and movies in detail.

Features of a Textual Analysis

When conducting a textual analysis, you’ll need to consider a range of factors within the text that are worthy of close examination to infer meaning. Features worth considering include:

- Content: What is being said or conveyed in the text, including explicit and implicit meanings, themes, or ideas.

- Context: When and where the text was created, the culture and society it reflects, and the circumstances surrounding its creation and distribution.

- Audience: Who the text is intended for, how it’s received, and the effect it has on its audience.

- Authorship: Who created the text, their background and perspectives, and how these might influence the text.

- Form and structure: The layout, sequence, and organization of the text and how these elements contribute to its meanings (Metoyer et al., 2018).

Textual Analysis Coding Methods

The above features may be examined through quantitative or qualitative research designs, or from a mixed-methods angle.

1. Quantitative Approaches

You could analyze several of the above features, namely, content, form, and structure, from a quantitative perspective using computational linguistics and natural language processing (NLP) analysis.

With this approach, you would use algorithms to extract information such as how frequently particular words and phrases are used. Techniques include sentiment analysis, topic modeling, named entity recognition, and more.
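To make the idea of frequency-based analysis concrete, here is a minimal sketch of word and phrase (bigram) counting using only Python's standard library. The sample passage, the short stop-word list, and the function names are invented for illustration; a real study would likely use a fuller stop-word list and a dedicated NLP toolkit.

```python
import re
from collections import Counter

# Hypothetical, deliberately short stop-word list (an assumption for this sketch).
STOP_WORDS = {"the", "a", "an", "of", "and", "to", "in", "is", "that", "for", "we", "our"}


def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def word_frequencies(text, top_n=5):
    """Count content words (stop words removed) and return the most common."""
    tokens = [t for t in tokenize(text) if t not in STOP_WORDS]
    return Counter(tokens).most_common(top_n)


def bigram_frequencies(text, top_n=3):
    """Count adjacent word pairs, a simple proxy for phrase usage."""
    tokens = tokenize(text)
    pairs = zip(tokens, tokens[1:])
    return Counter(" ".join(pair) for pair in pairs).most_common(top_n)


# Invented sample passage, not a quotation from any real text.
sample = (
    "We value freedom. Freedom is the promise of our nation, "
    "and the promise of freedom belongs to every citizen."
)

print(word_frequencies(sample))
print(bigram_frequencies(sample))
```

Counts like these only show what is frequent, not what it means, which is why they are usually paired with a closer reading of the passages in which the terms occur.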

2. Qualitative Approaches

In many ways, textual analysis lends itself best to qualitative analysis. When identifying words and phrases, you’re also going to want to look at the surrounding context and possibly cultural interpretations of what is going on (Mayring, 2015).

Generally, humans are far more perceptive at teasing out these contextual factors than machines (although AI is giving us a run for our money).

One qualitative approach to textual analysis that I regularly use is inductive coding, a step-by-step methodology that can help you extract themes from texts. If you’re interested in using this method, see my guide on inductive coding.


Textual Analysis Examples

Title: “Discourses on Gender, Patriarchy and Resolution 1325: A Textual Analysis of UN Documents”

Author: Nadine Puechguirbal

Year: 2010

APA Citation: Puechguirbal, N. (2010). Discourses on Gender, Patriarchy and Resolution 1325: A Textual Analysis of UN Documents. International Peacekeeping, 17(2), 172-187. doi: 10.1080/13533311003625068

Summary: The article discusses the language used in UN documents related to peace operations and analyzes how it perpetuates stereotypical portrayals of women as vulnerable individuals. The author argues that this language removes women’s agency and keeps them in a subordinate position as victims, instead of recognizing them as active participants and agents of change in post-conflict environments. Despite the adoption of UN Security Council Resolution 1325, which aims to address the role of women in peace and security, the author suggests that the UN’s male-dominated power structure remains unchallenged, and gender mainstreaming is often presented as a non-political activity.

Title: “Racism and the Media: A Textual Analysis”

Author: Kassia E. Kulaszewicz

Year: 2015

APA Citation: Kulaszewicz, K. E. (2015). Racism and the Media: A Textual Analysis. Dissertation. Retrieved from: https://sophia.stkate.edu/msw_papers/477

Summary: This study delves into the significant role media plays in fostering explicit racial bias. Using Bandura’s Learning Theory, it investigates how media content influences our beliefs through ‘observational learning’. Conducting a textual analysis, it finds differences in the representation of Black and White people, stereotyping of Black people, and ostensible micro-aggressions toward Black people. The research highlights how media often criminalizes Black men, portraying them as violent, while justifying or supporting the actions of White officers, regardless of their potential criminality. The study concludes that news media likely continues to reinforce racism, whether consciously or unconsciously.

Title: “On the metaphorical nature of intellectual capital: a textual analysis”

Author: Daniel Andriessen

Year: 2006

APA Citation: Andriessen, D. (2006). On the metaphorical nature of intellectual capital: a textual analysis. Journal of Intellectual Capital, 7(1), 93-110.

Summary: This article delves into the metaphorical underpinnings of intellectual capital (IC) and knowledge management, examining how knowledge is conceptualized through metaphors. The author employed a textual analysis methodology, scrutinizing key texts in the field to identify prevalent metaphors. The analysis found that over 95% of statements about knowledge are metaphor-based, with “knowledge as a resource” and “knowledge as capital” being the most dominant. This study demonstrates how textual analysis can reveal the prevailing ways of understanding and speaking about a topic.

Title: “Race in Rhetoric: A Textual Analysis of Barack Obama’s Campaign Discourse Regarding His Race”

Author: Andrea Dawn Andrews

Year: 2011

APA Citation: Andrews, A. D. (2011). Race in Rhetoric: A Textual Analysis of Barack Obama’s Campaign Discourse Regarding His Race. Undergraduate Honors Thesis Collection, 120. https://digitalcommons.butler.edu/ugtheses/120

Summary: This undergraduate honors thesis is a textual analysis of Barack Obama’s speeches that explores how Obama frames the concept of race. The student’s capstone project found that Obama tended to frame racial inequality as something that could be overcome, and that this was a positive and uplifting project. The student breaks down the moments when Obama invokes the concept of race in his speeches and examines the surrounding content to identify the connotations associated with race and race relations embedded in the text. Here, we see a decidedly qualitative approach to textual analysis, which can deliver contextualized and in-depth insights.

Sub-Types of Textual Analysis

While above I have focused on a generalized textual analysis approach, a range of sub-types and offshoots have emerged that focus on specific concepts, often within their own theoretical paradigms. Each is outlined below:

- Content Analysis: Content analysis is similar to textual analysis, and I would consider it a type of textual analysis that takes a broader understanding of the term ‘text’. In this type, a text is any type of ‘content’, and could be multimodal in nature, such as television advertisements, movies, posters, and so forth. Content analysis can be both qualitative and quantitative, depending on whether it focuses more on the meaning of the content or the frequency of certain words or concepts (Chung & Pennebaker, 2018).

- Discourse Analysis: Emergent specifically from critical and postmodern/poststructural theories, discourse analysis focuses closely on the use of language within a social context, with the goal of revealing how repeated framing of terms and concepts has the effect of shaping how cultures understand social categories. It considers how texts interact with and shape social norms, power dynamics, ideologies, etc. For example, it might examine how gender is socially constructed as a distinct social category through Disney films. It may also be called ‘critical discourse analysis’.

- Narrative Analysis: This approach is used for analyzing stories and narratives within text. It looks at elements like plot, characters, themes, and the sequence of events to understand how narratives construct meaning.

- Frame Analysis: This approach looks at how events, ideas, and themes are presented or “framed” within a text. It explores how these frames can shape our understanding of the information being presented. While similar to discourse analysis, a frame analysis tends to be less associated with the loaded concept of ‘discourse’ that exists specifically within postmodern paradigms (Smith, 2017).

- Semiotic Analysis: This approach studies signs and symbols, both visual and textual, and could be a good complement to a content analysis, as it provides the language and understandings necessary to describe how signs make meaning in cultural contexts, of the kind we might find in the fields of semantics and pragmatics. It’s based on the theory of semiotics, which is concerned with how meaning is created and communicated through signs and symbols.

- Computational Textual Analysis: In the context of data science or artificial intelligence, this type of analysis involves using algorithms to process large amounts of text. Techniques can include topic modeling, sentiment analysis, word frequency analysis, and others. While being extremely useful for a quantitative analysis of a large dataset of text, it falls short in its ability to provide deep contextualized understandings of words-in-context.

Each of these methods has its strengths and weaknesses, and the choice of method depends on the research question, the type of text being analyzed, and the broader context of the research.
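One way to partly bridge the gap between computational counting and words-in-context is a keyword-in-context (KWIC) listing, which pulls out every occurrence of a term together with its neighbouring words so that a human can read and interpret each instance. The sketch below is a minimal version using only the standard library; the passage is invented for illustration, and the function name and window size are assumptions rather than a standard API.

```python
import re


def keyword_in_context(text, keyword, window=4):
    """Return (left context, keyword, right context) for each occurrence."""
    tokens = re.findall(r"\w+", text.lower())
    target = keyword.lower()
    hits = []
    for i, token in enumerate(tokens):
        if token == target:
            left = " ".join(tokens[max(0, i - window):i])
            right = " ".join(tokens[i + 1:i + 1 + window])
            hits.append((left, token, right))
    return hits


# Invented passage for illustration; not a quotation from any real speech.
passage = (
    "Race is an issue this nation cannot afford to ignore. "
    "We can move beyond old wounds of race if we work together."
)

for left, kw, right in keyword_in_context(passage, "race"):
    # Right-align the left context so the keyword lines up in a column.
    print(f"{left:>25} [{kw}] {right}")
```

The listing does not interpret anything by itself; it simply gathers the passages a researcher would then read closely and code.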


Strengths and Weaknesses of Textual Analysis

When writing your methodology for your textual analysis, make sure to define not only what textual analysis is, but (if applicable) the type of textual analysis, the features of the text you’re analyzing, and the ways you will code the data. It’s also worth actively reflecting on the potential weaknesses of a textual analysis approach and explaining why, despite those weaknesses, you believe this to be the most appropriate methodology for your study.

References

Chung, C. K., & Pennebaker, J. W. (2018). Textual analysis. In Measurement in social psychology (pp. 153-173). Routledge.

Dearing, V. A. (2022). Manual of textual analysis. University of California Press.

Mayring, P. (2015). Qualitative content analysis: Theoretical background and procedures. Approaches to qualitative research in mathematics education: Examples of methodology and methods, 365-380. https://doi.org/10.1007/978-94-017-9181-6_13

McKee, A. (2003). Textual analysis: A beginner’s guide. Textual analysis, 1-160.

Metoyer, R., Zhi, Q., Janczuk, B., & Scheirer, W. (2018, March). Coupling story to visualization: Using textual analysis as a bridge between data and interpretation. In 23rd International Conference on Intelligent User Interfaces (pp. 503-507). https://doi.org/10.1145/3172944.3173007

Smith, J. A. (2017). Textual analysis. The international encyclopedia of communication research methods, 1-7.

Chris Drew (PhD)

Dr. Chris Drew is the founder of the Helpful Professor. He holds a PhD in education and has published over 20 articles in scholarly journals. He is the former editor of the Journal of Learning Development in Higher Education.


What Is a Research Methodology? | Steps & Tips

Published on 25 February 2019 by Shona McCombes. Revised on 10 October 2022.

Your research methodology discusses and explains the data collection and analysis methods you used in your research. A key part of your thesis, dissertation, or research paper, the methodology chapter explains what you did and how you did it, allowing readers to evaluate the reliability and validity of your research.

It should include:

- The type of research you conducted

- How you collected and analysed your data

- Any tools or materials you used in the research

- Why you chose these methods

A few further pointers:

- Your methodology section should generally be written in the past tense.

- Academic style guides in your field may provide detailed guidelines on what to include for different types of studies.

- Your citation style might provide guidelines for your methodology section (e.g., an APA Style methods section).


Table of contents

- How to write a research methodology

- Why is a methods section important?

- Step 1: Explain your methodological approach

- Step 2: Describe your data collection methods

- Step 3: Describe your analysis method

- Step 4: Evaluate and justify the methodological choices you made

- Tips for writing a strong methodology chapter

- Frequently asked questions about methodology


Why is a methods section important?

Your methods section is your opportunity to share how you conducted your research and why you chose the methods you chose. It’s also the place to show that your research was rigorously conducted and can be replicated.

It gives your research legitimacy and situates it within your field, and also gives your readers a place to refer to if they have any questions or critiques in other sections.

Step 1: Explain your methodological approach

You can start by introducing your overall approach to your research. You have two options here.

Option 1: Start with your “what”

What research problem or question did you investigate?

- Aim to describe the characteristics of something?

- Explore an under-researched topic?

- Establish a causal relationship?

And what type of data did you need to achieve this aim?

- Quantitative data, qualitative data, or a mix of both?

- Primary data collected yourself, or secondary data collected by someone else?

- Experimental data gathered by controlling and manipulating variables, or descriptive data gathered via observations?

Option 2: Start with your “why”

Depending on your discipline, you can also start with a discussion of the rationale and assumptions underpinning your methodology. In other words, why did you choose these methods for your study?

- Why is this the best way to answer your research question?

- Is this a standard methodology in your field, or does it require justification?

- Were there any ethical considerations involved in your choices?

- What are the criteria for validity and reliability in this type of research?

Step 2: Describe your data collection methods

Once you have introduced your reader to your methodological approach, you should share full details about your data collection methods.

Quantitative methods

For your results to be considered generalisable, you should describe your quantitative research methods in enough detail for another researcher to replicate your study.

Here, explain how you operationalised your concepts and measured your variables. Discuss your sampling method or inclusion/exclusion criteria, as well as any tools, procedures, and materials you used to gather your data.

Surveys: Describe where, when, and how the survey was conducted.

- How did you design the questionnaire?

- What form did your questions take (e.g., multiple choice, Likert scale)?

- Were your surveys conducted in-person or virtually?

- What sampling method did you use to select participants?

- What was your sample size and response rate?

Experiments: Share full details of the tools, techniques, and procedures you used to conduct your experiment.

- How did you design the experiment?

- How did you recruit participants?

- How did you manipulate and measure the variables?

- What tools did you use?

Existing data: Explain how you gathered and selected the material (such as datasets or archival data) that you used in your analysis.

- Where did you source the material?

- How was the data originally produced?

- What criteria did you use to select material (e.g., date range)?

Example: Quantitative methods

The survey consisted of 5 multiple-choice questions and 10 questions measured on a 7-point Likert scale.

The goal was to collect survey responses from 350 customers visiting the fitness apparel company’s brick-and-mortar location in Boston on 4–8 July 2022, between 11:00 and 15:00.

Here, a customer was defined as a person who had purchased a product from the company on the day they took the survey. Participants were given 5 minutes to fill in the survey anonymously. In total, 408 customers responded, but not all surveys were fully completed. Due to this, 371 survey results were included in the analysis.
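The figures in this survey description can be checked with a few lines of arithmetic. The sketch below uses only the numbers stated above (a target of 350, 408 responses received, 371 included in the analysis); the variable names are illustrative. Note that a true response rate would also require the number of customers who were invited to take part, which is not given here, so only the share of received responses that was retained is computed.

```python
# Figures taken from the survey description above.
target_responses = 350
responses_received = 408
responses_analysed = 371

# Surveys dropped because they were not fully completed.
excluded = responses_received - responses_analysed

# Share of received responses that made it into the analysis.
retained_share = responses_analysed / responses_received

# Whether the usable responses still meet the original collection goal.
target_met = responses_analysed >= target_responses

print(f"Excluded (incomplete) surveys: {excluded}")
print(f"Share of responses retained: {retained_share:.1%}")
print(f"Target of {target_responses} met: {target_met}")
```

Reporting these numbers explicitly in a methods section lets readers judge how much data was lost to incomplete responses.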

Qualitative methods

In qualitative research, methods are often more flexible and subjective. For this reason, it’s crucial to robustly explain the methodology choices you made.

Be sure to discuss the criteria you used to select your data, the context in which your research was conducted, and the role you played in collecting your data (e.g., were you an active participant, or a passive observer?).

Interviews or focus groups: Describe where, when, and how the interviews were conducted.

- How did you find and select participants?

- How many participants took part?

- What form did the interviews take (structured, semi-structured, or unstructured)?

- How long were the interviews?

- How were they recorded?

Participant observation: Describe where, when, and how you conducted the observation or ethnography.

- What group or community did you observe? How long did you spend there?

- How did you gain access to this group? What role did you play in the community?

- How long did you spend conducting the research? Where was it located?

- How did you record your data (e.g., audiovisual recordings, note-taking)?

Existing data: Explain how you selected case study materials for your analysis.

- What type of materials did you analyse?

- How did you select them?

Example: Qualitative methods

In order to gain better insight into possibilities for future improvement of the fitness shop’s product range, semi-structured interviews were conducted with 8 returning customers.

Here, a returning customer was defined as someone who usually bought products at least twice a week from the store.

Surveys were used to select participants. Interviews were conducted in a small office next to the cash register and lasted approximately 20 minutes each. Answers were recorded by note-taking, and seven interviews were also filmed with consent. One interviewee preferred not to be filmed.

Mixed methods

Mixed methods research combines quantitative and qualitative approaches. If a standalone quantitative or qualitative study is insufficient to answer your research question, mixed methods may be a good fit for you.

Mixed methods are less common than standalone analyses, largely because they require a great deal of effort to pull off successfully. If you choose to pursue mixed methods, it’s especially important to robustly justify your methods here.


Step 3: Describe your analysis method

Next, you should indicate how you processed and analysed your data. Avoid going into too much detail: you should not start introducing or discussing any of your results at this stage.

In quantitative research, your analysis will be based on numbers. In your methods section, you can include:

- How you prepared the data before analysing it (e.g., checking for missing data, removing outliers, transforming variables)

- Which software you used (e.g., SPSS, Stata or R)

- Which statistical tests you used (e.g., two-tailed t test, simple linear regression)
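As an illustration of the kind of preparation and analysis steps a methods section might report, the following sketch drops missing values, removes outliers, and fits a simple linear regression using only Python's standard library. The dataset is hypothetical, and the 1.5 × IQR outlier rule is one common convention chosen here as an assumption; your own study may call for a different rule or for dedicated software such as SPSS, Stata or R.

```python
import statistics

# Hypothetical data: weekly store visits (x) and monthly spend (y).
# None marks a missing answer; the last row has an implausibly large spend.
raw = [(1, 20.0), (2, 35.0), (3, None), (3, 50.0), (4, 65.0), (5, 80.0), (2, 900.0)]

# Step 1: drop rows with missing values.
complete = [(x, y) for x, y in raw if x is not None and y is not None]

# Step 2: remove outliers in y using the 1.5 * IQR rule (an assumed convention).
spend = [y for _, y in complete]
q1, _median, q3 = statistics.quantiles(spend, n=4)
iqr = q3 - q1
low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
cleaned = [(x, y) for x, y in complete if low <= y <= high]

# Step 3: simple linear regression by ordinary least squares.
xs = [x for x, _ in cleaned]
ys = [y for _, y in cleaned]
mean_x, mean_y = statistics.fmean(xs), statistics.fmean(ys)
slope = sum((x - mean_x) * (y - mean_y) for x, y in cleaned) / sum(
    (x - mean_x) ** 2 for x in xs
)
intercept = mean_y - slope * mean_x

print(f"Rows kept: {len(cleaned)} of {len(raw)}")
print(f"Fitted line: spend = {intercept:.2f} + {slope:.2f} * visits")
```

Whatever tools you use, the point for the methods section is to state each of these decisions (what counted as missing, which outlier rule, which model) so that another researcher could repeat them.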

In qualitative research, your analysis will be based on language, images, and observations (often involving some form of textual analysis).

Specific methods might include:

- Content analysis: Categorising and discussing the meaning of words, phrases and sentences

- Thematic analysis: Coding and closely examining the data to identify broad themes and patterns

- Discourse analysis: Studying communication and meaning in relation to their social context
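To give a sense of what coding data and grouping codes into themes can look like in practice, here is a minimal sketch that tallies researcher-assigned codes under broader themes. The codebook, excerpts, and participant IDs are all invented for illustration; in real thematic analysis the codes come from the researcher's close reading, and the tally only summarises that interpretive work.

```python
from collections import Counter, defaultdict

# Hypothetical codebook mapping individual codes to broader themes.
CODE_TO_THEME = {
    "price": "Value for money",
    "discount": "Value for money",
    "fit": "Product quality",
    "durability": "Product quality",
    "staff": "Service experience",
}

# Hypothetical excerpts: (participant, excerpt, codes assigned by the researcher).
coded_excerpts = [
    ("P1", "The leggings lasted two years.", ["durability"]),
    ("P2", "Too expensive unless there is a sale.", ["price", "discount"]),
    ("P3", "Staff helped me find the right size.", ["staff", "fit"]),
    ("P1", "I only buy during promotions.", ["discount"]),
]

theme_counts = Counter()
theme_participants = defaultdict(set)

for participant, _excerpt, codes in coded_excerpts:
    for code in codes:
        theme = CODE_TO_THEME[code]
        theme_counts[theme] += 1                    # how often the theme was coded
        theme_participants[theme].add(participant)  # who raised it

for theme, count in theme_counts.most_common():
    people = len(theme_participants[theme])
    print(f"{theme}: {count} coded segments from {people} participants")
```

Keeping track of both the number of coded segments and the number of distinct participants helps distinguish a theme that is widely shared from one that a single interviewee raised repeatedly.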

Mixed methods combine the above two research methods, integrating both qualitative and quantitative approaches into one coherent analytical process.

Step 4: Evaluate and justify the methodological choices you made

Above all, your methodology section should clearly make the case for why you chose the methods you did. This is especially true if you did not take the most standard approach to your topic. In this case, discuss why other methods were not suitable for your objectives, and show how this approach contributes new knowledge or understanding.

In any case, it should be overwhelmingly clear to your reader that you set yourself up for success in terms of your methodology’s design. Show how your methods should lead to results that are valid and reliable, while leaving the analysis of the meaning, importance, and relevance of your results for your discussion section.

- Quantitative: Lab-based experiments cannot always accurately simulate real-life situations and behaviours, but they are effective for testing causal relationships between variables.

- Qualitative: Unstructured interviews usually produce results that cannot be generalised beyond the sample group, but they provide a more in-depth understanding of participants’ perceptions, motivations, and emotions.

- Mixed methods: Despite issues systematically comparing differing types of data, a solely quantitative study would not sufficiently incorporate the lived experience of each participant, while a solely qualitative study would be insufficiently generalisable.

Remember that your aim is not just to describe your methods, but to show how and why you applied them. Again, it’s critical to demonstrate that your research was rigorously conducted and can be replicated.

Tips for writing a strong methodology chapter

1. Focus on your objectives and research questions

The methodology section should clearly show why your methods suit your objectives and convince the reader that you chose the best possible approach to answering your problem statement and research questions.

2. Cite relevant sources

Your methodology can be strengthened by referencing existing research in your field. This can help you to:

- Show that you followed established practice for your type of research

- Discuss how you decided on your approach by evaluating existing research

- Present a novel methodological approach to address a gap in the literature

3. Write for your audience

Consider how much information you need to give, and avoid getting too lengthy. If you are using methods that are standard for your discipline, you probably don’t need to give a lot of background or justification.

Regardless, your methodology should be a clear, well-structured text that makes an argument for your approach, not just a list of technical details and procedures.

Frequently asked questions about methodology

Methodology refers to the overarching strategy and rationale of your research. Developing your methodology involves studying the research methods used in your field and the theories or principles that underpin them, in order to choose the approach that best matches your objectives.

Methods are the specific tools and procedures you use to collect and analyse data (e.g. interviews, experiments, surveys, statistical tests).

In a dissertation or scientific paper, the methodology chapter or methods section comes after the introduction and before the results, discussion and conclusion.

Depending on the length and type of document, you might also include a literature review or theoretical framework before the methodology.

Quantitative research deals with numbers and statistics, while qualitative research deals with words and meanings.

Quantitative methods allow you to test a hypothesis by systematically collecting and analysing data, while qualitative methods allow you to explore ideas and experiences in depth.

A sample is a subset of individuals from a larger population. Sampling means selecting the group that you will actually collect data from in your research.

For example, if you are researching the opinions of students in your university, you could survey a sample of 100 students.

Statistical sampling allows you to test a hypothesis about the characteristics of a population. There are various sampling methods you can use to ensure that your sample is representative of the population as a whole.
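The student example above can be sketched as a simple random sample, one of the most basic sampling methods. The population size of 5,000, the ID format, and the fixed seed are assumptions made for illustration; only the sample size of 100 comes from the example.

```python
import random

# Hypothetical sampling frame: 5,000 enrolled students identified by ID.
population = [f"student_{i:04d}" for i in range(1, 5001)]

# A seeded generator makes the draw reproducible, which helps when
# documenting the procedure in a methods section.
rng = random.Random(42)

# Simple random sampling without replacement: every student has an
# equal chance of selection and nobody is picked twice.
sample = rng.sample(population, k=100)

print(f"Population size: {len(population)}")
print(f"Sample size: {len(sample)}")
print("First five sampled IDs:", sample[:5])
```

Other designs, such as stratified or cluster sampling, follow the same logic but draw within subgroups; whichever you use, state the sampling frame and procedure explicitly.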


McCombes, S. (2022, October 10). What Is a Research Methodology? | Steps & Tips. Scribbr. Retrieved 14 May 2024, from https://www.scribbr.co.uk/thesis-dissertation/methodology/


Textual Analysis and Communication

by Elfriede Fürsich

Last reviewed: 25 September 2018. Last modified: 25 September 2018. DOI: 10.1093/obo/9780199756841-0216

Introduction

Textual analysis is a qualitative method used to examine content in media and popular culture, such as newspaper articles, television shows, websites, games, videos, and advertising. The method is linked closely to cultural studies. Based on semiotic and interpretive approaches, textual analysis is a type of qualitative analysis that focuses on the underlying ideological and cultural assumptions of a text. In contrast to systematic quantitative content analysis, textual analysis reaches beyond manifest content to understand the prevailing ideologies of a particular historical and cultural moment that make a specific coverage possible. Critical-cultural scholars understand media content and other cultural artifacts as indicators of how realities are constructed and which ideas are accepted as normal. Following the French cultural philosopher Roland Barthes, content is understood as “text,” i.e., not as a fixed entity but as a complex set of discursive strategies that is generated in a special social, political, historic, and cultural context (Barthes 2013, cited under Theoretical Background).

Any text can be interpreted in multiple ways; the possibility of multiple meanings within a text is called “polysemy.” The goal of textual analysis is not to find one “true” interpretation (in contrast to traditional hermeneutic approaches to text exegesis) but to explain the variety of possible meanings inscribed in the text. Researchers who use textual analysis do not follow a single established approach but employ a variety of analysis types, such as ideological, genre, narrative, rhetorical, gender, or discourse analysis. Therefore, the term “textual analysis” could also be understood as a collective term for a variety of qualitative, interpretive, and critical content analysis techniques of popular culture artifacts.

This method, just as cultural studies itself, draws on an eclectic mix of disciplines, such as anthropology, literary studies, rhetorical criticism, and cultural sociology, along with intellectual traditions, such as semiotics, (post)structuralism, and deconstruction. What distinguishes textual analysis from other forms of qualitative content analysis in the sociological tradition is its critical-cultural focus on power and ideology. Moreover, textual analysts normally do not use linguistic aspects as central evidence (such as in critical discourse analysis), nor do they use a pre-established code book, such as some traditional qualitative content methods. Textual analysis follows an inductive, interpretive approach by finding patterns in the material that lead to “readings” grounded in the back and forth between observation and contextual analysis.

Of central interest is the deconstruction of representations (especially but not always of Others with regard to race, class, gender, sexuality, and ability) because these highlight the relationship of media and content to overall ideologies. The method is based in a constructionist framework. For textual analysts, media content does not simply reflect reality; instead, media, popular culture, and society are mutually constituted. Media and popular culture are arenas in which representations and ideas about reality are produced, maintained, and also challenged.

Central to textual analysis is the idea that content as “text” is a coming together of multiple meanings in a specific moment. Barthes 2013 and Barthes 1977 discuss this idea in detail and provide groundbreaking analysis of cultural phenomena in postwar France. Fiske 1987, Fiske 2010, and Fiske 2011 provide the standard on how popular culture can be “read,” i.e., interpreted for its ideological assumptions. Because the central aim of textual analysis is to understand how representations are produced in media content, Stuart Hall’s chapter 1, “The Work of Representation,” in the renowned textbook Hall, et al. 2013 delivers a compact but comprehensive explanation of representation as a concept. To understand the shift to post-structuralism and concepts such as discourse, hegemony, and the relationship between language and power that are central to textual work, one can turn to the works of original theorists such as Foucault 1972 as well as Best and Kellner 1991 for contextualized clarification. Moreover, Deleuze and Guattari 2004 is a foundational post-structural text that radically rethinks the relationship between meaning and practice.

Barthes, Roland. 2013. Mythologies. New York: Hill and Wang.

English translation by Richard Howard and Annette Lavers of the original book published in 1957. Part 1: “Mythologies” consists of a series of short essayistic analyses of cultural phenomena, such as the Blue Guide travel books or advertising for detergents. Part 2: “Myth Today” lays out Barthes’s semiotic-structural approach. Although Barthes later acknowledged the historic contingencies of his interpretations, they remain important as they provided relevant perspective and methodological vocabulary for textual analysis for years to come.

Barthes, Roland. 1977. Image, music, text. Essays selected and translated by Stephen Heath. New York: Hill and Wang.

Classic collection of Barthes’s writing on semiotics and structuralism. For methodological considerations, the chapters “Introduction to the Structural Analysis of Narrative” and “The Death of the Author” are especially relevant.

Best, Steven, and Douglas Kellner. 1991. Postmodern theory: Critical interventions. New York: Guilford.

DOI: 10.1007/978-1-349-21718-2

Accessible introduction to leading postmodern and post-structuralist theorists. For textual analysis, chapter 2, “Foucault and the Critique of Modernity,” chapter 4, “Baudrillard en route to Postmodernity,” and chapter 5, “Lyotard and Postmodern Gaming,” provide especially relevant context for understanding post-structural and postmodern principles.

Deleuze, Gilles, and Félix Guattari. 2004. A thousand plateaus. Translated by Brian Massumi. London and New York: Continuum.

This book is the second part of Deleuze and Guattari’s groundbreaking philosophical project, “Capitalism and Schizophrenia.” Originally published in 1980, it explains central post-structural concepts such as rhizomes, multiplicity, and nomadic thought. Foundational for understanding the production of knowledge and meaning, these ideas have stood the test of time and resonate in networked and digitalized societies in the early 21st century.

Fiske, John. 1987. Television culture. New York: Routledge.

A classic book by Fiske. His “codes of television” (pp. 4–20) explain even for beginning researchers the important relationship among reality, representation, and ideology that is foundational for the textual analysis of any media content even beyond television.

Fiske, John. 2010. Understanding popular culture. 2d ed. London and New York: Routledge.

Important work by Fiske, originally published in 1989, that lays out the theoretical foundations for cultural analysis.

Fiske, John. 2011. Reading the popular. 2d ed. London and New York: Routledge.

Recently reissued companion book to Fiske 2010. Provides a variety of examples for cultural analysis ranging from Madonna and shopping malls to news and quiz shows.

Foucault, Michel. 1972. The discourse on language. In The archaeology of knowledge and the discourse on language. By Michel Foucault, 215–237. Translated by A. M. Sheridan Smith. New York: Pantheon.

Based on the author’s inaugural lecture at the Collège de France in 1970, this appendix provides a fairly succinct introduction to Foucault’s scholarly program and outlines his specific concepts of “discourse.” The author begins to connect discourses to structures of power and knowledge, an argument that becomes more central in his later writings.

Hall, Stuart, Jessica Evans, and Sean Nixon, eds. 2013. Representation: Cultural representations and signifying practices. 2d ed. London and Thousand Oaks, CA: SAGE.

One of the central goals of textual analysis is to understand and interpret media representations. Chapter 1 “The Work of Representation,” by Stuart Hall, is the most comprehensive introduction to this central post-structural cultural studies concept.


Textual Analysis – How to Engage in Textual Analysis

- © 2023 by Jennifer Janechek - IBM Quantum

As a reader, a developing writer, and an informed student and citizen, you need to be able to locate, understand, and critically analyze others’ purposes in communicating information. Being able to identify and articulate the meaning of other writers’ arguments and theses enables you to engage in intelligent, meaningful, and critical knowledge exchanges. Ultimately, regardless of the discipline you choose to participate in, textual analysis (the summary, contextualization, and interpretation of a writer’s effective or ineffective delivery of their perspective on a topic, statement of thesis, and development of an argument) will be an invaluable skill. Your ability to critically engage in knowledge exchanges through the analysis of others’ communication is integral to your success as a student and as a citizen.

Step 1: What Is The Thesis?

In order to learn how to better recognize a thesis in a written text, let’s consider the following argument:

So far, [Google+] does seem better than Facebook, though I’m still a rookie and don’t know how to do even some basic things.

It’s better in design terms, and also much better with its “circles” allowing you to target posts to various groups.

Example: following that high school reunion, the overwhelming majority of my Facebook friends list (which I’m barely rebuilding after my rejoin) are people from my own hometown. None of these people are going to care too much when my new book comes out from Edinburgh. Likewise, not too many of you would care to hear inside jokes about our old high school teachers, or whatever it is we banter about.

Another example: people I know only from exchanging a couple of professional emails with them ask to be Facebook friends. I’ve never met these people and have no idea what they’re really like, even if they seem nice enough on email. Do I really want to add them to my friends list on the same level as my closest friends, brothers, valued colleagues, etc.? Not yet. But then there’s the risk of offending people if you don’t add them. On Google+ you can just drop them in the “acquaintances” circle, and they’ll never know how they’re classified.

But they won’t be getting any highly treasured personal information there, which is exactly the restriction you probably want for someone you’ve never met before.

I also don’t like too many family members on my Facebook friends list, because frankly they don’t need to know everything I’m doing or chatting about with people. But on Google+ this problem will be easily manageable. (Harman)

The first sentence, “[Google+] does seem better than Facebook” (Harman), doesn’t communicate the writer’s position on the topic; it is merely an observation. A position, also called a “claim,” often includes the conjunction “because,” providing a reason why the writer’s observation is unique, meaningful, and critical. Therefore, if the writer’s sentence, “[Google+] does seem better than Facebook” (Harman), is simply an observation, then in order to identify the writer’s position, we must find the answer to “because, why?” One such answer can be found in the author’s rhetorical question/answer, “Do I really want to add them to my friends list on the same level as my closest friends, brothers, valued colleagues, etc.? Not yet” (Harman). The writer’s “because, why?” could be “because Google+ allows me to manage old, new, and potential friends and acquaintances using separate circles, so that I’m targeting posts to various, separate groups.” Therefore, the writer’s thesis (their position) could be something like, “Google+ is better than Facebook because its design enables me to manage my friends using separate circles, so that I’m targeting posts to various, separate groups instead of posting the same information for everyone I’ve added to my network.”

In addition to communicating a position on a particular topic, a writer’s thesis outlines what aspects of the topic they will address. Outlining intentions within a thesis is not only acceptable, but also one of a writer’s primary obligations, since the thesis relates their general argument. In a sense, you could think of the thesis as a responsibility to answer the question, “What will you/won’t you be claiming and why?”

To explain this further, let’s consider another example. If someone were to ask you what change you want to see in the world, you probably wouldn’t readily answer “world peace,” even though you (and many others) may want that. Why wouldn’t you answer that way? Because such an answer is far too broad and ambiguous to be logically argued. Although world peace may be your goal, for logic’s sake, you would be better off articulating your answer as “a peaceful solution to the violence currently occurring on the border of southern Texas and Mexico,” or something similarly specific. The distinction between the two answers should be clear: the first answer, “world peace,” is broad, ambiguous, and not a fully developed claim (there wouldn’t be many, if any, people who would disagree with this statement); the second answer is narrower, more specific, and a fully developed claim. It confines the argument to a particular example of violence, but still allows you to address what you want, “world peace,” on a smaller, more manageable, and more logical scale.

Since a writer’s thesis functions as an outline of what they will address in an argument, it is often organized in the same manner as the argument itself. Let’s return to the argument about Google+ for an example. If the author stated their position as suggested (“Google+ is better than Facebook because its design enables me to manage my friends using separate circles, so that I’m targeting posts to various, separate groups instead of posting the same information for everyone I’ve added to my network”), we would expect them to first address the similarities and differences between the designs of Google+ and Facebook, and then the reasons why they believe Google+ is a more effective way of sharing information. The organization of their thesis should reflect the overall order of their argument. Such a well-organized thesis builds the foundation for a cohesive and persuasive argument.

Textual Analysis: How is the Argument Structured?

“Textual analysis” is the term writers use to describe a reader’s written explanation of a text. The reader’s textual analysis ought to include a summary of the author’s topic, an analysis or explanation of how the author’s perspective relates to the ongoing conversation about that particular topic, an interpretation of the effectiveness of the author’s argument and thesis, and references to specific components of the text that support the reader’s analysis or explanation.

An effective argument generally consists of the following components:

- A thesis. Communicates the writer’s position on a particular topic.

- Acknowledgement of opposition. Explains existing objections to the writer’s position.

- Clearly defined premises outlining reasoning. Details the logic of the writer’s position.

- Evidence of validating premises. Proves the writer’s thorough research of the topic.

- A conclusion convincing the audience of the argument’s soundness/persuasiveness. Argues the writer’s position is relevant, logical, and thoroughly researched and communicated.

An effective argument also is specifically concerned with the components involved in researching, framing, and communicating evidence:

- The credibility and breadth of the writer’s research

- The techniques (like rhetorical appeals) used to communicate the evidence (see “The Rhetorical Appeals”)

- The relevance of the evidence as it reflects the concerns and interests of the author’s targeted audience

To identify and analyze a writer’s argument, you must critically read and understand the text in question. Focus and take notes as you read, highlighting what you believe are key words or important phrases. Once you are confident in your general understanding of the text, you’ll need to explain the author’s argument in a condensed summary. One way of accomplishing this is to ask yourself the following questions:

- What topic has the author written about? (Explain in as few words as possible.)

- What is the author’s point of view concerning their topic?

- What has the author written about the opposing point of view? (Where does it appear as though the author is “giving credit” to the opposition?)

- Does the author offer proof (either in reference to another published source or from personal experience) supporting their stance on the topic?

- As a reader, would you say that the argument is persuasive? Can you think of ways to strengthen the argument? Using which evidence or techniques?

Your articulation of the author’s argument will most likely derive from your answers to these questions. Let’s reconsider the argument about Google+ and answer the reflection questions listed above:

The author’s topic is two social networks—Google+ and Facebook.

The author is “for” the new social network Google+.

The author makes a loose allusion to the opposing point of view in the explanation, “I’m still a rookie and don’t know how to do even some basic things” (Harman). (The author alludes to his inexperience and, therefore, the potential for the opposing argument to have more merit.)

Yes, the author offers proof from personal experience, particularly through their first example: “following that high school reunion, the overwhelming majority of my Facebook friends list (which I’m barely rebuilding after my rejoin) are people from my own hometown” (Harman). In their second example, they cite that “[o]n Google+ you can just drop [individuals] in the ‘acquaintances’ circle, and they’ll never know how they’re classified” (Harman) in order to offer even more credible proof, based on the way Google+ operates instead of personal experience.

Yes, I would say that this argument is persuasive, although if I wanted to make it even stronger, I would include more detailed information about the opposing point of view. A balanced argument—one that fairly and thoroughly articulates both sides—is often more respected and better received because it proves to the audience that the writer has thoroughly researched the topic prior to making a judgment in favor of one perspective or another.

Works Cited

Harman, Graham. Object-Oriented Philosophy. WordPress, n.d. Web. 15 May 2012.


The Practical Guide to Textual Analysis


Textual analysis is the process of gathering and examining qualitative data to understand what it’s about.

But making sense of qualitative information is a major challenge. Whether analyzing data in business or performing academic research, manually reading, analyzing, and tagging text is no longer effective – it’s time-consuming, results are often inaccurate, and the process is far from scalable.

Fortunately, developments in the sub-fields of Artificial Intelligence (AI) like machine learning and natural language processing (NLP) are creating unprecedented opportunities to process and analyze large collections of text data.

Thanks to algorithms trained with machine learning, it is possible to perform a myriad of tasks that involve analyzing text, like topic classification (automatically tagging texts by topic), feature extraction (identifying specific characteristics in a text), and sentiment analysis (recognizing the emotions that underlie a given text).

Below, we’ll dive into textual analysis with machine learning, what it is and how it works, and reveal its most important applications in business and academic research:

Getting started with textual analysis

- What is textual analysis?

- Difference between textual analysis and content analysis?

- What is computer-assisted textual analysis?

- Methods and techniques

- Why is it important?

How does textual analysis work?

- Text classification

- Text extraction

Use cases and applications

- Customer service

- Customer feedback

- Academic research

Let’s start with the basics!

Getting Started With Textual Analysis

What Is Textual Analysis?

While similar to text analysis, textual analysis is mainly used in academic research to analyze content related to media and communication studies, popular culture, sociology, and philosophy.

In this case, the purpose of textual analysis is to understand the cultural and ideological aspects that underlie a text and how they are connected with the particular context in which the text has been produced. In short, textual analysis consists of describing the characteristics of a text and making interpretations to answer specific questions.

One of the challenges of textual analysis resides in how to turn complex, large-scale data into manageable information. Computer-assisted textual analysis can be instrumental at this point, as it allows you to perform certain tasks automatically (without having to read all the data) and makes it simple to observe patterns and get unexpected insights. For example, you could perform automated textual analysis on a large set of data and easily tag all the information according to a series of previously defined categories. You could also use it to extract specific pieces of data, like names, countries, emails, or any other features.
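As a minimal sketch of the two tasks just described, the following Python snippet tags a text against predefined categories and extracts email addresses with a regular expression. The category names, keyword sets, and the simplified email pattern are all assumptions made for illustration; a production system would more likely rely on a trained model than on keyword matching.

```python
import re

# Hypothetical categories and keywords, chosen only for illustration.
CATEGORIES = {
    "billing": {"invoice", "refund", "charge"},
    "shipping": {"delivery", "package", "courier"},
}

# Deliberately simple email pattern; it will miss some valid addresses.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def tag_text(text):
    """Return the sorted list of categories whose keywords appear in the text."""
    words = set(re.findall(r"[a-z']+", text.lower()))
    return sorted(name for name, keywords in CATEGORIES.items() if words & keywords)


def extract_emails(text):
    """Return every substring that looks like an email address."""
    return EMAIL_RE.findall(text)


sample = "Please send the refund invoice to ana@example.com before the delivery."
print(tag_text(sample))
print(extract_emails(sample))
```

Keyword matching keeps the example transparent, but it cannot handle synonyms or context, which is where machine learning models tend to help.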

Companies are using computer-assisted textual analysis to make sense of unstructured business data, and find relevant insights that lead to data-driven decisions. It’s being used to automate everyday tasks like ticket tagging and routing, improving productivity, and saving valuable time.

Difference Between Textual Analysis and Content Analysis?

When we talk about textual analysis we refer to a data-gathering process for analyzing text data. This qualitative methodology examines the structure, content, and meaning of a text, and how it relates to the historical and cultural context in which it was produced. To do so, textual analysis combines knowledge from different disciplines, like linguistics and semiotics.

Content analysis can be considered a subcategory of textual analysis, which intends to systematically analyze text, by coding the elements of the text to get quantitative insights. By coding text (that is, establishing different categories for the analysis), content analysis makes it possible to examine large sets of data and make replicable and valid inferences.

Sitting at the intersection between qualitative and quantitative approaches, content analysis has proved to be very useful to study a wide array of text data ― from newspaper articles to social media messages ― within many different fields, that range from academic research to organizational or business studies.
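To make the idea of coding concrete, here is a small sketch in Python that applies a codebook to a set of documents and reports how many documents receive each code. The codebook, its indicator terms, and the sample documents are hypothetical; in real content analysis the codebook would be defined and validated by the researchers.

```python
import re
from collections import Counter

# Hypothetical codebook: each code is defined by a few indicator terms.
CODEBOOK = {
    "economy": {"jobs", "taxes", "inflation"},
    "health": {"hospital", "vaccine", "doctors"},
}


def code_document(text):
    """Return the set of codes whose indicator terms occur in one document."""
    tokens = set(re.findall(r"[a-z]+", text.lower()))
    return {code for code, terms in CODEBOOK.items() if tokens & terms}


def code_frequencies(documents):
    """Count, for each code, how many documents it was assigned to."""
    counts = Counter()
    for doc in documents:
        counts.update(code_document(doc))
    return counts


# Invented documents, used only to exercise the functions.
docs = [
    "New taxes could slow the creation of jobs.",
    "The hospital hired more doctors this year.",
    "Inflation is raising hospital costs.",
]
freq = code_frequencies(docs)
for code in sorted(CODEBOOK):
    print(code, freq[code], f"{freq[code] / len(docs):.0%}")
```

Because the same codebook is applied to every document in the same way, another analyst running it on the same data should arrive at the same counts, which is what makes the inferences replicable.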

What is Computer-Assisted Textual Analysis?

Computer-assisted textual analysis involves using software, a digital platform, or computational tools to perform tasks related to text analysis automatically.

The developments in machine learning make it possible to create algorithms that can be trained with examples and learn a series of tasks, from identifying topics on a given text to extracting relevant information from an extensive collection of data. Natural Language Processing (NLP), another sub-field of AI, helps machines process unstructured data and transform it into manageable information that’s ready to analyze.

Automated textual analysis enables you to analyze large amounts of data that would require a significant amount of time and resources if done manually. Not only is automated textual analysis fast and straightforward, but it’s also scalable and provides consistent results.

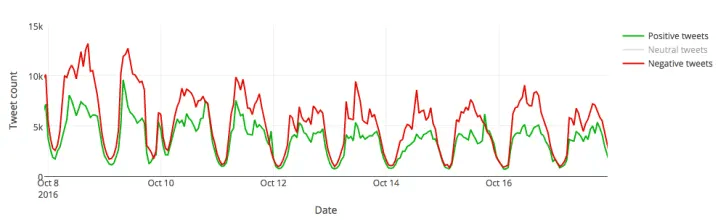

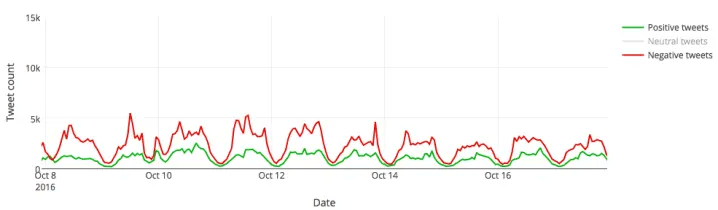

Let’s look at an example. During the 2016 US elections, we used MonkeyLearn to analyze millions of tweets referring to Donald Trump and Hillary Clinton. A text classification model allowed us to tag each tweet with one of two predefined categories: Trump and Hillary. The results showed that, on an average day, Donald Trump was getting around 450,000 Twitter mentions while Hillary Clinton was only getting about 250,000. And that was just the tip of the iceberg! What was really interesting were the nuances of those mentions: were they favorable or unfavorable? By performing sentiment analysis, we were able to discover the feelings behind those messages and gain some interesting insights about the polarity of those opinions.

For example, this is what Trump’s tweets looked like when counted by sentiment:

And this graphic shows the same for Hillary Clinton:

There are many methods and techniques for automated textual analysis. In the following section, we’ll take a closer look at each of them so that you have a better idea of what you can do with computer-assisted textual analysis.

Textual Analysis Methods & Techniques

- Word frequency

- Collocation

- Concordance

Basic Methods

Word Frequency

Word frequency helps you find the most recurrent terms or expressions within a set of data. Counting the times a word is mentioned in a group of texts can lead you to interesting insights, for example, when analyzing customer feedback responses. If the terms ‘hard to use’ or ‘complex’ often appear in comments about your product, it may indicate you need to make UI/UX adjustments.
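As a rough illustration, word frequency can be computed with a few lines of Python. This is a minimal sketch: the comments are invented for the example, and the simple regular expression used to split words is an assumption, not a full tokenizer.

```python
import re
from collections import Counter

# Hypothetical customer comments, invented for illustration.
comments = [
    "The dashboard is complex and hard to use.",
    "Great features, but the setup is complex.",
    "Hard to use at first, then it gets easier.",
]

def word_frequencies(texts):
    """Count how often each lowercase word appears across all texts."""
    counts = Counter()
    for text in texts:
        counts.update(re.findall(r"[a-z']+", text.lower()))
    return counts

frequencies = word_frequencies(comments)

# Show the five most frequent words with their counts.
print(frequencies.most_common(5))
```

Very common words such as ‘the’ or ‘is’ tend to dominate raw counts, so in practice they are usually filtered out before interpreting the results.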

By ‘collocation’ we mean a sequence of words that frequently occur together. Collocations are usually bigrams (pairs of words) or trigrams (combinations of three words). ‘Average salary’, ‘global market’, ‘close a deal’, ‘make an appointment’, and ‘attend a meeting’ are examples of collocations related to business.

In textual analysis, identifying collocations is useful to understand the semantic structure of a text. Counting bigrams and trigrams as one word improves the accuracy of the analysis.

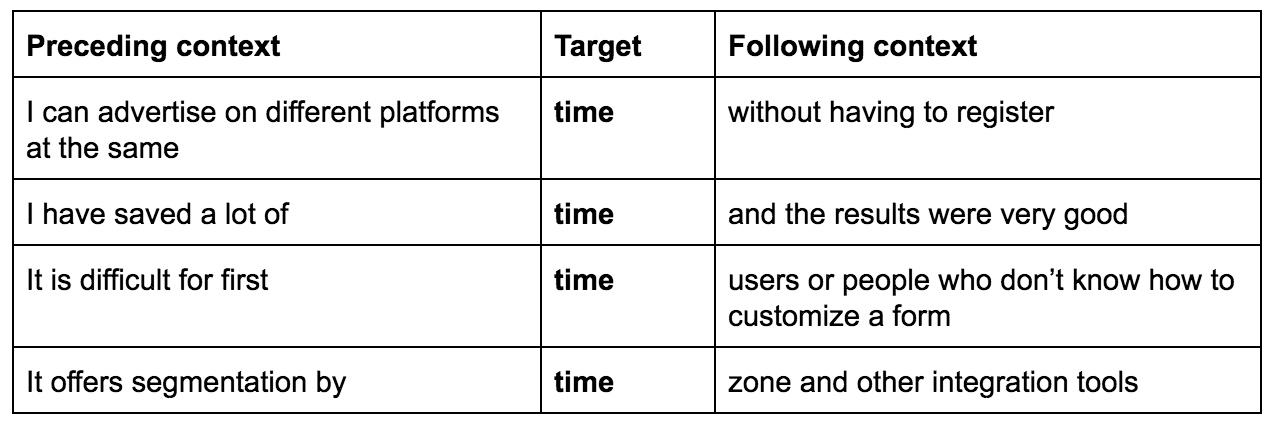
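One simple way to surface candidate collocations is to count bigrams and trigrams and keep the ones that repeat. The sketch below does exactly that on an invented sample text. Raw counts are a simplification: statistical association measures are often used in practice to separate true collocations from words that merely happen to be frequent.

```python
import re
from collections import Counter

def ngrams(tokens, n):
    """Return every run of n consecutive tokens as a tuple."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

# Invented sample text for illustration.
text = (
    "We need to close a deal this week. The global market is slow, "
    "but we can still close a deal if the global market recovers."
)

# Note: this simple tokenizer ignores sentence boundaries,
# so some n-grams span two sentences.
tokens = re.findall(r"[a-z']+", text.lower())

bigram_counts = Counter(ngrams(tokens, 2))
trigram_counts = Counter(ngrams(tokens, 3))

# Keep only the word pairs that occur more than once.
repeated_bigrams = {gram: c for gram, c in bigram_counts.items() if c > 1}
print(repeated_bigrams)
```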

Human language is ambiguous: depending on the context, the same word can mean different things. Concordance identifies every instance in which a word or series of words appears, along with its surrounding context, so that you can work out its exact meaning. For example, here are a few sentences from product reviews containing the word ‘time’:
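A concordance is commonly displayed as a keyword-in-context (KWIC) listing. The sketch below builds one for the word ‘time’. The review sentences are invented for illustration (they are not real data), and the function name `concordance` and its `window` parameter are assumptions made for this example.

```python
import re

def concordance(texts, keyword, window=4):
    """Return (left context, keyword, right context) for each occurrence.

    Up to `window` words are kept on each side of the keyword.
    """
    lines = []
    for text in texts:
        tokens = re.findall(r"[\w']+", text)
        for i, token in enumerate(tokens):
            if token.lower() == keyword.lower():
                left = " ".join(tokens[max(0, i - window):i])
                right = " ".join(tokens[i + 1:i + 1 + window])
                lines.append((left, token, right))
    return lines

# Invented review sentences, not real data.
reviews = [
    "Setting it up took a long time but it works well.",
    "This is the first time I have had no issues.",
    "Support replied in no time at all.",
]

kwic = concordance(reviews, "time")
for left, word, right in kwic:
    # Right-align the left context so the keyword lines up in a column.
    print(f"{left:>30} [{word}] {right}")
```

Lining up the keyword in a column makes it easier to see that ‘a long time’, ‘the first time’, and ‘in no time’ use the same word in different senses.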

Advanced Methods

Text Classification

Text classification is the process of assigning tags or categories to unstructured data based on its content.

When we talk about unstructured data we refer to all sorts of text-based information that is unorganized, and therefore complex to sort and manage. For businesses, unstructured data may include emails, social media posts, chats, online reviews, and support tickets, among many others. Text classification, one of the essential tasks of Natural Language Processing (NLP), makes it possible to analyze text in a simple and cost-efficient way, organizing the data according to topic, urgency, sentiment, or intent. We’ll take a closer look at each of these applications below:

Topic Analysis consists of assigning predefined tags to an extensive collection of text data, based on its topics or themes. Let’s say you want to analyze a series of product reviews to understand what aspects of your product are being discussed, and a review reads ‘the customer service is very responsive, they are always ready to help’. This piece of feedback will be tagged under the topic ‘Customer Service’.

Sentiment Analysis, also known as ‘opinion mining’, is the automated process of identifying the opinion or emotion expressed in a text, typically as a polarity (e.g. positive, negative, or neutral). Sentiment analysis provides exciting opportunities in all kinds of fields. In business, you can use it to analyze customer feedback, social media posts, emails, support tickets, and chats. For instance, you could analyze support tickets to identify angry customers and solve their issues as a priority. You may also combine topic analysis with sentiment analysis (an approach called aspect-based sentiment analysis) to identify the topics being discussed about your product and how people are reacting to those topics. For example, take the product review we mentioned earlier for topic analysis: ‘the customer service is very responsive, they are always ready to help’. This statement would be classified as both Positive and Customer Service.

Language detection: this allows you to classify a text based on its language. It’s particularly useful for routing purposes. For example, if you get a support ticket in Spanish, it could be automatically routed to a Spanish-speaking customer support team.

Intent detection: text classifiers can also be used to recognize the intent of a given text. What is the purpose behind a specific message? This can be helpful if you need to analyze customer support conversations or the results of a sales email campaign. For example, you could analyze email responses and classify your prospects based on their level of interest in your product.
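To make the idea of combining topic and sentiment tags concrete, here is a deliberately simple sketch that tags the review quoted above by keyword overlap. The keyword lists, the `tag` function, and the ‘Other’ and ‘Neutral’ fallback labels are all hypothetical and chosen only for this example. Real classifiers are usually trained models, not hand-picked word lists.

```python
import re

# Hypothetical keyword lists, chosen only for this illustration.
TOPIC_KEYWORDS = {
    "Customer Service": {"service", "support", "help"},
    "Pricing": {"price", "expensive", "cheap"},
}
SENTIMENT_KEYWORDS = {
    "Positive": {"responsive", "great", "ready"},
    "Negative": {"slow", "rude", "broken"},
}

def tag(text, keyword_map, default):
    """Return the label whose keywords overlap most with the text.

    Falls back to `default` when no keyword matches at all.
    """
    words = set(re.findall(r"[a-z]+", text.lower()))
    scores = {label: len(words & keywords) for label, keywords in keyword_map.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else default

review = "the customer service is very responsive, they are always ready to help"
topic = tag(review, TOPIC_KEYWORDS, default="Other")
sentiment = tag(review, SENTIMENT_KEYWORDS, default="Neutral")
print(topic, sentiment)
```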

Text Extraction

Text extraction is a textual analysis technique which consists of extracting specific terms or expressions from a collection of text data. Unlike text classification, the result is not a predefined tag but a piece of information that is already present in the text. For example, if you have a large collection of emails to analyze, you could easily pull out specific information such as email addresses, company names or any keyword that you need to retrieve. In some cases, you can combine text classification and text extraction in the same analysis.

The most useful text extraction tasks include:

Named-entity recognition: used to extract the names of companies, people, or organizations from a set of data.

Keyword extraction: allows you to extract the most relevant terms within a text. You can use keyword extraction to index data to be searched, create tag clouds, or summarize the content of a text, among many other things.

Feature extraction: used to identify specific characteristics within a text. For example, if you are analyzing a series of product descriptions, you could create customized extractors to retrieve information like brand, model, color, etc.
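The email example mentioned earlier is one of the simplest extraction tasks to sketch, because email addresses follow a recognizable pattern. The snippet below pulls them out with a regular expression. The pattern is intentionally simplified (valid addresses can take more forms than it covers), and the message is invented, using reserved example domains.

```python
import re

# Simplified pattern; real-world email address syntax is more permissive.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")

def extract_emails(text):
    """Return every substring of text that looks like an email address."""
    return EMAIL_PATTERN.findall(text)

# Invented message using reserved example domains.
message = (
    "Hi, please send the invoice to billing@example.com and copy "
    "jane.doe@example.org. Thanks!"
)

emails = extract_emails(message)
print(emails)
```

Unlike a classifier, the extractor returns text that is already present in the input, not a predefined tag.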

Why is Textual Analysis Important?

Every day, we create a colossal amount of digital data. In fact, in the last two years alone we generated 90% of all the data in the world. That includes social media messages, emails, Google searches, and every other source of online data.

At the same time, books, media libraries, reports, and other types of databases are now available in digital format, providing researchers of all disciplines opportunities that didn’t exist before.

But the problem is that most of this data is unstructured. Since it doesn’t follow any organizational criteria, unstructured text is hard to search, manage, and examine. In this scenario, automated textual analysis tools are essential, as they help make sense of text data and find meaningful insights in a sea of information.

Text analysis enables businesses to go through massive collections of data with minimum human effort, saving precious time and resources, and allowing people to focus on areas where they can add more value. Here are some of the advantages of automated textual analysis:

Scalability

You can analyze as much data as you need in just seconds. Not only will you save valuable time, but you’ll also make your teams much more productive.

Real-time analysis

For businesses, it is key to detect angry customers on time or be warned of a potential PR crisis. By creating customized machine learning models for text analysis, you can easily monitor chats, reviews, social media channels, support tickets and all sorts of crucial data sources in real time, so you’re ready to take action when needed.

Academic researchers, especially in the political science field, may find real-time analysis with machine learning particularly useful to analyze polls, Twitter data, and election results.

Consistent criteria

Routine manual tasks (like tagging incoming tickets or processing customer feedback) often end up being tedious and time-consuming. Mistakes become more likely, and the criteria applied by different team members often turn out to be inconsistent and subjective. Machine learning algorithms, on the other hand, learn from previous examples and always use the same criteria to analyze data.

How does Textual Analysis Work?

Computer-assisted textual analysis makes it easy to analyze large collections of text data and find meaningful information. Thanks to machine learning, it is possible to create models that learn from examples and can be trained to classify or extract relevant data.

But how easy is it to get started with textual analysis?

As with most things related to artificial intelligence (AI), automated text analysis is perceived as a complex tool, only accessible to those with programming skills. Fortunately, that’s no longer the case. AI platforms like MonkeyLearn are actually very simple to use and don’t require any previous machine learning expertise. First-time users can try different pre-trained text analysis models right away, and use them for specific purposes even if they don’t have coding skills or have never studied machine learning.

However, if you want to take full advantage of textual analysis and create your own customized models, you should understand how it works.

There are two steps you need to follow before running an automated analysis: data gathering and data preparation. Here, we’ll explain them in more detail:

Data gathering: when we think of a topic we want to analyze, we should first make sure that we can obtain the data we need. Let’s say you want to analyze all the customer support tickets your company has received over a designated period of time. You should be able to export that information from your software and create a CSV or an Excel file. The data can be either internal (that is, data that’s only available to your business, like emails, support tickets, chats, spreadsheets, surveys, databases, etc.) or external (like review sites, social media, news outlets, or other websites).

Data preparation: before performing automated text analysis it’s necessary to prepare the data that you are going to use. This is done by applying a series of Natural Language Processing (NLP) techniques. Tokenization, parsing, lemmatization, stemming, and stopword removal are just a few of them.
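To give a feel for what data preparation does, the sketch below applies three of these techniques to a single invented support ticket: tokenization, stopword removal, and a very crude form of stemming. The stopword list and the suffix-stripping rule are assumptions made for this example. Real projects normally rely on an established NLP library for these steps.

```python
import re

# A tiny, hypothetical stopword list; real lists are much longer.
STOPWORDS = {"a", "an", "the", "is", "are", "to", "of", "and", "it", "was"}

def tokenize(text):
    """Split text into lowercase word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())

def remove_stopwords(tokens):
    """Drop very common words that carry little meaning on their own."""
    return [t for t in tokens if t not in STOPWORDS]

def crude_stem(token):
    """Strip a few common English suffixes. A stand-in for a real stemmer."""
    for suffix in ("ing", "ed", "s"):
        # Only strip when at least three characters would remain.
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[: -len(suffix)]
    return token

def prepare(text):
    """Tokenize, remove stopwords, then stem each remaining token."""
    return [crude_stem(t) for t in remove_stopwords(tokenize(text))]

# Invented support ticket for illustration.
ticket = "The app crashed and it is failing to load the reports"
prepared = prepare(ticket)
print(prepared)
```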

Once these steps are complete, you will be all set up for the data analysis itself. In this section, we’ll refer to how the most common textual analysis methods work: text classification and text extraction.

Text classification is the process of assigning tags to a collection of data based on its content.

When done manually, text categorization is a time-consuming task that often leads to mistakes and inaccuracies. By doing this automatically, it is possible to obtain very good results while spending less time and resources. There are three main approaches to automatic text classification: rule-based, machine learning, and hybrid.

Rule-based systems

Rule-based systems follow an ‘if-then’ (condition-action) structure based on linguistic rules. Basically, rules are human-made associations between a linguistic pattern in a text and a predefined tag. These linguistic patterns often refer to morphological, syntactic, lexical, semantic, or phonological aspects.

For instance, this could be a rule to classify a series of laptop descriptions:

( Lenovo | Sony | Hewlett Packard | Apple ) → Brand

In this case, when the text classification model detects any of those words within a text (the ‘if’ portion), it will assign the predefined tag ‘Brand’ to them (the ‘then’ portion).
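The rule above maps naturally onto a regular expression paired with a tag. The following is a minimal sketch of that idea, not how any particular platform implements rules. The `RULES` list, the `apply_rules` function, and the laptop description are assumptions made for this example.

```python
import re

# Each rule pairs a pattern (the 'if' part) with a tag (the 'then' part).
RULES = [
    (re.compile(r"\b(Lenovo|Sony|Hewlett Packard|Apple)\b", re.IGNORECASE), "Brand"),
]

def apply_rules(text):
    """Return (matched_text, tag) pairs for every rule match in text."""
    matches = []
    for pattern, tag in RULES:
        for match in pattern.finditer(text):
            matches.append((match.group(0), tag))
    return matches

# Invented laptop description for illustration.
description = "Lenovo ThinkPad with 16 GB RAM, comparable to the Apple MacBook Air"
tagged = apply_rules(description)
print(tagged)
```

Supporting a new tag means appending another pattern and tag pair to `RULES`, and every addition has to be tested against the existing rules.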

One of the main advantages of rule-based systems is that they are easy to understand by humans. On the downside, creating complex systems is quite tricky, because you need to have good knowledge of linguistics and of the topics present in the text that you want to analyze. Besides, adding new rules can be tough as it requires several tests, making rule-based systems hard to scale.

Machine learning-based systems

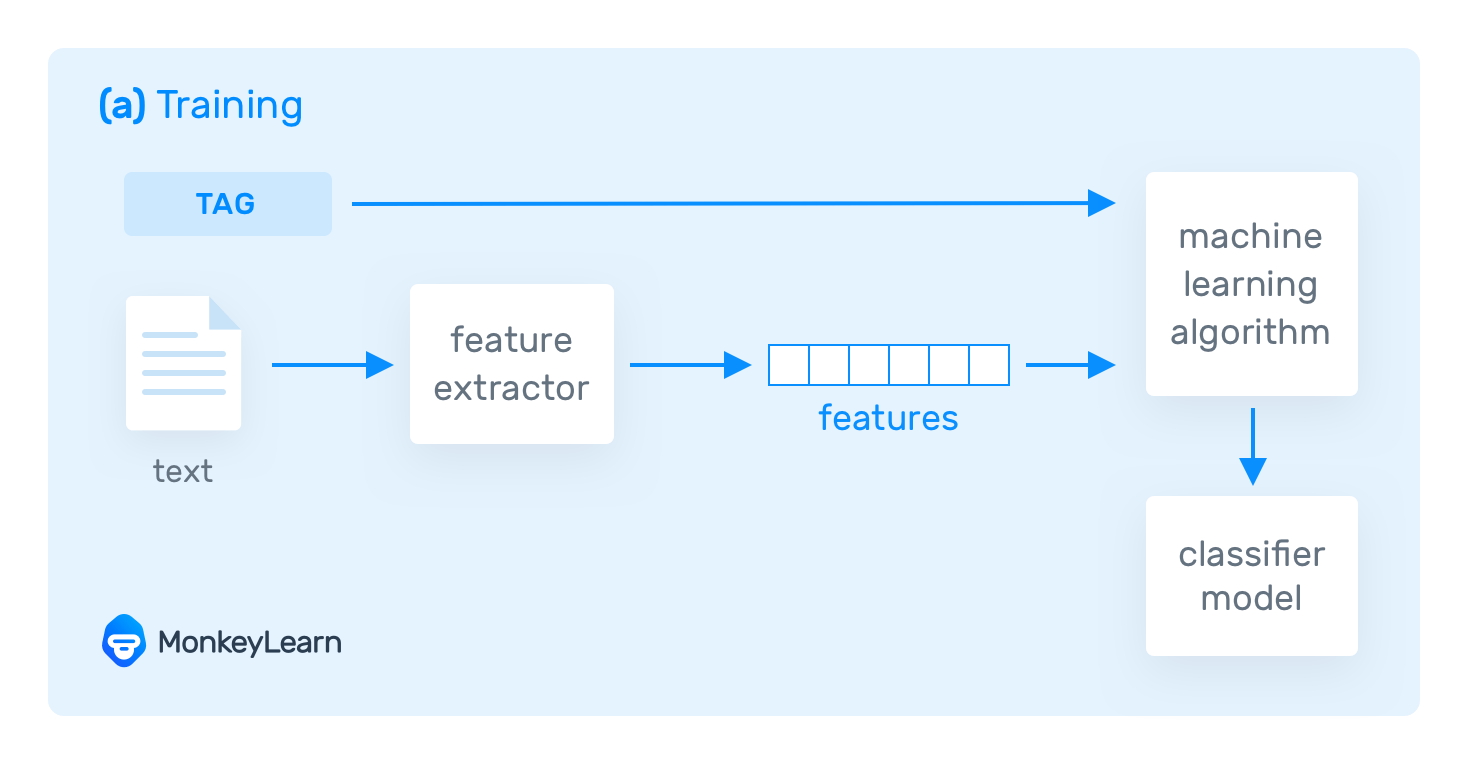

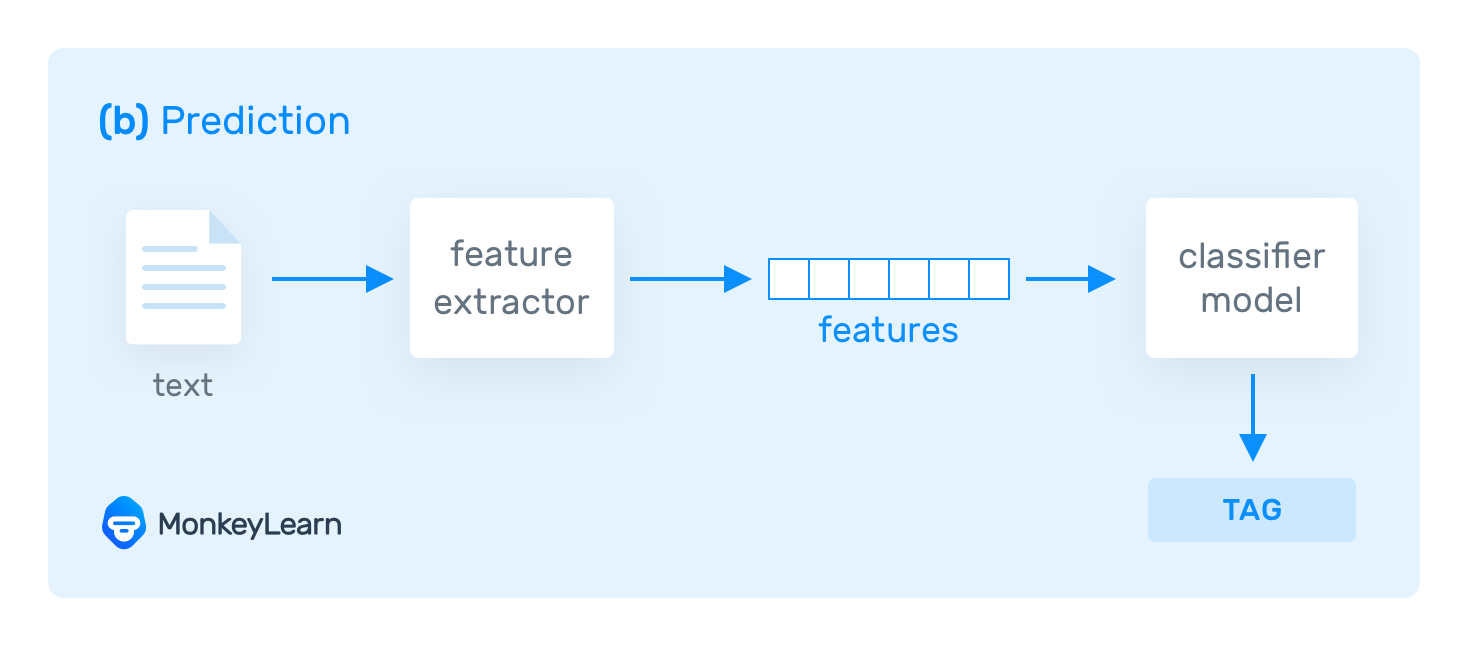

Machine learning-based systems are trained to make predictions based on examples. This means that a person needs to provide representative and consistent samples and assign the expected tags manually so that the system learns to make its own predictions from those past observations. The collection of manually tagged data is called training data.

But how does machine learning actually work?

Suppose you are training a machine learning-based classifier. The system needs to transform the training data into something it can understand: in this case, vectors (arrays of numbers that encode the data). Each vector represents a set of relevant features from the given text, and the system uses these features to learn and to make predictions on future data.

One of the most common methods for text vectorization is called bag of words and consists of counting how many times a particular word (from a predetermined list of words) appears in the text you want to analyze.
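Here is a minimal sketch of bag-of-words vectorization as just described. The vocabulary and the sample sentence are invented for illustration, and `bag_of_words` is a hypothetical helper, not a library function.

```python
import re

# Predetermined vocabulary; the word list is invented for illustration.
VOCABULARY = ["battery", "screen", "great", "poor", "price"]

def bag_of_words(text, vocabulary=VOCABULARY):
    """Return a count vector with one entry per vocabulary word."""
    tokens = re.findall(r"[a-z']+", text.lower())
    return [tokens.count(word) for word in vocabulary]

vector = bag_of_words("Great screen, great price, but the battery is poor")
print(vector)
```

Each position in the vector corresponds to one vocabulary word, so word order and any words outside the vocabulary are discarded.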