Multiple Regression Analysis Example with Conceptual Framework

Data analysis using multiple regression analysis is a fairly common tool used in statistics. Many graduate students find this too complicated to understand. However, this is not that difficult to do, especially with computers as everyday household items nowadays. You can now quickly analyze more than just two sets of variables in your research using multiple regression analysis.

How is multiple regression analysis done? This article explains this handy statistical test for dealing with many variables, then walks through an example study to show how research using multiple regression analysis is conducted.

Multiple regression is often confused with multivariate regression. Multivariate regression, while also using several variables, deals with more than one dependent variable. Karen Grace-Martin clearly explains the difference in her post on the Multiple Regression Model and the Multivariate Regression Model.

Statistical software applications used in computing multiple regression analysis.

Multiple regression analysis is a powerful statistical test used to find the relationship between a given dependent variable and a set of independent variables.

Using multiple regression analysis requires dedicated statistical software such as the popular Statistical Package for the Social Sciences (SPSS), Statistica, Microstat, and open-source statistical software applications like SOFA Statistics and JASP, among other sophisticated statistical packages.

Two decades ago, it would have been nearly impossible to do the calculations using a simple calculator, a tool now made obsolete by smartphones.

However, a standard spreadsheet application like Microsoft Excel can help you compute and model the relationship between the dependent variable and a set of predictor or independent variables. But you cannot do this without first activating the statistical tools add-in that ships with MS Excel.

Activating MS Excel

To activate the add-in for multiple regression analysis in MS Excel, you may view the two-minute YouTube tutorial below. If you already have this installed on your computer, you may proceed to the next section.

Multiple Regression Analysis Example

I will illustrate the use of multiple regression analysis by citing the actual research activity that my graduate students undertook two years ago.

The study pertains to identifying the factors predicting a current problem among high school students, the long hours they spend online for a variety of reasons. The purpose is to address many parents’ concerns about their difficulty of weaning their children away from the lures of online gaming, social networking, and other engaging virtual activities.

Review of Literature on Internet Use and Its Effect on Children

Upon reviewing the literature, the graduate students discovered that very few studies were conducted on the subject. Studies on problems associated with internet use are still in their infancy as the Internet has just begun to influence everyone’s life.

Hence, with my guidance, the group of six graduate students comprising school administrators, heads of elementary and high schools, and faculty members proceeded with the study.

Given that there is a need to use a computer to analyze multiple variable data, a principal who is nearing retirement was “forced” to buy a laptop, as she had none. Anyhow, she is very much open-minded and performed the class activities that require data analysis with much enthusiasm.

The Research on High School Students’ Use of the Internet

The brief research using multiple regression analysis is a broad study or analysis of the reasons or underlying factors that significantly relate to the number of hours devoted by high school students in using the Internet. The regression analysis is broad because it only focuses on the total number of hours devoted by high school students to activities online.

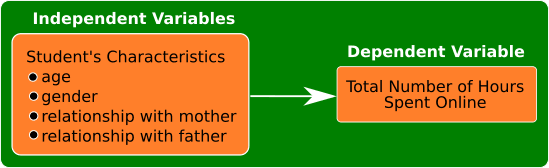

They correlated the time high school students spent online with their profile. The students’ profile comprised more than two independent variables, hence the term “multiple.” The independent variables are age, gender, relationship with the mother, and relationship with the father.

The statement of the problem in this study is:

“Is there a significant relationship between the total number of hours spent online and the students’ age, gender, relationship with their mother, and relationship with their father?”

Each student’s relationship with each parent was gauged using a scale of 1 to 10, with 1 being a poor relationship and 10 being the best experience with parents. The figure below shows the paradigm of the study.

Notice that in research using multiple regression studies such as this, there is only one dependent variable involved. That is the total number of hours spent by high school students online.

Although many studies have identified factors that influence the use of the internet, it is standard practice to include the respondents’ profile among the set of predictor or independent variables. Hence, the standard variables age and gender are included in the multiple regression analysis.

Also, among the set of variables that may influence internet use, only the relationship between children and their parents was tested. The intention of this research using multiple regression analysis is to determine if parents spend quality time establishing strong emotional bonds between them and their children.

Findings of the Research Using Multiple Regression Analysis

What are the findings of this exploratory study? This quick example of research using multiple regression analysis revealed an interesting finding.

The number of hours spent online relates significantly to the number of hours spent by a parent, specifically the mother, with her child. These two factors are inversely or negatively correlated.

The relationship means that the greater the number of hours spent by the mother with her child to establish a closer emotional bond, the fewer hours spent by her child using the internet. The number of hours spent by the children online relates significantly to the mother’s number of hours interacting with their children.

While this result may be a significant finding, the mother-child bond accounts for only a small percentage of the variance in total hours spent by the child online. This observation means that other factors need to be addressed to resolve children’s long waking hours and their abandonment of serious study of lessons.

But establishing a close bond between mother and child is a good start. Undertaking more investigations along this research concern will help strengthen the findings of this study.

The above example of research using multiple regression analysis shows that the statistical tool is useful in predicting the behavior of a dependent variable. In the above case, this is the number of hours spent by students online.

The identification of significant predictors can help determine the correct intervention to resolve the problem. Using multiple regression approaches prevents unnecessary costs for remedies that do not address an issue or a question.

Thus, research using multiple regression analysis streamlines solutions and focuses on those influential factors that must be given attention.

Once you become an expert in using multiple regression in analyzing data, you can try your hand at multivariate regression, where you will deal with more than one dependent variable.

©2012 November 11 Patrick Regoniel Updated: 14 November 2020

About the Author

Dr. Regoniel, a faculty member of the graduate school, served as consultant to various environmental research and development projects covering issues and concerns on climate change, coral reef resources and management, economic valuation of environmental and natural resources, mining, and waste management and pollution. He has extensive experience in applied statistics and in systems modelling and analysis, and is an avid practitioner of LaTeX and a multidisciplinary web developer. He leverages pioneering AI-powered content creation tools to produce unique and comprehensive articles on this website.

mostly in monasteries.

Manuscript is a collective name for texts

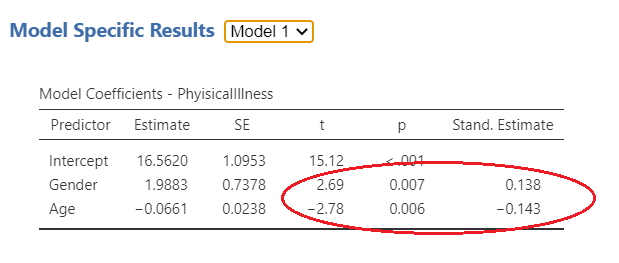

the example is good but lacks the table of regression results. With the tables, a student could learn more on how to interpret regression results

this is so enlightening,hope it reaches most of the parents…

nice; but it is not good enough for reference

This is an action research Daniel. And I have updated it here. It can set off other studies. And please take note that blogs nowadays are already recognized sources of information. Please read my post here on why this is so: https://simplyeducate.me/wordpress_Y//2019/09/26/using-blogs-in-education/

Was this study published? It may have important implications

Dear Gabe, this study was presented by one of my students in a conference. I am just unsure if she was able to publish it in a journal.

Multiple linear regression

Fig. 11 Multiple linear regression

Model: \(y_i = \beta_0 + \beta_1 x_{i,1} + \dots + \beta_p x_{i,p} + \varepsilon_i,\quad i=1,\dots,n\)

Errors: \(\varepsilon_i \sim N(0,\sigma^2)\quad \text{i.i.d.}\)

Fit: the estimates \(\hat\beta_0,\dots,\hat\beta_p\) are chosen to minimize the residual sum of squares (RSS):

\[ \text{RSS} = \sum_{i=1}^n (y_i - \beta_0- \beta_1 x_{i,1}-\dots-\beta_p x_{i,p})^2 \]

Matrix notation: with \(\beta=(\beta_0,\dots,\beta_p)\) and \({X}\) our usual data matrix with an extra column of ones on the left to account for the intercept, we can write

\[ Y = X\beta + \varepsilon. \]

Multiple linear regression answers several questions

Is at least one of the variables \(X_i\) useful for predicting the outcome \(Y\)?

Which subset of the predictors is most important?

How good is a linear model for these data?

Given a set of predictor values, what is a likely value for \(Y\), and how accurate is this prediction?

The estimates \(\hat\beta\)

Our goal again is to minimize the RSS:

\[ \begin{aligned} \text{RSS}(\beta) &= \sum_{i=1}^n (y_i -\hat y_i(\beta))^2 \\ & = \sum_{i=1}^n (y_i - \beta_0- \beta_1 x_{i,1}-\dots-\beta_p x_{i,p})^2 \\ &= \|Y-X\beta\|^2_2 \end{aligned} \]

One can show that this is minimized by the vector \(\hat\beta\):

\[ \hat\beta = ({X}^T{X})^{-1}{X}^T{Y}. \]

We usually write \(RSS=RSS(\hat{\beta})\) for the minimized RSS.
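As a minimal sketch of the closed-form solution above, the following pure-Python code builds the normal equations \(X^TX\beta = X^TY\) and solves them by Gauss-Jordan elimination. The function names `ols` and `rss` and the toy data are assumptions made for illustration, not part of these notes; in practice one would normally rely on a numerical library rather than forming \(X^TX\) by hand.

```python
def ols(X, y):
    """Least-squares coefficients [b0, b1, ..., bp] via the normal equations.

    X is a list of rows of predictor values (without the column of ones);
    y is the list of responses.
    """
    rows = [[1.0] + [float(v) for v in row] for row in X]  # prepend intercept column
    k = len(rows[0])
    # Augmented matrix [X^T X | X^T y]
    A = [
        [sum(r[a] * r[b] for r in rows) for b in range(k)]
        + [sum(r[a] * yi for r, yi in zip(rows, y))]
        for a in range(k)
    ]
    # Gauss-Jordan elimination with partial pivoting
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(A[r][col]))
        if abs(A[pivot][col]) < 1e-12:
            raise ValueError("X^T X is singular; predictors may be collinear")
        A[col], A[pivot] = A[pivot], A[col]
        scale = A[col][col]
        A[col] = [v / scale for v in A[col]]
        for r in range(k):
            if r != col:
                factor = A[r][col]
                A[r] = [vr - factor * vc for vr, vc in zip(A[r], A[col])]
    return [A[r][k] for r in range(k)]


def rss(beta, X, y):
    """Residual sum of squares for coefficients beta = [b0, b1, ..., bp]."""
    total = 0.0
    for row, yi in zip(X, y):
        fitted = beta[0] + sum(b * v for b, v in zip(beta[1:], row))
        total += (yi - fitted) ** 2
    return total


# Toy data generated exactly from y = 1 + 2*x1 + 3*x2 (no noise)
X = [[1, 2], [2, 1], [3, 4], [4, 3], [5, 5]]
y = [1 + 2 * x1 + 3 * x2 for x1, x2 in X]
beta_hat = ols(X, y)
print([round(b, 6) for b in beta_hat])
print(rss(beta_hat, X, y))
```

Because the toy responses contain no noise, the recovered coefficients should be close to (1, 2, 3) and the minimized RSS close to zero, up to floating-point error.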

Which variables are important?

Consider the hypothesis: \(H_0:\) the last \(q\) predictors have no relation with \(Y\).

Based on our model: \(H_0:\beta_{p-q+1}=\beta_{p-q+2}=\dots=\beta_p=0.\)

Let \(\text{RSS}_0\) be the minimized residual sum of squares for the model which excludes these variables.

The \(F\)-statistic is defined by:

\[ F = \frac{(\text{RSS}_0-\text{RSS})/q}{\text{RSS}/(n-p-1)}. \]

Under the null hypothesis (of our model), this has an \(F\)-distribution with \(q\) and \(n-p-1\) degrees of freedom.

Example: If \(q=p\), we test whether any of the variables is important. The reduced model then contains only the intercept, so

\[ \text{RSS}_0 = \sum_{i=1}^n(y_i-\overline y)^2. \]

The \(t\)-statistic associated to the \(i\)th predictor satisfies \(t^2 = F\), where \(F\) is the \(F\)-statistic for the null hypothesis which sets only \(\beta_i=0\). In other words, \(|t|\) is the square root of that \(F\)-statistic.

A low \(p\)-value indicates that the predictor is important.

Warning: If there are many predictors, some of the \(t\)-tests will have low \(p\)-values purely by chance, even when the model has no explanatory power.
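The \(F\)-statistic defined in this section is simple arithmetic once the two residual sums of squares are known. The sketch below is only an illustration: the function name `f_statistic` and the numbers passed to it are hypothetical, not results from any data set in these notes.

```python
def f_statistic(rss0, rss, n, p, q):
    """F-statistic for H0: the last q of p predictors have zero coefficients.

    rss0: minimized RSS of the reduced model (without the q predictors)
    rss:  minimized RSS of the full model with all p predictors
    n:    number of observations
    """
    numerator = (rss0 - rss) / q
    denominator = rss / (n - p - 1)
    return numerator / denominator


# Hypothetical values: removing both predictors (q = p = 2) doubles the RSS
print(f_statistic(rss0=100.0, rss=50.0, n=23, p=2, q=2))  # 10.0
```

The resulting value would then be compared against an \(F\)-distribution with \(q\) and \(n-p-1\) degrees of freedom to obtain a \(p\)-value.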

How many variables are important?

When we select a subset of the predictors, we have \(2^p\) choices.

A way to simplify the choice is to define a range of models with an increasing number of variables, then select the best.

Forward selection: Starting from a null model, include variables one at a time, minimizing the RSS at each step.

Backward selection: Starting from the full model, eliminate variables one at a time, choosing the one with the largest p-value at each step.

Mixed selection: Starting from some model, include variables one at a time, minimizing the RSS at each step. If the p-value for some variable goes beyond a threshold, eliminate that variable.

Choosing one model in the range produced is a form of tuning. This tuning can invalidate some of our methods like hypothesis tests and confidence intervals…
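Forward selection as described above can be sketched in a few lines. This is a simplified illustration under stated assumptions: the helper names `solve`, `fit_rss` and `forward_selection` are hypothetical, the data are synthetic, and the function only returns the order in which variables enter; choosing where to stop along that path is the tuning step mentioned above.

```python
def solve(A, b):
    """Solve the square system A x = b by Gauss-Jordan elimination."""
    k = len(A)
    M = [[float(v) for v in A[i]] + [float(b[i])] for i in range(k)]
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        scale = M[col][col]
        M[col] = [v / scale for v in M[col]]
        for r in range(k):
            if r != col:
                factor = M[r][col]
                M[r] = [vr - factor * vc for vr, vc in zip(M[r], M[col])]
    return [M[r][k] for r in range(k)]


def fit_rss(columns, y):
    """Minimized RSS of a least-squares fit of y on an intercept plus columns."""
    n = len(y)
    design = [[1.0] + [col[i] for col in columns] for i in range(n)]
    k = len(design[0])
    xtx = [[sum(row[a] * row[b] for row in design) for b in range(k)]
           for a in range(k)]
    xty = [sum(row[a] * yi for row, yi in zip(design, y)) for a in range(k)]
    beta = solve(xtx, xty)
    return sum((yi - sum(bj * v for bj, v in zip(beta, row))) ** 2
               for row, yi in zip(design, y))


def forward_selection(predictors, y):
    """Return predictor indices in the order forward selection adds them."""
    chosen, remaining = [], list(range(len(predictors)))
    while remaining:
        # Add the variable whose inclusion gives the smallest RSS
        best = min(remaining,
                   key=lambda j: fit_rss([predictors[i] for i in chosen + [j]], y))
        chosen.append(best)
        remaining.remove(best)
    return chosen


# Synthetic data (an assumption for illustration): y = 3*x1 + x2, x3 is irrelevant
x1 = [1, 2, 3, 4, 5, 6]
x2 = [1, -1, 1, -1, 1, -1]
x3 = [2, 1, 0, 0, 1, 2]
y = [3 * a + b for a, b in zip(x1, x2)]
print(forward_selection([x1, x2, x3], y))  # [0, 1, 2]
```

The strongest predictor enters first, the weaker one second, and the irrelevant one last. Backward and mixed selection follow the same pattern with a different rule for adding or dropping a variable at each step.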

How good are the predictions?

The function predict in R outputs predictions, together with confidence or prediction intervals, from a linear model.

Confidence intervals reflect the uncertainty on \(\hat\beta\).

Prediction intervals reflect uncertainty on \(\hat\beta\) and the irreducible error \(\varepsilon\) as well.

These functions rely on our linear regression model

\[ Y = X\beta + \epsilon. \]
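For reference, the two kinds of interval can be written out under this model. This is the standard textbook form, given here as a sketch; \(x_0\) (a new predictor vector including the leading 1), \(\hat\sigma\) (the estimate of \(\sigma\)) and \(t^*\) (the appropriate quantile of the \(t\)-distribution with \(n-p-1\) degrees of freedom) are notation introduced only for this illustration.

\[ \begin{aligned} \text{Confidence interval for } x_0^T\beta: &\quad x_0^T\hat\beta \pm t^*\,\hat\sigma\sqrt{x_0^T(X^TX)^{-1}x_0} \\ \text{Prediction interval for a new response } Y_0: &\quad x_0^T\hat\beta \pm t^*\,\hat\sigma\sqrt{1 + x_0^T(X^TX)^{-1}x_0} \end{aligned} \]

The extra 1 under the second square root is the contribution of the irreducible error, which is why a prediction interval is always wider than the confidence interval at the same point.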

Dealing with categorical or qualitative predictors

For each qualitative predictor, e.g. Region:

Choose a baseline category, e.g. East

For every other category, define a new predictor:

\(X_\text{South}\) is 1 if the person is from the South region and 0 otherwise

\(X_\text{West}\) is 1 if the person is from the West region and 0 otherwise.

The model will be:

\[ Y = \beta_0 + \beta_1 X_1 +\dots +\beta_7 X_7 + \color{Red}{\beta_\text{South}} X_\text{South} + \beta_\text{West} X_\text{West} +\varepsilon. \]

The parameter \(\color{Red}{\beta_\text{South}}\) is the relative effect on Balance (our \(Y\)) for being from the South compared to the baseline category (East).

The model fit and predictions are independent of the choice of the baseline category.

However, hypothesis tests derived from these variables are affected by the choice.

Solution: To check whether region is important, use an \(F\) -test for the hypothesis \(\beta_\text{South}=\beta_\text{West}=0\) by dropping Region from the model. This does not depend on the coding.

Note that there are other ways to encode qualitative predictors that produce the same fit \(\hat f\), but the coefficients have different interpretations.
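The baseline-plus-indicators coding described in this section can be sketched as follows. The function name `dummy_code` and the sample values are assumptions for illustration; the region labels mirror the East/South/West example above.

```python
def dummy_code(values, baseline):
    """Encode a qualitative predictor as 0/1 indicator columns.

    Returns the non-baseline levels (sorted) and, for each observation,
    one indicator per level. The baseline level maps to all zeros.
    """
    levels = sorted(set(values) - {baseline})
    rows = [[1 if v == level else 0 for level in levels] for v in values]
    return levels, rows


regions = ["East", "South", "West", "South"]
levels, indicators = dummy_code(regions, baseline="East")
print(levels)      # ['South', 'West']
print(indicators)  # [[0, 0], [1, 0], [0, 1], [1, 0]]
```

Choosing a different baseline changes which rows are all zeros and therefore what each coefficient is compared against, but not the fitted values.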

So far, we have:

Defined Multiple Linear Regression

Discussed how to test the importance of variables.

Described one approach to choose a subset of variables.

Explained how to code qualitative variables.

Now, how do we evaluate model fit? Is the linear model any good? What can go wrong?

How good is the fit?

To assess the fit, we focus on the residuals

\[ e = Y - \hat{Y}. \]

The RSS always decreases as we add more variables.

The residual standard error (RSE) corrects this:

\[ \text{RSE} = \sqrt{\frac{1}{n-p-1}\text{RSS}}. \]
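A small sketch of the RSE formula, assuming the residuals have already been computed from some fitted model. The function name and the residual values are hypothetical.

```python
import math


def residual_standard_error(residuals, p):
    """RSE = sqrt(RSS / (n - p - 1)) for a model with p predictors."""
    n = len(residuals)
    rss = sum(e * e for e in residuals)
    return math.sqrt(rss / (n - p - 1))


# Hypothetical residuals from a model with one predictor: RSS = 16, n - p - 1 = 4
print(residual_standard_error([2, -2, 2, -2, 0, 0], p=1))  # 2.0
```

Dividing by \(n-p-1\) rather than \(n\) means that adding a predictor lowers the RSE only if the RSS drops by enough to offset the lost degree of freedom.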

Fig. 12 Residuals

Visualizing the residuals can reveal phenomena that are not accounted for by the model, e.g. synergies or interactions.

Potential issues in linear regression

Interactions between predictors

Non-linear relationships

Correlation of error terms

Non-constant variance of error (heteroskedasticity)

High leverage points

Collinearity

Interactions between predictors

Linear regression has an additive assumption:

\[ \mathtt{sales} = \beta_0 + \beta_1\times\mathtt{tv}+ \beta_2\times\mathtt{radio}+\varepsilon \]

i.e. an increase of 100 USD in TV ads causes a fixed increase of \(100 \beta_1\) USD in sales on average, regardless of how much you spend on radio ads.

We saw that in Fig 3.5 above. If we visualize the fit and the observed points, we see they are not evenly scattered around the plane. This could be caused by an interaction.

One way to deal with this is to include multiplicative variables in the model:

\[ \mathtt{sales} = \beta_0 + \beta_1\times\mathtt{tv}+ \beta_2\times\mathtt{radio}+ \beta_3\times(\mathtt{tv}\cdot\mathtt{radio})+\varepsilon \]

The interaction variable \(\mathtt{tv}\cdot\mathtt{radio}\) is high when both tv and radio are high.

R makes it easy to include interaction variables in the model through its formula syntax, for example with a term such as tv:radio.
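To see what the interaction variable does, it helps to regroup the terms of a model that includes it. Writing \(\beta_3\) for the coefficient of \(\mathtt{tv}\cdot\mathtt{radio}\) (a symbol assumed here for illustration):

\[ \begin{aligned} \mathtt{sales} &= \beta_0 + \beta_1\times\mathtt{tv} + \beta_2\times\mathtt{radio} + \beta_3\times(\mathtt{tv}\cdot\mathtt{radio}) + \varepsilon \\ &= \beta_0 + (\beta_1 + \beta_3\times\mathtt{radio})\times\mathtt{tv} + \beta_2\times\mathtt{radio} + \varepsilon \end{aligned} \]

The slope with respect to tv is now \(\beta_1 + \beta_3\times\mathtt{radio}\), so the effect of TV spending depends on the level of radio spending. With \(\beta_3 = 0\) the model reduces to the additive one.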

Non-linearities

Fig. 13 A nonlinear fit might be better here.

Example: Auto dataset.

A scatterplot between a predictor and the response may reveal a non-linear relationship.

Solution: include polynomial terms in the model.

Could use other functions besides polynomials…
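Including polynomial terms only means adding new columns to the data matrix; the model stays linear in its coefficients and is fitted in the same way. A minimal sketch, with a hypothetical helper name and made-up inputs:

```python
def polynomial_features(x, degree):
    """Expand one predictor into the columns x, x^2, ..., x^degree."""
    return [[xi ** d for d in range(1, degree + 1)] for xi in x]


# Each row can be passed to a linear fit as if it held `degree` separate predictors
print(polynomial_features([2.0, 3.0], degree=3))
# [[2.0, 4.0, 8.0], [3.0, 9.0, 27.0]]
```

Other transformations, such as logarithms or square roots, can be added as extra columns in the same manner.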

Fig. 14 Residuals for Auto data

In 2 or 3 dimensions, this is easy to visualize. What do we do when we have too many predictors?

Correlation of error terms

We assumed that the errors for each sample are independent: \(\varepsilon_i \sim N(0,\sigma^2)\quad \text{i.i.d.}\)

What if this breaks down?

The main effect is that this invalidates any assertions about Standard Errors, confidence intervals, and hypothesis tests…

Example: Suppose that by accident, we duplicate the data (we use each sample twice). Then, the standard errors would be artificially smaller by a factor of \(\sqrt{2}\).
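The factor of \(\sqrt{2}\) follows from the usual expression for the covariance of the estimates. As a sketch, write \(\tilde X\) for the data matrix with every row repeated twice (notation assumed here):

\[ \begin{aligned} \widehat{\text{Var}}(\hat\beta) &= \hat\sigma^2 (X^TX)^{-1}, \\ \tilde X^T\tilde X &= 2\,X^TX \quad\Longrightarrow\quad \hat\sigma^2(\tilde X^T\tilde X)^{-1} = \tfrac{1}{2}\,\hat\sigma^2 (X^TX)^{-1}. \end{aligned} \]

The fitted coefficients are unchanged and \(\hat\sigma\) stays roughly the same for large \(n\), so each standard error shrinks by about \(1/\sqrt{2}\) even though no new information was added.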

When could this happen in real life?

Time series: Each sample corresponds to a different point in time. The errors for samples that are close in time are correlated.

Spatial data: Each sample corresponds to a different location in space.

Grouped data: Imagine a study on predicting height from weight at birth. If some of the subjects in the study are in the same family, their shared environment could make them deviate from \(f(x)\) in similar ways.

Correlated errors

Simulations of time series with increasing correlations between \(\varepsilon_i\)

Non-constant variance of error (heteroskedasticity)

The variance of the error depends on some characteristics of the input features.

To diagnose this, we can plot residuals vs. fitted values.

If the trend in variance is relatively simple, we can transform the response using a logarithm, for example.

Outliers

Outliers from a model are points with very high errors.

While they may not affect the fit, they might affect our assessment of model quality.

Possible solutions:

If we believe an outlier is due to an error in data collection, we can remove it.

An outlier might be evidence of a missing predictor, or the need to specify a more complex model.

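High leverage points

Some samples with extreme inputs have an outsized effect on \(\hat \beta\).

This can be measured with the leverage statistic or self influence:

\[ h_{ii} = \frac{\partial \hat y_i}{\partial y_i} = \left(X(X^TX)^{-1}X^T\right)_{i,i} \in [1/n, 1]. \]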
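For a regression with a single predictor plus an intercept, the leverage statistic has a simple closed form, \(h_{ii} = 1/n + (x_i-\bar x)^2 / \sum_j (x_j-\bar x)^2\). The sketch below uses that special case; the function name and the input values are made up for illustration.

```python
def leverages(x):
    """Leverage of each observation in a simple (one-predictor) regression."""
    n = len(x)
    mean = sum(x) / n
    sxx = sum((xi - mean) ** 2 for xi in x)
    return [1 / n + (xi - mean) ** 2 / sxx for xi in x]


# The last input is far from the others, so it gets most of the leverage
h = leverages([1, 2, 3, 4, 20])
print([round(v, 3) for v in h])
```

The leverages always sum to the number of fitted coefficients (2 here), so a value close to 1 for one point means that the fitted line is forced to pass almost exactly through it.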

Studentized residuals

The residual \(\hat \epsilon_i = y_i - \hat y_i\) is an estimate for the noise \(\epsilon_i\).

The standard error of \(\hat \epsilon_i\) is \(\sigma \sqrt{1-h_{ii}}\).

A studentized residual is \(\hat \epsilon_i\) divided by its standard error (with an appropriate estimate of \(\sigma\)).

When the model is correct, it follows a Student-t distribution with \(n-p-2\) degrees of freedom.

Collinearity

Two predictors are collinear if one explains the other well.

Problem: The coefficients become unidentifiable.

Consider the extreme case of using two identical predictors limit:

\[ \begin{aligned} \mathtt{balance} &= \beta_0 + \beta_1\times\mathtt{limit} + \beta_2\times\mathtt{limit} + \epsilon \\ & = \beta_0 + (\beta_1+100)\times\mathtt{limit} + (\beta_2-100)\times\mathtt{limit} + \epsilon \end{aligned} \]

For every \((\beta_0,\beta_1,\beta_2)\) the fit at \((\beta_0,\beta_1,\beta_2)\) is just as good as at \((\beta_0,\beta_1+100,\beta_2-100)\) .

If 2 variables are collinear, we can easily diagnose this using their correlation.

A group of \(q\) variables is multilinear if these variables “contain less information” than \(q\) independent variables.

Pairwise correlations may not reveal multilinear variables.

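The Variance Inflation Factor (VIF) of a variable measures how predictable that variable is given the other variables, a proxy for how necessary the variable is (a high VIF suggests it adds little new information):

\[ \text{VIF}(\hat\beta_j) = \frac{1}{1-R^2_{X_j|X_{-j}}}. \]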

Above, \(R^2_{X_j|X_{-j}}\) is the \(R^2\) statistic for Multiple Linear regression of the predictor \(X_j\) onto the remaining predictors.
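Given the \(R^2\) from regressing one predictor on the others, the VIF is \(1/(1-R^2)\). A minimal sketch with a hypothetical function name and illustrative \(R^2\) values:

```python
def vif(r_squared):
    """Variance inflation factor from the R^2 of X_j regressed on the other predictors."""
    if not 0.0 <= r_squared < 1.0:
        raise ValueError("R^2 must lie in [0, 1); R^2 = 1 means perfect collinearity")
    return 1.0 / (1.0 - r_squared)


for r2 in (0.0, 0.5, 0.9, 0.99):
    print(r2, round(vif(r2), 2))
```

A predictor that is unrelated to the others has a VIF of 1, and the VIF grows without bound as \(R^2\) approaches 1, which is the identical-predictors case described above.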

Introduction to Research Methods

15 Multiple Regression

In the last chapter we met our new friend (frenemy?) regression, and did a few brief examples. And at this point, regression is actually more of a roommate. If you stay in the apartment (research methods) it’s gonna be there. The good thing is regression brings a bunch of cool stuff for the apartment that we need, like a microwave.

15.1 Concepts

Let’s begin this chapter with a bit of a mystery, and then use regression to figure out what’s going on.

What would you predict, just based on what you know and your experiences, the relationship between the number of computers at a school and their math test scores is? Do you think schools with more computers do worse or better?

Computers might be useful for teaching math, and are typically more available in wealthier schools. Thus, I would predict that the number of computers at a school would predict higher scores on math tests. We can use the data on California schools to test that idea.

Oh. Interesting. The relationship is insignificant, and perhaps most surprisingly, negative. Schools with more computers did worse on the test in the sample. For each additional computer there was at a school, scores on the math test decreased by .001 points, and that result is not significant.

So computers don’t make much of a difference. Are computers distracting the test takers? Diminishing their skills in math? My old math teachers were always worried about us using calculators too much. Maybe, but maybe it’s not the computers’ fault.

Let’s ask a different question then.

What do you think the relationship is between the number of computers at a school and the number of students? Larger schools might not have the same number of computers per student, but if you had to bet money would you think the school with 10,000 students or 1000 students would have more computers?

If you’re guessing that schools with more students have more computers, you’d be correct. The correlation coefficient for the number of students and computers is .93 (very strong), and we can see that below in the graph.

More students means more computers. In the regression we ran, though, all it knows is that schools with more computers do worse on math, but it can’t tell why. If larger schools have more computers AND do worse on tests, a bivariate regression can’t separate those effects on its own. We did bivariate regression in the last chapter, where we just look at two variables, one independent and one dependent (bivariate means two (bi) variables (variate)).

Multiple regression can help us try though. Multiple regression doesn’t mean running multiple regressions, it refers to including multiple variables in the same regression. Most of the tools we’ve learned so far only allow for two variables to be used, but with regression we can use many (many) more.

Let’s see what happens when we look at the relationship between the number of computers and math scores, controlling for the number of students at the school.

This second regression shows something different. In the earlier regression, the number of computers was negative and not significant. Now? Now it’s positive and significant. So what happened?

We controlled for the number of students that are at the school, at the same time that we’re testing the relationship between computers and math scores. Don’t worry if that’s not clear yet, we’re going to spend some time on it. When I say “holding the number of students constant” it means comparing schools with different numbers of computers but that have the same number of students. If we compare two schools with the same number of students, we can then better identify the impact of computers.

We can interpret the variables in the same way as earlier when just testing one variable to some degree. We can see that a larger number of computers is associated with higher test scores, and that larger schools generally do worse on the math test.

Specifically, a one unit increase in computers is associated with an increase of math scores of .02 points, and that change is highly significant.

But our interpretation needs to add something more. With multiple regression what we’re doing is looking at the effect of each variable, while holding the other variable constant.

Specifically, a one unit increase in computers is associated with an increase of math scores of .002 points when holding the number of students constant, and that change is highly significant.

When we look at the effect of computers in this regression, we’re setting aside the impact of student enrollment and just looking at computers. And when we look at the coefficient for students, we’re setting aside the impact of computers and isolating the effect of larger school enrollments on test scores.
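The sign flip described above can be reproduced on a tiny made-up data set. Everything in this sketch is an assumption for illustration: the numbers are synthetic (not the California schools data), scores are generated so that computers help (+0.05 per computer) and enrollment hurts (-0.02 per student), and the helper names are hypothetical. The two-predictor slopes use the standard closed form based on centered sums.

```python
def centered_sum(u, v):
    """Sum of (u_i - mean(u)) * (v_i - mean(v))."""
    mu, mv = sum(u) / len(u), sum(v) / len(v)
    return sum((a - mu) * (b - mv) for a, b in zip(u, v))


def bivariate_slope(x, y):
    """Slope from regressing y on x alone."""
    return centered_sum(x, y) / centered_sum(x, x)


def two_predictor_slopes(x1, x2, y):
    """Slopes for x1 and x2 when y is regressed on both at once."""
    s11, s22, s12 = centered_sum(x1, x1), centered_sum(x2, x2), centered_sum(x1, x2)
    s1y, s2y = centered_sum(x1, y), centered_sum(x2, y)
    det = s11 * s22 - s12 ** 2
    return (s22 * s1y - s12 * s2y) / det, (s11 * s2y - s12 * s1y) / det


# Synthetic schools: bigger schools have more computers
students = [100, 200, 300, 400, 500, 600]
computers = [15, 15, 35, 35, 55, 55]
math_score = [700 - 0.02 * s + 0.05 * c for s, c in zip(students, computers)]

print(round(bivariate_slope(computers, math_score), 4))
print([round(b, 4) for b in two_predictor_slopes(computers, students, math_score)])
```

On its own, the computers slope comes out negative (about -0.15) because computers stand in for school size. Once students is included, the computers slope returns to about +0.05 and the students slope to about -0.02, which is what holding the number of students constant means in practice.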

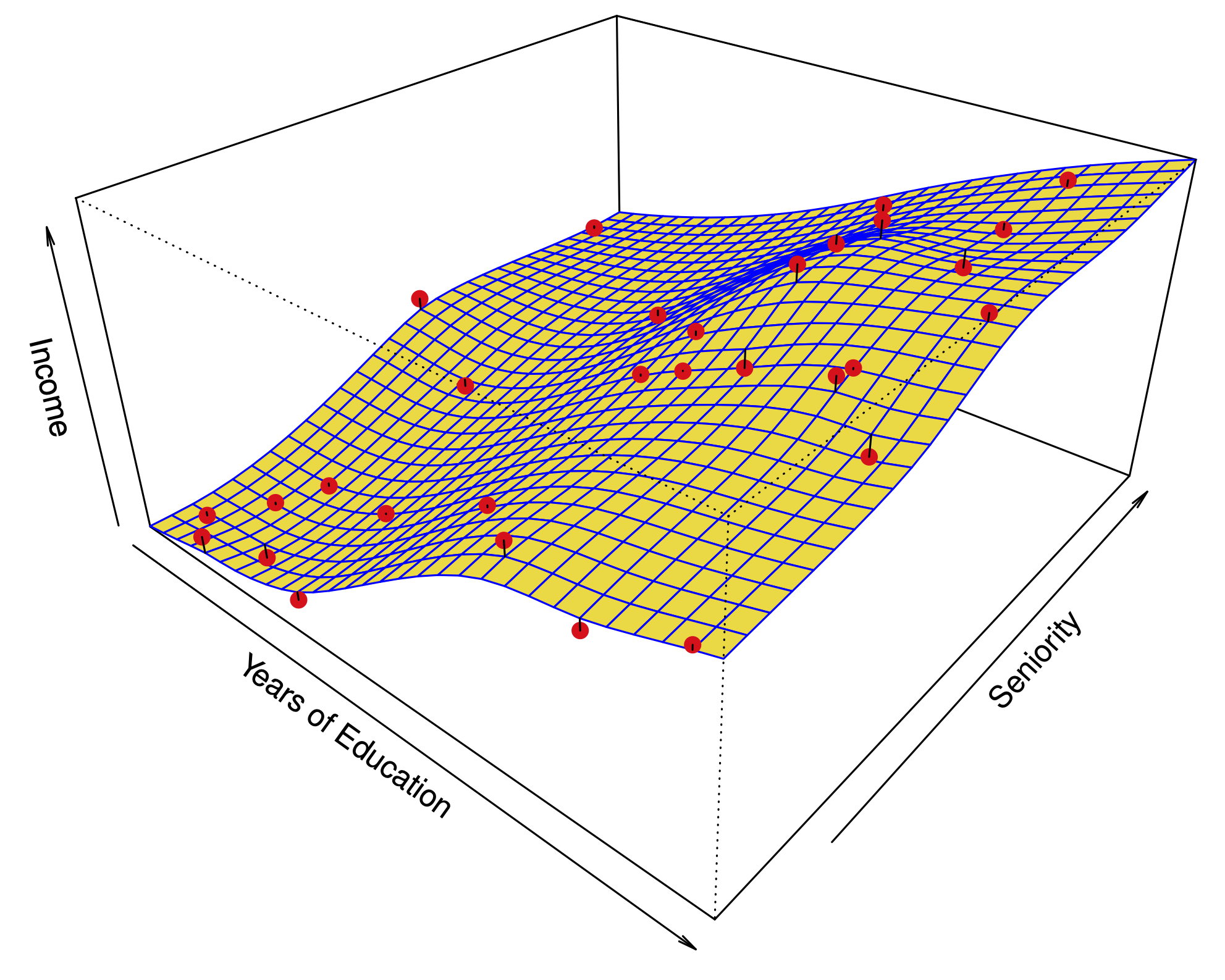

We looked at scatter plots and added a line to the graph to better understand the direction of relationships in the previous chapter. We can do that again, but it’s slightly different.

Here is the relationship of computers to math scores, and the relationship of computers to math scores holding students constant. That means we’re actually producing separate lines for both variables, but we’re doing that after accounting for the impact of computers on school enrollment, and school enrollment on computers.

We can also graph it in 3 dimensions, where we place the outcome on the z axis coming out of the paper/screen towards you.

But I’ll be honest, that doesn’t really clarify it for me. Multiple regression is still about drawing lines, but it’s more of a theoretical line. It’s really hard to actually effectively draw lines as we move beyond two variables or two dimensions. Hopefully that logic of drawing a line and the equation of a line still makes sense for you, because it’s the same formula we use in interpreting multiple regressions.

What we’re figuring out with multiple regression is what part of math scores is determined uniquely by the student enrollment at a school and what part of math scores is determined uniquely by the number of computers. Once R figures that out it gives us the slope of two lines, one for computers and one for students. The line for computers slopes upwards, because the more computers a school has the better its students do, when we hold constant the number of students at the school. When we hold constant the number of computers, larger schools do worse on the math test.

I don’t expect that to fully make sense yet. Understanding what it means to “hold something constant” is pretty complex and theoretical, but it’s also important to fully utilizing the powers of regression. What this example illustrates though is the dangers inherent in using regression results, and the difficulty of using them to prove causality.

Let’s go back to the bivariate regression we did, just including the number of computers at a school and math test scores. Did that prove that computers don’t impact scores? No, even though that would be the correct interpretation of the results. But let’s go back to what we need for causality…

- Co-variation

- Temporal Precedence

- Elimination of Extraneous Variables or Hypotheses

We failed to eliminate extraneous variables. We tested the impact of computers, but we didn’t do anything to test any other hypotheses of what impacts math scores. We didn’t test whether other factors that impact scores (number of teachers, wealth of parents, size of the school) had a mediating relationship on the number of computers. Until we test every other explanation for the relationship, we haven’t really proven anything about computers and test scores. That’s why we need to take caution in doing regression. Yes, you can now do regression, and you can hopefully correctly interpret them. But correctly interpreting a regression, and doing a regression that proves something is a little more complicated. We’ll keep working towards that though.

15.1.1 Predicting Wages

To this point the book has attempted to avoid touching on anything that is too controversial. Statistics is a math, so it’s a fairly apolitical field, but it can be used to support political or controversial matters. We’re going to wade into one in this chapter, to try and show the way that statistics can let us get at some of the thorny issues our world deals with. In addition, this example should help to clarify what it means to “hold something constant”.

We’ll work with the same income data we used in the last chapter from the Panel Study of Income Dynamics from 1982. Just to remind you, these are the variables we have available.

- experience - Years of full-time work experience.

- weeks - Weeks worked.

- occupation - factor. Is the individual a white-collar (“white”) or blue-collar (“blue”) worker?

- industry - factor. Does the individual work in a manufacturing industry?

- south - factor. Does the individual reside in the South?

- smsa - factor. Does the individual reside in a SMSA (standard metropolitan statistical area)?

- married - factor. Is the individual married?

- gender - factor indicating gender.

- union - factor. Is the individual’s wage set by a union contract?

- education - Years of education.

- ethnicity - factor indicating ethnicity. Is the individual African American (“afam”) or not (“other”)?

- wage - Wage.

Let’s say we wanted to understand wage discrimination on the basis of race or ethnicity. Do African Americans earn less than others in the workplace? Let’s see what this data tells us.

And a note before we begin. The variable ethnicity has two categories, “afam” which indicates African American or “other” which means anything but African American. Obviously, that captures a lot of groups today, but in the 1980s it can generally be understood to mean white people. I’ll generally just refer to it as other races in the text though.

The average wage for African Americans in the data is 808.5, and for others the average wage is 1174. That means that African Americans earn (in this really specific data set) 69% of what others earn, or 365.5 less.

Let’s say we take that fact to someone that doesn’t believe that African Americans are discriminated against. We’ll call them your “contrarian friend”; you can fill in other ideas of what you’d think about that person. What will their response be? Probably that it isn’t evidence of discrimination, because of course African Americans earn less, they’re less likely to work in white collar jobs. And people that work in white collar jobs earn more, so that’s the reason African Americans earn less. It’s not discrimination, it’s just that they work different jobs.

And on the surface, they’d be right. African Americans are more likely to work in blue collar jobs (65% to 50%), and blue collar jobs earn less (956 for blue collar jobs to 1350 for white collar jobs).

So what we’d want to do then is compare African Americans to others that both work blue collar jobs, and African Americans to others working white collar jobs. If there is a difference in wages between two people working the same job, that’s better evidence that the pay gap is a result not of their occupational choices but their race.

We can visualize that with a two by two chart.

Let’s work across that chart to see what it tells us. A 2 by 2 chart like that is called a cross tab because it lets us tabulate figures across different characteristics of our data. They can be a methodologically simple way (we’re just showing means/averages there) to tell a story if the data is clear.

So what do we learn? Looking at the top row, white collar workers that are labeled other for ethnicity earn on average $1373. And white collar workers that are African American earn $918. Which means that for white collar workers, African Americans earn $455 less. For blue collar workers, other races earn $977, while African Americans earn $749. That’s a gap of $228. So the size of the gap is different depending on what a person’s job is, but African Americans earn less regardless of their job. So it isn’t just that African Americans are less likely to work white collar jobs that drives their lower wages. Even those in white collar jobs earn less. In fact, African Americans in white collar jobs earn less on average than other races working blue collar jobs!

This is what it means to hold something constant. In that table above we’re holding occupation constant, and comparing people based on their race to people of another race that work the same job. So differences in those jobs aren’t influencing our results now, we’ve set that effect aside for the moment.
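The comparison within each occupation can be written out as a short calculation. The sketch below simply re-uses the four group means quoted in the text; the dictionary layout and variable names are assumptions for illustration.

```python
# Mean wages by (occupation, ethnicity), as reported in the cross tab discussion
mean_wage = {
    ("white", "other"): 1373,
    ("white", "afam"): 918,
    ("blue", "other"): 977,
    ("blue", "afam"): 749,
}

# Holding occupation constant: compare ethnicities within the same occupation
gaps = {
    occupation: mean_wage[(occupation, "other")] - mean_wage[(occupation, "afam")]
    for occupation in ("white", "blue")
}
print(gaps)  # {'white': 455, 'blue': 228}
```

Each gap compares people in the same kind of job, so differences in occupation cannot explain it. Regression does the same thing, but summarizes the within-group gaps in a single coefficient.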

And we can do that automatically with regression, like we did when we looked at the effect of computers on math scores, while holding the impact of school enrollment constant.

Based on those regression results, African Americans earn $309 less than other races when holding occupation constant, and that effect is highly significant. And blue collar workers earn $380 less than white collar workers when holding race constant, and that effect is significant too.

So have we proven discrimination in wages? Probably not yet for the contrarian friend. Without pause they’ll likely say that education is also important for wages, and African Americans are less likely to go to college. And in the data they’d be correct. On average African Americans completed 11.65 years of education, and other races completed 12.94.

So let’s add that to our regression too.

Now with the ethnicity variable we’re comparing people of different ethnicities that have the same occupation and education. And what do we find? Even holding both of those constant, we would expect an African American worker to earn $262 less, and that is highly significant.

What your contrarian friend is doing is proposing alternative variables and hypotheses that explain the gap in earnings for African Americans. And while those other things do make a difference, they don’t fully explain why African Americans earn less than others. We have shrunk the gap somewhat. Originally the gap was $465, which fell to $309 when we held occupation constant and now to $262 with the inclusion of education. So those alternative explanations do explain a portion of why African Americans earned less: part of the gap was because they had lower-status jobs and less education (setting aside the fact that their lower-status jobs and less education may themselves be the result of discrimination).

So what else do we want to include to try and explain that difference in wages? We can insert all of the variables in the data set to see if there is still a gap in wages between African Americans and others.

Controlling for occupation, education, experience, weeks worked, the industry, the region of employment, whether they are married, their gender, and their union status, does ethnicity make a difference in earnings? Yes, if you found two workers that had the same values for all of those variables except that they were of different races, the African American would still likely earn less.

In our regression African Americans earn $167 less when holding occupation, education, experience, weeks worked, the industry, region, marriage, gender, and their union status constant, and that effect is still statistically significant.
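To put those shrinking gaps on one scale, here is a small sketch in Python that works out how much of the original $465 gap goes away under each set of controls. The numbers are the ones reported above; the labels and the function name `share_accounted_for` are just mine for illustration.

```python
raw_gap = 465  # gap with no controls, as reported in the text

# Remaining gap after each set of controls, taken from the regressions above.
adjusted_gaps = {
    "occupation": 309,
    "occupation + education": 262,
    "all available controls": 167,
}


def share_accounted_for(raw, adjusted):
    """Fraction of the raw gap that disappears once the controls are added."""
    return (raw - adjusted) / raw


shares = {label: share_accounted_for(raw_gap, gap) for label, gap in adjusted_gaps.items()}
for label, share in shares.items():
    print(f"{label}: {share:.1%} of the raw gap accounted for")
```

Even with every available control, a little over a third of the original gap remains, which is the part the alternative explanations in the data cannot account for.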

The contrarian friend may still have another alternative hypothesis to attempt to explain away that result, but unfortunately that’s all the data will let us test.

What we’re attempting to do is minimize what is called missing variable bias (more commonly known as omitted variable bias). If there is a plausible story that explains our result, whether one is predicting math test scores or wages or whatever else, and we fail to account for that explanation, our model may be misleading. It was misleading to say that computers don’t increase math test scores when we didn’t control for the effect of larger school sizes.
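To see how leaving out a variable can mislead us, here is a minimal sketch in Python on simulated data. Nothing in it comes from the wage or school data sets: the variables `x`, `y` and `z`, the true coefficients (1.0 and 3.0), and the helper `ols` are all made up for illustration, and the helper is a bare-bones least-squares solver rather than a replacement for real statistical software.

```python
import random


def ols(columns, y):
    """Least-squares coefficients [intercept, b1, b2, ...] via the normal equations."""
    n = len(y)
    X = [[1.0] + [col[i] for col in columns] for i in range(n)]
    k = len(X[0])
    # Build X'X and X'y.
    A = [[sum(X[r][i] * X[r][j] for r in range(n)) for j in range(k)] for i in range(k)]
    b = [sum(X[r][i] * y[r] for r in range(n)) for i in range(k)]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        b[col], b[pivot] = b[pivot], b[col]
        for r in range(k):
            if r != col:
                factor = A[r][col] / A[col][col]
                A[r] = [a - factor * p for a, p in zip(A[r], A[col])]
                b[r] -= factor * b[col]
    return [b[i] / A[i][i] for i in range(k)]


random.seed(0)  # fixed seed so the simulation is repeatable
n = 2000
z = [random.gauss(0, 1) for _ in range(n)]        # the "missing" variable
x = [0.8 * zi + random.gauss(0, 1) for zi in z]   # predictor correlated with z
y = [1.0 * xi + 3.0 * zi + random.gauss(0, 1) for xi, zi in zip(x, z)]

short_model = ols([x], y)     # leaves z out
long_model = ols([x, z], y)   # holds z constant

print(f"slope on x without z: {short_model[1]:.2f}")
print(f"slope on x with z:    {long_model[1]:.2f}")
```

In this setup the true effect of `x` is 1.0, but the model that leaves out `z` credits `x` with part of the effect of `z`, so its slope comes out far too large. Adding `z` brings the estimate back near the truth, which is the same logic as adding occupation or education to the wage regression.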

What missing variables do we not have that may explain the difference in earnings between African Americans and others? We don’t know who is a manager at work or anything about job performance, and both of those should help explain why people earn more. So we haven’t removed our missing variable bias, and the evidence we can provide is limited by that. But based on the evidence we can generate, we find evidence of racial discrimination in wages.

And I should again emphasize, even if something else did explain the gap in earnings between African Americans and others, it wouldn’t prove there wasn’t discrimination in society. If differences in occupation did explain the racial gap in wages, that wouldn’t prove that discrimination didn’t push African Americans towards lower paying jobs.

But the work we’ve done above is similar to what a law firm would do if bringing a lawsuit against a large employer for wage discrimination. It’s hard to prove discrimination in individual cases. The employer will always just argue that John is a bad employee, and that’s why they earn less than their coworkers. Wage discrimination suits are typically brought as class action suits, where a large group of employees sues based on evidence that even when accounting for differences in specific job, and job performance, and experience, and other things there is still a gap in wages.

I should add a note about interpretation here. It’s the researcher who has to identify what the different coefficients mean in the real world. We can talk about discrimination because of differences in earnings for African Americans and others, but we wouldn’t say that blue collar workers are discriminated against because they earn less than white collar workers. It’s unlikely that someone would say that people with more experience earning more is the result of discrimination. These are interpretations that we layer on to the analysis based on our expectations and understanding of the research question.

15.1.2 Predicting Affairs

Regression can be used to make predictions and learn more about the world in all sorts of contexts. Let’s work through another example, with a little more focus on the interpretation.

We’ll use a data set called Affairs, which unsurprisingly has data about affairs. Or more specifically, about people, and whether or not they have had an affair.

In the data set there are 10 variables.

- affairsany - coded as 0 for those who haven’t had an affair and 1 for those who have had any number of affairs. This will be the dependent variable.

- gender - either male or female

- age - respondent’s age

- yearsmarried - number of years of current marriage

- children - are there children from the marriage

- religiousness - scaled from 1-5, with 1 being anti religion and 5 being very religious

- education - years of education

- occupation - 1-7 based on a specific system of rating for occupations

- rating - scaled from 1-5, based on how happy the respondent reported their marriage to be

So we can throw all of those variables into a regression and see which ones have the largest impact on the likelihood someone had an affair. But before that we should pause to make predictions. We shouldn’t include a variable just for laughs - we should have a reason for including it. We should be able to make a prediction for whether it should increase or decrease the dependent variable.

So what effect do you think these independent variables will have on the chances of someone having had an affair?

- gender - I would guess that men’s (on average) higher libidos and lower levels of concern about childbearing will lead to more affairs.

- age - Young people are typically a little less ready for long term commitments, and a bit more irrational and willing to take chances, so age should decrease affairs. Although being older does give you more time to have had an affair.

- yearsmarried - Longer marriages should be less likely to contain an affair. If someone was going to have an affair, I would expect it to happen earlier, and such things often end marriages.

- children - Children, and avoiding hurting them, are hopefully a good reason for people to avoid having affairs.

- religiousness - most religions teach that affairs are wrong, so I would guess people that are more religious are less likely to have affairs

- education and occupation - I actually can’t make a prediction for what effect education or occupation have on affairs, and since I don’t think they’ll impact the dependent variable I wouldn’t include them in the analysis if I was doing this for myself. But I’ll keep them here as an example to talk about later.

- rating - happier marriages will likely produce fewer affairs, in large part because it’s often unhappiness that makes couples stray.

Those arguments may be wrong or right. And they certainly won’t be right in every case in the data - there will be counterexamples. What I’ve tried to do is lay out predictions, or hypotheses, for what I expect the model to show us. Let’s test them all and see what predicts whether someone had an affair.

What do you see as the strongest predictors of whether someone had an affair? Let’s start by identifying what was highly statistically significant. Religiousness and rating both had p-values below .001, so we can be very confident that in the population people who are more religious and who report having happier marriages are both less likely to have affairs. Let’s interpret that more formally.

For each one unit increase in religiousness an individual’s chances of having an affair decrease by .05 holding their gender, age, years married, children, education, occupation and rating constant, and that change is significant.

That’s a long list of things we’re holding constant! When you get past 2 or 3 control variables, or when you’re describing different variables from the same model you can use “holding all else constant” in place of the list.

For each one unit increase in the happiness rating of a marriage an individual’s chances of having an affair decrease by .09, holding all else constant, and that change is significant.
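Because the dependent variable is coded 0 or 1, those coefficients can be read as changes in the predicted probability of an affair, assuming the model is an ordinary linear regression on that 0/1 outcome. Here is a small sketch in Python of what that implies across the full 1-5 range of each scale. The two coefficients are the ones quoted above; the function name `predicted_change` is just mine for illustration.

```python
# Coefficients reported in the text: change in the predicted probability of an
# affair per one-unit increase in the predictor, holding all else constant.
coef = {"religiousness": -0.05, "rating": -0.09}


def predicted_change(variable, start, end):
    """Change in predicted probability when `variable` moves from start to end."""
    return coef[variable] * (end - start)


# Both scales run from 1 to 5, so the largest possible move is 4 units.
for name in coef:
    change = predicted_change(name, 1, 5)
    print(f"{name}: moving from 1 to 5 changes the predicted probability by {change:+.2f}")
```

Seen this way, the marriage rating matters more across its range than religiousness does, even though both are measured on a five point scale.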

What else that we included in the model is useful for predicting whether someone had an affair?

Age and years married both reach statistical significance. As individuals get older, their chances of having an affair decrease, as I predicted.

However, as their marriages get longer the chances of having had an affair increase, not decrease as I thought. Interesting! Does that mean I should go back and change my prediction? No. What it likely means is that some of my assumptions were wrong, so I should update them and discuss why I was wrong (in the conclusion if this was a paper). If we only used regression to find things that we already know, we wouldn’t learn anything new. It’s still good that I made a prediction though, because that highlights that the result is a little weird (to my eyes) or may be more surprising to the readers. Imagine if you found that a new jobs program actually lowered participants’ incomes. That would be a really important outcome of your research and just as valuable as if you’d found that incomes increase.

A surprising finding could also be evidence that there’s something wrong in the data. Did we enter years of marriage correctly, or did we possibly reverse it so that longer marriages are actually coded as lower numbers? That’d be odd in this case, but it’s always worth thinking that possibility through. If I got data that showed college graduates earned less than those without a high school degree I’d be very skeptical of the data, because that would go against everything we know. It might just be an odd, fluky one-time finding, or it could be evidence something is wrong in the data.

Okay, what about everything else? All the other variables are insignificant. Should we remove them from the analysis, since they don’t have a significant effect on our dependent variable? It depends. Insignificant variables can be worth including in most cases in order to show that they don’t have an effect on the outcome. It’s worth knowing that gender and children don’t have an effect on affairs in the population. We had a reason to think they would, and it turns out they don’t really have much of an influence on whether someone has sex outside their marriage. That’s good to know.

I didn’t have a prediction for education or occupation though, and the fact they are insignificant means they aren’t really worth including. I’m not testing any interesting ideas about what affects affairs with those variables, they’re just being included because they’re in the data. That’s not a good reason for them to be there, we want to be testing something with each variable we include.

15.2 Practice

In truth, we haven’t done a lot of new work on code in this chapter. We’ve focused more on the big idea of what it means to go from bivariate regression to multivariate regression. So we won’t do a lot of practice, because the basic structure we learned in the last chapter drives most of what we’ll do.

We’ll read in some new data on Massachusetts schools and their test scores. It’s similar to the California Schools data, but from Massachusetts for variety.

We’ll focus on 4 of those variables, and try to figure out what predicts how schools do on tests in 8th grade (score8).

- score8 - test scores for 8th graders

- exptot - total spending for the school

- english - percentage of students that don’t speak English as their native language

- income - income of parents

Let’s start by practicing writing a regression to look at the impact of spending (exptot) on test scores.

That should look very similar to the last chapter. And we can interpret it the same way.

For each one unit increase in spending, we observe a .004 increase in test scores for 8th graders, and that change is significant.

Let’s add one more variable to the regression, and now include english along with exptot. To include an additional variable we just place a + sign between the two variables, as shown below.

Each one unit increase in spending is associated with a .007 increase in test scores for 8th graders, holding the percentage of non-native English speakers constant, and that change is significant.

Each one unit increase in the percentage of students that don’t speak English as natives is associated with a 4.1 decrease in test scores for 8th graders, holding spending constant, and that change is significant.

And one more, let’s add one more variable: income.

Interesting, spending actually lost its significance in that final regression and changed direction.

Each one unit increase in spending is associated with a .002 decrease in test scores for 8th graders when holding the percentage of non-native English speakers and parental income constant, but that change is insignificant.

Each one unit increase in the percentage of students that don’t speak English as natives is associated with a 2.2 decrease in test scores for 8th graders when holding spending and parental income constant, and that change is significant.

Each one unit increase in parental income is associated with a 2.8 increase in test scores for 8th graders when holding spending and the percentage of non-native English speakers constant, and that change is significant.


Multiple Regression Analysis using SPSS Statistics

Introduction.

Multiple regression is an extension of simple linear regression. It is used when we want to predict the value of a variable based on the value of two or more other variables. The variable we want to predict is called the dependent variable (or sometimes, the outcome, target or criterion variable). The variables we are using to predict the value of the dependent variable are called the independent variables (or sometimes, the predictor, explanatory or regressor variables).

For example, you could use multiple regression to understand whether exam performance can be predicted based on revision time, test anxiety, lecture attendance and gender. Alternately, you could use multiple regression to understand whether daily cigarette consumption can be predicted based on smoking duration, age when started smoking, smoker type, income and gender.

Multiple regression also allows you to determine the overall fit (variance explained) of the model and the relative contribution of each of the predictors to the total variance explained. For example, you might want to know how much of the variation in exam performance can be explained by revision time, test anxiety, lecture attendance and gender "as a whole", but also the "relative contribution" of each independent variable in explaining the variance.

This "quick start" guide shows you how to carry out multiple regression using SPSS Statistics, as well as interpret and report the results from this test. However, before we introduce you to this procedure, you need to understand the different assumptions that your data must meet in order for multiple regression to give you a valid result. We discuss these assumptions next.


Assumptions.

When you choose to analyse your data using multiple regression, part of the process involves checking to make sure that the data you want to analyse can actually be analysed using multiple regression. You need to do this because it is only appropriate to use multiple regression if your data "passes" eight assumptions that are required for multiple regression to give you a valid result. In practice, checking for these eight assumptions just adds a little bit more time to your analysis, requiring you to click a few more buttons in SPSS Statistics when performing your analysis, as well as think a little bit more about your data, but it is not a difficult task.

Before we introduce you to these eight assumptions, do not be surprised if, when analysing your own data using SPSS Statistics, one or more of these assumptions is violated (i.e., not met). This is not uncommon when working with real-world data rather than textbook examples, which often only show you how to carry out multiple regression when everything goes well! However, don’t worry. Even when your data fails certain assumptions, there is often a solution to overcome this. First, let's take a look at these eight assumptions:

- Assumption #1: Your dependent variable should be measured on a continuous scale (i.e., it is either an interval or ratio variable). Examples of variables that meet this criterion include revision time (measured in hours), intelligence (measured using IQ score), exam performance (measured from 0 to 100), weight (measured in kg), and so forth. You can learn more about interval and ratio variables in our article: Types of Variable . If your dependent variable was measured on an ordinal scale, you will need to carry out ordinal regression rather than multiple regression. Examples of ordinal variables include Likert items (e.g., a 7-point scale from "strongly agree" through to "strongly disagree"), amongst other ways of ranking categories (e.g., a 3-point scale explaining how much a customer liked a product, ranging from "Not very much" to "Yes, a lot").

- Assumption #2: You have two or more independent variables, which can be either continuous (i.e., an interval or ratio variable) or categorical (i.e., an ordinal or nominal variable). For examples of continuous and ordinal variables, see the bullet above. Examples of nominal variables include gender (e.g., 2 groups: male and female), ethnicity (e.g., 3 groups: Caucasian, African American and Hispanic), physical activity level (e.g., 4 groups: sedentary, low, moderate and high), profession (e.g., 5 groups: surgeon, doctor, nurse, dentist, therapist), and so forth. Again, you can learn more about variables in our article: Types of Variable. If one of your independent variables is dichotomous and considered a moderating variable, you might need to run a Dichotomous moderator analysis.

- Assumption #3: You should have independence of observations (i.e., independence of residuals), which you can easily check using the Durbin-Watson statistic, which is a simple test to run using SPSS Statistics. We explain how to interpret the result of the Durbin-Watson statistic, as well as showing you the SPSS Statistics procedure required, in our enhanced multiple regression guide.

- Assumption #4: There needs to be a linear relationship between (a) the dependent variable and each of your independent variables, and (b) the dependent variable and the independent variables collectively. Whilst there are a number of ways to check for these linear relationships, we suggest creating scatterplots and partial regression plots using SPSS Statistics, and then visually inspecting these scatterplots and partial regression plots to check for linearity. If the relationships displayed in your scatterplots and partial regression plots are not linear, you will have to either run a non-linear regression analysis or "transform" your data, which you can do using SPSS Statistics. In our enhanced multiple regression guide, we show you how to: (a) create scatterplots and partial regression plots to check for linearity when carrying out multiple regression using SPSS Statistics; (b) interpret different scatterplot and partial regression plot results; and (c) transform your data using SPSS Statistics if you do not have linear relationships between your variables.

- Assumption #5: Your data needs to show homoscedasticity, which is where the variances along the line of best fit remain similar as you move along the line. We explain more about what this means and how to assess the homoscedasticity of your data in our enhanced multiple regression guide. When you analyse your own data, you will need to plot the studentized residuals against the unstandardized predicted values. In our enhanced multiple regression guide, we explain: (a) how to test for homoscedasticity using SPSS Statistics; (b) some of the things you will need to consider when interpreting your data; and (c) possible ways to continue with your analysis if your data fails to meet this assumption.

- Assumption #6: Your data must not show multicollinearity, which occurs when you have two or more independent variables that are highly correlated with each other. This leads to problems with understanding which independent variable contributes to the variance explained in the dependent variable, as well as technical issues in calculating a multiple regression model. Therefore, in our enhanced multiple regression guide, we show you: (a) how to use SPSS Statistics to detect multicollinearity through an inspection of correlation coefficients and Tolerance/VIF values; and (b) how to interpret these correlation coefficients and Tolerance/VIF values so that you can determine whether your data meets or violates this assumption.

- Assumption #7: There should be no significant outliers , high leverage points or highly influential points . Outliers, leverage and influential points are different terms used to represent observations in your data set that are in some way unusual when you wish to perform a multiple regression analysis. These different classifications of unusual points reflect the different impact they have on the regression line. An observation can be classified as more than one type of unusual point. However, all these points can have a very negative effect on the regression equation that is used to predict the value of the dependent variable based on the independent variables. This can change the output that SPSS Statistics produces and reduce the predictive accuracy of your results as well as the statistical significance. Fortunately, when using SPSS Statistics to run multiple regression on your data, you can detect possible outliers, high leverage points and highly influential points. In our enhanced multiple regression guide, we: (a) show you how to detect outliers using "casewise diagnostics" and "studentized deleted residuals", which you can do using SPSS Statistics, and discuss some of the options you have in order to deal with outliers; (b) check for leverage points using SPSS Statistics and discuss what you should do if you have any; and (c) check for influential points in SPSS Statistics using a measure of influence known as Cook's Distance, before presenting some practical approaches in SPSS Statistics to deal with any influential points you might have.

- Assumption #8: Finally, you need to check that the residuals (errors) are approximately normally distributed (we explain these terms in our enhanced multiple regression guide). Two common methods to check this assumption include using: (a) a histogram (with a superimposed normal curve) and a Normal P-P Plot; or (b) a Normal Q-Q Plot of the studentized residuals. Again, in our enhanced multiple regression guide, we: (a) show you how to check this assumption using SPSS Statistics, whether you use a histogram (with superimposed normal curve) and Normal P-P Plot, or Normal Q-Q Plot; (b) explain how to interpret these diagrams; and (c) provide a possible solution if your data fails to meet this assumption.
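To make Assumption #3 more concrete, the Durbin-Watson statistic mentioned above is the sum of squared differences between successive residuals divided by the sum of squared residuals. It ranges from 0 to 4, and values near 2 indicate no first-order autocorrelation. The following is a minimal sketch in Python rather than the SPSS Statistics procedure; the function name and both residual series are hypothetical and invented purely for illustration.

```python
def durbin_watson(residuals):
    """Durbin-Watson statistic: squared successive differences over squared residuals."""
    numerator = sum(
        (residuals[t] - residuals[t - 1]) ** 2 for t in range(1, len(residuals))
    )
    denominator = sum(e ** 2 for e in residuals)
    return numerator / denominator


# Hypothetical residual series, invented for illustration only.
alternating = [1, -1, 1, -1, 1, -1]   # each residual flips sign: negative autocorrelation
drifting = [3, 2, 1, -1, -2, -3]      # neighbours look alike: positive autocorrelation

print(f"alternating residuals: DW = {durbin_watson(alternating):.2f}")
print(f"drifting residuals:    DW = {durbin_watson(drifting):.2f}")
```

The alternating series gives a value well above 2 and the drifting series a value well below 2, which is the pattern you would look for when reading this statistic in your own output.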

You can check assumptions #3, #4, #5, #6, #7 and #8 using SPSS Statistics. Assumptions #1 and #2 should be checked first, before moving onto assumptions #3, #4, #5, #6, #7 and #8. Just remember that if you do not run the statistical tests on these assumptions correctly, the results you get when running multiple regression might not be valid. This is why we dedicate a number of sections of our enhanced multiple regression guide to help you get this right. You can find out about our enhanced content as a whole on our Features: Overview page, or more specifically, learn how we help with testing assumptions on our Features: Assumptions page.
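As an illustration of the Tolerance/VIF values mentioned under Assumption #6, Tolerance for a predictor is 1 minus the R² obtained when that predictor is regressed on the other predictors, and VIF is 1 divided by Tolerance. With exactly two predictors, that R² is simply the squared correlation between them, which keeps the sketch below short. This is a minimal Python sketch under that two-predictor assumption, not the SPSS Statistics procedure; the function names and all data values are hypothetical.

```python
from math import sqrt


def pearson_r(a, b):
    """Pearson correlation coefficient between two equal-length lists."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / sqrt(var_a * var_b)


def tolerance_and_vif(a, b):
    """Tolerance and VIF when the model has exactly two predictors, a and b."""
    tolerance = 1 - pearson_r(a, b) ** 2
    return tolerance, 1 / tolerance


# Hypothetical predictor values, invented for illustration only.
age = [25, 32, 41, 47, 53, 60]
weight_kg = [82, 64, 90, 71, 77, 68]
# Nearly the same information as weight_kg, re-expressed in pounds with small noise.
noise = [0.5, -0.5, 0.3, -0.3, 0.2, -0.2]
weight_lb = [w * 2.2 + d for w, d in zip(weight_kg, noise)]

tol_low, vif_low = tolerance_and_vif(age, weight_kg)
tol_high, vif_high = tolerance_and_vif(weight_kg, weight_lb)
print(f"age vs weight_kg:       Tolerance = {tol_low:.3f}, VIF = {vif_low:.2f}")
print(f"weight_kg vs weight_lb: Tolerance = {tol_high:.5f}, VIF = {vif_high:.0f}")
```

Entering two near-duplicate predictors drives Tolerance towards 0 and VIF to a very large value, whereas weakly related predictors give a VIF close to 1. With more than two predictors, each R² would need its own regression.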

In the section, Procedure, we illustrate the SPSS Statistics procedure to perform a multiple regression assuming that no assumptions have been violated. First, we introduce the example that is used in this guide.

A health researcher wants to be able to predict "VO2max", an indicator of fitness and health. Normally, to perform this procedure requires expensive laboratory equipment and necessitates that an individual exercise to their maximum (i.e., until they can no longer continue exercising due to physical exhaustion). This can put off those individuals who are not very active/fit and those individuals who might be at higher risk of ill health (e.g., older unfit subjects). For these reasons, it has been desirable to find a way of predicting an individual's VO2max based on attributes that can be measured more easily and cheaply. To this end, a researcher recruited 100 participants to perform a maximum VO2max test, but also recorded their "age", "weight", "heart rate" and "gender". Heart rate is the average of the last 5 minutes of a 20 minute, much easier, lower workload cycling test. The researcher's goal is to be able to predict VO2max based on these four attributes: age, weight, heart rate and gender.

Setup in SPSS Statistics

In SPSS Statistics, we created six variables: (1) VO2max, which is the maximal aerobic capacity; (2) age, which is the participant's age; (3) weight, which is the participant's weight (technically, it is their 'mass'); (4) heart_rate, which is the participant's heart rate; (5) gender, which is the participant's gender; and (6) caseno, which is the case number. The caseno variable is used to make it easy for you to eliminate cases (e.g., "significant outliers", "high leverage points" and "highly influential points") that you have identified when checking for assumptions. In our enhanced multiple regression guide, we show you how to correctly enter data in SPSS Statistics to run a multiple regression when you are also checking for assumptions. You can learn about our enhanced data setup content on our Features: Data Setup page. Alternately, see our generic, "quick start" guide: Entering Data in SPSS Statistics.

Test Procedure in SPSS Statistics

The seven steps below show you how to analyse your data using multiple regression in SPSS Statistics when none of the eight assumptions in the previous section, Assumptions, have been violated. At the end of these seven steps, we show you how to interpret the results from your multiple regression. If you are looking for help to make sure your data meets assumptions #3, #4, #5, #6, #7 and #8, which are required when using multiple regression and can be tested using SPSS Statistics, you can learn more in our enhanced guide (see our Features: Overview page to learn more).

Note: The procedure that follows is identical for SPSS Statistics versions 18 to 28, as well as the subscription version of SPSS Statistics, with version 28 and the subscription version being the latest versions of SPSS Statistics. However, in version 27 and the subscription version, SPSS Statistics introduced a new look to their interface called "SPSS Light", replacing the previous look for versions 26 and earlier versions, which was called "SPSS Standard". Therefore, if you have SPSS Statistics versions 27 or 28 (or the subscription version of SPSS Statistics), the images that follow will be light grey rather than blue. However, the procedure is identical.

Published with written permission from SPSS Statistics, IBM Corporation.

Note: Don't worry that you're selecting Analyze > Regression > Linear... on the main menu or that the dialogue boxes in the steps that follow have the title, Linear Regression. You have not made a mistake. You are in the correct place to carry out the multiple regression procedure. This is just the title that SPSS Statistics gives, even when running a multiple regression procedure.

Interpreting and Reporting the Output of Multiple Regression Analysis

SPSS Statistics will generate quite a few tables of output for a multiple regression analysis. In this section, we show you only the three main tables required to understand your results from the multiple regression procedure, assuming that no assumptions have been violated. A complete explanation of the output you have to interpret when checking your data for the eight assumptions required to carry out multiple regression is provided in our enhanced guide. This includes relevant scatterplots and partial regression plots, histogram (with superimposed normal curve), Normal P-P Plot and Normal Q-Q Plot, correlation coefficients and Tolerance/VIF values, casewise diagnostics and studentized deleted residuals.

However, in this "quick start" guide, we focus only on the three main tables you need to understand your multiple regression results, assuming that your data has already met the eight assumptions required for multiple regression to give you a valid result:

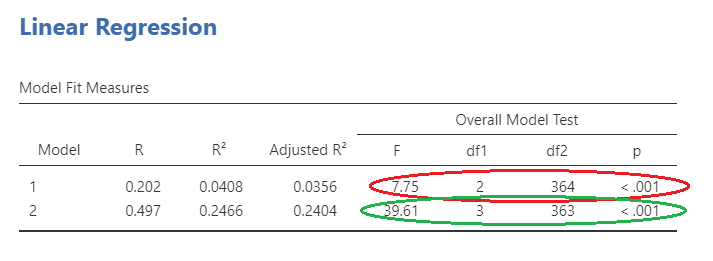

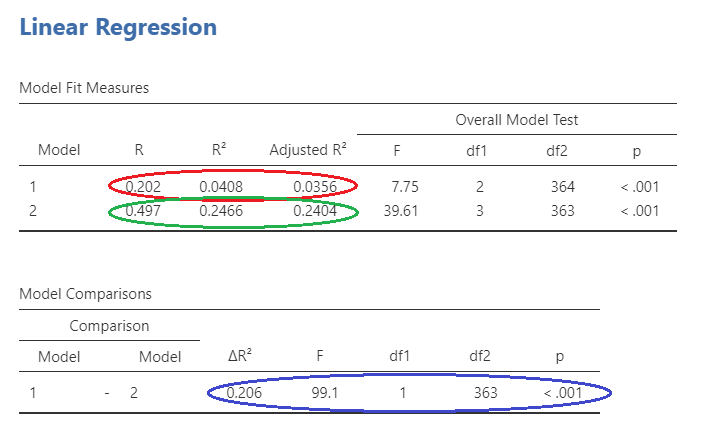

Determining how well the model fits

The first table of interest is the Model Summary table. This table provides the R, R², adjusted R², and the standard error of the estimate, which can be used to determine how well a regression model fits the data:

The "R" column represents the value of R, the multiple correlation coefficient. R can be considered to be one measure of the quality of the prediction of the dependent variable; in this case, VO2max. A value of 0.760, in this example, indicates a good level of prediction. The "R Square" column represents the R² value (also called the coefficient of determination), which is the proportion of variance in the dependent variable that can be explained by the independent variables (technically, it is the proportion of variation accounted for by the regression model above and beyond the mean model). You can see from our value of 0.577 that our independent variables explain 57.7% of the variability of our dependent variable, VO2max. However, you also need to be able to interpret "Adjusted R Square" (adj. R²) to accurately report your data. We explain the reasons for this, as well as the output, in our enhanced multiple regression guide.
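For readers who want to see where an adjusted R² comes from, the standard formula is 1 − (1 − R²)(n − 1)/(n − k − 1), where n is the number of observations and k the number of independent variables. The following is a minimal Python sketch using the values from this example (R² = 0.577, 100 participants, four independent variables). The function name is ours, and because the inputs are rounded, the result may differ slightly from the value SPSS Statistics prints.

```python
def adjusted_r_squared(r2, n, k):
    """Adjusted R^2 for a model with k predictors fitted to n observations."""
    return 1 - (1 - r2) * (n - 1) / (n - k - 1)


r2 = 0.577   # "R Square" value quoted in the text
n = 100      # participants recruited in the example
k = 4        # age, weight, heart rate and gender

adj_r2 = adjusted_r_squared(r2, n, k)
print(f"Adjusted R^2 = {adj_r2:.3f}")
```

The adjustment penalises each additional predictor, so adjusted R² is always a little smaller than R² when the model has at least one predictor and R² is below 1.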

Statistical significance

The F-ratio in the ANOVA table (see below) tests whether the overall regression model is a good fit for the data. The table shows that the independent variables statistically significantly predict the dependent variable, F(4, 95) = 32.393, p < .0005 (i.e., the regression model is a good fit of the data).
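The F-ratio and its degrees of freedom are tied directly to R²: F = (R²/k) / ((1 − R²)/(n − k − 1)), with k and n − k − 1 degrees of freedom. The following is a minimal Python sketch that recovers them from the values quoted in this guide. The function name is ours, and since R² is rounded to three decimals, the result only approximately matches the 32.393 reported in the ANOVA table.

```python
def f_from_r_squared(r2, n, k):
    """Overall F-ratio and its degrees of freedom, derived from R^2."""
    df_model = k
    df_residual = n - k - 1
    f_ratio = (r2 / df_model) / ((1 - r2) / df_residual)
    return f_ratio, df_model, df_residual


# R^2 = 0.577, 100 participants, four independent variables (from the text).
f_ratio, df_model, df_residual = f_from_r_squared(0.577, 100, 4)
print(f"F({df_model}, {df_residual}) = {f_ratio:.2f}")
```

This also shows where the "(4, 95)" comes from: four independent variables, and 100 participants minus those four variables minus one for the intercept.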

Estimated model coefficients

The general form of the equation to predict VO2max from age, weight, heart_rate and gender is:

predicted VO2max = 87.83 – (0.165 × age) – (0.385 × weight) – (0.118 × heart_rate) + (13.208 × gender)

This is obtained from the Coefficients table, as shown below:

Unstandardized coefficients indicate how much the dependent variable varies with an independent variable when all other independent variables are held constant. Consider the effect of age in this example. The unstandardized coefficient, B1, for age is equal to -0.165 (see Coefficients table). This means that for each one-year increase in age, there is a decrease in VO₂max of 0.165 ml/min/kg.
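To make the "held constant" interpretation concrete, here is a minimal sketch that encodes the prediction equation given above. The function name and the example person are hypothetical, and gender is assumed to be a 0/1 dummy variable (the text does not say which category is coded 1).

```python
def predict_vo2max(age: float, weight: float, heart_rate: float, gender: int) -> float:
    """Predicted VO2max (ml/min/kg) using the coefficients reported in the text."""
    return (
        87.83
        - 0.165 * age
        - 0.385 * weight
        - 0.118 * heart_rate
        + 13.208 * gender  # assumption: gender is a 0/1 dummy variable
    )


# Hypothetical person, for illustration only.
baseline = predict_vo2max(age=30, weight=70, heart_rate=130, gender=1)
one_year_older = predict_vo2max(age=31, weight=70, heart_rate=130, gender=1)

# With everything else held constant, one extra year changes the prediction by B1.
age_effect = one_year_older - baseline
print(f"Predicted VO2max at age 30: {baseline:.2f}")
print(f"Change for one extra year:  {age_effect:.3f}")
```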

Statistical significance of the independent variables

You can test for the statistical significance of each of the independent variables. This tests whether the unstandardized (or standardized) coefficients are equal to 0 (zero) in the population. If p < .05, you can conclude that the coefficients are statistically significantly different from 0 (zero). The t-value and corresponding p-value are located in the "t" and "Sig." columns, respectively, as highlighted below:

You can see from the "Sig." column that all independent variable coefficients are statistically significantly different from 0 (zero). Although the intercept, B0, is tested for statistical significance, this is rarely an important or interesting finding.

Putting it all together

You could write up the results as follows:

A multiple regression was run to predict VO₂max from gender, age, weight and heart rate. These variables statistically significantly predicted VO₂max, F(4, 95) = 32.393, p < .0005, R² = .577. All four variables added statistically significantly to the prediction, p < .05.



Regression Analysis – Methods, Types and Examples


Regression Analysis

Regression analysis is a set of statistical processes for estimating the relationships among variables . It includes many techniques for modeling and analyzing several variables when the focus is on the relationship between a dependent variable and one or more independent variables (or ‘predictors’).

Regression Analysis Methodology

Here is a general methodology for performing regression analysis:

- Define the research question: Clearly state the research question or hypothesis you want to investigate. Identify the dependent variable (also called the response variable or outcome variable) and the independent variables (also called predictor variables or explanatory variables) that you believe are related to the dependent variable.

- Collect data: Gather the data for the dependent variable and independent variables. Ensure that the data is relevant, accurate, and representative of the population or phenomenon you are studying.

- Explore the data: Perform exploratory data analysis to understand the characteristics of the data, identify any missing values or outliers, and assess the relationships between variables through scatter plots, histograms, or summary statistics.

- Choose the regression model: Select an appropriate regression model based on the nature of the variables and the research question. Common regression models include linear regression, multiple regression, logistic regression, polynomial regression, and time series regression, among others.

- Assess assumptions: Check the assumptions of the regression model. Some common assumptions include linearity (the relationship between variables is linear), independence of errors, homoscedasticity (constant variance of errors), and normality of errors. Violation of these assumptions may require additional steps or alternative models.

- Estimate the model: Use a suitable method to estimate the parameters of the regression model. The most common method is ordinary least squares (OLS), which minimizes the sum of squared differences between the observed and predicted values of the dependent variable.

- Interpret the results: Analyze the estimated coefficients, p-values, confidence intervals, and goodness-of-fit measures (e.g., R-squared) to interpret the results. Determine the significance and direction of the relationships between the independent variables and the dependent variable.

- Evaluate model performance: Assess the overall performance of the regression model using appropriate measures, such as R-squared, adjusted R-squared, and root mean squared error (RMSE). These measures indicate how well the model fits the data and how much of the variation in the dependent variable is explained by the independent variables.

- Test assumptions and diagnose problems: Check the residuals (the differences between observed and predicted values) for any patterns or deviations from assumptions. Conduct diagnostic tests, such as examining residual plots, testing for multicollinearity among independent variables, and assessing heteroscedasticity or autocorrelation, if applicable.

- Make predictions and draw conclusions: Once you have a satisfactory model, use it to make predictions on new or unseen data. Draw conclusions based on the results of the analysis, considering the limitations and potential implications of the findings.
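The estimation, evaluation, diagnosis, and prediction steps above can be sketched in a few lines. This is only an illustration under stated assumptions: the data are synthetic (generated from made-up coefficients), NumPy is assumed to be available, and all variable names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical data: 200 observations, two predictors, known "true" coefficients.
n = 200
X = rng.normal(size=(n, 2))
true_beta = np.array([1.5, 2.0, -0.7])  # intercept, slope for x1, slope for x2
y = true_beta[0] + X @ true_beta[1:] + rng.normal(scale=0.5, size=n)

# Estimate the model by ordinary least squares (a column of ones gives the intercept).
design = np.column_stack([np.ones(n), X])
beta_hat, *_ = np.linalg.lstsq(design, y, rcond=None)

# Evaluate model performance: R-squared and RMSE.
fitted = design @ beta_hat
residuals = y - fitted
ss_res = float(np.sum(residuals ** 2))
ss_tot = float(np.sum((y - y.mean()) ** 2))
r_squared = 1.0 - ss_res / ss_tot
rmse = float(np.sqrt(ss_res / n))

# Simple diagnostic: with an intercept, OLS residuals average to (numerically) zero.
mean_residual = float(residuals.mean())

# Make a prediction for a new observation (leading 1.0 is the intercept term).
new_row = np.array([1.0, 0.5, -1.0])
prediction = float(new_row @ beta_hat)

print("Estimated coefficients:", np.round(beta_hat, 3))
print(f"R-squared: {r_squared:.3f}, RMSE: {rmse:.3f}")
print(f"Prediction for new observation: {prediction:.3f}")
```

In practice a statistics package would also report standard errors, p-values, and residual plots; this sketch covers only the core computations.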

Types of Regression Analysis

Types of Regression Analysis are as follows:

Linear Regression

Linear regression is the most basic and widely used form of regression analysis. It models the linear relationship between a dependent variable and one or more independent variables. The goal is to find the best-fitting line that minimizes the sum of squared differences between observed and predicted values.

Multiple Regression

Multiple regression extends linear regression by incorporating two or more independent variables to predict the dependent variable. It allows for examining the simultaneous effects of multiple predictors on the outcome variable.

Polynomial Regression

Polynomial regression models non-linear relationships between variables by adding polynomial terms (e.g., squared or cubic terms) to the regression equation. It can capture curved or nonlinear patterns in the data.
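A minimal sketch of the idea, assuming NumPy is available: a quadratic is fitted with `numpy.polyfit` to made-up, noise-free data, so the fit should recover the generating coefficients.

```python
import numpy as np

# Hypothetical noise-free data following y = 1 + 2x + 3x^2.
x = np.linspace(-3.0, 3.0, 25)
y = 1.0 + 2.0 * x + 3.0 * x ** 2

# Fit a degree-2 polynomial; coefficients are returned highest power first.
coeffs = np.polyfit(x, y, deg=2)

# Evaluate the fitted curve at the observed x values.
y_hat = np.polyval(coeffs, x)

print("Fitted coefficients (x^2, x, constant):", np.round(coeffs, 3))
```

The model is still linear in its coefficients, which is why ordinary least squares can estimate it even though the fitted curve is not a straight line.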

Logistic Regression

Logistic regression is used when the dependent variable is binary or categorical. It models the probability of the occurrence of a certain event or outcome based on the independent variables. Logistic regression estimates the coefficients using the logistic function, which transforms the linear combination of predictors into a probability.

Ridge Regression and Lasso Regression

Ridge regression and Lasso regression are techniques used for addressing multicollinearity (high correlation between independent variables) and variable selection. Both methods add a penalty term to the least-squares objective. Ridge regression uses L2 regularization, which shrinks coefficients toward zero without removing any of them, while Lasso regression uses L1 regularization, which can shrink some coefficients exactly to zero and so eliminate less important variables.
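The shrinkage effect of the L2 penalty can be seen in a small sketch. It uses the closed-form ridge solution (XᵀX + λI)⁻¹Xᵀy on synthetic, nearly collinear data; NumPy is assumed, the data and the penalty value are made up, and the function name is hypothetical. Lasso has no closed form and is not shown.

```python
import numpy as np


def ridge_coefficients(X: np.ndarray, y: np.ndarray, penalty: float) -> np.ndarray:
    """Closed-form ridge estimate; penalty=0 reduces to ordinary least squares."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + penalty * np.eye(n_features), X.T @ y)


rng = np.random.default_rng(1)

# Two nearly collinear predictors (hypothetical data).
x1 = rng.normal(size=100)
x2 = x1 + rng.normal(scale=0.05, size=100)
y = 3.0 * x1 + rng.normal(scale=0.5, size=100)

# Center predictors and response so no intercept term is needed.
X = np.column_stack([x1, x2])
X = X - X.mean(axis=0)
y = y - y.mean()

ols = ridge_coefficients(X, y, penalty=0.0)
ridge = ridge_coefficients(X, y, penalty=10.0)

print("OLS coefficients:  ", np.round(ols, 3))
print("Ridge coefficients:", np.round(ridge, 3))
```

With collinear predictors the unpenalized coefficients tend to be unstable, while the ridge coefficients have a smaller overall magnitude.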

Time Series Regression

Time series regression analyzes the relationship between a dependent variable and independent variables when the data is collected over time. It accounts for autocorrelation and trends in the data and is used in forecasting and studying temporal relationships.

Nonlinear Regression

Nonlinear regression models are used when the relationship between the dependent variable and independent variables is not linear. These models can take various functional forms and require estimation techniques different from those used in linear regression.

Poisson Regression

Poisson regression is employed when the dependent variable represents count data. It models the relationship between the independent variables and the expected count, assuming a Poisson distribution for the dependent variable.
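As a small illustration, the sketch below assumes the commonly used log link, under which the expected count is exp(β0 + β1X). The coefficient values and function name are hypothetical.

```python
import math


def expected_count(intercept: float, slope: float, x: float) -> float:
    """Expected count under a Poisson model with a log link (an assumption here)."""
    return math.exp(intercept + slope * x)


# Hypothetical coefficients, for illustration only.
b0, b1 = 0.2, 0.3

count_at_2 = expected_count(b0, b1, 2.0)
count_at_3 = expected_count(b0, b1, 3.0)

# Under the log link, a one-unit increase in x multiplies the expected count by exp(b1).
rate_ratio = count_at_3 / count_at_2
print(f"Expected count at x=2: {count_at_2:.3f}")
print(f"Multiplicative effect of one unit of x: {rate_ratio:.3f}")
```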

Generalized Linear Models (GLM)

GLMs are a flexible class of regression models that extend the linear regression framework to handle different types of dependent variables, including binary, count, and continuous variables. GLMs incorporate various probability distributions and link functions.

Regression Analysis Formulas

Regression analysis involves estimating the parameters of a regression model to describe the relationship between the dependent variable (Y) and one or more independent variables (X). Here are the basic formulas for linear regression, multiple regression, and logistic regression:

Linear Regression:

Simple Linear Regression Model: Y = β0 + β1X + ε

Multiple Linear Regression Model: Y = β0 + β1X1 + β2X2 + … + βnXn + ε

In both formulas:

- Y represents the dependent variable (response variable).

- X represents the independent variable(s) (predictor variable(s)).

- β0, β1, β2, …, βn are the regression coefficients or parameters that need to be estimated.

- ε represents the error term (the part of Y not explained by the independent variables). In a fitted model it is estimated by the residual, the difference between the observed and predicted values.
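For the simple linear regression model, the least-squares estimates of β0 and β1 have a closed form. The sketch below computes them in plain Python on made-up data; the function name and the data values are hypothetical.

```python
def fit_simple_linear(x: list[float], y: list[float]) -> tuple[float, float]:
    """Return least-squares estimates (b0, b1) for Y = b0 + b1*X + error."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    # Slope: covariation of x and y divided by the variation of x.
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    b1 = sxy / sxx
    # Intercept: forces the fitted line through the point of means.
    b0 = mean_y - b1 * mean_x
    return b0, b1


# Hypothetical observations, for illustration only.
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [5.1, 7.9, 11.2, 13.8, 17.0]

b0, b1 = fit_simple_linear(x, y)

# Residuals estimate the error term: observed minus predicted values.
residuals = [yi - (b0 + b1 * xi) for xi, yi in zip(x, y)]

print(f"b0 = {b0:.3f}, b1 = {b1:.3f}")
```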

Multiple Regression:

Multiple regression extends the concept of simple linear regression by including multiple independent variables.

Multiple Regression Model: Y = β0 + β1X1 + β2X2 + … + βnXn + ε

The formulas are similar to those in linear regression, with the addition of more independent variables.

Logistic Regression:

Logistic regression is used when the dependent variable is binary or categorical. The logistic regression model applies a logistic or sigmoid function to the linear combination of the independent variables.

Logistic Regression Model: p = 1 / (1 + e^-(β0 + β1X1 + β2X2 + … + βnXn))

In the formula:

- p represents the probability of the event occurring (e.g., the probability of success or belonging to a certain category).

- X1, X2, …, Xn represent the independent variables.

- e is the base of the natural logarithm.

The logistic function ensures that the predicted probabilities lie between 0 and 1, allowing for binary classification.
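A minimal sketch of the logistic regression formula above, written in plain Python. The coefficient and feature values are hypothetical and serve only to show that the output stays between 0 and 1.

```python
import math


def logistic_probability(
    intercept: float, coefficients: list[float], features: list[float]
) -> float:
    """p = 1 / (1 + e^-(b0 + b1*x1 + ... + bn*xn))."""
    linear_part = intercept + sum(b * x for b, x in zip(coefficients, features))
    return 1.0 / (1.0 + math.exp(-linear_part))


# Hypothetical coefficients and one hypothetical observation.
p = logistic_probability(intercept=-1.0, coefficients=[0.8, -0.5], features=[2.0, 1.0])

# A common (but not mandatory) rule classifies the observation as 1 when p >= 0.5.
predicted_class = 1 if p >= 0.5 else 0
print(f"Predicted probability: {p:.3f}, predicted class: {predicted_class}")
```

When the linear combination equals zero the predicted probability is exactly 0.5, which is why 0.5 is a natural default threshold.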

Regression Analysis Examples

Regression Analysis Examples are as follows:

- Stock Market Prediction: Regression analysis can be used to predict stock prices based on various factors such as historical prices, trading volume, news sentiment, and economic indicators. Traders and investors can use this analysis to make informed decisions about buying or selling stocks.

- Demand Forecasting: In retail and e-commerce, regression analysis can help forecast demand for products. By analyzing historical sales data along with real-time data such as website traffic, promotional activities, and market trends, businesses can adjust their inventory levels and production schedules to meet customer demand more effectively.

- Energy Load Forecasting: Utility companies often use real-time regression analysis to forecast electricity demand. By analyzing historical energy consumption data, weather conditions, and other relevant factors, they can predict future energy loads. This information helps them optimize power generation and distribution, ensuring a stable and efficient energy supply.

- Online Advertising Performance: Regression analysis can be used to assess the performance of online advertising campaigns. By analyzing real-time data on ad impressions, click-through rates, conversion rates, and other metrics, advertisers can adjust their targeting, messaging, and ad placement strategies to maximize their return on investment.

- Predictive Maintenance: Regression analysis can be applied to predict equipment failures or maintenance needs. By continuously monitoring sensor data from machines or vehicles, regression models can identify patterns or anomalies that indicate potential failures. This enables proactive maintenance, reducing downtime and optimizing maintenance schedules.

- Financial Risk Assessment: Real-time regression analysis can help financial institutions assess the risk associated with lending or investment decisions. By analyzing real-time data on factors such as borrower financials, market conditions, and macroeconomic indicators, regression models can estimate the likelihood of default or assess the risk-return tradeoff for investment portfolios.

Importance of Regression Analysis

Importance of Regression Analysis is as follows:

- Relationship Identification: Regression analysis helps in identifying and quantifying the relationship between a dependent variable and one or more independent variables. It allows us to determine how changes in independent variables impact the dependent variable. This information is crucial for decision-making, planning, and forecasting.

- Prediction and Forecasting: Regression analysis enables us to make predictions and forecasts based on the relationships identified. By estimating the values of the dependent variable using known values of independent variables, regression models can provide valuable insights into future outcomes. This is particularly useful in business, economics, finance, and other fields where forecasting is vital for planning and strategy development.

- Causality Assessment: While correlation does not imply causation, regression analysis provides a framework for assessing causality by considering the direction and strength of the relationship between variables. It allows researchers to control for other factors and assess the impact of a specific independent variable on the dependent variable. Combined with an appropriate study design, this helps in estimating causal effects and identifying significant factors that influence outcomes.

- Model Building and Variable Selection: Regression analysis aids in model building by determining the most appropriate functional form of the relationship between variables. It helps researchers select relevant independent variables and eliminate irrelevant ones, reducing complexity and improving model accuracy. This process is crucial for creating robust and interpretable models.