Research Topics & Ideas: Data Science

50 Topic Ideas To Kickstart Your Research Project

If you’re just starting out exploring data science-related topics for your dissertation, thesis or research project, you’ve come to the right place. In this post, we’ll help kickstart your research by providing a hearty list of data science and analytics-related research ideas, including examples from recent studies.

PS – This is just the start…

We know it’s exciting to run through a list of research topics, but please keep in mind that this list is just a starting point. The topic ideas provided here are intentionally broad and generic, so you will need to develop them further. Nevertheless, they should inspire some ideas for your project.

To develop a suitable research topic, you’ll need to identify a clear and convincing research gap, and a viable plan to fill that gap. If this sounds foreign to you, check out our free research topic webinar that explores how to find and refine a high-quality research topic, from scratch. Alternatively, consider our 1-on-1 coaching service.

Data Science-Related Research Topics

- Developing machine learning models for real-time fraud detection in online transactions.

- The use of big data analytics in predicting and managing urban traffic flow.

- Investigating the effectiveness of data mining techniques in identifying early signs of mental health issues from social media usage.

- The application of predictive analytics in personalizing cancer treatment plans.

- Analyzing consumer behavior through big data to enhance retail marketing strategies.

- The role of data science in optimizing renewable energy generation from wind farms.

- Developing natural language processing algorithms for real-time news aggregation and summarization.

- The application of big data in monitoring and predicting epidemic outbreaks.

- Investigating the use of machine learning in automating credit scoring for microfinance.

- The role of data analytics in improving patient care in telemedicine.

- Developing AI-driven models for predictive maintenance in the manufacturing industry.

- The use of big data analytics in enhancing cybersecurity threat intelligence.

- Investigating the impact of sentiment analysis on brand reputation management.

- The application of data science in optimizing logistics and supply chain operations.

- Developing deep learning techniques for image recognition in medical diagnostics.

- The role of big data in analyzing climate change impacts on agricultural productivity.

- Investigating the use of data analytics in optimizing energy consumption in smart buildings.

- The application of machine learning in detecting plagiarism in academic works.

- Analyzing social media data for trends in political opinion and electoral predictions.

- The role of big data in enhancing sports performance analytics.

- Developing data-driven strategies for effective water resource management.

- The use of big data in improving customer experience in the banking sector.

- Investigating the application of data science in fraud detection in insurance claims.

- The role of predictive analytics in financial market risk assessment.

- Developing AI models for early detection of network vulnerabilities.

Data Science Research Ideas (Continued)

- The application of big data in public transportation systems for route optimization.

- Investigating the impact of big data analytics on e-commerce recommendation systems.

- The use of data mining techniques in understanding consumer preferences in the entertainment industry.

- Developing predictive models for real estate pricing and market trends.

- The role of big data in tracking and managing environmental pollution.

- Investigating the use of data analytics in improving airline operational efficiency.

- The application of machine learning in optimizing pharmaceutical drug discovery.

- Analyzing online customer reviews to inform product development in the tech industry.

- The role of data science in crime prediction and prevention strategies.

- Developing models for analyzing financial time series data for investment strategies.

- The use of big data in assessing the impact of educational policies on student performance.

- Investigating the effectiveness of data visualization techniques in business reporting.

- The application of data analytics in human resource management and talent acquisition.

- Developing algorithms for anomaly detection in network traffic data.

- The role of machine learning in enhancing personalized online learning experiences.

- Investigating the use of big data in urban planning and smart city development.

- The application of predictive analytics in weather forecasting and disaster management.

- Analyzing consumer data to drive innovations in the automotive industry.

- The role of data science in optimizing content delivery networks for streaming services.

- Developing machine learning models for automated text classification in legal documents.

- The use of big data in tracking global supply chain disruptions.

- Investigating the application of data analytics in personalized nutrition and fitness.

- The role of big data in enhancing the accuracy of geological surveying for natural resource exploration.

- Developing predictive models for customer churn in the telecommunications industry.

- The application of data science in optimizing advertisement placement and reach.

Recent Data Science-Related Studies

While the ideas we’ve presented above are a decent starting point for finding a research topic, they are fairly generic and non-specific. So, it helps to look at actual studies in the data science and analytics space to see how this all comes together in practice.

Below, we’ve included a selection of recent studies to help refine your thinking. These are actual studies, so they can provide some useful insight as to what a research topic looks like in practice.

- Data Science in Healthcare: COVID-19 and Beyond (Hulsen, 2022)

- Auto-ML Web-application for Automated Machine Learning Algorithm Training and evaluation (Mukherjee & Rao, 2022)

- Survey on Statistics and ML in Data Science and Effect in Businesses (Reddy et al., 2022)

- Visualization in Data Science VDS @ KDD 2022 (Plant et al., 2022)

- An Essay on How Data Science Can Strengthen Business (Santos, 2023)

- A Deep study of Data science related problems, application and machine learning algorithms utilized in Data science (Ranjani et al., 2022)

- You Teach WHAT in Your Data Science Course?!? (Posner & Kerby-Helm, 2022)

- Statistical Analysis for the Traffic Police Activity: Nashville, Tennessee, USA (Tufail & Gul, 2022)

- Data Management and Visual Information Processing in Financial Organization using Machine Learning (Balamurugan et al., 2022)

- A Proposal of an Interactive Web Application Tool QuickViz: To Automate Exploratory Data Analysis (Pitroda, 2022)

- Applications of Data Science in Respective Engineering Domains (Rasool & Chaudhary, 2022)

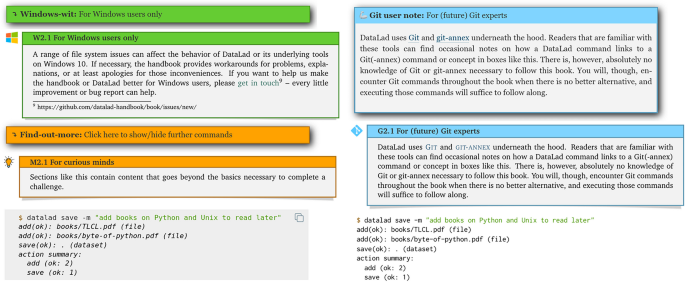

- Jupyter Notebooks for Introducing Data Science to Novice Users (Fruchart et al., 2022)

- Towards a Systematic Review of Data Science Programs: Themes, Courses, and Ethics (Nellore & Zimmer, 2022)

- Application of data science and bioinformatics in healthcare technologies (Veeranki & Varshney, 2022)

- TAPS Responsibility Matrix: A tool for responsible data science by design (Urovi et al., 2023)

- Data Detectives: A Data Science Program for Middle Grade Learners (Thompson & Irgens, 2022)

- MACHINE LEARNING FOR NON-MAJORS: A WHITE BOX APPROACH (Mike & Hazzan, 2022)

- COMPONENTS OF DATA SCIENCE AND ITS APPLICATIONS (Paul et al., 2022)

- Analysis on the Application of Data Science in Business Analytics (Wang, 2022)

As you can see, these research topics are a lot more focused than the generic topic ideas we presented earlier. So, to develop a high-quality research topic, you’ll need to get laser-focused on a particular context with specific variables of interest.

Get 1-On-1 Help

If you’re still unsure about how to find a quality research topic, check out our Research Topic Kickstarter service, which is the perfect starting point for developing a unique, well-justified research topic.


Data management

- Technology and analytics

- Analytics and data science

- Performance indicators

How Well Does Your Company Use Analytics?

- Preethika Sainam

- Seigyoung Auh

- Richard Ettenson

- Yeon Sung Jung

- July 27, 2022

How Midsize Companies Can Compete in AI

- Yannick Bammens

- Paul Hünermund

- September 06, 2021

Generative AI Will Transform Virtual Meetings

- Dash Bibhudatta

- November 29, 2023

Bad Data Is Sapping Your Team’s Productivity

- Thomas C. Redman

- November 30, 2022

The Untapped Potential of Health Care APIs

- Robert S. Huckman

- Maya Uppaluru

- December 23, 2015

5 Pillars for Democratizing Data at Your Organization

- Hippolyte Lefebvre

- Christine Legner

- Elizabeth A Teracino

- November 24, 2023

How GDPR Will Transform Digital Marketing

- Dipayan Ghosh

- May 21, 2018

What Do We Do About the Biases in AI?

- James Manyika

- Jake Silberg

- Brittany Presten

- October 25, 2019

The Dangers of Digital Protectionism

- Ziyang K Fan

- Anil K. Gupta

- August 30, 2018

We Need AI That Is Explainable, Auditable, and Transparent

- Greg Satell

- Josh Sutton

- October 28, 2019

Why Your Company Needs Data-Product Managers

- Thomas H. Davenport

- October 13, 2022

Make Data a Cornerstone of Your Team

- Vadim Revzin

- Sergei Revzin

- October 09, 2018

Two leading researchers discuss the value of oddball data

- Stephen Scherer

- Roger Martin

- From the November 2009 Issue

The Dangers of Categorical Thinking

- Bart de Langhe

- Phil Fernbach

- From the September–October 2019 Issue

Using Uncertainty Modeling to Better Predict Demand

- Murat Tarakci

- January 06, 2022

Why You Aren't Getting More from Your Marketing AI

- Eva Ascarza

- Michael Ross

- Bruce G.S. Hardie

- From the July–August 2021 Issue

How Google Proved Management Matters

- David A. Garvin

- November 19, 2013

Selling into Micromarkets

- Manish Goyal

- Maryanne Q. Hancock

- Homayoun Hatami

- From the July–August 2012 Issue

3 Ways to Build a Data-Driven Team

- Tomas Chamorro-Premuzic PhD.

- October 10, 2018

With Big Data Comes Big Responsibility

- Alex "Sandy" Pentland

- Scott Berinato

- From the November 2014 Issue

Electronic Medical Records at the ISS Clinic in Mbarara, Uganda

- Julie Rosenberg

- Rebecca Weintraub

- May 18, 2012

Fleet Sales Pricing at Fjord Motor

- Robert L. Phillips

- October 04, 2011

Modak Analytics: Shaping the Future in Digital India?

- Naga Lakshmi Damaraju

- Navneet Kaur Khangura

- January 31, 2017

GasBuddy: Fueling Its Digital Platform for Agility and Growth

- Clare Gillan Huang

- March 01, 2018

From Theme Park To Resort: Customer Information Management At Port Aventura

- Mariano A Hervas

- Marc Planell

- Xavier Sala

- July 05, 2011

E-Commerce Analytics for CPG Firms (C): Free Delivery Terms

- Ayelet Israeli

- Fedor Ted Lisitsyn

- January 06, 2021

Fusion Strategy: How Real-Time Data and AI Will Power the Industrial Future

- Vijay Govindarajan

- N Venkat Venkatraman

- March 12, 2024

Data Driven: Profiting from Your Most Important Business Asset

- September 22, 2008

Behavior Change for Good

- Max H. Bazerman

- Michael Luca

- Marie Lawrence

- March 02, 2020

Happy Cow Ice Cream: Data-Driven Sales Forecasting

- Akarhade Prasanna

- Tim Summers

- Xiao Fang Cai

- October 08, 2019

Customer Data and Privacy: Tools for Preparing Your Team for the Future

- Harvard Business Review

- Timothy Morey

- Andrew Burt

- Christine Moorman

- October 07, 2020

VIA Science (C)

- Juan Alcacer

- Rembrand Koning

- Annelena Lobb

- Kerry Herman

- April 01, 2021

HBR's Year in Business and Technology: 2021 (2 Books)

- October 20, 2020

Generative AI Value Chain

- Matt Higgins

- July 17, 2023

The Year in Tech, 2021: Tools for Preparing Your Team for the Future

- David Weinberger

- Darrell K. Rigby

- David Furlonger

HBR Insights Web3, Crypto, and Blockchain Collection (3 Books)

- December 05, 2023

Facebook: Hard Questions (B)

- Neil Malhotra

- Sheila Melvin

- October 03, 2018

Generative AI: Tools for Preparing Your Team for the Future

- Ethan Mollick

- David De Cremer

- Tsedal Neeley

- Prabhakant Sinha

- February 06, 2024

Zalora: Data-Driven Pricing Recommendations

- August 19, 2022

Managing Marketing Data at Allstate

- John Deighton

- March 11, 2016

HR and the Information Agenda

- David Ulrich

- October 24, 2016

The (Often Hidden) Costs of Poor Data and Information


Open Access

Peer-reviewed

Research Article

Research data management in academic institutions: A scoping review

Contributed equally to this work with: Laure Perrier, Erik Blondal, Heather MacDonald


Affiliation Gerstein Science Information Centre, University of Toronto, Toronto, Ontario, Canada

Affiliation Institute of Health Policy, Management and Evaluation, University of Toronto, Toronto, Ontario, Canada

¶ ‡ These authors also contributed equally to this work.

Affiliation Gibson D. Lewis Health Science Library, UNT Health Science Center, Fort Worth, Texas, United States of America

Affiliation St. Michael’s Hospital Library, St. Michael’s Hospital, Toronto, Ontario, Canada

Affiliation Faculty of Information, University of Toronto, Toronto, Ontario, Canada

Affiliation Engineering & Computer Science Library, University of Toronto, Toronto, Ontario, Canada

Affiliation Map and Data Library, University of Toronto, Toronto, Ontario, Canada

Affiliation MacOdrum Library, Carleton University, Ottawa, Ontario, Canada

- Laure Perrier,

- Erik Blondal,

- A. Patricia Ayala,

- Dylanne Dearborn,

- Tim Kenny,

- David Lightfoot,

- Roger Reka,

- Mindy Thuna,

- Leanne Trimble,

- Heather MacDonald

- Published: May 23, 2017

- https://doi.org/10.1371/journal.pone.0178261


The purpose of this study is to describe the volume, topics, and methodological nature of the existing research literature on research data management in academic institutions.

Materials and methods

We conducted a scoping review by searching forty literature databases encompassing a broad range of disciplines from inception to April 2016. We included all study types and extracted data on study design, discipline, data collection tools, and phase of the research data lifecycle.

We included 301 articles plus 10 companion reports after screening 13,002 titles and abstracts and 654 full-text articles. Most articles (85%) were published from 2010 onwards and conducted within the sciences (86%). More than three-quarters of the articles (78%) reported methods that included interviews, cross-sectional, or case studies. Most articles (68%) included the Giving Access to Data phase of the UK Data Archive Research Data Lifecycle that examines activities such as sharing data. When studies were grouped into five dominant groupings (Stakeholder, Data, Library, Tool/Device, and Publication), data quality emerged as an integral element.

Most studies relied on self-reports (interviews, surveys) or accounts from an observer (case studies) and we found few studies that collected empirical evidence on activities amongst data producers, particularly those examining the impact of research data management interventions. As well, fewer studies examined research data management at the early phases of research projects. The quality of all research outputs needs attention, from the application of best practices in research data management studies, to data producers depositing data in repositories for long-term use.

Citation: Perrier L, Blondal E, Ayala AP, Dearborn D, Kenny T, Lightfoot D, et al. (2017) Research data management in academic institutions: A scoping review. PLoS ONE 12(5): e0178261. https://doi.org/10.1371/journal.pone.0178261

Editor: Sanjay B. Jadhao, International Nutrition Inc, UNITED STATES

Received: February 27, 2017; Accepted: April 26, 2017; Published: May 23, 2017

Copyright: © 2017 Perrier et al. This is an open access article distributed under the terms of the Creative Commons Attribution License , which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.

Data Availability: Dataset is available from the Zenodo Repository, DOI: 10.5281/zenodo.557043 .

Funding: The authors received no specific funding for this work.

Competing interests: The authors have declared that no competing interests exist.

Introduction

Increased connectivity has accelerated progress in global research, and estimates indicate scientific output is doubling approximately every ten years [ 1 ]. A rise in research activity results in an increase in research data output. However, data generated from research that is not prepared and stored for long-term access is at risk of being lost forever. Vines and colleagues report that the availability of study data declines rapidly with the age of a study; they determined that the odds of a data set being reported as available decreased 17% per year after publication [ 2 ]. At the same time, research funding agencies and scholarly journals are progressively moving towards directives that require data management plans and demand data sharing [ 3 – 6 ]. The current research ecosystem is complex and highlights the need for focused attention on the stewardship of research data [ 1 , 7 ].
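
The 17% figure refers to odds rather than probability, and it compounds each year. A short calculation shows how quickly availability erodes. The Python sketch below assumes the decline acts as a constant odds ratio of 0.83 per year of study age. The starting probability of 0.8 is hypothetical and chosen only for illustration.

```python
def relative_odds(years: float, annual_decline: float = 0.17) -> float:
    """Odds of availability after `years`, relative to the odds at publication.

    Assumes a constant per-year odds ratio of (1 - annual_decline).
    """
    return (1.0 - annual_decline) ** years


def odds_to_probability(odds: float) -> float:
    """Convert odds o to a probability p = o / (1 + o)."""
    return odds / (1.0 + odds)


def probability_after(p0: float, years: float, annual_decline: float = 0.17) -> float:
    """Probability a data set is still reported as available after `years`,
    given a (hypothetical) probability p0 at publication."""
    odds0 = p0 / (1.0 - p0)
    return odds_to_probability(odds0 * relative_odds(years, annual_decline))


if __name__ == "__main__":
    for t in (1, 5, 10, 20):
        # Hypothetical starting probability of 0.8 at publication.
        print(f"{t:>2} years: odds x{relative_odds(t):.3f}, "
              f"p = {probability_after(0.8, t):.3f}")
```

Under these assumptions, the odds after ten years are roughly 0.155 times their value at publication. A hypothetical 80% chance of availability therefore falls to about 38%, a smaller relative drop than the odds because the two scales differ.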

Academic institutions are multifaceted organizations that exist within the research ecosystem. Researchers practicing within universities and higher education institutions must comply with funding agency requirements when they are the recipients of research grants. For some disciplines, such as genomics and astronomy, preserving and sharing data is the norm [ 8 – 9 ]. Yet best practices stipulate that research be reproducible and transparent, which indicates that effective data management is pertinent to all disciplines.

Interest in research data management in the global community is on the rise. Recent activity has included the Bill & Melinda Gates Foundation moving their open access/open data policy, considered to be exceptionally strong, into force at the beginning of 2017 [ 10 ]. Researchers working towards a solution to the Zika virus organized themselves to publish all epidemiological and clinical data as soon as it was gathered and analyzed [ 11 ]. Fecher and colleagues [ 12 ] conducted a systematic review focusing on data sharing to support the development of a conceptual framework; however, it lacked rigorous methods, such as the use of a comprehensive search strategy [ 13 ]. Another review on data sharing, conducted by Bull and colleagues [ 14 ], examined stakeholders’ perspectives on ethical best practices but focused specifically on low- and middle-income settings. In this scoping review, we aim to assess the research literature that examines research data management as it relates to academic institutions. It is a time of increasing activity in the area of research data management [ 15 ], and higher learning institutions need to be ready to address this change, as well as provide support for their faculty and researchers. Identifying the current state of the literature will give a clear understanding of the evidence in the area. That understanding will guide the planning of strategies for services and support, and it will outline essential areas for future research in research data management. The purpose of this study is to describe the volume, topics, and methodological nature of the existing research literature on research data management in academic institutions.

We conducted a scoping review using guidance from Arksey and O’Malley [ 16 ] and the Joanna Briggs Manual for Scoping Reviews [ 17 ]. A scoping review protocol was prepared and revised based on input from the research team, which included methodologists and librarians specializing in data management. It is available upon request from the corresponding author. Although traditionally applied to systematic reviews, the PRISMA Statement was used for reporting [ 18 ].

Data sources and literature search

We searched 40 electronic literature databases from inception until April 3–4, 2016. Since research data management is relevant to all disciplines, we did not restrict our search to literature databases in the sciences. This was done to gain an understanding of the breadth of research available and to provide context for the science research literature on the topic of research data management. The search was peer-reviewed by an experienced librarian (HM) using the Peer Review of Electronic Search Strategies checklist and modified as necessary [ 19 ]. The full literature search for MEDLINE is available in the S1 File. Additional database literature searches are available from the corresponding author. Searches were performed with no year or language restrictions. We also searched conference proceedings and gray literature. The gray literature discovery process involved identifying and searching the websites of relevant organizations (such as the Association of Research Libraries, the Joint Information Systems Committee, and the Digital Curation Centre). Finally, we scanned the references of included studies to identify other potentially relevant articles. The results were imported into Covidence (covidence.org) for the review team to screen the records.

Study selection

All study designs were considered, including qualitative and quantitative methods such as focus groups, interviews, cross-sectional studies, and randomized controlled trials. Eligible studies included academic institutions and reported on research data management involving areas such as infrastructure, services, and policy. We included studies from all disciplines within academic institutions with no restrictions on geographical location. Studies that accepted participants from outside academic institutions were included if 50% or more of the total sample represented respondents from academic institutions. Studies that examined entities other than human subjects were included if the outcomes were pertinent to the broader research community, including academia. For example, a study might retrieve a sample of journal articles to examine their data sharing statements without explicitly linking each article to a research sector. Such a study was accepted into our review, since the outcomes are significant to the entire research community and academia was not explicitly excluded. We excluded commentaries, editorials, and papers providing descriptions of processes that lacked a research component.
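
The eligibility rules above can be expressed as a single predicate. This is a hypothetical Python encoding, not the review team's actual screening tool. The `Record` fields are illustrative, and the judgment of whether outcomes are pertinent to the broader research community is reduced to a boolean flag.

```python
from dataclasses import dataclass
from typing import Optional

# Publication types excluded regardless of content (hypothetical labels).
EXCLUDED_TYPES = {"commentary", "editorial"}


@dataclass
class Record:
    """Hypothetical screening record; field names are illustrative only."""
    publication_type: str
    has_research_component: bool
    academic_respondents: Optional[int] = None  # None when subjects are not people
    total_respondents: Optional[int] = None
    pertinent_to_research_community: bool = True  # used for non-human samples


def meets_inclusion_criteria(rec: Record) -> bool:
    """Apply the review's stated eligibility rules to one record."""
    # Commentaries, editorials, and process descriptions without research are out.
    if rec.publication_type in EXCLUDED_TYPES or not rec.has_research_component:
        return False
    # Non-human samples (e.g., journal articles): keep if outcomes matter
    # to the broader research community.
    if rec.total_respondents is None:
        return rec.pertinent_to_research_community
    if rec.total_respondents <= 0 or rec.academic_respondents is None:
        return False
    # Mixed samples: at least half of the respondents must be academic.
    return rec.academic_respondents / rec.total_respondents >= 0.5


if __name__ == "__main__":
    print(meets_inclusion_criteria(Record("survey", True, 60, 100)))  # True
    print(meets_inclusion_criteria(Record("survey", True, 40, 100)))  # False
```

The threshold is encoded as `>= 0.5` to match the "50% or more" wording, so a sample that is exactly half academic is included.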

We define an academic institution as a higher education degree-granting organization dedicated to education and research. Research data management is defined as the storage, access, and preservation of data produced from a given investigation [ 20 ]. This includes issues such as creating data management plans, matters related to sharing data, delivery of services and tools, and infrastructure considerations, typically involving researchers, planners, librarians, and administrators.

A two-stage process was used to assess articles. Two investigators independently reviewed the retrieved titles and abstracts to identify those that met the inclusion criteria. The study selection process was pilot tested on a sample of records from the literature search. In the second stage, full-text articles of all records identified as relevant were retrieved and independently assessed by two investigators to determine if they met the inclusion criteria. Discrepancies were resolved by a third reviewer.
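
The two-stage, dual-review process can be sketched as follows. The reviewer functions are hypothetical stand-ins for human judgments. The sketch assumes the third reviewer is consulted only when the first two disagree, and that this tie-break applies at both the title/abstract and full-text stages.

```python
from typing import Callable, Dict, List, Tuple

Reviewer = Callable[[str], bool]  # True = include, False = exclude
Panel = Tuple[Reviewer, Reviewer, Reviewer]  # (reviewer A, reviewer B, third reviewer)


def screen(record_ids: List[str], panel: Panel) -> Tuple[Dict[str, bool], List[str]]:
    """Dual independent review; a third reviewer settles disagreements."""
    reviewer_a, reviewer_b, third = panel
    decisions: Dict[str, bool] = {}
    disputed: List[str] = []
    for rid in record_ids:
        a, b = reviewer_a(rid), reviewer_b(rid)
        if a == b:
            decisions[rid] = a
        else:
            disputed.append(rid)
            decisions[rid] = third(rid)
    return decisions, disputed


def two_stage_selection(record_ids: List[str], title_abstract: Panel,
                        full_text: Panel) -> List[str]:
    """Stage 1 screens titles/abstracts; stage 2 assesses full texts of survivors."""
    stage1, _ = screen(record_ids, title_abstract)
    shortlisted = [rid for rid in record_ids if stage1[rid]]
    stage2, _ = screen(shortlisted, full_text)
    return [rid for rid in shortlisted if stage2[rid]]


if __name__ == "__main__":
    # Toy reviewers with hypothetical record IDs.
    ta = (lambda r: r in {"r1", "r2", "r3"}, lambda r: r in {"r1", "r2"}, lambda r: r == "r3")
    ft = (lambda r: r in {"r1", "r3"}, lambda r: r == "r1", lambda r: False)
    print(two_stage_selection(["r1", "r2", "r3", "r4"], ta, ft))  # ['r1']
```

In the toy run, "r3" reaches full-text review only because the third reviewer breaks a title/abstract disagreement in its favour. It is then excluded when the full-text reviewers disagree and the tie-break goes the other way.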

Data abstraction and analysis

After a training exercise, two investigators independently read each article and extracted relevant data in duplicate. Extracted data included study design, study details (such as purpose and methodology), participant characteristics, discipline, and data collection tools used to gather information for the study. In addition, articles were aligned with the research data lifecycle proposed by the United Kingdom Data Archive [ 21 ]. Although represented in a simple diagram, this framework incorporates a comprehensive set of activities (creating data, processing data, analyzing data, preserving data, giving access to data, re-using data) and actions associated with research data management. It thereby clarifies the longer lifespan that data has outside of the research project it was created within (see S2 File). Differences in abstraction were resolved by a third reviewer. Companion reports were identified by matching the authors, timeframe for the study, and intervention; those identified were used as supplementary material only. Risk of bias of individual studies was not assessed because our aim was to examine the extent, range, and nature of research activity, consistent with the proposed scoping review methodology [ 16 – 17 ].

We summarized the results descriptively with the use of validated guidelines for narrative synthesis [ 22 – 25 ]. Following guidance from Rodgers and colleagues, [ 22 ] data extraction tables were examined to determine the presence of dominant groups or clusters of characteristics by which the subsequent analysis could be organized. Two team members independently evaluated the abstracted data from the included articles in order to identify key characteristics and themes. Disagreement was resolved through discussion. Due to the heterogeneity of the data, articles and themes were summarized as frequencies and proportions.

Literature search

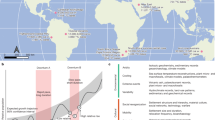

The literature search identified a total of 15,228 articles. After reviewing titles and abstracts, we retrieved 654 potentially relevant full-text articles. In total, 301 articles were identified for inclusion in the study, along with 10 companion documents ( Fig 1 ). The full list of citations for the included studies can be found in the S3 File. The five literature databases that identified the most included studies were MEDLINE (81 articles or 21.60%), Compendex (60 articles or 16%), INSPEC (55 articles or 14.67%), Library and Information Science Abstracts (52 articles or 13.87%), and BIOSIS Previews (47 articles or 12.53%). The full list of electronic databases is available in the S4 File, which also includes the number of included studies traced back to their original literature database.


https://doi.org/10.1371/journal.pone.0178261.g001

Characteristics of included articles

Most of the 301 articles were published from 2010 onwards (256 or 85.04%), with 15% published prior to that time ( Table 1 ). Almost half (45.85%) identified North America (Canada, United States, or Mexico) as the region where studies were conducted; however, close to one fifth of articles (18.60%) did not report where the study was conducted. Most of the articles (78.51%) reported methods that included cross-sectional (129 or 35.54%), interviews (86 or 23.69%), or case studies (70 or 19.28%), with 42 articles (out of 301) describing two or more methods. Articles were almost evenly split between reporting qualitative evidence (44.85%) and quantitative evidence (43.85%), with mixed methods representing a smaller proportion (11.29%). We relied on the authors’ reporting of study characteristics and made no interpretations about how study attributes were reported. As a result, some reported disciplines may appear to overlap; for example, health science, medicine, and biomedicine are reported separately as disciplines/subject areas. Authors identified 35 distinct disciplines in the articles. Just under ten percent (8.64%) did not report a discipline, and the largest group (105 or 34.88%) was multidisciplinary. The two disciplines reported most often were medicine and information science/library science (31 or 10.30% each). Studies were reported in 116 journals, 43 conference papers, 26 gray literature documents (e.g., reports), two book chapters, and one PhD dissertation. Almost one-third of the articles (99 or 32.89%) did not use a data collection tool (e.g., when a case study was reported), and a small number (22 or 7.31%) based their data collection tools on instruments previously reported in the literature. Most data collection tools were either developed by the authors (97 or 32.23%) or came with no description of their development (83 or 27.57%). No validated data collection tools were reported.
We identified articles that offered no information on the sample size or participant characteristics, [ 26 – 29 ] as well as those that reported on the number of participants that completed the study but failed to describe how many were recruited [ 30 – 31 ].

https://doi.org/10.1371/journal.pone.0178261.t001

Research data lifecycle framework

Two hundred and seven (31.13%) articles aligned with the Giving Access to Data phase of the Research Data Lifecycle [ 20 ] ( Table 2 ), which includes the components of distributing data, sharing data, controlling access, establishing copyright, and promoting data. The Preserving Data phase contained the next largest set of articles, with 178 (26.77%). In contrast, Analysing Data and Processing Data were the two phases with the fewest articles, containing 28 (4.21%) and 49 (7.37%) respectively. The largest share of articles (87 or 28.9%) aligned with two phases of the Research Data Lifecycle. These were followed by an almost even split between articles aligning with three phases (73 or 24.25%) and with one phase (70 or 23.26%). Twenty-nine (9.63%) were not aligned with any phase of the Research Data Lifecycle. These included articles that described education and training for librarians, or that identified skill sets needed to work in research data management.
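
The phase percentages in this paragraph do not use 301 as the denominator. For comparison, 207 of 301 articles would be about 68.8%, in line with the 68% given in the abstract. Dividing each count by its reported percentage recovers the implied denominator. The Python check below does this. The conclusion it supports, that the phase shares are computed over roughly 665 article-to-phase assignments because an article can map to several phases, is our reading of the arithmetic, not a figure stated in the text.

```python
# (count, reported percentage) pairs copied from the paragraph above.
phase_counts = {
    "Giving Access to Data": (207, 31.13),
    "Preserving Data": (178, 26.77),
    "Processing Data": (49, 7.37),
    "Analysing Data": (28, 4.21),
}
articles_by_number_of_phases = {
    "two phases": (87, 28.90),
    "three phases": (73, 24.25),
    "one phase": (70, 23.26),
    "no phase": (29, 9.63),
}


def implied_denominator(count: int, percent: float) -> float:
    """Return the total that `count` was divided by to yield `percent`."""
    return count / (percent / 100.0)


if __name__ == "__main__":
    for group in (phase_counts, articles_by_number_of_phases):
        for name, (count, pct) in group.items():
            print(f"{name:<22} {count:>3} / {implied_denominator(count, pct):6.1f}")
```

All four phase shares point to a total of about 665. The shares by number of phases point to the 301 included articles.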

https://doi.org/10.1371/journal.pone.0178261.t002

Key characteristics of articles

Five dominant groupings were identified for the 301 articles ( Table 3 ). Each of these dominant groups was further categorized into subgroupings of articles to provide more granularity. The top three study types and the top three disciplines/subject areas are reported for each of the dominant groups. The groupings break down as follows:
- Half of the articles (151 or 50.17%) concentrated on stakeholders (Stakeholder Group), e.g., activities of researchers, publishers, participants/patients, and funding agencies.
- 57 (18.94%) were data-focused (Data Group), e.g., investigating the quality or integrity of data in repositories, or developing or refining metadata.
- 42 (13.95%) centered on library-related activities (Library Group), e.g., identifying skills or training for librarians working in data management.
- 27 (8.97%) described specific tools/applications/repositories (Tool/Device Group), e.g., introducing an electronic notebook into a laboratory.
- 24 (7.97%) focused on the activities of publishing (Publication Group), e.g., examining data policies.

The Stakeholder Group contained the largest subgroup of articles, labelled ‘Researcher’ (119 or 39.53%).
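
The review summarizes these groupings as frequencies and proportions of the 301 included articles. A minimal Python sketch follows that reproduces the reported percentages from the counts above. The helper and variable names are ours, for illustration only.

```python
from typing import Dict

TOTAL_ARTICLES = 301

# Article counts per dominant grouping, copied from the paragraph above.
groupings = {
    "Stakeholder": 151,
    "Data": 57,
    "Library": 42,
    "Tool/Device": 27,
    "Publication": 24,
}


def proportions(counts: Dict[str, int], total: int) -> Dict[str, float]:
    """Express each count as a percentage of `total`, rounded to two decimals."""
    return {name: round(100 * n / total, 2) for name, n in counts.items()}


if __name__ == "__main__":
    # The five grouping counts sum to the 301 included articles.
    assert sum(groupings.values()) == TOTAL_ARTICLES
    for name, pct in proportions(groupings, TOTAL_ARTICLES).items():
        print(f"{name:<12} {groupings[name]:>3}  {pct:5.2f}%")
```

Unlike the lifecycle phases, each article here falls into exactly one grouping. That is why 301 works as the denominator and the percentages sum to 100.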

https://doi.org/10.1371/journal.pone.0178261.t003

We identified 301 articles and 10 companion documents, published between 1995 and 2016, that focus on research data management in academic institutions. Tracing articles back to their original literature databases shows that 86% of the included studies came from the applied science or basic science literature, indicating high research activity in this area among the sciences. The number of published articles has risen dramatically since 2010, with 85% published after 2009, signaling growing importance of and interest in this area of research. However, the narrow range of study designs, the scarcity of standardized or validated data collection tools, and the lack of transparency in reporting show that more attention to rigor is needed. In addition, few studies examine the impact of research data management activities (e.g., the implementation of services, training, or tools).

Few of the study designs employed in the 301 articles collected empirical evidence on the activities of data producers, such as changes in behavior (e.g., moving from data withholding to data sharing) or changes in practice (e.g., strategies to increase data quality in repositories). Close to 80% of the articles relied on self-reports (e.g., interviews, surveys) or on an observer’s account (e.g., describing events in a case study). Case studies made up almost one-fifth of the articles examined, ranging from question-and-answer, journalistic-style reports [ 32 ] to structured descriptions of activities and processes [ 33 ]. Although study quality was not formally assessed, this range posed challenges for data abstraction, particularly with the journalistic-style accounts. If such papers reported clearly, declaring a purpose and describing well-defined outcomes, they could supply valuable input to knowledge syntheses such as a realist review [ 34 – 35 ] despite ranking lower in the hierarchy of evidence [ 36 ]. One exception was the case report by Hruby and colleagues [ 37 ], which included a retrospective analysis of the impact of introducing a centralized research data repository for datasets in a urology department at Columbia University. By documenting a change, this study gave readers a fuller understanding of the impact of a research data management intervention: results showed a reduction in the time required to complete studies and an increase in publication quantity and quality (i.e., a higher average journal impact factor for published papers).
There is an opportunity for studies that provide empirical evidence about data producers and data reuse; for those conducting case studies, the development of reporting guidelines may be of benefit.

Using the Research Data Lifecycle framework makes it possible to see where researchers are focusing their efforts in studying research data management. Most studies fell within the Giving Access to Data phase, which includes activities such as sharing data and controlling access to data, and the Preserving Data phase, which focuses on activities such as documenting and archiving data. This aligns with the global trend of funding agencies moving towards requirements for open access and open data [ 15 ], which include activities such as creating metadata/documentation and sharing data in public repositories when possible. Fewer studies fell within the phases at the beginning of the Research Data Lifecycle, which include activities such as writing data management plans and preparing data for preservation. Research on these early phases, which cover planning and setting up processes for handling data as it is created, may provide insight into how these activities affect later phases of the Research Data Lifecycle, particularly with regard to data quality.

Data quality was examined in several of the Groups described in Table 3 . Within the Data Group, ‘data quality and integrity’ was the largest subgroup. Two other Data Group subgroups, ‘classification systems’ and ‘repositories’, also contained articles touching on data quality, including refining metadata and improving repository functionality to enable scholarly use and reuse of materials. Willoughby and colleagues illustrated some of these challenges when reporting on researchers in chemistry, biology, and physics [ 38 ]. They found that researchers took a ‘minimum required’ approach when filling out metadata for a repository. The biggest inhibitor to adding useful metadata was the ‘blank canvas’ effect: users may have been willing to add metadata but did not know how. The authors concluded that simply providing a mechanism to add metadata was not sufficient. Data quality, or the lack thereof, also surfaced in the Publication Group, where the ‘data availability, accessibility, and reuse’ and ‘data policies’ subgroups included articles that tracked the completeness of deposited data sets and assessed the guidance journals provide in their data sharing policies. Piwowar and Chapman analyzed whether data sharing frequency was associated with funder and publisher requirements [ 39 ]. They found that NIH (National Institutes of Health) funding had little impact on data sharing despite policies requiring it. Instead, data sharing was significantly associated with a journal’s impact factor (not its data sharing policy) and with the experience of the first and last authors.
Studies that investigate processes to improve the quality of data deposited in repositories, or strategies to increase compliance with journal or funder data sharing policies that support depositing high-quality, usable data, could provide tangible guidance to investigators interested in effective data reuse.

We found that a number of articles omitted important information, including the geographic region in which the study was conducted (56 or 18.6%) and the discipline or subject area examined (26 or 8.64%). Data abstraction also identified studies that gave no information on participant populations (such as sample size or participant characteristics), as well as studies that reported the number of participants who completed the study but not the number recruited. Lack of transparency and poor documentation of research are highlighted in the recent Lancet series on ‘research waste’, which calls for avoiding the misuse of valuable resources and draws attention to the inadequate emphasis on the reproducibility of research [ 40 ]. Those conducting research in data management must recognize that research integrity should be reflected in all research outputs, including both publications and data.

We identified a sizable body of literature describing research data management in academic institutions, with most studies conducted in the applied or basic sciences. Our results should encourage further research in several areas. One is shifting the focus of studies towards collecting empirical evidence of the impact of research data management interventions. Another is research on activities that demonstrably improve the quality and usefulness of data in repositories for reuse, as well as on the facilitators of and barriers to researchers’ participation in these activities. In particular, there is a gap in research examining activities in the early phases of research projects to determine the impact of interventions at that stage. Finally, researchers investigating research data management must follow best practices in research reporting and ensure the high quality of their own research outputs, including both publications and datasets.

Supporting information

S1 File. MEDLINE search strategy.

https://doi.org/10.1371/journal.pone.0178261.s001

S2 File. Research data lifecycle phases.

https://doi.org/10.1371/journal.pone.0178261.s002

S3 File. Included studies.

https://doi.org/10.1371/journal.pone.0178261.s003

S4 File. Literature databases searched.

https://doi.org/10.1371/journal.pone.0178261.s004

Acknowledgments

We thank Mikaela Gray for retrieving articles, tracking papers back to their original literature databases, and assisting with references. We also thank Lily Yuxi Ren for retrieving conference proceedings and searching the gray literature. We acknowledge Matt Gertler for screening abstracts.

Author Contributions

- Conceptualization: LP.

- Data curation: LP EB HM.

- Formal analysis: LP EB.

- Investigation: LP EB HM APA DD TK DL MT LT RR.

- Methodology: LP.

- Project administration: LP.

- Supervision: LP.

- Validation: LP HM.

- Writing – original draft: LP.

- Writing – review & editing: LP EB HM APA DD DL TK MT LT RR.


- 3. Holdren JP. Increasing access to the results of federally funded scientific research. February 22, 2013. Office of Science and Technology Policy. Executive Office of the President. United States of America. Available at: https://obamawhitehouse.archives.gov/blog/2016/02/22/increasing-access-results-federally-funded-science . Accessed February 27, 2017.

- 4. OECD (Organization for Economic Co-Operation and Development). Declaration on access to research data from public funding. 2004. Available at: http://acts.oecd.org/Instruments/ShowInstrumentView.aspx?InstrumentID=157 . Accessed February 27, 2017.

- 5. Government of Canada. Research data. 2011. Available at: http://www.science.gc.ca/default.asp?lang=en&n=2BBD98C5-1 . Accessed February 27, 2017.

- 6. DCC (Digital Curation Centre). Overview of funders’ data policies. Available at: http://www.dcc.ac.uk/resources/policy-and-legal/overview-funders-data-policies . Accessed February 27, 2017.

- 8. Hayes J. The data-sharing policy of the World Meteorological Organization: The case for international sharing of scientific data. In: Mathae KB, Uhlir PF, editors. Committee on the Case of International Sharing of Scientific Data: A Focus on Developing Countries. National Academies Press; 2012. p. 29–31.

- 9. Ivezic Z. Data sharing in astronomy. In: Mathae KB, Uhlir PF, editors. Committee on the Case of International Sharing of Scientific Data: A Focus on Developing Countries. National Academies Press; 2012. p. 41–45.

- 10. van Noorden R. Gates Foundation announces world’s strongest policy on open access research. Nature Newsblog. Available at: http://blogs.nature.com/news/2014/11/gates-foundation-announces-worlds-strongest-policy-on-open-access-research.html . Accessed February 27, 2017.

- 13. Higgins JPT, Green S (editors). Cochrane Handbook for Systematic Reviews of Interventions Version 5.1.0 [updated March 2011]. The Cochrane Collaboration, 2011. Available at: www.handbook.cochrane.org . Accessed February 27, 2017.

- 15. Shearer K. Comprehensive Brief on Research Data Management Policies. April 2015. Available at: http://web.archive.org/web/20151001135755/http://science.gc.ca/default.asp?lang=En&n=1E116DB8-1 . Accessed February 27, 2017.

- 17. The Joanna Briggs Institute. Joanna Briggs Institute Reviewers’ Manual: 2015 Edition. Methodology for JBI Scoping Reviews. Available at: http://joannabriggs.org/assets/docs/sumari/Reviewers-Manual_Methodology-for-JBI-Scoping-Reviews_2015_v2.pdf . Accessed February 27, 2017.

- 19. PRESS–Peer Review of Electronic Search Strategies: 2015 Guideline Explanation and Elaboration (PRESS E&E). Ottawa: CADTH; 2016 Jan.

- 20. Research Data Canada. Glossary–Research Data Management. Available at: http://www.rdc-drc.ca/glossary . Accessed February 27, 2017.

- 21. UK Data Archive. Research Data Lifecycle. Available at: http://www.data-archive.ac.uk/create-manage/life-cycle . Accessed February 27, 2017.

- 25. Hurwitz B, Greenhalgh T, Skultans V. Meta-narrative mapping: a new approach to the systematic review of complex evidence. In: Greenhalgh T, editor. Narrative Research in Health and Illness. Malden, MA: Blackwell Publishing Ltd; 2008. p. 349–381.

- 29. Wynholds LA, Wallis JC, Borgman CL, Sands A. Data, data use, and scientific inquiry: two case studies of data practices. Proceedings of the 12th ACM/IEEE-CS Joint Conference on Digital Libraries. 2012;19–22.

- 30. Averkamp S, Gu X. Report on the University libraries’ data management need survey. 2012. Available at: http://ir.uiowa.edu/lib_pubs/152 . Accessed February 27, 2017.

- 32. Roos A. Case study: developing research data management training and support at Helsinki University Library. Association of European Research Libraries. LIBER Case Study. June 2014. Available at: http://libereurope.eu/wp-content/uploads/2014/06/LIBER-Case-Study-Helsinki.pdf . Accessed February 27, 2017.


- Open access

- Published: 17 June 2022

A focus groups study on data sharing and research data management

- Devan Ray Donaldson (ORCID: orcid.org/0000-0003-2304-6303)

- Joshua Wolfgang Koepke (ORCID: orcid.org/0000-0002-0870-2918)

Scientific Data volume 9, Article number: 345 (2022)


- Research data

- Research management

Data sharing can accelerate scientific discovery while increasing the return on investment beyond the researcher or group that produced the data. Data repositories enable data sharing and long-term preservation, but little is known about scientists’ perceptions of them or their perspectives on data management and sharing practices. Using focus groups with scientists from five disciplines (atmospheric and earth science, computer science, chemistry, ecology, and neuroscience), we asked questions about data management to lead into a discussion of which features scientists think data repository systems and services need in order to help them implement the data sharing and preservation parts of their data management plans. Participants identified metadata quality control and training as problem areas in data management. Participants also discussed several desired repository features, including metadata control, data traceability, security, stable infrastructure, and data use restrictions. We present these desired features as a rubric for the research community to encourage repository utilization. Future directions for research are discussed.


Introduction

Sharing scientific research data has many benefits. Data sharing strengthens the data behind initial publications by allowing peer review and validation of datasets and methods before publication 1 , 2 . Enabling such activities enhances the integrity of research data and promotes transparency 1 , 2 , both of which are critical for increasing confidence in science 3 , 4 . After publication, data sharing encourages further scientific inquiry and advancement by making data available for other scientists to explore and build upon 2 , 3 , 4 , 5 . Open data allows further scientific inquiry without the costs associated with creating new data 4 , 6 . Researchers in the developing world disproportionately bear the high costs of new data creation, as they may struggle to find funding for projects not associated with direct improvements in living conditions 6 . Data sharing can therefore enable lower-cost research in developing nations through the reuse of datasets, creating what Ukwoma and Dike 7 refer to as a “global network” of scientific data. The development of COVID-19 vaccines illustrates the impact of data sharing on society. Through open data sharing practices, including sharing the genome sequence of the SARS-CoV-2 virus, scientists were able to build on each other’s data to create vaccines in record time 8 , 9 , saving millions of lives.

Despite the benefits, research has shown that many scientists still do not share their research data 10 , 11 . Most disciplines operate without established data sharing or data management guidelines, relying on individual or institutional solutions for data management and sharing 10 . Exceptions include research associated with government funding, specific grant restrictions, or approval requirements from institutional review boards (IRBs).

Current scholarship on data management and data sharing within academic disciplines is fragmented. Few interdisciplinary studies exist, and those that do leave out important topics such as data librarianship and scientists’ perceptions of necessary repository features 12 , 13 , 14 , 15 . Meanwhile, single-discipline studies of scientific data management and repository utilization offer limited views and are dated 16 , 17 , 18 .

Applying the conceptual framework of Knowledge Infrastructures (KI) provides a basis for understanding the creation, flow, and maintenance of knowledge 19 . KI posits that seven interconnected entities make up the system: shared norms and values, artifacts, people, institutions, policies, routines and practices, and technology 19 . Examining some or all of these entities throws into relief inefficiencies and areas for growth in knowledge creation, sharing, and maintenance. In particular, prior research 11 points to repositories and human resources as key areas of investment to improve data management and increase sharing within the scientific community.

This study uses KI as a lens to focus on the individual scientist (i.e., people), their use of repositories (i.e., routines and practices/technology), their opinions of librarians and data sharing (i.e., norms and values), and their data management plans (i.e., policies/institutions). We explore the data management and sharing practices of scientists across five disciplines by answering the following research question: what features do scientists think are necessary to include in data repository systems and services to help them implement the data sharing and preservation parts of their data management plans (DMPs)? We found a consensus across disciplines on certain desired repository features and areas where scientists felt they needed help with data management. In contrast, we found that some discipline-specific issues require unique data management and repository usage. Also, there was little consensus among study participants on the perceived role of librarians in scientific data management.

This paper discusses the following. First, we provide an analysis of the results of our focus group research. Second, we discuss how our study advances research on understanding scientists’ perspectives on data sharing, data management, and repository needs and introduce a rubric for determining data repository appropriateness. Our rubric contributes to research on KI by providing an aid for scientists in selecting data repositories. Finally, we discuss our methods and research design.

Scientists’ data practices

Participants across all the focus groups indicated having a DMP for at least one of their recent or current projects. Regarding data storage, some participants in four focus groups (atmospheric and earth science, chemistry, computer science, and neuroscience) used institutional repositories (IRs) for their data at some point in the data lifecycle, and five participants explicitly indicated use of IRs in their DMPs. The other popular choice, discussed in four focus groups (atmospheric and earth science, computer science, ecology, and neuroscience), was proprietary cloud storage (e.g., Dropbox, GitHub, and Google Drive). These users were concerned about file size limitations, costs, long-term preservation, data mining by the service providers, and the burden of managing a growing number of storage solutions.

Desired repository features

Data traceability

Participants across four focus groups (atmospheric and earth science, chemistry, ecology, and neuroscience) wanted repositories to track how their data were used after deposit, including how many researchers viewed, cited, and published based on the data they deposited. Participants also wanted repositories to track any changes to their data post-deposit. For example, they suggested creating a path for updating items after initial submission, and they wanted explicit versioning so that users are clearly informed of changes to materials over time. Relatedly, participants wanted notification systems that tell depositors and users when new versions or derivative works based on their data become available, and that tell depositors when their data have been viewed, cited, or included in a publication.
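The traceability features participants described (explicit versions, usage counts, and notifications to subscribers) can be pictured as one repository record. The Python sketch below is a minimal illustration under stated assumptions: `DatasetRecord`, its fields, and the event names are hypothetical and do not correspond to any particular repository platform.

```python
from dataclasses import dataclass, field
from typing import Callable

# A listener receives (event, detail); in a real system this might send an email.
Listener = Callable[[str, str], None]


@dataclass
class DatasetRecord:
    """Hypothetical repository record with explicit versions and subscribers."""
    dataset_id: str
    versions: list = field(default_factory=list)  # change notes, oldest first
    counters: dict = field(
        default_factory=lambda: {"view": 0, "citation": 0, "publication": 0}
    )
    listeners: list = field(default_factory=list)

    def subscribe(self, listener: Listener) -> None:
        self.listeners.append(listener)

    def _notify(self, event: str, detail: str) -> None:
        for listener in self.listeners:
            listener(event, detail)

    def add_version(self, change_note: str) -> int:
        """Record a post-deposit update as a new numbered version and notify subscribers."""
        self.versions.append(change_note)
        number = len(self.versions)
        self._notify("new_version", f"{self.dataset_id} v{number}: {change_note}")
        return number

    def record_use(self, kind: str) -> None:
        """Count a view, citation, or publication based on the dataset and notify subscribers."""
        if kind not in self.counters:
            raise ValueError(f"unknown use type: {kind}")
        self.counters[kind] += 1
        self._notify(kind, self.dataset_id)
```

Keeping every version as an appended change note, rather than overwriting the record, is what lets users see how the materials changed over time.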

Metadata control

Participants across three focus groups (atmospheric and earth science, chemistry, and neuroscience) discussed wanting high quality metadata within repositories. Some argued for automated metadata creation when uploading their data into repositories to save time and provide at least some level of description of their data (e.g., P1, P4, Chemistry). Within their own projects and in utilizing repositories, participants wanted help with metadata quality control issues. Participants within atmospheric and earth science who frequently created or interacted with complex files wanted expanded types of metadata (e.g., greater spatial metadata for geographic information system (GIS) data). Atmospheric and earth scientists, chemists, and neuroscientists wanted greater searchability and machine readability of data and entities within datasets housed in repositories, specifically the ability to find a variable by multiple search parameters.
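Two of the requests above, automated metadata creation on upload and finding a variable by several search parameters at once, can be illustrated together. The Python sketch below assumes a plain CSV upload and derives only variable names and a row count. The `region` field and the file names are hypothetical examples of depositor-supplied information, not a real repository schema.

```python
import csv
import io


def describe_csv(name: str, text: str) -> dict:
    """Derive minimal descriptive metadata from a CSV file's contents at upload time."""
    reader = csv.DictReader(io.StringIO(text))
    rows = list(reader)  # each data row becomes a dict keyed by the header
    return {
        "name": name,
        "variables": list(reader.fieldnames or []),
        "row_count": len(rows),
    }


def _matches(record: dict, key: str, wanted) -> bool:
    # "variable" searches the extracted variable list; any other key must match exactly.
    if key == "variable":
        return wanted in record.get("variables", [])
    return record.get(key) == wanted


def search(records: list, **criteria) -> list:
    """Return the names of records that satisfy every search parameter."""
    return [r["name"] for r in records
            if all(_matches(r, key, wanted) for key, wanted in criteria.items())]


station = describe_csv("station_temps.csv", "site,date,temperature\nA,2020-01-01,3.5\n")
station["region"] = "arctic"  # hypothetical depositor-supplied field
lab = describe_csv("lab_runs.csv", "sample,temperature,ph\n1,21.0,7.1\n2,22.5,6.9\n")
lab["region"] = "none"

print(search([station, lab], variable="temperature", region="arctic"))  # ['station_temps.csv']
```

Automatically extracted fields give every deposit at least a baseline description, while depositor-supplied fields (such as richer spatial metadata) are what make multi-parameter searches selective.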

Data use restrictions

Participants across all five focus groups agreed that repositories need to clearly explain what a researcher can and cannot do with a dataset. For example, participants thought repositories should clearly state on every dataset whether researchers can: base new research on the data, publish based on the data, and use the data for business purposes. Participants stated current data restrictions can be confusing to those not acquainted with legal principles. For example, one data professional (P2, Chemistry) explained that researchers often mislabeled their datasets with ill-suited licenses. Participants commonly reported using Open Access or Creative Commons, but articulated the necessity of having the option for restrictive or proprietary licenses, although most had not used such licenses.

Some participants had used embargoes and others never had. Most viewed embargoes as “a necessary evil,” provided that they are limited to a few years after repository submission or last only until the time of publication. Participants did not think it was fair to repository staff or potential data reusers for any data to be embargoed in perpetuity.
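The data use restrictions and the embargo limits described above can be expressed as a small, explicit record attached to each dataset. The Python sketch below rests on two assumptions. First, the three permission flags mirror the three questions participants listed; they are not a license. Second, the two-year cap is an arbitrary stand-in for “a few years.” It reads participants’ limit as “at publication or at the cap, whichever comes first,” which is one possible interpretation.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional

# Assumed cap: participants said "a few years", not a specific number.
MAX_EMBARGO_YEARS = 2


@dataclass(frozen=True)
class UseTerms:
    """Plain-language answers to the three questions participants wanted on every dataset."""
    new_research: bool
    publication: bool
    business_use: bool

    def summary(self) -> str:
        answer = {True: "yes", False: "no"}
        return (f"Base new research on the data: {answer[self.new_research]}; "
                f"publish based on the data: {answer[self.publication]}; "
                f"use for business purposes: {answer[self.business_use]}")


def embargo_release(deposited: date, published: Optional[date] = None) -> date:
    """Lift the embargo at publication or at the cap, whichever comes first."""
    try:
        cap = deposited.replace(year=deposited.year + MAX_EMBARGO_YEARS)
    except ValueError:
        # A 29 February deposit date has no counterpart in a non-leap year.
        cap = deposited.replace(year=deposited.year + MAX_EMBARGO_YEARS, day=28)
    return cap if published is None else min(cap, published)
```

Computing the release date from the deposit date, rather than storing an open-ended flag, rules out embargoes in perpetuity by construction.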

Stable infrastructure

Participants across two focus groups (atmospheric and earth science, and chemistry) expressed concern about the long-term stability of their data in repositories. Some stated that their fear of a repository not being able to provide long-term preservation of their data led them to seek out and utilize alternative storage solutions. Others expected repositories to commit to the future of their data and have satisfactory funding structures to fulfill their stated missions. Participants described stable repository infrastructure in terms of updating data files (i.e., versioning) and formats over time and ensuring their usability.

Security

Participants across four focus groups (atmospheric and earth science, chemistry, computer science, and neuroscience) wanted their data to be secure. They feared that lax security could compromise their data and, for embargoed data, enable others to “scoop” the research before depositors could publish on it. Those handling confidential, sensitive, or personally identifiable information were most concerned about security breaches. In their view, a breach could cost them the trust of current and future study participants and make it harder for them and future researchers to recruit participants in the long term. It would also put them out of compliance with their IRBs’ mandates.

Desired help with aspects of data management

Help with metadata standardization and quality control

Participants across four focus groups (atmospheric and earth science, chemistry, ecology, and neuroscience) discussed wanting help with metadata standardization, including quality control for metadata associated with their datasets, to help fulfill their DMPs while enhancing data searchability and discoverability.
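One form this help could take is an automated check that runs before a deposit is accepted. The Python sketch below assumes a hypothetical minimal profile: the required field names, the 10-word minimum description, and the date rule are illustrative placeholders. A real repository would validate against its own metadata schema.

```python
import re

# Hypothetical minimal profile; a real repository would apply its own schema.
REQUIRED_FIELDS = ("title", "creator", "description", "license", "keywords")
MIN_DESCRIPTION_WORDS = 10  # assumed threshold to discourage bare-minimum descriptions
ISO_DATE = re.compile(r"\d{4}-\d{2}-\d{2}")  # checks the YYYY-MM-DD shape only


def check_metadata(record: dict) -> list:
    """Return a list of problems; an empty list means the record passes these checks."""
    problems = []
    for name in REQUIRED_FIELDS:
        value = record.get(name)
        if not value or (isinstance(value, str) and not value.strip()):
            problems.append(f"missing required field: {name}")

    description = record.get("description")
    if isinstance(description, str) and description.strip():
        if len(description.split()) < MIN_DESCRIPTION_WORDS:
            problems.append(f"description has fewer than {MIN_DESCRIPTION_WORDS} words")

    date_value = record.get("date")
    if date_value is not None and not ISO_DATE.fullmatch(str(date_value)):
        problems.append("date is not in YYYY-MM-DD form")
    return problems
```

Returning readable messages instead of a single pass/fail tells depositors exactly what to add, which speaks to the “did not know how” problem more directly than an empty form does.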

Help with verification of deleted data when necessary

Our university-affiliated participants were particularly concerned about verifiable deletion of data when necessary to comply with their IRBs. Participants expressed concern about their newer graduate students’ capacity and follow-through on deleting sensitive data that their predecessors (i.e., graduate students who graduated before study completion) collected before they started school. In this scenario, failure to delete sensitive data is a serious breach of IRB policy, which can lead to the data being compromised and/or revocation of permission to conduct future research. Participants who worked with sensitive information frequently (e.g., passwords in computer science and medical information in neuroscience) cited this as a concern.
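One modest way to make deletion verifiable is to keep an audit trail. For each file, the record shows what was deleted (by content hash), who deleted it, when, and whether the file was confirmed absent afterwards. The Python sketch below illustrates that idea under a loud caveat. It removes a single file through the file system only. It does not securely erase the underlying storage, and it cannot reach copies held elsewhere, such as backups, personal laptops, or cloud sync folders. The function names and log format are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Hash a file in chunks so large datasets need not fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(1 << 20), b""):  # 1 MiB chunks
            digest.update(chunk)
    return digest.hexdigest()


def delete_with_record(path: Path, log_path: Path, operator: str) -> dict:
    """Delete one file and append a JSON line recording what was deleted, when, and by whom.

    Caveat: unlink() removes the directory entry only. It does not overwrite the
    storage, and it cannot reach copies elsewhere (backups, laptops, cloud sync).
    """
    fingerprint = sha256_of(path)
    path.unlink()
    entry = {
        "file": str(path),
        "sha256": fingerprint,
        "deleted_by": operator,
        "deleted_at": datetime.now(timezone.utc).isoformat(),
        "verified_absent": not path.exists(),
    }
    with log_path.open("a", encoding="utf-8") as log:
        log.write(json.dumps(entry) + "\n")
    return entry
```

An append-only log like this would let a supervising investigator confirm that a departing student’s sensitive files were removed, without relying on memory or informal handoffs.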

Need for data management training

Participants across four focus groups (atmospheric and earth science, chemistry, ecology, and neuroscience) mentioned the need for additional training in data management. Participants stated they were unaware of the number of discipline-specific repositories that were currently available until their peers or librarians shared this information. Consequently, several participants suggested training sessions to raise awareness about these repositories within their disciplines. Participants were also concerned about what they perceived as a limited amount of training available for graduate students and new researchers on data management tools. They described current efforts that they were aware of as either training new workers/students on simpler tools or conducting training “piecemeal” on advanced data management tools, both of which they perceived as limiting project productivity. No participants in the computer science focus group mentioned the need for additional technical or informational training in data management.

Knowledge of existing data management principles and practices

Results were mixed on participants’ knowledge about the FAIR Guiding Principles for Scientific Data Management and Stewardship 20 . Twelve participants across all five focus groups knew about the FAIR principles, while ten across four disciplines (chemistry, computer science, ecology, and neuroscience) did not know about them. Those who were familiar mentioned challenges with applying the FAIR principles to large and multimodal datasets (e.g., P4, Neuroscience).

Role of librarians in data management

Participants across two focus groups (atmospheric and earth science and chemistry) did not think librarians should have a role in their data management for two reasons. First, they thought their data were too technical or specialized for librarians to meaningfully contribute to their management (e.g., P3, Chemistry). Second, they assumed librarians were too busy to help with data management. In their view, librarians were already stretched too thin with greater priorities related to addressing the “modern era of information overload” to be concerned with managing their data (e.g., P6, Chemistry).

In contrast, other participants across all five focus groups thought librarians could play a role in scientific data management and sharing by providing assistance with publication, literature searches, patents, copyright searches, management of data mandates, embargo enforcement, information literacy, and metadata standardization. The two largest areas of agreement for participants who indicated a role for librarians were the more traditional area of assistance with information research and search help (e.g., P5, Atmospheric and Earth Science) as well as data management (e.g., P4, Neuroscience).

This study contributes to the research literature on scientists’ perspectives on data management, repositories, and librarians. Additionally, our study presents a rubric based on the perceived importance of repository features by our participants as a decision-support tool to enable the selection of data repositories based on scientists’ data management and sharing needs and preferences.

Our results suggest several ways to improve KI focused on research data management and sharing. For example, participants in the atmospheric and earth science, ecology, and neuroscience disciplines who said they needed help with metadata also wanted greater searchability of metadata in repositories. However, poor metadata searchability may ultimately result from poor metadata quality control by data producers during data creation and/or deposit. Repositories can provide guidelines for quality control, but in many cases they cannot make data producers follow them (or follow them well). This may be an example of KI entities affecting each other, with routines and practices constraining what the corresponding repository’s technology can offer. These metadata regulation issues are consistent with prior research 12 , 18 , 21 , 22 , 23 , 24 , 25 .

The data integrity challenges researchers needed assistance with were developing data standards and building employees’ technical skills. Both issues connect to data integrity and trust development, which are vital for data sharing and reuse 1 , 2 . The need for training on data management topics is consistent with prior research 25 , 26 , 27 . Within metadata standards development, this study’s results point to a discipline-specific call from atmospheric and earth science participants for repositories to integrate GIS data for discoverability. Sixty percent of participants in this subject area expressed a desire for such metadata additions. Our results point to the need for standardized, more descriptive, and quality-controlled metadata in repositories, highlighting the metadata problem faced by open access initiatives. Open access repositories have significant metadata issues, especially between repositories, which limit their searchability 28 , 29 . Future research on creating standards and corresponding guides to encourage better metadata creation by dataset originators may advance description and open access efforts.

Our findings are consistent with predictions from prior research about the policies, routines, and practices of data storage during and after scientists’ studies. For example, prior research predicted that use of cloud storage solutions would increase over time 15 , and our participants’ reliance on cloud storage reflects this. Additionally, our study confirms overall low IR usage within disciplines 14 and between disciplines 12 , 13 , 14 , with a slight increase in IR utilization over time 13 . Future research on DMP use and the integration of repositories into DMPs may contribute to the KI entity of policy, possibly leading to better-formed data, more shared data, and increased data integrity.

Applying the people aspect of KI to our dataset, we found that our participants did not have a consensus on the role of librarians in data management and sharing. To them, librarians' roles appeared largely ad hoc and dependent on individual institutions and librarians. Their articulations of these roles varied broadly, including teaching data management skills, implementing data standards, helping with legal aspects (e.g., rights management), and resolving technical preservation issues that concern scientists 30 . Interestingly, participants framed librarian roles in data management as a dichotomy between help with technical issues (e.g., programming skills) and traditional librarianship skills (e.g., literature search and journal access). Whether or not these assumptions are accurate, scientists' assumptions about librarians' roles likely affect how they use librarians and libraries for data management and sharing. Exploring scientists' perceptions of librarians' roles may provide the insight needed to lay the groundwork for collaboration between scientists and librarians on, for example, improving dataset integrity 31 and increasing dataset usage 32 , which would help justify the costs associated with making data open.

Finally, examining the routines, practices, norms, and technologies used by researchers regarding repositories has revealed both an appreciation of open data as a concept and a lack of provision of open data by some of the same scientists who think open data is a good idea. This reluctance to provide open data may stem from a perceived lack of the repository features discussed above in the section Desired Repository Features, in whichever repositories scientists had considered using in the past. Consequently, to encourage greater and more effective repository utilization by scientists across disciplines, we present a repository evaluation rubric based on the desired repository features for which we found empirical support in our study (see Supplementary Table 1 ).

The intended users of this rubric are scientists, librarians, and repository managers. Scientists can use our rubric to compare the relative merits of repositories based on their needs and to consider which features they deem important. Librarians providing data consultations may use our rubric when helping their patrons. Repository managers can use our rubric to evaluate their repositories and services, as a decision-support tool for deciding which areas to improve, including which features to add to their repositories. The purpose of our rubric is to aid repository selection and the critical analysis of available repositories while encouraging repositories to provide the features that we found scientists want. As a corollary, we hope to encourage an increase in data deposit by scientists, thereby increasing research opportunities to advance the studied academic disciplines 2 , 3 , 4 , 5 . This is particularly important for scientists in developing countries, who may need to rely more on existing datasets for cost-effectiveness 6 . Moreover, by encouraging data deposit, our rubric may increase research integrity by making data available for experts to check 1 , 2 .

Future studies could conduct comparable research with scientists from similar and different disciplines, and with scientists from countries at different levels of development than those we studied here, and then compare the desired repository features they identify with those for which we gathered empirical support. Such comparisons would help assess the generalizability and appropriateness of our instrument.

We produced a convenience sample for our study by browsing the participant lists of major conferences in each discipline: AGU Annual Meetings for atmospheric and earth science, American Chemical Society for chemistry, SOUPS'19 and SOUPS'20 for computer science, Society for Freshwater Science Annual Meetings for ecology, and Neuroscience'19 and Neuroscience'20 for neuroscience. From these participant lists, we randomly selected individuals to receive recruitment emails inviting them to participate in our study. Snowball sampling yielded a few additional participants, and a few others were recruited through informal knowledge networks in online communities (e.g., subject-specific Discord groups). All professionals were vetted for credentials (e.g., master's and/or doctoral degrees in their disciplines) before inclusion.

Our study participants came from a variety of educational and workplace backgrounds. Table 1 lists our study participants by subject discipline, occupation, and affiliation. Selection criteria required participants to self-identify as a scientist from one of the five subject domains under investigation and to hold, or be in the process of obtaining, a graduate-level degree (e.g., active enrollment in a graduate program) in a particular scientific discipline. For each scientific discipline, we sought individuals from separate institutional affiliations, subdiscipline research backgrounds and interests, and career stages to encourage a diversity of opinions and to ensure that certain data metrics would not be artificially skewed (e.g., multiple chemistry participants who actively work together on projects or at the same institution would likely answer our questions similarly). All participants currently work and reside in developed western countries. Most are from the United States, with two individuals (one in chemistry and one in neuroscience) living in developed countries in western Europe. Participants ranged from researchers primarily focused on experimentation, to professors combining research with teaching responsibilities, to professionals providing services or guidance to researchers (e.g., a data manager for a lab). The majority were mid-career, with a few early- and late-career participants. Most were affiliated with universities; however, some were government-affiliated or worked at private, for-profit enterprises.

We conducted focus groups via Zoom video-conferencing software between April and August 2021. After introductions and the collection of basic demographic information (e.g., education, work experience, and research interests), we asked participants questions about their data, past and present research projects, data management, DMPs, the aspects of data management they would like help with, whether they think libraries can help, and data sharing. We used these questions to lead into a discussion about the FAIR principles and the features they considered necessary in data repository systems and services to help them implement the data sharing and preservation parts of their DMPs. Specifically, we asked participants about their expectations for file size acceptance, licensing, embargo periods, data discoverability, and reuse. Each focus group lasted approximately an hour and a half. Participants received $50 electronic Amazon gift cards as incentives for their participation. The Indiana University Human Subjects Office approved this study (IRB Study #1907150522). Informed consent was obtained from all participants.

We transcribed the focus group recordings and analyzed the transcripts in MAXQDA, a qualitative data analysis software package. To apply thematic analysis to our dataset, which is publicly available in figshare 34 , we followed the steps outlined in the literature on analyzing focus group data 33 : (1) familiarization, (2) generating codes, (3) constructing themes, (4) revising and (5) defining themes, and (6) producing the report.

Limitations

While using focus groups as a data collection methodology had many benefits for us, including unscripted interactions between participants, the ability to ask follow-up questions in the moment, and the opportunity to ask open-ended questions that elicit an in-depth understanding of the complex and individualized topic of scientists' data management practices 35 , 36 , 37 , it also had some disadvantages. Our overall sample size was small, which may limit the generalizability and repeatability of our results 36 . However, the sizes of our focus groups are consistent with those of focus groups conducted in prior research on similar topics 13 , 38 . Despite these limitations, we argue that the benefits of the knowledge gained from our inquiry outweigh the potential drawbacks.

Data availability

The dataset generated and analyzed during the current study is available in figshare, https://doi.org/10.6084/m9.figshare.19493060.v1 34 .

Code availability

No custom code was used to generate or process the data described in this manuscript.

Curty, R. G., Crowston, K., Specht, A., Grant, B. W. & Dalton, E. D. Attitudes and norms affecting scientists’ data reuse. PLOS ONE 12 , e0189288 (2017).

Vuong, Q. H. Author’s corner: open data, open review and open dialogue in making social sciences plausible. Scientific Data Updates http://blogs.nature.com/scientificdata/2017/12/12/author’s-corner-open-data-open-review-and-open-dialogue-in-making-social-sciences-plausible/ (2017).

Duke, C. S. & Porter, J. H. The ethics of data sharing and reuse in biology. BioScience 63 , 483–489 (2013).

Perrino, T. et al . Advancing science through collaborative data sharing and synthesis. Perspect Psychol Sci 8 , 433–444 (2013).

Pisani, E. et al . Beyond open data: realising the health benefits of sharing data. BMJ 355 , i5295 (2016).

Vuong, Q. H. The (ir)rational consideration of the cost of science in transition economies. Nat Hum Behav 2 , 5–5 (2018).

Ukwoma, S. C. & Dike, V. W. Academics’ attitudes toward the utilization of institutional repositories in Nigerian universities. portal 17 , 17–32 (2017).

Bagdasarian, N., Cross, G. B. & Fisher, D. Rapid publications risk the integrity of science in the era of COVID-19. BMC Med 18 , 192 (2020).

Vuong, Q. H. et al . Covid-19 vaccines production and societal immunization under the serendipity-mindsponge-3D knowledge management theory and conceptual framework. Humanit Soc Sci Commun 9 , 22 (2022).

Bezuidenhout, L. To share or not to share: incentivizing data sharing in life science communities. Developing World Bioeth 19 , 18–24 (2019).

Borgman, C. L. Big Data, Little Data, No Data: Scholarship in the Networked World . (The MIT Press, 2016).

Akers, K. G. & Doty, J. Disciplinary differences in faculty research data management practices and perspectives. IJDC 8 , 5–26 (2013).

Cragin, M. H., Palmer, C. L., Carlson, J. R. & Witt, M. Data sharing, small science and institutional repositories. Phil. Trans. R. Soc. A. 368 , 4023–4038 (2010).

Pryor, G. Attitudes and aspirations in a diverse world: the Project StORe perspective on scientific repositories. IJDC 2 , 135–144 (2008).

Weller, T. & Monroe-Gulick, A. Understanding methodological and disciplinary differences in the data practices of academic researchers. Library Hi Tech 32 , 467–482 (2014).

Borgman, C. L., Wallis, J. C. & Enyedy, N. Little science confronts the data deluge: habitat ecology, embedded sensor networks, and digital libraries. Int J Digit Libr 7 , 17–30 (2007).

Cragin, M. H. & Shankar, K. Scientific data collections and distributed collective practice. Comput Supported Coop Work 15 , 185–204 (2006).

Polydoratou, P. Use and linkage of source and output repositories and the expectations of the chemistry research community about their use. in Digital Libraries: Achievements, Challenges and Opportunities (eds. Sugimoto, S., Hunter, J., Rauber, A. & Morishima, A.) vol. 4312, 429–438 (Springer Berlin Heidelberg, 2006).

Edwards, P. N. A Vast Machine: Computer Models, Climate Data, and the Politics of Global Warming . (The MIT Press, 2013).

Wilkinson, M. D. et al . The FAIR Guiding Principles for scientific data management and stewardship. Sci Data 3 , 160018 (2016).

Gil, Y. et al . Toward the geoscience paper of the future: best practices for documenting and sharing research from data to software to provenance. Earth and Space Science 3 , 388–415 (2016).

Tenopir, C. et al . Data sharing by scientists: practices and perceptions. PLOS ONE 6 , e21101 (2011).

Tenopir, C. et al . Changes in data sharing and data reuse practices and perceptions among scientists worldwide. PLOS ONE 10 , e0134826 (2015).

Waide, R. B., Brunt, J. W. & Servilla, M. S. Demystifying the landscape of ecological data repositories in the United States. BioScience 67 , 1044–1051 (2017).

Whitmire, A. L., Boock, M. & Sutton, S. C. Variability in academic research data management practices: Implications for data services development from a faculty survey. Program: Electronic Library and Information Systems 49 , 382–407 (2015).