11 Tips For Writing a Dissertation Data Analysis

Since the evolution of the fourth industrial revolution – the Digital World; lots of data have surrounded us. There are terabytes of data around us or in data centers that need to be processed and used. The data needs to be appropriately analyzed to process it, and Dissertation data analysis forms its basis. If data analysis is valid and free from errors, the research outcomes will be reliable and lead to a successful dissertation.

Considering the complexity of many data analysis projects, it becomes challenging to get precise results if analysts are not familiar with data analysis tools and tests properly. The analysis is a time-taking process that starts with collecting valid and relevant data and ends with the demonstration of error-free results.

So, in today’s topic, we will cover the need to analyze data, dissertation data analysis, and mainly the tips for writing an outstanding data analysis dissertation. If you are a doctoral student and plan to perform dissertation data analysis on your data, make sure that you give this article a thorough read for the best tips!

What is Data Analysis in Dissertation?

Dissertation Data Analysis is the process of understanding, gathering, compiling, and processing a large amount of data. Then identifying common patterns in responses and critically examining facts and figures to find the rationale behind those outcomes.

Even f you have the data collected and compiled in the form of facts and figures, it is not enough for proving your research outcomes. There is still a need to apply dissertation data analysis on your data; to use it in the dissertation. It provides scientific support to the thesis and conclusion of the research.

Data Analysis Tools

There are plenty of indicative tests used to analyze data and infer relevant results for the discussion part. Following are some tests used to perform analysis of data leading to a scientific conclusion:

11 Most Useful Tips for Dissertation Data Analysis

Doctoral students need to perform dissertation data analysis and then dissertation to receive their degree. Many Ph.D. students find it hard to do dissertation data analysis because they are not trained in it.

1. Dissertation Data Analysis Services

The first tip applies to those students who can afford to look for help with their dissertation data analysis work. It’s a viable option, and it can help with time management and with building the other elements of the dissertation with much detail.

Dissertation Analysis services are professional services that help doctoral students with all the basics of their dissertation work, from planning, research and clarification, methodology, dissertation data analysis and review, literature review, and final powerpoint presentation.

One great reference for dissertation data analysis professional services is Statistics Solutions , they’ve been around for over 22 years helping students succeed in their dissertation work. You can find the link to their website here .

For a proper dissertation data analysis, the student should have a clear understanding and statistical knowledge. Through this knowledge and experience, a student can perform dissertation analysis on their own.

Following are some helpful tips for writing a splendid dissertation data analysis:

2. Relevance of Collected Data

If the data is irrelevant and not appropriate, you might get distracted from the point of focus. To show the reader that you can critically solve the problem, make sure that you write a theoretical proposition regarding the selection and analysis of data.

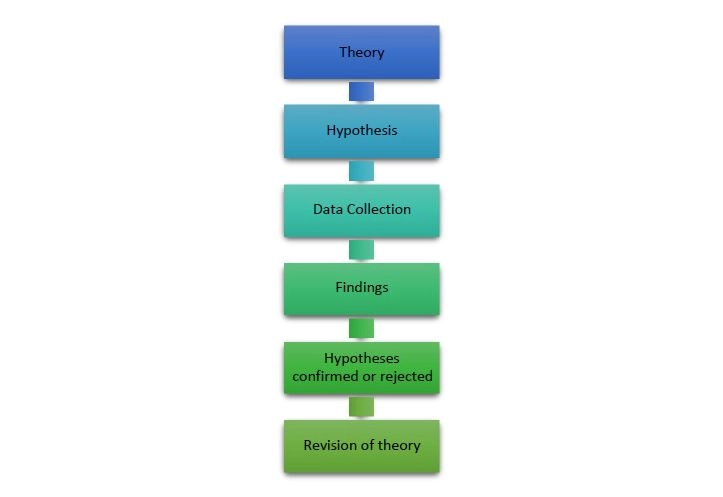

3. Data Analysis

For analysis, it is crucial to use such methods that fit best with the types of data collected and the research objectives. Elaborate on these methods and the ones that justify your data collection methods thoroughly. Make sure to make the reader believe that you did not choose your method randomly. Instead, you arrived at it after critical analysis and prolonged research.

On the other hand, quantitative analysis refers to the analysis and interpretation of facts and figures – to build reasoning behind the advent of primary findings. An assessment of the main results and the literature review plays a pivotal role in qualitative and quantitative analysis.

The overall objective of data analysis is to detect patterns and inclinations in data and then present the outcomes implicitly. It helps in providing a solid foundation for critical conclusions and assisting the researcher to complete the dissertation proposal.

4. Qualitative Data Analysis

Qualitative data refers to data that does not involve numbers. You are required to carry out an analysis of the data collected through experiments, focus groups, and interviews. This can be a time-taking process because it requires iterative examination and sometimes demanding the application of hermeneutics. Note that using qualitative technique doesn’t only mean generating good outcomes but to unveil more profound knowledge that can be transferrable.

Presenting qualitative data analysis in a dissertation can also be a challenging task. It contains longer and more detailed responses. Placing such comprehensive data coherently in one chapter of the dissertation can be difficult due to two reasons. Firstly, we cannot figure out clearly which data to include and which one to exclude. Secondly, unlike quantitative data, it becomes problematic to present data in figures and tables. Making information condensed into a visual representation is not possible. As a writer, it is of essence to address both of these challenges.

Qualitative Data Analysis Methods

Following are the methods used to perform quantitative data analysis.

- Deductive Method

This method involves analyzing qualitative data based on an argument that a researcher already defines. It’s a comparatively easy approach to analyze data. It is suitable for the researcher with a fair idea about the responses they are likely to receive from the questionnaires.

- Inductive Method

In this method, the researcher analyzes the data not based on any predefined rules. It is a time-taking process used by students who have very little knowledge of the research phenomenon.

5. Quantitative Data Analysis

Quantitative data contains facts and figures obtained from scientific research and requires extensive statistical analysis. After collection and analysis, you will be able to conclude. Generic outcomes can be accepted beyond the sample by assuming that it is representative – one of the preliminary checkpoints to carry out in your analysis to a larger group. This method is also referred to as the “scientific method”, gaining its roots from natural sciences.

The Presentation of quantitative data depends on the domain to which it is being presented. It is beneficial to consider your audience while writing your findings. Quantitative data for hard sciences might require numeric inputs and statistics. As for natural sciences , such comprehensive analysis is not required.

Quantitative Analysis Methods

Following are some of the methods used to perform quantitative data analysis.

- Trend analysis: This corresponds to a statistical analysis approach to look at the trend of quantitative data collected over a considerable period.

- Cross-tabulation: This method uses a tabula way to draw readings among data sets in research.

- Conjoint analysis : Quantitative data analysis method that can collect and analyze advanced measures. These measures provide a thorough vision about purchasing decisions and the most importantly, marked parameters.

- TURF analysis: This approach assesses the total market reach of a service or product or a mix of both.

- Gap analysis: It utilizes the side-by-side matrix to portray quantitative data, which captures the difference between the actual and expected performance.

- Text analysis: In this method, innovative tools enumerate open-ended data into easily understandable data.

6. Data Presentation Tools

Since large volumes of data need to be represented, it becomes a difficult task to present such an amount of data in coherent ways. To resolve this issue, consider all the available choices you have, such as tables, charts, diagrams, and graphs.

Tables help in presenting both qualitative and quantitative data concisely. While presenting data, always keep your reader in mind. Anything clear to you may not be apparent to your reader. So, constantly rethink whether your data presentation method is understandable to someone less conversant with your research and findings. If the answer is “No”, you may need to rethink your Presentation.

7. Include Appendix or Addendum

After presenting a large amount of data, your dissertation analysis part might get messy and look disorganized. Also, you would not be cutting down or excluding the data you spent days and months collecting. To avoid this, you should include an appendix part.

The data you find hard to arrange within the text, include that in the appendix part of a dissertation . And place questionnaires, copies of focus groups and interviews, and data sheets in the appendix. On the other hand, one must put the statistical analysis and sayings quoted by interviewees within the dissertation.

8. Thoroughness of Data

It is a common misconception that the data presented is self-explanatory. Most of the students provide the data and quotes and think that it is enough and explaining everything. It is not sufficient. Rather than just quoting everything, you should analyze and identify which data you will use to approve or disapprove your standpoints.

Thoroughly demonstrate the ideas and critically analyze each perspective taking care of the points where errors can occur. Always make sure to discuss the anomalies and strengths of your data to add credibility to your research.

9. Discussing Data

Discussion of data involves elaborating the dimensions to classify patterns, themes, and trends in presented data. In addition, to balancing, also take theoretical interpretations into account. Discuss the reliability of your data by assessing their effect and significance. Do not hide the anomalies. While using interviews to discuss the data, make sure you use relevant quotes to develop a strong rationale.

It also involves answering what you are trying to do with the data and how you have structured your findings. Once you have presented the results, the reader will be looking for interpretation. Hence, it is essential to deliver the understanding as soon as you have submitted your data.

10. Findings and Results

Findings refer to the facts derived after the analysis of collected data. These outcomes should be stated; clearly, their statements should tightly support your objective and provide logical reasoning and scientific backing to your point. This part comprises of majority part of the dissertation.

In the finding part, you should tell the reader what they are looking for. There should be no suspense for the reader as it would divert their attention. State your findings clearly and concisely so that they can get the idea of what is more to come in your dissertation.

11. Connection with Literature Review

At the ending of your data analysis in the dissertation, make sure to compare your data with other published research. In this way, you can identify the points of differences and agreements. Check the consistency of your findings if they meet your expectations—lookup for bottleneck position. Analyze and discuss the reasons behind it. Identify the key themes, gaps, and the relation of your findings with the literature review. In short, you should link your data with your research question, and the questions should form a basis for literature.

The Role of Data Analytics at The Senior Management Level

From small and medium-sized businesses to Fortune 500 conglomerates, the success of a modern business is now increasingly tied to how the company implements its data infrastructure and data-based decision-making. According

The Decision-Making Model Explained (In Plain Terms)

Any form of the systematic decision-making process is better enhanced with data. But making sense of big data or even small data analysis when venturing into a decision-making process might

13 Reasons Why Data Is Important in Decision Making

Wrapping Up

Writing data analysis in the dissertation involves dedication, and its implementations demand sound knowledge and proper planning. Choosing your topic, gathering relevant data, analyzing it, presenting your data and findings correctly, discussing the results, connecting with the literature and conclusions are milestones in it. Among these checkpoints, the Data analysis stage is most important and requires a lot of keenness.

In this article, we thoroughly looked at the tips that prove valuable for writing a data analysis in a dissertation. Make sure to give this article a thorough read before you write data analysis in the dissertation leading to the successful future of your research.

Oxbridge Essays. Top 10 Tips for Writing a Dissertation Data Analysis.

Emidio Amadebai

As an IT Engineer, who is passionate about learning and sharing. I have worked and learned quite a bit from Data Engineers, Data Analysts, Business Analysts, and Key Decision Makers almost for the past 5 years. Interested in learning more about Data Science and How to leverage it for better decision-making in my business and hopefully help you do the same in yours.

Recent Posts

Causal vs Evidential Decision-making (How to Make Businesses More Effective)

In today’s fast-paced business landscape, it is crucial to make informed decisions to stay in the competition which makes it important to understand the concept of the different characteristics and...

Bootstrapping vs. Boosting

Over the past decade, the field of machine learning has witnessed remarkable advancements in predictive techniques and ensemble learning methods. Ensemble techniques are very popular in machine...

- Cookies & Privacy

- GETTING STARTED

- Introduction

- FUNDAMENTALS

Getting to the main article

Choosing your route

Setting research questions/ hypotheses

Assessment point

Building the theoretical case

Setting your research strategy

Data collection

Data analysis

Data analysis techniques

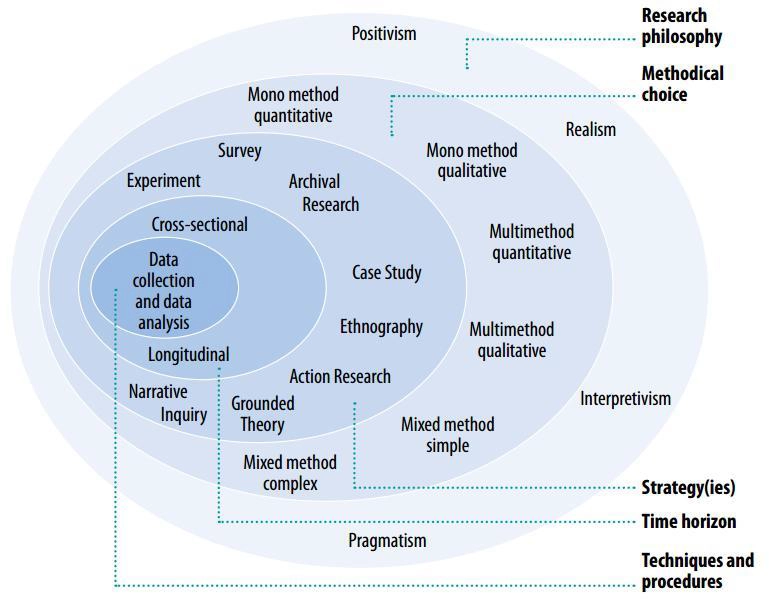

In STAGE NINE: Data analysis , we discuss the data you will have collected during STAGE EIGHT: Data collection . However, before you collect your data, having followed the research strategy you set out in this STAGE SIX , it is useful to think about the data analysis techniques you may apply to your data when it is collected.

The statistical tests that are appropriate for your dissertation will depend on (a) the research questions/hypotheses you have set, (b) the research design you are using, and (c) the nature of your data. You should already been clear about your research questions/hypotheses from STAGE THREE: Setting research questions and/or hypotheses , as well as knowing the goal of your research design from STEP TWO: Research design in this STAGE SIX: Setting your research strategy . These two pieces of information - your research questions/hypotheses and research design - will let you know, in principle , the statistical tests that may be appropriate to run on your data in order to answer your research questions.

We highlight the words in principle and may because the most appropriate statistical test to run on your data not only depend on your research questions/hypotheses and research design, but also the nature of your data . As you should have identified in STEP THREE: Research methods , and in the article, Types of variables , in the Fundamentals part of Lærd Dissertation, (a) not all data is the same, and (b) not all variables are measured in the same way (i.e., variables can be dichotomous, ordinal or continuous). In addition, not all data is normal , nor is the data when comparing groups necessarily equal , terms we explain in the Data Analysis section in the Fundamentals part of Lærd Dissertation. As a result, you might think that running a particular statistical test is correct at this point of setting your research strategy (e.g., a statistical test called a dependent t-test ), based on the research questions/hypotheses you have set, but when you collect your data (i.e., during STAGE EIGHT: Data collection ), the data may fail certain assumptions that are important to such a statistical test (i.e., normality and homogeneity of variance ). As a result, you have to run another statistical test (e.g., a Wilcoxon signed-rank test instead of a dependent t-test ).

At this stage in the dissertation process, it is important, or at the very least, useful to think about the data analysis techniques you may apply to your data when it is collected. We suggest that you do this for two reasons:

REASON A Supervisors sometimes expect you to know what statistical analysis you will perform at this stage of the dissertation process

This is not always the case, but if you have had to write a Dissertation Proposal or Ethics Proposal , there is sometimes an expectation that you explain the type of data analysis that you plan to carry out. An understanding of the data analysis that you will carry out on your data can also be an expected component of the Research Strategy chapter of your dissertation write-up (i.e., usually Chapter Three: Research Strategy ). Therefore, it is a good time to think about the data analysis process if you plan to start writing up this chapter at this stage.

REASON B It takes time to get your head around data analysis

When you come to analyse your data in STAGE NINE: Data analysis , you will need to think about (a) selecting the correct statistical tests to perform on your data, (b) running these tests on your data using a statistics package such as SPSS, and (c) learning how to interpret the output from such statistical tests so that you can answer your research questions or hypotheses. Whilst we show you how to do this for a wide range of scenarios in the in the Data Analysis section in the Fundamentals part of Lærd Dissertation, it can be a time consuming process. Unless you took an advanced statistics module/option as part of your degree (i.e., not just an introductory course to statistics, which are often taught in undergraduate and master?s degrees), it can take time to get your head around data analysis. Starting this process at this stage (i.e., STAGE SIX: Research strategy ), rather than waiting until you finish collecting your data (i.e., STAGE EIGHT: Data collection ) is a sensible approach.

Final thoughts...

Setting the research strategy for your dissertation required you to describe, explain and justify the research paradigm, quantitative research design, research method(s), sampling strategy, and approach towards research ethics and data analysis that you plan to follow, as well as determine how you will ensure the research quality of your findings so that you can effectively answer your research questions/hypotheses. However, from a practical perspective, just remember that the main goal of STAGE SIX: Research strategy is to have a clear research strategy that you can implement (i.e., operationalize ). After all, if you are unable to clearly follow your plan and carry out your research in the field, you will struggle to answer your research questions/hypotheses. Once you are sure that you have a clear plan, it is a good idea to take a step back, speak with your supervisor, and assess where you are before moving on to collect data. Therefore, when you are ready, proceed to STAGE SEVEN: Assessment point .

- Open access

- Published: 06 January 2022

The use of Big Data Analytics in healthcare

- Kornelia Batko ORCID: orcid.org/0000-0001-6561-3826 1 &

- Andrzej Ślęzak 2

Journal of Big Data volume 9 , Article number: 3 ( 2022 ) Cite this article

74k Accesses

108 Citations

28 Altmetric

Metrics details

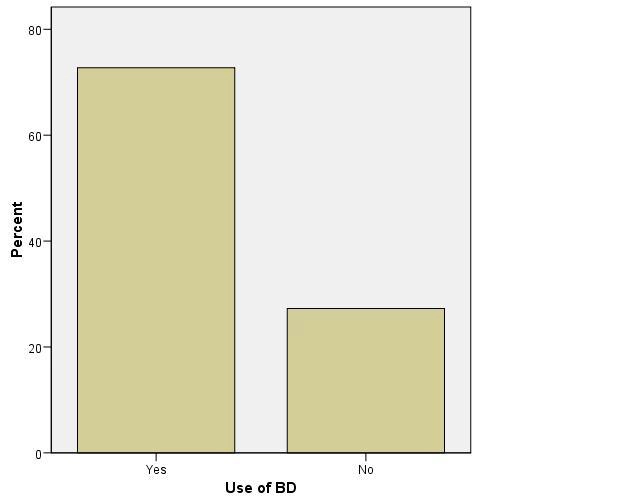

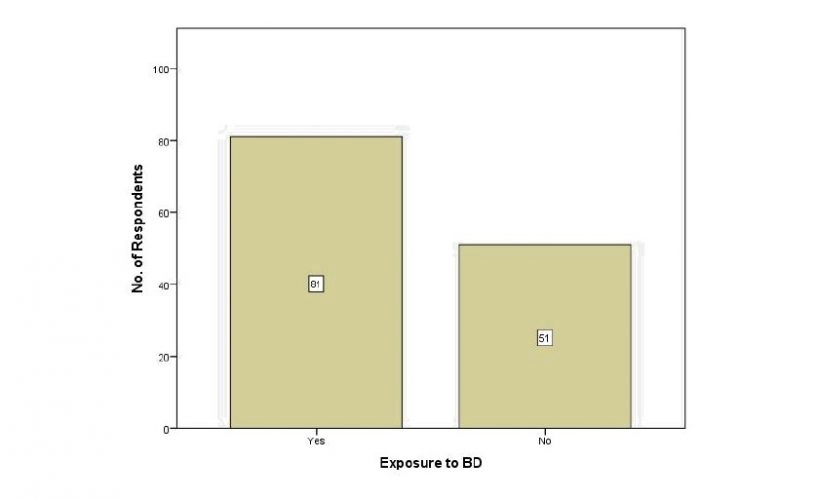

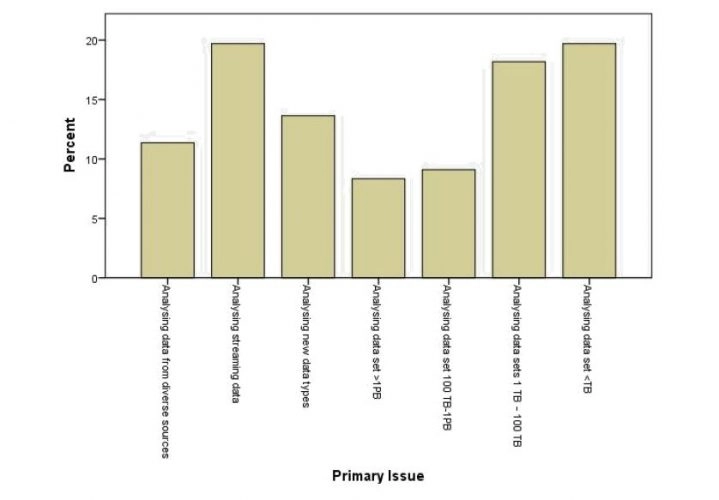

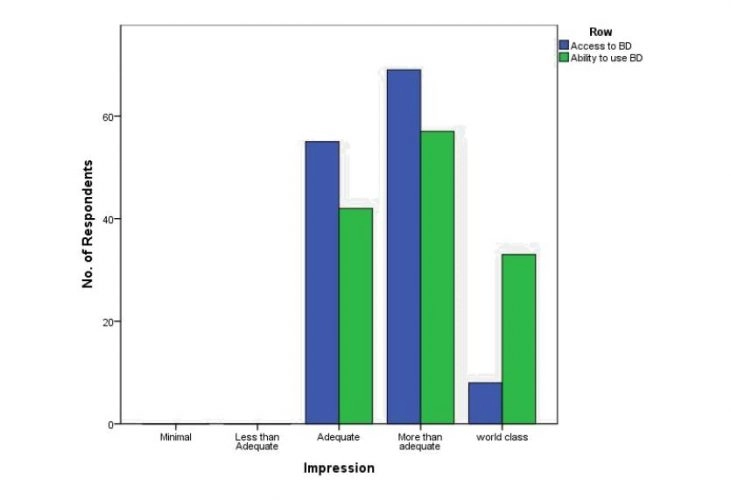

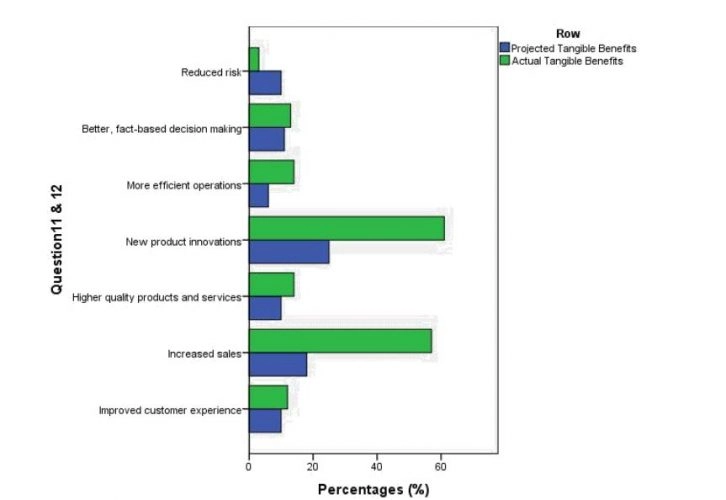

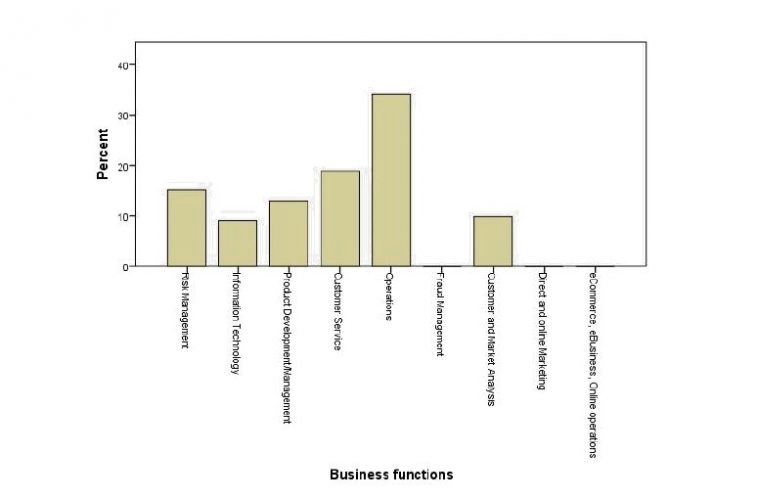

The introduction of Big Data Analytics (BDA) in healthcare will allow to use new technologies both in treatment of patients and health management. The paper aims at analyzing the possibilities of using Big Data Analytics in healthcare. The research is based on a critical analysis of the literature, as well as the presentation of selected results of direct research on the use of Big Data Analytics in medical facilities. The direct research was carried out based on research questionnaire and conducted on a sample of 217 medical facilities in Poland. Literature studies have shown that the use of Big Data Analytics can bring many benefits to medical facilities, while direct research has shown that medical facilities in Poland are moving towards data-based healthcare because they use structured and unstructured data, reach for analytics in the administrative, business and clinical area. The research positively confirmed that medical facilities are working on both structural data and unstructured data. The following kinds and sources of data can be distinguished: from databases, transaction data, unstructured content of emails and documents, data from devices and sensors. However, the use of data from social media is lower as in their activity they reach for analytics, not only in the administrative and business but also in the clinical area. It clearly shows that the decisions made in medical facilities are highly data-driven. The results of the study confirm what has been analyzed in the literature that medical facilities are moving towards data-based healthcare, together with its benefits.

Introduction

The main contribution of this paper is to present an analytical overview of using structured and unstructured data (Big Data) analytics in medical facilities in Poland. Medical facilities use both structured and unstructured data in their practice. Structured data has a predetermined schema, it is extensive, freeform, and comes in variety of forms [ 27 ]. In contrast, unstructured data, referred to as Big Data (BD), does not fit into the typical data processing format. Big Data is a massive amount of data sets that cannot be stored, processed, or analyzed using traditional tools. It remains stored but not analyzed. Due to the lack of a well-defined schema, it is difficult to search and analyze such data and, therefore, it requires a specific technology and method to transform it into value [ 20 , 68 ]. Integrating data stored in both structured and unstructured formats can add significant value to an organization [ 27 ]. Organizations must approach unstructured data in a different way. Therefore, the potential is seen in Big Data Analytics (BDA). Big Data Analytics are techniques and tools used to analyze and extract information from Big Data. The results of Big Data analysis can be used to predict the future. They also help in creating trends about the past. When it comes to healthcare, it allows to analyze large datasets from thousands of patients, identifying clusters and correlation between datasets, as well as developing predictive models using data mining techniques [ 60 ].

This paper is the first study to consolidate and characterize the use of Big Data from different perspectives. The first part consists of a brief literature review of studies on Big Data (BD) and Big Data Analytics (BDA), while the second part presents results of direct research aimed at diagnosing the use of big data analyses in medical facilities in Poland.

Healthcare is a complex system with varied stakeholders: patients, doctors, hospitals, pharmaceutical companies and healthcare decision-makers. This sector is also limited by strict rules and regulations. However, worldwide one may observe a departure from the traditional doctor-patient approach. The doctor becomes a partner and the patient is involved in the therapeutic process [ 14 ]. Healthcare is no longer focused solely on the treatment of patients. The priority for decision-makers should be to promote proper health attitudes and prevent diseases that can be avoided [ 81 ]. This became visible and important especially during the Covid-19 pandemic [ 44 ].

The next challenges that healthcare will have to face is the growing number of elderly people and a decline in fertility. Fertility rates in the country are found below the reproductive minimum necessary to keep the population stable [ 10 ]. The reflection of both effects, namely the increase in age and lower fertility rates, are demographic load indicators, which is constantly growing. Forecasts show that providing healthcare in the form it is provided today will become impossible in the next 20 years [ 70 ]. It is especially visible now during the Covid-19 pandemic when healthcare faced quite a challenge related to the analysis of huge data amounts and the need to identify trends and predict the spread of the coronavirus. The pandemic showed it even more that patients should have access to information about their health condition, the possibility of digital analysis of this data and access to reliable medical support online. Health monitoring and cooperation with doctors in order to prevent diseases can actually revolutionize the healthcare system. One of the most important aspects of the change necessary in healthcare is putting the patient in the center of the system.

Technology is not enough to achieve these goals. Therefore, changes should be made not only at the technological level but also in the management and design of complete healthcare processes and what is more, they should affect the business models of service providers. The use of Big Data Analytics is becoming more and more common in enterprises [ 17 , 54 ]. However, medical enterprises still cannot keep up with the information needs of patients, clinicians, administrators and the creator’s policy. The adoption of a Big Data approach would allow the implementation of personalized and precise medicine based on personalized information, delivered in real time and tailored to individual patients.

To achieve this goal, it is necessary to implement systems that will be able to learn quickly about the data generated by people within clinical care and everyday life. This will enable data-driven decision making, receiving better personalized predictions about prognosis and responses to treatments; a deeper understanding of the complex factors and their interactions that influence health at the patient level, the health system and society, enhanced approaches to detecting safety problems with drugs and devices, as well as more effective methods of comparing prevention, diagnostic, and treatment options [ 40 ].

In the literature, there is a lot of research showing what opportunities can be offered to companies by big data analysis and what data can be analyzed. However, there are few studies showing how data analysis in the area of healthcare is performed, what data is used by medical facilities and what analyses and in which areas they carry out. This paper aims to fill this gap by presenting the results of research carried out in medical facilities in Poland. The goal is to analyze the possibilities of using Big Data Analytics in healthcare, especially in Polish conditions. In particular, the paper is aimed at determining what data is processed by medical facilities in Poland, what analyses they perform and in what areas, and how they assess their analytical maturity. In order to achieve this goal, a critical analysis of the literature was performed, and the direct research was based on a research questionnaire conducted on a sample of 217 medical facilities in Poland. It was hypothesized that medical facilities in Poland are working on both structured and unstructured data and moving towards data-based healthcare and its benefits. Examining the maturity of healthcare facilities in the use of Big Data and Big Data Analytics is crucial in determining the potential future benefits that the healthcare sector can gain from Big Data Analytics. There is also a pressing need to predicate whether, in the coming years, healthcare will be able to cope with the threats and challenges it faces.

This paper is divided into eight parts. The first is the introduction which provides background and the general problem statement of this research. In the second part, this paper discusses considerations on use of Big Data and Big Data Analytics in Healthcare, and then, in the third part, it moves on to challenges and potential benefits of using Big Data Analytics in healthcare. The next part involves the explanation of the proposed method. The result of direct research and discussion are presented in the fifth part, while the following part of the paper is the conclusion. The seventh part of the paper presents practical implications. The final section of the paper provides limitations and directions for future research.

Considerations on use Big Data and Big Data Analytics in the healthcare

In recent years one can observe a constantly increasing demand for solutions offering effective analytical tools. This trend is also noticeable in the analysis of large volumes of data (Big Data, BD). Organizations are looking for ways to use the power of Big Data to improve their decision making, competitive advantage or business performance [ 7 , 54 ]. Big Data is considered to offer potential solutions to public and private organizations, however, still not much is known about the outcome of the practical use of Big Data in different types of organizations [ 24 ].

As already mentioned, in recent years, healthcare management worldwide has been changed from a disease-centered model to a patient-centered model, even in value-based healthcare delivery model [ 68 ]. In order to meet the requirements of this model and provide effective patient-centered care, it is necessary to manage and analyze healthcare Big Data.

The issue often raised when it comes to the use of data in healthcare is the appropriate use of Big Data. Healthcare has always generated huge amounts of data and nowadays, the introduction of electronic medical records, as well as the huge amount of data sent by various types of sensors or generated by patients in social media causes data streams to constantly grow. Also, the medical industry generates significant amounts of data, including clinical records, medical images, genomic data and health behaviors. Proper use of the data will allow healthcare organizations to support clinical decision-making, disease surveillance, and public health management. The challenge posed by clinical data processing involves not only the quantity of data but also the difficulty in processing it.

In the literature one can find many different definitions of Big Data. This concept has evolved in recent years, however, it is still not clearly understood. Nevertheless, despite the range and differences in definitions, Big Data can be treated as a: large amount of digital data, large data sets, tool, technology or phenomenon (cultural or technological.

Big Data can be considered as massive and continually generated digital datasets that are produced via interactions with online technologies [ 53 ]. Big Data can be defined as datasets that are of such large sizes that they pose challenges in traditional storage and analysis techniques [ 28 ]. A similar opinion about Big Data was presented by Ohlhorst who sees Big Data as extremely large data sets, possible neither to manage nor to analyze with traditional data processing tools [ 57 ]. In his opinion, the bigger the data set, the more difficult it is to gain any value from it.

In turn, Knapp perceived Big Data as tools, processes and procedures that allow an organization to create, manipulate and manage very large data sets and storage facilities [ 38 ]. From this point of view, Big Data is identified as a tool to gather information from different databases and processes, allowing users to manage large amounts of data.

Similar perception of the term ‘Big Data’ is shown by Carter. According to him, Big Data technologies refer to a new generation of technologies and architectures, designed to economically extract value from very large volumes of a wide variety of data by enabling high velocity capture, discovery and/or analysis [ 13 ].

Jordan combines these two approaches by identifying Big Data as a complex system, as it needs data bases for data to be stored in, programs and tools to be managed, as well as expertise and personnel able to retrieve useful information and visualization to be understood [ 37 ].

Following the definition of Laney for Big Data, it can be state that: it is large amount of data generated in very fast motion and it contains a lot of content [ 43 ]. Such data comes from unstructured sources, such as stream of clicks on the web, social networks (Twitter, blogs, Facebook), video recordings from the shops, recording of calls in a call center, real time information from various kinds of sensors, RFID, GPS devices, mobile phones and other devices that identify and monitor something [ 8 ]. Big Data is a powerful digital data silo, raw, collected with all sorts of sources, unstructured and difficult, or even impossible, to analyze using conventional techniques used so far to relational databases.

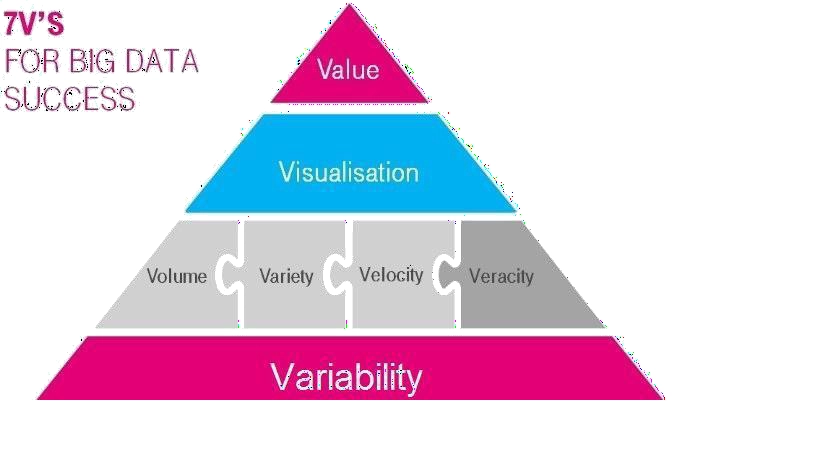

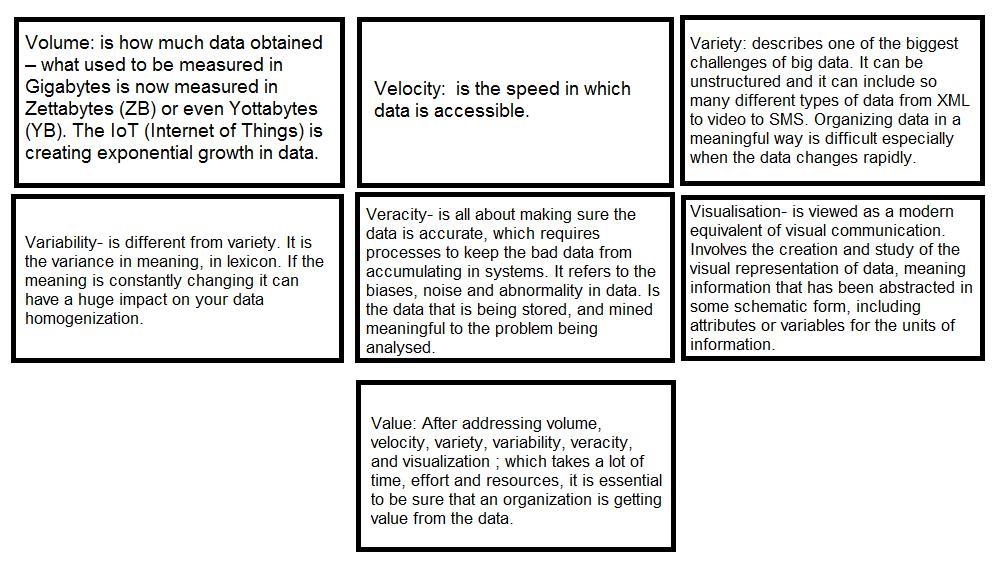

While describing Big Data, it cannot be overlooked that the term refers more to a phenomenon than to specific technology. Therefore, instead of defining this phenomenon, trying to describe them, more authors are describing Big Data by giving them characteristics included a collection of V’s related to its nature [ 2 , 3 , 23 , 25 , 58 ]:

Volume (refers to the amount of data and is one of the biggest challenges in Big Data Analytics),

Velocity (speed with which new data is generated, the challenge is to be able to manage data effectively and in real time),

Variety (heterogeneity of data, many different types of healthcare data, the challenge is to derive insights by looking at all available heterogenous data in a holistic manner),

Variability (inconsistency of data, the challenge is to correct the interpretation of data that can vary significantly depending on the context),

Veracity (how trustworthy the data is, quality of the data),

Visualization (ability to interpret data and resulting insights, challenging for Big Data due to its other features as described above).

Value (the goal of Big Data Analytics is to discover the hidden knowledge from huge amounts of data).

Big Data is defined as an information asset with high volume, velocity, and variety, which requires specific technology and method for its transformation into value [ 21 , 77 ]. Big Data is also a collection of information about high-volume, high volatility or high diversity, requiring new forms of processing in order to support decision-making, discovering new phenomena and process optimization [ 5 , 7 ]. Big Data is too large for traditional data-processing systems and software tools to capture, store, manage and analyze, therefore it requires new technologies [ 28 , 50 , 61 ] to manage (capture, aggregate, process) its volume, velocity and variety [ 9 ].

Undoubtedly, Big Data differs from the data sources used so far by organizations. Therefore, organizations must approach this type of unstructured data in a different way. First of all, organizations must start to see data as flows and not stocks—this entails the need to implement the so-called streaming analytics [ 48 ]. The mentioned features make it necessary to use new IT tools that allow the fullest use of new data [ 58 ]. The Big Data idea, inseparable from the huge increase in data available to various organizations or individuals, creates opportunities for access to valuable analyses, conclusions and enables making more accurate decisions [ 6 , 11 , 59 ].

The Big Data concept is constantly evolving and currently it does not focus on huge amounts of data, but rather on the process of creating value from this data [ 52 ]. Big Data is collected from various sources that have different data properties and are processed by different organizational units, resulting in creation of a Big Data chain [ 36 ]. The aim of the organizations is to manage, process and analyze Big Data. In the healthcare sector, Big Data streams consist of various types of data, namely [ 8 , 51 ]:

clinical data, i.e. data obtained from electronic medical records, data from hospital information systems, image centers, laboratories, pharmacies and other organizations providing health services, patient generated health data, physician’s free-text notes, genomic data, physiological monitoring data [ 4 ],

biometric data provided from various types of devices that monitor weight, pressure, glucose level, etc.,

financial data, constituting a full record of economic operations reflecting the conducted activity,

data from scientific research activities, i.e. results of research, including drug research, design of medical devices and new methods of treatment,

data provided by patients, including description of preferences, level of satisfaction, information from systems for self-monitoring of their activity: exercises, sleep, meals consumed, etc.

data from social media.

These data are provided not only by patients but also by organizations and institutions, as well as by various types of monitoring devices, sensors or instruments [ 16 ]. Data that has been generated so far in the healthcare sector is stored in both paper and digital form. Thus, the essence and the specificity of the process of Big Data analyses means that organizations need to face new technological and organizational challenges [ 67 ]. The healthcare sector has always generated huge amounts of data and this is connected, among others, with the need to store medical records of patients. However, the problem with Big Data in healthcare is not limited to an overwhelming volume but also an unprecedented diversity in terms of types, data formats and speed with which it should be analyzed in order to provide the necessary information on an ongoing basis [ 3 ]. It is also difficult to apply traditional tools and methods for management of unstructured data [ 67 ]. Due to the diversity and quantity of data sources that are growing all the time, advanced analytical tools and technologies, as well as Big Data analysis methods which can meet and exceed the possibilities of managing healthcare data, are needed [ 3 , 68 ].

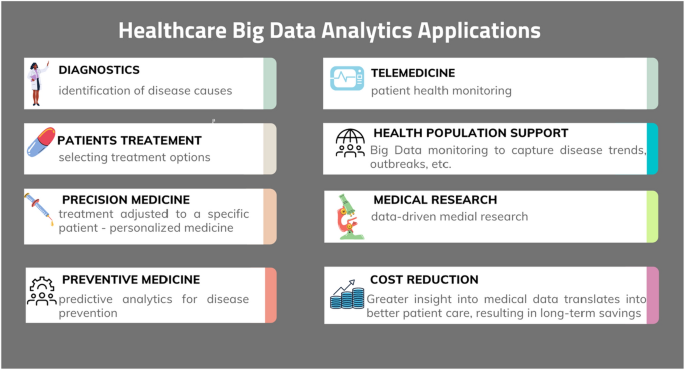

Therefore, the potential is seen in Big Data analyses, especially in the aspect of improving the quality of medical care, saving lives or reducing costs [ 30 ]. Extracting from this tangle of given association rules, patterns and trends will allow health service providers and other stakeholders in the healthcare sector to offer more accurate and more insightful diagnoses of patients, personalized treatment, monitoring of the patients, preventive medicine, support of medical research and health population, as well as better quality of medical services and patient care while, at the same time, the ability to reduce costs (Fig. 1 ).

(Source: Own elaboration)

Healthcare Big Data Analytics applications

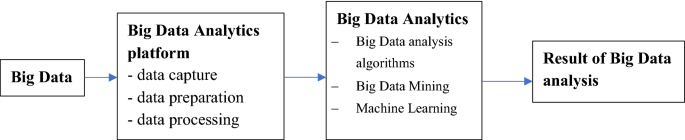

The main challenge with Big Data is how to handle such a large amount of information and use it to make data-driven decisions in plenty of areas [ 64 ]. In the context of healthcare data, another major challenge is to adjust big data storage, analysis, presentation of analysis results and inference basing on them in a clinical setting. Data analytics systems implemented in healthcare are designed to describe, integrate and present complex data in an appropriate way so that it can be understood better (Fig. 2 ). This would improve the efficiency of acquiring, storing, analyzing and visualizing big data from healthcare [ 71 ].

Process of Big Data Analytics

The result of data processing with the use of Big Data Analytics is appropriate data storytelling which may contribute to making decisions with both lower risk and data support. This, in turn, can benefit healthcare stakeholders. To take advantage of the potential massive amounts of data in healthcare and to ensure that the right intervention to the right patient is properly timed, personalized, and potentially beneficial to all components of the healthcare system such as the payer, patient, and management, analytics of large datasets must connect communities involved in data analytics and healthcare informatics [ 49 ]. Big Data Analytics can provide insight into clinical data and thus facilitate informed decision-making about the diagnosis and treatment of patients, prevention of diseases or others. Big Data Analytics can also improve the efficiency of healthcare organizations by realizing the data potential [ 3 , 62 ].

Big Data Analytics in medicine and healthcare refers to the integration and analysis of a large amount of complex heterogeneous data, such as various omics (genomics, epigenomics, transcriptomics, proteomics, metabolomics, interactomics, pharmacogenetics, deasomics), biomedical data, talemedicine data (sensors, medical equipment data) and electronic health records data [ 46 , 65 ].

When analyzing the phenomenon of Big Data in the healthcare sector, it should be noted that it can be considered from the point of view of three areas: epidemiological, clinical and business.

From a clinical point of view, the Big Data analysis aims to improve the health and condition of patients, enable long-term predictions about their health status and implementation of appropriate therapeutic procedures. Ultimately, the use of data analysis in medicine is to allow the adaptation of therapy to a specific patient, that is personalized medicine (precision, personalized medicine).

From an epidemiological point of view, it is desirable to obtain an accurate prognosis of morbidity in order to implement preventive programs in advance.

In the business context, Big Data analysis may enable offering personalized packages of commercial services or determining the probability of individual disease and infection occurrence. It is worth noting that Big Data means not only the collection and processing of data but, most of all, the inference and visualization of data necessary to obtain specific business benefits.

In order to introduce new management methods and new solutions in terms of effectiveness and transparency, it becomes necessary to make data more accessible, digital, searchable, as well as analyzed and visualized.

Erickson and Rothberg state that the information and data do not reveal their full value until insights are drawn from them. Data becomes useful when it enhances decision making and decision making is enhanced only when analytical techniques are used and an element of human interaction is applied [ 22 ].

Thus, healthcare has experienced much progress in usage and analysis of data. A large-scale digitalization and transparency in this sector is a key statement of almost all countries governments policies. For centuries, the treatment of patients was based on the judgment of doctors who made treatment decisions. In recent years, however, Evidence-Based Medicine has become more and more important as a result of it being related to the systematic analysis of clinical data and decision-making treatment based on the best available information [ 42 ]. In the healthcare sector, Big Data Analytics is expected to improve the quality of life and reduce operational costs [ 72 , 82 ]. Big Data Analytics enables organizations to improve and increase their understanding of the information contained in data. It also helps identify data that provides insightful insights for current as well as future decisions [ 28 ].

Big Data Analytics refers to technologies that are grounded mostly in data mining: text mining, web mining, process mining, audio and video analytics, statistical analysis, network analytics, social media analytics and web analytics [ 16 , 25 , 31 ]. Different data mining techniques can be applied on heterogeneous healthcare data sets, such as: anomaly detection, clustering, classification, association rules as well as summarization and visualization of those Big Data sets [ 65 ]. Modern data analytics techniques explore and leverage unique data characteristics even from high-speed data streams and sensor data [ 15 , 16 , 31 , 55 ]. Big Data can be used, for example, for better diagnosis in the context of comprehensive patient data, disease prevention and telemedicine (in particular when using real-time alerts for immediate care), monitoring patients at home, preventing unnecessary hospital visits, integrating medical imaging for a wider diagnosis, creating predictive analytics, reducing fraud and improving data security, better strategic planning and increasing patients’ involvement in their own health.

Big Data Analytics in healthcare can be divided into [ 33 , 73 , 74 ]:

descriptive analytics in healthcare is used to understand past and current healthcare decisions, converting data into useful information for understanding and analyzing healthcare decisions, outcomes and quality, as well as making informed decisions [ 33 ]. It can be used to create reports (i.e. about patients’ hospitalizations, physicians’ performance, utilization management), visualization, customized reports, drill down tables, or running queries on the basis of historical data.

predictive analytics operates on past performance in an effort to predict the future by examining historical or summarized health data, detecting patterns of relationships in these data, and then extrapolating these relationships to forecast. It can be used to i.e. predict the response of different patient groups to different drugs (dosages) or reactions (clinical trials), anticipate risk and find relationships in health data and detect hidden patterns [ 62 ]. In this way, it is possible to predict the epidemic spread, anticipate service contracts and plan healthcare resources. Predictive analytics is used in proper diagnosis and for appropriate treatments to be given to patients suffering from certain diseases [ 39 ].

prescriptive analytics—occurs when health problems involve too many choices or alternatives. It uses health and medical knowledge in addition to data or information. Prescriptive analytics is used in many areas of healthcare, including drug prescriptions and treatment alternatives. Personalized medicine and evidence-based medicine are both supported by prescriptive analytics.

discovery analytics—utilizes knowledge about knowledge to discover new “inventions” like drugs (drug discovery), previously unknown diseases and medical conditions, alternative treatments, etc.

Although the models and tools used in descriptive, predictive, prescriptive, and discovery analytics are different, many applications involve all four of them [ 62 ]. Big Data Analytics in healthcare can help enable personalized medicine by identifying optimal patient-specific treatments. This can influence the improvement of life standards, reduce waste of healthcare resources and save costs of healthcare [ 56 , 63 , 71 ]. The introduction of large data analysis gives new analytical possibilities in terms of scope, flexibility and visualization. Techniques such as data mining (computational pattern discovery process in large data sets) facilitate inductive reasoning and analysis of exploratory data, enabling scientists to identify data patterns that are independent of specific hypotheses. As a result, predictive analysis and real-time analysis becomes possible, making it easier for medical staff to start early treatments and reduce potential morbidity and mortality. In addition, document analysis, statistical modeling, discovering patterns and topics in document collections and data in the EHR, as well as an inductive approach can help identify and discover relationships between health phenomena.

Advanced analytical techniques can be used for a large amount of existing (but not yet analytical) data on patient health and related medical data to achieve a better understanding of the information and results obtained, as well as to design optimal clinical pathways [ 62 ]. Big Data Analytics in healthcare integrates analysis of several scientific areas such as bioinformatics, medical imaging, sensor informatics, medical informatics and health informatics [ 65 ]. Big Data Analytics in healthcare allows to analyze large datasets from thousands of patients, identifying clusters and correlation between datasets, as well as developing predictive models using data mining techniques [ 65 ]. Discussing all the techniques used for Big Data Analytics goes beyond the scope of a single article [ 25 ].

The success of Big Data analysis and its accuracy depend heavily on the tools and techniques used to analyze the ability to provide reliable, up-to-date and meaningful information to various stakeholders [ 12 ]. It is believed that the implementation of big data analytics by healthcare organizations could bring many benefits in the upcoming years, including lowering health care costs, better diagnosis and prediction of diseases and their spread, improving patient care and developing protocols to prevent re-hospitalization, optimizing staff, optimizing equipment, forecasting the need for hospital beds, operating rooms, treatments, and improving the drug supply chain [ 71 ].

Challenges and potential benefits of using Big Data Analytics in healthcare

Modern analytics gives possibilities not only to have insight in historical data, but also to have information necessary to generate insight into what may happen in the future. Even when it comes to prediction of evidence-based actions. The emphasis on reform has prompted payers and suppliers to pursue data analysis to reduce risk, detect fraud, improve efficiency and save lives. Everyone—payers, providers, even patients—are focusing on doing more with fewer resources. Thus, some areas in which enhanced data and analytics can yield the greatest results include various healthcare stakeholders (Table 1 ).

Healthcare organizations see the opportunity to grow through investments in Big Data Analytics. In recent years, by collecting medical data of patients, converting them into Big Data and applying appropriate algorithms, reliable information has been generated that helps patients, physicians and stakeholders in the health sector to identify values and opportunities [ 31 ]. It is worth noting that there are many changes and challenges in the structure of the healthcare sector. Digitization and effective use of Big Data in healthcare can bring benefits to every stakeholder in this sector. A single doctor would benefit the same as the entire healthcare system. Potential opportunities to achieve benefits and effects from Big Data in healthcare can be divided into four groups [ 8 ]:

Improving the quality of healthcare services:

assessment of diagnoses made by doctors and the manner of treatment of diseases indicated by them based on the decision support system working on Big Data collections,

detection of more effective, from a medical point of view, and more cost-effective ways to diagnose and treat patients,

analysis of large volumes of data to reach practical information useful for identifying needs, introducing new health services, preventing and overcoming crises,

prediction of the incidence of diseases,

detecting trends that lead to an improvement in health and lifestyle of the society,

analysis of the human genome for the introduction of personalized treatment.

Supporting the work of medical personnel

doctors’ comparison of current medical cases to cases from the past for better diagnosis and treatment adjustment,

detection of diseases at earlier stages when they can be more easily and quickly cured,

detecting epidemiological risks and improving control of pathogenic spots and reaction rates,

identification of patients who are predicted to have the highest risk of specific, life-threatening diseases by collating data on the history of the most common diseases, in healing people with reports entering insurance companies,

health management of each patient individually (personalized medicine) and health management of the whole society,

capturing and analyzing large amounts of data from hospitals and homes in real time, life monitoring devices to monitor safety and predict adverse events,

analysis of patient profiles to identify people for whom prevention should be applied, lifestyle change or preventive care approach,

the ability to predict the occurrence of specific diseases or worsening of patients’ results,

predicting disease progression and its determinants, estimating the risk of complications,

detecting drug interactions and their side effects.

Supporting scientific and research activity

supporting work on new drugs and clinical trials thanks to the possibility of analyzing “all data” instead of selecting a test sample,

the ability to identify patients with specific, biological features that will take part in specialized clinical trials,

selecting a group of patients for which the tested drug is likely to have the desired effect and no side effects,

using modeling and predictive analysis to design better drugs and devices.

Business and management

reduction of costs and counteracting abuse and counseling practices,

faster and more effective identification of incorrect or unauthorized financial operations in order to prevent abuse and eliminate errors,

increase in profitability by detecting patients generating high costs or identifying doctors whose work, procedures and treatment methods cost the most and offering them solutions that reduce the amount of money spent,

identification of unnecessary medical activities and procedures, e.g. duplicate tests.

According to research conducted by Wang, Kung and Byrd, Big Data Analytics benefits can be classified into five categories: IT infrastructure benefits (reducing system redundancy, avoiding unnecessary IT costs, transferring data quickly among healthcare IT systems, better use of healthcare systems, processing standardization among various healthcare IT systems, reducing IT maintenance costs regarding data storage), operational benefits (improving the quality and accuracy of clinical decisions, processing a large number of health records in seconds, reducing the time of patient travel, immediate access to clinical data to analyze, shortening the time of diagnostic test, reductions in surgery-related hospitalizations, exploring inconceivable new research avenues), organizational benefits (detecting interoperability problems much more quickly than traditional manual methods, improving cross-functional communication and collaboration among administrative staffs, researchers, clinicians and IT staffs, enabling data sharing with other institutions and adding new services, content sources and research partners), managerial benefits (gaining quick insights about changing healthcare trends in the market, providing members of the board and heads of department with sound decision-support information on the daily clinical setting, optimizing business growth-related decisions) and strategic benefits (providing a big picture view of treatment delivery for meeting future need, creating high competitive healthcare services) [ 73 ].

The above specification does not constitute a full list of potential areas of use of Big Data Analysis in healthcare because the possibilities of using analysis are practically unlimited. In addition, advanced analytical tools allow to analyze data from all possible sources and conduct cross-analyses to provide better data insights [ 26 ]. For example, a cross-analysis can refer to a combination of patient characteristics, as well as costs and care results that can help identify the best, in medical terms, and the most cost-effective treatment or treatments and this may allow a better adjustment of the service provider’s offer [ 62 ].

In turn, the analysis of patient profiles (e.g. segmentation and predictive modeling) allows identification of people who should be subject to prophylaxis, prevention or should change their lifestyle [ 8 ]. Shortened list of benefits for Big Data Analytics in healthcare is presented in paper [ 3 ] and consists of: better performance, day-to-day guides, detection of diseases in early stages, making predictive analytics, cost effectiveness, Evidence Based Medicine and effectiveness in patient treatment.

Summarizing, healthcare big data represents a huge potential for the transformation of healthcare: improvement of patients’ results, prediction of outbreaks of epidemics, valuable insights, avoidance of preventable diseases, reduction of the cost of healthcare delivery and improvement of the quality of life in general [ 1 ]. Big Data also generates many challenges such as difficulties in data capture, data storage, data analysis and data visualization [ 15 ]. The main challenges are connected with the issues of: data structure (Big Data should be user-friendly, transparent, and menu-driven but it is fragmented, dispersed, rarely standardized and difficult to aggregate and analyze), security (data security, privacy and sensitivity of healthcare data, there are significant concerns related to confidentiality), data standardization (data is stored in formats that are not compatible with all applications and technologies), storage and transfers (especially costs associated with securing, storing, and transferring unstructured data), managerial skills, such as data governance, lack of appropriate analytical skills and problems with Real-Time Analytics (health care is to be able to utilize Big Data in real time) [ 4 , 34 , 41 ].

The research is based on a critical analysis of the literature, as well as the presentation of selected results of direct research on the use of Big Data Analytics in medical facilities in Poland.

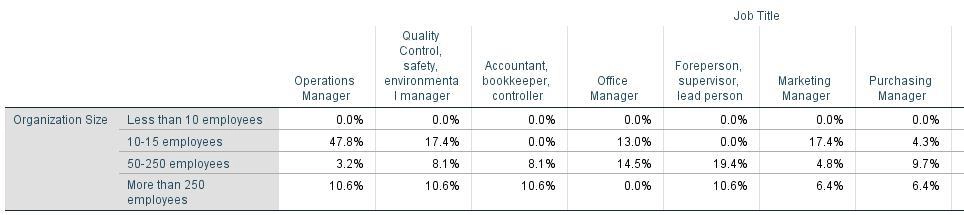

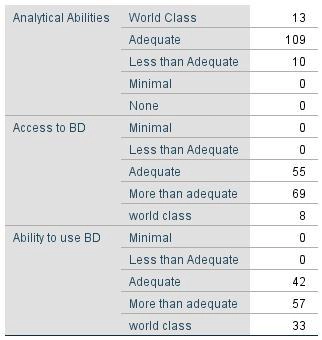

Presented research results are part of a larger questionnaire form on Big Data Analytics. The direct research was based on an interview questionnaire which contained 100 questions with 5-point Likert scale (1—strongly disagree, 2—I rather disagree, 3—I do not agree, nor disagree, 4—I rather agree, 5—I definitely agree) and 4 metrics questions. The study was conducted in December 2018 on a sample of 217 medical facilities (110 private, 107 public). The research was conducted by a specialized market research agency: Center for Research and Expertise of the University of Economics in Katowice.

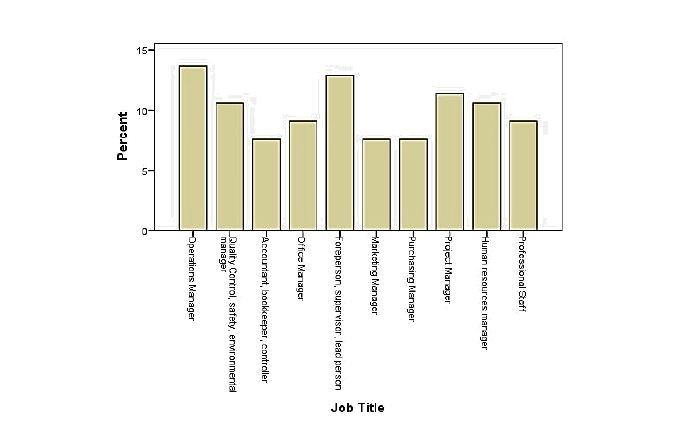

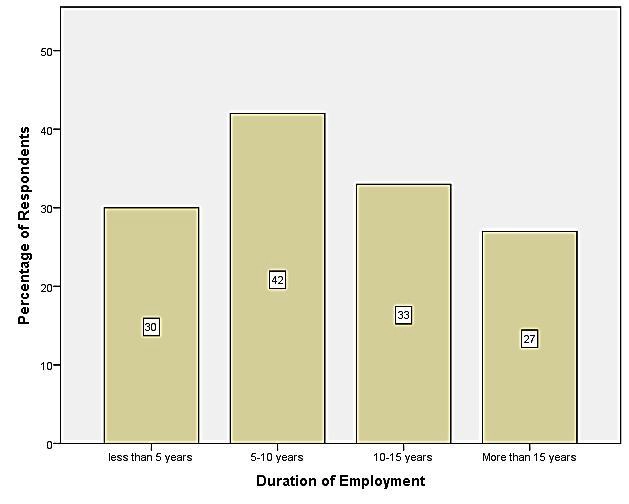

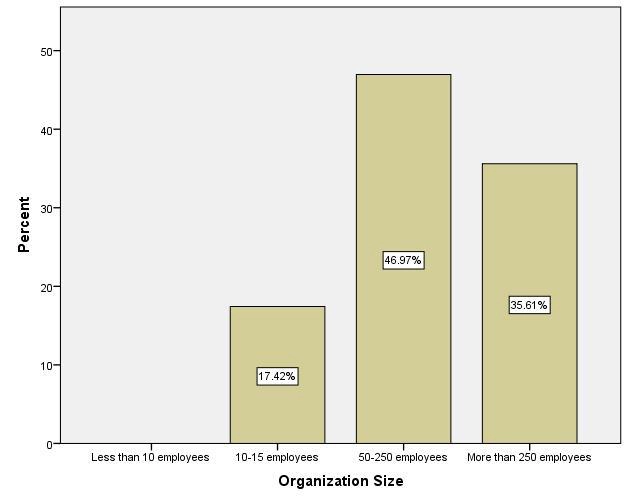

When it comes to direct research, the selected entities included entities financed from public sources—the National Health Fund (23.5%), and entities operating commercially (11.5%). In the surveyed group of entities, more than a half (64.9%) are hybrid financed, both from public and commercial sources. The diversity of the research sample also applies to the size of the entities, defined by the number of employees. Taking into account proportions of the surveyed entities, it should be noted that in the sector structure, medium-sized (10–50 employees—34% of the sample) and large (51–250 employees—27%) entities dominate. The research was of all-Poland nature, and the entities included in the research sample come from all of the voivodships. The largest group were entities from Łódzkie (32%), Śląskie (18%) and Mazowieckie (18%) voivodships, as these voivodships have the largest number of medical institutions. Other regions of the country were represented by single units. The selection of the research sample was random—layered. As part of medical facilities database, groups of private and public medical facilities have been identified and the ones to which the questionnaire was targeted were drawn from each of these groups. The analyses were performed using the GNU PSPP 0.10.2 software.

The aim of the study was to determine whether medical facilities in Poland use Big Data Analytics and if so, in which areas. Characteristics of the research sample is presented in Table 2 .

The research is non-exhaustive due to the incomplete and uneven regional distribution of the samples, overrepresented in three voivodeships (Łódzkie, Mazowieckie and Śląskie). The size of the research sample (217 entities) allows the authors of the paper to formulate specific conclusions on the use of Big Data in the process of its management.

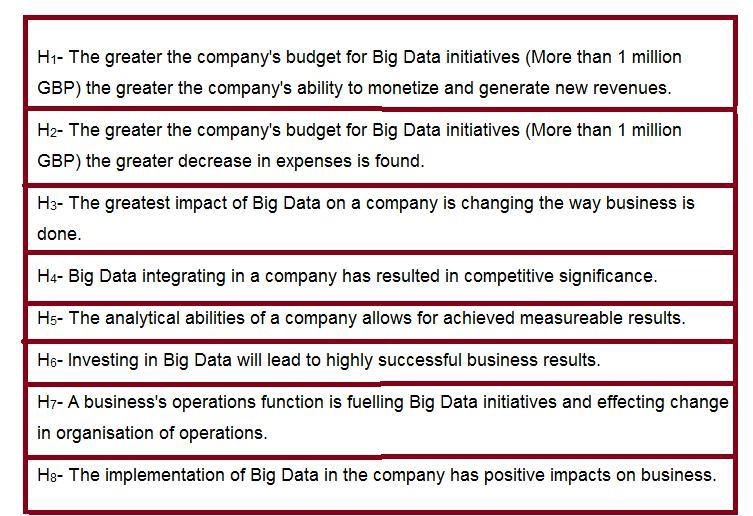

For the purpose of this paper, the following research hypotheses were formulated: (1) medical facilities in Poland are working on both structured and unstructured data (2) medical facilities in Poland are moving towards data-based healthcare and its benefits.

The paper poses the following research questions and statements that coincide with the selected questions from the research questionnaire:

From what sources do medical facilities obtain data? What types of data are used by the particular organization, whether structured or unstructured, and to what extent?

From what sources do medical facilities obtain data?

In which area organizations are using data and analytical systems (clinical or business)?

Is data analytics performed based on historical data or are predictive analyses also performed?

Determining whether administrative and medical staff receive complete, accurate and reliable data in a timely manner?

Determining whether real-time analyses are performed to support the particular organization’s activities.

Results and discussion

On the basis of the literature analysis and research study, a set of questions and statements related to the researched area was formulated. The results from the surveys show that medical facilities use a variety of data sources in their operations. These sources are both structured and unstructured data (Table 3 ).

According to the data provided by the respondents, considering the first statement made in the questionnaire, almost half of the medical institutions (47.58%) agreed that they rather collect and use structured data (e.g. databases and data warehouses, reports to external entities) and 10.57% entirely agree with this statement. As much as 23.35% of representatives of medical institutions stated “I agree or disagree”. Other medical facilities do not collect and use structured data (7.93%) and 6.17% strongly disagree with the first statement. Also, the median calculated based on the obtained results (median: 4), proves that medical facilities in Poland collect and use structured data (Table 4 ).

In turn, 28.19% of the medical institutions agreed that they rather collect and use unstructured data and as much as 9.25% entirely agree with this statement. The number of representatives of medical institutions that stated “I agree or disagree” was 27.31%. Other medical facilities do not collect and use structured data (17.18%) and 13.66% strongly disagree with the first statement. In the case of unstructured data the median is 3, which means that the collection and use of this type of data by medical facilities in Poland is lower.

In the further part of the analysis, it was checked whether the size of the medical facility and form of ownership have an impact on whether it analyzes unstructured data (Tables 4 and 5 ). In order to find this out, correlation coefficients were calculated.

Based on the calculations, it can be concluded that there is a small statistically monotonic correlation between the size of the medical facility and its collection and use of structured data (p < 0.001; τ = 0.16). This means that the use of structured data is slightly increasing in larger medical facilities. The size of the medical facility is more important according to use of unstructured data (p < 0.001; τ = 0.23) (Table 4 .).

To determine whether the form of medical facility ownership affects data collection, the Mann–Whitney U test was used. The calculations show that the form of ownership does not affect what data the organization collects and uses (Table 5 ).

Detailed information on the sources of from which medical facilities collect and use data is presented in the Table 6 .

The questionnaire results show that medical facilities are especially using information published in databases, reports to external units and transaction data, but they also use unstructured data from e-mails, medical devices, sensors, phone calls, audio and video data (Table 6 ). Data from social media, RFID and geolocation data are used to a small extent. Similar findings are concluded in the literature studies.

From the analysis of the answers given by the respondents, more than half of the medical facilities have integrated hospital system (HIS) implemented. As much as 43.61% use integrated hospital system and 16.30% use it extensively (Table 7 ). 19.38% of exanimated medical facilities do not use it at all. Moreover, most of the examined medical facilities (34.80% use it, 32.16% use extensively) conduct medical documentation in an electronic form, which gives an opportunity to use data analytics. Only 4.85% of medical facilities don’t use it at all.

Other problems that needed to be investigated were: whether medical facilities in Poland use data analytics? If so, in what form and in what areas? (Table 8 ). The analysis of answers given by the respondents about the potential of data analytics in medical facilities shows that a similar number of medical facilities use data analytics in administration and business (31.72% agreed with the statement no. 5 and 12.33% strongly agreed) as in the clinical area (33.04% agreed with the statement no. 6 and 12.33% strongly agreed). When considering decision-making issues, 35.24% agree with the statement "the organization uses data and analytical systems to support business decisions” and 8.37% of respondents strongly agree. Almost 40.09% agree with the statement that “the organization uses data and analytical systems to support clinical decisions (in the field of diagnostics and therapy)” and 15.42% of respondents strongly agree. Exanimated medical facilities use in their activity analytics based both on historical data (33.48% agree with statement 7 and 12.78% strongly agree) and predictive analytics (33.04% agrees with the statement number 8 and 15.86% strongly agree). Detailed results are presented in Table 8 .

Medical facilities focus on development in the field of data processing, as they confirm that they conduct analytical planning processes systematically and analyze new opportunities for strategic use of analytics in business and clinical activities (38.33% rather agree and 10.57% strongly agree with this statement). The situation is different with real-time data analysis, here, the situation is not so optimistic. Only 28.19% rather agree and 14.10% strongly agree with the statement that real-time analyses are performed to support an organization’s activities.

When considering whether a facility’s performance in the clinical area depends on the form of ownership, it can be concluded that taking the average and the Mann–Whitney U test depends. A higher degree of use of analyses in the clinical area can be observed in public institutions.

Whether a medical facility performs a descriptive or predictive analysis do not depend on the form of ownership (p > 0.05). It can be concluded that when analyzing the mean and median, they are higher in public facilities, than in private ones. What is more, the Mann–Whitney U test shows that these variables are dependent from each other (p < 0.05) (Table 9 ).

When considering whether a facility’s performance in the clinical area depends on its size, it can be concluded that taking the Kendall’s Tau (τ) it depends (p < 0.001; τ = 0.22), and the correlation is weak but statistically important. This means that the use of data and analytical systems to support clinical decisions (in the field of diagnostics and therapy) increases with the increase of size of the medical facility. A similar relationship, but even less powerful, can be found in the use of descriptive and predictive analyses (Table 10 ).

Considering the results of research in the area of analytical maturity of medical facilities, 8.81% of medical facilities stated that they are at the first level of maturity, i.e. an organization has developed analytical skills and does not perform analyses. As much as 13.66% of medical facilities confirmed that they have poor analytical skills, while 38.33% of the medical facility has located itself at level 3, meaning that “there is a lot to do in analytics”. On the other hand, 28.19% believe that analytical capabilities are well developed and 6.61% stated that analytics are at the highest level and the analytical capabilities are very well developed. Detailed data is presented in Table 11 . Average amounts to 3.11 and Median to 3.

The results of the research have enabled the formulation of following conclusions. Medical facilities in Poland are working on both structured and unstructured data. This data comes from databases, transactions, unstructured content of emails and documents, devices and sensors. However, the use of data from social media is smaller. In their activity, they reach for analytics in the administrative and business, as well as in the clinical area. Also, the decisions made are largely data-driven.

In summary, analysis of the literature that the benefits that medical facilities can get using Big Data Analytics in their activities relate primarily to patients, physicians and medical facilities. It can be confirmed that: patients will be better informed, will receive treatments that will work for them, will have prescribed medications that work for them and not be given unnecessary medications [ 78 ]. Physician roles will likely change to more of a consultant than decision maker. They will advise, warn, and help individual patients and have more time to form positive and lasting relationships with their patients in order to help people. Medical facilities will see changes as well, for example in fewer unnecessary hospitalizations, resulting initially in less revenue, but after the market adjusts, also the accomplishment [ 78 ]. The use of Big Data Analytics can literally revolutionize the way healthcare is practiced for better health and disease reduction.

The analysis of the latest data reveals that data analytics increase the accuracy of diagnoses. Physicians can use predictive algorithms to help them make more accurate diagnoses [ 45 ]. Moreover, it could be helpful in preventive medicine and public health because with early intervention, many diseases can be prevented or ameliorated [ 29 ]. Predictive analytics also allows to identify risk factors for a given patient, and with this knowledge patients will be able to change their lives what, in turn, may contribute to the fact that population disease patterns may dramatically change, resulting in savings in medical costs. Moreover, personalized medicine is the best solution for an individual patient seeking treatment. It can help doctors decide the exact treatments for those individuals. Better diagnoses and more targeted treatments will naturally lead to increases in good outcomes and fewer resources used, including doctors’ time.

The quantitative analysis of the research carried out and presented in this article made it possible to determine whether medical facilities in Poland use Big Data Analytics and if so, in which areas. Thanks to the results obtained it was possible to formulate the following conclusions. Medical facilities are working on both structured and unstructured data, which comes from databases, transactions, unstructured content of emails and documents, devices and sensors. According to analytics, they reach for analytics in the administrative and business, as well as in the clinical area. It clearly showed that the decisions made are largely data-driven. The results of the study confirm what has been analyzed in the literature. Medical facilities are moving towards data-based healthcare and its benefits.

In conclusion, Big Data Analytics has the potential for positive impact and global implications in healthcare. Future research on the use of Big Data in medical facilities will concern the definition of strategies adopted by medical facilities to promote and implement such solutions, as well as the benefits they gain from the use of Big Data analysis and how the perspectives in this area are seen.

Practical implications

This work sought to narrow the gap that exists in analyzing the possibility of using Big Data Analytics in healthcare. Showing how medical facilities in Poland are doing in this respect is an element that is part of global research carried out in this area, including [ 29 , 32 , 60 ].

Limitations and future directions

The research described in this article does not fully exhaust the questions related to the use of Big Data Analytics in Polish healthcare facilities. Only some of the dimensions characterizing the use of data by medical facilities in Poland have been examined. In order to get the full picture, it would be necessary to examine the results of using structured and unstructured data analytics in healthcare. Future research may examine the benefits that medical institutions achieve as a result of the analysis of structured and unstructured data in the clinical and management areas and what limitations they encounter in these areas. For this purpose, it is planned to conduct in-depth interviews with chosen medical facilities in Poland. These facilities could give additional data for empirical analyses based more on their suggestions. Further research should also include medical institutions from beyond the borders of Poland, enabling international comparative analyses.

Future research in the healthcare field has virtually endless possibilities. These regard the use of Big Data Analytics to diagnose specific conditions [ 47 , 66 , 69 , 76 ], propose an approach that can be used in other healthcare applications and create mechanisms to identify “patients like me” [ 75 , 80 ]. Big Data Analytics could also be used for studies related to the spread of pandemics, the efficacy of covid treatment [ 18 , 79 ], or psychology and psychiatry studies, e.g. emotion recognition [ 35 ].

Availability of data and materials

The datasets for this study are available on request to the corresponding author.

Abouelmehdi K, Beni-Hessane A, Khaloufi H. Big healthcare data: preserving security and privacy. J Big Data. 2018. https://doi.org/10.1186/s40537-017-0110-7 .

Article Google Scholar

Agrawal A, Choudhary A. Health services data: big data analytics for deriving predictive healthcare insights. Health Serv Eval. 2019. https://doi.org/10.1007/978-1-4899-7673-4_2-1 .

Al Mayahi S, Al-Badi A, Tarhini A. Exploring the potential benefits of big data analytics in providing smart healthcare. In: Miraz MH, Excell P, Ware A, Ali M, Soomro S, editors. Emerging technologies in computing—first international conference, iCETiC 2018, proceedings (Lecture Notes of the Institute for Computer Sciences, Social-Informatics and Telecommunications Engineering, LNICST). Cham: Springer; 2018. p. 247–58. https://doi.org/10.1007/978-3-319-95450-9_21 .

Bainbridge M. Big data challenges for clinical and precision medicine. In: Househ M, Kushniruk A, Borycki E, editors. Big data, big challenges: a healthcare perspective: background, issues, solutions and research directions. Cham: Springer; 2019. p. 17–31.

Google Scholar

Bartuś K, Batko K, Lorek P. Business intelligence systems: barriers during implementation. In: Jabłoński M, editor. Strategic performance management new concept and contemporary trends. New York: Nova Science Publishers; 2017. p. 299–327. ISBN: 978-1-53612-681-5.

Bartuś K, Batko K, Lorek P. Diagnoza wykorzystania big data w organizacjach-wybrane wyniki badań. Informatyka Ekonomiczna. 2017;3(45):9–20.

Bartuś K, Batko K, Lorek P. Wykorzystanie rozwiązań business intelligence, competitive intelligence i big data w przedsiębiorstwach województwa śląskiego. Przegląd Organizacji. 2018;2:33–9.

Batko K. Możliwości wykorzystania Big Data w ochronie zdrowia. Roczniki Kolegium Analiz Ekonomicznych. 2016;42:267–82.

Bi Z, Cochran D. Big data analytics with applications. J Manag Anal. 2014;1(4):249–65. https://doi.org/10.1080/23270012.2014.992985 .

Boerma T, Requejo J, Victora CG, Amouzou A, Asha G, Agyepong I, Borghi J. Countdown to 2030: tracking progress towards universal coverage for reproductive, maternal, newborn, and child health. Lancet. 2018;391(10129):1538–48.

Bollier D, Firestone CM. The promise and peril of big data. Washington, D.C: Aspen Institute, Communications and Society Program; 2010. p. 1–66.

Bose R. Competitive intelligence process and tools for intelligence analysis. Ind Manag Data Syst. 2008;108(4):510–28.

Carter P. Big data analytics: future architectures, skills and roadmaps for the CIO: in white paper, IDC sponsored by SAS. 2011. p. 1–16.

Castro EM, Van Regenmortel T, Vanhaecht K, Sermeus W, Van Hecke A. Patient empowerment, patient participation and patient-centeredness in hospital care: a concept analysis based on a literature review. Patient Educ Couns. 2016;99(12):1923–39.

Chen H, Chiang RH, Storey VC. Business intelligence and analytics: from big data to big impact. MIS Q. 2012;36(4):1165–88.

Chen CP, Zhang CY. Data-intensive applications, challenges, techniques and technologies: a survey on big data. Inf Sci. 2014;275:314–47.

Chomiak-Orsa I, Mrozek B. Główne perspektywy wykorzystania big data w mediach społecznościowych. Informatyka Ekonomiczna. 2017;3(45):44–54.

Corsi A, de Souza FF, Pagani RN, et al. Big data analytics as a tool for fighting pandemics: a systematic review of literature. J Ambient Intell Hum Comput. 2021;12:9163–80. https://doi.org/10.1007/s12652-020-02617-4 .

Davenport TH, Harris JG. Competing on analytics, the new science of winning. Boston: Harvard Business School Publishing Corporation; 2007.

Davenport TH. Big data at work: dispelling the myths, uncovering the opportunities. Boston: Harvard Business School Publishing; 2014.

De Cnudde S, Martens D. Loyal to your city? A data mining analysis of a public service loyalty program. Decis Support Syst. 2015;73:74–84.

Erickson S, Rothberg H. Data, information, and intelligence. In: Rodriguez E, editor. The analytics process. Boca Raton: Auerbach Publications; 2017. p. 111–26.

Fang H, Zhang Z, Wang CJ, Daneshmand M, Wang C, Wang H. A survey of big data research. IEEE Netw. 2015;29(5):6–9.