Research Design | Step-by-Step Guide with Examples

Published on 5 May 2022 by Shona McCombes. Revised on 20 March 2023.

A research design is a strategy for answering your research question using empirical data. Creating a research design means making decisions about:

- Your overall aims and approach

- The type of research design you’ll use

- Your sampling methods or criteria for selecting subjects

- Your data collection methods

- The procedures you’ll follow to collect data

- Your data analysis methods

A well-planned research design helps ensure that your methods match your research aims and that you use the right kind of analysis for your data.

Table of contents

- Step 1: Consider your aims and approach

- Step 2: Choose a type of research design

- Step 3: Identify your population and sampling method

- Step 4: Choose your data collection methods

- Step 5: Plan your data collection procedures

- Step 6: Decide on your data analysis strategies

- Frequently asked questions

Step 1: Consider your aims and approach

Before you can start designing your research, you should already have a clear idea of the research question you want to investigate.

There are many different ways you could go about answering this question. Your research design choices should be driven by your aims and priorities – start by thinking carefully about what you want to achieve.

The first choice you need to make is whether you’ll take a qualitative or quantitative approach.

Qualitative research designs tend to be more flexible and inductive, allowing you to adjust your approach based on what you find throughout the research process.

Quantitative research designs tend to be more fixed and deductive, with variables and hypotheses clearly defined in advance of data collection.

It’s also possible to use a mixed methods design that integrates aspects of both approaches. By combining qualitative and quantitative insights, you can gain a more complete picture of the problem you’re studying and strengthen the credibility of your conclusions.

Practical and ethical considerations when designing research

As well as scientific considerations, you need to think practically when designing your research. If your research involves people or animals, you also need to consider research ethics.

- How much time do you have to collect data and write up the research?

- Will you be able to gain access to the data you need (e.g., by travelling to a specific location or contacting specific people)?

- Do you have the necessary research skills (e.g., statistical analysis or interview techniques)?

- Will you need ethical approval?

At each stage of the research design process, make sure that your choices are practically feasible.

Step 2: Choose a type of research design

Within both qualitative and quantitative approaches, there are several types of research design to choose from. Each type provides a framework for the overall shape of your research.

Types of quantitative research designs

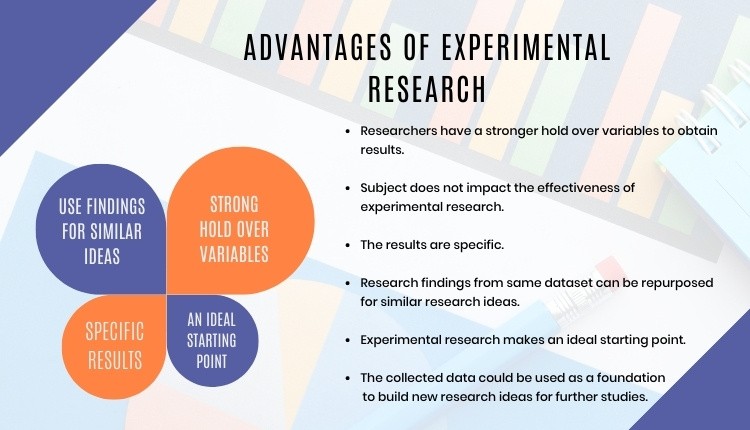

Quantitative designs can be split into four main types. Experimental and quasi-experimental designs allow you to test cause-and-effect relationships, while descriptive and correlational designs allow you to measure variables and describe relationships between them.

With descriptive and correlational designs, you can get a clear picture of characteristics, trends, and relationships as they exist in the real world. However, you can’t draw conclusions about cause and effect (because correlation doesn’t imply causation).

Experiments are the strongest way to test cause-and-effect relationships without the risk of other variables influencing the results. However, their controlled conditions may not always reflect how things work in the real world. They’re often also more difficult and expensive to implement.

Types of qualitative research designs

Qualitative designs are less strictly defined. This approach is about gaining a rich, detailed understanding of a specific context or phenomenon, and you can often be more creative and flexible in designing your research.

The table below shows some common types of qualitative design. They often have similar approaches in terms of data collection, but focus on different aspects when analysing the data.

Step 3: Identify your population and sampling method

Your research design should clearly define who or what your research will focus on, and how you’ll go about choosing your participants or subjects.

In research, a population is the entire group that you want to draw conclusions about, while a sample is the smaller group of individuals you’ll actually collect data from.

Defining the population

A population can be made up of anything you want to study – plants, animals, organisations, texts, countries, etc. In the social sciences, it most often refers to a group of people.

For example, will you focus on people from a specific demographic, region, or background? Are you interested in people with a certain job or medical condition, or users of a particular product?

The more precisely you define your population, the easier it will be to gather a representative sample.

Sampling methods

Even with a narrowly defined population, it’s rarely possible to collect data from every individual. Instead, you’ll collect data from a sample.

To select a sample, there are two main approaches: probability sampling and non-probability sampling. The sampling method you use affects how confidently you can generalise your results to the population as a whole.

Probability sampling is the most statistically valid option, but it’s often difficult to achieve unless you’re dealing with a very small and accessible population.

For practical reasons, many studies use non-probability sampling, but it’s important to be aware of the limitations and carefully consider potential biases. You should always make an effort to gather a sample that’s as representative as possible of the population.
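As a rough illustration of probability sampling, the sketch below draws a simple random sample and a proportionally stratified sample from the same population. The population of 1,000 students, the two faculty labels, and the sample size of 100 are all invented for the example, and the function names are hypothetical.

```python
import random
from collections import defaultdict

def simple_random_sample(population, n, seed=0):
    """Draw n units so that every unit has the same chance of selection."""
    rng = random.Random(seed)
    return rng.sample(population, n)

def stratified_sample(population, stratum_of, n, seed=0):
    """Sample each stratum in proportion to its share of the population.

    Note: rounding can make the total differ slightly from n when the
    proportional shares are not whole numbers.
    """
    rng = random.Random(seed)
    strata = defaultdict(list)
    for unit in population:
        strata[stratum_of(unit)].append(unit)
    sample = []
    for members in strata.values():
        k = round(n * len(members) / len(population))
        sample.extend(rng.sample(members, k))
    return sample

# Hypothetical population: 1,000 students, 700 in arts and 300 in science.
population = [(i, "arts" if i < 700 else "science") for i in range(1000)]

srs = simple_random_sample(population, 100)
strat = stratified_sample(population, lambda unit: unit[1], 100)
```

In the stratified sample the arts and science shares match the population exactly (70 and 30), whereas in the simple random sample they only match on average.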

Case selection in qualitative research

In some types of qualitative designs, sampling may not be relevant.

For example, in an ethnography or a case study, your aim is to deeply understand a specific context, not to generalise to a population. Instead of sampling, you may simply aim to collect as much data as possible about the context you are studying.

In these types of design, you still have to carefully consider your choice of case or community. You should have a clear rationale for why this particular case is suitable for answering your research question.

For example, you might choose a case study that reveals an unusual or neglected aspect of your research problem, or you might choose several very similar or very different cases in order to compare them.

Step 4: Choose your data collection methods

Data collection methods are ways of directly measuring variables and gathering information. They allow you to gain first-hand knowledge and original insights into your research problem.

You can choose just one data collection method, or use several methods in the same study.

Survey methods

Surveys allow you to collect data about opinions, behaviours, experiences, and characteristics by asking people directly. There are two main survey methods to choose from: questionnaires and interviews.

Observation methods

Observations allow you to collect data unobtrusively, observing characteristics, behaviours, or social interactions without relying on self-reporting.

Observations may be conducted in real time, taking notes as you observe, or you might make audiovisual recordings for later analysis. They can be qualitative or quantitative.

Other methods of data collection

There are many other ways you might collect data depending on your field and topic.

If you’re not sure which methods will work best for your research design, try reading some papers in your field to see what data collection methods they used.

Secondary data

If you don’t have the time or resources to collect data from the population you’re interested in, you can also choose to use secondary data that other researchers already collected – for example, datasets from government surveys or previous studies on your topic.

With this raw data, you can do your own analysis to answer new research questions that weren’t addressed by the original study.

Using secondary data can expand the scope of your research, as you may be able to access much larger and more varied samples than you could collect yourself.

However, it also means you don’t have any control over which variables to measure or how to measure them, so the conclusions you can draw may be limited.

Step 5: Plan your data collection procedures

As well as deciding on your methods, you need to plan exactly how you’ll use these methods to collect data that’s consistent, accurate, and unbiased.

Planning systematic procedures is especially important in quantitative research, where you need to precisely define your variables and ensure your measurements are reliable and valid.

Operationalisation

Some variables, like height or age, are easily measured. But often you’ll be dealing with more abstract concepts, like satisfaction, anxiety, or competence. Operationalisation means turning these fuzzy ideas into measurable indicators.

If you’re using observations, which events or actions will you count?

If you’re using surveys, which questions will you ask and what range of responses will be offered?

You may also choose to use or adapt existing materials designed to measure the concept you’re interested in – for example, questionnaires or inventories whose reliability and validity have already been established.

Reliability and validity

Reliability means your results can be consistently reproduced, while validity means that you’re actually measuring the concept you’re interested in.

For valid and reliable results, your measurement materials should be thoroughly researched and carefully designed. Plan your procedures to make sure you carry out the same steps in the same way for each participant.

If you’re developing a new questionnaire or other instrument to measure a specific concept, running a pilot study allows you to check its validity and reliability in advance.

Sampling procedures

As well as choosing an appropriate sampling method, you need a concrete plan for how you’ll actually contact and recruit your selected sample.

That means making decisions about things like:

- How many participants do you need for an adequate sample size?

- What inclusion and exclusion criteria will you use to identify eligible participants?

- How will you contact your sample – by mail, online, by phone, or in person?

If you’re using a probability sampling method, it’s important that everyone who is randomly selected actually participates in the study. How will you ensure a high response rate?

If you’re using a non-probability method, how will you avoid bias and ensure a representative sample?

Data management

It’s also important to create a data management plan for organising and storing your data.

Will you need to transcribe interviews or perform data entry for observations? You should anonymise and safeguard any sensitive data, and make sure it’s backed up regularly.

Keeping your data well organised will save time when it comes to analysing them. It can also help other researchers validate and add to your findings.

Step 6: Decide on your data analysis strategies

On their own, raw data can’t answer your research question. The last step of designing your research is planning how you’ll analyse the data.

Quantitative data analysis

In quantitative research, you’ll most likely use some form of statistical analysis. With statistics, you can summarise your sample data, make estimates, and test hypotheses.

Using descriptive statistics, you can summarise your sample data in terms of:

- The distribution of the data (e.g., the frequency of each score on a test)

- The central tendency of the data (e.g., the mean to describe the average score)

- The variability of the data (e.g., the standard deviation to describe how spread out the scores are)

The specific calculations you can do depend on the level of measurement of your variables.
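The three descriptive summaries listed above can be sketched with Python’s standard library. The ten test scores are invented for illustration, and the variable names are hypothetical.

```python
import statistics
from collections import Counter

# Invented test scores for ten participants.
scores = [4, 5, 5, 6, 6, 6, 7, 7, 8, 9]

distribution = Counter(scores)          # distribution: frequency of each score
mean_score = statistics.mean(scores)    # central tendency: the mean
sd_score = statistics.stdev(scores)     # variability: sample standard deviation (n - 1)

for score, count in sorted(distribution.items()):
    print(score, count)
print(mean_score, sd_score)
```

The mean and standard deviation assume interval or ratio data; for ordinal or nominal variables, the median or mode would be the more appropriate summary.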

Using inferential statistics, you can:

- Make estimates about the population based on your sample data.

- Test hypotheses about a relationship between variables.

Regression and correlation tests look for associations between two or more variables, while comparison tests (such as t tests and ANOVAs) look for differences in the outcomes of different groups.

Your choice of statistical test depends on various aspects of your research design, including the types of variables you’re dealing with and the distribution of your data.
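To make the difference between an association test and a comparison test concrete, here is a minimal sketch that computes a Pearson correlation coefficient and a Welch’s t statistic by hand. All data are invented, the function names are hypothetical, and the sketch stops at the test statistics: turning them into p values requires the relevant sampling distributions, which a statistics package would normally supply.

```python
import math
import statistics

def pearson_r(x, y):
    """Pearson correlation: strength of the linear association between x and y."""
    mx, my = statistics.mean(x), statistics.mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    ssx = sum((a - mx) ** 2 for a in x)
    ssy = sum((b - my) ** 2 for b in y)
    return cov / math.sqrt(ssx * ssy)

def welch_t(group_a, group_b):
    """Welch's t statistic: difference in means of two independent groups."""
    var_a = statistics.variance(group_a) / len(group_a)
    var_b = statistics.variance(group_b) / len(group_b)
    return (statistics.mean(group_a) - statistics.mean(group_b)) / math.sqrt(var_a + var_b)

# Association: invented hours of study and exam marks for five students.
hours = [1, 2, 3, 4, 5]
marks = [52, 55, 61, 64, 70]
r = pearson_r(hours, marks)

# Comparison: invented outcome scores for a control and a treated group.
control = [60, 62, 58, 61, 59]
treated = [66, 68, 64, 67, 65]
t = welch_t(treated, control)
```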

Qualitative data analysis

In qualitative research, your data will usually be very dense with information and ideas. Instead of summing it up in numbers, you’ll need to comb through the data in detail, interpret its meanings, identify patterns, and extract the parts that are most relevant to your research question.

Two of the most common approaches to doing this are thematic analysis and discourse analysis.

There are many other ways of analysing qualitative data depending on the aims of your research. To get a sense of potential approaches, try reading some qualitative research papers in your field.

Frequently asked questions

A sample is a subset of individuals from a larger population. Sampling means selecting the group that you will actually collect data from in your research.

For example, if you are researching the opinions of students in your university, you could survey a sample of 100 students.

Statistical sampling allows you to test a hypothesis about the characteristics of a population. There are various sampling methods you can use to ensure that your sample is representative of the population as a whole.

Operationalisation means turning abstract conceptual ideas into measurable observations.

For example, the concept of social anxiety isn’t directly observable, but it can be operationally defined in terms of self-rating scores, behavioural avoidance of crowded places, or physical anxiety symptoms in social situations.

Before collecting data, it’s important to consider how you will operationalise the variables that you want to measure.

The research methods you use depend on the type of data you need to answer your research question.

- If you want to measure something or test a hypothesis, use quantitative methods. If you want to explore ideas, thoughts, and meanings, use qualitative methods.

- If you want to analyse a large amount of readily available data, use secondary data. If you want data specific to your purposes with control over how they are generated, collect primary data.

- If you want to establish cause-and-effect relationships between variables, use experimental methods. If you want to understand the characteristics of a research subject, use descriptive methods.

Cite this Scribbr article

McCombes, S. (2023, March 20). Research Design | Step-by-Step Guide with Examples. Scribbr. Retrieved 21 May 2024, from https://www.scribbr.co.uk/research-methods/research-design/

- J Athl Train

- v.45(1); Jan-Feb 2010

Study/Experimental/Research Design: Much More Than Statistics

Kenneth L. Knight

Brigham Young University, Provo, UT

Context:

The purpose of study, experimental, or research design in scientific manuscripts has changed significantly over the years. It has evolved from an explanation of the design of the experiment (ie, data gathering or acquisition) to an explanation of the statistical analysis. This practice makes “Methods” sections hard to read and understand.

Objective:

To clarify the difference between study design and statistical analysis, to show the advantages of a properly written study design on article comprehension, and to encourage authors to correctly describe study designs.

Description:

The role of study design is explored from the introduction of the concept by Fisher through modern-day scientists and the AMA Manual of Style. At one time, when experiments were simpler, the study design and statistical design were identical or very similar. With the complex research that is common today, which often includes manipulating variables to create new variables and the multiple (and different) analyses of a single data set, data collection is very different from statistical design. Thus, both a study design and a statistical design are necessary.

Advantages:

Scientific manuscripts will be much easier to read and comprehend. A proper experimental design serves as a road map to the study methods, helping readers to understand more clearly how the data were obtained and, therefore, assisting them in properly analyzing the results.

Study, experimental, or research design is the backbone of good research. It directs the experiment by orchestrating data collection, defines the statistical analysis of the resultant data, and guides the interpretation of the results. When properly described in the written report of the experiment, it serves as a road map to readers, 1 helping them negotiate the “Methods” section, and, thus, it improves the clarity of communication between authors and readers.

A growing trend is to equate study design with only the statistical analysis of the data. The design statement typically is placed at the end of the “Methods” section as a subsection called “Experimental Design” or as part of a subsection called “Data Analysis.” This placement, however, equates experimental design and statistical analysis, minimizing the effect of experimental design on the planning and reporting of an experiment. This linkage is inappropriate, because some of the elements of the study design that should be described at the beginning of the “Methods” section are instead placed in the “Statistical Analysis” section or, worse, are absent from the manuscript entirely.

Have you ever interrupted your reading of the “Methods” to sketch out the variables in the margins of the paper as you attempt to understand how they all fit together? Or have you jumped back and forth from the early paragraphs of the “Methods” section to the “Statistics” section to try to understand which variables were collected and when? These efforts would be unnecessary if a road map at the beginning of the “Methods” section outlined how the independent variables were related, which dependent variables were measured, and when they were measured. When they were measured is especially important if the variables used in the statistical analysis were a subset of the measured variables or were computed from measured variables (such as change scores).

The purpose of this Communications article is to clarify the purpose and placement of study design elements in an experimental manuscript. Adopting these ideas may improve your science and surely will enhance the communication of that science. These ideas will make experimental manuscripts easier to read and understand and, therefore, will allow them to become part of readers' clinical decision making.

WHAT IS A STUDY (OR EXPERIMENTAL OR RESEARCH) DESIGN?

The terms study design, experimental design, and research design are often thought to be synonymous and are sometimes used interchangeably in a single paper. Avoid doing so. Use the term that is preferred by the style manual of the journal for which you are writing. Study design is the preferred term in the AMA Manual of Style, 2 so I will use it here.

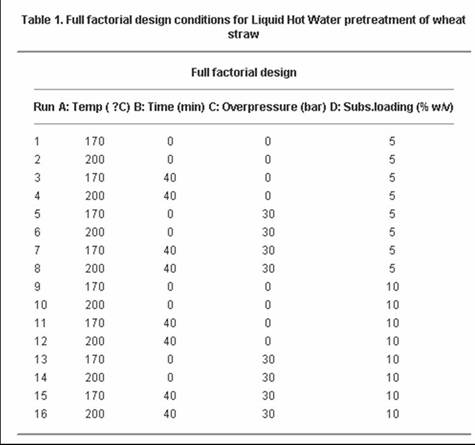

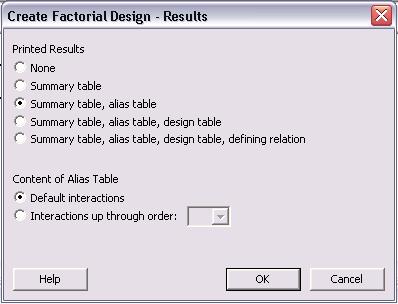

A study design is the architecture of an experimental study 3 and a description of how the study was conducted, 4 including all elements of how the data were obtained. 5 The study design should be the first subsection of the “Methods” section in an experimental manuscript (see the Table). “Statistical Design” or, preferably, “Statistical Analysis” or “Data Analysis” should be the last subsection of the “Methods” section.

Table. Elements of a “Methods” Section

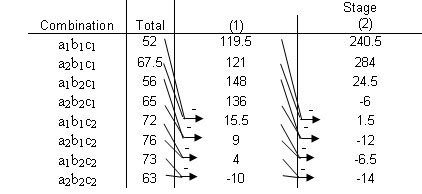

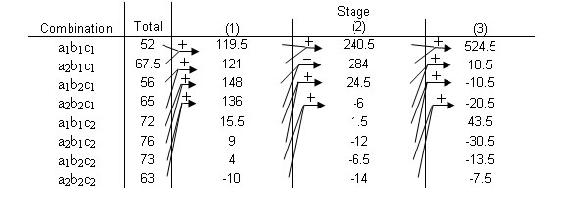

The “Study Design” subsection describes how the variables and participants interacted. It begins with a general statement of how the study was conducted (eg, crossover trials, parallel, or observational study). 2 The second element, which usually begins with the second sentence, details the number of independent variables or factors, the levels of each variable, and their names. A shorthand way of doing so is with a statement such as “A 2 × 4 × 8 factorial guided data collection.” This tells us that there were 3 independent variables (factors), with 2 levels of the first factor, 4 levels of the second factor, and 8 levels of the third factor. Following is a sentence that names the levels of each factor: for example, “The independent variables were sex (male or female), training program (eg, walking, running, weight lifting, or plyometrics), and time (2, 4, 6, 8, 10, 15, 20, or 30 weeks).” Such an approach clearly outlines for readers how the various procedures fit into the overall structure and, therefore, enhances their understanding of how the data were collected. Thus, the design statement is a road map of the methods.
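The 2 × 4 × 8 shorthand can be unpacked by enumerating every cell of the design. The sketch below uses the factor names and levels from the example sentence; the dictionary layout and variable names are assumptions made for illustration.

```python
from itertools import product

# Factors and levels taken from the example design statement.
factors = {
    "sex": ["male", "female"],
    "training_program": ["walking", "running", "weight lifting", "plyometrics"],
    "time_weeks": [2, 4, 6, 8, 10, 15, 20, 30],
}

# One dictionary per cell: every combination of one level from each factor.
cells = [dict(zip(factors, levels)) for levels in product(*factors.values())]

# Shorthand design statement derived from the number of levels per factor.
shape = " x ".join(str(len(levels)) for levels in factors.values())

print(shape, "factorial with", len(cells), "cells")
```

Listing the cells this way shows why the shorthand is useful: three numbers stand in for 64 distinct combinations of sex, training program, and measurement time.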

The dependent (or measurement or outcome) variables are then named. Details of how they were measured are not given at this point in the manuscript but are explained later in the “Instruments” and “Procedures” subsections.

Next is a paragraph detailing who the participants were and how they were selected, placed into groups, and assigned to a particular treatment order, if the experiment was a repeated-measures design. And although not a part of the design per se, a statement about obtaining written informed consent from participants and institutional review board approval is usually included in this subsection.

The nuts and bolts of the “Methods” section follow, including such things as equipment, materials, protocols, etc. These are beyond the scope of this commentary, however, and so will not be discussed.

The last part of the “Methods” section and last part of the “Study Design” section is the “Data Analysis” subsection. It begins with an explanation of any data manipulation, such as how data were combined or how new variables (eg, ratios or differences between collected variables) were calculated. Next, readers are told of the statistical measures used to analyze the data, such as a mixed 2 × 4 × 8 analysis of variance (ANOVA) with 2 between-groups factors (sex and training program) and 1 within-groups factor (time of measurement). Researchers should state and reference the statistical package and procedure(s) within the package used to compute the statistics. (Various statistical packages perform analyses slightly differently, so it is important to know the package and specific procedure used.) This detail allows readers to judge the appropriateness of the statistical measures and the conclusions drawn from the data.

STATISTICAL DESIGN VERSUS STATISTICAL ANALYSIS

Avoid using the term statistical design. Statistical methods are only part of the overall design. The term gives too much emphasis to the statistics, which are important, but only one of many tools used in interpreting data and only part of the study design:

The most important issues in biostatistics are not expressed with statistical procedures. The issues are inherently scientific, rather than purely statistical, and relate to the architectural design of the research, not the numbers with which the data are cited and interpreted. 6

Stated another way, “The justification for the analysis lies not in the data collected but in the manner in which the data were collected.” 3 “Without the solid foundation of a good design, the edifice of statistical analysis is unsafe.” 7 (pp4–5)

The intertwining of study design and statistical analysis may have been caused (unintentionally) by R.A. Fisher, “… a genius who almost single-handedly created the foundations for modern statistical science.” 8 Most research did not involve statistics until Fisher invented the concepts and procedures of ANOVA (in 1921) 9 , 10 and experimental design (in 1935). 11 His books became standard references for scientists in many disciplines. As a result, many ANOVA books were titled Experimental Design (see, for example, Edwards 12 ), and ANOVA courses taught in psychology and education departments included the words experimental design in their course titles.

Before the widespread use of computers to analyze data, designs were much simpler, and often there was little difference between study design and statistical analysis. So combining the 2 elements did not cause serious problems. This is no longer true, however, for 3 reasons: (1) Research studies are becoming more complex, with multiple independent and dependent variables. The procedures sections of these complex studies can be difficult to understand if your only reference point is the statistical analysis and design. (2) Dependent variables are frequently measured at different times. (3) How the data were collected is often not directly correlated with the statistical design.

For example, assume the goal is to determine the strength gain in novice and experienced athletes as a result of 3 strength training programs. Rate of change in strength is not a measurable variable; rather, it is calculated from strength measurements taken at various time intervals during the training. So the study design would be a 2 × 2 × 3 factorial with independent variables of time (pretest or posttest), experience (novice or advanced), and training (isokinetic, isotonic, or isometric) and a dependent variable of strength. The statistical design, however, would be a 2 × 3 factorial with independent variables of experience (novice or advanced) and training (isokinetic, isotonic, or isometric) and a dependent variable of strength gain. Note that data were collected according to a 3-factor design but were analyzed according to a 2-factor design and that the dependent variables were different. So a single design statement, usually a statistical design statement, would not communicate which data were collected or how. Readers would be left to figure out on their own how the data were collected.
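The gap between the collected design and the analyzed design in this example can be shown in a few lines. In the sketch below, the strength values are invented cell means and the variable names are hypothetical; only the factors and levels come from the text.

```python
from itertools import product

times = ["pretest", "posttest"]
experience = ["novice", "advanced"]
training = ["isokinetic", "isotonic", "isometric"]

# Invented mean strength (kg) for each cell of the 2 x 2 x 3 collection design.
strength = {
    ("pretest", "novice", "isokinetic"): 50.0,
    ("posttest", "novice", "isokinetic"): 58.0,
    ("pretest", "novice", "isotonic"): 51.0,
    ("posttest", "novice", "isotonic"): 57.0,
    ("pretest", "novice", "isometric"): 49.0,
    ("posttest", "novice", "isometric"): 53.0,
    ("pretest", "advanced", "isokinetic"): 80.0,
    ("posttest", "advanced", "isokinetic"): 84.0,
    ("pretest", "advanced", "isotonic"): 81.0,
    ("posttest", "advanced", "isotonic"): 84.0,
    ("pretest", "advanced", "isometric"): 79.0,
    ("posttest", "advanced", "isometric"): 81.0,
}

# Analysis variable: strength gain (posttest minus pretest).
# Computing it removes time as a factor, leaving a 2 x 3 design.
strength_gain = {
    (exp, trn): strength[("posttest", exp, trn)] - strength[("pretest", exp, trn)]
    for exp, trn in product(experience, training)
}

print(len(strength), "collected cells;", len(strength_gain), "analyzed cells")
```

Twelve collected cells collapse into six analyzed cells, and the dependent variable changes from strength to strength gain, which is why one design statement cannot describe both stages.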

MULTIVARIATE RESEARCH AND THE NEED FOR STUDY DESIGNS

With the advent of electronic data gathering and computerized data handling and analysis, research projects have increased in complexity. Many projects involve multiple dependent variables measured at different times, and, therefore, multiple design statements may be needed for both data collection and statistical analysis. Consider, for example, a study of the effects of heat and cold on neural inhibition. The variables of Hmax and Mmax are measured 3 times each: before, immediately after, and 30 minutes after a 20-minute treatment with heat or cold. Muscle temperature might be measured each minute before, during, and after the treatment. Although the minute-by-minute data are important for graphing temperature fluctuations during the procedure, only 3 temperatures (time 0, time 20, and time 50) are used for statistical analysis. A single dependent variable, the Hmax:Mmax ratio, is computed to illustrate neural inhibition. Again, a single statistical design statement would tell little about how the data were obtained. And in this example, separate design statements would be needed for temperature measurement and Hmax:Mmax measurements.
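A short sketch of the two data manipulations in this example: keeping only three of the minute-by-minute temperatures for analysis, and computing a ratio from two measured variables. Every number below is invented, and the variable names are hypothetical.

```python
# Invented muscle temperatures (deg C): one reading per minute, minutes 0 to 50.
temperature_by_minute = {minute: 35.0 + 0.1 * minute for minute in range(51)}

# Only three time points enter the statistical analysis.
analysis_minutes = (0, 20, 50)
temperature_for_analysis = {m: temperature_by_minute[m] for m in analysis_minutes}

# Invented H-max and M-max amplitudes (mV) at the three measurement times.
measurements = {
    "before": {"h_max": 4.0, "m_max": 10.0},
    "immediately_after": {"h_max": 3.0, "m_max": 10.0},
    "30_min_after": {"h_max": 3.5, "m_max": 10.0},
}

# Analysis variable: the H-max to M-max ratio, computed from two collected variables.
ratio = {when: m["h_max"] / m["m_max"] for when, m in measurements.items()}

print(len(temperature_by_minute), "temperatures collected;",
      len(temperature_for_analysis), "analyzed")
```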

As stated earlier, drawing conclusions from the data depends more on how the data were measured than on how they were analyzed. 3 , 6 , 7 , 13 So a single study design statement (or multiple such statements) at the beginning of the “Methods” section acts as a road map to the study and, thus, increases scientists' and readers' comprehension of how the experiment was conducted (ie, how the data were collected). Appropriate study design statements also increase the accuracy of conclusions drawn from the study.

CONCLUSIONS

The goal of scientific writing, or any writing, for that matter, is to communicate information. Including 2 design statements or subsections in scientific papers—one to explain how the data were collected and another to explain how they were statistically analyzed—will improve the clarity of communication and bring praise from readers. To summarize:

- Purge from your thoughts and vocabulary the idea that experimental design and statistical design are synonymous.

- Study or experimental design plays a much broader role than simply defining and directing the statistical analysis of an experiment.

- A properly written study design serves as a road map to the “Methods” section of an experiment and, therefore, improves communication with the reader.

- Study design should include a description of the type of design used, each factor (and each level) involved in the experiment, and the time at which each measurement was made.

- Clarify when the variables involved in data collection and data analysis are different, such as when data analysis involves only a subset of a collected variable or a resultant variable from the mathematical manipulation of 2 or more collected variables.

Acknowledgments

Thanks to Thomas A. Cappaert, PhD, ATC, CSCS, CSE, for suggesting the link between R.A. Fisher and the melding of the concepts of research design and statistics.

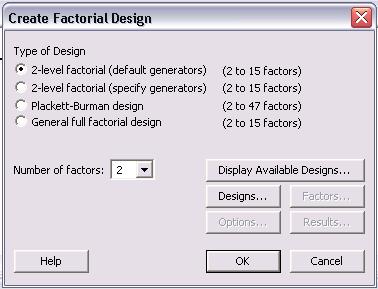

Design of Experiments

Published by Branford McAllister on July 27, 2023.

Last Updated on: 3rd February 2024, 01:28 am

Among several quantitative research alternatives is the experimental method. Experimentation is a very rigorous technique to control the conditions during data collection. This is a requirement when the objective is to determine cause and effect.

Experimentation is suitable for dissertations and theses, and is used quite often to investigate real-world problems.

There are principles of experimentation that can be applied to all quantitative research methods—even when we are only interested in correlation between variables or simply performing comparative or descriptive analysis.

In this article, I will describe the principles of experimentation, explain when and how they are used, and discuss how experiments are planned and executed.

What is Design of Experiments?

The science of experimentation was formalized about 100 years ago in England by R. A. Fisher. The principles are captured in the term design of experiments, often called DOE or experimental design.

The central idea is to carefully and logically plan and execute data collection and analysis to control the factors that are hypothesized to influence a measurable outcome. The outcome or response is a numerical assessment of behavior or performance.

Renowned statistician George Box said, “All experiments are designed experiments — some are poorly designed, some are well-designed.”

So, the intent of experimental design is to design experiments properly so that we can reliably assess the influence that the control factors have on the outcome. The techniques have been shown mathematically to identify influential factors, and to estimate their impact, more accurately than ad hoc schemes such as varying one factor at a time. They also make the most efficient use of resources for that task.

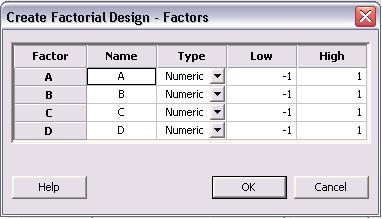

Variables – Definitions

Response variables are the measures of performance, behavior, or attributes of a system, process, population, group, or activity. They are objective, measurable, and quantitative. They represent the result or outcome of a process. For example: academic test scores.

Control factors include conditions that might influence performance, behavior, or attributes of a process, system, or activity. They can be either controlled or measured. These might include, for example, environmental conditions (day/night, weather) and operational conditions (school, curriculum).

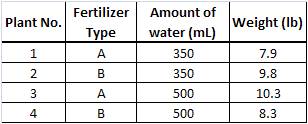

Let’s say we wish to analyze the impact that a new arithmetic curriculum has on elementary students, compared to the curriculum currently in use. The outcome (or response) is measured using a diagnostic test (comparing it to a pre-test or a control group). So, one control factor is type of curriculum.

But, we also postulate that two other factors, gender and school, may be influential. We plan an experiment to control the three factors: curriculum (CUR), school (SCH), and gender (GDR). Let’s say for the sake of illustrating the concepts that there are two versions of the curriculum (old and new), two schools, and two genders (male and female).

We’ll carry this example through the article.

Factorial Experiments

The purest form of a controlled, designed experiment is the factorial experiment.

A factorial experiment varies all of the control factors together rather than one at a time or under some other scheme. A full factorial design investigates all combinations of factor levels.

Factorial designs provide the most efficient and effective method of assessing the influence of control factors across the entire factor space. The influence is assessed using analysis of variance (ANOVA).

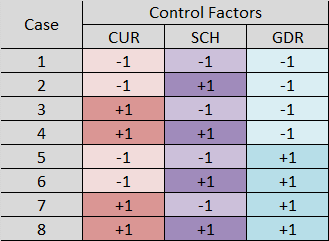

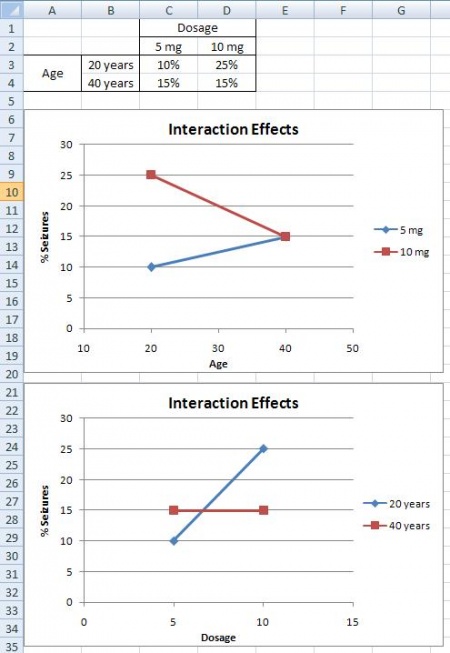

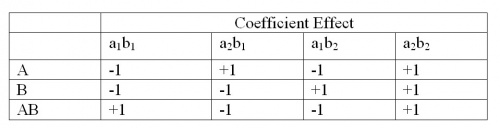

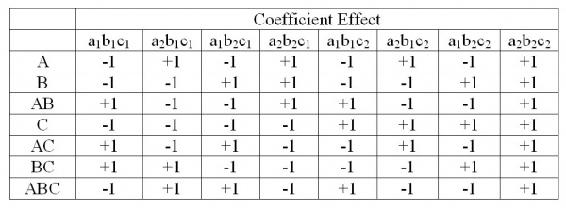

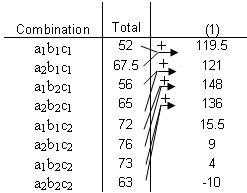

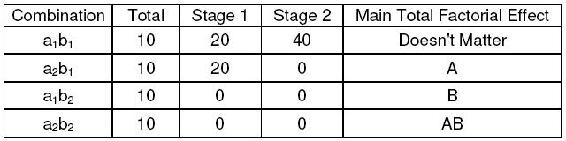

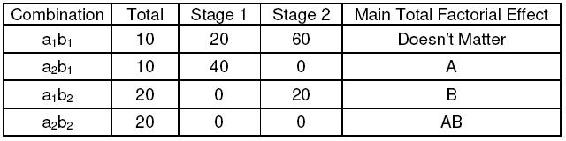

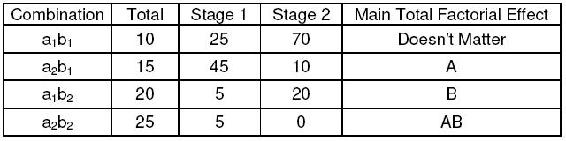

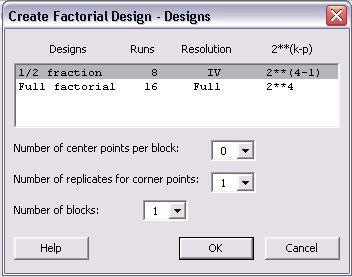

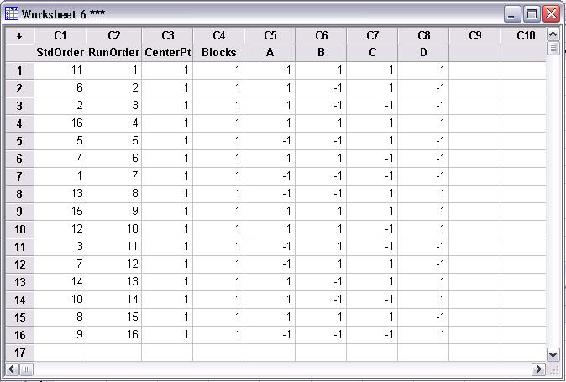

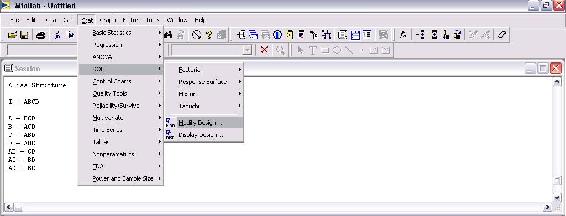

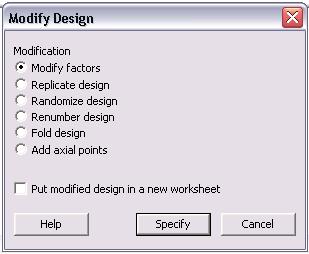

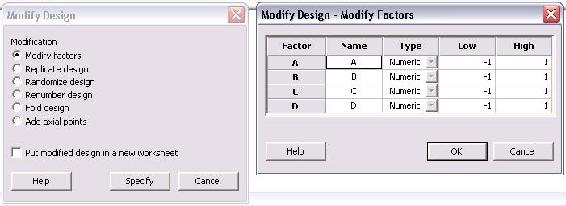

In our example, the experiment has three control factors (CUR, SCH, and GDR), at two levels each. The matrix of control factors and their levels is illustrated in this table:

We use here a form of coding called effects coding. We represent two-level factors with +1 and -1. When there are factors with more levels, effects coding can employ other numbers, as long as the codes for any factor sum to zero. We will save the discussion on factor coding for another article.

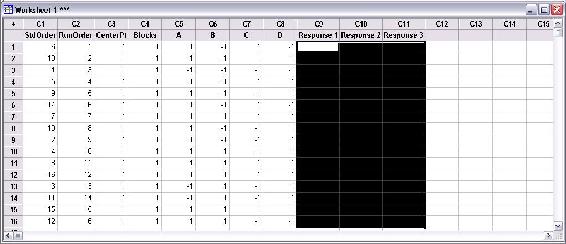

We can see that in our experiment, there are 2 × 2 × 2 = 2³ = 8 combinations of factor levels. The table represents those combinations as 8 cases.
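As a minimal sketch of how the eight cases can be enumerated, the following Python snippet builds the full factorial matrix for the three factors with effects coding. The factor names come from the example above; which level of each factor is coded +1 or -1 is an assumption made only for illustration, and the row order may differ from the article's table.

```python
from itertools import product

# Factor names follow the article; the mapping of levels to +1/-1 is assumed.
FACTORS = ("CUR", "SCH", "GDR")
LEVELS = (-1, +1)  # effects coding for two-level factors


def full_factorial(factors=FACTORS, levels=LEVELS):
    """Return every combination of factor levels as a list of dicts."""
    return [
        dict(zip(factors, combo))
        for combo in product(levels, repeat=len(factors))
    ]


design = full_factorial()
for case_number, case in enumerate(design, start=1):
    print(case_number, case)

# With effects coding, the codes of each factor sum to zero across the design.
column_sums = {f: sum(case[f] for case in design) for f in FACTORS}
print(column_sums)
```

Each printed row is one case, and the zero column sums confirm that every factor appears equally often at each level.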

We would likely need a sample size larger than 8. An adequate sample size provides the following:

- Adequate precision (the ability to detect the effect size of interest).

- Adequate statistical power (1 − β, the complement of the probability of a Type II, or false negative, statistical error).

- Adequate statistical confidence (1 − α, the complement of the probability of a Type I, or false positive, statistical error).

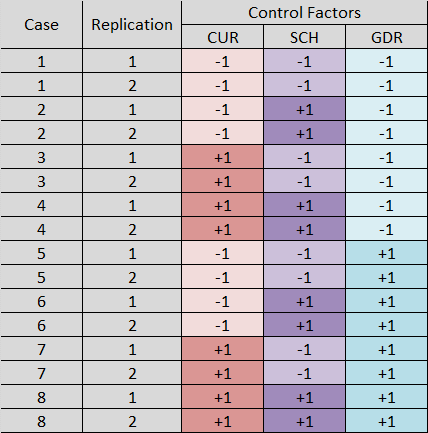

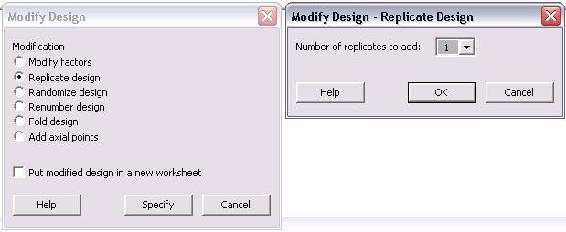

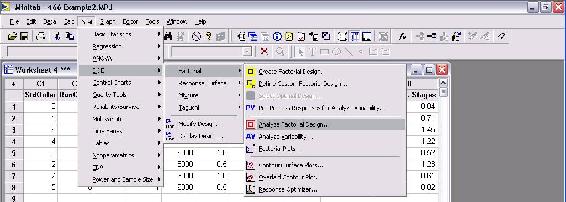

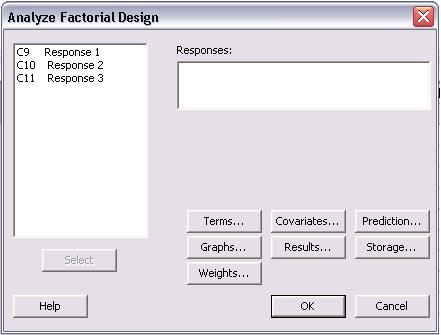

To get more sample size, we simply replicate (i.e., repeat) the cases. That is, we sample multiples of each case as shown here (replication of 2):

The sample size needed, and hence the replication of cases, is computed using an app such as G*Power (Faul et al. 2009). In this example, we have 8 cases, replicated twice, for a sample size of 16.
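To make the link between effect size, confidence, power, and replication concrete, here is a rough sketch using only the Python standard library. It is not the G*Power algorithm: it uses the common normal approximation for a two-sided comparison of two group means, and the effect size (Cohen's d = 0.5) is a hypothetical value chosen for illustration. The function names are likewise illustrative.

```python
from math import ceil
from statistics import NormalDist


def n_per_group(effect_size, alpha=0.05, power=0.80):
    """Approximate sample size per group for a two-sided, two-group
    comparison of means (normal approximation; effect_size is Cohen's d)."""
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)  # critical value for confidence 1 - alpha
    z_power = z.inv_cdf(power)          # quantile for power 1 - beta
    return ceil(2 * ((z_alpha + z_power) / effect_size) ** 2)


def replicates_needed(total_n, n_cases=8):
    """Replications of each case needed to reach at least total_n observations."""
    return ceil(total_n / n_cases)


n_group = n_per_group(effect_size=0.5)  # hypothetical medium effect
total_n = 2 * n_group                   # a main effect compares two halves of the sample
reps = replicates_needed(total_n)
print(n_group, total_n, reps, reps * 8)
```

For the article's example, `replicates_needed(16)` returns 2, matching 8 cases replicated twice. A dedicated tool such as G*Power remains the better choice for an actual study, since it handles the exact test and design.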

Fractional Factorial Designs

Sometimes it is not possible to run all possible combinations of factor levels (for example, when resources are insufficient to execute a full factorial with replication). As an alternative, we can use a mathematically derived subset of the entire set of combinations of the full factorial, called a fractional factorial.

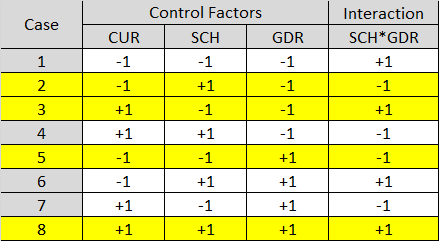

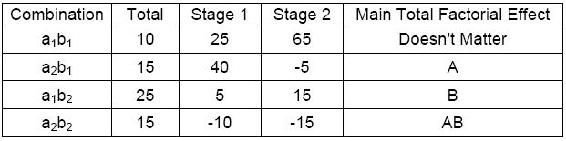

For example, instead of our full factorial, we could employ a logically derived subset of the original 8 cases, as highlighted here, and replicate these 4 cases (a half fractional factorial):

The cases are chosen by aliasing or confounding a two-factor interaction (SCH*GDR) with the control factor, CUR. The assumption is that the two-factor interaction SCH*GDR is not meaningful. So, the interaction is confounded with CUR and its effects are not distinguishable from the effects of CUR.
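A short sketch of how such a half fraction can be derived: keep only the runs in which the CUR code equals the product of the SCH and GDR codes. The specific half selected here is an assumption, since the article's highlighted table is not reproduced; the complementary half (CUR equal to the negative of the product) would be an equally valid choice.

```python
from itertools import product

FACTORS = ("CUR", "SCH", "GDR")
full = [dict(zip(FACTORS, combo)) for combo in product((-1, 1), repeat=3)]

# Keep the runs where CUR equals SCH*GDR, which aliases CUR with that interaction.
# Assumption: this half is illustrative and may differ from the article's subset.
half = [run for run in full if run["CUR"] == run["SCH"] * run["GDR"]]

cur_column = [run["CUR"] for run in half]
interaction_column = [run["SCH"] * run["GDR"] for run in half]

# Identical columns mean the two effects cannot be estimated separately.
aliased = cur_column == interaction_column

for run in half:
    print(run)
print("CUR aliased with SCH*GDR:", aliased)
```

Because the two columns are identical in every retained run, any estimate attributed to CUR also contains whatever effect the SCH*GDR interaction has, which is why the design relies on that interaction being negligible.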

With proper sample size and a suitable choice of aliases, a fractional factorial is capable of identifying significant main effects and those interactions that are not confounded with them.

Major Principles of Experimental Design

The major principles of experimental design include the following:

- Quantitative, measurable response variables—outputs of a process or system.

- Precision when measuring response variables.

- Control factors and independent variables—inputs to a process or system.

- A real-world process tested with an instrument (a test, observation, or simulation experiment using a model of the real system or process).

- Designed experiments—controlling the factors and measuring the associated variation in the response variables.

- Factorial or fractional factorial designs—assessing all combinations/cases.

- Orthogonality among levels of the control factors.

- Power analysis (sample size calculations based on effect size, statistical confidence, and statistical power).

- Replication of cases to achieve minimum required sample size.

- Random selection of cases (combinations of control factors and their levels).

Even in quasi- or non-experimental designs, many of these principles can and should be applied when planning and conducting research and analysis. For example, a non-experimental comparative study using a questionnaire should incorporate these attributes:

- A quantitative, measurable response variable informed by the average score on a subset of the questions.

- A control over the participant demographics achieved with stratified sampling.

- A factorial design assessing all combinations of demographics (cases).

- Power analysis (calculating sample size based on effect size, confidence, and power).

- Random selection of cases within each stratum.

What Experimental Design Contributes

Experimental design was developed to assess the influence that predictors have on response variables (system outputs). That is, it facilitates a mathematically rigorous assessment of how a change in inputs results in a corresponding change in outputs.

The essential idea behind a designed experiment is control: purposefully manipulating the values or levels of the control factors in order to measure the corresponding change in response variables. This is done in a way that mathematically associates the change in system performance with controlled, measurable conditions.

In some analyses, it may be desirable or necessary to allow some predictors to vary randomly within a range instead of setting fixed values. In this case, though there is an impact on statistical power and confidence, these control factors should at the very least be measured as precisely as possible so that their variation can be associated with variation in the response variable. In this situation, multiple linear regression is the analysis tool.

Experimental design permits us to accomplish these objectives reliably:

- Characterize the sensitivity of response variables to changes in the predictors.

- Determine which predictors are clearly influential.

- Identify which predictors are clearly not significant, then reduce the number of cases to analyze.

- Identify interactions between control factors.

- Identify factors that are not significant individually, but that moderate other predictors.

- Predict system performance or behavior.

- Identify key cases for subject matter expert interpretation.

- Use the same control factors and common response variables during separate analyses and using different research designs and methods.

- Combine or compare performance and behavior across research designs and methods.

Experimental Design and Analysis Considerations

Any experiment or analysis should be planned to address a research problem, purpose, and research question. Planning should not only respond to the fundamental objectives of the research, but also account for resource limitations, such as

- Capacity (for example, human effort and computer resources).

- Competing demands for the resources (money).

Therefore, it is essential to

- Prioritize the use of scarce resources.

- Use efficient methodologies (experimental designs and analytical methods).

- Seek no more precision than is needed to answer a question or meet an analytical objective.

An experimental design and analytical method are chosen that, within the resource constraints, offer the greatest potential to address the analytical objective that motivated the experiment. There will be tradeoffs among competing attributes of various designs (e.g., trading statistical precision against sample size).

The following are some considerations for choosing a design that answers questions adequately, maximizes efficiency, and makes appropriate tradeoffs in experimental attributes.

Sequential Experimentation

Sequential experimentation involves a linked series of experiments:

- Building on what is learned through analysis in the previous experiment.

- Refining understanding of the system or process—the significant and non-significant explanatory factors.

- Reducing factor space based on analysis-based factor screening, which yields smaller, more focused, more efficient follow-on experiments.

- Refining and re-focusing analytical objectives, methodologies, and statistical tests.

- Investigating specific cases where performance or behavior is unexpected or interesting.

- Using data previously collected and analyzed to inform future experiments.

- Computing variance in response variables that aids in performing sample size calculations for subsequent experiments.

Orthogonality

In an orthogonal design (as in a factorial experiment), the main effect of each control factor can be estimated independently without confounding the effect of one control factor by another.

In other words, the estimated effect of one control factor will not interfere with the estimated effect of another control factor.

Orthogonal designs are important because they minimize the effects of collinearity. A confident estimate of control factor effects leads to understanding the true system performance.
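One simple way to check orthogonality in a two-level design with +1/-1 coding is to confirm that the dot product of every pair of factor columns is zero. The sketch below does this for the three-factor full factorial used in this article; the helper names are illustrative.

```python
from itertools import combinations, product

FACTORS = ("CUR", "SCH", "GDR")
design = list(product((-1, 1), repeat=len(FACTORS)))  # 8 runs, effects coded


def column(index):
    """Extract one factor's column of codes from the design."""
    return [run[index] for run in design]


def dot(u, v):
    """Dot product of two equal-length columns."""
    return sum(a * b for a, b in zip(u, v))


dot_products = {
    (FACTORS[i], FACTORS[j]): dot(column(i), column(j))
    for i, j in combinations(range(len(FACTORS)), 2)
}
is_orthogonal = all(value == 0 for value in dot_products.values())

print(dot_products)
print("Orthogonal:", is_orthogonal)
```

A nonzero dot product between two columns would indicate that the corresponding factor levels are correlated in the design, so their effects could not be estimated independently.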

Factor Interactions

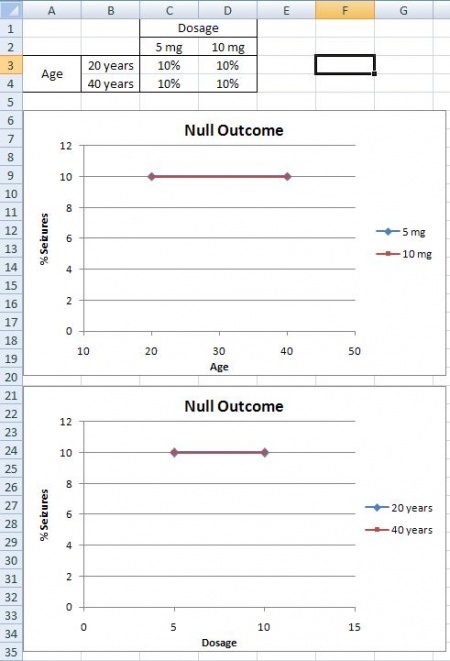

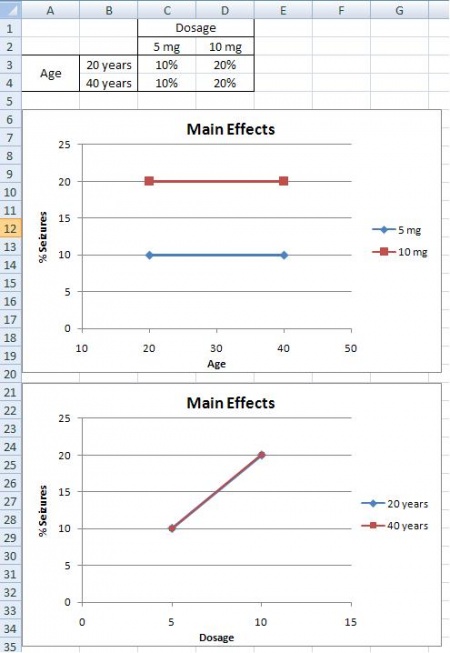

Factorial designs enable the experimenter to investigate not only the individual effects (or influence) of each control factor (main effects) but the interaction of control factors with each other.

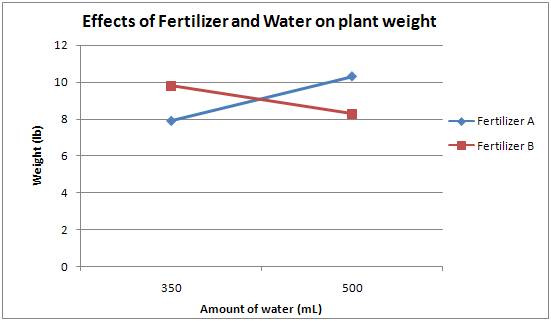

A two-factor interaction means that the effect a control factor has on the response depends on the value of a second control factor. For example, the influence of one predictor (say, CUR) on the response (Y = test scores) depends on the value of another predictor (say, SCH). The relationship between CUR and Y (the slope or coefficient) changes depending on the value of SCH.

A two-factor interaction represents a qualification on any claim that one control factor influences the response. Some of the most important insights are gleaned from two-factor interactions.

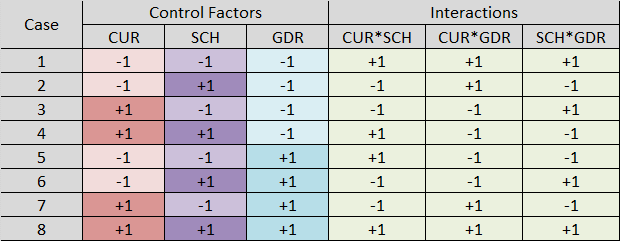

A two-factor interaction is calculated as the product of its two control factors, as shown in this table:

Two-factor interactions are evaluated in ANOVA just as if they were individual predictors.
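The sketch below illustrates how interaction columns can be formed as products of the coded factor columns and then treated like any other predictor. The test scores are hypothetical, noise-free values generated from an assumed model (they are not data from the article), so the estimated coefficients simply recover the numbers built into that model.

```python
from itertools import product

# Build the design with main-effect and two-factor interaction columns.
rows = []
for cur, sch, gdr in product((-1, 1), repeat=3):
    rows.append({
        "CUR": cur,
        "SCH": sch,
        "GDR": gdr,
        "CUR*SCH": cur * sch,  # interaction = product of the two factor codes
        "CUR*GDR": cur * gdr,
        "SCH*GDR": sch * gdr,
    })


def score(row):
    """Hypothetical, noise-free test score used only for illustration."""
    return 70 + 5 * row["CUR"] + 2 * row["SCH"] + 3 * row["CUR*SCH"]


y = [score(row) for row in rows]


def coefficient(term):
    """Coefficient of an effects-coded term in an orthogonal two-level design."""
    return sum(row[term] * yi for row, yi in zip(rows, y)) / len(rows)


estimates = {term: coefficient(term) for term in rows[0]}
print(estimates)
```

In this assumed model the slope of CUR is 5 + 3 = 8 when SCH is +1 and 5 − 3 = 2 when SCH is −1, which is exactly the dependence on a second factor that a two-factor interaction describes.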

The conclusions about the significance and magnitude of relationships between control factors and the response variable must include a discussion of the significant two-factor interactions.

Factor Aliasing

Aliasing in a test matrix occurs when the levels for a control factor are correlated with another control factor. This causes the main effects (influence of individual control factors) to be confounded (i.e., the effects cannot be estimated separately from one another).

The alias structure of an experiment matrix characterizes the confounding within the experimental design and can be evaluated using software applications (e.g., SPSS). The alias matrix describes the degree to which the control factors are confounded with one another. A rule of thumb in complex designs is that control factors whose aliasing has an absolute value less than 0.7 can be evaluated in the model. If the absolute value of the aliasing is greater than 0.7, one of the control factors should be considered for removal from the analysis, or the control factors should be combined.
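As a rough illustration of the rule of thumb, the following sketch treats aliasing as the Pearson correlation between model columns and flags any pair whose absolute correlation exceeds 0.7. Using pairwise correlation as the aliasing measure is an assumption made for this example; statistical packages may compute the alias matrix differently. The half fraction used here is the one in which CUR equals SCH*GDR.

```python
from itertools import combinations, product
from math import sqrt


def pearson(u, v):
    """Pearson correlation between two equal-length numeric columns."""
    n = len(u)
    mean_u, mean_v = sum(u) / n, sum(v) / n
    cov = sum((a - mean_u) * (b - mean_v) for a, b in zip(u, v))
    var_u = sum((a - mean_u) ** 2 for a in u)
    var_v = sum((b - mean_v) ** 2 for b in v)
    return cov / sqrt(var_u * var_v)


def flagged_pairs(columns, threshold=0.7):
    """Return pairs of terms whose absolute correlation exceeds the threshold."""
    return [
        (a, b)
        for a, b in combinations(columns, 2)
        if abs(pearson(columns[a], columns[b])) > threshold
    ]


def model_columns(runs):
    """Columns for the three main effects plus the SCH*GDR interaction."""
    return {
        "CUR": [c for c, s, g in runs],
        "SCH": [s for c, s, g in runs],
        "GDR": [g for c, s, g in runs],
        "SCH*GDR": [s * g for c, s, g in runs],
    }


full = list(product((-1, 1), repeat=3))
half = [(c, s, g) for c, s, g in full if c == s * g]  # assumed half fraction

full_flags = flagged_pairs(model_columns(full))
half_flags = flagged_pairs(model_columns(half))
print("Full factorial:", full_flags)
print("Half fraction:", half_flags)
```

The full factorial produces no flagged pairs, while the half fraction flags CUR against SCH*GDR, consistent with the statement that aliasing problems arise mainly in fractional designs.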

Factorial designs avoid problems with factor aliasing. The issue is most prevalent in fractional factorials.

Pseudo-factorial Designs

ANOVA and factorial designs go hand in hand because the predictors (control factors) are categorical variables, suitable for controlling and readily analyzed using ANOVA. The advantage of a factorial design is that it is capable, with high power and confidence, of detecting the influence of control factors on the response, with an efficient use of resources. Another advantage is the ability to calculate and evaluate interactions between control factors.

There are some disadvantages of factorial designs:

- Nonlinearities between the points defined by the control factor levels may not be detected.

- The control factor levels may be set at a relatively small number of discrete points.

- The design may not provide a detailed understanding of performance throughout the factor space (i.e., between points).

- Some control factors may not be controllable (e.g., air temperature and pressure).

In these kinds of experiments, ANOVA and pure factorial experiments may not be the best option.

An alternative is a pseudo-factorial experiment. This approach allows some latitude in the values of some of the predictors, while preserving some of the principles of factorial experiments. These designs are built around a factorial experiment in which the quantitative predictors are allowed to vary randomly within an operational range. Those ranges are controlled as if they were categorical.
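A minimal sketch of the idea, under stated assumptions: the quantitative predictor (a hypothetical class-size factor, not part of the article's example) is assigned to a low or high range as if it were a two-level categorical factor, and its actual value is then drawn at random within that range. The ranges and names are invented purely for illustration.

```python
import random
from itertools import product

# Hypothetical operational ranges for a quantitative factor (class size).
RANGES = {-1: (15, 20), +1: (25, 30)}


def pseudo_factorial(seed=0):
    """Cross CUR with a binned quantitative factor; draw the actual value
    of the quantitative factor at random inside the assigned range."""
    rng = random.Random(seed)  # seeded so the sketch is reproducible
    runs = []
    for cur, size_bin in product((-1, 1), repeat=2):
        low, high = RANGES[size_bin]
        runs.append({
            "CUR": cur,
            "SIZE_BIN": size_bin,                # controlled as if categorical
            "SIZE": rng.uniform(low, high),      # measured value used in regression
        })
    return runs


runs = pseudo_factorial()
for run in runs:
    print(run)
```

The binned column preserves the factorial structure for planning, while the recorded value of the quantitative factor can serve as a continuous predictor in a regression analysis.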

Final Thoughts

An experiment is a powerful research design for assessing the influence of factors on the performance or behavior of systems, activities, and other phenomena. Proper experimental design is essential when assessing cause and effect. But the principles of experimental design are also desirable attributes in much, if not most, quantitative research and analysis.

- Aczel, A. D., & Sounderpandian, J. (2006). Complete business statistics (6th ed.). McGraw-Hill/Irwin.

- Faul, F., Erdfelder, E., Buchner, A., & Lang, A. G. (2009). Statistical power analyses using G*Power 3.1: Tests for correlation and regression analyses. Behavior Research Methods, 41, 1149-1160. https://link.springer.com/article/10.3758/BRM.41.4.1149

- Levine, D. M., Berenson, M. L., Krehbiel, T. C., & Stephan, D. F. (2011). Statistics for managers using MS Excel. Prentice Hall/Pearson.

- McAllister, B. (2023). Simulation-based performance assessment. Kindle.

- Montgomery, D. C. (2019). Design and analysis of experiments. Wiley.

- Nicolis, G., & Nicolis, C. (2009). Foundations of complex systems. European Review, 17(2), 237-248. https://doi.org/10.1017/S1062798709000738

- Snedecor, G. W., & Cochran, W. G. (1973). Statistical methods (6th ed.). The Iowa State University Press.

- Warner, R. M. (2013). Applied statistics: From bivariate through multivariate techniques. Sage.

Branford McAllister

Branford McAllister received his PhD from Walden University in 2005. He has been an instructor and PhD mentor for the University of Phoenix, Baker College, and Walden University, and a professor and lecturer on military strategy and operations at the National Defense University. He has technical and management experience in the military and private sector, has research interests related to leadership, and is an expert in advanced quantitative analysis techniques. He is passionately committed to mentoring students in post-secondary educational programs.

Design and Analysis of Experiments

- Living reference work entry

- First Online: 12 January 2022

- Alessandra Mattei, Fabrizia Mealli & Anahita Nodehi

This chapter provides an overview of the econometric and statistical methods for drawing inference on causal effects from randomized experiments under the potential outcome approach. Well-designed and well-conducted randomized experiments are generally considered to be the gold standard for obtaining objective causal inferences, but the design and analysis of experiments require addressing a number of statistical issues. This chapter first discusses design and inferential issues in classical randomized experiments, providing insights on the relative advantages and drawbacks of alternative types of classical randomized experiments. Then, it discusses complications arising from clustered randomization, multiple site experiments, and rerandomization, as well as issues arising in the design and analysis of randomized experiments with posttreatment complications, sequential and dynamic experiments, and experiments in settings with interference. Recently developed approaches for estimating causal effects using machine learning methods are also described. This chapter concludes with some discussion on the external and internal validity of randomized experiments.

Angrist J, Pischke S (2009) Mostly harmless econometrics. Princeton University Press, New Jersey

Book Google Scholar

Angrist J, Imbens GW, Rubin DB (1996) Identification of causal effects using instrumental variables. J Am Stat Assoc 91:444–472

Article Google Scholar

Athey S, Imbens GW (2015) Machine learning methods for estimating heterogeneous causal effects. ArXiv working paper No 1504.01132v2

Google Scholar

Athey S, Imbens G (2016a) Recursive partitioning for heterogeneous causal effects. Proc Natl Acad Sci 113(27):7353–7360

Athey S, Imbens GW (2016b) The state of applied econometrics – Causality and policy evaluation . ArXiv working paper No 1607.00699

Athey S, Imbens GW (2017) The econometrics of randomized experiments. Handbook of Econ Field Exper, Chap 3 1:73–140

Athey S, Chetty R, Imbens GW, Kang H (2019) The surrogate index: combining short-term proxies to estimate long-term treatment effects more rapidly and precisely. National Bureau of Economic Research, Cambridge, MA

Baccini M, Mattei A, Mealli F (2017) Bayesian inference for causal mechanisms with application to a randomized study for postoperative pain control. Biostatistics 18(4):605–617

Balke A, Pearl J (1997) Bounds on treatment effects from studies with imperfect compliance. J Am Stat Assoc 92(439):1171–1176

Ball S, Bogatz D, Rubin DB, Beaton A (1973). Reading with television: An evaluation of the electric company. A Report to the Children’s Television Workshop. Vol I and II

Banerjee AV, Duflo E (2009) The experimental approach to development economics. Ann Rev Econ 1(1):151–178

Banerjee AV, Cole S, Duflo E, Linden L (2007) Remedying education: evidence from two randomized experiments in India. Q J Econ 122(3):1235–1264

Banerjee AV, Chassang S, Snowberg E (2016) Decision theoretic approaches to experimental design and external validity. North Holland, Amsterdam

Baranov V, Bhalotra S, Biroli P, Maselko J (2020) Maternal depression, women’s empowerment, and parental investment: evidence from a randomized controlled trial. Am Econ Rev 110(3):824–859

Bartolucci F, Grilli L (2011) Modeling partial compliance through copulas in a principal stratification framework. J Am Stat Assoc 106(494):469–479

Belloni A, Chernozhukov V, Hansen C (2014a) High-dimensional methods and inference on structural and treatment effects. J Econ Perspect 28(2):29–50

Belloni A, Chernozhukov V, Hansen C (2014b) Inference on treatment effects after selection among high-dimensional controls. Rev Econ Stud 81(2):608–650

Bia M, Mattei A, Mercatanti A (2020) Assessing causal effects in a longitudinal observational study with truncated outcomes due to unemployment and nonignorable missing data. J Bus Econ Stat

Blanco G, Bia M (2019) Inference for treatment effects of job training on wages: using bounds to compute fisher’s exact p -value. Appl Econ Lett 26(17):1424–1428

Blanco G, Flores CA, Flores-Lagunes A (2013) Bounds on average and quantile treatment effects of job corps training on wages. J Hum Resour 48(3):659–701

Blanco G, Chen X, Flores CA, Flores-Lagunes A (2020) Bounds on average and quantile treatment effects on duration outcomes under censoring, selection, and noncompliance. J Bus Econ Stat 38(4):901–920

Bloom H (1984) Accounting for no-shows in experimental evaluation designs. Eval Rev 8:225–246

Bloom HS, Raudenbush SW, Weiss M, Porter K (2015) Using multisite evaluations to study variation in effects of program assignment. MDRC, New York

Branson Z, Dasgupta T, Rubin DB (2016) Improving covariate balance in 2k factorial designs via rerandomization with an application to a New York city department of education high school study. Ann Appl Stat 10(4):1958–1976

Breiman L (2001) Random forests. Mach Learn 45(1):5–32

Breiman L, Friedman J, Stone CJ, Olshen RA (1984) Classification and regression trees. CRC Press

Bruhn M, McKenzie D (2009) In pursuit of balance: randomization in practice in development field experiments. Am Econ J Appl Econ 1(4):200–232

Card D, Krueger AB (1994) Minimum wages and employment: a case study of the fast-food industry in New Jersey and Pennsylvania. Am Econ Rev 84(4):772–793

Chakraborty B, Murphy SA (2014) Dynamic treatment regimes. Ann Rev Stat App 1(1):447–464

Chattopadhyay R, Duflo E (2004) Women as policy makers: evidence from a randomized policy experiment in India. Econometrica 72(5):1409–1443

Chen X, Flores CA (2015) Bounds on treatment effects in the presence of sample selection and noncompliance: the wage effects of job corps. J Bus Econ Stat 33(4):523–540

Chen H, Geng Z, Zhou XH (2009) Identifiability and estimation of causal effects in randomized trials with noncompliance and completely nonignorable missing data (with discussion). Biometrics 65:675–691

Chernozhukov V, Chetverikov D, Demirer M, Duflo E, Hansen C, Newey WK (2016) Double machine learning for treatment and causal parameters. Technical report, cemmap working paper

Cochran WG, Cox G (1957) Experimental design. Wiley Classics Library

Cohen J (1988) Statistical power for the behavioral sciences, 2nd edn. Routledge

Collins LM, Murphy SA, Bierman KA (2001) Design, and evaluation of adaptive preventive interventions. Prev Sci

Conti E, Duranti S, Mattei A, Mealli F, Sciclone N (2014) The effect of a dropout prevention program on secondary students’ outcomes. Rassegna Italiana di Valutazione 58:15–49

Cox D (1958) The planning of experiments. Wiley, New York

Cuzick J, Edwards R, Segnan N (1997) Adjusting for non-compliance and contamination in randomized clinical trials. Stat Med 16:1017–1039

Dehijia RH, Wahba S (1999) Causal effects in non-experimental studies: reevaluating the evaluation of training program. J Am Stat Assoc 95:1053–1062

Desu MM (2012) Sample size methodology. Elsevier

Ding P (2017) A paradox from randomization-based causal inference. Stat Sci 32(3):331–345

Ding P, Li F (2018) Causal inference: a missing data perspective. Stat Sci 33(2):214–237

Ding P, Feller A, Miratrix L (2019) Decomposing treatment effect variation. J Am Stat Assoc 114(525):304–317

Donner A (1998) Some aspects of the design and analysis of cluster randomization trials. J Royal Stat Soc: Series C (Appl Stat) 47(1):95–113

Donner A, Klar N (2004) Pitfalls of and controversies in cluster randomization trials. Am J Public Health 94(3):416–422

Duflo, E., R. Glennerster, and M. Kremer (2008). Using randomization in development economics research: a toolkit. In T. P. Schultz and J. Strauss (Eds.), Handbook of development economics, Volume 4, pp. 3895–3962. Elsevier, North-Holland

Feller A, Grindal T, Miratrix L, Page LC (2016) Compared to what? Variation in the impacts of early childhood education by alternative care type. Ann Appl Stat 10(3):1245–1285

Feller A, Mealli F, Miratrix L (2017) Principal score methods: assumptions, extensions and practical considerations. J Educ Behav Stat 42(6):726–758

Fisher RA (1925) Statistical methods for research workers, 1st edn. Oliver and Boyd, Edinburgh

Fisher RA (1926) The arrangement of field experiments. J Minist Agric Great Britain 33:503–513

Fisher RA (1935) The design of experiments, 1st edn. Oliver and Boyd, London

Forastiere L, Mealli F, Miratrix LW (2018) Posterior predictive p– values with fisher randomization tests in noncompliance settings: test statistics vs discrepancy measures. Bayesian Anal 13(3):681–701

Foster JC, Taylor JM, Ruberg SJ (2011) Subgroup identification from randomized clinical trial data. Stat Med 30(24):2867–2880

Frangakis CE, Rubin DB (1999) Addressing complications of intention-to-treat analysis in the presence of combined all-or-none treatment-noncompliance and subsequent missing outcomes. Biometrika 86:365–379

Frangakis CE, Rubin DB (2002) Principal stratification in causal inference. Biometrics 58(1):21–29

Frangakis CE, Brookmeyer R, Varadhan R, Mahboobeh S, Vlahov D, Strathdee S (2004) Methodology for evaluating a partially controlled longitudinal treatment using principal stratification, with application to a needle exchange program. J Am Stat Assoc 99:239–249

Freedman DA (2008) On regression adjustments to experimental data. Adv Appl Math 40(2):180–193

Frumento P, Mealli F, Pacini B, Rubin DB (2012) Evaluating the effect of training on wages in the presence of noncompliance, nonemployment, and missing outcome data. J Am Stat Assoc 107(498):450–466

Gilbert PB, Hudgens MG (2008) Evaluating candidate principal surrogate endpoints. Biometrics 64(4):1146–1154

Gilbert PB, Bosch RJ, Hudgens MG (2003) Sensitivity analysis for the assessment of causal vaccine effects on viral load in HIV vaccine trials. Biometrics 59(3):531–541

Glennerster R (2016) The practicalities of running randomized evaluations: partnerships, measurement, ethics, and transparency. North Holland

Glennerster R, Takavarasha K (2013) Running randomized evaluations: a practical guide. Princeton University Press, New Jersey

Green DP, Kern HL (2010) Detecting heterogenous treatment effects in large-scale experiments using Bayesian additive regression trees. In: The annual summer meeting of the society of political methodology, University of Iowa. Citeseer

Green DP, Kern HL (2012) Modeling heterogeneous treatment effects in survey experiments with Bayesian additive regression trees. Public Opin Q 76(3):491–511

Grilli L, Mealli F (2008) Nonparametric bounds on the causal effect of university studies on job opportunities using principal stratification. J Educ Behav Stat 33(1):111–130

Gustafson P (2015) Bayesian inference for partially identified models exploring the limits of limited data. Taylor & Francis

Halloran ME, Hudgens MG (2016) Dependent happenings: a recent methodological review. Curr Epidemiol Rep 3(4):297–305

Han S (2019) Optimal dynamic treatment regimes and partial welfare ordering. arXiv preprint arXiv:1912.10014

Han S (2020) Nonparametric identification in models for dynamic treatment effects. J Econ

Hastie T, Tibshirani R, Friedman J (2009) The elements of statistical learning: data mining, inference, and prediction. Springer Science & Business Media

Hawley WA, Phillips-Howard PA, ter Kuile FO, Terlouw DJ, Vulule JM, Ombok M, Nahlen BL, Gimnig JE, Kariuki SK, Kolczak MS, Hightower AW (2003) Community-wide effects of permethrin-treated bed nets on child mortality and malaria morbidity in western Kenya. Am J Trop Med Hyg 68:121–127

Holland P (1986) Statistics and causal inference (with discussion). J Am Stat Assoc 81:945–970

Holland P (1988) Causal inference, path analysis, and recursive structural equations models (with discussion). Sociol Methodol 18:449–484

Hudgens MG, Halloran ME (2006) Causal vaccine effects on binary post-infection outcomes. J Am Stat Assoc 101:51–64

Hudgens MG, Halloran ME (2008) Toward causal inference with interference. J Am Stat Assoc 103(482):832–842

Ibarra LG, McKenzie D, Ruiz-Ortega C (2019) Estimating treatment effects with big data when take-up is low: an application to financial education. World Bank Econ Rev

Imai K, Ratkovic M (2013) Estimating treatment effect heterogeneity in randomized program evaluation. Ann Appl Stat 7(1):443–470

Imbens GW (2004) Nonparametric estimation of average treatment effects under exogeneity: a review. Rev Econ Stat 89:1–29

Imbens GW (2014) Instrumental variables: An econometricians perspective. Stat Sci 89(3):323–358

Imbens GW (2018) Comment on “understanding and misunderstanding randomized controlled trials” by cartwright and Deaton. Soc Sci Med 210:50–52

Imbens GW, Rosenbaum P (2005) Randomization inference with an instrumental variable. J R Stat Soc Ser A 168:109–126

Imbens GW, Rubin DB (1997a) Bayesian inference for causal effects in randomized experiments with noncompliance. Ann Stat 25:305–327

Imbens GW, Rubin DB (1997b) Estimating outcome distributions for compliers in instrumental variable models. Rev Econ Stud 64(3):555–574

Imbens GW, Rubin DB (2015) Causal inference for statistics, social, and biomedical sciences. An introduction. Cambridge University Press, New York

Imbens GW, Wooldridge JM (2009) Recent developments in the econometrics of program evaluation. J Econ Lit 47:5–86

Jin H, Rubin DB (2008) Principal stratification for causal inference with extended partial compliance. J Am Stat Assoc 103:101–111

Jo B (2002) Statistical power in randomized intervention studies with noncompliance. Psychol Methods 7(2):178–193

Jo B, Vinokur AD (2011) Sensitivity analysis and bounding of causal effects with alternative identifying assumptions. J Educ Behav Stat 36(4):415–440

Johansson P, Schultzberg M, Rubin D (2019) On optimal re-randomization designs. Technical report

Kallus N (2018) Optimal a priori balance in the design of controlled experiments. J Roy Stat Soc: Series B (Stat Methodol) 80(1):85–112

Klasnja P, Hekler EB, Shiffman S, Boruvka A, Almirall D, Tewari A, Murphy SA (2015) Microrandomized trials: an experimental design for developing just-in-time adaptive interventions. Health Psychol 34:1220–1228

Kling JR, Liebman JB (2004) Experimental analysis of neighborhood effects on youth. John F. Kennedy School of Government, Harvard University

Kumar S, Nilsen WJ, Abernethy A, Atienza A, Patrick K, Pavel M, Riley WT, Shar A, Spring B, Spruijt-Metz D, Hedeker D (2013) Mobile health technology evaluation: the mhealth evidence workshop. Am J Prev Med 45(2):228–236

Laber EB, Lizotte DJ, Qian M, Pelham WE, Murphy SA (2014) Dynamic treatment regimes: technical challenges and applications. Electron J Stat 8(1):1225

Lalonde RJ (1986) Evaluating the econometric evaluations of training programs with experimental data. Am Econ Rev 76:604–620

Lavori PW, Dawson R (2000) A design for testing clinical strategies: biased adaptive within-subject randomization. J R Stat Soc A Stat Soc 163(1):29–38

Lee DS (2009) Training, wages, and sample selection: estimating sharp bounds on treatment effects. Rev Econ Stud 76:1071–1102

Lee JJ, Forastiere L, Miratrix L, Pillai NS (2017) More powerful multiple testing in randomized experiments with non-compliance. Stat Sin 27(3):1319–1345

Lei L, Ding P (2021) Regression adjustment in completely randomized experiments with a diverging number of covariates. Biometrika 108(4):815–828

Li X, Ding P (2020) Rerandomization and regression adjustment. J Roy Stat Soc: Series B (Stat Methodol) 82(1):241–268

Li X, Ding P, Rubin DB (2018a) Asymptotic theory of re-randomization in treatment-control experiments. Proc Natl Acad Sci U S A 115(37):9157–9162

Li X, Ding P, Rubin DB (2018b) Re-randomization in 2^K factorial experiments. Ann Stat 48(1):43–63

Little RJ, Rubin DB (2000) Causal effects in clinical and epidemiological studies via potential outcomes: concepts and analytical approaches. Annu Rev Public Health 21(1):121–145

Luckett DJ, Laber EB, Kahkoska AR, Maahs DM, Mayer-Davis E, Kosorok MR (2019) Estimating dynamic treatment regimes in mobile health using v-learning. J Am Stat Assoc:1–34

Manski CF (1990) Nonparametric bounds on treatment effects. Am Econ Rev 80(2):319–323

Manski CF (1996) Learning about treatment effects from experiments with random assignment of treatments. J Hum Resour:709–733

Manski CF (2003) Partial identification of probability distributions. Springer Science & Business Media

Manski CF (2013) Public policy in an uncertain world. Harvard University Press

Mattei A, Mealli F (2007) Application of the principal stratification approach to the Faenza randomized experiment on breast self examination. Biometrics 63:437–446

Mattei A, Mealli F (2011) Augmented designs to assess principal strata direct effects. J Roy Stat Soc – Series B 73(5):729–752

Mattei A, Li F, Mealli F (2013) Exploiting multiple outcomes in Bayesian principal stratification analysis with application to the evaluation of a job training program. Ann Appl Stat 7(4):2336–2360

Mattei A, Mealli F, Pacini B (2014) Identification of causal effects in the presence of nonignorable missing outcome values. Biometrics 70:278–288

Mealli F, Mattei A (2012) A refreshing account of principal stratification. Int J Biostat 8(1)

Mealli F, Pacini B (2013) Using secondary outcomes to sharpen inference in randomized experiments with noncompliance. J Am Stat Assoc 108(503):1120–1131

Mealli F, Rubin DB (2015) Clarifying missing at random and related definitions and implications when coupled with exchangeability. Biometrika 102:995–1000

Mealli F, Imbens GW, Ferro S, Biggeri A (2004) Analyzing a randomized trial on breast self-examination with noncompliance and missing outcomes. Biostatistics 5(2):207–222

Mealli F, Pacini B, Rubin DB (2011) Statistical inference for causal effects. In: Modern analysis of customer surveys: with applications using R. Wiley, pp 171–192

Montgomery DC (2017) Design and analysis of experiments. Wiley

Morgan KL, Rubin DB (2012) Rerandomization to improve covariate balance in experiments. Ann Stat 40(2):1263–1282

Morgan KL, Rubin DB (2015) Re-randomization to balance tiers of covariates. J Am Stat Assoc 110(512):1412–1421

Murphy SA (2003) Optimal dynamic treatment regimes. J Roy Stat Soc: Series B (Stat Methodol) 65(2):331–355

Murphy SA, van der Laan MJ, Robins JM, Conduct Problems Prevention Research Group (2001) Marginal mean models for dynamic regimes. J Am Stat Assoc 96(456):1410–1423

Murphy K, Myors B, Wolach A (2014) Statistical power analysis. Routledge

Murray DM (1998) Design and analysis of group randomized trials. Oxford University Press, New York

Nahum-Shani I, Hekler EB, Spruijt-Metz D (2015) Building health behavior models to guide the development of just-in-time adaptive interventions: a pragmatic framework. Health Psychol 34(S):1209

National Forum on Early Childhood Policy and Programs (2010) Understanding the Head Start Impact Study. Technical report

Neyman J (1923) On the application of probability theory to agricultural experiments. Essay on principles. Stat Sci 5:465–480

Pearl J (2000) Causality: models, reasoning and inference. Cambridge University Press, Cambridge

Powers D, Swinton S (1984) Effects of self-study for coachable test item types. J Educ Meas 76:266–278

Ratkovic M, Tingley D (2017) Causal inference through the method of direct estimation. arXiv working paper 1703.05849

Raudenbush SW (2014) Random coefficient models for multi-site randomized trials with inverse probability of treatment weighting. Department of Sociology, University of Chicago, Chicago

Raudenbush SW, Bloom HS (2015) Learning about and from a distribution of program impacts using multi site trials. Am J Eval 36(4):475–499

Robins JM (1986) A new approach to causal inference in mortality studies with a sustained exposure period: application to control of the healthy worker survivor effect. Math Model 7:1393–1512

Robins JM (1997) Causal inference from complex longitudinal data. In: Latent variable modeling and applications to causality. Springer, pp 69–117

Robins JM, Greenland S (1992) Identifiability and exchangeability for direct and indirect effects. Epidemiology 3:143–155

Rosenbaum PR, Rubin DB (1983a) Assessing sensitivity to an unobserved binary covariate in an observational study with binary outcome. J R Stat Soc Ser B 45:212–218

Rosenbaum PR, Rubin DB (1983b) The central role of the propensity score in observational studies for causal effects. Biometrika 70:41–55

Rosenbaum PR (1988) Permutation tests for matched pairs. Appl Stat 37:401–411

Rosenbaum PR (2002) Observational studies. Springer, New York

Rubin DB (1974) Estimating causal effects of treatments in randomized and nonrandomized studies. J Educ Psychol 66:688–701

Rubin DB (1975) Bayesian inference for causality: the importance of randomization. Proc Soc Stat Sect Am Stat Assoc:233–239

Rubin DB (1976) Inference and missing data. Biometrika 63(3):581–592

Rubin DB (1977) Assignment to treatment group on the basis of a covariate. J Educ Stat 2(1):1–26

Rubin DB (1978) Bayesian inference for causal effects: the role of randomization. Ann Stat 6:34–58

Rubin DB (1979) Discussion of “conditional independence in statistical theory” by A.P. Dawid. J Roy Stat Soc Series B 41:27–28

Rubin DB (1980) Discussion of “randomization analysis of experimental data in the Fisher randomization test” by Basu. J Am Stat Assoc 75:591–593

Rubin DB (1990) Formal modes of statistical inference for causal effects. J Stat Plan Infer 25:279–292

Rubin DB (1998) More powerful randomization-based p-values in double-blind trials with non-compliance. Stat Med 17:371–385

Rubin DB (2006) Causal inference through potential outcomes and principal stratification: application to studies with censoring due to death. Stat Sci 21(3):299–309

Rubin DB (2008a) Comment: the design and analysis of gold standard randomized experiments. J Am Stat Assoc 103:1350–1353

Rubin DB (2008b) Statistical inference for causal effects, with emphasis on application in epidemiology and medical statistics. Handbook Stat 27:28–62

Rubin DB, Zell ER (2010) Dealing with noncompliance and missing outcomes in a randomized trial using Bayesian technology: prevention of perinatal sepsis clinical trial, Soweto, South Africa. Stat Methodol 7:338–350

Shadish W, Cook T, Campbell D (2002) Experimental and quasi-experimental designs for generalized causal inference. Houghton Mifflin

Sobel ME (2006) What do randomized studies of housing mobility demonstrate? Causal inference in the face of interference. J Am Stat Assoc 101(476):1398–1407

Sobel ME, Muthen B (2012) Compliance mixture modelling with a zero-effect complier class and missing data. Biometrics 68:1037–1045

Tamer E (2010) Partial identification in econometrics. Ann Rev Econ 2(1):167–195

Tchetgen Tchetgen EJ, VanderWeele TJ (2012) On causal inference in the presence of interference. Stat Methods Med Res 21(1):55–75

Tian L, Alizadeh AA, Gentles AJ, Tibshirani R (2014) A simple method for estimating interactions between a treatment and a large number of covariates. J Am Stat Assoc 109(508):1517–1532

Tibshirani R (1996) Regression shrinkage and selection via the lasso. J Roy Stat Soc: Series B (Methodol) 58(1):267–288

VanderWeele TJ (2008) Simple relations between principal stratification and direct and indirect effects. Stat Probab Lett 78:2957–2962

Vapnik VN (1999) An overview of statistical learning theory. IEEE Trans Neural Netw 10(5):988–999

Vapnik VN (2013) The nature of statistical learning theory. Springer Science & Business Media

Wager S, Athey S (2018) Estimation and inference of heterogeneous treatment effects using random forests. J Am Stat Assoc 113(523):1228–1242

Xu G, Wu Z, Murphy SA (2018) Micro-randomized trial. In: Wiley StatsRef: statistics reference online, pp 1–6

Yang F, Ding P (2018) Using survival information in truncation by death problems without the monotonicity assumption. Biometrics 74(4):1232–1239

Yau LH, Little RJ (2001) Inference for the complier-average causal effect from longitudinal data subject to non-compliance and missing data, with application to a job training assessment for the unemployed. J Am Stat Assoc

Yuan LH, Feller A, Miratrix LW (2019) Identifying and estimating principal causal effects in a multi-site trial of early college high schools. Ann Appl Stat 13(3):1348–1369

Zhang JL, Rubin DB (2003) Estimation of causal effects via principal stratification when some outcomes are truncated by death. J Educ Behav Stat 28(4):353–368

Zhang JL, Rubin DB, Mealli F (2008) Evaluating the effects of job training programs on wages through principal stratification. Adv Econ 21:117–145

Zhang JL, Rubin DB, Mealli F (2009) Likelihood-based analysis of causal effects of job-training programs using principal stratification. J Am Stat Assoc 104:166–176

Zhou Q, Ernst PA, Morgan KL, Rubin DB, Zhang A (2018) Sequential re-randomization. Biometrika 105(3):745–752

Zigler CM, Belin TR (2012) A Bayesian approach to improved estimation of causal effect predictiveness for a principal surrogate endpoint. Biometrics 68(3):922–932

Acknowledgments

Responsible Section Editor: Alfonso Flores-Lagunes. The chapter has benefited from valuable comments by the editors and anonymous referees. The chapter was supported by the Department of Statistics, Computer Science, Applications of the University of Florence (the authors’ affiliation) through funding received as a Department of Excellence 2018-2022 from the Italian Ministry of Education, University and Research (MIUR). There is no conflict of interest.

Author information

Authors and affiliations.

Department of Statistics, Computer Science, Applications “Giuseppe Parenti”, University of Florence, Florence Center for Data Science, Florence, Italy

Alessandra Mattei, Fabrizia Mealli & Anahita Nodehi

Corresponding author

Correspondence to Fabrizia Mealli.

Editor information

Editors and affiliations.

UNU-MERIT & Princeton University, Maastricht, The Netherlands

Klaus F. Zimmermann

Section Editor information

Center for Policy Research; Institute of Labor Economics (IZA) and Global Labor Organization (GLO), Syracuse University, Syracuse, NY, USA

Alfonso Flores-Lagunes

Copyright information

© 2021 Springer Nature Switzerland AG

About this entry

Cite this entry.

Mattei, A., Mealli, F., Nodehi, A. (2021). Design and Analysis of Experiments. In: Zimmermann, K.F. (eds) Handbook of Labor, Human Resources and Population Economics. Springer, Cham. https://doi.org/10.1007/978-3-319-57365-6_40-1

DOI: https://doi.org/10.1007/978-3-319-57365-6_40-1

Received: 02 August 2021

Accepted: 04 August 2021

Published: 12 January 2022

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-57365-6

Online ISBN: 978-3-319-57365-6

eBook Packages: Springer Reference Economics and Finance; Reference Module Humanities and Social Sciences; Reference Module Business, Economics and Social Sciences

Research design