When you choose to publish with PLOS, your research makes an impact. Make your work accessible to all, without restrictions, and accelerate scientific discovery with options like preprints and published peer review that make your work more Open.

- PLOS Biology

- PLOS Climate

- PLOS Complex Systems

- PLOS Computational Biology

- PLOS Digital Health

- PLOS Genetics

- PLOS Global Public Health

- PLOS Medicine

- PLOS Mental Health

- PLOS Neglected Tropical Diseases

- PLOS Pathogens

- PLOS Sustainability and Transformation

- PLOS Collections

- How to Write Discussions and Conclusions

The discussion section contains the results and outcomes of a study. An effective discussion informs readers what can be learned from your experiment and provides context for the results.

What makes an effective discussion?

When you’re ready to write your discussion, you’ve already introduced the purpose of your study and provided an in-depth description of the methodology. The discussion informs readers about the larger implications of your study based on the results. Highlighting these implications while not overstating the findings can be challenging, especially when you’re submitting to a journal that selects articles based on novelty or potential impact. Regardless of what journal you are submitting to, the discussion section always serves the same purpose: concluding what your study results actually mean.

A successful discussion section puts your findings in context. It should include:

- the results of your research,

- a discussion of related research, and

- a comparison between your results and initial hypothesis.

Tip: Not all journals share the same naming conventions.

You can apply the advice in this article to the conclusion, results or discussion sections of your manuscript.

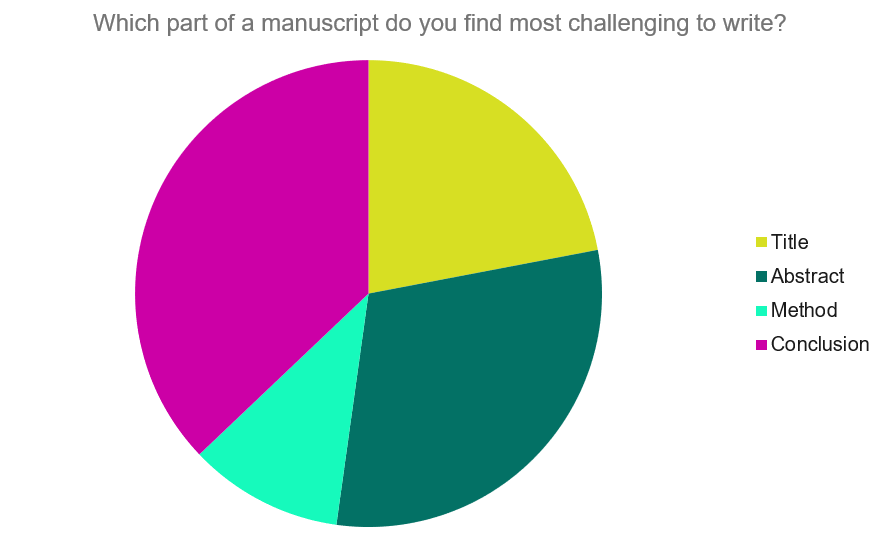

Our Early Career Researcher community tells us that the conclusion is often considered the most difficult aspect of a manuscript to write. To help, this guide provides questions to ask yourself, a basic structure to model your discussion off of and examples from published manuscripts.

Questions to ask yourself:

- Was my hypothesis correct?

- If my hypothesis is partially correct or entirely different, what can be learned from the results?

- How do the conclusions reshape or add onto the existing knowledge in the field? What does previous research say about the topic?

- Why are the results important or relevant to your audience? Do they add further evidence to a scientific consensus or disprove prior studies?

- How can future research build on these observations? What are the key experiments that must be done?

- What is the “take-home” message you want your reader to leave with?

How to structure a discussion

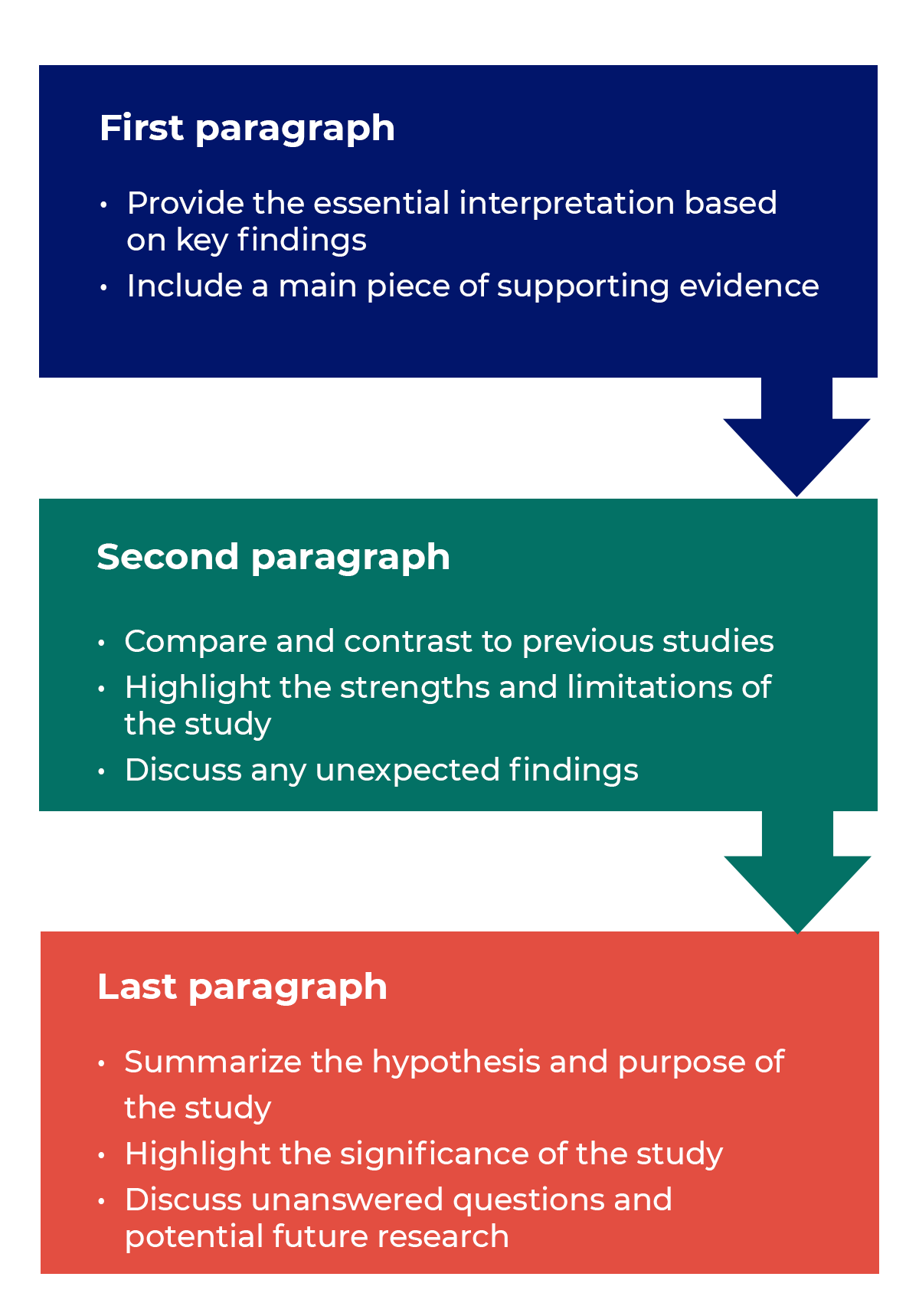

Trying to fit a complete discussion into a single paragraph can add unnecessary stress to the writing process. If possible, you’ll want to give yourself two or three paragraphs to give the reader a comprehensive understanding of your study as a whole. Here’s one way to structure an effective discussion:

Writing Tips

While the above sections can help you brainstorm and structure your discussion, there are many common mistakes that writers revert to when having difficulties with their paper. Writing a discussion can be a delicate balance between summarizing your results, providing proper context for your research and avoiding introducing new information. Remember that your paper should be both confident and honest about the results!

- Read the journal’s guidelines on the discussion and conclusion sections. If possible, learn about the guidelines before writing the discussion to ensure you’re writing to meet their expectations.

- Begin with a clear statement of the principal findings. This will reinforce the main take-away for the reader and set up the rest of the discussion.

- Explain why the outcomes of your study are important to the reader. Discuss the implications of your findings realistically based on previous literature, highlighting both the strengths and limitations of the research.

- State whether the results prove or disprove your hypothesis. If your hypothesis was disproved, what might be the reasons?

- Introduce new or expanded ways to think about the research question. Indicate what next steps can be taken to further pursue any unresolved questions.

- If dealing with a contemporary or ongoing problem, such as climate change, discuss possible consequences if the problem is avoided.

- Be concise. Adding unnecessary detail can distract from the main findings.

Don’t

- Rewrite your abstract. Statements with “we investigated” or “we studied” generally do not belong in the discussion.

- Include new arguments or evidence not previously discussed. Necessary information and evidence should be introduced in the main body of the paper.

- Apologize. Even if your research contains significant limitations, don’t undermine your authority by including statements that doubt your methodology or execution.

- Shy away from speaking on limitations or negative results. Including limitations and negative results will give readers a complete understanding of the presented research. Potential limitations include sources of potential bias, threats to internal or external validity, barriers to implementing an intervention and other issues inherent to the study design.

- Overstate the importance of your findings. Making grand statements about how a study will fully resolve large questions can lead readers to doubt the success of the research.

Snippets of Effective Discussions:

Consumer-based actions to reduce plastic pollution in rivers: A multi-criteria decision analysis approach

Identifying reliable indicators of fitness in polar bears

- How to Write a Great Title

- How to Write an Abstract

- How to Write Your Methods

- How to Report Statistics

- How to Edit Your Work

The contents of the Peer Review Center are also available as a live, interactive training session, complete with slides, talking points, and activities. …

The contents of the Writing Center are also available as a live, interactive training session, complete with slides, talking points, and activities. …

There’s a lot to consider when deciding where to submit your work. Learn how to choose a journal that will help your study reach its audience, while reflecting your values as a researcher…

- USC Libraries

- Research Guides

Organizing Your Social Sciences Research Paper

- 8. The Discussion

- Purpose of Guide

- Design Flaws to Avoid

- Independent and Dependent Variables

- Glossary of Research Terms

- Reading Research Effectively

- Narrowing a Topic Idea

- Broadening a Topic Idea

- Extending the Timeliness of a Topic Idea

- Academic Writing Style

- Applying Critical Thinking

- Choosing a Title

- Making an Outline

- Paragraph Development

- Research Process Video Series

- Executive Summary

- The C.A.R.S. Model

- Background Information

- The Research Problem/Question

- Theoretical Framework

- Citation Tracking

- Content Alert Services

- Evaluating Sources

- Primary Sources

- Secondary Sources

- Tiertiary Sources

- Scholarly vs. Popular Publications

- Qualitative Methods

- Quantitative Methods

- Insiderness

- Using Non-Textual Elements

- Limitations of the Study

- Common Grammar Mistakes

- Writing Concisely

- Avoiding Plagiarism

- Footnotes or Endnotes?

- Further Readings

- Generative AI and Writing

- USC Libraries Tutorials and Other Guides

- Bibliography

The purpose of the discussion section is to interpret and describe the significance of your findings in relation to what was already known about the research problem being investigated and to explain any new understanding or insights that emerged as a result of your research. The discussion will always connect to the introduction by way of the research questions or hypotheses you posed and the literature you reviewed, but the discussion does not simply repeat or rearrange the first parts of your paper; the discussion clearly explains how your study advanced the reader's understanding of the research problem from where you left them at the end of your review of prior research.

Annesley, Thomas M. “The Discussion Section: Your Closing Argument.” Clinical Chemistry 56 (November 2010): 1671-1674; Peacock, Matthew. “Communicative Moves in the Discussion Section of Research Articles.” System 30 (December 2002): 479-497.

Importance of a Good Discussion

The discussion section is often considered the most important part of your research paper because it:

- Most effectively demonstrates your ability as a researcher to think critically about an issue, to develop creative solutions to problems based upon a logical synthesis of the findings, and to formulate a deeper, more profound understanding of the research problem under investigation;

- Presents the underlying meaning of your research, notes possible implications in other areas of study, and explores possible improvements that can be made in order to further develop the concerns of your research;

- Highlights the importance of your study and how it can contribute to understanding the research problem within the field of study;

- Presents how the findings from your study revealed and helped fill gaps in the literature that had not been previously exposed or adequately described; and,

- Engages the reader in thinking critically about issues based on an evidence-based interpretation of findings; it is not governed strictly by objective reporting of information.

Annesley Thomas M. “The Discussion Section: Your Closing Argument.” Clinical Chemistry 56 (November 2010): 1671-1674; Bitchener, John and Helen Basturkmen. “Perceptions of the Difficulties of Postgraduate L2 Thesis Students Writing the Discussion Section.” Journal of English for Academic Purposes 5 (January 2006): 4-18; Kretchmer, Paul. Fourteen Steps to Writing an Effective Discussion Section. San Francisco Edit, 2003-2008.

Structure and Writing Style

I. General Rules

These are the general rules you should adopt when composing your discussion of the results :

- Do not be verbose or repetitive; be concise and make your points clearly

- Avoid the use of jargon or undefined technical language

- Follow a logical stream of thought; in general, interpret and discuss the significance of your findings in the same sequence you described them in your results section [a notable exception is to begin by highlighting an unexpected result or a finding that can grab the reader's attention]

- Use the present verb tense, especially for established facts; however, refer to specific works or prior studies in the past tense

- If needed, use subheadings to help organize your discussion or to categorize your interpretations into themes

II. The Content

The content of the discussion section of your paper most often includes :

- Explanation of results : Comment on whether or not the results were expected for each set of findings; go into greater depth to explain findings that were unexpected or especially profound. If appropriate, note any unusual or unanticipated patterns or trends that emerged from your results and explain their meaning in relation to the research problem.

- References to previous research : Either compare your results with the findings from other studies or use the studies to support a claim. This can include re-visiting key sources already cited in your literature review section, or, save them to cite later in the discussion section if they are more important to compare with your results instead of being a part of the general literature review of prior research used to provide context and background information. Note that you can make this decision to highlight specific studies after you have begun writing the discussion section.

- Deduction : A claim for how the results can be applied more generally. For example, describing lessons learned, proposing recommendations that can help improve a situation, or highlighting best practices.

- Hypothesis : A more general claim or possible conclusion arising from the results [which may be proved or disproved in subsequent research]. This can be framed as new research questions that emerged as a consequence of your analysis.

III. Organization and Structure

Keep the following sequential points in mind as you organize and write the discussion section of your paper:

- Think of your discussion as an inverted pyramid. Organize the discussion from the general to the specific, linking your findings to the literature, then to theory, then to practice [if appropriate].

- Use the same key terms, narrative style, and verb tense [present] that you used when describing the research problem in your introduction.

- Begin by briefly re-stating the research problem you were investigating and answer all of the research questions underpinning the problem that you posed in the introduction.

- Describe the patterns, principles, and relationships shown by each major findings and place them in proper perspective. The sequence of this information is important; first state the answer, then the relevant results, then cite the work of others. If appropriate, refer the reader to a figure or table to help enhance the interpretation of the data [either within the text or as an appendix].

- Regardless of where it's mentioned, a good discussion section includes analysis of any unexpected findings. This part of the discussion should begin with a description of the unanticipated finding, followed by a brief interpretation as to why you believe it appeared and, if necessary, its possible significance in relation to the overall study. If more than one unexpected finding emerged during the study, describe each of them in the order they appeared as you gathered or analyzed the data. As noted, the exception to discussing findings in the same order you described them in the results section would be to begin by highlighting the implications of a particularly unexpected or significant finding that emerged from the study, followed by a discussion of the remaining findings.

- Before concluding the discussion, identify potential limitations and weaknesses if you do not plan to do so in the conclusion of the paper. Comment on their relative importance in relation to your overall interpretation of the results and, if necessary, note how they may affect the validity of your findings. Avoid using an apologetic tone; however, be honest and self-critical [e.g., in retrospect, had you included a particular question in a survey instrument, additional data could have been revealed].

- The discussion section should end with a concise summary of the principal implications of the findings regardless of their significance. Give a brief explanation about why you believe the findings and conclusions of your study are important and how they support broader knowledge or understanding of the research problem. This can be followed by any recommendations for further research. However, do not offer recommendations which could have been easily addressed within the study. This would demonstrate to the reader that you have inadequately examined and interpreted the data.

IV. Overall Objectives

The objectives of your discussion section should include the following: I. Reiterate the Research Problem/State the Major Findings

Briefly reiterate the research problem or problems you are investigating and the methods you used to investigate them, then move quickly to describe the major findings of the study. You should write a direct, declarative, and succinct proclamation of the study results, usually in one paragraph.

II. Explain the Meaning of the Findings and Why They are Important

No one has thought as long and hard about your study as you have. Systematically explain the underlying meaning of your findings and state why you believe they are significant. After reading the discussion section, you want the reader to think critically about the results and why they are important. You don’t want to force the reader to go through the paper multiple times to figure out what it all means. If applicable, begin this part of the section by repeating what you consider to be your most significant or unanticipated finding first, then systematically review each finding. Otherwise, follow the general order you reported the findings presented in the results section.

III. Relate the Findings to Similar Studies

No study in the social sciences is so novel or possesses such a restricted focus that it has absolutely no relation to previously published research. The discussion section should relate your results to those found in other studies, particularly if questions raised from prior studies served as the motivation for your research. This is important because comparing and contrasting the findings of other studies helps to support the overall importance of your results and it highlights how and in what ways your study differs from other research about the topic. Note that any significant or unanticipated finding is often because there was no prior research to indicate the finding could occur. If there is prior research to indicate this, you need to explain why it was significant or unanticipated. IV. Consider Alternative Explanations of the Findings

It is important to remember that the purpose of research in the social sciences is to discover and not to prove . When writing the discussion section, you should carefully consider all possible explanations for the study results, rather than just those that fit your hypothesis or prior assumptions and biases. This is especially important when describing the discovery of significant or unanticipated findings.

V. Acknowledge the Study’s Limitations

It is far better for you to identify and acknowledge your study’s limitations than to have them pointed out by your professor! Note any unanswered questions or issues your study could not address and describe the generalizability of your results to other situations. If a limitation is applicable to the method chosen to gather information, then describe in detail the problems you encountered and why. VI. Make Suggestions for Further Research

You may choose to conclude the discussion section by making suggestions for further research [as opposed to offering suggestions in the conclusion of your paper]. Although your study can offer important insights about the research problem, this is where you can address other questions related to the problem that remain unanswered or highlight hidden issues that were revealed as a result of conducting your research. You should frame your suggestions by linking the need for further research to the limitations of your study [e.g., in future studies, the survey instrument should include more questions that ask..."] or linking to critical issues revealed from the data that were not considered initially in your research.

NOTE: Besides the literature review section, the preponderance of references to sources is usually found in the discussion section . A few historical references may be helpful for perspective, but most of the references should be relatively recent and included to aid in the interpretation of your results, to support the significance of a finding, and/or to place a finding within a particular context. If a study that you cited does not support your findings, don't ignore it--clearly explain why your research findings differ from theirs.

V. Problems to Avoid

- Do not waste time restating your results . Should you need to remind the reader of a finding to be discussed, use "bridge sentences" that relate the result to the interpretation. An example would be: “In the case of determining available housing to single women with children in rural areas of Texas, the findings suggest that access to good schools is important...," then move on to further explaining this finding and its implications.

- As noted, recommendations for further research can be included in either the discussion or conclusion of your paper, but do not repeat your recommendations in the both sections. Think about the overall narrative flow of your paper to determine where best to locate this information. However, if your findings raise a lot of new questions or issues, consider including suggestions for further research in the discussion section.

- Do not introduce new results in the discussion section. Be wary of mistaking the reiteration of a specific finding for an interpretation because it may confuse the reader. The description of findings [results section] and the interpretation of their significance [discussion section] should be distinct parts of your paper. If you choose to combine the results section and the discussion section into a single narrative, you must be clear in how you report the information discovered and your own interpretation of each finding. This approach is not recommended if you lack experience writing college-level research papers.

- Use of the first person pronoun is generally acceptable. Using first person singular pronouns can help emphasize a point or illustrate a contrasting finding. However, keep in mind that too much use of the first person can actually distract the reader from the main points [i.e., I know you're telling me this--just tell me!].

Analyzing vs. Summarizing. Department of English Writing Guide. George Mason University; Discussion. The Structure, Format, Content, and Style of a Journal-Style Scientific Paper. Department of Biology. Bates College; Hess, Dean R. "How to Write an Effective Discussion." Respiratory Care 49 (October 2004); Kretchmer, Paul. Fourteen Steps to Writing to Writing an Effective Discussion Section. San Francisco Edit, 2003-2008; The Lab Report. University College Writing Centre. University of Toronto; Sauaia, A. et al. "The Anatomy of an Article: The Discussion Section: "How Does the Article I Read Today Change What I Will Recommend to my Patients Tomorrow?” The Journal of Trauma and Acute Care Surgery 74 (June 2013): 1599-1602; Research Limitations & Future Research . Lund Research Ltd., 2012; Summary: Using it Wisely. The Writing Center. University of North Carolina; Schafer, Mickey S. Writing the Discussion. Writing in Psychology course syllabus. University of Florida; Yellin, Linda L. A Sociology Writer's Guide . Boston, MA: Allyn and Bacon, 2009.

Writing Tip

Don’t Over-Interpret the Results!

Interpretation is a subjective exercise. As such, you should always approach the selection and interpretation of your findings introspectively and to think critically about the possibility of judgmental biases unintentionally entering into discussions about the significance of your work. With this in mind, be careful that you do not read more into the findings than can be supported by the evidence you have gathered. Remember that the data are the data: nothing more, nothing less.

MacCoun, Robert J. "Biases in the Interpretation and Use of Research Results." Annual Review of Psychology 49 (February 1998): 259-287; Ward, Paulet al, editors. The Oxford Handbook of Expertise . Oxford, UK: Oxford University Press, 2018.

Another Writing Tip

Don't Write Two Results Sections!

One of the most common mistakes that you can make when discussing the results of your study is to present a superficial interpretation of the findings that more or less re-states the results section of your paper. Obviously, you must refer to your results when discussing them, but focus on the interpretation of those results and their significance in relation to the research problem, not the data itself.

Azar, Beth. "Discussing Your Findings." American Psychological Association gradPSYCH Magazine (January 2006).

Yet Another Writing Tip

Avoid Unwarranted Speculation!

The discussion section should remain focused on the findings of your study. For example, if the purpose of your research was to measure the impact of foreign aid on increasing access to education among disadvantaged children in Bangladesh, it would not be appropriate to speculate about how your findings might apply to populations in other countries without drawing from existing studies to support your claim or if analysis of other countries was not a part of your original research design. If you feel compelled to speculate, do so in the form of describing possible implications or explaining possible impacts. Be certain that you clearly identify your comments as speculation or as a suggestion for where further research is needed. Sometimes your professor will encourage you to expand your discussion of the results in this way, while others don’t care what your opinion is beyond your effort to interpret the data in relation to the research problem.

- << Previous: Using Non-Textual Elements

- Next: Limitations of the Study >>

- Last Updated: May 18, 2024 11:38 AM

- URL: https://libguides.usc.edu/writingguide

Library Guides

Dissertations 5: findings, analysis and discussion: home.

- Results/Findings

Alternative Structures

The time has come to show and discuss the findings of your research. How to structure this part of your dissertation?

Dissertations can have different structures, as you can see in the dissertation structure guide.

Dissertations organised by sections

Many dissertations are organised by sections. In this case, we suggest three options. Note that, if within your course you have been instructed to use a specific structure, you should do that. Also note that sometimes there is considerable freedom on the structure, so you can come up with other structures too.

A) More common for scientific dissertations and quantitative methods:

- Results chapter

- Discussion chapter

Example:

- Introduction

- Literature review

- Methodology

- (Recommendations)

if you write a scientific dissertation, or anyway using quantitative methods, you will have some objective results that you will present in the Results chapter. You will then interpret the results in the Discussion chapter.

B) More common for qualitative methods

- Analysis chapter. This can have more descriptive/thematic subheadings.

- Discussion chapter. This can have more descriptive/thematic subheadings.

- Case study of Company X (fashion brand) environmental strategies

- Successful elements

- Lessons learnt

- Criticisms of Company X environmental strategies

- Possible alternatives

C) More common for qualitative methods

- Analysis and discussion chapter. This can have more descriptive/thematic titles.

- Case study of Company X (fashion brand) environmental strategies

If your dissertation uses qualitative methods, it is harder to identify and report objective data. Instead, it may be more productive and meaningful to present the findings in the same sections where you also analyse, and possibly discuss, them. You will probably have different sections dealing with different themes. The different themes can be subheadings of the Analysis and Discussion (together or separate) chapter(s).

Thematic dissertations

If the structure of your dissertation is thematic , you will have several chapters analysing and discussing the issues raised by your research. The chapters will have descriptive/thematic titles.

- Background on the conflict in Yemen (2004-present day)

- Classification of the conflict in international law

- International law violations

- Options for enforcement of international law

- Next: Results/Findings >>

- Last Updated: Aug 4, 2023 2:17 PM

- URL: https://libguides.westminster.ac.uk/c.php?g=696975

CONNECT WITH US

How to Write the Discussion Section of a Research Paper

The discussion section of a research paper analyzes and interprets the findings, provides context, compares them with previous studies, identifies limitations, and suggests future research directions.

Updated on September 15, 2023

Structure your discussion section right, and you’ll be cited more often while doing a greater service to the scientific community. So, what actually goes into the discussion section? And how do you write it?

The discussion section of your research paper is where you let the reader know how your study is positioned in the literature, what to take away from your paper, and how your work helps them. It can also include your conclusions and suggestions for future studies.

First, we’ll define all the parts of your discussion paper, and then look into how to write a strong, effective discussion section for your paper or manuscript.

Discussion section: what is it, what it does

The discussion section comes later in your paper, following the introduction, methods, and results. The discussion sets up your study’s conclusions. Its main goals are to present, interpret, and provide a context for your results.

What is it?

The discussion section provides an analysis and interpretation of the findings, compares them with previous studies, identifies limitations, and suggests future directions for research.

This section combines information from the preceding parts of your paper into a coherent story. By this point, the reader already knows why you did your study (introduction), how you did it (methods), and what happened (results). In the discussion, you’ll help the reader connect the ideas from these sections.

Why is it necessary?

The discussion provides context and interpretations for the results. It also answers the questions posed in the introduction. While the results section describes your findings, the discussion explains what they say. This is also where you can describe the impact or implications of your research.

Adds context for your results

Most research studies aim to answer a question, replicate a finding, or address limitations in the literature. These goals are first described in the introduction. However, in the discussion section, the author can refer back to them to explain how the study's objective was achieved.

Shows what your results actually mean and real-world implications

The discussion can also describe the effect of your findings on research or practice. How are your results significant for readers, other researchers, or policymakers?

What to include in your discussion (in the correct order)

A complete and effective discussion section should at least touch on the points described below.

Summary of key findings

The discussion should begin with a brief factual summary of the results. Concisely overview the main results you obtained.

Begin with key findings with supporting evidence

Your results section described a list of findings, but what message do they send when you look at them all together?

Your findings were detailed in the results section, so there’s no need to repeat them here, but do provide at least a few highlights. This will help refresh the reader’s memory and help them focus on the big picture.

Read the first paragraph of the discussion section in this article (PDF) for an example of how to start this part of your paper. Notice how the authors break down their results and follow each description sentence with an explanation of why each finding is relevant.

State clearly and concisely

Following a clear and direct writing style is especially important in the discussion section. After all, this is where you will make some of the most impactful points in your paper. While the results section often contains technical vocabulary, such as statistical terms, the discussion section lets you describe your findings more clearly.

Interpretation of results

Once you’ve given your reader an overview of your results, you need to interpret those results. In other words, what do your results mean? Discuss the findings’ implications and significance in relation to your research question or hypothesis.

Analyze and interpret your findings

Look into your findings and explore what’s behind them or what may have caused them. If your introduction cited theories or studies that could explain your findings, use these sources as a basis to discuss your results.

For example, look at the second paragraph in the discussion section of this article on waggling honey bees. Here, the authors explore their results based on information from the literature.

Unexpected or contradictory results

Sometimes, your findings are not what you expect. Here’s where you describe this and try to find a reason for it. Could it be because of the method you used? Does it have something to do with the variables analyzed? Comparing your methods with those of other similar studies can help with this task.

Context and comparison with previous work

Refer to related studies to place your research in a larger context and the literature. Compare and contrast your findings with existing literature, highlighting similarities, differences, and/or contradictions.

How your work compares or contrasts with previous work

Studies with similar findings to yours can be cited to show the strength of your findings. Information from these studies can also be used to help explain your results. Differences between your findings and others in the literature can also be discussed here.

How to divide this section into subsections

If you have more than one objective in your study or many key findings, you can dedicate a separate section to each of these. Here’s an example of this approach. You can see that the discussion section is divided into topics and even has a separate heading for each of them.

Limitations

Many journals require you to include the limitations of your study in the discussion. Even if they don’t, there are good reasons to mention these in your paper.

Why limitations don’t have a negative connotation

A study’s limitations are points to be improved upon in future research. While some of these may be flaws in your method, many may be due to factors you couldn’t predict.

Examples include time constraints or small sample sizes. Pointing this out will help future researchers avoid or address these issues. This part of the discussion can also include any attempts you have made to reduce the impact of these limitations, as in this study .

How limitations add to a researcher's credibility

Pointing out the limitations of your study demonstrates transparency. It also shows that you know your methods well and can conduct a critical assessment of them.

Implications and significance

The final paragraph of the discussion section should contain the take-home messages for your study. It can also cite the “strong points” of your study, to contrast with the limitations section.

Restate your hypothesis

Remind the reader what your hypothesis was before you conducted the study.

How was it proven or disproven?

Identify your main findings and describe how they relate to your hypothesis.

How your results contribute to the literature

Were you able to answer your research question? Or address a gap in the literature?

Future implications of your research

Describe the impact that your results may have on the topic of study. Your results may show, for instance, that there are still limitations in the literature for future studies to address. There may be a need for studies that extend your findings in a specific way. You also may need additional research to corroborate your findings.

Sample discussion section

This fictitious example covers all the aspects discussed above. Your actual discussion section will probably be much longer, but you can read this to get an idea of everything your discussion should cover.

Our results showed that the presence of cats in a household is associated with higher levels of perceived happiness by its human occupants. These findings support our hypothesis and demonstrate the association between pet ownership and well-being.

The present findings align with those of Bao and Schreer (2016) and Hardie et al. (2023), who observed greater life satisfaction in pet owners relative to non-owners. Although the present study did not directly evaluate life satisfaction, this factor may explain the association between happiness and cat ownership observed in our sample.

Our findings must be interpreted in light of some limitations, such as the focus on cat ownership only rather than pets as a whole. This may limit the generalizability of our results.

Nevertheless, this study had several strengths. These include its strict exclusion criteria and use of a standardized assessment instrument to investigate the relationships between pets and owners. These attributes bolster the accuracy of our results and reduce the influence of confounding factors, increasing the strength of our conclusions. Future studies may examine the factors that mediate the association between pet ownership and happiness to better comprehend this phenomenon.

This brief discussion begins with a quick summary of the results and hypothesis. The next paragraph cites previous research and compares its findings to those of this study. Information from previous studies is also used to help interpret the findings. After discussing the results of the study, some limitations are pointed out. The paper also explains why these limitations may influence the interpretation of results. Then, final conclusions are drawn based on the study, and directions for future research are suggested.

How to make your discussion flow naturally

If you find writing in scientific English challenging, the discussion and conclusions are often the hardest parts of the paper to write. That’s because you’re not just listing up studies, methods, and outcomes. You’re actually expressing your thoughts and interpretations in words.

- How formal should it be?

- What words should you use, or not use?

- How do you meet strict word limits, or make it longer and more informative?

Always give it your best, but sometimes a helping hand can, well, help. Getting a professional edit can help clarify your work’s importance while improving the English used to explain it. When readers know the value of your work, they’ll cite it. We’ll assign your study to an expert editor knowledgeable in your area of research. Their work will clarify your discussion, helping it to tell your story. Find out more about AJE Editing.

Adam Goulston, PsyD, MS, MBA, MISD, ELS

Science Marketing Consultant

See our "Privacy Policy"

Ensure your structure and ideas are consistent and clearly communicated

Pair your Premium Editing with our add-on service Presubmission Review for an overall assessment of your manuscript.

How To Write The Discussion Chapter

A Simple Explainer With Examples + Free Template

By: Jenna Crossley (PhD) | Reviewed By: Dr. Eunice Rautenbach | August 2021

If you’re reading this, chances are you’ve reached the discussion chapter of your thesis or dissertation and are looking for a bit of guidance. Well, you’ve come to the right place ! In this post, we’ll unpack and demystify the typical discussion chapter in straightforward, easy to understand language, with loads of examples .

Overview: The Discussion Chapter

- What the discussion chapter is

- What to include in your discussion

- How to write up your discussion

- A few tips and tricks to help you along the way

- Free discussion template

What (exactly) is the discussion chapter?

The discussion chapter is where you interpret and explain your results within your thesis or dissertation. This contrasts with the results chapter, where you merely present and describe the analysis findings (whether qualitative or quantitative ). In the discussion chapter, you elaborate on and evaluate your research findings, and discuss the significance and implications of your results .

In this chapter, you’ll situate your research findings in terms of your research questions or hypotheses and tie them back to previous studies and literature (which you would have covered in your literature review chapter). You’ll also have a look at how relevant and/or significant your findings are to your field of research, and you’ll argue for the conclusions that you draw from your analysis. Simply put, the discussion chapter is there for you to interact with and explain your research findings in a thorough and coherent manner.

What should I include in the discussion chapter?

First things first: in some studies, the results and discussion chapter are combined into one chapter . This depends on the type of study you conducted (i.e., the nature of the study and methodology adopted), as well as the standards set by the university. So, check in with your university regarding their norms and expectations before getting started. In this post, we’ll treat the two chapters as separate, as this is most common.

Basically, your discussion chapter should analyse , explore the meaning and identify the importance of the data you presented in your results chapter. In the discussion chapter, you’ll give your results some form of meaning by evaluating and interpreting them. This will help answer your research questions, achieve your research aims and support your overall conclusion (s). Therefore, you discussion chapter should focus on findings that are directly connected to your research aims and questions. Don’t waste precious time and word count on findings that are not central to the purpose of your research project.

As this chapter is a reflection of your results chapter, it’s vital that you don’t report any new findings . In other words, you can’t present claims here if you didn’t present the relevant data in the results chapter first. So, make sure that for every discussion point you raise in this chapter, you’ve covered the respective data analysis in the results chapter. If you haven’t, you’ll need to go back and adjust your results chapter accordingly.

If you’re struggling to get started, try writing down a bullet point list everything you found in your results chapter. From this, you can make a list of everything you need to cover in your discussion chapter. Also, make sure you revisit your research questions or hypotheses and incorporate the relevant discussion to address these. This will also help you to see how you can structure your chapter logically.

Need a helping hand?

How to write the discussion chapter

Now that you’ve got a clear idea of what the discussion chapter is and what it needs to include, let’s look at how you can go about structuring this critically important chapter. Broadly speaking, there are six core components that need to be included, and these can be treated as steps in the chapter writing process.

Step 1: Restate your research problem and research questions

The first step in writing up your discussion chapter is to remind your reader of your research problem , as well as your research aim(s) and research questions . If you have hypotheses, you can also briefly mention these. This “reminder” is very important because, after reading dozens of pages, the reader may have forgotten the original point of your research or been swayed in another direction. It’s also likely that some readers skip straight to your discussion chapter from the introduction chapter , so make sure that your research aims and research questions are clear.

Step 2: Summarise your key findings

Next, you’ll want to summarise your key findings from your results chapter. This may look different for qualitative and quantitative research , where qualitative research may report on themes and relationships, whereas quantitative research may touch on correlations and causal relationships. Regardless of the methodology, in this section you need to highlight the overall key findings in relation to your research questions.

Typically, this section only requires one or two paragraphs , depending on how many research questions you have. Aim to be concise here, as you will unpack these findings in more detail later in the chapter. For now, a few lines that directly address your research questions are all that you need.

Some examples of the kind of language you’d use here include:

- The data suggest that…

- The data support/oppose the theory that…

- The analysis identifies…

These are purely examples. What you present here will be completely dependent on your original research questions, so make sure that you are led by them .

Step 3: Interpret your results

Once you’ve restated your research problem and research question(s) and briefly presented your key findings, you can unpack your findings by interpreting your results. Remember: only include what you reported in your results section – don’t introduce new information.

From a structural perspective, it can be a wise approach to follow a similar structure in this chapter as you did in your results chapter. This would help improve readability and make it easier for your reader to follow your arguments. For example, if you structured you results discussion by qualitative themes, it may make sense to do the same here.

Alternatively, you may structure this chapter by research questions, or based on an overarching theoretical framework that your study revolved around. Every study is different, so you’ll need to assess what structure works best for you.

When interpreting your results, you’ll want to assess how your findings compare to those of the existing research (from your literature review chapter). Even if your findings contrast with the existing research, you need to include these in your discussion. In fact, those contrasts are often the most interesting findings . In this case, you’d want to think about why you didn’t find what you were expecting in your data and what the significance of this contrast is.

Here are a few questions to help guide your discussion:

- How do your results relate with those of previous studies ?

- If you get results that differ from those of previous studies, why may this be the case?

- What do your results contribute to your field of research?

- What other explanations could there be for your findings?

When interpreting your findings, be careful not to draw conclusions that aren’t substantiated . Every claim you make needs to be backed up with evidence or findings from the data (and that data needs to be presented in the previous chapter – results). This can look different for different studies; qualitative data may require quotes as evidence, whereas quantitative data would use statistical methods and tests. Whatever the case, every claim you make needs to be strongly backed up.

Step 4: Acknowledge the limitations of your study

The fourth step in writing up your discussion chapter is to acknowledge the limitations of the study. These limitations can cover any part of your study , from the scope or theoretical basis to the analysis method(s) or sample. For example, you may find that you collected data from a very small sample with unique characteristics, which would mean that you are unable to generalise your results to the broader population.

For some students, discussing the limitations of their work can feel a little bit self-defeating . This is a misconception, as a core indicator of high-quality research is its ability to accurately identify its weaknesses. In other words, accurately stating the limitations of your work is a strength, not a weakness . All that said, be careful not to undermine your own research. Tell the reader what limitations exist and what improvements could be made, but also remind them of the value of your study despite its limitations.

Step 5: Make recommendations for implementation and future research

Now that you’ve unpacked your findings and acknowledge the limitations thereof, the next thing you’ll need to do is reflect on your study in terms of two factors:

- The practical application of your findings

- Suggestions for future research

The first thing to discuss is how your findings can be used in the real world – in other words, what contribution can they make to the field or industry? Where are these contributions applicable, how and why? For example, if your research is on communication in health settings, in what ways can your findings be applied to the context of a hospital or medical clinic? Make sure that you spell this out for your reader in practical terms, but also be realistic and make sure that any applications are feasible.

The next discussion point is the opportunity for future research . In other words, how can other studies build on what you’ve found and also improve the findings by overcoming some of the limitations in your study (which you discussed a little earlier). In doing this, you’ll want to investigate whether your results fit in with findings of previous research, and if not, why this may be the case. For example, are there any factors that you didn’t consider in your study? What future research can be done to remedy this? When you write up your suggestions, make sure that you don’t just say that more research is needed on the topic, also comment on how the research can build on your study.

Step 6: Provide a concluding summary

Finally, you’ve reached your final stretch. In this section, you’ll want to provide a brief recap of the key findings – in other words, the findings that directly address your research questions . Basically, your conclusion should tell the reader what your study has found, and what they need to take away from reading your report.

When writing up your concluding summary, bear in mind that some readers may skip straight to this section from the beginning of the chapter. So, make sure that this section flows well from and has a strong connection to the opening section of the chapter.

Tips and tricks for an A-grade discussion chapter

Now that you know what the discussion chapter is , what to include and exclude , and how to structure it , here are some tips and suggestions to help you craft a quality discussion chapter.

- When you write up your discussion chapter, make sure that you keep it consistent with your introduction chapter , as some readers will skip from the introduction chapter directly to the discussion chapter. Your discussion should use the same tense as your introduction, and it should also make use of the same key terms.

- Don’t make assumptions about your readers. As a writer, you have hands-on experience with the data and so it can be easy to present it in an over-simplified manner. Make sure that you spell out your findings and interpretations for the intelligent layman.

- Have a look at other theses and dissertations from your institution, especially the discussion sections. This will help you to understand the standards and conventions of your university, and you’ll also get a good idea of how others have structured their discussion chapters. You can also check out our chapter template .

- Avoid using absolute terms such as “These results prove that…”, rather make use of terms such as “suggest” or “indicate”, where you could say, “These results suggest that…” or “These results indicate…”. It is highly unlikely that a dissertation or thesis will scientifically prove something (due to a variety of resource constraints), so be humble in your language.

- Use well-structured and consistently formatted headings to ensure that your reader can easily navigate between sections, and so that your chapter flows logically and coherently.

If you have any questions or thoughts regarding this post, feel free to leave a comment below. Also, if you’re looking for one-on-one help with your discussion chapter (or thesis in general), consider booking a free consultation with one of our highly experienced Grad Coaches to discuss how we can help you.

Psst... there’s more!

This post was based on one of our popular Research Bootcamps . If you're working on a research project, you'll definitely want to check this out ...

You Might Also Like:

36 Comments

Thank you this is helpful!

This is very helpful to me… Thanks a lot for sharing this with us 😊

This has been very helpful indeed. Thank you.

This is actually really helpful, I just stumbled upon it. Very happy that I found it, thank you.

Me too! I was kinda lost on how to approach my discussion chapter. How helpful! Thanks a lot!

This is really good and explicit. Thanks

Thank you, this blog has been such a help.

Thank you. This is very helpful.

Dear sir/madame

Thanks a lot for this helpful blog. Really, it supported me in writing my discussion chapter while I was totally unaware about its structure and method of writing.

With regards

Syed Firoz Ahmad PhD, Research Scholar

I agree so much. This blog was god sent. It assisted me so much while I was totally clueless about the context and the know-how. Now I am fully aware of what I am to do and how I am to do it.

Thanks! This is helpful!

thanks alot for this informative website

Dear Sir/Madam,

Truly, your article was much benefited when i structured my discussion chapter.

Thank you very much!!!

This is helpful for me in writing my research discussion component. I have to copy this text on Microsoft word cause of my weakness that I cannot be able to read the text on screen a long time. So many thanks for this articles.

This was helpful

Thanks Jenna, well explained.

Thank you! This is super helpful.

Thanks very much. I have appreciated the six steps on writing the Discussion chapter which are (i) Restating the research problem and questions (ii) Summarising the key findings (iii) Interpreting the results linked to relating to previous results in positive and negative ways; explaining whay different or same and contribution to field of research and expalnation of findings (iv) Acknowledgeing limitations (v) Recommendations for implementation and future resaerch and finally (vi) Providing a conscluding summary

My two questions are: 1. On step 1 and 2 can it be the overall or you restate and sumamrise on each findings based on the reaerch question? 2. On 4 and 5 do you do the acknowlledgement , recommendations on each research finding or overall. This is not clear from your expalanattion.

Please respond.

This post is very useful. I’m wondering whether practical implications must be introduced in the Discussion section or in the Conclusion section?

Sigh, I never knew a 20 min video could have literally save my life like this. I found this at the right time!!!! Everything I need to know in one video thanks a mil ! OMGG and that 6 step!!!!!! was the cherry on top the cake!!!!!!!!!

Thanks alot.., I have gained much

This piece is very helpful on how to go about my discussion section. I can always recommend GradCoach research guides for colleagues.

Many thanks for this resource. It has been very helpful to me. I was finding it hard to even write the first sentence. Much appreciated.

Thanks so much. Very helpful to know what is included in the discussion section

this was a very helpful and useful information

This is very helpful. Very very helpful. Thanks for sharing this online!

it is very helpfull article, and i will recommend it to my fellow students. Thank you.

Superlative! More grease to your elbows.

Powerful, thank you for sharing.

Wow! Just wow! God bless the day I stumbled upon you guys’ YouTube videos! It’s been truly life changing and anxiety about my report that is due in less than a month has subsided significantly!

Simplified explanation. Well done.

The presentation is enlightening. Thank you very much.

Thanks for the support and guidance

This has been a great help to me and thank you do much

I second that “it is highly unlikely that a dissertation or thesis will scientifically prove something”; although, could you enlighten us on that comment and elaborate more please?

Sure, no problem.

Scientific proof is generally considered a very strong assertion that something is definitively and universally true. In most scientific disciplines, especially within the realms of natural and social sciences, absolute proof is very rare. Instead, researchers aim to provide evidence that supports or rejects hypotheses. This evidence increases or decreases the likelihood that a particular theory is correct, but it rarely proves something in the absolute sense.

Dissertations and theses, as substantial as they are, typically focus on exploring a specific question or problem within a larger field of study. They contribute to a broader conversation and body of knowledge. The aim is often to provide detailed insight, extend understanding, and suggest directions for further research rather than to offer definitive proof. These academic works are part of a cumulative process of knowledge building where each piece of research connects with others to gradually enhance our understanding of complex phenomena.

Furthermore, the rigorous nature of scientific inquiry involves continuous testing, validation, and potential refutation of ideas. What might be considered a “proof” at one point can later be challenged by new evidence or alternative interpretations. Therefore, the language of “proof” is cautiously used in academic circles to maintain scientific integrity and humility.

Submit a Comment Cancel reply

Your email address will not be published. Required fields are marked *

Save my name, email, and website in this browser for the next time I comment.

- Print Friendly

Writing the Dissertation - Guides for Success: The Results and Discussion

- Writing the Dissertation Homepage

- Overview and Planning

- The Literature Review

- The Methodology

- The Results and Discussion

- The Conclusion

- The Abstract

- The Difference

- What to Avoid

Overview of writing the results and discussion

The results and discussion follow on from the methods or methodology chapter of the dissertation. This creates a natural transition from how you designed your study, to what your study reveals, highlighting your own contribution to the research area.

Disciplinary differences

Please note: this guide is not specific to any one discipline. The results and discussion can vary depending on the nature of the research and the expectations of the school or department, so please adapt the following advice to meet the demands of your project and department. Consult your supervisor for further guidance; you can also peruse our Writing Across Subjects guide .

Guide contents

As part of the Writing the Dissertation series, this guide covers the most common conventions of the results and discussion chapters, giving you the necessary knowledge, tips and guidance needed to impress your markers! The sections are organised as follows:

- The Difference - Breaks down the distinctions between the results and discussion chapters.

- Results - Provides a walk-through of common characteristics of the results chapter.

- Discussion - Provides a walk-through of how to approach writing your discussion chapter, including structure.

- What to Avoid - Covers a few frequent mistakes you'll want to...avoid!

- FAQs - Guidance on first- vs. third-person, limitations and more.

- Checklist - Includes a summary of key points and a self-evaluation checklist.

Training and tools

- The Academic Skills team has recorded a Writing the Dissertation workshop series to help you with each section of a standard dissertation, including a video on writing the results and discussion (embedded below).

- The dissertation planner tool can help you think through the timeline for planning, research, drafting and editing.

- iSolutions offers training and a Word template to help you digitally format and structure your dissertation.

Introduction

The results of your study are often followed by a separate chapter of discussion. This is certainly the case with scientific writing. Some dissertations, however, might incorporate both the results and discussion in one chapter. This depends on the nature of your dissertation and the conventions within your school or department. Always follow the guidelines given to you and ask your supervisor for further guidance.

As part of the Writing the Dissertation series, this guide covers the essentials of writing your results and discussion, giving you the necesary knowledge, tips and guidance needed to leave a positive impression on your markers! This guide covers the results and discussion as separate – although interrelated – chapters, as you'll see in the next two tabs. However, you can easily adapt the guidance to suit one single chapter – keep an eye out for some hints on how to do this throughout the guide.

Results or discussion - what's the difference?

To understand what the results and discussion sections are about, we need to clearly define the difference between the two.

The results should provide a clear account of the findings . This is written in a dry and direct manner, simply highlighting the findings as they appear once processed. It’s expected to have tables and graphics, where relevant, to contextualise and illustrate the data.

Rather than simply stating the findings of the study, the discussion interprets the findings to offer a more nuanced understanding of the research. The discussion is similar to the second half of the conclusion because it’s where you consider and formulate a response to the question, ‘what do we now know that we didn’t before?’ (see our Writing the Conclusion guide for more). The discussion achieves this by answering the research questions and responding to any hypotheses proposed. With this in mind, the discussion should be the most insightful chapter or section of your dissertation because it provides the most original insight.

Across the next two tabs of this guide, we will look at the results and discussion chapters separately in more detail.

Writing the results

The results chapter should provide a direct and factual account of the data collected without any interpretation or interrogation of the findings. As this might suggest, the results chapter can be slightly monotonous, particularly for quantitative data. Nevertheless, it’s crucial that you present your results in a clear and direct manner as it provides the necessary detail for your subsequent discussion.

Note: If you’re writing your results and discussion as one chapter, then you can either:

1) write them as distinctly separate sections in the same chapter, with the discussion following on from the results, or...

2) integrate the two throughout by presenting a subset of the results and then discussing that subset in further detail.

Next, we'll explore some of the most important factors to consider when writing your results chapter.

How you structure your results chapter depends on the design and purpose of your study. Here are some possible options for structuring your results chapter (adapted from Glatthorn and Joyner, 2005):

- Chronological – depending on the nature of the study, it might be important to present your results in order of how you collected the data, such as a pretest-posttest design.

- Research method – if you’ve used a mixed-methods approach, you could isolate each research method and instrument employed in the study.

- Research question and/or hypotheses – you could structure your results around your research questions and/or hypotheses, providing you have more than one. However, keep in mind that the results on their own don’t necessarily answer the questions or respond to the hypotheses in a definitive manner. You need to interpret the findings in the discussion chapter to gain a more rounded understanding.

- Variable – you could isolate each variable in your study (where relevant) and specify how and whether the results changed.

Tables and figures

For your results, you are expected to convert your data into tables and figures, particularly when dealing with quantitative data. Making use of tables and figures is a way of contextualising your results within the study. It also helps to visually reinforce your written account of the data. However, make sure you’re only using tables and figures to supplement , rather than replace, your written account of the results (see the 'What to avoid' tab for more on this).

Figures and tables need to be numbered in order of when they appear in the dissertation, and they should be capitalised. You also need to make direct reference to them in the text, which you can do (with some variation) in one of the following ways:

Figure 1 shows…

The results of the test (see Figure 1) demonstrate…

The actual figures and tables themselves also need to be accompanied by a caption that briefly outlines what is displayed. For example:

Table 1. Variables of the regression model

Table captions normally appear above the table, whilst figures or other such graphical forms appear below, although it’s worth confirming this with your supervisor as the formatting can change depending on the school or discipline. The style guide used for writing in your subject area (e.g., Harvard, MLA, APA, OSCOLA) often dictates correct formatting of tables, graphs and figures, so have a look at your style guide for additional support.

Using quotations

If your qualitative data comes from interviews and focus groups, your data will largely consist of quotations from participants. When presenting this data, you should identify and group the most common and interesting responses and then quote two or three relevant examples to illustrate this point. Here’s a brief example from a qualitative study on the habits of online food shoppers:

Regardless of whether or not participants regularly engage in online food shopping, all but two respondents commented, in some form, on the convenience of online food shopping:

"It’s about convenience for me. I’m at work all week and the weekend doesn’t allow much time for food shopping, so knowing it can be ordered and then delivered in 24 hours is great for me” (Participant A).

"It fits around my schedule, which is important for me and my family” (Participant D).

"In the past, I’ve always gone food shopping after work, which has always been a hassle. Online food shopping, however, frees up some of my time” (Participant E).

As shown in this example, each quotation is attributed to a particular participant, although their anonymity is protected. The details used to identify participants can depend on the relevance of certain factors to the research. For instance, age or gender could be included.

Writing the discussion

The discussion chapter is where “you critically examine your own results in the light of the previous state of the subject as outlined in the background, and make judgments as to what has been learnt in your work” (Evans et al., 2014: 12). Whilst the results chapter is strictly factual, reporting on the data on a surface level, the discussion is rooted in analysis and interpretation , allowing you and your reader to delve beneath the surface.

Next, we will review some of the most important factors to consider when writing your discussion chapter.

Like the results, there is no single way to structure your discussion chapter. As always, it depends on the nature of your dissertation and whether you’re dealing with qualitative, quantitative or mixed-methods research. It’s good to be consistent with the results chapter, so you could structure your discussion chapter, where possible, in the same way as your results.

When it comes to structure, it’s particularly important that you guide your reader through the various points, subtopics or themes of your discussion. You should do this by structuring sections of your discussion, which might incorporate three or four paragraphs around the same theme or issue, in a three-part way that mirrors the typical three-part essay structure of introduction, main body and conclusion.

Figure 1: The three-part cycle that embodies a typical essay structure and reflects how you structure themes or subtopics in your discussion.

This is your topic sentence where you clearly state the focus of this paragraph/section. It’s often a fairly short, declarative statement in order to grab the reader’s attention, and it should be clearly related to your research purpose, such as responding to a research question.

This constitutes your analysis where you explore the theme or focus, outlined in the topic sentence, in further detail by interrogating why this particular theme or finding emerged and the significance of this data. This is also where you bring in the relevant secondary literature.

This is the evaluative stage of the cycle where you explicitly return back to the topic sentence and tell the reader what this means in terms of answering the relevant research question and establishing new knowledge. It could be a single sentence, or a short paragraph, and it doesn’t strictly need to appear at the end of every section or theme. Instead, some prefer to bring the main themes together towards the end of the discussion in a single paragraph or two. Either way, it’s imperative that you evaluate the significance of your discussion and tell the reader what this means.

A note on the three-part structure

This is often how you’re taught to construct a paragraph, but the themes and ideas you engage with at dissertation level are going to extend beyond the confines of a short paragraph. Therefore, this is a structure to guide how you write about particular themes or patterns in your discussion. Think of this structure like a cycle that you can engage in its smallest form to shape a paragraph; in a slightly larger form to shape a subsection of a chapter; and in its largest form to shape the entire chapter. You can 'level up' the same basic structure to accommodate a deeper breadth of thinking and critical engagement.

Using secondary literature

Your discussion chapter should return to the relevant literature (previously identified in your literature review ) in order to contextualise and deepen your reader’s understanding of the findings. This might help to strengthen your findings, or you might find contradictory evidence that serves to counter your results. In the case of the latter, it’s important that you consider why this might be and the implications for this. It’s through your incorporation of secondary literature that you can consider the question, ‘What do we now know that we didn’t before?’

Limitations

You may have included a limitations section in your methodology chapter (see our Writing the Methodology guide ), but it’s also common to have one in your discussion chapter. The difference here is that your limitations are directly associated with your results and the capacity to interpret and analyse those results.

Think of it this way: the limitations in your methodology refer to the issues identified before conducting the research, whilst the limitations in your discussion refer to the issues that emerged after conducting the research. For example, you might only be able to identify a limitation about the external validity or generalisability of your research once you have processed and analysed the data. Try not to overstress the limitations of your work – doing so can undermine the work you’ve done – and try to contextualise them, perhaps by relating them to certain limitations of other studies.

Recommendations

It’s often good to follow your limitations with some recommendations for future research. This creates a neat linearity from what didn’t work, or what could be improved, to how other researchers could address these issues in the future. This helps to reposition your limitations in a positive way by offering an action-oriented response. Try to limit the amount of recommendations you discuss – too many can bring the end of your discussion to a rather negative end as you’re ultimately focusing on what should be done, rather than what you have done. You also don’t need to repeat the recommendations in your conclusion if you’ve included them here.

What to avoid

This portion of the guide will cover some common missteps you should try to avoid in writing your results and discussion.

Over-reliance on tables and figures

It’s very common to produce visual representations of data, such as graphs and tables, and to use these representations in your results chapter. However, the use of these figures should not entirely replace your written account of the data. You don’t need to specify every detail in the data set, but you should provide some written account of what the data shows, drawing your reader’s attention to the most important elements of the data. The figures should support your account and help to contextualise your results. Simply stating, ‘look at Table 1’, without any further detail is not sufficient. Writers often try to do this as a way of saving words, but your markers will know!

Ignoring unexpected or contradictory data

Research can be a complex process with ups and downs, surprises and anomalies. Don’t be tempted to ignore any data that doesn’t meet your expectations, or that perhaps you’re struggling to explain. Failing to report on data for these, and other such reasons, is a problem because it undermines your credibility as a researcher, which inevitably undermines your research in the process. You have to do your best to provide some reason to such data. For instance, there might be some methodological reason behind a particular trend in the data.

Including raw data

You don’t need to include any raw data in your results chapter – raw data meaning unprocessed data that hasn’t undergone any calculations or other such refinement. This can overwhelm your reader and obscure the clarity of the research. You can include raw data in an appendix, providing you feel it’s necessary.

Presenting new results in the discussion

You shouldn’t be stating original findings for the first time in the discussion chapter. The findings of your study should first appear in your results before elaborating on them in the discussion.

Overstressing the significance of your research

It’s important that you clarify what your research demonstrates so you can highlight your own contribution to the research field. However, don’t overstress or inflate the significance of your results. It’s always difficult to provide definitive answers in academic research, especially with qualitative data. You should be confident and authoritative where possible, but don’t claim to reach the absolute truth when perhaps other conclusions could be reached. Where necessary, you should use hedging (see definition) to slightly soften the tone and register of your language.

Definition: Hedging refers to 'the act of expressing your attitude or ideas in tentative or cautious ways' (Singh and Lukkarila, 2017: 101). It’s mostly achieved through a number of verbs or adverbs, such as ‘suggest’ or ‘seemingly.’

Q: What’s the difference between the results and discussion?

A: The results chapter is a factual account of the data collected, whilst the discussion considers the implications of these findings by relating them to relevant literature and answering your research question(s). See the tab 'The Differences' in this guide for more detail.

Q: Should the discussion include recommendations for future research?

A: Your dissertation should include some recommendations for future research, but it can vary where it appears. Recommendations are often featured towards the end of the discussion chapter, but they also regularly appear in the conclusion chapter (see our Writing the Conclusion guide for more). It simply depends on your dissertation and the conventions of your school or department. It’s worth consulting any specific guidance that you’ve been given, or asking your supervisor directly.

Q: Should the discussion include the limitations of the study?

A: Like the answer above, you should engage with the limitations of your study, but it might appear in the discussion of some dissertations, or the conclusion of others. Consider the narrative flow and whether it makes sense to include the limitations in your discussion chapter, or your conclusion. You should also consult any discipline-specific guidance you’ve been given, or ask your supervisor for more. Be mindful that this is slightly different to the limitations outlined in the methodology or methods chapter (see our Writing the Methodology guide vs. the 'Discussion' tab of this guide).

Q: Should the results and discussion be in the first-person or third?

A: It’s important to be consistent , so you should use whatever you’ve been using throughout your dissertation. Third-person is more commonly accepted, but certain disciplines are happy with the use of first-person. Just remember that the first-person pronoun can be a distracting, but powerful device, so use it sparingly. Consult your lecturer for discipline-specific guidance.

Q: Is there a difference between the discussion and the conclusion of a dissertation?

A: Yes, there is a difference. The discussion chapter is a detailed consideration of how your findings answer your research questions. This includes the use of secondary literature to help contextualise your discussion. Rather than considering the findings in detail, the conclusion briefly summarises and synthesises the main findings of your study before bringing the dissertation to a close. Both are similar, particularly in the way they ‘broaden out’ to consider the wider implications of the research. They are, however, their own distinct chapters, unless otherwise stated by your supervisor.