Harvard Dataverse

Harvard Dataverse is an online data repository where you can share, preserve, cite, explore, and analyze research data. It is open to all researchers, both inside and out of the Harvard community.

Harvard Dataverse provides access to a rich array of datasets to support your research. It offers advanced searching and text mining in over 2,000 dataverses, 75,000 datasets, and 350,000+ files, representing institutions, groups, and individuals at Harvard and beyond.


The Harvard Dataverse repository runs on the open-source web application Dataverse, developed at the Institute for Quantitative Social Science. Dataverse helps make your data available to others, and allows you to replicate others' work more easily. Researchers, journals, data authors, publishers, data distributors, and affiliated institutions all receive academic credit and web visibility.

Why Create a Personal Dataverse?

- Easy setup

- Display your data on your personal website

- Brand it uniquely as your research program

- Make your data more discoverable to the research community

- Satisfy data management plans

Terms to know

- A Dataverse repository is the software installation, which then hosts multiple virtual archives called dataverses.

- Each dataverse contains datasets, and each dataset contains descriptive metadata and data files (including documentation and code that accompany the data).

- As an organizing method, dataverses may also contain other dataverses.
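The containment hierarchy described in the terms above can be sketched as a small data structure. This is only an illustration: the class names, field names, and example titles are assumptions made for the sketch, not the Dataverse software's actual schema.

```python
from dataclasses import dataclass, field


@dataclass
class Dataset:
    """A dataset: descriptive metadata plus data files (hypothetical model)."""
    title: str
    metadata: dict = field(default_factory=dict)
    files: list = field(default_factory=list)


@dataclass
class Dataverse:
    """A virtual archive that holds datasets and, optionally, other dataverses."""
    name: str
    datasets: list = field(default_factory=list)
    children: list = field(default_factory=list)

    def count_datasets(self) -> int:
        # Count datasets here and in every nested dataverse.
        return len(self.datasets) + sum(c.count_datasets() for c in self.children)


# Hypothetical example: a lab dataverse nested inside a top-level dataverse.
lab = Dataverse(
    "Example Lab",
    datasets=[Dataset("Survey 2020", {"author": "A. Researcher"},
                      ["data.csv", "codebook.pdf", "analysis.R"])],
)
root = Dataverse("Example Root", datasets=[Dataset("Root-level dataset")],
                 children=[lab])
total = root.count_datasets()
```

Here `total` covers both the dataset held directly by the top-level dataverse and the one inside the nested lab dataverse.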


Share your research data

Mendeley Data is a free and secure cloud-based communal repository where you can store your data, ensuring it is easy to share, access and cite, wherever you are.


The Generalist Repository Ecosystem Initiative

Elsevier's Mendeley Data repository is a participating member of the National Institutes of Health (NIH) Office of Data Science Strategy (ODSS) GREI project. The GREI includes seven established generalist repositories funded by the NIH to work together to establish consistent metadata, develop use cases for data sharing, train and educate researchers on FAIR data and the importance of data sharing, and more.

Why use Mendeley Data?

The Mendeley Data communal data repository is powered by Digital Commons Data.

Digital Commons Data provides everything that your institution will need to launch and maintain a successful Research Data Management program at scale.

Data Monitor provides visibility on an institution's entire research data output by harvesting research data from 2000+ generalist and domain-specific repositories, including everything in Mendeley Data.

New re3data.org Editorial Board Members

The re3data Editorial Board is pleased to welcome seven new members: Dalal Hakim Rahme, Coordinator of Content Curation, United Nations; Rene Faustino Gabriel Junior, Professor, Universidade Federal do Rio Grande do Sul; Sandra Gisela Martín, Library...

re3data Call for Editorial Board

The re3data.org registry has been in operation for over 10 years and provides a curated index of over 3,000 research data repositories around the world from all disciplines. New repositories are identified and reviewed by an...

Releasing version 4.0 of the re3data Metadata Schema

re3data caters to a range of needs for diverse stakeholders (Vierkant et al., 2021). Detailed and precise repository descriptions are at the heart of the majority of these use cases, and repository metadata are given special attention at the re3data ...

Helmholtz Open Science Office

Humboldt-Universität zu Berlin

Karlsruher Institut für Technologie

Purdue University Libraries

Deutsche Forschungsgemeinschaft

Deutsche Initiative für Netzwerkinformation

FAIRsharing

CoreTrustSeal


- Cite this service: re3data.org - Registry of Research Data Repositories. https://doi.org/10.17616/R3D last accessed: 2024-06-20

Data Repositories

A key aspect of data sharing involves not only posting or publishing research articles on preprint servers or in scientific journals, but also making public the data, code, and materials that support the research. Data repositories are a centralized place to hold data, share data publicly, and organize data in a logical manner.

Benefits of Data Repositories

- manage your data

- organize and deposit your data

- cite your data by supplying a persistent identifier

- facilitate discovery of your data

- make your data more valuable for current and future research

- preserve your data for the long term

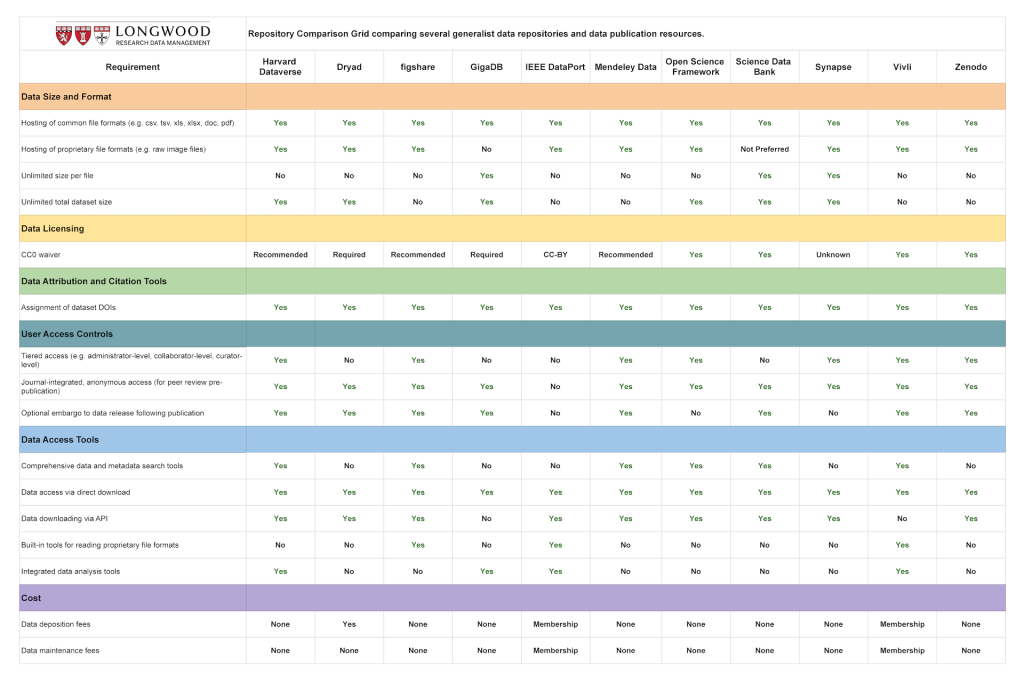

Repository Comparison Grid

The number of available resources for data sharing and data publication has increased substantially in recent years. You can search the re3data.org global registry of research data repositories to find appropriate academic discipline repositories.

We have also created a resource to compare and contrast several of the general data repositories currently available for NIH and biomedical science researchers. Detailed feature descriptions of each platform are available on the subpages of this page.

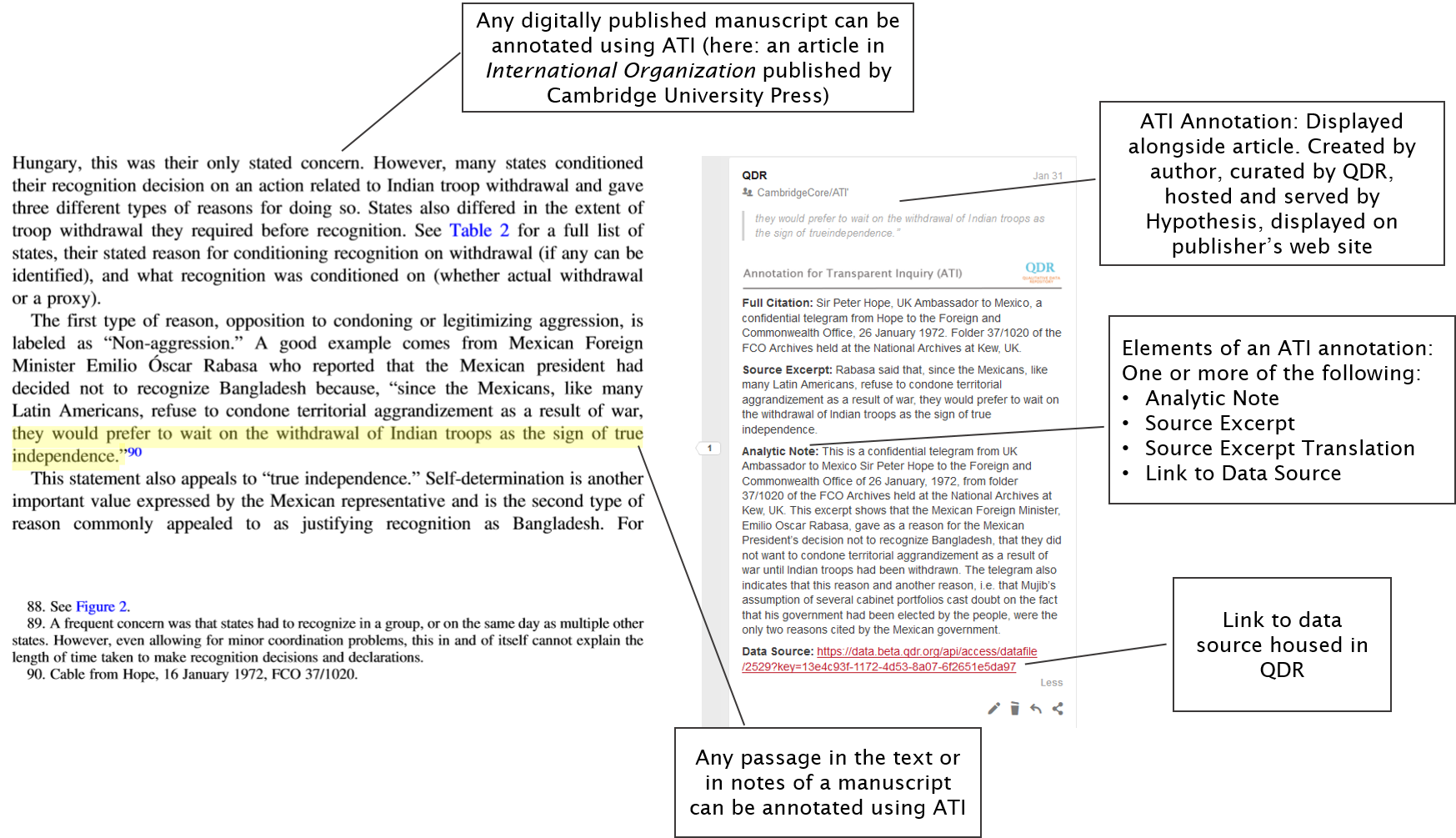

Click on the Harvard Biomedical Repository Matrix below to view an enlarged version of the table on Zenodo.

Accessible Repository Comparison

Repository Comparison List

A PDF version of this information is also available on Zenodo.

Harvard Dataverse Features & Specifications

Data Size and Format

Hosting of common file formats (e.g. csv, tsv, xls, xlsx, doc, pdf)

- All file formats accepted (tabular, non-tabular, and compressed as a zip file bundle with file hierarchy feature to preserve directory structure).

Hosting of proprietary file formats (e.g. raw image files)

Unlimited size per file.

- To use the browser-based upload function, a file cannot exceed 2.5 GB. However, Harvard Dataverse is willing to work with Harvard researchers who have larger files.

Unlimited total dataset size

- 1TB per researcher. Harvard Dataverse will work with Harvard researchers who have larger datasets (>1 TB).
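As a rough illustration of the two limits above, a depositor could check a set of files before uploading. This is a sketch only: the function name and return shape are hypothetical, and it assumes decimal units (1 GB = 10^9 bytes), which the page does not specify.

```python
GB = 10**9   # assumption: decimal gigabytes
TB = 10**12  # assumption: decimal terabytes

BROWSER_UPLOAD_LIMIT = int(2.5 * GB)  # per-file limit for browser uploads
PER_RESEARCHER_QUOTA = 1 * TB         # total storage per researcher


def upload_plan(file_sizes, already_used=0):
    """Summarize whether files fit the browser limit and the 1 TB quota.

    file_sizes: iterable of file sizes in bytes.
    already_used: bytes the researcher has already stored (hypothetical input).
    """
    sizes = list(file_sizes)
    oversized = [s for s in sizes if s > BROWSER_UPLOAD_LIMIT]
    total = already_used + sum(sizes)
    return {
        "browser_ok": not oversized,         # every file fits the browser upload
        "oversized_files": len(oversized),   # files that need another route
        "within_quota": total <= PER_RESEARCHER_QUOTA,
    }


plan = upload_plan([1 * GB, 3 * GB])
```

In this example the 3 GB file exceeds the browser limit, so `plan["browser_ok"]` is false while the total remains well within the 1 TB allowance. Files or datasets beyond these limits are the cases where the page suggests contacting Harvard Dataverse.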

Data Licensing

- Recommended

- Harvard Dataverse strongly encourages use of a Creative Commons Zero (CC0) waiver for all public datasets, but dataset owners can specify other terms of use and restrict access to data.

Data Attribution and Citation Tools

Assignment of dataset DOIs

- Harvard Dataverse assigns a DOI to each dataset and datafile within a dataverse.

- Dataset authors can identify themselves and other types of data contributors using the following types of unique IDs: ORCID, ISNI, LCNA, VIAF, GND, DAI, ResearcherID, Scopus ID.

User Access Controls

Tiered access (e.g. administrator-level, collaborator-level, curator-level).

- Harvard Dataverse allows draft, unpublished, and published (public) datasets. For draft and unpublished datasets, a variety of tiers of access can be assigned to different registered users.

Journal-integrated, anonymous access (for peer review pre-publication)

- The Harvard Dataverse Repository offers open access, restricted, and embargo options for all files, along with the ability to apply standard licenses and add custom terms of data access.

Optional embargo to data release following publication

Data Access Tools

Comprehensive data and metadata search tools

- Without logging in, users can browse a Dataverse installation and search for Dataverse collections, datasets, and files, view dataset descriptions and files for published datasets, and subset, analyze, and visualize data for published (restricted & not restricted) data files.

Data access via direct download

Data downloading via API

- In addition to individual file downloading, Harvard Dataverse has multiple APIs for programmatic data and metadata access, as described in the Dataverse API Guide.
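A minimal sketch of programmatic access might look like the following. It assumes the Search API path `/api/search` and the native API path `/api/datasets/:persistentId/` as documented in the Dataverse API Guide; the helper names and the example DOI suffix are hypothetical, and the current guide should be checked before relying on any parameter.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

BASE_URL = "https://dataverse.harvard.edu"  # Harvard Dataverse installation


def build_search_url(query, item_type="dataset", per_page=10, base=BASE_URL):
    """Build a Search API URL (parameters assumed from the API Guide)."""
    params = {"q": query, "type": item_type, "per_page": per_page}
    return f"{base}/api/search?{urlencode(params)}"


def build_dataset_url(doi, base=BASE_URL):
    """Build a URL that looks up a dataset's metadata by its DOI."""
    params = {"persistentId": f"doi:{doi}"}
    # Keep ':' and '/' readable in the query string.
    return f"{base}/api/datasets/:persistentId/?{urlencode(params, safe=':/')}"


def fetch_json(url, timeout=30):
    """Fetch a URL and decode the JSON body (performs a network request)."""
    with urlopen(url, timeout=timeout) as response:
        return json.load(response)


search_url = build_search_url("climate", per_page=5)
dataset_url = build_dataset_url("10.7910/DVN/EXAMPLE")  # placeholder DOI
```

Calling `fetch_json(search_url)` would send the request. Published datasets can generally be read without an API token, while restricted or draft content requires one.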

Built-in tools for reading proprietary file formats

Integrated data analysis tools.

- Harvard Dataverse includes external tools that provide additional features that are not part of the Dataverse Software itself, such as data file previews, visualization, and curation.

Data deposition fees

- Harvard Dataverse Repository is free for all researchers worldwide (up to 1 TB).

Data maintenance fees

Dryad Features & Specifications

- Dryad prefers that all data be submitted in non-proprietary, openly documented, preservation-friendly formats. It will accept other file types if they are in a "community-accepted" format. It does not accept submissions containing personally identifiable information.

- No specified amount; no limit on storage space per researcher.

- 300GB/dataset

- Creative Commons Zero (CC0) Any data submitted will be published under the CC0 license. Does not currently support any other license types, nor allow for restrictions on data access or use.

- Each dataset published receives a DOI for the data submission as a whole. A suffix is added to the DOI when a data file or dataset is revised to enable version control.

- Can be shared anonymously and securely with editors and reviewers at the subset of journals that have integrated repository into their editorial workflow.

- Machine-readable metadata primarily consist of keywords and information about the associated publication. Detailed, file-associated metadata are not recorded and thus are not searchable. Dryad uses the DataCite metadata schema.

- All datasets are free to download once published. Dryad supports individual file downloading and offers multiple APIs for programmatic data and metadata access.

- $120 data publishing charge unless funded by the institution, publishers or funders. Users can download datasets free of charge once they are published.

figshare Features & Specifications

- All file types (both compressed and non-compressed formats) are accepted. Some file types are rendered in the browser page. Files are tagged as follows: figures, datasets, media, code, paper, thesis, poster, presentation, and fileset.

- System-wide limit of 5TB per file.

- 20GB of private data files. Figshare+ (designed for larger datasets) offers storage in stages beginning with 100GB up to over 10TB per dataset. No limit on storage space per researcher.

- Code and software licenses include MIT, GPL-3.0, or Apache-2.0.

- All other files: CC-BY. These licensing rules apply to all individuals uploading files directly to figshare. Institutions and publishers are allowed to mandate alternative licenses.

- figshare assigns a DOI to each individual file at the point of publication. Authors can also add an ORCID ID, so all items are pushed to ORCID. Related files can be aggregated under a master DOI encompassing a Collection. A suffix is added to a file-level DOI when a file is revised or replaced to enable version control.

- Before the dataset is released publicly, a user can share it with a private sharing link or through a project/collaboration group.

- Publishers who have integrated their editorial workflow with figshare may have additional user access controls enabled to allow the journal's editors and reviewers to have anonymous and secure access to files before they are made public.

- Free-text search functionality is provided. Limited metadata, which consist of keywords and information about any associated publication, are recorded.

- Detailed, file-associated metadata are not recorded in the general-use figshare repository and thus are not searchable, but institutional figshare instances are allowed to develop custom metadata standards.

- In addition to individual file downloading, figshare has an API for programmatic data and metadata access.

- Figshare+ has a one-time fee based on the size of the dataset requested.

GigaDB Features & Specifications

- Only non-proprietary file types are accepted.

- An appropriate Open-Source Initiative (OSI) or other open-source license can be applied to software files, workflows, and virtual machines.

- Each dataset will be assigned a DOI that can be used as a citation in future articles and publications. No files present at the time of publication can be removed, but a versioning system allows authors to add new files after publication if needed. Detailed information about the data should be submitted by the authors in ISA-Tab.

- Through the GigaDB staging server, the journal's editors and reviewers have anonymous and secure access to files before they are made public, and authors can submit revised files during the peer-review process.

- Free-text search functionality is provided. Detailed, file-associated metadata are not recorded and thus are not searchable.

- Datasets may be downloaded via FTP or via a browser using GigaDB's Aspera server software. For larger datasets, GigaScience will copy data to a hard drive and ship it to a user (at the user's expense).

- Author-provided tools are hosted in GigaDB or on the GigaGalaxy server and linked to from the associated paper's GigaScience landing page.

- Data deposition costs for up to 1 TB of data are included in the standard article publication charge. All data provided by GigaDB is free to download and use.

IEEE DataPort Features & Specifications

- The following formats are currently supported: ZIP, GZ, gzip, CSV, JSON, TXT, SQL, XML, TSV, EBS, Avro, ORC, Parquet, HDF5, 7z, TBZ2, ISO, tar, BZ2, Z, XLS, XLSX, graph, properties, offsets, FLAC, OGG, WAV, AAC, MP3, GIF, JPG, JPEG, PNG, AVI, MOV, MP4, MPG, M4V, YAML, DAT, MAT, fig.

- No individual file size limit. For large datasets, upload a series of files that are 100 GB or less. For datasets with a large number of files (>100), compress your upload(s) using the ZIP and/or GZIP format.

- Up to 2TB of storage or 10 TB for Institutional Subscribers for each dataset.

- Datasets on IEEE DataPort are made available under Creative Commons Attribution (CC-BY) licenses which require attribution.

- Any use of a dataset requires citation. IEEE DataPort includes a Cite button on each dataset page so a user can easily obtain the proper citation for the dataset. The citation is provided in multiple formats to facilitate easy attribution.

- IEEE DataPort is a self-monitoring system with feedback mechanisms so users can provide comments on datasets.

- To search, access, and analyze datasets on IEEE DataPort, you first need to create a free IEEE account or login with your existing account. After logging in you can search datasets by entering keywords in the search bar or by browsing the dataset categories. Once a desired dataset is located you can access it by simply clicking on the dataset.

- Users need to subscribe to access and/or download Standard datasets on IEEE DataPort. Open Access datasets are available to all registered users of IEEE DataPort.

- IEEE DataPort is data agnostic and will allow any format of dataset to be submitted for storage on IEEE DataPort. The provision of metadata, tools and/or supporting documentation allow the user to understand how each specific dataset can be analyzed.

- You can then view and analyze the dataset. If you do not have sufficient storage and computing capacity on your local system to perform the analysis, IEEE currently provides access to the dataset in the Cloud to facilitate the analysis. Once your analysis is complete, you can upload the analysis by clicking the “submit an analysis” button directly below the dataset image.

- An individual subscription allows you to view, download and/or access in the cloud all datasets, store your own research data at no cost, and access data management features. Individual subscriptions are free for all IEEE Society Members or $40/month.

Mendeley Data Features & Specifications

- 10GB per dataset

- Mendeley Data datasets for personal accounts have a maximum limit of 10 GB per dataset. However, if your Institution subscribes to Mendeley Data you will have the ability to create datasets up to a maximum size of 100GB. The maximum size will depend on the storage agreement that your institution has.

- Users can choose from a range of 16 licenses that can be applied to data, with the default being Creative Commons Zero (CC0).

- Mendeley Data reserves a DOI when the dataset is created and mints it when the dataset is published.

- Mendeley Data provides PIDs for individual files and folders within a dataset.

- Each draft dataset has a share link which you can copy to send to collaborators; they’ll be able to access the dataset metadata and files prior to publishing.

- When publishing a dataset, a user may choose to defer the date at which the data becomes available (for example, so that it is available at the same time as an associated article).

- You can search for datasets by keyword, within dataset metadata and data files. You can perform an advanced search using field codes to target one or more specific fields and / or boolean operators.

- If the dataset owner has decided to allow the download of datasets, this can be done freely and only the download count is tracked.

- You can download all the files within the dataset using the “Download All” button in a Published dataset, which will give you the file size before you start the download and create a zip file with the Dataset name and version. You can see the same structure of the dataset including all the subfolders, within the zip file.

- The communal repository is available free of charge for individual researchers who want to publish relatively small datasets (up to 10GB each).

Open Science Framework (OSF) Features & Specifications

- Any type of file can be uploaded. Most files will render directly in the File Viewer. For example, if the file is an image, you can zoom in and out on details.

- 5GB/file upload limit for native OSF Storage. There is no limit imposed by OSF for the amount of storage used across add-ons connected to a given project.

- Users can choose from a long list of licenses. Users can also define a license in a .txt file and upload it to the project.

- OSF uses Globally Unique Identifiers (GUIDs) on all objects (users, files, projects, components, registrations, and preprints) across the platform, which are citable in scholarly communication. OSF also supports registration of DOIs for projects, components, and research registrations with DataCite, and for preprints with Crossref. OSF collects ORCID iDs for users and contributors, and provides those with metadata sent for DOI minting, as well as ROR identifiers when contributor affiliations are known.

- OSF does not currently support DOI versioning.

- OSF supports request access and private sharing settings, as well as view only link with ability to anonymize contributor list.

- Contributors are a group of collaborators within a project, component, registration, or preprint. Projects and components have individual contributor lists and permissions levels, so you can control who can access and modify your work.

- You can create a view-only link to share projects so those who have the link can view, but not edit, the project. You can also anonymize the contributor list (e.g., for peer review).

- Free Text Search functionality provided. The OSF Search interface offers a few options for filtering search results. Tags are automatically indexed by search for public content.

- Access to view and download public content on OSF is free and does not require an account. You can download individual or multiple Quick Files to save and view them on your computer.

- Files stored on OSF can also be downloaded locally through the API.

- OSF is free to use by research producers and consumers. Signing up for an account on OSF is quick and easy, by providing a name, email, and password, or by using ORCID or institutional credentials (including Harvard Key).

Science Data Bank (ScienceDB) Features & Specifications

- ScienceDB prefers non-proprietary file formats, which are listed in the Science Data Bank Preferred File Format table.

- Not preferred

- CC0 is the default license assigned to datasets.

- ScienceDB provides the following licensing options: CC0, CC-BY 4.0, CC BY-SA 4.0, CC BY-NC 4.0, CC BY-NC-SA 4.0, CC BY-ND 4.0, CC BY-NC-ND 4.0, and 3 licenses for database: PDDL, ODC-By, ODbL, as well as 12 software license agreements: MIT, Apache-2.0, AGPL-3.0, LGPL-2.1, GPL-2.0, GPL-3.0, BSD-2-Clause, BSD-3-Clause, MPL-2.0, BSL-1.0, EPL-2.0 and The Unlicense. For more information, see the Science Data Bank FAQ webpage.

- Once a submission is published, ScienceDB assigns a DOI to each dataset. A Commons Science and Technology Resource (CSTR) is also assigned to accepted datasets. Data depositors can select their preferred data citation format.

- At the time of data submission, users may select an open access or embargo option. Files can be restricted to require users to request access through a Data Access Application.

- OPEN-API allows users to access datasets programmatically. All metadata is harvested via OAI-PMH.

- Currently, submitting data to ScienceDB is free. However, the Science Data Bank FAQ webpage states that they reserve the right to charge for submission, review, or storage in the future.

Synapse Features & Specifications

- Conditions for use are put in place to define/restrict how users who have permission to download data may use it.

- Conditions for use may include IRB approval or other restrictions that you define as the data contributor.

- You are responsible for determining if the data you would like to contribute is controlled data and therefore requires conditions for use.

- Conditions for use can be set at the project, folder, file and table level.

- Synapse items receive a unique identifier (SynID). Items that receive a SynID are files, folders, projects, tables, views, wikis, links, and Docker repositories. Each file also receives an MD5 checksum. DOIs are available in Synapse for projects, files, folders, tables, and views.

- There are a number of Synapse user access controls for public and private projects, and sharing settings can be managed at the project, folder, file, and table level. Data containing sensitive information (i.e., data with a de-identification risk) can be restricted to specific users; users wanting access to controlled data can request individual access. Project administrators can add and manage permissions for individual team members on private projects. Public projects can either be fully public or restricted to registered Synapse Users.

- Users are required to have a Synapse account and become a certified user in order to upload data. Data can be uploaded and downloaded via a web-based user interface or programmatically using Python, R, or the command line.

- Intended for individual researchers wanting to share small datasets (<100GB) for publication (including creating DOIs) and scientific, educational, and research collaborations.

Vivli Features & Specifications

- Data sets are not required to be standardized; however, we highly recommend that data sets be made available in CDISC SDTM (Study Data Tabulation Model) format to support the most efficient data aggregation, re-use, and sharing. In the future, Vivli will explore the use of Common Data Elements within specific clinical domains.

- Vivli data contributors can share files up to 500 MB. Larger sizes of up to 100 TB can be accommodated. If your file is larger than 1 TB, please email Vivli Support.

- There is no limit per researcher, and each data contribution is reviewed by Vivli.

- All data contributors must sign and conform to the Data Contributor Agreement (available upon request), which includes language about intellectual property.

- All clinical research that is available for search and request on the Vivli platform is assigned a DataCite Digital Object Identifier (DOI) at the time the metadata for the clinical research data appears in the Vivli search and is available for request.

- The clinical research dataset is assigned a main DOI with a parent-child data object reference for all data and documents associated with a study’s data package to support data discovery.

- Datasets contributed to Vivli may be made available via various levels of access through download after signing of a Data Use Agreement.

- The Vivli platform allows users to search through listed studies using three search methods, including a Keyword Search, PICO Search, and Quick Study Look-up.

- To request data, a researcher or team must first create an account on Vivli and submit a research proposal.

- Yes, through Vivli’s secure research environment.

- Use robust analytical tools to combine and analyze multiple datasets. Vivli’s secure research environment is a virtual workspace within the Vivli platform where researchers who have been provided access to data will have remote desktop access to conduct their analysis. Researchers will have access to SAS, STATA, R, Python, Jupyter, and the Microsoft Office suite to enable analysis of shared data sets. If desired, additional analytical tools, data, and scripts may be included in a research team’s secure research environment.

- Access to metadata and data hosted by Vivli is free and accessible to all, subject to meeting a data contributor’s data sharing policies.

- If your academic institution is a member of Vivli there is no cost to deposit data in Vivli’s platform starting in 2023.

- Service fee for clinical trial datasets (<500GB): $4,000

- Larger clinical trial datasets (>500GB): $10,000

- Optional anonymization services provided by Privacy Analytics: $10,000 per dataset

Zenodo Features & Specifications

- All file types are accepted. Files are categorized as: publications, posters, presentations, datasets, images, software, videos/audio, and interactive materials. Zenodo also integrates with GitHub to deposit GitHub repositories for sharing and long-term preservation.

- Total file size limit per record is 50GB. Higher quotas can be requested and are granted on a case-by-case basis.

- Zenodo currently accepts up to 50GB per dataset (you can have multiple datasets). There is no size limit on communities. Zenodo also encourages researchers to reach out to discuss use cases with larger dataset sizes.

- Users can choose from a long list of licenses, with the default being Creative Commons Zero (CC0). Users must specify a license for all publicly available files.

- Zenodo assigns all publicly available uploads a Digital Object Identifier (DOI) to make the upload easily and uniquely citable. Zenodo further supports harvesting of all content via the OAI-PMH protocol. Also allows the user to enter a previously assigned DOI.

- Users can choose to deposit files under open, embargoed, restricted, or closed access. For embargoed files, the user can choose the length of the embargo period, and the content will become publicly available automatically at the end of the embargo period. Users may also deposit restricted files and grant access to specific individuals.

- Free-text search functionality is provided. Machine-readable metadata are also recorded, according to the Invenio Digital Library Framework. Zenodo communicates with existing services, such as Mendeley, ORCID, Crossref, and OpenAIRE for pre-filling metadata.

- In addition to individual file downloading, Zenodo has an OAI-PMH API for programmatic data and metadata access, as described in the Zenodo API documentation.
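Metadata harvesting over OAI-PMH, mentioned above for Zenodo and ScienceDB, comes down to an HTTP request with a `verb` parameter and an XML response. The sketch below builds a `ListRecords` request and extracts identifiers and titles from a response. The endpoint `https://zenodo.org/oai2d` is an assumption to verify against the Zenodo documentation, and the sample XML is a made-up record used only to exercise the parser.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlencode

OAI_ENDPOINT = "https://zenodo.org/oai2d"  # assumption: check the Zenodo docs

# XML namespaces defined by OAI-PMH 2.0 and Dublin Core.
NS = {
    "oai": "http://www.openarchives.org/OAI/2.0/",
    "dc": "http://purl.org/dc/elements/1.1/",
}


def list_records_url(metadata_prefix="oai_dc", set_spec=None, base=OAI_ENDPOINT):
    """Build an OAI-PMH ListRecords request URL."""
    params = {"verb": "ListRecords", "metadataPrefix": metadata_prefix}
    if set_spec:
        params["set"] = set_spec
    return f"{base}?{urlencode(params)}"


def parse_titles(xml_text):
    """Return (identifier, title) pairs from an oai_dc ListRecords response."""
    root = ET.fromstring(xml_text)
    pairs = []
    for record in root.iterfind(".//oai:record", NS):
        ident = record.findtext("oai:header/oai:identifier", default="", namespaces=NS)
        title = record.findtext(".//dc:title", default="", namespaces=NS)
        pairs.append((ident, title))
    return pairs


# Made-up response fragment, for illustration only.
SAMPLE = """<OAI-PMH xmlns="http://www.openarchives.org/OAI/2.0/">
  <ListRecords>
    <record>
      <header><identifier>oai:example.org:1</identifier></header>
      <metadata>
        <oai_dc:dc xmlns:oai_dc="http://www.openarchives.org/OAI/2.0/oai_dc/"
                   xmlns:dc="http://purl.org/dc/elements/1.1/">
          <dc:title>Example dataset</dc:title>
        </oai_dc:dc>
      </metadata>
    </record>
  </ListRecords>
</OAI-PMH>"""

url = list_records_url()
records = parse_titles(SAMPLE)
```

A real harvester would also follow the `resumptionToken` element that OAI-PMH uses to page through large result sets; that step is omitted here for brevity.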

© 2024 by the President and Fellows of Harvard College


Recommended Repositories

All data, software and code underlying reported findings should be deposited in appropriate public repositories, unless already provided as part of the article. Repositories may be either subject-specific repositories that accept specific types of structured data and/or software, or cross-disciplinary generalist repositories that accept multiple data and/or software types.

If field-specific standards for data or software deposition exist, PLOS requires authors to comply with these standards. Authors should select repositories appropriate to their field of study (for example, ArrayExpress or GEO for microarray data; GenBank, EMBL, or DDBJ for gene sequences). PLOS has identified a set of established repositories, listed below, that are recognized and trusted within their respective communities. PLOS does not dictate repository selection for the data availability policy.

For further information on environmental and biomedical science repositories and field standards, we suggest utilizing FAIRsharing. Additionally, the Registry of Research Data Repositories (Re3Data) is a full-scale resource of registered data repositories across subject areas. Both FAIRsharing and Re3Data provide information on an array of criteria to help researchers identify the repositories most suitable for their needs (e.g., licensing, certificates and standards, policy, etc.).

If no specialized community-endorsed public repository exists, institutional repositories that use open licenses permitting free and unrestricted use or public domain, and that adhere to best practices pertaining to responsible sharing, sustainable digital preservation, proper citation, and openness are also suitable for deposition.

If authors use repositories with stated licensing policies, the policies should not be more restrictive than the Creative Commons Attribution (CC BY) license.

Cross-disciplinary repositories

- Dryad Digital Repository

- Harvard Dataverse Network

- Network Data Exchange (NDEx)

- Open Science Framework

- Swedish National Data Service

Repositories by type

Biochemistry


*Data entered in the STRENDA DB submission form are automatically checked for compliance and receive a fact sheet PDF with warnings for any missing information.

Biomedical Sciences

Marine Sciences

- SEA scieNtific Open data Edition (SEANOE)

Model Organisms

Neuroscience

- Functional Connectomes Project International Neuroimaging Data-Sharing Initiative (FCP/INDI)

- German Neuroinformatics Node/G-Node (GIN)

- NeuroMorpho.org

Physical Sciences

Social Sciences

- Inter-university Consortium for Political and Social Research (ICPSR)

- Qualitative Data Repository

- UK Data Service

Structural Databases

Taxonomic & Species Diversity

Unstructured and/or Large Data

PLOS would like to thank the Open Access Nature Publishing Group journal, Scientific Data, for their own list of recommended repositories.

Repository Criteria

The list of repositories above is not exhaustive, and PLOS encourages the use of any repository that meets the following criteria:

Dataset submissions should be open to all researchers whose research fits the scientific scope of the repository. PLOS’ list does not include repositories that place geographical or affiliation restrictions on submission of datasets.

Repositories must assign a stable persistent identifier (PID) for each dataset at publication, such as a digital object identifier (DOI) or an accession number.

- Repositories must provide the option for data to be available under CC0 or CC BY licenses (or equivalents that are no less restrictive). Specifically, there must be no restrictions on derivative works or commercial use.

- Repositories should make datasets available to any interested readers at no cost, and with no registration requirements that unnecessarily restrict access to data. PLOS will not recommend repositories that charge readers access fees or subscription fees.

- Repositories must have a long-term data management plan (including funding) to ensure that datasets are maintained for the foreseeable future.

- Repositories should demonstrate acceptance and usage within the relevant research community, for example, via use of the repository for data deposition for multiple published articles.

- Repositories should have an entry in FAIRsharing.org so that the repository can be linked to the PLOS entry.

Please note, the list of recommended repositories is not actively maintained. Please use the resources at the top of the page and the criteria above to help select an appropriate repository.


Sharing research data

As a researcher, you are increasingly encouraged, or even mandated, to make your research data available, accessible, discoverable and usable.

Sharing research data is something we are passionate about too, so we’ve created this short video and written guide to help you get started.

Research Data

What is research data?

While the definition often differs per field, generally, research data refers to the results of observations or experiments that validate your research findings. These span a range of useful materials associated with your research project, including:

Raw or processed data files

Research data does not include text in manuscript or final published article form, or data or other materials submitted and published as part of a journal article.

Why should I share my research data?

There are so many good reasons. We’ve listed just a few:

How you benefit

You get credit for the work you've done

Leads to more citations! 1

Can boost your number of publications

Increases your exposure and may lead to new collaborations

What it means for the research community

It's easy to reuse and reinterpret your data

Duplication of experiments can be avoided

New insights can be gained, sparking new lines of inquiry

Empowers replication

And society at large…

Greater transparency boosts public faith in research

Can play a role in guiding government policy

Improves access to research for those outside health and academia

Benefits the public purse as funding of repeat work is reduced

How do I share my research data?

The good news is it’s easy.

Yet to submit your research article? There are a number of options available. These may vary depending on the journal you have chosen, so be sure to read the Research Data section in its Guide for Authors before you begin.

Already published your research article? No problem – it’s never too late to share the research data associated with it.

Two of the most popular data sharing routes are:

Publishing a research elements article

These brief, peer-reviewed articles complement full research papers and are an easy way to receive proper credit and recognition for the work you have done. Research elements are research outputs that have come about as a result of following the research cycle – this includes things like data, methods and protocols, software, hardware and more.

You can publish research elements articles in several different Elsevier journals, including our suite of dedicated Research Elements journals. They are easy to submit, are subject to a peer review process, receive a DOI and are fully citable. They also make your work more sharable, discoverable, comprehensible, reusable and reproducible.

The accompanying raw data can still be placed in a repository of your choice (see below).

Uploading your data to a repository like Mendeley Data

Mendeley Data is a certified, free-to-use repository that hosts open data from all disciplines, whatever its format (e.g. raw and processed data, tables, codes and software). With many Elsevier journals, it’s possible to upload and store your data to Mendeley Data during the manuscript submission process. You can also upload your data directly to the repository. In each case, your data will receive a DOI, making it independently citable and it can be linked to any associated article on ScienceDirect, making it easy for readers to find and reuse.

View an article featuring Mendeley Data (just select the Research Data link in the left-hand bar or scroll down the page).

What if I can’t submit my research data?

Data statements offer transparency.

We understand that there are times when the data is simply not available to post or there are good reasons why it shouldn’t be shared. A number of Elsevier journals encourage authors to submit a data statement alongside their manuscript. This statement allows you to clearly explain the data you’ve used in the article and the reasons why it might not be available. The statement will appear with the article on ScienceDirect.

View a sample data statement (just select the Research Data link in the left-hand bar or scroll down the page).

Showcasing your research data on ScienceDirect

We have 3 top tips to help you maximize the impact of your data in your article on ScienceDirect.

Link with data repositories

You can create bidirectional links between any data repositories you’ve used to store your data and your online article. If you’ve published a data article, you can link to that too.

Enrich with interactive data visualizations

The days of being confined to static visuals are over. Our in-article interactive viewers let readers delve into the data with helpful functions such as zoom, configurable display options and full screen mode.

Cite your research data

Get credit for your work by citing your research data in your article and adding a data reference to the reference list. This ensures you are recognized for the data you shared and/or used in your research. Read the References section in your chosen journal’s Guide for Authors for more information.

Ready to get started?

If you have yet to publish your research paper, the first step is to find the right journal for your submission and read the Guide for Authors.

Find a journal by matching the title and abstract of your manuscript in Elsevier's JournalFinder

Find a journal by title

Already published? Just view the options for sharing your research data above.

1 Several studies have now shown that making data available for an article increases article citations.


Featured communities

Open repository for EU-funded research outputs from Horizon Europe, Euratom and earlier Framework Programmes.

Collection of items related to the Generic Mapping Tools software [www.generic-mapping-tools.org].

A community to share publications related to bio-systematics.

Generalist Repository Ecosystem Initiative (GREI) aims to develop collaborative approaches for data management and sharing through inclusion of the generalist repositories in the NIH data ecosystem.

Aurora is a university network platform for European university leaders, administrators, academics, and students to learn from and with each other. The projects we do and the results that can be shared publicly will be put on this community page.

ECEMF is a Horizon 2020-funded project aiming to establish a European forum for energy and climate researchers and policy makers to achieve climate neutrality.

Recent uploads

Curated OpenFF PhAlkEthOH Dataset: 1000 conformer test set, version "nc_1000_v0": This provides a curated hdf5 file for a subset of the OpenFF PhAlkEthOH dataset designed to be compatible with modelforge, an infrastructure to implement and train NNPs. This dataset contains 1000 total conformers, for 101 unique molecules (a maximum of 10...

Uploaded on June 20, 2024

Part of modelforge: an infrastructure to implement and train NNPs

1 more version exist for this record

Curated OpenFF PhAlkEthOH Dataset: Full dataset, version "full_dataset_v0": This provides a curated hdf5 file for the OpenFF PhAlkEthOH dataset designed to be compatible with modelforge, an infrastructure to implement and train NNPs. This dataset contains 1,188,709 total conformers, for 12,271 unique molecules. When applicable, the units...

A .zip file containing the source data files & Jupyter notebook for analysis of the Prymnesium parvum 12B1 PKZILLA-detecting proteomic results (https://doi.org/10.5281/zenodo.10023441), and the resulting files from the workflow. See "Analysis of proteomic results" section of the manuscript Materials and Methods for further detail. Key...

Part of Brad S. Moore Lab

Source data files, executable code, and results for A-type PKZILLA PKS domain phylogenetic analysis. Difference from previous version: Difference between v1.0 to v1.1 of this Zenodo item series: is that KR11f0, KR20f0 were renamed in the plots to K11*, KR20*, respectively, based on new interpretations. Difference between v1.1 to v1.2:...

2 more versions exist for this record

DAO-Analyzer's dataset. Explore data from Decentralized Autonomous Organizations deployed on the DAOstack, DAOhaus and Aragon platforms.

Part of EU Open Research Repository (Pilot)

245 more versions exist for this record

What's Changed weaver: bump version to 5.1.0 + debug notebook output by @fmigneault in https://github.com/bird-house/birdhouse-deploy/pull/449 weaver: bump version to 5.6.0 by @fmigneault in https://github.com/bird-house/birdhouse-deploy/pull/463 Full Changelog: https://github.com/bird-house/birdhouse-deploy/compare/2.4.2...2.5.0

Part of Ouranos Consortium on Regional Climatology and Climate Change Adaptation Computer Research Institute of Montreal Birdhouse

64 more versions exist for this record

• Annual electricity use is approx. 67 kWh/capita, one of the lowest in the world • Government anticipates electricity generation of 1000 MW by 2030 Research objectives • What is the best alternative technology for the expansion of the power sector in Sierra Leone? • What is the impact of natural gas power generation in the power sector? • How...

Uploaded on June 19, 2024

Part of Climate Compatible Growth

Slides for the EMI session at the World Biodiversity Forum 2024. https://worldbiodiversityforum2024.org/

Part of The Earth Metabolome Initiative

Submission ID: 157 H2IOSC (Humanities and cultural Heritage Italian Open Science Cloud) is a project funded by the National Recovery and Resilience Plan (PNRR) in Italy, aiming to create a federated cluster comprising the Italian branches of four European research infrastructures (RIs) - CLARIN, DARIAH, E-RIHS, OPERAS - operating in the...

Part of Workflows: Digital Methods for Reproducible Research Practices in the Arts and Humanities - DARIAH Annual Event 2024

The goal of scholarly editing is to reconstruct and publish texts by exploring philological phenomena and documenting them in critical apparatuses or editorial notes. Textual scholarship involves complex, multi-stage processes, and the digital landscape requires even additional effort from textual scholars, as it necessitates formal protocols...


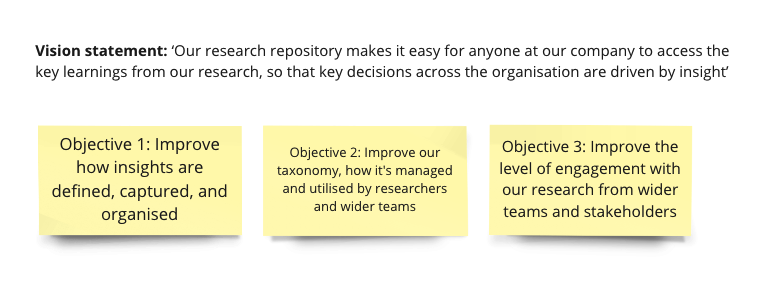

What is a research repository, and why do you need one?

Last updated

31 January 2024

Reviewed by

Miroslav Damyanov

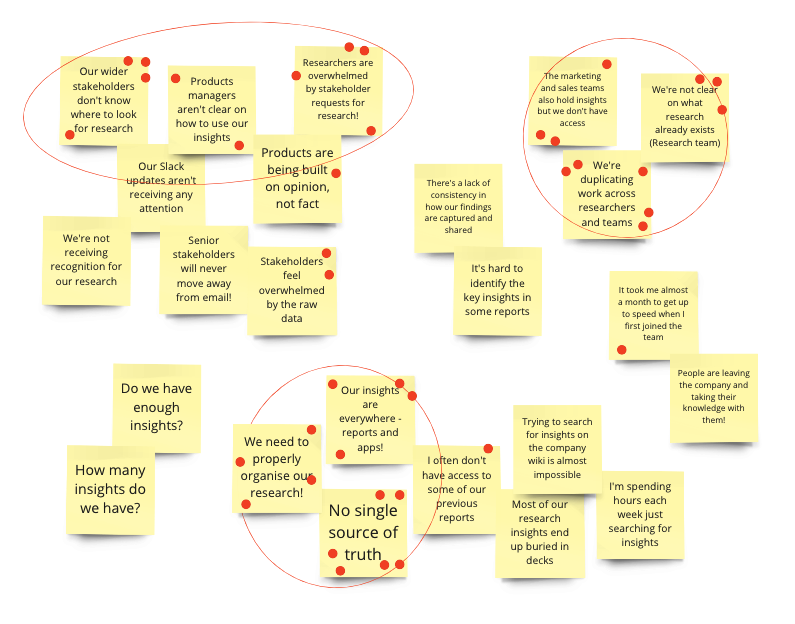

Without one organized source of truth, research can be left in silos, making it incomplete, redundant, and useless when it comes to gaining actionable insights.

A research repository can act as one cohesive place where teams can collate research in meaningful ways. This helps streamline the research process and ensures the insights gathered make a real difference.

- What is a research repository?

A research repository acts as a centralized database where information is gathered, stored, analyzed, and archived in one organized space.

In this single source of truth, raw data, documents, reports, observations, and insights can be viewed, managed, and analyzed. This allows teams to organize raw data into themes, gather actionable insights, and share those insights with key stakeholders.

Ultimately, the research repository can make the research you gain much more valuable to the wider organization.

- Why do you need a research repository?

Information gathered through the research process can be disparate, challenging to organize, and difficult to obtain actionable insights from.

Some of the most common challenges researchers face include the following:

Information being collected in silos

No single source of truth

Research being conducted multiple times unnecessarily

No seamless way to share research with the wider team

Reports get lost and go unread

Without a way to store information effectively, it can become disparate and inconclusive, lacking utility. This can lead to research being completed by different teams without new insights being gathered.

A research repository can streamline the information gathered to address those key issues, improve processes, and boost efficiency. Among other things, an effective research repository can:

Optimize processes: it can ensure the process of storing, searching, and sharing information is streamlined and optimized across teams.

Minimize redundant research: when all information is stored in one accessible place for all relevant team members, the chances of research being repeated are significantly reduced.

Boost insights: having one source of truth boosts the chances of being able to properly analyze all the research that has been conducted and draw actionable insights from it.

Provide comprehensive data: there’s less risk of gaps in the data when it can be easily viewed and understood. The overall research is also likely to be more comprehensive.

Increase collaboration: given that information can be more easily shared and understood, there’s a higher likelihood of better collaboration and positive actions across the business.

- What to include in a research repository

Including the right things in your research repository from the start can help ensure that it provides maximum benefit for your team.

Here are some of the things that should be included in a research repository:

An overall structure

There are many ways to organize the data you collect. To organize it in a way that’s valuable for your organization, you’ll need an overall structure that aligns with your goals.

You might wish to organize projects by research type, project, department, or when the research was completed. This will help you better understand the research you’re looking at and find it quickly.

Including information about the research—such as authors, titles, keywords, a description, and dates—can make searching through raw data much faster and make the organization process more efficient.

All key data and information

It’s essential to include all of the key data you’ve gathered in the repository, including supplementary materials. This prevents information gaps, and stakeholders can easily stay informed. You’ll need to include the following information, if relevant:

Research and journey maps

Tools and templates (such as discussion guides, email invitations, consent forms, and participant tracking)

Raw data and artifacts (such as videos, CSV files, and transcripts)

Research findings and insights in various formats (including reports, decks, maps, images, and tables)

Version control

It’s important to use a system that has version control. This ensures the changes (including updates and edits) made by various team members can be viewed and reversed if needed.

- What makes a good research repository?

The following key elements make up a good research repository that’s useful for your team:

Access: all key stakeholders should be able to access the repository to ensure there’s an effective flow of information.

Actionable insights: a well-organized research repository should help you get from raw data to actionable insights faster.

Effective searchability: searching through large amounts of research can be very time-consuming. To save time, maximize search and discoverability by clearly labeling and indexing information.

Accuracy: the research in the repository must be accurately completed and organized so that it can be acted on with confidence.

Security: when dealing with data, it’s also important to consider security regulations. For example, any personally identifiable information (PII) must be protected. Depending on the information you gather, you may need password protection, encryption, and access control so that only those who need to read the information can access it.

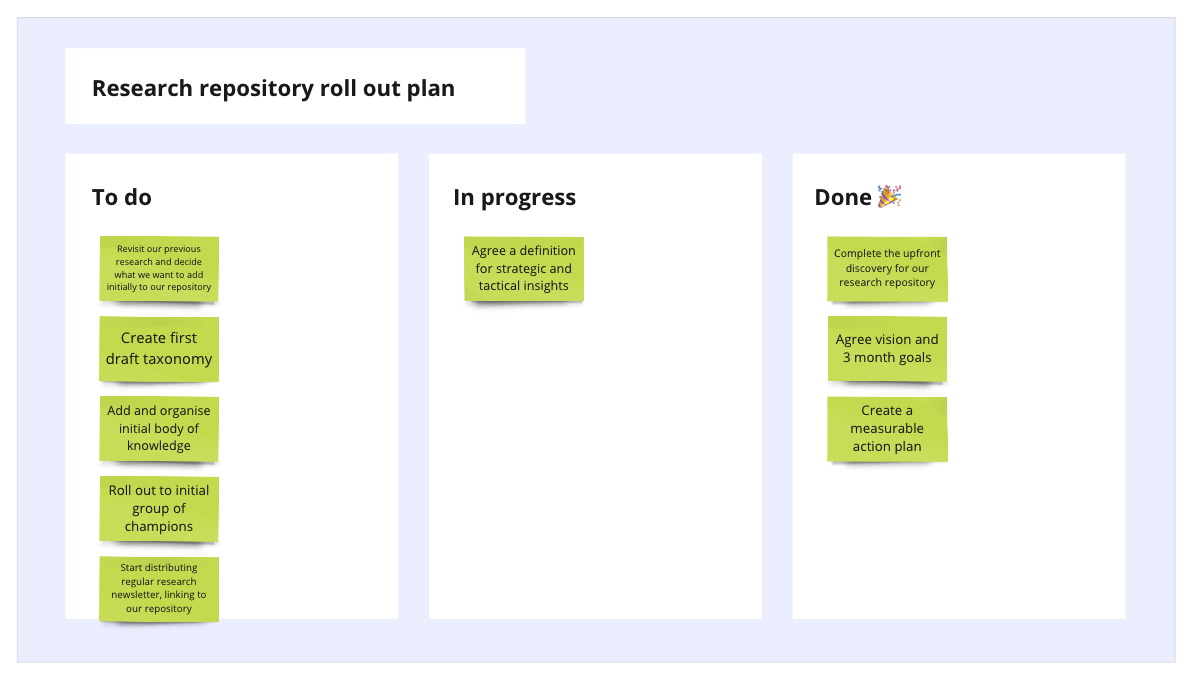

- How to create a research repository

Getting started with a research repository doesn’t have to be convoluted or complicated. Taking time at the beginning to set up the repository in an organized way can help keep processes simple further down the line.

The following six steps should simplify the process:

1. Define your goals

Before diving in, consider your organization’s goals. All research should align with these business goals, and they can help inform the repository.

As an example, your goal may be to deeply understand your customers and provide a better customer experience. Setting out this goal will help you decide what information should be collated into your research repository and how it should be organized for maximum benefit.

2. Choose a platform

When choosing a platform, consider the following:

Will it offer a single source of truth?

Is it simple to use?

Is it relevant to your project?

Does it align with your business’s goals?

3. Choose an organizational method

To ensure you’ll be able to easily search for the documents, studies, and data you need, choose an organizational method that will speed up this process.

Choosing whether to organize your data by project, date, research type, or customer segment will make a big difference later on.

4. Upload all materials

Once you have chosen the platform and organization method, it’s time to upload all the research materials you have gathered. This also means including supplementary materials and any other information that will provide a clear picture of your customers.

Keep in mind that the repository is a single source of truth. All materials that relate to the project at hand should be included.

5. Tag or label materials

Adding metadata to your materials will help ensure you can easily search for the information you need. While this process can take time (and can be tempting to skip), it will pay off in the long run.

The right labeling will help all team members access the materials they need. It will also prevent redundant research, which wastes valuable time and money.
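To make the value of tagging concrete, here is a minimal sketch of how labeled materials can be filtered once metadata is attached. The `ResearchItem` record and the `find_by_tags` helper are hypothetical names chosen for illustration; a real repository platform would provide its own data model and search.

```python
from dataclasses import dataclass, field


@dataclass
class ResearchItem:
    """Hypothetical record for one piece of research material."""
    title: str
    authors: list[str]
    date: str  # ISO 8601 date string, e.g. "2024-01-31"
    tags: set[str] = field(default_factory=set)


def find_by_tags(items: list[ResearchItem], required_tags: set[str]) -> list[ResearchItem]:
    """Return the items that carry every requested tag (case-insensitive)."""
    wanted = {tag.lower() for tag in required_tags}
    return [
        item for item in items
        if wanted <= {tag.lower() for tag in item.tags}
    ]


# Example usage with made-up materials.
library = [
    ResearchItem("Onboarding interviews", ["A. Researcher"], "2024-01-10",
                 {"interview", "onboarding"}),
    ResearchItem("Checkout usability test", ["B. Researcher"], "2024-02-02",
                 {"usability", "checkout"}),
    ResearchItem("Onboarding survey", ["A. Researcher"], "2024-03-15",
                 {"survey", "Onboarding"}),
]

onboarding_items = find_by_tags(library, {"onboarding"})
```

Consistent tags are what make this lookup useful: an item that was never labeled simply cannot be found this way, which is how untagged research ends up being repeated.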

6. Share insights

For research to be impactful, you’ll need to gather actionable insights. It’s simpler to spot trends, see themes, and recognize patterns when using a repository. These insights can be shared with key stakeholders for data-driven decision-making and positive action within the organization.

- Different types of research repositories

There are many different types of research repositories used across organizations. Here are some of them:

Data repositories: these are used to store large datasets to help organizations deeply understand their customers and other information.

Project repositories: data and information related to a specific project may be stored in a project-specific repository. This can help users understand what is and isn’t related to a project.

Government repositories: research funded by governments or public resources may be stored in government repositories. This data is often publicly available to promote transparent information sharing.

Thesis repositories: academic repositories can store information relevant to theses. This allows the information to be made available to the general public.

Institutional repositories: some organizations and institutions, such as universities, hospitals, and other companies, have repositories to store all relevant information related to the organization.

- Build your research repository in Dovetail

With Dovetail, building an insights hub is simple. It functions as a single source of truth where research can be gathered, stored, and analyzed in a streamlined way.

1. Get started with Dovetail

Dovetail is a scalable platform that helps your team easily share the insights you gather for positive actions across the business.

2. Assign a project lead

It’s helpful to have a clear project lead to create the repository. This makes it clear who is responsible and avoids duplication.

3. Create a project

To keep track of data, simply create a project. This is where you’ll upload all the necessary information.

You can create projects based on customer segments, specific products, research methods, or when the research was conducted. The project breakdown will relate back to your overall goals and mission.

4. Upload data and information

Now, you’ll need to upload all of the necessary materials. These might include data from customer interviews, sales calls, product feedback, usability testing, and more. You can also upload supplementary information.

5. Create a taxonomy

Create a taxonomy to organize the data effectively by ensuring that each piece of information will be tagged and organized.

When creating a taxonomy, consider your goals and how they relate to your customers. Ensure those tags are relevant and helpful.

6. Tag key themes

Once the taxonomy is created, tag each piece of information to ensure you can easily filter data, group themes, and spot trends and patterns.

With Dovetail, automatic clustering helps quickly sort through large amounts of information to uncover themes and highlight patterns. Sentiment analysis can also help you track positive and negative themes over time.

7. Share insights

With Dovetail, it’s simple to organize data by themes to uncover patterns and share impactful insights. You can share these insights with the wider team and key stakeholders, who can use them to make customer-informed decisions across the organization.

8. Use Dovetail as a source of truth

Use your Dovetail repository as a source of truth for new and historic data to keep data and information in one streamlined and efficient place. This will help you better understand your customers and, ultimately, deliver a better experience for them.



6 repositories to share your research data.


Why share research data?

Sharing information stimulates science. When researchers choose to make their data publicly available, they are allowing their work to contribute far beyond their original findings.

The benefits of data sharing are immense. When researchers make their data public, they increase transparency and trust in their work, they enable others to reproduce and validate their findings, and ultimately, contribute to the pace of scientific discovery by allowing others to reuse and build on top of their data.

"If I have seen further it is by standing on the shoulders of Giants." Isaac Newton, 1675.

While the benefits of data sharing and open science are categorical, sadly 86% of medical research data is never reused. A 2014 survey conducted by Wiley of over 2,000 researchers across different fields found that 21% of surveyed researchers did not know where to share their data and 16% did not know how to do so.

In a series of articles on Data Sharing we seek to break down this process for you and cover everything you need to know on how to share your research outputs.

In this first article, we will introduce essential concepts of public data and share six powerful platforms to upload and share datasets.

What is a Research Data Repository?

The best way to publish and share research data is with a research data repository. A repository is an online database that allows research data to be preserved across time and helps others find it.

Apart from archiving research data, a repository will assign a DOI to each uploaded object and provide a web page that tells what it is, how to cite it and how many times other researchers have cited or downloaded that object.

What is a DOI?

When a researcher uploads a document to an online data repository, a digital object identifier (DOI) will be assigned. A DOI is a globally unique and persistent string (e.g. 10.6084/m9.figshare.7509368.v1) that identifies your work permanently.

A data repository can assign a DOI to any document, such as a spreadsheet, image or presentation, and at different levels of hierarchy, like a collection of images or a specific chapter in a book.

Each DOI is registered together with metadata that provides users with relevant information about an object, such as the title, author, keywords, year of publication and the URL where that document is stored.
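As an illustration of the DOI format, the sketch below splits a DOI into its prefix (the part before the first slash, which starts with `10.`) and its suffix, and builds the corresponding `https://doi.org/` resolver link. The `Doi` type and the `parse_doi` and `resolver_url` helpers are hypothetical names, and the validation is deliberately simple rather than a full implementation of the DOI syntax rules.

```python
from typing import NamedTuple


class Doi(NamedTuple):
    prefix: str  # e.g. "10.6084", identifies the registrant
    suffix: str  # e.g. "m9.figshare.7509368.v1", chosen by the registrant


def parse_doi(doi: str) -> Doi:
    """Split a DOI string into prefix and suffix, tolerating common lead-ins."""
    cleaned = doi.strip()
    for lead in ("https://doi.org/", "http://doi.org/", "doi:"):
        if cleaned.lower().startswith(lead):
            cleaned = cleaned[len(lead):]
            break
    prefix, separator, suffix = cleaned.partition("/")
    if not separator or not prefix.startswith("10.") or not suffix:
        raise ValueError(f"not a recognizable DOI: {doi!r}")
    return Doi(prefix, suffix)


def resolver_url(doi: str) -> str:
    """Build the link that redirects to wherever the object is currently stored."""
    parsed = parse_doi(doi)
    return f"https://doi.org/{parsed.prefix}/{parsed.suffix}"


example = parse_doi("10.6084/m9.figshare.7509368.v1")
link = resolver_url("doi:10.6084/m9.figshare.7509368.v1")
```

Because the resolver link redirects to the stored URL in the DOI's metadata, the DOI itself can stay the same even if the repository later moves the file.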

The International DOI Foundation (IDF) developed and introduced the DOI in 2000. Registration Agencies, a federation of independent organizations, register DOIs and provide the necessary infrastructure that allows researchers to declare and maintain metadata.

Key benefits of the DOI system:

- A more straightforward way to track research outputs

- Gives certainty to scientific work

- DOI's versioning system tracks changes to work over time

- Can be assigned to any document

- Enables proper indexation and citation of research outputs

Once a document has a DOI, others can easily cite it. A handy tool to convert DOIs into citations is DOI Citation Formatter.
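The same conversion can also be scripted. The sketch below relies on DOI content negotiation, in which a request to `https://doi.org/` with an `Accept: text/x-bibliography` header returns a formatted citation. It assumes the DOI's registration agency supports this (Crossref and DataCite document it) and that the chosen style name is one the service recognizes. The function names are hypothetical, and `fetch_citation` needs network access.

```python
import urllib.request

DOI_RESOLVER = "https://doi.org/"


def build_citation_request(doi: str, style: str = "apa") -> urllib.request.Request:
    """Prepare (but do not send) a content-negotiation request for a citation."""
    return urllib.request.Request(
        DOI_RESOLVER + doi,
        headers={"Accept": f"text/x-bibliography; style={style}"},
    )


def fetch_citation(doi: str, style: str = "apa", timeout: float = 10.0) -> str:
    """Send the request and return the citation text (requires network access)."""
    request = build_citation_request(doi, style)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8").strip()


# Building the request works offline; only fetch_citation contacts the resolver.
request = build_citation_request("10.6084/m9.figshare.7509368.v1")
```

Calling `fetch_citation("10.6084/m9.figshare.7509368.v1")` would then return the citation as plain text, provided the resolver and the registration agency respond as assumed.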

Six repositories to share research data

Now that we have covered the role of a DOI and a data repository, below is a list of 6 data repositories for publishing and sharing research data.

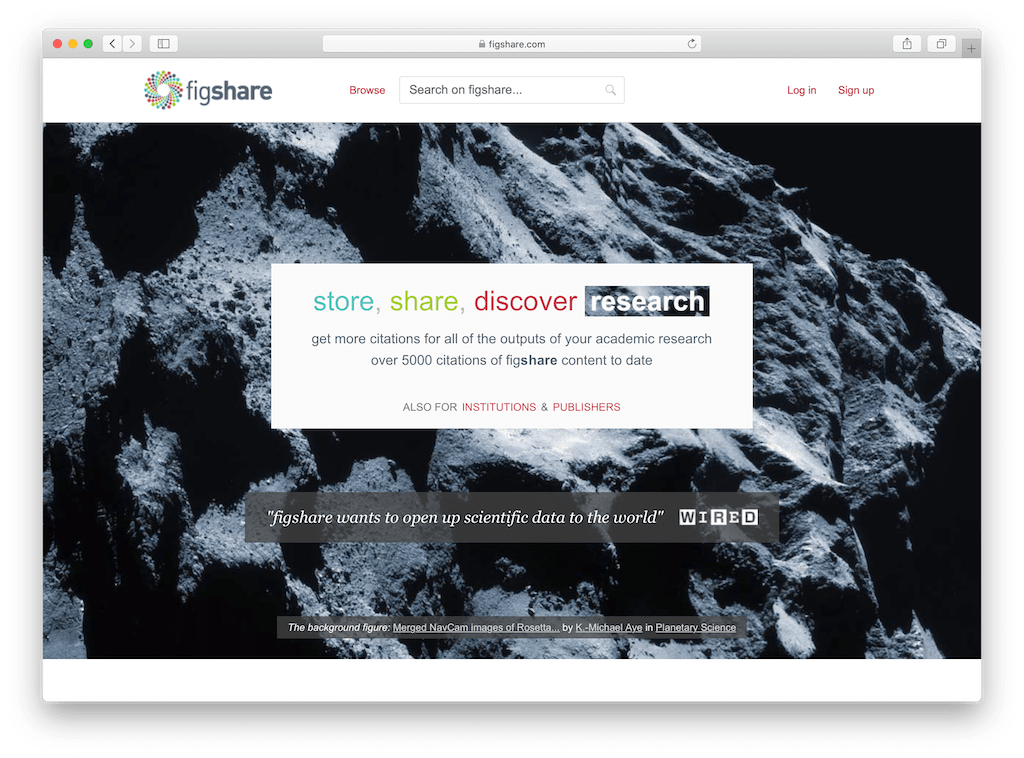

1. figshare

Figshare is an open access data repository where researchers can preserve their research outputs, such as datasets, images, and videos and make them discoverable.

Figshare allows researchers to upload any file format and assigns a digital object identifier (DOI) for citations.

Mark Hahnel launched Figshare in January 2011. Hahnel first developed the platform as a personal tool for organizing and publishing the outputs of his PhD in stem cell biology. More than 50 institutions now use this solution.

Figshare releases 'The State of Open Data' every year to assess the changing academic landscape around open research.

Free accounts on Figshare can upload files of up to 5 GB and get 20 GB of free storage.

2. Mendeley Data

Mendeley Data is an open research data repository, where researchers can store and share their data. Datasets can be shared privately between individuals, as well as publicly with the world.

Mendeley's mission is to facilitate data sharing. In their own words, "when research data is made publicly available, science benefits:

- the findings can be verified and reproduced

- the data can be reused in new ways

- discovery of relevant research is facilitated

- funders get more value from their funding investment."

Datasets uploaded to Mendeley Data go into a moderation process where they are reviewed. This ensures the content constitutes research data, is scientific, and does not contain a previously published research article.

Researchers can upload and store their work free of cost on Mendeley Data.

If appropriately used in the 21st century, data could save us from lots of failed interventions and enable us to provide evidence-based solutions towards tackling malaria globally. This is also part of what makes the ALMA scorecard generated by the African Leaders Malaria Alliance an essential tool for tracking malaria intervention globally. If we are able to know the financial resources deployed to fight malaria in an endemic country and equate it to the coverage and impact, it would be easier to strengthen accountability for malaria control and also track progress in malaria elimination across the continent of Africa and beyond.

Odinaka Kingsley Obeta

West African Lead, ALMA Youth Advisory Council/Zero Malaria Champion

3. Dryad Digital Repository

Dryad is a curated general-purpose repository that makes data discoverable, freely reusable, and citable.

Most types of files can be submitted (e.g., text, spreadsheets, video, photographs, software code) including compressed archives of multiple files.

Since a guiding principle of Dryad is to make its contents freely available for research and educational use, there are no access costs for individual users or institutions. Instead, Dryad supports its operation by charging a US$120 fee each time data is published.

4. Harvard Dataverse

Harvard Dataverse is an online data repository where scientists can preserve, share, cite and explore research data.

The Harvard Dataverse repository is powered by the open-source web application Dataverse, developed by the Institute for Quantitative Social Science at Harvard.

Researchers, journals and institutions may choose to install the Dataverse web application on their own server or use Harvard's installation. Harvard Dataverse is open to all scientific data from all disciplines.

Harvard Dataverse is free and has a limit of 2.5 GB per file and 10 GB per dataset.

5. Open Science Framework

OSF is a free, open-source research management and collaboration tool designed to help researchers document their project's lifecycle and archive materials. It is built and maintained by the nonprofit Center for Open Science.

Each user, project, component, and file is given a unique, persistent uniform resource locator (URL) to enable sharing and promote attribution. Projects can also be assigned digital object identifiers (DOIs) if they are made publicly available.

OSF is a free service.

6. Zenodo

Zenodo is a general-purpose open-access repository developed under the European OpenAIRE program and operated by CERN.

Zenodo began as the OpenAIRE orphan records repository, with the mission of providing open science compliance to researchers without an institutional repository, irrespective of their subject area, funder or nation.

Zenodo encourages users to upload their research outputs early in the research lifecycle by allowing them to be kept private. Once an associated paper is published, datasets are automatically made open.

Zenodo has no restriction on the file types that researchers may upload and accepts datasets of up to 50 GB.

Research data can save lives, help develop solutions and maximise our knowledge. Promoting collaboration and cooperation among a global research community is the first step to reduce the burden of wasted research.

Although the waste of research data is an alarming issue with billions of euros lost every year, the future is optimistic. The pressure to reduce the burden of wasted research is pushing journals, funders and academic institutions to make data sharing a strict requirement.

We hope with this series of articles on data sharing that we can light up the path for many researchers who are weighing the benefits of making their data open to the world.

The six research data repositories shared in this article are a practical way for researchers to preserve datasets across time and maximize the value of their work.
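The size limits quoted above for Figshare, Harvard Dataverse and Zenodo can be turned into a quick pre-upload check. The sketch below is only an illustration. It uses the figures stated in this article, which may have changed, assumes decimal gigabytes, and treats Zenodo's per-dataset limit as the ceiling for a single file. The names `LIMITS_BYTES` and `repositories_for` are hypothetical. Dryad, Mendeley Data and OSF are left out because no size limit is given for them here.

```python
GB = 10**9  # assumption: decimal gigabytes

# Size limits as quoted in this article; check each repository's current terms.
LIMITS_BYTES = {
    "Figshare": 5 * GB,                   # per file, free accounts
    "Harvard Dataverse": int(2.5 * GB),   # per file
    "Zenodo": 50 * GB,                    # per dataset
}


def repositories_for(file_size_bytes: int) -> list[str]:
    """Return the repositories whose quoted limit fits a single file of this size."""
    return [name for name, limit in LIMITS_BYTES.items() if file_size_bytes <= limit]


print(repositories_for(3 * GB))  # ['Figshare', 'Zenodo']
```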

Cover image by Copernicus Sentinel data (2019), processed by ESA, CC BY-SA 3.0 IGO.

Diego Menchaca

Diego is the founder and CEO of Teamscope. He started Teamscope from a scribble on a table. It instantly became his passion project and a vehicle into the unknown. Diego is originally from Chile and lives in Nijmegen, the Netherlands.

Understanding and using data repositories

What is a data repository?

A data repository is a storage space for researchers to deposit data sets associated with their research. If you’re an author seeking to comply with a journal data sharing policy, you’ll need to identify a suitable repository for your data.

An open access data repository stores data in a way that gives anyone immediate access, with no restrictions on who can use the repository.

How should I choose a data repository?

First, we recommend speaking to your institutional librarian, funder or colleagues at your institution for guidance on choosing a repository that is relevant to your discipline. You can also use FAIRsharing and re3data.org to search for a suitable repository – both provide a list of certified data repositories.

For cases where there is no subject-specific repository, you may wish to consider some of the generalist data repository types below.

4TU.ResearchData

ANDS contributing repositories

Dryad Digital Repository

Harvard Dataverse

Mendeley Data

Open Science Framework

Science Data Bank

Code Ocean (with code)

We encourage authors to select a data repository that issues a persistent identifier, preferably a Digital Object Identifier (DOI), and has established a robust preservation plan to ensure the data is preserved in perpetuity. Additionally, we highly encourage researchers to consider the FAIR Data Principles when depositing data.

Taylor & Francis Online supports ScholeXplorer data linking, helping you to establish a permanent link between your published article and its associated data. If you deposit your data in a ScholeXplorer-recognized repository, a link to your data will automatically appear on Taylor & Francis Online when your associated article is published.

Checklist for choosing a data repository

Use the Instructions for Authors to find out which data sharing policy your chosen journal adheres to.

Speak to your librarian for a recommendation that’s relevant to your discipline. There may be an institutional repository that is suitable.

Use FAIRsharing and re3data.org if you still haven’t found a suitable repository.

Frequently asked questions about using data repositories

The journal I’m submitting to is double-anonymous. What repository should I use?

If you’re submitting your article to a journal with a double-anonymous peer review policy and a data policy that mandates sharing, then you will need to deposit your data in a repository that preserves anonymity, i.e. removes the details of the authors.

You can use the repository Figshare to generate a ‘private sharing link’ for free. This can be sent via email, and the recipient can access the data without logging in or having a Figshare account. This feature is especially useful for anonymous peer review: the link anonymizes the data for reviewers, as it does not include the Author field or any non-Figshare branding. Note, however, that these links expire after one year, so you should not cite them in publications.

Dryad is another (paid for) alternative which allows you to make your data temporarily “private for peer review.” Dryad uses professional curators to ensure the validity of the files and descriptive information.

The policy states I need to share my data in a ‘FAIR aligned’ repository. What repository should I use?

The repository finder tool, developed by DataCite, allows you to search for repositories that are certified and support the FAIR data principles.

Read on to find out how you can choose a FAIR aligned repository.

I need to limit access to my data in a repository. How can I do this?

There are a number of generalist repositories which allow you to limit access to your data, whether permanently or following an embargo period. Some of the repositories offering this functionality include:

Figshare – You can generate a ‘private sharing link’ for free. This can be sent via email, and the recipient can access the data without logging in or having a Figshare account.

Zenodo – Users may deposit restricted files with the ability to share access with others if certain requirements are met. These files will not be publicly available. The depositor of the original file will need to approve sharing of the data. You can also deposit content under an embargo status and provide an end date for the embargo; at the end date the content will become publicly available automatically.