Careers in STEM: Why Should I Study Data Science?

Careers in data science are in high demand and offer high salaries and advancement opportunities. Learn five reasons to consider a career in the field.

Valerie Kirk

Data is often referred to as the new gold because it has become an essential raw material.

From smartphones to traffic cameras to weather satellites, modern technology devices are collecting massive amounts of data that support everything from cancer research to city planning.

The importance of data in today’s world spans all industries including healthcare, education, travel, and government. Business decisions are made based on data and improving customer experiences relies on data. It is also critical for our national defense. Simply put, today’s world runs on data.

But unlike gold, data does not have value in its raw state. To tap into the power of data to make smart, data-driven decisions, it has to be collected, cleaned, organized, and analyzed.

This is why data is also called the new oil, which also needs to be extracted and refined in order to have value.

That’s where the field of data science comes in.

What is Data Science?

Data science is the study of data to extract meaningful insights for business and government.

People who pursue a degree in data science study math and computer science. Their career path includes jobs where they handle, organize, and interpret massive volumes of information with the goal of discerning patterns. They also construct complex algorithms to build predictive models. Data science tasks include data processing, data analytics, and data visualization.

Data scientists are on the leading edge of innovation and emerging technology, including machine learning and artificial intelligence, which relies on a significant amount of digital data to generate insights.

Careers in data science are growing fast. Data science jobs are in high demand and can be found in nearly every industry. A few of the most common data science jobs include:

- Chief Data Officer

- Artificial Intelligence Engineer

- Data Scientist

- Data Engineer

- Machine Learning Engineer

- Software Engineer

- Data Modeler

- Data Analyst

- Big Data Engineer

Why is Data Science Important?

Just as data is the new gold and the new oil, data is also the new currency. For businesses, the insights derived from data science are essential for data-driven decision-making. They guide everything from the product lifecycle to fulfillment to office or warehouse locations. Data scientists provide information that’s critical to a company’s growth.

The benefits of data science extend beyond business. Government agencies from the federal level down to state and local entities also rely on data insights for emergency planning and response, public safety, city planning, intelligence gathering, national defense, and many other services.

Another reason why data science is important? It taps into the potential of artificial intelligence, which can improve productivity and efficiencies, provide stronger cybersecurity, and personalize customer experiences. To be effective, artificial intelligence relies on a lot of data, which is often pulled from massive data repositories and organized and analyzed by data scientists.


5 Reasons to Study Data Science

The field of data science is a great career choice that offers high salaries, opportunities across several industries, and long-term job security. Here are five reasons to consider a career in data science.

1. Data Scientists Are in High Demand

According to the United States Bureau of Labor Statistics, data scientist jobs are projected to grow 36% by 2031, which is much faster than the average for all occupations. Data science careers also offer significant potential for advancement, with the relatively new role of chief data officer becoming a key C-suite position across all types of businesses.

Because the high-demand field requires a special skill set, professionals with data science degrees or certificates are more likely to land a desired position in a top company and enjoy more job security.

2. Careers in Data Science Have High Earning Potential

That high demand also leads to higher salaries relative to other careers. According to Glassdoor, the estimated total pay for a data scientist in the United States is $126,200 per year.

New data scientists can expect starting salaries of around $100,000 per year, with experienced data scientists earning more than $200,000 per year. The average annual salary for chief data officers is $636,000, with top data executives clearing more than $1 million a year.

The salary potential is only expected to grow as data drives artificial intelligence innovations.

3. Data Science Skills are Going to Grow in Value

Think about this — smartphones, drones, satellites, sensors, security cameras, and other devices collect data 24 hours a day, seven days a week. Data is also being generated by organizations from every project, product launch, customer sale, employee action, and other business activities.

Then think about data that comes from every financial transaction, healthcare interaction, scholarly research project, and other initiatives outside of the business world. Data is continuously being generated from multiple sources for multiple uses — and that isn’t going to stop.

Turning all of that data into actionable insights is a unique, high-demand skill that will only grow in value as more data is generated. As technology advances, data scientists will be at the forefront of new breakthroughs and innovations. It’s an exciting and evolving career.

4. Data Science Provides a Wide Range of Job Opportunities

Every business, government agency, and educational institution generates data. They all need support in gaining insights from that data. Having a degree or certificate in data science gives people the flexibility to work in the industry that interests and inspires them.

5. Data Scientists Can Make the World a Better Place

While data scientists can offer insights to help businesses grow, they can also offer insights to help humanity. Data science careers include unique opportunities to make an impact on the world. Consider these initiatives where data science is playing a significant role:

- Climate change. To support climate control measures that could lower carbon dioxide emissions, the California Air Resources Board, Planet Labs, and the Environmental Defense Fund are working together on a Climate Data Partnership to track climate change from space.

- Medical research. The National Institutes of Health is working to improve biomedical research through its NIH Science and Technology Research Infrastructure for Discovery, Experimentation, and Sustainability Initiative, which enables access to rich datasets and breaks down data silos to support medical researchers.

- Rural planning. The U.S. Department of Agriculture launched a Rural Data Gateway to support farmers and ranchers in accessing the resources they need to support everything from sustainable farming practices to how to lower energy costs.

Other data-driven service-oriented initiatives include making cities safer for pedestrians and bikers, supporting affordable housing for underserved communities, and improving access to social services. Hear about other initiatives that are tapping into the power of AI for good in this fireside chat with Harvard Extension School’s director of IT programs, Bruce Huang.

Study Data Science at Harvard Extension School

If you are ready to start, advance, or pivot to a career in this exciting and growing field, Harvard Extension School offers a Data Science Master’s Degree Program.

The program focuses on mastering the technical, analytical, and practical skills needed to solve real-world, data-driven problems. The program covers predictive modeling, data mining, machine learning, artificial intelligence, data visualization, and big data. You will also learn how to apply data science and analytical methods to address data-rich problems and develop the skills for quantitative thought leadership, including the ethical and legal dimensions of data analytics.

The program includes 11 courses that can be taken online and one on-campus course, in which you develop a plan for a capstone project with peers and faculty. In the final capstone course, you will apply your new skills to a real-world challenge. Capstone project teams collaborate with industry, government, or academic institutions to explore the possibilities of using data science and analytics for good. Recent capstone projects include:

- Improving the climate change model used by NASA.

- Developing a tool that combines aerial imagery and advanced georeferencing techniques to assess damage in disaster-stricken areas.

- Using computer vision and video classification to develop a crime detection system for analyzing surveillance videos and identifying suspicious activities, contributing to enhanced public safety and crime prevention efforts.

- Predicting patient MRI scans in a hospital system to optimize resource allocation and ensure efficient patient care delivery.

- Streamlining the medical coding process to reduce errors and improve efficiencies.

You can also earn a Data Science Graduate Certificate through the Harvard Extension School. In this certificate program, you will:

- Master key facets of data investigation, including data wrangling, cleaning, sampling, management, exploratory analysis, regression and classification, prediction, and data communication.

- Implement foundational concepts of data computation, such as data structure, algorithms, parallel computing, simulation, and analysis.

- Leverage your knowledge of key subject areas, such as game theory, statistical quality control, exponential smoothing, seasonally adjusted trend analysis, or data visualization.

Four courses are required for the program and vary based on the data science career path you are interested in pursuing.

If you are thinking about advancing your career or making a career change into the growing data science field, learn more about the Data Science Master’s Degree program or the Data Science Graduate Certificate program including class requirements, tuition, and how to apply.

About the Author

Valerie Kirk is a freelance writer and corporate storyteller specializing in customer and community outreach and topics and trends in education, technology, and healthcare. Based in Maryland near the Chesapeake Bay, she spends her free time exploring nature by bike, paddleboard, or on long hikes with her family.


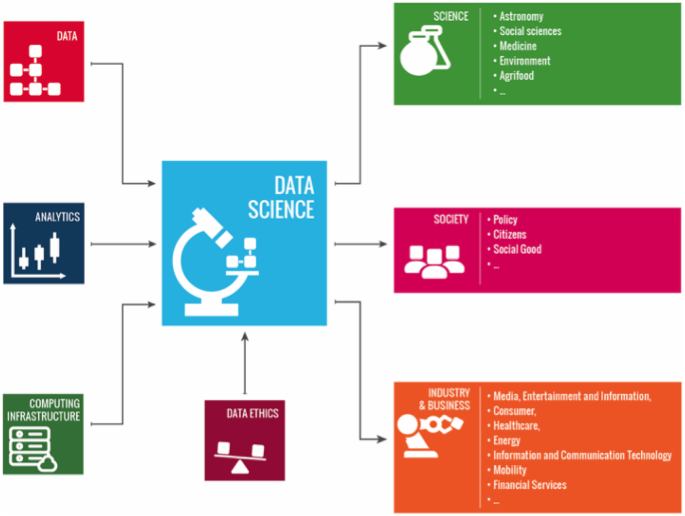

Data science combines math and statistics, specialized programming, advanced analytics, artificial intelligence (AI) and machine learning with specific subject matter expertise to uncover actionable insights hidden in an organization’s data. These insights can be used to guide decision making and strategic planning.

The accelerating volume of data sources, and subsequently data, has made data science one of the fastest-growing fields across every industry. As a result, it is no surprise that the role of the data scientist was dubbed the “sexiest job of the 21st century” by Harvard Business Review. Organizations are increasingly reliant on data scientists to interpret data and provide actionable recommendations to improve business outcomes.

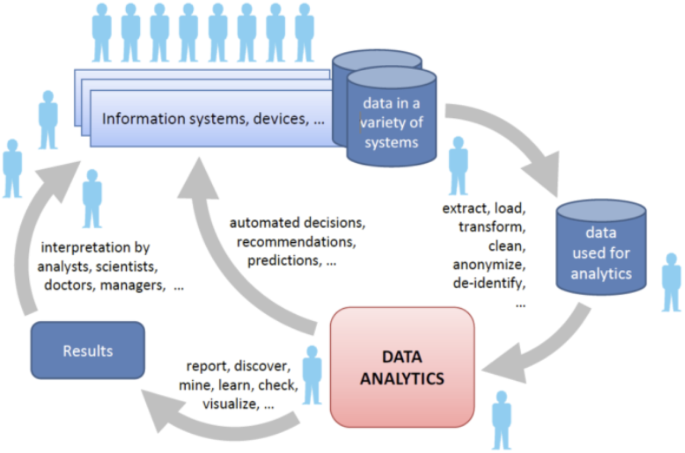

The data science lifecycle involves various roles, tools, and processes, which enable analysts to glean actionable insights. Typically, a data science project undergoes the following stages:

- Data ingestion: The lifecycle begins with data collection, gathering both raw structured and unstructured data from all relevant sources using a variety of methods. These methods can include manual entry, web scraping, and real-time streaming data from systems and devices. Data sources can include structured data, such as customer data, along with unstructured data like log files, video, audio, pictures, the Internet of Things (IoT), social media, and more.

- Data storage and data processing: Since data can have different formats and structures, companies need to consider different storage systems based on the type of data that needs to be captured. Data management teams help to set standards around data storage and structure, which facilitate workflows around analytics, machine learning and deep learning models. This stage includes cleaning data, deduplicating, transforming and combining the data using ETL (extract, transform, load) jobs or other data integration technologies. This data preparation is essential for promoting data quality before loading into a data warehouse, data lake, or other repository.

- Data analysis: Here, data scientists conduct an exploratory data analysis to examine biases, patterns, ranges, and distributions of values within the data. This data analytics exploration drives hypothesis generation for A/B testing. It also allows analysts to determine the data’s relevance for use within modeling efforts for predictive analytics, machine learning, and/or deep learning. Depending on a model’s accuracy, organizations can become reliant on these insights for business decision making, allowing them to drive more scalability.

- Communicate: Finally, insights are presented as reports and other data visualizations that make the insights, and their impact on the business, easier for business analysts and other decision-makers to understand. A data science programming language such as R or Python includes components for generating visualizations; alternatively, data scientists can use dedicated visualization tools.
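
As a rough illustration of the stages above, the following Python sketch walks a tiny, made-up dataset through ingestion, preparation, analysis, and communication. The CSV contents, field names, and function names are all hypothetical, and only the standard library is used; a real pipeline would typically rely on dedicated ETL tooling and a data warehouse or data lake rather than in-memory lists.

```python
import csv
import io
import statistics

# Hypothetical raw export: one duplicate row, one missing value, inconsistent casing.
RAW_CSV = """customer_id,region,order_total
1,north,120.50
2,South,80.00
2,South,80.00
3,north,
4,south,45.25
"""


def ingest(text):
    """Data ingestion: parse raw CSV text into a list of dict records."""
    return list(csv.DictReader(io.StringIO(text)))


def prepare(rows):
    """Data processing: drop incomplete rows, normalize fields, deduplicate."""
    seen = set()
    cleaned = []
    for row in rows:
        if not row["order_total"]:
            continue  # skip rows with a missing order total
        key = (
            int(row["customer_id"]),
            row["region"].strip().lower(),
            float(row["order_total"]),
        )
        if key in seen:
            continue  # skip exact duplicates
        seen.add(key)
        cleaned.append(
            {"customer_id": key[0], "region": key[1], "order_total": key[2]}
        )
    return cleaned


def analyze(rows):
    """Data analysis: compute the mean order total per region."""
    by_region = {}
    for row in rows:
        by_region.setdefault(row["region"], []).append(row["order_total"])
    return {region: statistics.mean(values) for region, values in by_region.items()}


def communicate(summary):
    """Communicate: render the summary as a plain-text report."""
    return "\n".join(
        f"{region}: {mean:.2f}" for region, mean in sorted(summary.items())
    )


records = prepare(ingest(RAW_CSV))
summary = analyze(records)
report = communicate(summary)
print(report)
```

Keeping each stage as a separate function mirrors the way the stages are often owned by different roles, so one stage can be swapped (for example, replacing the text report with a chart) without touching the others.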


Data science is considered a discipline, while data scientists are the practitioners within that field. Data scientists are not necessarily directly responsible for all the processes involved in the data science lifecycle. For example, data pipelines are typically handled by data engineers—but the data scientist may make recommendations about what sort of data is useful or required. While data scientists can build machine learning models, scaling these efforts at a larger level requires more software engineering skills to optimize a program to run more quickly. As a result, it’s common for a data scientist to partner with machine learning engineers to scale machine learning models.

Data scientist responsibilities can commonly overlap with those of a data analyst, particularly with exploratory data analysis and data visualization. However, a data scientist’s skillset is typically broader than that of the average data analyst. Comparatively speaking, data scientists leverage common programming languages, such as R and Python, to conduct more statistical inference and data visualization.

To perform these tasks, data scientists require computer science and pure science skills beyond those of a typical business analyst or data analyst. The data scientist must also understand the specifics of the business, such as automobile manufacturing, eCommerce, or healthcare.

In short, a data scientist must be able to:

- Know enough about the business to ask pertinent questions and identify business pain points.

- Apply statistics and computer science, along with business acumen, to data analysis.

- Use a wide range of tools and techniques for preparing and extracting data—everything from databases and SQL to data mining to data integration methods.

- Extract insights from big data using predictive analytics and artificial intelligence (AI), including machine learning models, natural language processing, and deep learning.

- Write programs that automate data processing and calculations.

- Tell—and illustrate—stories that clearly convey the meaning of results to decision-makers and stakeholders at every level of technical understanding.

- Explain how the results can be used to solve business problems.

- Collaborate with other data science team members, such as data and business analysts, IT architects, data engineers, and application developers.

These skills are in high demand, and as a result, many individuals who are breaking into a data science career explore a variety of data science programs, such as certification programs, data science courses, and degree programs offered by educational institutions.


It may be easy to confuse the terms “data science” and “business intelligence” (BI) because they both relate to an organization’s data and analysis of that data, but they do differ in focus.

Business intelligence (BI) is typically an umbrella term for the technology that enables data preparation, data mining, data management, and data visualization. Business intelligence tools and processes allow end users to identify actionable information from raw data, facilitating data-driven decision-making within organizations across various industries. While data science tools overlap in much of this regard, business intelligence focuses more on data from the past, and the insights from BI tools are more descriptive in nature. It uses data to understand what happened before to inform a course of action. BI is geared toward static (unchanging) data that is usually structured. While data science uses descriptive data, it typically utilizes it to determine predictive variables, which are then used to categorize data or to make forecasts.

Data science and BI are not mutually exclusive—digitally savvy organizations use both to fully understand and extract value from their data.

Data scientists rely on popular programming languages to conduct exploratory data analysis and statistical regression. These open source tools support pre-built statistical modeling, machine learning, and graphics capabilities. These languages include the following (read more at "Python vs. R: What's the Difference?"):

- R: An open source programming language and environment for statistical computing and graphics, commonly used through the RStudio development environment.

- Python: A dynamic and flexible programming language. The Python ecosystem includes numerous libraries, such as NumPy, Pandas, and Matplotlib, for analyzing data quickly.

To facilitate sharing code and other information, data scientists may use GitHub and Jupyter notebooks.

Some data scientists may prefer a user interface, and two common enterprise tools for statistical analysis include:

- SAS: A comprehensive tool suite, including visualizations and interactive dashboards, for analyzing, reporting, data mining, and predictive modeling.

- IBM SPSS: Offers advanced statistical analysis, a large library of machine learning algorithms, text analysis, open source extensibility, integration with big data, and seamless deployment into applications.

Data scientists also gain proficiency in using big data processing platforms, such as Apache Spark, the open source framework Apache Hadoop, and NoSQL databases. They are also skilled with a wide range of data visualization tools, including simple graphics tools included with business presentation and spreadsheet applications (like Microsoft Excel), built-for-purpose commercial visualization tools like Tableau and IBM Cognos, and open source tools like D3.js (a JavaScript library for creating interactive data visualizations) and RAW Graphs. For building machine learning models, data scientists frequently turn to several frameworks like PyTorch, TensorFlow, MXNet, and Spark MLlib.

Because data science has a steep learning curve, many companies that are seeking to accelerate their return on investment for AI projects struggle to hire the talent needed to realize a data science project’s full potential. To address this gap, they are turning to multipersona data science and machine learning (DSML) platforms, giving rise to the role of “citizen data scientist.”

Multipersona DSML platforms use automation, self-service portals, and low-code/no-code user interfaces so that people with little or no background in digital technology or expert data science can create business value using data science and machine learning. These platforms also support expert data scientists by offering a more technical interface. Using a multipersona DSML platform encourages collaboration across the enterprise.

Cloud computing scales data science by providing access to additional processing power, storage, and other tools required for data science projects.

Since data science frequently leverages large data sets, tools that can scale with the size of the data are incredibly important, particularly for time-sensitive projects. Cloud storage solutions, such as data lakes, provide access to storage infrastructure that is capable of ingesting and processing large volumes of data with ease. These storage systems provide flexibility to end users, allowing them to spin up large clusters as needed. They can also add incremental compute nodes to expedite data processing jobs, allowing the business to make short-term tradeoffs for a larger long-term outcome. Cloud platforms typically have different pricing models, such as per-use or subscription, to meet the needs of their end users, whether they are a large enterprise or a small startup.

Open source technologies are widely used in data science tool sets. When they’re hosted in the cloud, teams don’t need to install, configure, maintain, or update them locally. Several cloud providers, including IBM Cloud®, also offer prepackaged tool kits that enable data scientists to build models without coding, further democratizing access to technology innovations and data insights.

Enterprises can unlock numerous benefits from data science. Common use cases include process optimization through intelligent automation and enhanced targeting and personalization to improve the customer experience (CX). Here are a few more specific, representative use cases for data science and artificial intelligence:

- An international bank delivers faster loan services with a mobile app using machine learning-powered credit risk models and a hybrid cloud computing architecture that is both powerful and secure.

- An electronics firm is developing ultra-powerful 3D-printed sensors to guide tomorrow’s driverless vehicles. The solution relies on data science and analytics tools to enhance its real-time object detection capabilities.

- A robotic process automation (RPA) solution provider developed a cognitive business process mining solution that reduces incident handling times by between 15% and 95% for its client companies. The solution is trained to understand the content and sentiment of customer emails, directing service teams to prioritize those that are most relevant and urgent.

- A digital media technology company created an audience analytics platform that enables its clients to see what’s engaging TV audiences as they’re offered a growing range of digital channels. The solution employs deep analytics and machine learning to gather real-time insights into viewer behavior.

- An urban police department created statistical incident analysis tools to help officers understand when and where to deploy resources in order to prevent crime. The data-driven solution creates reports and dashboards to augment situational awareness for field officers.

- Shanghai Changjiang Science and Technology Development used IBM® Watson® technology to build an AI-based medical assessment platform that can analyze existing medical records to categorize patients based on their risk of experiencing a stroke and that can predict the success rate of different treatment plans.



Data Science and Analytics: An Overview from Data-Driven Smart Computing, Decision-Making and Applications Perspective

Iqbal H. Sarker

1 Swinburne University of Technology, Melbourne, VIC 3122 Australia

2 Department of Computer Science and Engineering, Chittagong University of Engineering & Technology, Chittagong, 4349 Bangladesh

The digital world has a wealth of data, such as internet of things (IoT) data, business data, health data, mobile data, urban data, security data, and many more, in the current age of the Fourth Industrial Revolution (Industry 4.0 or 4IR). Knowledge or useful insights extracted from these data can be used for smart decision-making in various application domains. In the area of data science, advanced analytics methods including machine learning modeling can provide actionable insights or deeper knowledge about data, which makes the computing process automatic and smart. In this paper, we present a comprehensive view on “Data Science” including various types of advanced analytics methods that can be applied to enhance the intelligence and capabilities of an application through smart decision-making in different scenarios. We also discuss and summarize ten potential real-world application domains including business, healthcare, cybersecurity, urban and rural data science, and so on by taking into account data-driven smart computing and decision making. Based on this, we finally highlight the challenges and potential research directions within the scope of our study. Overall, this paper aims to serve as a reference point on data science and advanced analytics for researchers and decision-makers as well as application developers, particularly from the data-driven solution point of view for real-world problems.

Introduction

We are living in the age of “data science and advanced analytics”, where almost everything in our daily lives is digitally recorded as data [17]. Thus the current electronic world holds a wealth of various kinds of data, such as business data, financial data, healthcare data, multimedia data, internet of things (IoT) data, cybersecurity data, social media data, etc. [112]. The data can be structured, semi-structured, or unstructured, and its volume increases day by day [105]. Data science is typically a “concept to unify statistics, data analysis, and their related methods” to understand and analyze actual phenomena with data. According to Cao et al. [17], “data science is the science of data” or “data science is the study of data”, where a data product is a data deliverable, or data-enabled or guided, which can be a discovery, prediction, service, suggestion, insight into decision-making, thought, model, paradigm, tool, or system. The popularity of “Data science” is increasing day by day, as shown in Fig. 1 according to Google Trends data over the last 5 years [36]. In addition to data science, the figure also shows the popularity trends of relevant areas such as “Data analytics”, “Data mining”, “Big data”, and “Machine learning”. According to Fig. 1, the popularity indication values for these data-driven domains, particularly “Data science” and “Machine learning”, are increasing day by day. This statistical information, together with the applicability of data-driven smart decision-making in various real-world application areas, motivates us to briefly study “Data science” and machine-learning-based “Advanced analytics” in this paper.

Fig. 1. The worldwide popularity score of data science compared with relevant areas on a scale of 0 (min) to 100 (max) over time, where the x-axis represents the timestamp information and the y-axis represents the corresponding score

Usually, data science is the field of applying advanced analytics methods and scientific concepts to derive useful business information from data. The emphasis of advanced analytics is more on anticipating the use of data to detect patterns and determine what is likely to occur in the future. Basic analytics offers a description of data in general, while advanced analytics goes a step further by offering a deeper understanding of data and helping to analyze granular data, which is our interest here. In the field of data science, several types of analytics are popular, such as "Descriptive analytics", which answers the question of what happened; "Diagnostic analytics", which answers the question of why it happened; "Predictive analytics", which predicts what will happen in the future; and "Prescriptive analytics", which prescribes what action should be taken. These are discussed briefly in “Advanced analytics methods and smart computing”. Such advanced analytics and decision-making based on machine learning techniques [105], a major part of artificial intelligence (AI) [102], can also play a significant role in the Fourth Industrial Revolution (Industry 4.0) due to their learning capability for smart computing as well as automation [121].
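
To make the four analytics types more concrete, the following minimal Python sketch applies each of them to a small invented dataset. All numbers, variable names, and the sales target are hypothetical. The least-squares line and the Pearson correlation are deliberately simple stand-ins chosen for illustration; they are not the methods proposed in this paper, and a correlation on its own does not establish why something happened.

```python
import math
import statistics

# Hypothetical monthly figures, invented purely for illustration.
ad_spend = [10.0, 12.0, 14.0, 16.0, 18.0, 20.0]
sales = [105.0, 118.0, 133.0, 145.0, 162.0, 171.0]


def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    mean_x, mean_y = statistics.mean(xs), statistics.mean(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length series."""
    mean_x, mean_y = statistics.mean(xs), statistics.mean(ys)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


# Descriptive: what happened?
mean_sales = statistics.mean(sales)

# Diagnostic: why did it happen? (correlation hints at, but does not prove, a cause)
r = pearson(ad_spend, sales)

# Predictive: what is likely to happen at a spend level not yet observed?
slope, intercept = fit_line(ad_spend, sales)
forecast = slope * 22.0 + intercept

# Prescriptive: what should be done? Pick the smallest candidate spend
# whose predicted sales reach a hypothetical target of 180.
target = 180.0
candidates = [18.0, 20.0, 22.0, 24.0]
recommended = min(c for c in candidates if slope * c + intercept >= target)

print(f"mean sales: {mean_sales:.1f}, correlation: {r:.3f}")
print(f"forecast at spend 22: {forecast:.1f}, recommended spend: {recommended}")
```

Note how each step builds on the previous one: the prescriptive recommendation is only as reliable as the predictive model underneath it, which in turn depends on the relationship suggested by the diagnostic step.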

Although the area of “data science” is huge, we mainly focus on deriving useful insights through advanced analytics, where the results are used to make smart decisions in various real-world application areas. For this, various advanced analytics methods such as machine learning modeling, natural language processing, sentiment analysis, neural network, or deep learning analysis can provide deeper knowledge about data, and thus can be used to develop data-driven intelligent applications. More specifically, regression analysis, classification, clustering analysis, association rules, time-series analysis, sentiment analysis, behavioral patterns, anomaly detection, factor analysis, log analysis, and deep learning which is originated from the artificial neural network, are taken into account in our study. These machine learning-based advanced analytics methods are discussed briefly in “ Advanced analytics methods and smart computing ”. Thus, it’s important to understand the principles of various advanced analytics methods mentioned above and their applicability to apply in various real-world application areas. For instance, in our earlier paper Sarker et al. [ 114 ], we have discussed how data science and machine learning modeling can play a significant role in the domain of cybersecurity for making smart decisions and to provide data-driven intelligent security services. In this paper, we broadly take into account the data science application areas and real-world problems in ten potential domains including the area of business data science, health data science, IoT data science, behavioral data science, urban data science, and so on, discussed briefly in “ Real-world application domains ”.

Based on the importance of machine learning modeling for extracting useful insights from data and for data-driven smart decision-making, in this paper we present a comprehensive view of “Data Science”, including various types of advanced analytics methods that can be applied to enhance the intelligence and the capabilities of an application. The key contribution of this study is thus understanding data science modeling and explaining different analytics methods from a solution perspective, along with their applicability in the real-world data-driven application areas mentioned earlier. Overall, the purpose of this paper is to provide a basic guide or reference for people in academia and industry who want to study, research, and develop automated and intelligent applications or systems based on smart computing and decision making within the area of data science.

The main contributions of this paper are summarized as follows:

- To define the scope of our study towards data-driven smart computing and decision-making in real-world life. We also briefly discuss the concept of data science modeling, from business problems to data product and automation, to understand its applicability and provide intelligent services in real-world scenarios.

- To provide a comprehensive view on data science including advanced analytics methods that can be applied to enhance the intelligence and the capabilities of an application.

- To discuss the applicability and significance of machine learning-based analytics methods in various real-world application areas. We also summarize ten potential real-world application areas, from business to personalized applications in our daily life, where advanced analytics with machine learning modeling can be used to achieve the expected outcome.

- To highlight and summarize the challenges and potential research directions within the scope of our study.

The rest of the paper is organized as follows. The next section provides the background and related work and defines the scope of our study. The following section presents the concepts of data science modeling for building a data-driven application. After that, we briefly discuss and explain different advanced analytics methods and smart computing. Various real-world application areas are discussed and summarized in the next section. We then highlight and summarize several research issues and potential future directions, and finally, the last section concludes this paper.

Background and Related Work

In this section, we first discuss various data terms and works related to data science and highlight the scope of our study.

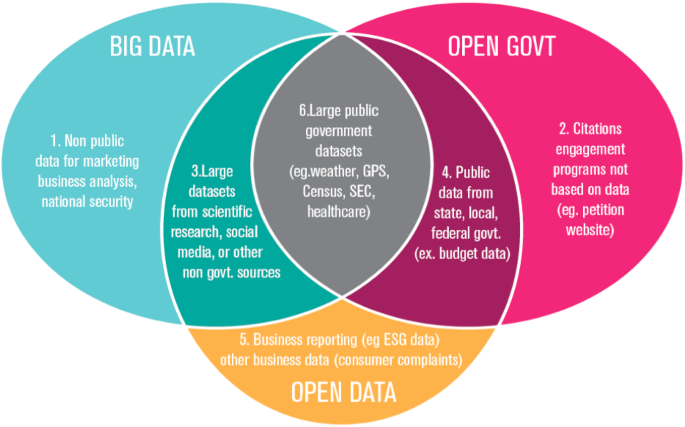

Data Terms and Definitions

There is a range of key terms in the field, such as data analysis, data mining, data analytics, big data, data science, advanced analytics, machine learning, and deep learning, which are highly related and easily confused. In the following, we define these terms and differentiate them from the term “Data Science” according to our goal.

The term “Data analysis” refers to the processing of data by conventional (e.g., classic statistical, empirical, or logical) theories, technologies, and tools for extracting useful information and for practical purposes [ 17 ]. The term “Data analytics”, on the other hand, refers to the theories, technologies, instruments, and processes that allow for an in-depth understanding and exploration of actionable data insight [ 17 ]. Statistical and mathematical analysis of the data is the major concern in this process. “Data mining” is another popular term over the last decade, which has a similar meaning with several other terms such as knowledge mining from data, knowledge extraction, knowledge discovery from data (KDD), data/pattern analysis, data archaeology, and data dredging. According to Han et al. [ 38 ], it should have been more appropriately named “knowledge mining from data”. Overall, data mining is defined as the process of discovering interesting patterns and knowledge from large amounts of data [ 38 ]. Data sources may include databases, data centers, the Internet or Web, other repositories of data, or data dynamically streamed through the system. “Big data” is another popular term nowadays, which may change the statistical and data analysis approaches as it has the unique features of “massive, high dimensional, heterogeneous, complex, unstructured, incomplete, noisy, and erroneous” [ 74 ]. Big data can be generated by mobile devices, social networks, the Internet of Things, multimedia, and many other new applications [ 129 ]. Several unique features including volume, velocity, variety, veracity, value (5Vs), and complexity are used to understand and describe big data [ 69 ].

In terms of analytics, basic analytics provides a summary of data, whereas “Advanced Analytics” takes a step forward in offering a deeper understanding of data and helps to analyze granular data. Advanced analytics is characterized or defined as autonomous or semi-autonomous data or content analysis using advanced techniques and methods to discover deeper insights, make predictions, or generate recommendations, typically beyond traditional business intelligence or analytics. “Machine learning”, a branch of artificial intelligence (AI), is one of the major techniques used in advanced analytics and can automate analytical model building [ 112 ]. It rests on the premise that systems can learn from data, recognize trends, and make decisions with minimal human involvement [ 38 , 115 ]. “Deep Learning” is a subfield of machine learning concerned with algorithms, called artificial neural networks, that are inspired by the structure and function of the human brain [ 38 , 139 ].

Unlike the above data-related terms, “Data science” is an umbrella term that encompasses advanced data analytics, data mining, machine and deep learning modeling, and several other related disciplines like statistics, to extract insights or useful knowledge from datasets and transform them into actionable business strategies. In [ 17 ], Cao et al. defined data science from the disciplinary perspective as “data science is a new interdisciplinary field that synthesizes and builds on statistics, informatics, computing, communication, management, and sociology to study data and its environments (including domains and other contextual aspects, such as organizational and social aspects) to transform data to insights and decisions by following a data-to-knowledge-to-wisdom thinking and methodology”. In “Understanding data science modeling”, we briefly discuss data science modeling from a practical perspective, starting from business problems and ending with data products, which can assist data scientists in thinking and working in a particular real-world problem domain within the area of data science and analytics.

Related Work

In the area, several papers have been reviewed by the researchers based on data science and its significance. For example, the authors in [ 19 ] identify the evolving field of data science and its importance in the broader knowledge environment and some issues that differentiate data science and informatics issues from conventional approaches in information sciences. Donoho et al. [ 27 ] present 50 years of data science including recent commentary on data science in mass media, and on how/whether data science varies from statistics. The authors formally conceptualize the theory-guided data science (TGDS) model in [ 53 ] and present a taxonomy of research themes in TGDS. Cao et al. include a detailed survey and tutorial on the fundamental aspects of data science in [ 17 ], which considers the transition from data analysis to data science, the principles of data science, as well as the discipline and competence of data education.

Besides, the authors include a data science analysis in [ 20 ], which aims to provide a realistic overview of the use of statistical features and related data science methods in bioimage informatics. The authors in [ 61 ] study the key streams of data science algorithm use at central banks and show how their popularity has risen over time. This research contributes to the creation of a research vector on the role of data science in central banking. In [ 62 ], the authors provide an overview and tutorial on the data-driven design of intelligent wireless networks. The authors in [ 87 ] provide a thorough understanding of computational optimal transport with application to data science. In [ 97 ], the authors present data science as theoretical contributions in information systems via text analytics.

Unlike the above recent studies, in this paper, we concentrate on the knowledge of data science including advanced analytics methods, machine learning modeling, real-world application domains, and potential research directions within the scope of our study. The advanced analytics methods based on machine learning techniques discussed in this paper can be applied to enhance the capabilities of an application in terms of data-driven intelligent decision making and automation in the final data product or systems.

Understanding Data Science Modeling

In this section, we briefly discuss how data science can play a significant role in the real-world business process. For this, we first categorize various types of data and then discuss the major steps of data science modeling starting from business problems to data product and automation.

Types of Real-World Data

Typically, the availability of data is the key to building a data-driven real-world system in a particular domain [ 17 , 112 , 114 ]. Data can be of different types: (i) structured data, which has a well-defined data structure and follows a standard order, for example names, dates, addresses, credit card numbers, stock information, and geolocation; (ii) unstructured data, which has no pre-defined format or organization, for example sensor data, emails, blog entries, wikis, word processing documents, PDF files, audio files, videos, images, presentations, and web pages; (iii) semi-structured data, which has elements of both structured and unstructured data and contains certain organizational properties, for example HTML, XML, and JSON documents and NoSQL databases; and (iv) metadata, which represents data about the data, for example author, file type, file size, creation date and time, and last modification date and time [ 38 , 105 ].
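To make the four categories concrete, the following minimal Python sketch shows one toy value of each type and flattens a semi-structured JSON record into structured rows. All names and values (`structured_row`, `semi_structured`, the visit record, and so on) are hypothetical and chosen only for illustration.

```python
import json

# Structured: fixed fields in a fixed order, like one row of a relational table.
structured_row = ("Alice", "2021-03-14", "Dhaka")

# Semi-structured: JSON carries its own field names; fields may be nested or optional.
semi_structured = '{"name": "Alice", "visits": [{"date": "2021-03-14", "city": "Dhaka"}]}'

# Unstructured: free text with no pre-defined fields.
unstructured = "Alice wrote that she visited Dhaka in mid-March."

# Metadata: data about the data (here, about the JSON document itself).
metadata = {"file_type": "json", "size_bytes": len(semi_structured.encode("utf-8"))}

# Flatten the semi-structured record into structured rows, one per visit.
record = json.loads(semi_structured)
rows = [(record["name"], visit["date"], visit["city"]) for visit in record["visits"]]
```

The unstructured sentence carries the same facts as the structured row, but recovering them would require text analysis rather than a field lookup.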

In the area of data science, researchers use various widely-used datasets for different purposes. These are, for example, cybersecurity datasets such as NSL-KDD [ 127 ], UNSW-NB15 [ 79 ], Bot-IoT [ 59 ], ISCX’12 [ 15 ], CIC-DDoS2019 [ 22 ], etc., smartphone datasets such as phone call logs [ 88 , 110 ], mobile application usages logs [ 124 , 149 ], SMS Log [ 28 ], mobile phone notification logs [ 77 ] etc., IoT data [ 56 , 11 , 64 ], health data such as heart disease [ 99 ], diabetes mellitus [ 86 , 147 ], COVID-19 [ 41 , 78 ], etc., agriculture and e-commerce data [ 128 , 150 ], and many more in various application domains. In “ Real-world application domains ”, we discuss ten potential real-world application domains of data science and analytics by taking into account data-driven smart computing and decision making, which can help the data scientists and application developers to explore more in various real-world issues.

Overall, the data used in data-driven applications can be any of the types mentioned above, and they can differ from one application to another in the real world. Data science modeling, which is briefly discussed below, can be used to analyze such data in a specific problem domain and derive insights or useful information from the data to build a data-driven model or data product.

Steps of Data Science Modeling

As mentioned earlier in “Background and related work”, data science is typically an umbrella term that encompasses advanced data analytics, data mining, machine and deep learning modeling, and several other related disciplines like statistics, to extract insights or useful knowledge from datasets and transform them into actionable business strategies. In this section, we briefly discuss how data science can play a significant role in the real-world business process. Figure 2 shows an example of data science modeling starting from real-world data to data-driven product and automation. In the following, we briefly discuss each module of the data science process.

- Understanding business problems: This involves getting a clear understanding of the problem that needs to be solved, how it impacts the relevant organization or individuals, the ultimate goals for addressing it, and the relevant project plan. To understand and identify the business problems, data scientists formulate relevant questions while working with the end-users and other stakeholders. For instance, how much/many, which category/group, is the behavior unrealistic/abnormal, which option should be taken, what action, etc. could be relevant questions depending on the nature of the problem. This helps to get a better idea of what the business needs and what should be extracted from the data. Such business knowledge, which can enable organizations to enhance their decision-making process, is known as “Business Intelligence” [ 65 ]. Identifying the relevant data sources that can help to answer the formulated questions, and the kinds of actions that should be taken based on the trends the data shows, is another important task associated with this stage. Once the business problem has been clearly stated, the data scientist can define the analytic approach to solve the problem.

- Understanding data: Data science is largely driven by the availability of data [ 114 ]. Thus a sound understanding of the data is needed to build a data-driven model or system. The reason is that real-world datasets are often noisy, contain missing values, and have inconsistencies or other data issues, all of which need to be handled effectively [ 101 ]. To gain actionable insights, appropriate data of sufficient quality must be sourced and cleansed, which is fundamental to any data science engagement. For this, a data assessment that evaluates what data is available and how it aligns with the business problem could be the first step in data understanding. Several aspects, such as data type/format, whether the quantity of data is sufficient to extract useful knowledge, data relevance, authorized access to data, feature or attribute importance, combining multiple data sources, and important metrics to report the data, need to be taken into account to clearly understand the data for a particular business problem. Overall, the data understanding module involves figuring out what data is needed and the best ways to acquire it.

- Data pre-processing and exploration: Exploratory data analysis is defined in data science as an approach to analyzing datasets to summarize their key characteristics, often with visual methods [ 135 ]. It examines a broad data collection to discover initial trends, attributes, points of interest, etc. in an unstructured manner to construct meaningful summaries of the data. Thus data exploration is typically used to figure out the gist of the data and to develop a first assessment of its quality, quantity, and characteristics. A statistical model may or may not be used, but primarily exploration offers tools for creating hypotheses by visualizing and interpreting the data through graphical representations such as charts, plots, and histograms [ 72 , 91 ]. Before the data is ready for modeling, it is necessary to use data summarization and visualization to audit the quality of the data and provide the information needed to process it. To ensure data quality, data pre-processing, which is typically the process of cleaning and transforming raw data [ 107 ] before processing and analysis, is important. It also involves reformatting information, making data corrections, and merging datasets to enrich the data. Thus, several aspects such as expected data, data cleaning, formatting or transforming data, dealing with missing values, handling data imbalance and bias issues, data distribution, searching for outliers or anomalies and dealing with them, and ensuring data quality could be the key considerations in this step.

- Machine learning modeling and evaluation: Once the data is prepared for building the model, data scientists design a model, algorithm, or set of models to address the business problem. Model building depends on what type of analytics, e.g., predictive analytics, is needed to solve the particular problem, which is discussed briefly in “Advanced analytics methods and smart computing”. To best fit the data according to the type of analytics, different types of data-driven or machine learning models, summarized in our earlier paper Sarker et al. [ 105 ], can be built to achieve the goal. Data scientists typically separate the given dataset into training and test subsets, usually in the ratio of 80:20, or use the popular k-fold data splitting method [ 38 ]. This makes it possible to observe whether the model performs well on data it was not trained on, and thus to maximize the model performance. Various model validation and assessment metrics, such as error rate, accuracy, true positive, false positive, true negative, false negative, precision, recall, f-score, ROC (receiver operating characteristic curve) analysis, and applicability analysis [ 38 , 115 ], are used to measure the model performance, which can guide data scientists in choosing or designing the learning method or model. Besides, machine learning experts or data scientists can take into account several advanced techniques such as feature engineering, feature selection or extraction methods, algorithm tuning, ensemble methods, modifying existing algorithms, or designing new algorithms to improve the ultimate data-driven model and solve a particular business problem through smart decision making.

- Data product and automation: A data product is typically the output of any data science activity [ 17 ]. A data product, in general terms, is a data deliverable, or a data-enabled or data-guided output, which can be a discovery, prediction, service, suggestion, insight into decision-making, thought, model, paradigm, tool, application, or system that processes data and generates results. Businesses can use the results of such data analysis to obtain useful information, such as churn prediction (churn is a measure of how many customers stop using a product) and customer segmentation, and use these results to make smarter business decisions and to automate. Thus, to make better decisions in various business problems, various machine learning pipelines and data products can be developed. To highlight this, we summarize several potential real-world data science application areas in “Real-world application domains”, where various data products can play a significant role in making the relevant business processes smart and automated.
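The pre-processing and evaluation steps above can be sketched in a few lines of standard-library Python. This is a minimal illustration under stated assumptions, not the procedure of any particular toolkit: the helper names (`impute_mean`, `train_test_split`, `k_fold_indices`, `binary_metrics`) and the toy labels are hypothetical, and mean imputation is only one of many ways to handle missing values.

```python
import random
from statistics import mean


def impute_mean(values):
    """Replace missing entries (None) with the mean of the observed entries."""
    observed = [v for v in values if v is not None]
    fill = mean(observed)
    return [fill if v is None else v for v in values]


def train_test_split(rows, test_ratio=0.2, seed=0):
    """Shuffle a copy of the rows and hold out `test_ratio` of them for testing."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_ratio)
    return shuffled[n_test:], shuffled[:n_test]


def k_fold_indices(n, k):
    """Yield (train_idx, test_idx) pairs so that every index is tested exactly once."""
    folds = [list(range(i, n, k)) for i in range(k)]
    for i, test_idx in enumerate(folds):
        train_idx = [j for f in folds[:i] + folds[i + 1:] for j in f]
        yield train_idx, test_idx


def binary_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall, and F-score from the confusion-matrix counts."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    tn = len(pairs) - tp - fp - fn
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f_score = (2 * precision * recall / (precision + recall)
               if precision + recall else 0.0)
    return {"accuracy": (tp + tn) / len(pairs), "precision": precision,
            "recall": recall, "f_score": f_score}


cleaned = impute_mean([1.0, None, 3.0])            # the gap is filled with 2.0
train, test = train_test_split(range(10))          # 8 training rows, 2 test rows
folds = list(k_fold_indices(10, k=5))              # 5 folds, 2 test indices each
scores = binary_metrics([1, 1, 1, 0, 0, 0, 1, 0],
                        [1, 1, 0, 0, 0, 1, 1, 0])  # 3 TP, 1 FP, 1 FN, 3 TN
```

With three true positives, one false positive, one false negative, and three true negatives, accuracy, precision, recall, and F-score all come out to 0.75 on this toy example.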

Overall, we can conclude that data science modeling can be used to help drive changes and improvements in business practices. The most important part of the data science process is having a deep understanding of the business problem to be solved. Without that, it would be much harder to gather the right data and extract the most useful information from it for making decisions to solve the problem. In terms of role, “Data Scientists” typically interpret and manage data to uncover the answers to major questions that help organizations make objective decisions and solve complex problems. In summary, a data scientist proactively gathers and analyzes information from multiple sources to better understand how the business performs, and designs machine learning or data-driven tools, methods, or algorithms focused on advanced analytics, which can make today’s computing processes smarter and more intelligent, as discussed briefly in the following section.

Figure 2: An example of data science modeling from real-world data to data-driven system and decision making

Advanced Analytics Methods and Smart Computing

As mentioned earlier in “Background and related work”, basic analytics provides a summary of data, whereas advanced analytics takes a step forward in offering a deeper understanding of data and helps in granular data analysis. For instance, the predictive capabilities of advanced analytics can be used to forecast trends, events, and behaviors. Thus, “advanced analytics” can be defined as the autonomous or semi-autonomous analysis of data or content using advanced techniques and methods to discover deeper insights, make predictions, or produce recommendations, where machine learning-based analytical modeling is considered the key technology in the area. In the following section, we first summarize the types of analytics and outcomes that are needed to solve the associated business problems, and then we briefly discuss machine learning-based analytical modeling.

Types of Analytics and Outcome

In the real-world business process, several key questions such as “What happened?”, “Why did it happen?”, “What will happen in the future?”, “What action should be taken?” are common and important. Based on these questions, in this paper, we categorize and highlight the analytics into four types such as descriptive, diagnostic, predictive, and prescriptive, which are discussed below.

- Descriptive analytics: It is the interpretation of historical data to better understand the changes that have occurred in a business. Thus descriptive analytics answers the question, “what happened in the past?” by summarizing past data such as statistics on sales and operations or marketing strategies, use of social media, and engagement with Twitter, LinkedIn, or Facebook. For instance, the trends, patterns, and anomalies that descriptive analytics surfaces in customers’ historical shopping data can then inform an estimate of the probability of a customer purchasing a product. Thus, descriptive analytics can play a significant role in providing an accurate picture of what has occurred in a business and how it relates to previous periods, utilizing a broad range of relevant business data. As a result, managers and decision-makers can pinpoint areas of strength and weakness in their business, and eventually adopt more effective management strategies and business decisions.

- Diagnostic analytics: It is a form of advanced analytics that examines data or content to answer the question, “why did it happen?” The goal of diagnostic analytics is to help to find the root cause of the problem. For example, the human resource management department of a business organization may use these diagnostic analytics to find the best applicant for a position, select them, and compare them to other similar positions to see how well they perform. In a healthcare example, it might help to figure out whether the patients’ symptoms such as high fever, dry cough, headache, fatigue, etc. are all caused by the same infectious agent. Overall, diagnostic analytics enables one to extract value from the data by posing the right questions and conducting in-depth investigations into the answers. It is characterized by techniques such as drill-down, data discovery, data mining, and correlations.

- Predictive analytics: Predictive analytics is an important analytical technique used by many organizations for various purposes such as to assess business risks, anticipate potential market patterns, and decide when maintenance is needed, to enhance their business. It is a form of advanced analytics that examines data or content to answer the question, “what will happen in the future?” Thus, the primary goal of predictive analytics is to identify and typically answer this question with a high degree of probability. Data scientists can use historical data as a source to extract insights for building predictive models using various regression analyses and machine learning techniques, which can be used in various application domains for a better outcome. Companies, for example, can use predictive analytics to minimize costs by better anticipating future demand and changing output and inventory, banks and other financial institutions to reduce fraud and risks by predicting suspicious activity, medical specialists to make effective decisions through predicting patients who are at risk of diseases, retailers to increase sales and customer satisfaction through understanding and predicting customer preferences, manufacturers to optimize production capacity through predicting maintenance requirements, and many more. Thus predictive analytics can be considered as the core analytical method within the area of data science.

- Prescriptive analytics: Prescriptive analytics focuses on recommending the best way forward with actionable information to maximize overall returns and profitability, and typically answers the question, “what action should be taken?” In business analytics, prescriptive analytics is considered the final step. For its models, prescriptive analytics collects data from several descriptive and predictive sources and applies it to the decision-making process. Thus, we can say that it is related to both descriptive analytics and predictive analytics, but it emphasizes actionable insights instead of data monitoring. In other words, it can be considered the opposite of descriptive analytics, which examines decisions and outcomes after the fact. By integrating big data, machine learning, and business rules, prescriptive analytics helps organizations make more informed decisions that lead to better business outcomes.
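The four questions above can be illustrated on a single toy series. The sketch below is only an illustration under loud assumptions: the monthly `ad_spend` and `sales` figures are invented, profit is simplified to sales minus spend, and `STOCK_LIMIT` is a hypothetical business constraint. Note also that a correlation is a first diagnostic hint, not proof of a root cause.

```python
from math import sqrt
from statistics import mean

# Hypothetical monthly figures, invented for illustration only.
ad_spend = [10, 12, 14, 16, 18, 20]
sales = [25, 29, 33, 37, 41, 45]

x_bar, y_bar = mean(ad_spend), mean(sales)
sxx = sum((x - x_bar) ** 2 for x in ad_spend)
syy = sum((y - y_bar) ** 2 for y in sales)
sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(ad_spend, sales))

# Descriptive: what happened? Summarize the history.
average_sales = y_bar

# Diagnostic: why did it happen? Check how strongly sales move with ad spend
# (Pearson correlation; a hint about the cause, not a proof).
correlation = sxy / sqrt(sxx * syy)

# Predictive: what will happen? Fit a least-squares line and extrapolate.
slope = sxy / sxx
intercept = y_bar - slope * x_bar


def forecast(spend):
    """Predicted sales for a given ad spend, from the fitted line."""
    return intercept + slope * spend


# Prescriptive: what action should be taken? Choose the candidate budget with
# the best predicted profit, given an assumed limit on sellable stock.
STOCK_LIMIT = 44


def predicted_profit(spend):
    """Simplified profit: capped predicted sales minus the ad spend."""
    return min(forecast(spend), STOCK_LIMIT) - spend


best_budget = max([18, 20, 22], key=predicted_profit)
```

Here the fitted line is sales = 5 + 2 × spend, so the largest budget would win without the stock limit; with the limit, the middle budget of 20 gives the highest predicted profit.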

In summary, to clarify what happened and why it happened, both descriptive analytics and diagnostic analytics look at the past. Historical data is used by predictive analytics and prescriptive analytics to forecast what will happen in the future and what steps should be taken to impact those effects. In Table 1, we have summarized these analytics methods with examples. Forward-thinking organizations in the real world can jointly use these analytical methods to make smart decisions that help drive changes in business processes and improvements. In the following, we discuss how machine learning techniques can play a big role in these analytical methods through their learning capabilities from the data.

Table 1: Various types of analytical methods with examples

Machine Learning Based Analytical Modeling

In this section, we briefly discuss various advanced analytics methods based on machine learning modeling, which can make the computing process smart through intelligent decision-making in a business process. Figure 3 shows a general structure of a machine learning-based predictive model considering both the training and testing phase. In the following, we discuss a wide range of methods such as regression and classification analysis, association rule analysis, time-series analysis, behavioral analysis, log analysis, and so on within the scope of our study.

Figure 3: A general structure of a machine learning based predictive model considering both the training and testing phase

Regression Analysis

In data science, regression is one of the most common statistical approaches used for predictive modeling and data mining tasks [ 38 ]. Regression analysis is a form of supervised machine learning that examines the relationship between a dependent variable (target) and independent variables (predictors) to predict continuous-valued output [ 105 , 117 ]. Eqs. 1, 2, and 3 [ 85 , 105 ] represent the simple, multiple or multivariate, and polynomial regressions respectively, where x represents the independent variable and y is the predicted/target output mentioned above:
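In their standard textbook form, the three models can be written as follows. The symbols a (intercept), b and b_i (regression coefficients), and e (error term) are notational assumptions made here; x and y are as defined above.

$$
\begin{aligned}
y &= a + b\,x + e && (1)\\
y &= a + b_1 x_1 + b_2 x_2 + \cdots + b_n x_n + e && (2)\\
y &= a + b_1 x + b_2 x^2 + \cdots + b_n x^n + e && (3)
\end{aligned}
$$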

Regression analysis is typically conducted for one of two purposes: to predict the value of the dependent variable for individuals for whom some knowledge relating to the explanatory variables is available, or to estimate the effect of some explanatory variable on the dependent variable, i.e., finding the relationship of causal influence between the variables. Linear regression cannot fit non-linear data well and may cause an underfitting problem. In that case, polynomial regression performs better, although it increases the model complexity. Regularization techniques such as Ridge, Lasso, and Elastic-Net [ 85 , 105 ] can be used to optimize the linear regression model. Besides, support vector regression, decision tree regression, and random forest regression techniques [ 85 , 105 ] can be used for building effective regression models depending on the problem type, e.g., non-linear tasks. Financial forecasting or prediction, cost estimation, trend analysis, marketing, time-series estimation, and drug response modeling are some examples where regression models can be used to solve real-world problems in the domain of data science and analytics.
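As a minimal sketch of these points, the following standard-library Python fits a simple linear regression in closed form, shows how a ridge (L2) penalty shrinks the slope, and shows a straight line underfitting quadratic data. The function names (`fit_line`, `mse`), the penalty value, and the toy data are assumptions for illustration; the ridge variant here penalizes only the slope, not the intercept.

```python
from statistics import mean


def fit_line(xs, ys, ridge=0.0):
    """Least-squares fit of y = a + b*x; ridge > 0 adds an L2 penalty on b only."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    b = sxy / (sxx + ridge)
    a = y_bar - b * x_bar
    return a, b


def mse(xs, ys, a, b):
    """Mean squared error of the line y = a + b*x on the given points."""
    return mean((y - (a + b * x)) ** 2 for x, y in zip(xs, ys))


# Linear data generated as y = 1 + 2x: ordinary least squares recovers it.
xs, ys = [1, 2, 3, 4, 5], [3, 5, 7, 9, 11]
a_ols, b_ols = fit_line(xs, ys)

# The same data with a ridge penalty: the slope is shrunk towards zero.
a_ridge, b_ridge = fit_line(xs, ys, ridge=10.0)

# Quadratic data (y = x^2): the best straight line is flat and underfits.
qx, qy = [-2, -1, 0, 1, 2], [4, 1, 0, 1, 4]
a_q, b_q = fit_line(qx, qy)
underfit_error = mse(qx, qy, a_q, b_q)
```

On the quadratic data the fitted slope is zero, so the line predicts the mean everywhere and leaves a large error; adding an x² term, as in polynomial regression, would remove it at the cost of a more complex model.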

Classification Analysis

Classification is one of the most widely used and best-known data science processes. It is a form of supervised machine learning that refers to a predictive modeling problem in which a class label is predicted for a given example [ 38 ]. Spam identification, with classes such as ‘spam’ and ‘not spam’ in email service providers, is an example of a classification problem. There are several forms of classification analysis: binary classification, which refers to the prediction of one of two classes; multi-class classification, which involves the prediction of one of more than two classes; and multi-label classification, a generalization of multi-class classification in which each example may be assigned more than one class label at the same time [ 105 ].

Several popular classification techniques, such as k-nearest neighbors [ 5 ], support vector machines [ 55 ], naive Bayes [ 49 ], adaptive boosting [ 32 ], extreme gradient boosting [ 85 ], logistic regression [ 66 ], decision trees ID3 [ 92 ] and C4.5 [ 93 ], and random forests [ 13 ], exist to solve classification problems. Tree-based classification techniques, e.g., a random forest that considers multiple decision trees, perform better than others on real-world problems in many cases because of their capability to produce logic rules [ 103 , 115 ]. Figure 4 shows an example of a random forest structure considering multiple decision trees. In addition, BehavDT, recently proposed by Sarker et al. [ 109 ], and IntrudTree [ 106 ] can be used for building effective classification or prediction models in the relevant tasks within the domain of data science and analytics.

Figure 4: An example of a random forest structure considering multiple decision trees
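As a small illustration of binary classification, the sketch below implements k-nearest neighbors, the first technique listed above, with a plain majority vote. It is a minimal standard-library sketch, not a reference implementation: the function name `knn_predict`, the two features (number of links and number of exclamation marks in an email), and the training points are all hypothetical.

```python
from collections import Counter
from math import dist


def knn_predict(train, query, k=3):
    """Label `query` by majority vote among its k nearest training points.

    `train` is a list of (features, label) pairs; distance is Euclidean.
    """
    neighbours = sorted(train, key=lambda item: dist(item[0], query))[:k]
    votes = Counter(label for _, label in neighbours)
    return votes.most_common(1)[0][0]


# Hypothetical features per email: (number of links, number of exclamation marks).
train = [
    ((0, 0), "not spam"), ((1, 0), "not spam"), ((0, 1), "not spam"),
    ((5, 4), "spam"), ((6, 5), "spam"), ((4, 6), "spam"),
]

label_a = knn_predict(train, (5, 5))  # lies among the spam examples
label_b = knn_predict(train, (1, 1))  # lies among the non-spam examples
```

Using an odd k avoids tied votes in the binary case; in practice k is usually chosen by validation on held-out data.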

Cluster Analysis

Clustering is a form of unsupervised machine learning and is well-known in many data science application areas for statistical data analysis [ 38 ]. Usually, clustering techniques search for structure inside a dataset and, when no classification has been identified in advance, group the cases into homogeneous groups. This means that data points within a cluster are similar to each other and different from data points in other clusters. Overall, the purpose of cluster analysis is to sort various data points into groups (or clusters) that are homogeneous internally and heterogeneous externally [ 105 ]. Clustering is often used to gain insight into how data is distributed in a given dataset or as a preprocessing phase for other algorithms. Data clustering, for example, assists retail businesses with analyzing customer shopping behavior, sales campaigns, and consumer retention, and it is also used for anomaly detection.

Many clustering algorithms with the ability to group data have been proposed in the machine learning and data science literature [ 98 , 138 , 141 ]. In our earlier paper Sarker et al. [ 105 ], we summarized these from several perspectives, such as partitioning methods, density-based methods, hierarchical-based methods, and model-based methods. In the literature, the popular K-means [ 75 ], K-Medoids [ 84 ], CLARA [ 54 ], etc. are known as partitioning methods; DBSCAN [ 30 ], OPTICS [ 8 ], etc. are known as density-based methods; and single linkage [ 122 ], complete linkage [ 123 ], etc. are known as hierarchical methods. In addition, grid-based clustering methods such as STING [ 134 ] and CLIQUE [ 2 ]; model-based clustering such as neural network learning [ 141 ], GMM [ 94 ], and SOM [ 18 , 104 ]; and constraint-based methods such as COP K-means [ 131 ] and CMWK-Means [ 25 ] are used in the area. Recently, Sarker et al. [ 111 ] proposed a hierarchical clustering method, BOTS, based on a bottom-up agglomerative technique for capturing users’ similar behavioral characteristics over time. The key benefit of agglomerative hierarchical clustering is that the tree-structured hierarchy it creates is more informative than an unstructured set of flat clusters, which can assist in better decision-making in relevant application areas in data science.
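To illustrate a partitioning method, the following is a minimal standard-library sketch of the K-means idea (alternating assignment and centroid-update steps). It assumes caller-supplied starting centroids so that the result is deterministic; the function name `k_means`, the toy points, and the starting centroids are hypothetical, and practical implementations add smarter initialization and convergence checks.

```python
from math import dist


def k_means(points, centroids, iterations=10):
    """Alternate assignment and update steps from the given starting centroids."""
    clusters = []
    for _ in range(iterations):
        # Assignment step: attach every point to its nearest centroid.
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)), key=lambda i: dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster
        # (an empty cluster keeps its previous centroid).
        new_centroids = [
            tuple(sum(coord) / len(cluster) for coord in zip(*cluster)) if cluster else old
            for cluster, old in zip(clusters, centroids)
        ]
        if new_centroids == centroids:
            break  # assignments can no longer change
        centroids = new_centroids
    return centroids, clusters


# Two visibly separated groups of 2-D points, invented for illustration.
points = [(1, 1), (1, 2), (2, 1), (8, 8), (8, 9), (9, 8)]
centroids, clusters = k_means(points, centroids=[(0, 0), (10, 10)])
```

On this toy input the algorithm settles after the second pass, with one centroid near (1.33, 1.33) and the other near (8.33, 8.33). Unlike the hierarchical methods discussed above, the result is a flat partition with no tree structure.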

Association Rule Analysis

Association rule learning is a rule-based, unsupervised machine learning method that is typically used to establish relationships among variables. It is a descriptive technique often used to analyze large datasets to discover interesting relationships or patterns. The main strength of association learning is its comprehensiveness, as it produces all associations that satisfy user-specified constraints, including minimum support and confidence values [ 138 ].
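The support and confidence constraints can be computed directly from transaction data. The following sketch uses the standard definitions: the support of an itemset is the fraction of transactions containing it, and the confidence of a rule A -> B is support(A and B) divided by support(A). The basket contents and function names are made up for illustration.

```python
def support(transactions, itemset):
    """Fraction of transactions that contain every item in `itemset`."""
    itemset = set(itemset)
    return sum(itemset <= t for t in transactions) / len(transactions)


def confidence(transactions, antecedent, consequent):
    """Estimated P(consequent | antecedent) for the rule antecedent -> consequent."""
    both = set(antecedent) | set(consequent)
    return support(transactions, both) / support(transactions, antecedent)


# Hypothetical market baskets.
baskets = [
    {"bread", "milk"},
    {"bread", "butter"},
    {"bread", "milk", "butter"},
    {"milk"},
]

print(support(baskets, {"bread", "milk"}))       # share of baskets with both items
print(confidence(baskets, {"bread"}, {"milk"}))  # how often milk appears given bread
```

A rule is reported only if both values reach the user-specified minimum thresholds.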

Association rules allow a data scientist to identify trends, associations, and co-occurrences between data sets inside large data collections. In a supermarket, for example, associations reveal the buying behavior of consumers for different items, which helps to adjust the marketing and sales plan. In healthcare, physicians may use association rules to better diagnose patients. Doctors can assess the conditional likelihood of a given illness by comparing symptom associations in the data from previous cases using association rules and machine learning-based data analysis. Similarly, association rules are useful for consumer behavior analysis and prediction, customer market analysis, bioinformatics, weblog mining, recommendation systems, etc.

Several types of association rules have been proposed in the area, such as frequent pattern-based [ 4 , 47 , 73 ], logic-based [ 31 ], tree-based [ 39 ], fuzzy rules [ 126 ], belief rules [ 148 ], etc. Rule learning techniques such as AIS [ 3 ], Apriori [ 4 ], Apriori-TID and Apriori-Hybrid [ 4 ], FP-Tree [ 39 ], Eclat [ 144 ], and RARM [ 24 ] exist to solve the relevant business problems. Among these techniques, Apriori [ 4 ] is the most commonly used algorithm for discovering association rules from a given dataset [ 145 ]. The recent association rule learning technique ABC-RuleMiner, proposed in our earlier paper by Sarker et al. [ 113 ], could give significant results in terms of generating non-redundant rules that can be used for smart decision-making according to human preferences within the area of data science applications.
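The level-wise search behind Apriori can be sketched in a few lines: frequent itemsets of size k are built only from frequent itemsets of size k-1, because any superset of an infrequent itemset must also be infrequent. The code below is a simplified illustration under that principle, not the optimized algorithm of [ 4 ]; the function name and the basket data are hypothetical.

```python
from itertools import combinations


def apriori(transactions, min_support):
    """Return {itemset: support} for all itemsets whose support >= min_support."""
    n = len(transactions)

    def sup(items):
        return sum(items <= t for t in transactions) / n

    # Level 1: frequent single items.
    singles = {frozenset([i]) for t in transactions for i in t}
    current = {c: sup(c) for c in singles}
    current = {c: s for c, s in current.items() if s >= min_support}
    frequent = dict(current)

    k = 2
    while current:
        # Join step: combine frequent (k-1)-itemsets into candidate k-itemsets.
        candidates = {a | b for a in current for b in current if len(a | b) == k}
        # Prune step: every (k-1)-subset of a candidate must itself be frequent.
        candidates = {
            c for c in candidates
            if all(frozenset(s) in current for s in combinations(c, k - 1))
        }
        current = {c: sup(c) for c in candidates}
        current = {c: s for c, s in current.items() if s >= min_support}
        frequent.update(current)
        k += 1
    return frequent


# Hypothetical market baskets.
baskets = [
    {"bread", "milk"},
    {"bread", "butter"},
    {"bread", "milk", "butter"},
    {"milk", "butter"},
    {"bread", "milk"},
]
frequent = apriori(baskets, min_support=0.5)
for itemset, s in sorted(frequent.items(), key=lambda kv: (len(kv[0]), sorted(kv[0]))):
    print(sorted(itemset), s)
```

Rules are then generated from each frequent itemset and filtered by minimum confidence.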

Time-Series Analysis and Forecasting

A time series is typically a series of data points indexed in time order, in particular by date or timestamp [ 111 ]. Depending on the sampling frequency, a time series can be of different types, such as annual (e.g., annual budget), quarterly (e.g., expenditure), monthly (e.g., air traffic), weekly (e.g., sales quantity), daily (e.g., weather), hourly (e.g., stock price), minute-wise (e.g., inbound calls in a call center), and even second-wise (e.g., web traffic), and so on in relevant domains.

A mathematical method for dealing with such time-series data, or the procedure of fitting a time series to a proper model, is termed time-series analysis. Many different time-series forecasting algorithms and analysis methods can be applied to extract the relevant information. For instance, to forecast future patterns, the autoregressive (AR) model [ 130 ] learns the behavioral trends or patterns of past data. Moving average (MA) [ 40 ] is another simple and common form of smoothing used in time-series analysis and forecasting that uses past forecast errors in a regression-like model to produce an averaged trend across the data. The autoregressive moving average (ARMA) model [ 12 , 120 ] combines these two approaches, where the autoregressive part extracts the momentum and pattern of the trend and the moving average part captures the noise effects. The most popular and frequently used time-series model is the autoregressive integrated moving average (ARIMA) model [ 12 , 120 ]. The ARIMA model, a generalization of the ARMA model, is more flexible than other statistical models such as exponential smoothing or simple linear regression. In terms of data, the ARMA model can only be used for stationary time-series data, while the ARIMA model covers the non-stationary case as well. Similarly, the seasonal autoregressive integrated moving average (SARIMA), autoregressive fractionally integrated moving average (ARFIMA), and autoregressive moving average with exogenous inputs (ARMAX) models are also used for time-series modeling [ 120 ].
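The roles of the "integrated" and "autoregressive" parts can be illustrated with a deliberately small sketch: the series is differenced once to remove the trend, an AR(1) coefficient is estimated on the differences by least squares, and the one-step forecast is obtained by adding the predicted difference back to the last observation. This roughly corresponds to an ARIMA(1,1,0) model without an intercept or moving average term; the function names and the sample series are assumptions for illustration, and a statistical library should be preferred in practice.

```python
def difference(series):
    """First-order differencing: the 'I' (integrated) step that removes a trend."""
    return [b - a for a, b in zip(series, series[1:])]


def fit_ar1(series):
    """Least-squares estimate of phi in x_t = phi * x_(t-1) + e_t (no intercept)."""
    num = sum(prev * cur for prev, cur in zip(series, series[1:]))
    den = sum(prev * prev for prev in series[:-1])
    return num / den


def forecast_next(series):
    """One-step forecast: last value plus the AR(1)-predicted next difference."""
    diffs = difference(series)
    phi = fit_ar1(diffs)
    return series[-1] + phi * diffs[-1]


# Hypothetical series whose increments shrink by half at each step.
series = [10.0, 12.0, 13.0, 13.5, 13.75]
print(fit_ar1(difference(series)))
print(forecast_next(series))
```

Because the raw series trends upward, fitting the AR coefficient on the differences rather than on the raw values reflects the distinction drawn above between ARMA (stationary data) and ARIMA (non-stationary data).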

In addition to the stochastic methods for time-series modeling and forecasting, machine learning and deep learning-based approaches can be used for effective time-series analysis and forecasting. For instance, in our earlier paper, Sarker et al. [ 111 ] present a bottom-up clustering-based time-series analysis to capture the mobile usage behavioral patterns of the users. Figure 5 shows an example of producing aggregate time segments Seg_i from initial time slices TS_i based on similar behavioral characteristics that are used in our bottom-up clustering approach, where D represents the dominant behavior BH_i of the users, mentioned above [ 111 ]. The authors in [ 118 ] used a long short-term memory (LSTM) model, a kind of recurrent neural network (RNN) deep learning model, for time-series forecasting that outperforms traditional approaches such as the ARIMA model. Time-series analysis is commonly used these days in various fields such as finance, manufacturing, business, social media, event data (e.g., clickstreams and system events), IoT and smartphone data, and generally in any applied science and engineering domain that involves temporal measurements. Thus, it covers a wide range of application areas in data science.

Fig. 5 An example of producing aggregate time segments from initial time slices based on similar behavioral characteristics
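The idea of aggregating initial time slices into larger segments can be sketched as follows. This is a strongly simplified, single-pass stand-in and not the bottom-up clustering method of [ 111 ]: it assumes that each slice is a hypothetical `(start_hour, end_hour, dominant_behavior)` tuple and that two adjacent slices are "similar" only when their dominant behavior is identical.

```python
def merge_time_slices(slices):
    """Merge adjacent (start, end, behavior) slices that share the same behavior."""
    segments = []
    for start, end, behavior in slices:
        last = segments[-1] if segments else None
        # Merge only when the slice is contiguous with the previous segment
        # and carries the same dominant behavior.
        if last and last[2] == behavior and last[1] == start:
            segments[-1] = (last[0], end, behavior)
        else:
            segments.append((start, end, behavior))
    return segments


# Hypothetical hourly slices with a dominant phone-call behavior each.
slices = [
    (9, 10, "silent"),
    (10, 11, "silent"),
    (11, 12, "ring"),
    (12, 13, "ring"),
    (13, 14, "silent"),
]
segments = merge_time_slices(slices)
print(segments)
```

A fuller treatment would use a graded similarity measure between slices and merge iteratively rather than in one pass.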

Opinion Mining and Sentiment Analysis

Sentiment analysis or opinion mining is the computational study of the opinions, thoughts, emotions, assessments, and attitudes of people towards entities such as products, services, organizations, individuals, issues, events, topics, and their attributes [ 71 ]. There are three kinds of sentiments: positive, negative, and neutral, along with more extreme feelings such as angry, happy and sad, or interested or not interested, etc. More refined sentiments to evaluate the feelings of individuals in various situations can also be found according to the problem domain.

Although the task of opinion mining and sentiment analysis is very challenging from a technical point of view, it is very useful in real-world practice. For instance, a business typically wants to obtain opinions from the public or its customers about its products and services in order to refine its business policy and make better business decisions. It can thus benefit a business to understand public opinion of its brand, product, or service. Besides, potential customers want to know what existing consumers think after they have used a service or purchased a product. Document level, sentence level, aspect level, and concept level are the possible levels of opinion mining in the area [ 45 ].

Several popular techniques are used in sentiment analysis-related tasks, including lexicon-based methods (dictionary-based and corpus-based), machine learning (supervised and unsupervised), deep learning, and hybrid methods [ 70 ]. To systematically define, extract, measure, and analyze affective states and subjective knowledge, sentiment analysis incorporates the use of statistics, natural language processing (NLP), machine learning, and deep learning methods. Sentiment analysis is widely applied to sources such as reviews and survey data, web and social media, and healthcare content, with uses ranging from marketing and customer support to clinical practice. Thus, sentiment analysis has a big influence in many data science applications where public sentiment is involved in various real-world issues.
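A dictionary-based (lexicon) approach can be sketched in a few lines: each word carries a polarity score, the scores are summed over the text, and the sign of the total gives a positive, negative, or neutral label. The tiny lexicon, the negation list, and the one-word negation rule below are illustrative assumptions, not a published resource.

```python
import re

# Hypothetical toy lexicon: word -> polarity score.
LEXICON = {"good": 1, "great": 2, "love": 2, "bad": -1, "terrible": -2, "hate": -2}
# Assumption: a negation word flips the polarity of the word that immediately follows it.
NEGATIONS = {"not", "never", "no"}


def sentiment(text):
    """Classify text as 'positive', 'negative', or 'neutral' by summed word polarity."""
    tokens = re.findall(r"[a-z']+", text.lower())
    score = 0
    for i, token in enumerate(tokens):
        value = LEXICON.get(token, 0)
        if i > 0 and tokens[i - 1] in NEGATIONS:
            value = -value
        score += value
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(sentiment("The battery is great"))
print(sentiment("I do not love the screen"))
print(sentiment("It arrived on Tuesday"))
```

Machine learning and deep learning methods replace the hand-built lexicon with parameters learned from labeled or unlabeled text.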

Behavioral Data and Cohort Analysis

Behavioral analytics is a recent trend that typically reveals new insights into e-commerce sites, online gaming, mobile and smartphone applications, IoT user behavior, and many more [ 112 ]. Behavioral analysis aims to understand how and why consumers or users behave as they do, allowing accurate predictions of how they are likely to behave in the future. For instance, it allows advertisers to make the best offers to the right client segments at the right time. Behavioral analytics uses the large quantities of raw user event information gathered during sessions in which people use apps, games, or websites, including traffic data such as navigation paths, clicks, social media interactions, purchase decisions, and marketing responsiveness. In our earlier papers, Sarker et al. [ 101 , 111 , 113 ], we have discussed how to extract users' phone usage behavioral patterns from real-life phone log data for various purposes.

In real-world scenarios, behavioral analytics is often used in e-commerce, social media, call centers, billing systems, IoT systems, political campaigns, and other applications to find opportunities for optimization to achieve particular outcomes. Cohort analysis is a branch of behavioral analytics that involves studying groups of people over time to see how their behavior changes. For instance, it takes data from a given data set (e.g., an e-commerce website, web application, or online game) and separates it into related groups for analysis. Various machine learning techniques such as behavioral data clustering [ 111 ], behavioral decision tree classification [ 109 ], behavioral association rules [ 113 ], etc. can be used in the area according to the goal. Besides, the concept of RecencyMiner, proposed in our earlier paper Sarker et al. [ 108 ], takes recent behavioral patterns into account and could be effective when analyzing behavioral data, since such data may not be static in the real world and can change over time.
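A common form of cohort analysis groups users by the period in which they were first seen and then tracks what share of each group is still active in later periods. The sketch below assumes a hypothetical event log of `(user_id, month_index)` pairs; the function name and the data are illustrative.

```python
from collections import defaultdict


def cohort_retention(events):
    """events: iterable of (user_id, month). Returns {cohort: {offset: share_active}}."""
    events = list(events)

    # A user's cohort is the first month in which the user appears.
    first_seen = {}
    for user, month in events:
        first_seen[user] = min(month, first_seen.get(user, month))

    # Collect the users of each cohort who are active at each offset from the cohort month.
    active = defaultdict(set)
    for user, month in events:
        cohort = first_seen[user]
        active[(cohort, month - cohort)].add(user)

    sizes = defaultdict(int)
    for cohort in first_seen.values():
        sizes[cohort] += 1

    table = defaultdict(dict)
    for (cohort, offset), users in sorted(active.items()):
        table[cohort][offset] = len(users) / sizes[cohort]
    return dict(table)


# Hypothetical activity log.
events = [("a", 0), ("b", 0), ("a", 1), ("c", 1), ("d", 1), ("c", 2), ("a", 2), ("b", 2)]
retention = cohort_retention(events)
for cohort, row in retention.items():
    print("cohort", cohort, row)
```

Reading each row from left to right shows how the behavior of one group changes over time, which is the comparison cohort analysis is designed to support.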

Anomaly Detection or Outlier Analysis

Anomaly detection, also known as outlier analysis, is a data mining step that detects data points, events, and/or findings that deviate from the regularities or normal behavior of a dataset. Anomalies are usually referred to as outliers, abnormalities, novelties, noise, inconsistencies, irregularities, and exceptions [ 63 , 114 ]. By analyzing data patterns, anomaly detection techniques may flag new situations or cases as deviant based on historical data. For instance, identifying fraud or irregular transactions in finance is an example of anomaly detection.

It is often used in preprocessing tasks to remove anomalies or inconsistencies from real-world data collected from various data sources, including user logs, devices, networks, and servers. For anomaly detection, several machine learning techniques can be used, such as k-nearest neighbors, isolation forests, cluster analysis, etc. [ 105 ]. Excluding anomalous data from the dataset can also result in a statistically significant improvement in accuracy during supervised learning [ 101 ]. However, extracting appropriate features, identifying normal behaviors, managing imbalanced data distributions, addressing variations in abnormal behavior or irregularities, the sparse occurrence of abnormal events, environmental variations, etc. could be challenging in the process of anomaly detection. Anomaly detection is applicable in a variety of domains such as cybersecurity analytics, intrusion detection, fraud detection, fault detection, health analytics, identifying irregularities, detecting ecosystem disturbances, and many more. Anomaly detection can thus be considered a significant task for building effective systems with higher accuracy within the area of data science.
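A distance-based variant of the k-nearest neighbors idea can be sketched as follows: each value is scored by its mean distance to its k nearest neighbors, so isolated points receive high scores. The function name, the choice of k, the transaction amounts, and the decision to simply report the highest-scoring point are assumptions for illustration; a real system would need a calibrated threshold.

```python
def knn_outlier_scores(values, k=2):
    """Score each 1-D value by its mean distance to its k nearest neighbours."""
    scores = []
    for i, v in enumerate(values):
        # Distances to every other point, smallest first.
        dists = sorted(abs(v - w) for j, w in enumerate(values) if j != i)
        scores.append(sum(dists[:k]) / k)
    return scores


# Hypothetical transaction amounts with one irregular value.
amounts = [20, 22, 21, 19, 23, 250]
scores = knn_outlier_scores(amounts)
outlier = amounts[scores.index(max(scores))]
print(scores)
print("most anomalous amount:", outlier)
```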

Factor Analysis

Factor analysis is a collection of techniques for describing the relationships or correlations between variables in terms of more fundamental entities known as factors [ 23 ]. It is usually used to organize variables into a small number of clusters based on their common variance, using mathematical or statistical procedures. The goals of factor analysis are to determine the number of fundamental influences underlying a set of variables, calculate the degree to which each variable is associated with the factors, and learn more about the nature of the factors by examining which factors contribute to which variables. The broad purpose of factor analysis is to summarize data so that relationships and patterns can be easily interpreted and understood [ 143 ].
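The degree to which each variable is associated with a factor is usually expressed as a loading. The sketch below extracts a single factor using the principal component method, one of several extraction approaches: it builds the correlation matrix, finds its dominant eigenvector by power iteration, and scales it by the square root of the eigenvalue. The function names and the three toy variables are assumptions; rotation, multiple factors, and uniqueness estimates are deliberately left out.

```python
import math


def correlation_matrix(columns):
    """Pearson correlation matrix for a list of equal-length numeric columns."""
    n = len(columns[0])
    means = [sum(c) / n for c in columns]
    centered = [[x - m for x in c] for c, m in zip(columns, means)]
    norms = [math.sqrt(sum(x * x for x in c)) for c in centered]
    return [
        [sum(a * b for a, b in zip(ci, cj)) / (ni * nj) for cj, nj in zip(centered, norms)]
        for ci, ni in zip(centered, norms)
    ]


def first_factor_loadings(corr, iterations=200):
    """Loadings on the dominant factor: sqrt(eigenvalue) * eigenvector."""
    p = len(corr)
    vec = [1.0 / math.sqrt(p)] * p
    eigenvalue = 0.0
    for _ in range(iterations):
        # Power iteration: repeatedly multiply by the matrix and renormalize.
        prod = [sum(r * v for r, v in zip(row, vec)) for row in corr]
        eigenvalue = math.sqrt(sum(x * x for x in prod))
        vec = [x / eigenvalue for x in prod]
    return [math.sqrt(eigenvalue) * v for v in vec]


# Hypothetical scores: the first two variables move together, the third is unrelated.
x1 = [1, 2, 3, 4, 5]
x2 = [2, 1, 4, 3, 5]
x3 = [4, 2, 2, 4, 3]
corr = correlation_matrix([x1, x2, x3])
loadings = first_factor_loadings(corr)
print([round(value, 3) for value in loadings])
```

Variables with large loadings on the same factor are the ones that share common variance, which is how the factor is interpreted.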