- Korean J Anesthesiol

- v.69(1); 2016 Feb

Nonparametric statistical tests for the continuous data: the basic concept and the practical use

Francis Sahngun Nahm

Department of Anesthesiology and Pain Medicine, Seoul National University Bundang Hospital, Seongnam, Korea.

Conventional statistical tests are usually called parametric tests. Parametric tests are used more frequently than nonparametric tests in many medical articles because most medical researchers are familiar with them and statistical software packages strongly support them. Parametric tests rest on an important assumption, the assumption of normality, which means that the distribution of sample means is normal. A parametric test can be misleading when this assumption is not satisfied. In that circumstance, nonparametric tests are the available alternative because they do not require the normality assumption. Nonparametric tests are statistical methods based on signs and ranks. In this article, we discuss the basic concepts and practical use of nonparametric tests as a guide to their proper use.

Introduction

Statistical analysis is a universal method with which to assess the validity of a conclusion. It is one of the most important aspects of a medical paper. Statistical analysis grants meaning to an otherwise meaningless series of numbers and allows researchers to draw conclusions from uncertain facts. Hence, it is a work of creation that breathes life into data. However, the inappropriate use of statistical techniques results in faulty conclusions, inducing errors and undermining the significance of the article. Moreover, medical researchers must pay more attention to statistical validity now that evidence-based medicine has taken center stage. Recently, rapid advances in statistical analysis packages have opened doors to more convenient analyses. However, easier methods of performing statistical analyses, such as entering data into software and simply pressing the "analysis" or "OK" button to compute the P value without understanding the basic concepts of statistics, have increased the risk of using incorrect statistical analysis methods or misinterpreting analytical results [ 1 ].

Several journals, including the Korean Journal of Anesthesiology , have been striving to identify and to reduce statistical errors overall in medical journals [ 2 , 3 , 4 , 5 ]. As a result, a wide array of statistical errors has been found in many papers. This has further motivated the editors of each journal to enhance the quality of their journals by developing checklists or guidelines for authors and reviewers [ 6 , 7 , 8 , 9 ] to reduce statistical errors. One of the most common statistical errors found in journals is the application of parametric statistical techniques to nonparametric data [ 4 , 5 ]. This is presumed to be due to the fact that medical researchers have had relatively few opportunities to use nonparametric statistical techniques as compared to parametric techniques because they have been trained mostly on parametric statistics, and many statistics software packages strongly support parametric statistical techniques. Therefore, the present paper seeks to boost our understanding of nonparametric statistical analysis by providing actual cases of the use of nonparametric statistical techniques, which have only been introduced rarely in the past.

The History of Nonparametric Statistical Analysis

John Arbuthnott, a Scottish mathematician and physician, was the first to introduce nonparametric analytical methods in 1710 [ 10 ]. He performed a statistical analysis similar to the sign test used today in his paper "An Argument for divine providence, taken from the constant regularity observ'd in the Births of both sexes." Nonparametric analysis then saw little use until Jacob Wolfowitz introduced the term "nonparametric" in 1942 [ 11 ]. In 1945, Frank Wilcoxon introduced a nonparametric analysis method using ranks, which is the most commonly used method today [ 12 ]. In 1947, Henry Mann and his student Donald Ransom Whitney expanded on Wilcoxon's technique to develop a technique for comparing two groups with different sample sizes [ 13 ]. In 1952, William Kruskal and Allen Wallis introduced a nonparametric test method to compare three or more groups using rank data [ 14 ]. Since then, several studies have reported that nonparametric analyses are nearly as efficient as parametric methods; the asymptotic relative efficiency of nonparametric statistical analysis, specifically Wilcoxon's signed rank test and the Mann-Whitney test, is 0.955 against the t-test when the data satisfy the assumption of normality [ 15 , 16 ]. Since Tukey developed a method to compute confidence intervals using a nonparametric method, nonparametric analysis has been established as a commonly used analytical method in medical and natural science research [ 17 ].

The Basic Principle of Nonparametric Statistical Analysis

Traditional statistical methods that are widely used in medical research, such as the t-test and analysis of variance, require certain assumptions about the distribution of the population or sample. In particular, the assumption of normality, which specifies that the means of the sample group are normally distributed, and the assumption of equal variance, which specifies that the variances of the samples and of their corresponding population are equal, are the two most basic prerequisites for parametric statistical analysis. Hence, parametric statistical analyses are conducted on the premise that these assumptions are satisfied. However, if these assumptions are not satisfied, that is, if the distribution of the sample is skewed toward one side or the distribution is unknown because of a small sample size, parametric statistical techniques cannot be used. In such cases, nonparametric statistical techniques are excellent alternatives.

Nonparametric statistical analysis greatly differs from parametric statistical analysis in that it only uses + or - signs or the rank of data sizes instead of the original values of the data. In other words, nonparametric analysis focuses on the order of the data size rather than on the value of the data per se. For example, let's pretend that we have the following five data for a variable X.

After listing the data in order of size, each data instance is ranked from one to five; the instance with the lowest value (18) is ranked 1, and the instance with the greatest value (99) is ranked 5. There are two data instances with values of 32, and each is accordingly given a rank of 2.5. Furthermore, each data instance is assigned a + sign if its value is greater than the reference value and a − sign if its value is less than the reference value. If we assign a reference value of 50 for these instances, there would only be one value greater than 50, resulting in one + and four − signs. While parametric analysis focuses on the difference in the means of the groups to be compared, nonparametric analysis focuses on the rank, thereby putting more emphasis on differences in the median values than in the means.

As shown above, nonparametric analysis orders the original data by size and uses only the ranks or signs. Although this can result in a loss of information from the original data, nonparametric analysis can have more statistical power than parametric analysis when the data are not normally distributed. In fact, as shown in the above example, one particular feature of nonparametric analysis is that it is minimally affected by extreme values: the rank and sign of the maximum value (99) would stay the same even if that value were greater than 99.
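The conversion to ranks and signs can be sketched in a few lines of Python. This is an illustrative sketch only: the helper names `average_ranks` and `signs` are made up for this example, and the value 45 is an assumed stand-in for the one data point that the text does not state (18, 32, 32 and 99 come from the description above).

```python
def average_ranks(values):
    """Rank values in increasing order; tied values share the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    pos = 0
    while pos < len(order):
        end = pos
        # Extend the block while the next sorted value ties with the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[pos]]:
            end += 1
        shared = (pos + 1 + end + 1) / 2  # mean of the 1-based ranks pos+1 .. end+1
        for k in range(pos, end + 1):
            ranks[order[k]] = shared
        pos = end + 1
    return ranks


def signs(values, reference):
    """Return '+' above the reference, '-' below it, and '0' for an exact tie."""
    return ["+" if v > reference else "-" if v < reference else "0" for v in values]


# 18, 32, 32 and 99 are from the text; 45 is an ASSUMED value for the fifth data point.
x = [18, 32, 32, 45, 99]
x_ranks = average_ranks(x)   # [1.0, 2.5, 2.5, 4.0, 5.0]
x_signs = signs(x, 50)       # one '+' and four '-'

# Making the maximum far more extreme changes neither the ranks nor the signs.
x_extreme = [18, 32, 32, 45, 9999]
print(x_ranks, x_signs, average_ranks(x_extreme) == x_ranks)
```

Because only the ordering enters the calculation, the outlier in `x_extreme` produces exactly the same ranks and signs as the original data.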

Advantages and Disadvantages of Nonparametric Statistical Analysis

Nonparametric statistical techniques have the following advantages:

- There is less of a possibility to reach incorrect conclusions because assumptions about the population are unnecessary. In other words, this is a conservative method.

- It is more intuitive and does not require much statistical knowledge.

- Statistics are computed based on signs or ranks and thus are not greatly affected by outliers.

- This method can be used even for small samples.

On the other hand, nonparametric statistical techniques are associated with the following disadvantages:

- Actual differences in a population cannot be known because the distribution function cannot be stated.

- The information acquired from nonparametric methods is limited compared to that from parametric methods, and it is more difficult to interpret it.

- Compared to parametric methods, there are only a few analytical methods.

- The information in the data is not fully utilized.

- Computation becomes complicated for a large sample.

In summary, using nonparametric analysis methods reduces the risk of drawing incorrect conclusions because these methods do not make any assumptions about the population, but they can have lower statistical power. In other words, nonparametric methods are "always valid, but not always efficient," while parametric methods are "always efficient, but not always valid." Therefore, parametric methods are recommended when they can in fact be used.

Types of Nonparametric Statistical Analyses

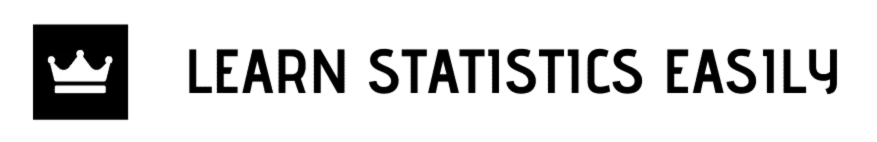

In this section, I explain the median test for one sample, a comparison of two paired samples, a comparison of two independent samples, and a comparison of three or more samples. The types of nonparametric analysis techniques and the corresponding parametric analysis techniques are delineated in Table 1.

Median test for one sample: the sign test and Wilcoxon's signed rank test

The sign test and Wilcoxon's signed rank test are used for median tests of one sample. These tests examine whether one instance of sample data is greater or smaller than the median (reference value).

Sign test

The sign test is the simplest of all nonparametric tests regarding the location of a sample. This test examines a hypothesis about the median $\theta$ of a population, and it involves testing the null hypothesis $H_0: \theta = \theta_0$. If the observed value ($X_i$) is greater than the reference value ($\theta_0$), it is marked as +, and it is given a − sign if the observed value is smaller than the reference value, after which the number of + values is counted. If an observed value in the sample is equal to the reference value ($\theta_0$), that observed value is eliminated from the sample, and the sign test proceeds with the reduced sample size. The number of sample data instances given the + sign is denoted as 'B' and is referred to as the sign statistic. If the null hypothesis is true, the number of + signs and the number of − signs are expected to be about equal. The sign test ignores the actual values of the data and only uses + or − signs. Therefore, it is useful when it is difficult to measure the values.
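The procedure above can be sketched as follows. The function name `sign_test` and the data are hypothetical, and the P value is computed from the exact binomial distribution of the sign statistic B under the null hypothesis (B follows a Binomial(n, 1/2) distribution), which is one common way to carry out the test rather than necessarily the procedure used for the tables in this article.

```python
from math import comb


def sign_test(values, theta0):
    """Exact two-sided sign test of H0: median == theta0."""
    kept = [v for v in values if v != theta0]   # observations equal to theta0 are dropped
    n = len(kept)
    b = sum(1 for v in kept if v > theta0)      # sign statistic B: number of + signs
    # Under H0, B ~ Binomial(n, 1/2); double the smaller tail and cap at 1.
    lower = sum(comb(n, k) for k in range(0, b + 1)) / 2 ** n
    upper = sum(comb(n, k) for k in range(b, n + 1)) / 2 ** n
    return b, n, min(1.0, 2 * min(lower, upper))


# Hypothetical data (not taken from the article); the value 50 ties with theta0.
b, n, p = sign_test([18, 32, 32, 45, 99, 50], 50)
print(b, n, p)
```

Here the tied observation is removed, leaving n = 5 with a single + sign, so a sample this small cannot reach significance at α = 0.05 with this test.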

Wilcoxon's signed rank test

The sign test has one drawback in that it may lead to a loss of information because only + or − signs are used in the comparison of the given data with the reference value $\theta_0$. In contrast, Wilcoxon's signed rank test not only examines the observed values in comparison with $\theta_0$ but also considers their relative sizes, thus mitigating the limitation of the sign test. Wilcoxon's signed rank test has more statistical power because it reduces the loss of information that arises from only using signs. As in the sign test, if there is an observed value that is equal to the reference value $\theta_0$, this observed value is eliminated from the sample and the sample size is adjusted accordingly. Here, given a sample with five data points ($X_i$), as shown in Table 2, we test whether the median ($\theta_0$) of this sample is 50.

Let the median ($\theta_0$) be 50. The original data were transformed into rank and sign data. + and − denote $X_i > 50$ and $X_i < 50$, respectively. The values in parentheses are ranks.

In this case, if we subtract $\theta_0$ from each data point ($R_i = X_i - \theta_0$), take the absolute value, and rank the values in increasing order, the resulting rank is equal to the value in parentheses in Table 2. With Wilcoxon's signed rank test, only the ranks of the positive differences are added, as per the following equation:

$$W^+ = \sum_{i=1}^{n} \psi_i \, \operatorname{rank}(|R_i|), \qquad \psi_i = \begin{cases} 1 & \text{if } R_i > 0 \\ 0 & \text{if } R_i < 0 \end{cases}$$
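The signed rank statistic, that is, the sum of the ranks of the absolute differences over the observations with positive differences, can be sketched as below. The function name `signed_rank_statistic` and the sample are hypothetical (the values in Table 2 are not reproduced here), and tied absolute differences are given average ranks, which is an assumption of this sketch.

```python
def signed_rank_statistic(values, theta0):
    """Return W+, the sum of the ranks of |x - theta0| over observations above theta0."""
    diffs = [v - theta0 for v in values if v != theta0]   # drop exact ties with theta0
    abs_sorted = sorted(abs(d) for d in diffs)

    def avg_rank(a):
        # Mean of the 1-based positions that the value a occupies in the sorted list.
        first = abs_sorted.index(a) + 1
        last = first + abs_sorted.count(a) - 1
        return (first + last) / 2

    return sum(avg_rank(abs(d)) for d in diffs if d > 0)


# Hypothetical sample, tested against theta0 = 50.
sample = [38, 55, 47, 64, 41]
w_plus = signed_rank_statistic(sample, 50)
print(w_plus)
```

For this sample the differences are −12, 5, −3, 14 and −9, so the positive differences carry ranks 2 and 5 and the statistic is 7.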

Comparison of a paired sample: sign test and Wilcoxon's signed rank test

In the previously described one-sample sign test, the given data were compared to the median value ($\theta_0$). The sign test for a paired sample compares the scores before and after treatment, with everything else identical to how the one-sample sign test is run. The sign test does not use the ranks of the scores but only considers the number of + or − signs. Thus, it is rarely affected by extreme outliers. At the same time, it cannot utilize all of the information in the given data: it can only provide information about the direction of the difference between two samples, not about the size of that difference.

Wilcoxon's signed rank test for a paired sample is the nonparametric counterpart of the paired t test. The only difference between this test and the previously described one-sample test is that the one-sample test compares the given data to the reference value ($\theta_0$), while the paired test compares the pre- and post-treatment scores. In the example with five paired data instances ($X_{ij}$), as shown in Table 3, which shows scores before and after education, $X_{1j}$ refers to the pre-score of student j, and $X_{2j}$ refers to the post-score of student j. First, we calculate the change in the score before and after education ($R_j = X_{1j} - X_{2j}$). When $R_j$ is listed in the order of its absolute values, the resulting rank is represented by the values within the parentheses in Table 3. Wilcoxon's signed rank test is then conducted by adding the ranks that carry a + sign, as in the one-sample test. If the null hypothesis is true, the sum of the ranks with + signs and the sum of the ranks with − signs should be nearly equal.

Under the null hypothesis (no difference between the pre- and post-scores), the test statistic ($W^+$, the sum of the positive ranks) would be close to 7.5 ($= \frac{1}{2}\sum_{k=1}^{5} k$), but moves away from 7.5 when the alternative hypothesis is true. According to the table for Wilcoxon's signed rank test, the P value = 0.1363 when the test statistic $W^+ = 3$ under α = 0.05 (two-tailed test) and a sample size of 5. Therefore, the null hypothesis cannot be rejected.

The sign test is limited in that it cannot reflect the degree of change between paired scores. Wilcoxon's signed rank test has more statistical power than the sign test because it not only considers the direction of the change but also ranks the degree of change between the paired scores, providing more information for the analysis.
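The null behaviour of the signed rank statistic for a small sample can be checked by brute force. The sketch below, with the hypothetical helper `signed_rank_null`, enumerates all 2^n equally likely assignments of + and − signs to the ranks 1..n, assuming no ties and no zero differences. The resulting exact tail probability need not coincide with a tabulated or software-reported P value, which may rely on a normal approximation or a different tail convention.

```python
from itertools import product


def signed_rank_null(n):
    """Enumerate W+ over all 2**n equally likely sign patterns (no ties assumed)."""
    totals = []
    for pattern in product((0, 1), repeat=n):
        # A 1 in the pattern means the corresponding rank carries a + sign.
        totals.append(sum(rank for rank, plus in zip(range(1, n + 1), pattern) if plus))
    return totals


null_w = signed_rank_null(5)
mean_w = sum(null_w) / len(null_w)                            # equals n(n+1)/4
lower_tail = sum(1 for w in null_w if w <= 3) / len(null_w)   # exact P(W+ <= 3)
print(mean_w, lower_tail)
```

The enumeration confirms that the expected value of the statistic under the null hypothesis is 7.5 for five pairs, and it shows how few sign patterns are available at this sample size: only 5 of the 32 patterns give a sum of 3 or less.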

Comparison of two independent samples: Wilcoxon's rank sum test, the Mann-Whitney test, and the Kolmogorov-Smirnov test

Wilcoxon's rank sum test and the Mann-Whitney test

Wilcoxon's rank sum test ranks all data points in order, calculates the rank sum of each sample, and compares the difference in the rank sums ( Table 4 ). If two groups have similar scores, their rank sums will be similar; however, if the score of one group is higher or lower than that of the other group, the rank sums between the two groups will be farther apart.

There are two independent groups with sample sizes of m = 5 for group X and n = 4 for group Y. Under the null hypothesis (no difference between the 2 groups), the rank sums of group X ($W_X$) and group Y ($W_Y$) would each be close to 22.5 ($= \frac{1}{2}\sum_{k=1}^{9} k$, half of the total rank sum), but move away from 22.5 when the alternative hypothesis is true. Because the groups differ in size, the exact null expectations are $m(m+n+1)/2 = 25$ for $W_X$ and $n(m+n+1)/2 = 20$ for $W_Y$. According to the table for Wilcoxon's rank sum test, the P value = 0.0556 when the test statistic $W_Y = 13$ under α = 0.05 (two-tailed test) at m = 5 and n = 4. Therefore, the null hypothesis cannot be rejected.
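For group sizes this small, the null distribution of the rank sum can be enumerated directly. The sketch below assumes no ties, so that under the null hypothesis every choice of n = 4 ranks out of 1..9 for group Y is equally likely; the variable names are illustrative only.

```python
from itertools import combinations

m, n = 5, 4
ranks = range(1, m + n + 1)

# Under H0 every set of n ranks is an equally likely rank set for group Y.
rank_sums_y = [sum(c) for c in combinations(ranks, n)]

mean_w_y = sum(rank_sums_y) / len(rank_sums_y)            # equals n(m+n+1)/2
lower_tail = sum(1 for w in rank_sums_y if w <= 13) / len(rank_sums_y)
print(len(rank_sums_y), mean_w_y, round(lower_tail, 4))
```

Seven of the 126 possible rank sets give a rank sum of 13 or less, so the lower-tail probability is 7/126 ≈ 0.0556, which agrees numerically with the figure quoted for $W_Y = 13$.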

On the other hand, the Mann-Whitney test compares all data $x_i$ belonging to the X group with all data $y_i$ belonging to the Y group and calculates the probability of $x_i$ being greater than $y_i$: $P(x_i > y_i)$. The null hypothesis states that $P(x_i > y_i) = P(x_i < y_i) = \frac{1}{2}$, while the alternative hypothesis states that $P(x_i > y_i) \neq \frac{1}{2}$. The process of the Mann-Whitney test is illustrated in Table 5. Although the Mann-Whitney test and Wilcoxon's rank sum test differ somewhat in their calculation processes, they are widely considered equivalent methods because they use the same statistics.

There are two independent groups with sample sizes of m = 5 for group X and n = 4 for group Y. Under the null hypothesis (no difference between the 2 groups), the test statistic (U) is close to 10 ($= \frac{m \times n}{2}$), but becomes more extreme (smaller in this example) when the alternative hypothesis is true. The test statistic for these data is U = 3, which is greater than the reference value of 1 under α = 0.05 (two-tailed test) at m = 5 and n = 4. Therefore, the null hypothesis cannot be rejected.
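The pairwise counting behind the Mann-Whitney statistic, and its link to the rank sum, can be sketched as follows. The function name `mann_whitney_u` and both data sets are hypothetical; they were chosen only so that the rank sum of group Y is 13 and U is 3, as in the example above, and are not the values of Tables 4 and 5. Counting a tie as one half is an assumption of this sketch.

```python
def mann_whitney_u(x, y):
    """Count the pairs (x_i, y_j) with y_j > x_i; a tie counts one half."""
    return sum(1.0 if yj > xi else 0.5 if yj == xi else 0.0 for xi in x for yj in y)


# Hypothetical data with m = 5 and n = 4 and no ties.
group_x = [40, 50, 60, 80, 90]
group_y = [10, 20, 30, 70]

u_y = mann_whitney_u(group_x, group_y)    # pairs in which the Y value is larger
u_x = mann_whitney_u(group_y, group_x)    # pairs in which the X value is larger

# Rank sum of group Y in the pooled sample (index lookup is valid without ties).
pooled = sorted(group_x + group_y)
w_y = sum(pooled.index(v) + 1 for v in group_y)

n = len(group_y)
print(u_y, u_x, w_y, w_y - n * (n + 1) / 2)
```

The two counts add up to m × n = 20, and subtracting n(n + 1)/2 from the rank sum of group Y reproduces the pair count, which is why the two tests lead to the same conclusion.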

Kolmogorov-Smirnov test (K-S test)

The K-S test is commonly used to examine the normality of a data set. However, it was originally a method that compares the cumulative distributions of two independent samples to examine whether the two samples were drawn from the same population or from two populations with an equal distribution. If they were drawn from the same population, the shapes of their cumulative distributions would be equal. In contrast, if the two samples show different cumulative distributions, it can be assumed that they were drawn from different populations. Let's use the example in Table 6 for an actual analysis. First, we need to identify the distribution pattern of the two samples in order to compare them. In Table 6, the range of the samples is 43, with a minimum value of 50 and a maximum value of 93. The statistical power of the K-S test is affected by the interval that is set. If the interval is too wide, the statistical power can be reduced because there are too few intervals; similarly, if the interval is too narrow, the calculations become too complicated because there are too many intervals. The data shown in Table 6 have a range of 43; hence, we will establish an interval width of 4 and set the number of intervals to 11. As shown in Table 6, a cumulative probability distribution table must be created for each interval ($S_X$, $S_Y$), and the greatest difference between the cumulative distributions of the two variables ($\max |S_X - S_Y|$) must be determined. This maximum difference is the test statistic. We compare this difference to the reference value to test the homogeneity of the two samples. The actual analysis process is described in Table 6.

There are two independent groups, X and Y, each with a sample size of 15 ($N_X = N_Y = 15$). The maximal difference between the cumulative probability distributions of X ($S_X$) and Y ($S_Y$) is 8/15 (0.533), which is greater than the rejection value of 0.467 under α = 0.05 (two-tailed test) at $N_X = N_Y = 15$. Therefore, there is a significant difference between group X and group Y.
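The test statistic, the largest gap between two cumulative distributions, can be sketched as below. Note the swap in technique: instead of the fixed-width intervals used in Table 6, this sketch evaluates the empirical cumulative distributions at every observed value, which is the usual unbinned form of the statistic. The function name `ks_two_sample_statistic` and the data are hypothetical.

```python
def ks_two_sample_statistic(x, y):
    """Largest absolute gap between the two empirical cumulative distributions."""

    def ecdf(sample, t):
        # Fraction of the sample that is less than or equal to t.
        return sum(1 for v in sample if v <= t) / len(sample)

    # The gap can only change at observed values, so checking those is sufficient.
    return max(abs(ecdf(x, t) - ecdf(y, t)) for t in sorted(set(x) | set(y)))


# Hypothetical scores for two independent groups.
group_x = [50, 55, 61, 64, 70]
group_y = [66, 72, 80, 88, 93]
d = ks_two_sample_statistic(group_x, group_y)
print(d)
```

The statistic would then be compared with a critical value for the given sample sizes, in the same way that 8/15 is compared with 0.467 above.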

Comparison of k independent samples: the Kruskal-Wallis test and the Jonckheere test

Kruskal-Wallis test

The Kruskal-Wallis test is the nonparametric counterpart of the one-way analysis of variance. In other words, it analyzes whether there is a difference in the median values of three or more independent samples. The Kruskal-Wallis test is similar to the Mann-Whitney test in that it ranks the original data values. That is, it collects all data instances from the samples and ranks them in increasing order. If two scores are equal, each is given the average of the two ranks they would otherwise occupy. The rank sums are then calculated, and the Kruskal-Wallis test statistic (H) is calculated as per the following equation [ 14 ]:

$$H = \frac{12}{N(N+1)} \sum_{j=1}^{k} \frac{R_j^2}{n_j} - 3(N+1)$$

where N is the total number of observations, k is the number of groups, $n_j$ is the size of group j, and $R_j$ is the rank sum of group j.
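The ranking and rank-sum steps described above can be sketched as follows. The sketch uses the usual textbook form of the statistic, H = 12 / (N(N + 1)) · Σ R_j² / n_j − 3(N + 1), without any correction for ties; the function name `kruskal_wallis_h` and the three groups of scores are hypothetical.

```python
def kruskal_wallis_h(groups):
    """Kruskal-Wallis H from pooled average ranks (no tie correction applied)."""
    pooled = sorted(v for g in groups for v in g)
    total = len(pooled)

    def avg_rank(v):
        # Mean of the 1-based positions that v occupies in the pooled ordering.
        first = pooled.index(v) + 1
        last = first + pooled.count(v) - 1
        return (first + last) / 2

    rank_sums = [sum(avg_rank(v) for v in g) for g in groups]
    spread = sum(r * r / len(g) for r, g in zip(rank_sums, groups))
    return 12 / (total * (total + 1)) * spread - 3 * (total + 1)


# Hypothetical scores for three independent groups that do not overlap at all.
control, low_dose, high_dose = [12, 15, 11], [18, 20, 17], [25, 27, 30]
h = kruskal_wallis_h([control, low_dose, high_dose])
print(round(h, 3))
```

With rank sums of 6, 15 and 24, the statistic is 7.2, the largest value possible for three groups of three; H is then referred to exact tables or, for larger samples, to a chi-square distribution with k − 1 degrees of freedom.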

Jonckheere test

Greater statistical power can be acquired if an ordered alternative hypothesis is established using prior information. Let's think about a case in which we can predict the order of the effects of a treatment when increasing the degree of the treatment. For example, when we are evaluating the efficacy of an analgesic, we can predict that the effect will increase with the dosage, dividing the groups into a control group, a low-dosage group, and a high-dosage group. In this case, the alternative hypothesis $H_2$ is better than the alternative hypothesis $H_1$.

$H_0: \tau_1 = \tau_2 = \tau_3$

$H_1: \tau_1, \tau_2, \tau_3$ not all equal

$H_2: \tau_1 \le \tau_2 \le \tau_3$, with at least one strict inequality

The Jonckheere test is a nonparametric technique that can be used to test such a rank alternative hypothesis [ 18 ].

The actual analysis process is described with illustration in Table 7 .

The test statistic J = 55 and P (J ≥ 55) = 0.035. Therefore, the null hypothesis ($\tau_1 = \tau_2 = \tau_3$) is rejected and the alternative hypothesis ($\tau_1 \le \tau_2 \le \tau_3$, with at least one strict inequality) is accepted under α = 0.05.
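The Jonckheere statistic is built from the same pairwise counts as the Mann-Whitney test, summed over every pair of groups taken in the predicted order. The sketch below assumes that ties count one half; the function name `jonckheere_statistic` and the data are hypothetical and are not the values of Table 7, so the result differs from the J = 55 reported above.

```python
def jonckheere_statistic(groups):
    """Sum over group pairs (a before b) of the pairs with x_a < x_b; ties add 0.5."""
    j = 0.0
    for a in range(len(groups)):
        for b in range(a + 1, len(groups)):
            for x in groups[a]:
                for y in groups[b]:
                    j += 1.0 if x < y else 0.5 if x == y else 0.0
    return j


# Hypothetical scores, listed in the predicted order of increasing effect.
control, low_dose, high_dose = [12, 15, 11], [18, 14, 17], [25, 16, 30]
j_stat = jonckheere_statistic([control, low_dose, high_dose])
j_max = 3 * 3 + 3 * 3 + 3 * 3   # value reached when the groups are perfectly ordered
print(j_stat, j_max)
```

A value of J close to its maximum supports the ordered alternative; the P value would come from exact tables or from a permutation of the group labels, which this sketch does not implement.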

Nonparametric tests and parametric tests: which should we use?

Just as there is more than one treatment modality for a disease, there is more than one method of statistical analysis. Nonparametric methods are clearly the correct choice when the assumption of normality is clearly violated; however, they are not always the top choice for small samples because they have less statistical power than parametric techniques and make it difficult to calculate the "95% confidence interval," which aids readers' understanding. Parametric methods may lead to significant results in some cases, while nonparametric methods may yield more significant results in others. Whichever method is selected to support the researcher's arguments most powerfully and to help readers understand them, researchers who select parametric methods should ensure that all of the required assumptions are satisfied. If they are not, it is more valid to use nonparametric methods because they are "always valid, but not always efficient," while parametric methods are "always efficient, but not always valid."

Nonparametric predictive inference for future order statistics

ALQIFARI, HANA,NASSER,A (2017) Nonparametric predictive inference for future order statistics. Doctoral thesis, Durham University.

Nonparametric Statistical Analysis in Psychology

Gregory J. Privitera, James J. Gillespie

Last reviewed: 23 June 2023. Last modified: 23 June 2023. DOI: 10.1093/obo/9780199828340-0221

Introduction

While nonparametric testing was first introduced in the early 1700s in a paper that utilized a version of the sign test, most nonparametric tests utilized today were developed later in the twentieth century, primarily since the late 1930s. Nonparametric testing has three unique characteristics that make it advantageous for analysis: (a) it can be used to analyze data that are not scaled, that is, data on a nominal or an ordinal scale of measurement; (b) it generally does not require assumptions about population parameters; and (c) it generally does not require that the distribution in a given population is normal, which is why such tests are often referred to as “distribution free” tests. In terms of computation, nonparametric tests are carried out without needing the value of a sample mean and sample variance, thereby making it possible for these tests to evaluate “effects” in populations with any type of distribution; that is, these tests can be computed without assumptions related to variability in a population. Two critical concerns highlight the need for the elucidation of nonparametric testing in terms of its role in psychology. First, much of human behavior and performance does not conform to a normal distribution, which drives the need for “distribution free” tests to comprehensively study human behavior. Second, the disclosure and reporting of statistical testing can be problematic. To some extent, a disconnect exists in the peer-reviewed literature between the reporting of parametric tests and the corresponding assumptions for those tests; that is, assumptions often are not mentioned at all to justify the use of parametric testing. It is largely left to the reader to accept that all assumptions were satisfied. Further evidence exists of misreported statistical outcomes in the peer-reviewed psychological literature tending to favor the researchers’ expectations of an outcome. These discrepancies highlight the need for a broader understanding of the role and utility of nonparametric statistical alternatives in null hypothesis significance testing. This article aims to provide resources, both in text and online, for introducing and explaining nonparametric statistics and advanced nonparametric methodologies in psychology.

Landmark Sources

This section presents landmark studies that introduced the nonparametric techniques most often employed in the field of psychology. In the early 1700s, the sign test, or a version of it, was the first nonparametric test to be introduced, found in the paper Arbuthnott 1710. Wolfowitz 1942 was written by the first researcher to formally coin the term nonparametric as an alternative framework to parametric statistics. Early advances in nonparametric testing were most commonly applied to analyze the frequency of nominal or categorical data; thus the chi-square test was first evaluated in Pearson 1900 to calculate the goodness of fit for frequency distributions. To analyze correlational data, Spearman 1904a and Spearman 1904b introduced rho as a nonparametric alternative to the Pearson correlation coefficient. Among the nonparametric tests most commonly applied in the psychological sciences to analyze ordinal data were the Friedman test, found in Friedman 1937 and Friedman 1939, as a nonparametric alternative to the one-way repeated measures analysis of variance; the Kruskal-Wallis H test in Kruskal and Wallis 1952 as a nonparametric alternative to the one-way between-subjects analysis of variance; the Wilcoxon Signed-Ranks T test in Wilcoxon 1945, as a nonparametric alternative to the paired samples t test; and the Mann-Whitney U test in Mann and Whitney 1947 as a nonparametric alternative to the independent-samples t test. The authors of these works helped pioneer the growth and development of nonparametric testing as an alternative framework to parametric statistics.

Arbuthnott, J. 1710. An argument for divine providence, taken from the constant regularity observed in the births of both sexes. Philosophical Transactions 27.328: 186–190.

DOI: 10.1098/rstl.1710.0011

This is the first article known to introduce a nonparametric test, the sign test, to assess differences in births between two groups, males and females.

Friedman, M. 1937. The use of ranks to avoid the assumption of normality implicit in the analysis of variance. Journal of the American Statistical Association 32.200: 675–701.

DOI: 10.1080/01621459.1937.10503522

This is the first article to introduce a nonparametric alternative to a one-way repeated measures analysis of variance, the Friedman test.

Friedman, M. A. 1939. A correction: The use of ranks to avoid the assumption of normality implicit in the analysis of variance. Journal of the American Statistical Association 34.205: 109.

DOI: 10.2307/2279169

This article adds a correction to a formula published in Friedman 1937 on p. 695, placing the denominator of the fraction under a square sign.

Kruskal, W. H., and W. A. Wallis. 1952. Use of ranks in one-criterion variance analysis. Journal of the American Statistical Association 47: 583–621.

DOI: 10.2307/2280779

This is the first article to introduce a nonparametric alternative to a one-way between-subjects analysis of variance, the Kruskal-Wallis H test.

Mann, H. B., and D. R. Whitney. 1947. On a test whether one of two random variables is stochastically larger than the other. Annals of Mathematical Statistics 18.1: 50–60.

DOI: 10.1214/aoms/1177730491

This is the first article to introduce a nonparametric alternative to an independent-samples t test, the Mann-Whitney U test.

Pearson, K. 1900. On the criterion that a given system of deviations from the probable in the case of a correlated system of variables is such that it can be reasonably supposed to have arisen from random sampling. Philosophical Magazine, Series 5 50.302: 157–175.

DOI: 10.1080/14786440009463897

This is the first article to investigate a nonparametric test to analyze frequency data for nominal variables, the chi-square test.

Spearman, C. E. 1904a. The proof and measurement of association between two things. American Journal of Psychology 15.1: 72–101.

DOI: 10.2307/1412159

This is the first article to introduce a nonparametric alternative to a Pearson correlation coefficient, the Spearman rho.

Spearman, C. E. 1904b. “General intelligence,” objectively determined and measured. American Journal of Psychology 15.2: 201–292.

DOI: 10.2307/1412107

This article is the second publication by Charles Spearman for introducing a nonparametric alternative to a Pearson correlation coefficient, the Spearman rho.

Wilcoxon, F. 1945. Individual comparisons by ranking methods. Biometrics 1.6: 80–83.

DOI: 10.2307/3001968

This is the first article to introduce a nonparametric alternative to a paired samples t test, the Wilcoxon Signed-Ranks T test.

Wolfowitz, J. 1942. Additive Partition Functions and a Class of Statistical Hypotheses. Annals of Mathematical Statistics 13.3: 247–279.

DOI: 10.1214/aoms/1177731566

This article is a landmark publication in that it is the first article to introduce the term “nonparametric” into the statistical and peer-reviewed literature.


Parametric and Nonparametric Statistics

Most statistics courses tend to focus on parametric statistics; however, you might find that as you prepare to analyze your dissertation data, parametric statistics might not be an appropriate choice for your research. The following are some of the differences between parametric and nonparametric statistics.

Parametric Statistics

Parametric statistics are statistical tests that rest on assumptions about the distribution from which the data are drawn, most commonly that it is normal. In other words, parametric statistics are based on the parameters of the normal curve. Because of this, your data must meet certain assumptions; if they do not, the test can still be computed, but its results may be misleading. Prior to running any parametric statistics, you should always be sure to test the assumptions for the tests that you are planning to run.

Nonparametric Statistics

As implied by the name, nonparametric statistics are not based on the parameters of the normal curve. Therefore, if your data violate the assumptions of the usual parametric test and nonparametric statistics might better describe the data, try running the nonparametric equivalent of the parametric test. You should also consider using nonparametric equivalent tests when you have limited sample sizes (e.g., n < 30). Though nonparametric statistical tests have more flexibility than do parametric statistical tests, they are generally less powerful when the parametric assumptions hold; therefore, most statisticians recommend that parametric statistics be used when appropriate.

Parametric and Nonparametric Equivalencies

The table below outlines some common research designs and their appropriate parametric and nonparametric equivalents.



Non-Parametric Statistics: A Comprehensive Guide

Exploring the Versatile World of Non-Parametric Statistics: Mastering Flexible Data Analysis Techniques.

Introduction

Non-parametric statistics serve as a critical toolset in data analysis. They are known for their adaptability and the capacity to provide valid results without the stringent prerequisites demanded by parametric counterparts. This article delves into the fundamentals of non-parametric techniques, shedding light on their operational mechanisms, advantages, and scenarios of optimal application. By equipping readers with a solid grasp of non-parametric statistics, we aim to enhance their analytical capabilities, enabling the effective handling of diverse datasets, especially those that challenge conventional parametric assumptions. Through a precise, technical exposition, this guide seeks to elevate the reader’s proficiency in applying non-parametric methods to extract meaningful insights from data, irrespective of its distribution or scale.

- Non-parametric statistics bypass assumptions for true data integrity.

- Flexible methods in non-parametric statistics reveal hidden data patterns.

- Real-world applications of non-parametric statistics solve complex issues.

- Non-parametric techniques like Mann-Whitney U bring clarity to data.

- Ethical data analysis through non-parametric statistics upholds truth.


Understanding Non-Parametric Statistics

Non-parametric statistics are indispensable in data analysis, mainly due to their capacity to process data without the necessity for predefined distribution assumptions. This distinct attribute sets non-parametric methods apart from parametric ones, which mandate that data adhere to certain distribution norms, such as the normal distribution. The utility of non-parametric techniques becomes especially pronounced with datasets whose distribution is unknown or non-normal, or whose sample size is too small to validate any distributional assumptions.

The cornerstone of many non-parametric tests is their reliance on the ranks or order of data points instead of the actual data values. This approach renders them inherently resilient to outliers and well suited to analyzing monotonic relationships that need not be linear. Such versatility makes non-parametric methods applicable across diverse data types and research contexts, including situations involving ordinal data or instances where scale measurements are infeasible.
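To see why working with ranks blunts the effect of outliers, here is a minimal Python sketch. The helper name `average_ranks` and the sample values are illustrative assumptions, not part of any library; tied values are given the average of the positions they occupy, which is one common convention.

```python
def average_ranks(values):
    """Return 1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover the whole run of values tied with values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # average of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


# Hypothetical measurements; in the second list 3.4 was mistyped as 340.0.
clean = [3.1, 2.7, 3.4, 2.9, 3.0]
with_outlier = [3.1, 2.7, 340.0, 2.9, 3.0]

ranks_clean = average_ranks(clean)
ranks_outlier = average_ranks(with_outlier)

print(sum(clean) / len(clean), sum(with_outlier) / len(with_outlier))  # means differ a lot
print(ranks_clean)    # [4.0, 1.0, 5.0, 2.0, 3.0]
print(ranks_outlier)  # [4.0, 1.0, 5.0, 2.0, 3.0]
```

The mean shifts dramatically, while the ranks are identical: the largest value is rank 5 whether it is 3.4 or 340.0.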

By circumventing the assumption of a specific underlying distribution, non-parametric methods facilitate a more authentic data analysis, capturing its intrinsic structure and characteristics. This capability allows researchers to derive conclusions that are more aligned with the actual nature of their data, which is particularly beneficial in disciplines where data may not conform to the conventional assumptions underpinning parametric tests.

Non-Parametric Statistics Flexibility

The core advantage of Non-Parametric Statistics lies in its inherent flexibility, which is crucial for analyzing data that doesn’t conform to the assumptions required by traditional parametric methods. This flexibility stems from the ability of non-parametric techniques to make fewer assumptions about the data distribution, allowing for a broader application across various types of data structures and distributions.

For instance, non-parametric methods do not assume a specific underlying distribution (such as the normal distribution), making them particularly useful for skewed data, data with outliers, or ordinal data. This is a significant technical benefit when dealing with real-world data, which often deviate from idealized statistical assumptions.

Moreover, non-parametric statistics are adept at handling small sample sizes where the central limit theorem might not apply, and parametric tests could be unreliable. This makes them invaluable in fields where large samples are difficult to obtain, such as in rare disease research or highly specialized scientific studies.

Another technical aspect of non-parametric methods is their use in hypothesis testing, particularly with the Wilcoxon Signed-Rank Test for paired data and the Mann-Whitney U Test for independent samples. These tests are robust alternatives to the t-test when the data does not meet the necessary parametric assumptions, providing a means to conduct meaningful statistical analysis without the stringent requirements of normality and homoscedasticity.

The flexibility of non-parametric methods extends to their application in correlation analysis with Spearman’s rank correlation and in estimating distribution functions with the Kaplan-Meier estimator, among others. These tools are indispensable in fields ranging from medical research to environmental studies, where the nature of the data and the research questions do not fit neatly into parametric frameworks.

Techniques and Methods

In non-parametric statistics, several essential techniques and methods stand out for their utility and versatility across various types of data analysis. This section delves into seven standard non-parametric tests, providing a technical overview of each method and its application.

Mann-Whitney U Test: Often employed as an alternative to the t-test for independent samples, the Mann-Whitney U test is pivotal when comparing two independent groups. It assesses whether their distributions differ significantly, relying not on the actual data values but on the ranks of these values. This test is instrumental when the data doesn’t meet the normality assumption required by parametric tests.
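As a rough illustration of how the U statistic is built from ranks, the following Python sketch pools both groups, ranks them, and converts the rank sum of the first group into U. The function names and data are hypothetical, and the sketch stops at the statistic; it does not compute a p-value.

```python
def average_ranks(values):
    """Return 1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def mann_whitney_u(x, y):
    """Return (U_x, U_y). U_x counts the (x, y) pairs in which x is larger,
    with each tied pair counted as one half."""
    ranks = average_ranks(list(x) + list(y))
    rank_sum_x = sum(ranks[:len(x)])
    u_x = rank_sum_x - len(x) * (len(x) + 1) / 2
    u_y = len(x) * len(y) - u_x
    return u_x, u_y


# Hypothetical data: every value in x lies below every value in y.
x = [1.1, 2.3, 3.5]
y = [4.2, 5.0, 6.8, 7.1]
u_x, u_y = mann_whitney_u(x, y)
print(u_x, u_y)  # 0.0 12.0
```

The two statistics always sum to the number of pairs, here 3 × 4 = 12, so a value near 0 or near 12 signals strong separation between the groups.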

Wilcoxon Signed-Rank Test: This test is a non-parametric alternative to the paired t-test, used when assessing the differences between two related samples, matched samples, or repeated measurements on a single sample. The Wilcoxon test evaluates whether the median of the paired differences is zero. It is ideal when the paired differences do not follow a normal distribution.
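The mechanics can be sketched in a few lines of Python: take the paired differences, rank their absolute values, and sum the ranks separately for positive and negative differences. The names and scores below are made up for illustration, and dropping zero differences is one common convention rather than the only one.

```python
def average_ranks(values):
    """Return 1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def wilcoxon_signed_rank(before, after):
    """Return (W_plus, W_minus): rank sums of positive and negative differences."""
    # Assumption: pairs with a zero difference are discarded before ranking.
    diffs = [a - b for b, a in zip(before, after) if a != b]
    ranks = average_ranks([abs(d) for d in diffs])
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    return w_plus, w_minus


# Hypothetical pain scores for five patients before and after treatment.
before = [7, 6, 8, 5, 9]
after = [4, 5, 8, 6, 3]
w_plus, w_minus = wilcoxon_signed_rank(before, after)
print(w_plus, w_minus)  # 1.5 8.5
```

If the median difference were zero, the two sums would be expected to be roughly equal; a lopsided split such as this one points toward a systematic change.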

Kruskal-Wallis Test: As the non-parametric counterpart to the one-way ANOVA, the Kruskal-Wallis test extends the Mann-Whitney U test to more than two independent groups. It evaluates whether the populations from which the samples are drawn have identical distributions. Like the Mann-Whitney U, it bases its analysis on the rank of the data, making it suitable for data that does not follow a normal distribution.
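A minimal Python sketch of the H statistic follows, using the textbook formula H = 12 / (N(N+1)) · Σ R_j² / n_j − 3(N+1), where R_j is the rank sum of group j. The function name and data are hypothetical, and this version deliberately omits the correction for ties that statistical packages usually apply.

```python
def average_ranks(values):
    """Return 1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def kruskal_wallis_h(*groups):
    """Kruskal-Wallis H statistic, without tie correction."""
    pooled = [v for g in groups for v in g]
    ranks = average_ranks(pooled)
    n_total = len(pooled)
    weighted = 0.0
    start = 0
    for g in groups:
        rank_sum = sum(ranks[start:start + len(g)])  # ranks belonging to this group
        weighted += rank_sum ** 2 / len(g)
        start += len(g)
    return 12 / (n_total * (n_total + 1)) * weighted - 3 * (n_total + 1)


# Hypothetical measurements from three sites with no overlap between groups.
h = kruskal_wallis_h([1, 2, 3], [4, 5, 6], [7, 8, 9])
print(round(h, 4))  # 7.2
```

Under the null hypothesis H is usually compared against a chi-square distribution with (number of groups − 1) degrees of freedom, an approximation that is weakest for very small groups like these.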

Friedman Test: Analogous to the repeated measures ANOVA in parametric statistics, the Friedman test is a non-parametric method for detecting differences in treatments across multiple test attempts. It is beneficial for analyzing data from experiments where measurements are taken from the same subjects under different conditions, allowing for assessing the effects of different treatments on a single sample population.
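The key difference from the Kruskal-Wallis test is that ranking happens within each subject rather than across the pooled sample. The Python sketch below, with hypothetical names and scores, ranks each subject's row, sums the ranks per condition, and applies the standard formula Q = 12 / (n k (k+1)) · Σ R_j² − 3 n (k+1), without any tie correction.

```python
def average_ranks(values):
    """Return 1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def friedman_statistic(rows):
    """rows[i][j] is the score of subject i under condition j."""
    n, k = len(rows), len(rows[0])
    rank_sums = [0.0] * k
    for row in rows:
        # Rank the k conditions separately for each subject.
        for j, r in enumerate(average_ranks(row)):
            rank_sums[j] += r
    return 12 / (n * k * (k + 1)) * sum(r ** 2 for r in rank_sums) - 3 * n * (k + 1)


# Hypothetical scores: four students, three teaching conditions.
scores = [
    [10, 20, 30],
    [12, 18, 33],
    [9, 25, 31],
    [14, 22, 40],
]
q = friedman_statistic(scores)
print(round(q, 4))  # 8.0
```

Here every student orders the three conditions the same way, so the statistic reaches its maximum possible value of n(k − 1) = 8.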

Spearman’s Rank Correlation: Spearman’s rank correlation coefficient offers a non-parametric measure of the strength and direction of association between two variables. It is especially applicable in scenarios where the variables are measured on an ordinal scale or when the relationship between variables is not linear. This method emphasizes the monotonic relationship between variables, providing insights into the data’s behavior beyond linear correlations.
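One way to see what "monotonic rather than linear" means is to compute Spearman's coefficient as an ordinary Pearson correlation applied to ranks. The Python sketch below does exactly that; the function names and data are illustrative assumptions.

```python
import math


def average_ranks(values):
    """Return 1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Pearson correlation computed on the ranks of x and y."""
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sx = math.sqrt(sum((a - mx) ** 2 for a in rx))
    sy = math.sqrt(sum((b - my) ** 2 for b in ry))
    return cov / (sx * sy)


# Hypothetical data: y = x ** 3 is strongly non-linear but perfectly monotonic.
x = [1, 2, 3, 4, 5]
y = [v ** 3 for v in x]
rho = spearman_rho(x, y)
print(round(rho, 6))  # 1.0
```

Because only the ordering matters, any strictly increasing transformation of either variable leaves the coefficient unchanged.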

Kendall’s Tau: Kendall’s Tau is a correlation measure designed to assess the association between two measured quantities. It determines the strength and direction of the relationship, much like Spearman’s rank correlation, but focuses on the concordance and discordance between data points. Kendall’s Tau is particularly useful for data that involves ordinal or ranked variables, providing insight into the monotonic relationship without assuming linearity.
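Concordance and discordance are easiest to grasp by counting pairs directly. The Python sketch below computes the simplest variant, often called tau-a, which divides the difference between concordant and discordant pairs by the total number of pairs. The function name and data are hypothetical, and no adjustment for ties is made (the tau-b variant exists for that purpose).

```python
def kendall_tau_a(x, y):
    """Kendall's tau-a: (concordant - discordant) / total number of pairs."""
    n = len(x)
    concordant = discordant = 0
    for i in range(n):
        for j in range(i + 1, n):
            # The product is positive when both variables order the pair the same way.
            s = (x[i] - x[j]) * (y[i] - y[j])
            if s > 0:
                concordant += 1
            elif s < 0:
                discordant += 1
            # Pairs tied on either variable count as neither.
    return (concordant - discordant) / (n * (n - 1) / 2)


# Hypothetical rankings: only the middle two items are swapped.
x = [1, 2, 3, 4]
y = [1, 3, 2, 4]
tau = kendall_tau_a(x, y)
print(round(tau, 4))  # 0.6667
```

Of the six possible pairs, five are concordant and one is discordant, giving (5 − 1) / 6.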

Chi-square Test: The Chi-square test is a non-parametric statistical tool used to determine whether there is a significant difference between the expected frequencies and the observed frequencies in one or more categories. It is beneficial in categorical data analysis, where the variables are nominal or ordinal, and the data are in the form of frequencies or counts. This test is valuable when evaluating hypotheses on the independence of two variables or the goodness of fit for a particular distribution.
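For the test of independence, the comparison between observed and expected frequencies can be sketched as follows in Python. Expected counts are derived from the row and column totals, and the statistic sums (observed − expected)² / expected over all cells. The function name and the counts are made up for illustration, and no continuity correction is applied.

```python
def chi_square_independence(table):
    """Return (statistic, degrees_of_freedom) for a contingency table of counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand_total = sum(row_totals)
    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count if rows and columns were independent.
            expected = row_totals[i] * col_totals[j] / grand_total
            statistic += (observed - expected) ** 2 / expected
    df = (len(table) - 1) * (len(table[0]) - 1)
    return statistic, df


# Hypothetical 2 x 2 table: rows are groups, columns are outcome categories.
counts = [
    [10, 20],
    [20, 10],
]
statistic, df = chi_square_independence(counts)
print(round(statistic, 4), df)  # 6.6667 1
```

Every expected count here is 15, so each of the four cells contributes 25 / 15 to the total.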

Non-Parametric Statistics Real-World Applications

The practical utility of Non-Parametric Statistics is vast and varied, spanning numerous fields and research disciplines. This section showcases real-world case studies and examples where non-parametric methods have provided insightful solutions to complex problems, highlighting the depth and versatility of these techniques.

Environmental Science: In a study examining the impact of industrial pollution on river water quality, researchers employed the Kruskal-Wallis test to compare the pH levels across multiple sites. This non-parametric method was chosen due to the non-normal distribution of pH levels and the presence of outliers caused by sporadic pollution events. The test revealed significant differences in water quality, guiding policymakers in identifying pollution hotspots.

Medical Research: In a longitudinal study on chronic pain management, the Wilcoxon Signed-Rank Test was employed to assess the effectiveness of a novel therapy compared to conventional treatment. Each patient underwent both treatments in different periods, with pain scores recorded on an ordinal scale before and after each treatment phase. Given the non-normal distribution of differences in pain scores before and after each treatment for the same patient, the Wilcoxon test suited the paired nature of the data. It revealed a significant reduction in pain intensity with the new therapy compared to conventional treatment.

Market Research: A market research firm used Spearman’s Rank Correlation to analyze survey data to understand customer satisfaction across various service sectors. The ordinal ranking of satisfaction levels and the non-linear relationship between service features and customer satisfaction made Spearman’s correlation an ideal choice, uncovering critical drivers of customer loyalty.

Education: In educational research, the Friedman test was utilized to assess the effectiveness of different teaching methods on student performance over time. With data collected from the same group of students under three distinct teaching conditions, the test provided insights into which method led to significant improvements, informing curriculum development.

Social Sciences: Kendall’s Tau was applied in a sociological study to examine the relationship between social media usage and community engagement among youths. Given the ordinal data and the interest in understanding the direction and strength of the association without assuming linearity, Kendall’s Tau offered nuanced insights, revealing a weak but significant negative correlation.

Non-Parametric Statistics Implementation in R

Implementing non-parametric statistical methods in R involves a systematic approach to ensure accurate and ethical analysis. This step-by-step guide will walk you through the process, from data preparation to result interpretation, while emphasizing the importance of data integrity and ethical considerations.

1. Data Preparation:

- Begin by importing your dataset into R using functions like read.csv() for CSV files or read.delim() for tab-delimited data (read.table() also works if you set sep = "\t").

- Perform initial data exploration using functions like summary(), str(), and head() to understand your data’s structure, variables, and any apparent issues like missing values or outliers.

2. Choosing the Right Test:

- Determine the appropriate non-parametric test based on your data type and research question. For two independent samples, consider the Mann-Whitney U test (wilcox.test() function); for paired samples, use the Wilcoxon Signed-Rank test (wilcox.test() with paired = TRUE); for more than two independent groups, use the Kruskal-Wallis test (kruskal.test()); and for correlation analysis, use Spearman’s rank correlation (cor.test() with method = "spearman").

3. Executing the Test:

- Execute the chosen test using its corresponding function. Ensure your data meet the test’s requirements, such as being correctly ranked or categorized.

- For example, to run a Mann-Whitney U test, use wilcox.test(group1, group2), replacing group1 and group2 with your actual data vectors.

4. Result Interpretation:

- Carefully interpret the output, paying attention to the test statistic and p-value. A p-value less than your significance level (commonly 0.05) indicates a statistically significant difference or correlation.

- Consider the effect size and confidence intervals to assess the practical significance of your findings.
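To make the meaning of the p-value in this step concrete, the sketch below computes an exact two-sided p-value for the Mann-Whitney U statistic by enumerating every way the pooled ranks could be split between the two groups. It is written in plain Python purely for illustration and is not how R's wilcox.test() is implemented; the function names and data are hypothetical, and full enumeration is only practical for small samples.

```python
from itertools import combinations


def average_ranks(values):
    """Return 1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def exact_mann_whitney_p(x, y):
    """Two-sided exact p-value: share of group splits at least as extreme as observed."""
    ranks = average_ranks(list(x) + list(y))
    n1, n2 = len(x), len(y)
    center = n1 * n2 / 2  # value of U expected under the null hypothesis

    def u_for(indices):
        return sum(ranks[i] for i in indices) - n1 * (n1 + 1) / 2

    observed = abs(u_for(range(n1)) - center)
    splits = list(combinations(range(n1 + n2), n1))
    extreme = sum(1 for idx in splits if abs(u_for(idx) - center) >= observed - 1e-12)
    return extreme / len(splits)


# Hypothetical data with complete separation between the groups.
x = [1.1, 2.3, 3.5]
y = [4.2, 5.0, 6.8, 7.1]
p_value = exact_mann_whitney_p(x, y)
print(round(p_value, 4))  # 0.0571
```

Even with complete separation, only 2 of the 35 possible splits are this extreme, so the smallest attainable two-sided p-value for groups of 3 and 4 is 2/35. With samples this small, a result above 0.05 says more about the sample size than about the size of the effect.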

5. Data Integrity and Ethical Considerations:

- Ensure data integrity by double-checking data entry, handling missing values appropriately, and conducting outlier analysis.

- Maintain ethical standards by respecting participant confidentiality, obtaining necessary permissions for data use, and reporting findings honestly without data manipulation.

6. Reporting:

- When documenting your analysis, include a detailed methodology section that outlines the non-parametric tests used, reasons for their selection, and any data preprocessing steps.

- Present your results using visual aids like plots or tables where applicable, and discuss the implications of your findings in the context of your research question.

Throughout this article, we have underscored the significance and value of non-parametric statistics in data analysis. These methods enable us to approach data sets with unknown or non-normal distributions, providing genuine insights and unveiling the truth and beauty hidden within the data. We encourage readers to maintain an open mind and a steadfast commitment to uncovering authentic insights when applying statistical methods to their research and projects. We invite you to explore the potential of non-parametric statistics in your endeavors and to share your findings with the scientific and academic community, contributing to the collective enrichment of knowledge and the advancement of science.


Frequently Asked Questions (FAQs)

Q1: What Are Non-Parametric Statistics? Non-parametric statistics are methods that don’t assume the data come from a specific distribution. They are used when data doesn’t meet the assumptions of parametric tests.

Q2: Why Choose Non-Parametric Methods? They offer flexibility in analyzing data with unknown distributions or small sample sizes, providing a more ethical approach to data analysis.

Q3: What Is the Mann-Whitney U Test? It’s a non-parametric test for assessing whether two independent samples come from the same distribution, especially useful when data doesn’t meet normality assumptions.

Q4: How Do Non-Parametric Methods Enhance Data Integrity? By not imposing strict assumptions on data, non-parametric methods respect the natural form of data, leading to more truthful insights.

Q5: Can Non-Parametric Statistics Handle Outliers? Yes, non-parametric statistics are less sensitive to outliers, making them suitable for datasets with extreme values.

Q6: What Is the Kruskal-Wallis Test? This test is a non-parametric method for comparing more than two independent samples, appropriate when the ANOVA assumptions are not met.

Q7: How Does Spearman’s Rank Correlation Work? Spearman’s rank correlation measures the strength and direction of association between two ranked variables, ideal for non-linear relationships.

Q8: What Are the Real-World Applications of Non-Parametric Statistics? They are widely used in fields like environmental science, education, and medicine, where data may not follow standard distributions.

Q9: What Are the Benefits of Using Non-Parametric Statistics in Data Analysis? They provide a more inclusive data analysis, accommodating various data types and distributions and revealing deeper insights.

Q10: How to Get Started with Non-Parametric Statistical Analysis? Begin by understanding the nature of your data and choosing appropriate non-parametric methods that align with your analysis goals.


Nonparametric statistics

Nonparametric methods are used to make inferences about infinite dimensional parameters in statistical models.

Group members

- Pierre Lafaye De Micheaux

- Gery Geenens

- Spiridon Penev

When very precise knowledge about a distribution function, a curve or a surface is available, parametric methods are used to identify it from a noisy data set. When the knowledge is much less precise, non-parametric methods are used. In such situations, the modelling assumption is that the curve or surface belongs to a certain class of functions. Using the limited information in the noisy data, the statistician attempts to identify the function from the class that “best” fits the data. Typically, the function is expanded in a suitable basis, and the coefficients of the expansion up to a certain order are estimated from the data. Depending on the basis used, one ends up with kernel methods, wavelet methods, spline-based methods, Edgeworth expansions etc. The semi-parametric approach to inference is also widely used in cases where the main component of interest is parametric but a non-parametric “nuisance” component is involved in the model specification.
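The basis-expansion idea can be illustrated with a minimal sketch of an orthogonal series density estimator. Everything specific here is an assumption made for illustration: the cosine basis on [0, 1], the truncation order of 8, the simulated Beta(2, 5) sample, and the helper names `cosine_basis`, `fit_series_density` and `series_density`. It is not the group's own method.

```python
import math
import random

def cosine_basis(j, x):
    """Orthonormal cosine basis on [0, 1]: phi_0 = 1, phi_j = sqrt(2) cos(j pi x)."""
    return 1.0 if j == 0 else math.sqrt(2.0) * math.cos(j * math.pi * x)

def fit_series_density(sample, order):
    """Estimate each expansion coefficient by the sample mean of its basis function."""
    n = len(sample)
    return [sum(cosine_basis(j, x) for x in sample) / n for j in range(order + 1)]

def series_density(coeffs, x):
    """Evaluate the truncated expansion at x."""
    return sum(c * cosine_basis(j, x) for j, c in enumerate(coeffs))

random.seed(0)
# Hypothetical data: Beta(2, 5) draws, a smooth density supported on [0, 1].
sample = [random.betavariate(2, 5) for _ in range(2000)]
coeffs = fit_series_density(sample, order=8)

# Evaluate on a grid and check the estimate integrates to about one (trapezoid rule).
grid = [i / 200 for i in range(201)]
estimate = [series_density(coeffs, x) for x in grid]
integral = sum((estimate[i] + estimate[i + 1]) / 2 / 200 for i in range(200))
print(round(integral, 6), round(series_density(coeffs, 0.2), 3))
```

The truncation order plays the role of the tuning parameter: a low order oversmooths, a high order lets sampling noise into the estimate.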

The group has strengths in:

Wavelet methods in non-parametric inference. These include applications in density estimation, non-parametric regression and signal analysis. Wavelets are specific orthonormal bases used for series expansions of curves that exhibit local irregularities and abrupt changes. When estimating spatially inhomogeneous curves, wavelet methods outperform traditional nonparametric methods. We have focused on improving the flexibility of wavelet methods in curve estimation. We are looking for a finer balance between the stochastic and approximation terms in the mean integrated squared error decomposition. We are also working on data-based recommendations for choosing the tuning parameters in the wavelet-based estimation procedure, and on modifying the wavelet estimators so that they satisfy additional shape constraints such as non-negativity, integral equal to one etc. Another interesting application is in choosing multiple sampling rates for economic sampling of signals. The technique involves increasing the sampling rate when high-frequency terms are incorporated in the wavelet estimator, and decreasing it when signal complexity is judged to have decreased. The size of the wavelet coefficients at suitable resolution levels is used in deciding how and when to switch rates. Wavelets have also found wide-ranging applications in image analysis.
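As a rough illustration of why wavelets suit curves with abrupt changes, the following sketch denoises a step signal by hard-thresholding Haar wavelet coefficients. The Haar basis, the simulated signal, the noise level (assumed known) and the universal threshold sigma * sqrt(2 log n) are all assumptions chosen for brevity, not the estimators studied by the group.

```python
import math
import random

def haar_forward(signal):
    """Orthonormal Haar transform of a list whose length is a power of two."""
    approx = list(signal)
    details = []  # detail coefficients, finest level first
    while len(approx) > 1:
        pairs = [(approx[i], approx[i + 1]) for i in range(0, len(approx), 2)]
        details.append([(a - b) / math.sqrt(2.0) for a, b in pairs])
        approx = [(a + b) / math.sqrt(2.0) for a, b in pairs]
    return approx[0], details

def haar_inverse(scaling, details):
    """Invert haar_forward, rebuilding from the coarsest level down."""
    approx = [scaling]
    for level in reversed(details):
        rebuilt = []
        for s, d in zip(approx, level):
            rebuilt.append((s + d) / math.sqrt(2.0))
            rebuilt.append((s - d) / math.sqrt(2.0))
        approx = rebuilt
    return approx

def hard_threshold(details, threshold):
    """Zero every detail coefficient whose magnitude does not exceed the threshold."""
    return [[d if abs(d) > threshold else 0.0 for d in level] for level in details]

def mse(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)

random.seed(1)
n = 256
sigma = 0.3  # noise standard deviation, assumed known in this sketch
truth = [0.0 if i < 100 else 2.0 for i in range(n)]  # hypothetical step signal
noisy = [v + random.gauss(0.0, sigma) for v in truth]

scaling, details = haar_forward(noisy)
threshold = sigma * math.sqrt(2.0 * math.log(n))  # universal threshold
denoised = haar_inverse(scaling, hard_threshold(details, threshold))
print(mse(noisy, truth), mse(denoised, truth))
```

Only the few coefficients that straddle the jump survive thresholding, which is how the estimator keeps the discontinuity sharp while removing most of the noise.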

Edgeworth and Saddlepoint approximations for densities and tail-area probabilities. Although asymptotic in spirit with respect to the sample size, they give accurate approximations even down to a sample size of one. Similar in spirit to the Saddlepoint approximation is the Wiener germ approximation, which we have applied to approximating the non-central chi-square distribution and its quantiles. When a saddlepoint approximation is “inverted”, it can be used for quantile evaluation as an alternative to the Cornish-Fisher method. Quantile evaluation has become an important area of research because of its application in financial risk evaluation.
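To give a feel for how an Edgeworth correction improves on the plain normal approximation at small sample sizes, here is a minimal sketch for a deliberately simple statistic: the standardized mean of n = 15 independent Exp(1) observations, whose exact distribution is available in closed form (an Erlang distribution). The choice of statistic and the function names are assumptions for illustration.

```python
import math

def normal_pdf(x):
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)

def normal_cdf(x):
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

def edgeworth_cdf(x, n, skewness):
    """One-term Edgeworth approximation to the CDF of a standardized mean."""
    correction = skewness * (x * x - 1.0) / (6.0 * math.sqrt(n))
    return normal_cdf(x) - normal_pdf(x) * correction

def exact_cdf_exponential_mean(x, n):
    """Exact CDF of (S_n - n) / sqrt(n), where S_n is a sum of n Exp(1) variables."""
    s = n + x * math.sqrt(n)
    if s <= 0.0:
        return 0.0
    term = math.exp(-s)  # Erlang CDF: 1 - sum_{k<n} e^{-s} s^k / k!
    total = term
    for k in range(1, n):
        term *= s / k
        total += term
    return 1.0 - total

n = 15
skewness = 2.0  # skewness of the Exp(1) distribution
grid = [i / 10 for i in range(-30, 31)]
clt_error = max(abs(normal_cdf(x) - exact_cdf_exponential_mean(x, n)) for x in grid)
edgeworth_error = max(
    abs(edgeworth_cdf(x, n, skewness) - exact_cdf_exponential_mean(x, n)) for x in grid
)
print(clt_error, edgeworth_error)
```

The skewness term is what the normal approximation ignores, and for a strongly skewed parent distribution it accounts for most of the error at this sample size.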

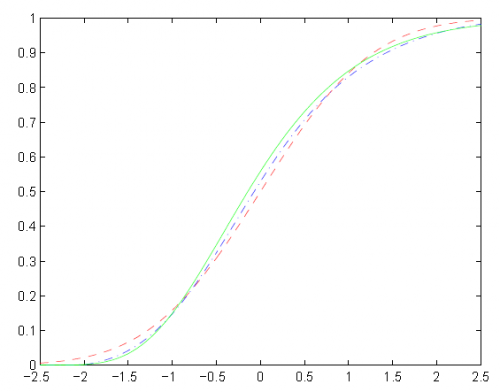

(Higher order) Edgeworth expansions deliver better approximations to the limiting distribution of a statistic than the first-order approximations delivered by the Central Limit Theorem. They are beneficial when sample sizes are small to moderate. The graph shows results of the approximation of the distribution of a kernel estimator of the 0.9-quantile of the standard exponential distribution based on 15 observations only. The true distribution: continuous line, one-term Edgeworth approximation: dot-dashed line, Central Limit Theorem-based normal approximation: dashed line.

Nonparametric and semi-parametric inference in regression, density estimation and in inference about copulae. Modern regression models are often semiparametric. Here the influence of some linear predictors is combined with the influence of other covariates such as time, spatial position etc. The influence of the latter is modelled non-parametrically, resulting in a flexible semiparametric model. Issues about efficient estimation of the parametric component are of interest.

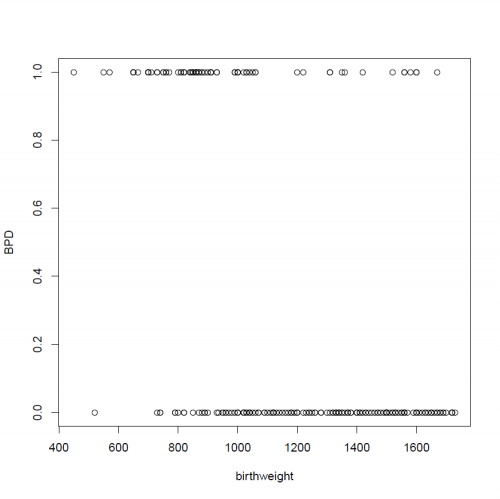

Non-parametric binary regression. When the response in a regression problem is binary (that is, it can only take on the values 0 and 1), the regression function is just the conditional probability that Y takes the value 1 given X. In that case, the panacea seems to be to fit a logistic model (or sometimes a probit model). However, these models are built on strong parametric constraints, whose validity is evidently rarely checked. The binary nature of the response indeed makes the usual visual tools for checking model adequacy (scatter plots, residual plots, etc.) unavailable. Blindly fitting a logistic model is thus just the same thing as fitting a linear regression model without looking at the scatter plot! To prevent model misspecification, and hence misleading conclusions with serious consequences, non-parametric methods may be used. In the example to the left, the model is meant to predict the probability that a newborn is affected by Bronchopulmonary dysplasia (BPD) (a chronic lung disease particularly affecting premature babies), as a function of the baby's birth weight. Obviously, the “scatter-plot” of the available observations is not informative (there is no shape in the cloud of points, if this can be called a cloud of points). Based on a logistic (red) or a probit (blue) model, we would conclude that the probability of being affected is very low for babies with birth weight higher than 1400 g. This is however not what the data suggest! The non-parametric estimate (green) clearly shows that this probability remains approximately constant (around 0.15) for birth weight above 1300 g.
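One simple way to estimate a conditional probability without a parametric link is a kernel-weighted local average of the 0/1 responses (a Nadaraya-Watson estimator). The sketch below is only an illustration under stated assumptions: the data are simulated, not the BPD data set; the "true" curve with a plateau at 0.15 above 1300 g is made up to mimic the pattern described above; and the Gaussian kernel and 100 g bandwidth are arbitrary choices. The page does not say which non-parametric estimator produced the green curve.

```python
import math
import random

def gaussian_kernel(u):
    return math.exp(-0.5 * u * u)

def nw_probability(x0, xs, ys, bandwidth):
    """Nadaraya-Watson estimate of P(Y = 1 | X = x0) from binary responses ys."""
    weights = [gaussian_kernel((x0 - x) / bandwidth) for x in xs]
    return sum(w * y for w, y in zip(weights, ys)) / sum(weights)

def hypothetical_true_probability(weight_g):
    """Made-up curve: linear decline, then a plateau at 0.15 from 1300 g upward."""
    return max(0.15, 0.95 - 0.8 * (weight_g - 400.0) / 900.0)

random.seed(2)
# Simulated birth weights (grams) and binary outcomes.
weights_g = [random.uniform(400.0, 1700.0) for _ in range(3000)]
outcomes = [
    1 if random.random() < hypothetical_true_probability(w) else 0 for w in weights_g
]

for w in (500.0, 1000.0, 1600.0):
    print(w, round(nw_probability(w, weights_g, outcomes, bandwidth=100.0), 3))
```

Because the estimate at each point is a weighted proportion, it always lies between 0 and 1, and it can follow a plateau that a logistic or probit curve would be forced to push towards zero.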

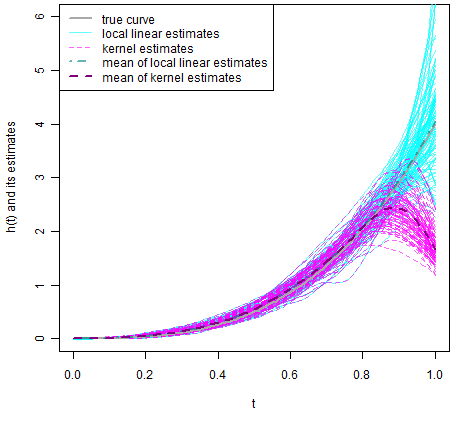

Nonparametric methods in counting process intensity function estimation. In such areas as medical statistics, seismology, insurance, finance, and engineering, a very useful statistical model is the counting process, which is just a stochastic process that registers the number of “interesting” events that have happened up to any specified time point. Some examples of the events of interest include death of a study subject, failure of a human organ or a part of an industrial product, infection by an infectious disease, relapse of a disease, recovery from a disease, earthquake, trade of a stock, update of a stock index level, default on payment of credit card bills, default of a bond issuer, and firing of an excited neural cell. In applications of counting processes, a relevant concept is the intensity function of the counting process, which plays an important role in understanding the underlying mechanism that generates the events of interest and in predicting when the event will occur or reoccur, or how many events are to be expected in a specified interval. In different applications, the intensity function goes by different names, such as hazard rate function in survival data analysis and reliability theory, mortality rate function in actuarial science, and infection rate in epidemiology. When not enough prior knowledge about the specific form of the intensity function is available for the statistician to use a parametric method, one of several nonparametric methods can be used, such as the kernel smoothing method, the roughness penalty method, the spline method, the wavelet shrinkage method, and the local polynomial method. The local polynomial method has been demonstrated to retain the computational ease of the kernel method while not suffering from the boundary effects of the kernel method. For instance, the above figure shows the estimates of a specific hazard rate function based on 100 simulated right-censored survival data sets using both the kernel method and the local linear method. It is clear that the local linear method outperforms the kernel method.
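The kernel smoothing method for a hazard rate can be sketched as smoothing the jumps of the Nelson-Aalen cumulative hazard estimator. The code below is a minimal illustration on simulated right-censored data; the Epanechnikov kernel, the bandwidth, the exponential lifetimes (true hazard equal to 1) and the censoring rate are all assumptions. It implements only the plain kernel method, not the local linear method, so it would show the boundary effects mentioned above if evaluated within one bandwidth of time zero.

```python
import random

def epanechnikov(u):
    return 0.75 * (1.0 - u * u) if abs(u) < 1.0 else 0.0

def kernel_hazard(t, times, events, bandwidth):
    """Kernel-smoothed Nelson-Aalen increments: each observed event contributes
    K((t - T_i) / b) / (b * number at risk at T_i). Assumes no tied times."""
    order = sorted(range(len(times)), key=lambda i: times[i])
    n = len(times)
    estimate = 0.0
    for rank, i in enumerate(order):
        at_risk = n - rank  # subjects still under observation just before times[i]
        if events[i]:
            estimate += epanechnikov((t - times[i]) / bandwidth) / (bandwidth * at_risk)
    return estimate

random.seed(3)
n = 2000
lifetimes = [random.expovariate(1.0) for _ in range(n)]   # true hazard is 1
censoring = [random.expovariate(0.5) for _ in range(n)]   # hypothetical censoring
times = [min(l, c) for l, c in zip(lifetimes, censoring)]
events = [l <= c for l, c in zip(lifetimes, censoring)]   # True if death observed

print(round(kernel_hazard(1.0, times, events, bandwidth=0.4), 3))
```

Censored subjects never add a jump, but they stay in the risk set until their censoring time, which is how the estimator uses the partial information they carry.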

Functional data analysis. Functional data analysis has recently become a very hot topic in statistical research, as recent technological progress in measuring devices now allows one to observe spatio-temporal phenomena on arbitrarily fine grids, that is, almost in a continuous manner. The infinite dimension of those functional objects often poses a problem, though. There is thus a clear need for efficient functional data analysis methods. That said, as with the binary regression problem, the infinite dimension of the object under consideration makes any graphical representation inconceivable, and with it any visual guide for specifying and validating a model. The risk of misspecification is thus even higher here than in classical data analysis, which motivates the use of non-parametric techniques. These have however to be carefully thought through, as infinite-dimensional functional methods are affected by a severe version of the infamous “Curse of Dimensionality”.

Nonparametric Perspective of Deep Learning

Degree type: Doctor of Philosophy

Campus location: West Lafayette


Developing nonparametric statistical methods and inference procedures for high-dimensional large data has been a challenging frontier problem of statistics. To attack this problem, in recent years, a clear rising trend has been observed with a radically different viewpoint, “Graph-based Nonparametrics,” which is the main

The History of Nonparametric Statistical Analysis. John Arbuthnott, a Scottish mathematician and physician, was the first to introduce nonparametric analytical methods in 1710. He performed a statistical analysis similar to the sign test used today in his paper "An Argument for divine providence, taken from the constant regularity observ'd in the Births of both sexes."
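For readers unfamiliar with the sign test mentioned here, a minimal sketch of its modern exact two-sided form follows. The paired differences are hypothetical numbers made up for illustration, and the function name `sign_test_p_value` is an assumption. Under the null hypothesis of a zero median, the number of positive differences follows a Binomial(n, 1/2) distribution.

```python
import math

def sign_test_p_value(differences):
    """Two-sided exact sign test for a zero median of paired differences."""
    nonzero = [d for d in differences if d != 0]  # zero differences are dropped
    n = len(nonzero)
    positives = sum(1 for d in nonzero if d > 0)
    k = min(positives, n - positives)
    # Probability of a split at least this lopsided in one direction.
    tail = sum(math.comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2.0 * tail)

# Hypothetical paired differences: 8 positive, 2 negative.
differences = [1.2, 0.8, -0.3, 2.1, 0.5, 1.7, 0.9, -0.1, 1.4, 0.6]
print(sign_test_p_value(differences))  # 0.109375
```

Only the signs of the differences enter the calculation, so the test needs no assumption about the shape of the underlying distribution.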

Doctor of Philosophy in Economics and Statistics Abstract This thesis consists of three chapters. In each chapter I consider a particular problem ... nonparametric identification and estimation of treatment effects is possible in this setting. I present novel nonparametric identification results that motivate simple and `well-posed' nonparametric ...

Department of Statistics have examined a dissertation entitled Inference on nonparametric targets and discrete structures presented by Lu Zhang candidate for the degree of Doctor of Philosophy and hereby certify that it is worthy of acceptance. Signature _____ Typed name: Prof. Lucas Janson

Nonparametric predictive inference (NPI) has been developed for a range of data types, and for a variety of applications and problems in statistics. In this thesis, further theory will be developed on NPI for multiple future observations, with attention to order statistics. The present thesis consists of three main, related contributions. First, new probabilistic theory is presented on NPI for ...

Thoroughly revised and updated, the new edition of Nonparametric Statistical Methods includes additional modern topics and procedures, more practical data sets, and new problems from real-life situations. The book continues to emphasize the importance of nonparametric methods as a significant branch of modern statistics and equips readers with ...

Dear Colleagues, You are kindly invited to contribute to this Special Issue on "Nonparametric Statistical Methods and Their Applications" with an original research paper or a comprehensive review. The main focus is on new theoretical proposals, applications and/or computational aspects of nonparametric statistical methods.

Abstract. Preface The subject of Nonparametric statistics is statistical inference applied to noisy observations of infinite-dimensional "parameters" like images and time-dependent signals. This ...

Wolfowitz 1942 was written by the first researcher to formally coin the term nonparametric as an alternative framework to parametric statistics. Early advances in nonparametric testing were most commonly applied to analyze the frequency of nominal or categorical data, thus the chi-square test was first evaluated in Pearson 1900 to calculate the ...

PhD students (in Statistics) at Columbia University. The choice of topics is very eclectic and mostly reflects: (a) my background and research interests, and (b) some of the topics I wanted to learn more systematically in 2016. The first part of these lecture notes is on nonparametric function estimation: density

As implied by the name, nonparametric statistics are not based on the parameters of the normal curve. Therefore, if your data violate the assumptions of a usual parametric test and nonparametric statistics might better define the data, try running the nonparametric equivalent of the parametric test. ... Your thesis/dissertation proposal provides an