
- Data Descriptor

- Open access

- Published: 11 September 2023

A high-resolution daily global dataset of statistically downscaled CMIP6 models for climate impact analyses

Solomon Gebrechorkos (ORCID: 0000-0001-7498-0695), Julian Leyland, Louise Slater (ORCID: 0000-0001-9416-488X), Michel Wortmann, Philip J. Ashworth, Georgina L. Bennett (ORCID: 0000-0002-4812-8180), Richard Boothroyd (ORCID: 0000-0001-9742-4229), Hannah Cloke, Pauline Delorme (ORCID: 0000-0002-5865-714X), Helen Griffith, Richard Hardy, Laurence Hawker (ORCID: 0000-0002-8317-7084), Stuart McLelland, Jeffrey Neal (ORCID: 0000-0001-5793-9594), Andrew Nicholas, Andrew J. Tatem (ORCID: 0000-0002-7270-941X), Ellie Vahidi, Daniel R. Parsons (ORCID: 0000-0002-5142-4466) & Stephen E. Darby (ORCID: 0000-0001-8778-4394)

Scientific Data volume 10, Article number: 611 (2023)


- Climate and Earth system modelling

- Projection and prediction

A large number of historical simulations and future climate projections are available from Global Climate Models (GCMs), but these are typically of coarse resolution, which limits their effectiveness for assessing local-scale changes in climate and attendant impacts. Here, we use a novel statistical downscaling model capable of replicating extreme events, the Bias Correction Constructed Analogues with Quantile mapping reordering (BCCAQ), to downscale daily precipitation, air temperature, maximum and minimum temperature, wind speed, air pressure, and relative humidity from 18 GCMs from the Coupled Model Intercomparison Project Phase 6 (CMIP6). BCCAQ is calibrated using high-resolution reference datasets and performed well in removing bias from GCMs and reproducing extreme events. The globally downscaled data are available at the Centre for Environmental Data Analysis ( https://doi.org/10.5285/c107618f1db34801bb88a1e927b82317 ) for the historical (1981–2014) and future (2015–2100) periods at 0.25° resolution and a daily time step across three Shared Socioeconomic Pathways (SSP2-4.5, SSP5-3.4-OS and SSP5-8.5). This new climate dataset will be useful for assessing future changes and variability in climate and for driving high-resolution impact assessment models.


Background & Summary

A large number of climate projections are available from Global Climate Models (GCMs), but these projections typically have a relatively coarse spatial resolution (~1–3°) and large biases and uncertainties. GCM data are used to understand and assess potential changes and variability in climate and climate extremes at a global scale 1 , 2 , 3 , but their coarse resolution makes them unsuitable for direct use in impact assessment studies or for decision-making at a local scale 4 , 5 , 6 , 7 . GCMs are also known to have large biases and uncertainties in representing historical and future climate, especially for extreme events, and these biases and uncertainties increase from the global to the local scale 8 , 9 , 10 . Together, the coarse spatial resolution and the large bias and uncertainty of GCMs currently limit their applicability for the local-scale climate studies that are most meaningful for impact assessments 4 , 5 , 10 . Robust climate data with high spatial and temporal resolution are therefore urgently needed to assess the impacts of climate change on critical sectors such as agriculture, water resources and energy 4 , 5 , 11 .

To produce high-resolution climate data from GCMs and to reduce their biases, a number of statistical and dynamical downscaling techniques have been developed 12 , 13 . Regional Climate Models (RCMs) are dynamical models that use local information such as topography to produce high-resolution climate data from GCMs (e.g., the Coordinated Regional Climate Downscaling Experiment; CORDEX 14 ). However, RCMs suffer from large biases, errors and sensitivity to the boundary conditions of the driving GCMs, which limits their application for local-scale impact assessments 15 , 16 . In addition, dynamical models are computationally expensive and require large data storage and long processing times 13 , 15 , 16 , 17 , 18 . In contrast, statistical downscaling can provide a resolution equivalent to that of dynamical downscaling with much lower resource and computational demands 1 , 19 . Statistical downscaling models are known to significantly reduce biases in individual GCMs and in the ensemble means of multiple GCMs at a local scale 6 . Statistical methods establish a relationship between observed and model data during a historical period (e.g., 1981–2014) and then apply this relationship to downscale and bias-correct future climate variables. These methods assume that the historical link between local-scale and large-scale climate variables will remain relatively constant in the future period 13 , 19 . Given their simplicity and computational advantages, statistical methods are widely used in studies of climate change and variability, hydro-climate extremes and impact assessment at regional and local scales in sectors such as agriculture, energy and water resources 16 , 20 , 21 , 22 , 23 , 24 .
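
The calibrate-then-apply idea can be illustrated with plain empirical quantile mapping at a single grid cell. The sketch below is a minimal Python illustration under simplifying assumptions (one variable, no seasonal windows, no dry-day handling); it is not BCCAQ or the ClimDown implementation, and `interp`, `plotting_positions` and `EmpiricalQuantileMapper` are hypothetical names.

```python
import bisect


def interp(x, xp, fp):
    """Piecewise-linear interpolation of x on ascending xp -> fp, clamped at both ends."""
    if x <= xp[0]:
        return fp[0]
    if x >= xp[-1]:
        return fp[-1]
    j = bisect.bisect_right(xp, x)  # guarantees xp[j - 1] <= x < xp[j]
    x0, x1, y0, y1 = xp[j - 1], xp[j], fp[j - 1], fp[j]
    return y0 + (y1 - y0) * (x - x0) / (x1 - x0)


def plotting_positions(n):
    """Non-exceedance probabilities 0, 1/(n-1), ..., 1 for n sorted values."""
    if n < 2:
        raise ValueError("need at least two calibration values")
    return [i / (n - 1) for i in range(n)]


class EmpiricalQuantileMapper:
    """Hypothetical helper: calibrate on a historical period, then correct any period."""

    def __init__(self, obs_hist, model_hist):
        self.obs = sorted(obs_hist)
        self.mod = sorted(model_hist)
        self.p_obs = plotting_positions(len(self.obs))
        self.p_mod = plotting_positions(len(self.mod))

    def correct(self, model_values):
        out = []
        for x in model_values:
            p = interp(x, self.mod, self.p_mod)          # quantile of x in the historical model CDF
            out.append(interp(p, self.p_obs, self.obs))  # observed value at that quantile
        return out


# Usage: the model runs 1 unit too low during the calibration period.
mapper = EmpiricalQuantileMapper(obs_hist=range(1, 11), model_hist=range(0, 10))
print(mapper.correct([0, 4.5, 9, 12]))  # approximately [1, 5.5, 10, 10]
```

The last value shows a design limitation: a projected value beyond the calibration range is capped at the largest observed value, so plain quantile mapping cannot represent changes outside the historical distribution. Delta-preserving variants such as QDM are designed to address this.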

During the last few decades, several statistical downscaling methods have been introduced and used in impact studies, such as Bias Correction Constructed Analogues with Quantile mapping reordering (BCCAQ) 25 , 26 , Quantile Delta Mapping (QDM) 25 , the Statistical Downscaling Model (SDSM) 19 , bias correction spatial disaggregation (BCSD) 27 , the climate imprint delta method (CI) 28 , the bias-corrected climate imprint delta method (BCCI) 28 , and the equidistant cumulative distribution function method (EDCDF) 29 . Here, we used the BCCAQ gridded statistical downscaling method to develop daily high-resolution climate datasets globally from 18 CMIP6 (Coupled Model Intercomparison Project Phase 6) models across three Shared Socioeconomic Pathway (SSP) scenarios. Compared with other gridded downscaling techniques such as BCSD, CI and BCCI, BCCAQ has been shown to perform better when the downscaled variables are used to simulate hydrological extremes 26 . BCCAQ has been used to develop high-resolution climate datasets for assessing climate extremes in British Columbia 30 and for climate change impact assessment studies 31 , 32 , but it has not previously been applied globally. In addition, our new dataset 33 provides high-resolution data for seven frequently used variables (Table 1) downscaled from 18 GCMs and 3 scenarios, whereas ClimateImpactLab/downscaleCMIP6 34 provides only temperature and precipitation, based on the Quantile Delta Mapping (QDM) method. Similarly, the NASA global downscaled projections 35 use the BCSD method and do not include air pressure, which is required by most hydrological models 36 , 37 . The Inter-Sectoral Impact Model Intercomparison Project (ISIMIP, https://www.isimip.org/ ) has also developed downscaled and bias-corrected climate data from CMIP6 models, but at a relatively coarse spatial resolution (0.5°).
Our high-resolution (0.25°) daily climate dataset will be useful for assessing changes and variability in the climate and for driving a range of impact assessment models, including hydrological models incorporating analysis of extreme events. The new dataset is freely available to download from the Centre for Environmental Data Analysis (CEDA; https://doi.org/10.5285/c107618f1db34801bb88a1e927b82317 ) 33 .

Data acquisition

Gridded high-resolution bias-corrected meteorological datasets were obtained from GloH2O ( http://www.gloh2o.org/mswx/ ) to calibrate the downscaling model over the historical period (1981–2014). GloH2O provides daily and sub-daily meteorological datasets (Multi-Source Weather; MSWX), including mean temperature, maximum and minimum temperature, surface pressure, relative humidity and wind speed, at a spatial resolution of 0.1° for the period 1979–present 38 . MSWX is derived from multiple observational data sources using downscaling and bias-correction methods. For example, average air temperature is derived by resampling the Climatologies at High resolution for the Earth’s Land Surface Areas (CHELSA) 39 dataset to 0.1° and correcting it with the climatology of the Climatic Research Unit Time Series (CRU TS) data 38 . For precipitation, Multi-Source Weighted-Ensemble Precipitation (MSWEP), available from GloH2O ( www.gloh2o.org/mswep ), is used. MSWEP is developed by blending multiple sources, including ground observations, satellite and reanalysis datasets 40 , 41 , and has been shown to represent extreme events better than other satellite-based precipitation datasets 42 . MSWEP incorporates data from more than 77,000 gauges in the Global Historical Climatology Network Daily (GHCNd), the Global Summary of the Day (GSOD) and the Global Precipitation Climatology Centre (GPCC); remote sensing-based precipitation products such as the Climate Prediction Center morphing technique (CMORPH), the Tropical Rainfall Measuring Mission (TRMM) Multi-satellite Precipitation Analysis (TMPA) and the Global Satellite Mapping of Precipitation (GSMaP); and reanalysis data from the Japanese 55-year Reanalysis (JRA-55) and the European Centre for Medium-Range Weather Forecasts (ECMWF) interim reanalysis (ERA-Interim). MSWEP and MSWX were bilinearly interpolated to 0.25° for the downscaling process.
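
Bilinear interpolation weights the four source cells surrounding a target point by their distance to it. The following minimal pure-Python sketch assumes a regular, strictly ascending latitude-longitude grid (for instance 0.1° source cell centres interpolated onto 0.25° target centres); the function names are hypothetical, and the sketch ignores longitude wrap-around and missing values. Production workflows typically use a regridding tool instead, such as CDO's `remapbil` operator.

```python
import bisect


def bracket(axis, x):
    """Return (i, t) such that x lies between axis[i] and axis[i + 1] at fraction t."""
    if not axis[0] <= x <= axis[-1]:
        raise ValueError(f"{x} is outside the source axis [{axis[0]}, {axis[-1]}]")
    i = min(bisect.bisect_right(axis, x) - 1, len(axis) - 2)
    return i, (x - axis[i]) / (axis[i + 1] - axis[i])


def bilinear(src_lats, src_lons, field, lat, lon):
    """Bilinear interpolation of field[lat_index][lon_index] at one (lat, lon) point."""
    i, u = bracket(src_lats, lat)
    j, v = bracket(src_lons, lon)
    return ((1 - u) * (1 - v) * field[i][j] + (1 - u) * v * field[i][j + 1]
            + u * (1 - v) * field[i + 1][j] + u * v * field[i + 1][j + 1])


def regrid(src_lats, src_lons, field, dst_lats, dst_lons):
    """Interpolate a source grid onto target cell centres (rows are latitudes)."""
    return [[bilinear(src_lats, src_lons, field, la, lo) for lo in dst_lons]
            for la in dst_lats]


# Usage: 0.1-degree cell centres onto 0.25-degree cell centres over a small box.
src = [round(0.05 + 0.1 * k, 2) for k in range(10)]
field = [[2 * la + 3 * lo for lo in src] for la in src]
print(regrid(src, src, field, [0.125, 0.375], [0.125, 0.375]))
```

Because bilinear interpolation reproduces any field that is linear in latitude and longitude exactly, such a field is a convenient sanity check for an implementation.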

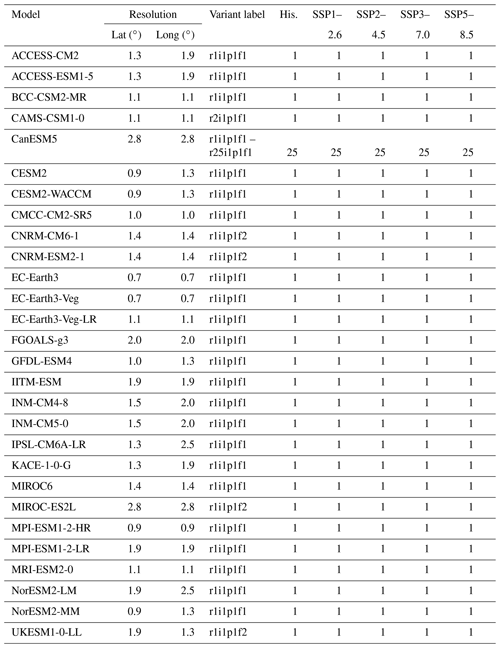

Herein we chose to assess three future (2015–2100) climate projections based on the latest Shared Socioeconomic Pathway (SSP) scenarios outlined in the IPCC Sixth Assessment Report 43 . SSP2-4.5 represents a commonly used lower bound of warming, whereby a ‘middle of the road’ SSP is selected that keeps CO2 emissions relatively low. In contrast, SSP5-8.5 represents a high-emissions SSP that is reliant upon fossil fuels 44 , although it is now considered unlikely 45 . In addition, we chose to downscale SSP5-3.4-OS, an ‘overshoot scenario’ in which emissions follow a worst-case trajectory until 2040 before decreasing rapidly as a result of mitigation 44 . The historical and future climate data from the CMIP6 models were obtained from the Centre for Environmental Data Analysis (CEDA, https://esgf-index1.ceda.ac.uk/projects/cmip6-ceda/ ). We selected 18 GCMs based on the availability of daily data for precipitation, temperature, maximum and minimum temperature, air pressure, relative humidity and wind speed (Table 1). These variables were selected because they are frequently used in environmental impact assessment models, notably hydrological models 36 , 37 .

Downscaling process

The statistical downscaling model used here to develop high-resolution climate data globally is Bias Correction Constructed Analogues with Quantile mapping reordering (BCCAQ 25 , 26 ). This hybrid model combines Bias Correction Constructed Analogues (BCCA 31 ) and Bias Correction Climate Imprint (BCCI 28 ) to produce daily climate variables, and effectively replicates extreme events and spatial covariance 26 . By combining different downscaling techniques, BCCAQ replicates extreme events, spatial covariance and daily sequencing more effectively than a single method 30 . The BCCI method interpolates the coarse climate model data to a finer resolution and bias-corrects them using Quantile Delta Mapping (QDM 25 ). BCCA performs quantile mapping between the climate model data and the reference dataset aggregated to the resolution of the climate models; the relationship between the reference dataset and the climate model is then used to bias-correct the model data. During downscaling, the BCCI, BCCA and QDM algorithms run independently and BCCAQ combines their outputs. BCCAQ has previously been used by the Pacific Climate Impacts Consortium to downscale GCM data for Canada ( https://data.pacificclimate.org/portal/downscaled_cmip6/map/ ); here we apply the technique to global datasets for the first time. BCCAQ is calibrated using reference datasets of precipitation (MSWEP) and weather (MSWX) during the historical period (1981–2014), and the calibration is then used to downscale the future scenarios. Further information about the BCCAQ downscaling model can be found at the Pacific Climate Impacts Consortium (PCIC, https://pacificclimate.org/ ).
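
Two of the ingredients described above can be sketched for a single grid cell: QDM, which bias-corrects projected values while preserving the model's projected change at each quantile, and a reordering step that imposes the day-to-day sequence of a template series (such as analogue-based BCCA output) on the QDM values. This is a simplified Python illustration, not ClimDown's code: reading the "quantile mapping reordering" step as a rank reordering is our assumption, seasonal windows, trace-precipitation handling and spatial fields are omitted, and the function names are hypothetical.

```python
import bisect


def interp(x, xp, fp):
    """Piecewise-linear interpolation on ascending xp, clamped at both ends."""
    if x <= xp[0]:
        return fp[0]
    if x >= xp[-1]:
        return fp[-1]
    j = bisect.bisect_right(xp, x)  # xp[j - 1] <= x < xp[j]
    x0, x1, y0, y1 = xp[j - 1], xp[j], fp[j - 1], fp[j]
    return y0 + (y1 - y0) * (x - x0) / (x1 - x0)


def probs(n):
    """Evenly spaced non-exceedance probabilities for n sorted values (n >= 2)."""
    return [i / (n - 1) for i in range(n)]


def qdm(obs_hist, mod_hist, mod_proj, kind="additive"):
    """Quantile Delta Mapping for one cell: remove bias, keep the model's quantile changes."""
    o, mh, mp = sorted(obs_hist), sorted(mod_hist), sorted(mod_proj)
    po, ph, pp = probs(len(o)), probs(len(mh)), probs(len(mp))
    out = []
    for x in mod_proj:
        tau = interp(x, mp, pp)       # quantile of x within the projection period
        hist_q = interp(tau, ph, mh)  # historical model value at the same quantile
        obs_q = interp(tau, po, o)    # observed value at the same quantile
        if kind == "additive":        # e.g. temperature: preserve the absolute change
            out.append(obs_q + (x - hist_q))
        else:                         # e.g. precipitation: preserve the relative change
            # simplified dry handling: a zero historical quantile yields zero
            out.append(obs_q * x / hist_q if hist_q > 0 else 0.0)
    return out


def reorder_like(values, template):
    """Give `values` the day-to-day rank order of `template` (same length)."""
    if len(values) != len(template):
        raise ValueError("values and template must have the same length")
    days_by_template_rank = sorted(range(len(template)), key=lambda d: template[d])
    out = [0.0] * len(values)
    for value, day in zip(sorted(values), days_by_template_rank):
        out[day] = value  # k-th smallest value goes to the day with the k-th smallest template
    return out


# Usage: additive QDM removes a +2 bias but keeps the model's +1 change signal.
print(qdm(range(2, 12), range(10), range(1, 11)))   # approximately [3, 4, ..., 12]
print(reorder_like([10, 20, 30], [0.3, 0.1, 0.2]))  # [30, 10, 20]
```

In the additive example the observations run 2 units above the historical model and the projection runs 1 unit above it, so QDM returns the projection shifted up by the 2-unit bias while retaining the +1 change; plain quantile mapping would instead clamp the highest projected value to the observed maximum.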

Compared with other downscaling methods, such as the Statistical DownScaling Model (SDSM) and Bias Correction and Spatial Disaggregation (BCSD), BCCAQ is extremely computationally intensive, requiring high-memory compute nodes (~3 TB RAM per node) for global-scale downscaling. We used the UK’s data analysis facility for environmental science (JASMIN, https://jasmin.ac.uk/ ) and the High-Performance Computing (HPC) resources of the University of Southampton ( https://www.southampton.ac.uk/isolutions/staff/iridis.page ) and the University of Oxford ( https://www.arc.ox.ac.uk/home ). The downscaling was implemented using the ClimDown package, written in R ( https://github.com/pacificclimate/ClimDown ) 46 . The global input data for each simulation had to be divided into 17 smaller areas so that the analysis could complete within the allocated wall time of the HPC facilities (~48 h). The Climate Data Operators (CDO 47 ) package was used to split and merge the datasets and to adjust model grid types.
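
How the 17 areas were defined is not stated. As a hypothetical illustration only, the sketch below splits a 0.25° global grid (1440 longitude cells, assumed to start at 180°W) into 17 contiguous longitude bands that share no grid cells, and builds, without running, one CDO `sellonlatbox,lon1,lon2,lat1,lat2` command per band. Band bounds are placed on cell centres so that neighbouring bands cannot select the same cells; the function and file names are hypothetical.

```python
def split_cells(n_cells, n_tiles):
    """Split cell indices 0..n_cells-1 into n_tiles contiguous, non-overlapping ranges."""
    base, extra = divmod(n_cells, n_tiles)
    ranges, start = [], 0
    for t in range(n_tiles):
        size = base + (1 if t < extra else 0)  # spread the remainder over the first bands
        ranges.append((start, start + size - 1))
        start += size
    return ranges


def lon_tiles(res=0.25, n_tiles=17, west=-180.0):
    """Longitude bounds (centres of the first and last cell) of each band.

    Hypothetical layout: equal-width longitude bands on a grid whose first edge is `west`.
    """
    n_cells = round(360 / res)
    return [(west + (first + 0.5) * res, west + (last + 0.5) * res)
            for first, last in split_cells(n_cells, n_tiles)]


def cdo_commands(infile, tiles, lat1=-90.0, lat2=90.0):
    """Build (not run) one `cdo sellonlatbox,lon1,lon2,lat1,lat2` call per band."""
    stem = infile[:-3] if infile.endswith(".nc") else infile
    return [f"cdo sellonlatbox,{lon1},{lon2},{lat1},{lat2} {infile} {stem}_tile{k:02d}.nc"
            for k, (lon1, lon2) in enumerate(tiles)]


for cmd in cdo_commands("pr.nc", lon_tiles())[:2]:
    print(cmd)
```

After each band is downscaled, the pieces must be reassembled onto the global grid; CDO's `collgrid` operator is one option, but check the CDO documentation for the exact behaviour required.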

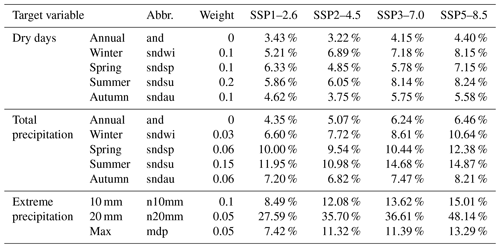

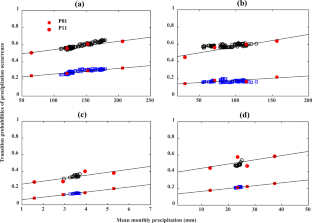

Evaluation methods

Several statistical and graphical methods are used to assess the quality of the downscaled data. The downscaled data are compared against the reference dataset using the Pearson correlation coefficient, root mean square error (RMSE), bias and standard deviation. In addition, the Taylor diagram 48 is used to summarise the performance of individual models for each variable. The Taylor diagram is a graphical method frequently used to compare model and observed variables in terms of correlation coefficient, standard deviation and centred RMSE. Furthermore, extreme indices are used to assess how well the downscaled data capture extreme events such as heavy precipitation days and very warm and very cold days. The indices follow the definitions of the Expert Team on Climate Change Detection and Indices (ETCCDI) 49 . Heavy precipitation days are the number of days on which daily precipitation is greater than 10 mm. Very warm days are the percentage of days on which the daily maximum temperature exceeds the 90th percentile of daily maximum temperature in the reference period (1981–2014). Similarly, very cold days are the percentage of days on which the daily maximum temperature is below the 10th percentile of daily maximum temperature in the reference period.
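
The scalar scores above, and the centred RMSE shown on a Taylor diagram, can be computed for paired reference and model series as in this minimal Python sketch. It uses population (1/n) statistics and defines bias as model minus reference; both conventions are assumptions, since the text does not specify them, and `evaluation_stats` is a hypothetical name. With these conventions two identities hold exactly, which is what lets a Taylor diagram place all three statistics on one plot: the centred RMSE E' satisfies E'^2 = sd_ref^2 + sd_mod^2 - 2 sd_ref sd_mod R, and the total RMSE satisfies RMSE^2 = bias^2 + E'^2.

```python
import math


def evaluation_stats(ref, mod):
    """Pearson r, bias, RMSE, standard deviations and centred RMSE for paired series."""
    n = len(ref)
    if n == 0 or n != len(mod):
        raise ValueError("series must be non-empty and of equal length")
    mean_r, mean_m = sum(ref) / n, sum(mod) / n
    sd_r = math.sqrt(sum((r - mean_r) ** 2 for r in ref) / n)
    sd_m = math.sqrt(sum((m - mean_m) ** 2 for m in mod) / n)
    cov = sum((r - mean_r) * (m - mean_m) for r, m in zip(ref, mod)) / n
    return {
        "corr": cov / (sd_r * sd_m),  # undefined for a constant series
        "bias": mean_m - mean_r,      # model minus reference (assumed sign convention)
        "rmse": math.sqrt(sum((m - r) ** 2 for r, m in zip(ref, mod)) / n),
        "sd_ref": sd_r,
        "sd_mod": sd_m,
        # centred RMSE: RMSE after removing each series' own mean
        "crmse": math.sqrt(sum(((m - mean_m) - (r - mean_r)) ** 2
                               for r, m in zip(ref, mod)) / n),
    }


print(evaluation_stats([1, 2, 3, 4, 5], [2, 2.5, 4, 4.5, 7]))
```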

Data Records

The downscaled (0.25°) daily data from the 18 GCMs for each of the seven climatological variables (Table 2) and three SSP scenarios (SSP2-4.5, SSP5-3.4-OS and SSP5-8.5) for the future (2015–2100) and historical (1981–2014) periods are available at the Centre for Environmental Data Analysis (CEDA, https://doi.org/10.5285/c107618f1db34801bb88a1e927b82317 ) 33 . The CEDA data are openly accessible to anyone, anywhere. The data are provided in compressed NetCDF format. Individual files (i.e., the global time series of a single variable) are large, of the order of about 30 GB for historical data and 98 GB for future (SSP) data. Although they can be downloaded individually for any use, UK-based environmental science researchers are best served by accessing them via the JASMIN HPC cluster ( https://jasmin.ac.uk/ ), which is linked to CEDA and provides direct access to our data from a Linux machine (cd to /badc/evoflood/data/Downscaled_CMIP6_Climate_Data/). Data for each variable are located in one of four folders according to the scenario modelled: Historical, SSP2-4.5, SSP5-3.4OS, and SSP5-8.5. For the future period 2015–2100, files for all variables and scenarios follow the naming convention “Global_variable_Downscaled_Model_2015–2100_experiment.nc”, where “variable” is the name of the downscaled variable (e.g., pr and tas), “Model” is the name of the downscaled GCM, and “experiment” is the future SSP scenario. For the historical period, files are named “Global_variable_Downscaled_Model_1981–2014.nc”. Note that, unlike SSP2-4.5 and SSP5-8.5, only a few GCMs provide data for SSP5-3.4-OS (Table 2).
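
The file-naming convention above can be parsed mechanically, for example to build an inventory of downloaded files. This is a hypothetical Python helper, not a tool provided with the dataset. Because the exact range-separator character in the archived names (hyphen or en dash) and the spelling of the experiment token are not confirmed here, the pattern accepts either separator and any experiment string; the example file names are illustrative.

```python
import re

# Convention from the text: Global_<variable>_Downscaled_<Model>_<start>-<end>[_<experiment>].nc
# The range separator is matched as a hyphen or an en dash (an assumption, see above).
FILENAME_RE = re.compile(
    r"Global_(?P<variable>[^_]+)_Downscaled_(?P<model>[^_]+)_"
    r"(?P<start>\d{4})[-\u2013](?P<end>\d{4})(?:_(?P<experiment>.+?))?\.nc"
)


def parse_filename(name):
    """Split a downscaled-data file name into its parts; return None if it does not match."""
    m = FILENAME_RE.fullmatch(name)
    if m is None:
        return None
    parts = m.groupdict()
    parts["start"], parts["end"] = int(parts["start"]), int(parts["end"])
    # historical files carry no experiment suffix
    parts["period"] = "historical" if parts["experiment"] is None else "future"
    return parts


print(parse_filename("Global_pr_Downscaled_ACCESS-CM2_2015-2100_ssp245.nc"))
```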

Technical Validation

Comparison of downscaled and GCM data

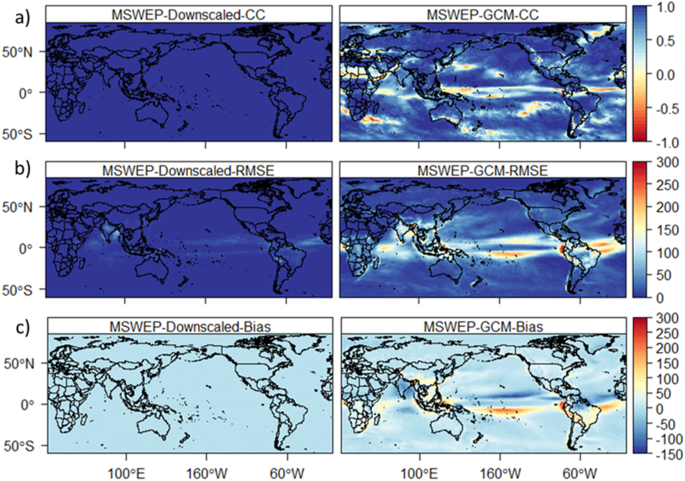

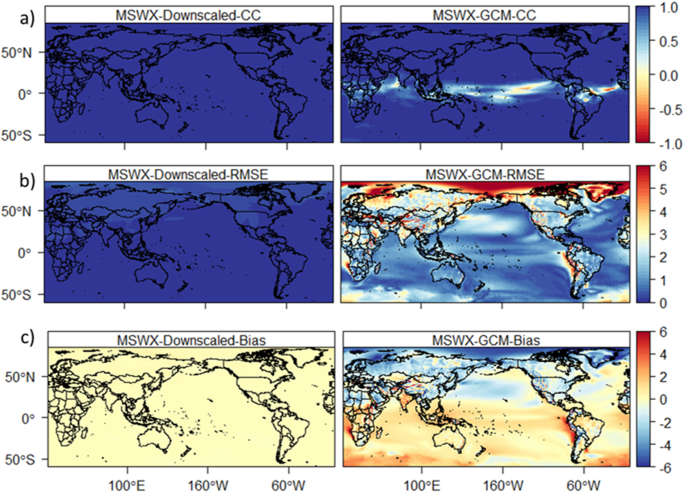

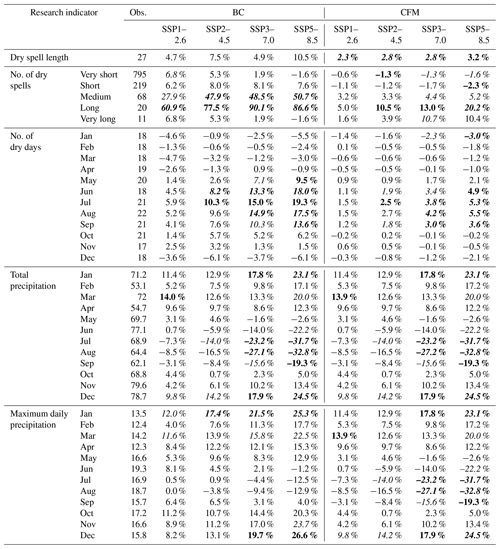

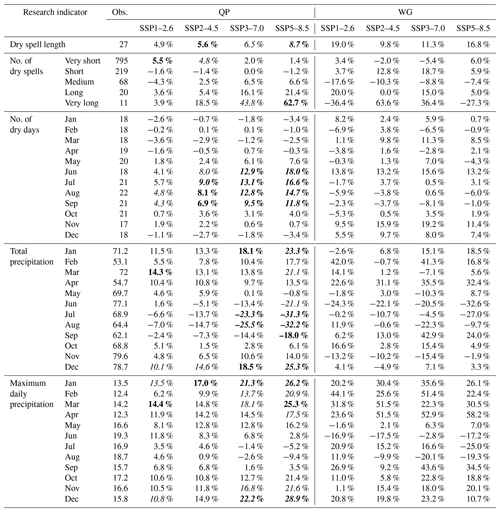

The downscaled high-resolution datasets are compared with the reference data and the raw GCMs during the period 1981–2014. In addition to producing high-resolution data, the performance of BCCAQ in removing biases and errors from the GCMs is assessed. Figure 1 compares climatological precipitation (pr) from the reference dataset (MSWEP) with that from the downscaled and raw versions of one GCM (ACCESS-CM2). The downscaled data show a higher correlation and lower bias and RMSE than the GCM. In contrast, the GCM shows a large bias (up to ±150 mm) and RMSE in different parts of the world, particularly in Asia and South America and over the Indian and Pacific Oceans. The RMSE of monthly climatological precipitation from the GCM is much higher than that from the downscaled GCM (Fig. 1b). The downscaled precipitation shows its largest errors (up to 100 mm) only in parts of India, whereas the GCM shows errors of up to 300 mm in Africa, Asia and South America. Additionally, the downscaled data show a very low bias compared with the GCM, which shows a bias of up to 300 mm (Fig. 1c). Unlike the downscaled data, which correlate highly with the reference data, the GCM shows a lower correlation in different parts of the world (Fig. 1a). The downscaled climatological average temperature (tas) also shows a higher correlation and lower bias and RMSE than the GCM (Fig. 2). For example, the GCM shows a lower correlation in Central Africa and South America and a large bias (±5 °C) and RMSE (up to 6 °C) globally. Overall, the GCMs show a large bias and RMSE for all variables compared with the downscaled data.
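
Figures 1 and 2 compare monthly climatologies, i.e. 12 values per grid cell. One way to build such a climatology from a daily series and correlate two of them is sketched below in Python; using monthly totals for precipitation and monthly means for temperature is an assumption, the record is assumed to contain every calendar month, and the function names are hypothetical.

```python
import datetime
import math
from collections import defaultdict


def daily_dates(start, end):
    """All dates from start to end inclusive."""
    return [start + datetime.timedelta(days=k) for k in range((end - start).days + 1)]


def monthly_climatology(dates, values, how="mean"):
    """12-value climatology: aggregate each (year, month), then average across years.

    how="sum" gives mean monthly totals (e.g. precipitation); "mean" gives mean monthly values.
    """
    per_year_month = defaultdict(list)
    for d, v in zip(dates, values):
        per_year_month[(d.year, d.month)].append(v)
    per_calendar_month = defaultdict(list)
    for (_, month), vals in per_year_month.items():
        per_calendar_month[month].append(sum(vals) if how == "sum" else sum(vals) / len(vals))
    return [sum(per_calendar_month[m]) / len(per_calendar_month[m]) for m in range(1, 13)]


def pearson(a, b):
    """Pearson correlation of two equal-length sequences (e.g. two 12-month climatologies)."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    return cov / math.sqrt(sum((x - ma) ** 2 for x in a) * sum((y - mb) ** 2 for y in b))


dates = daily_dates(datetime.date(2001, 1, 1), datetime.date(2002, 12, 31))
print(monthly_climatology(dates, [1.0] * len(dates), how="sum"))  # 1 mm/day: days per month
```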

Temporal correlation (a), RMSE (b) and bias (c) between MSWEP and the downscaled GCM (ACCESS-CM2, left) and raw GCM (ACCESS-CM2, right) for monthly climatological precipitation during 1981–2014.

Temporal correlation (a), RMSE (b) and bias (c) between MSWX temperature and the downscaled GCM (ACCESS-CM2, left) and raw GCM (ACCESS-CM2, right) for monthly climatological average temperature during 1981–2014.

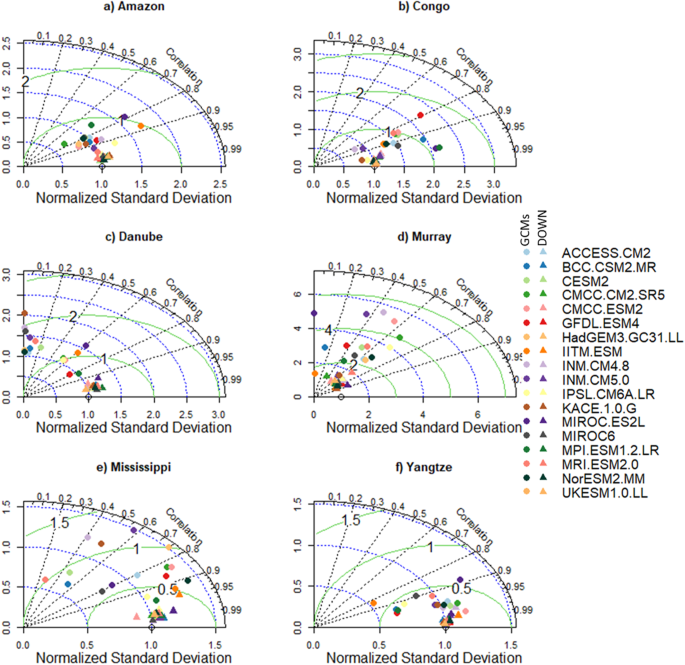

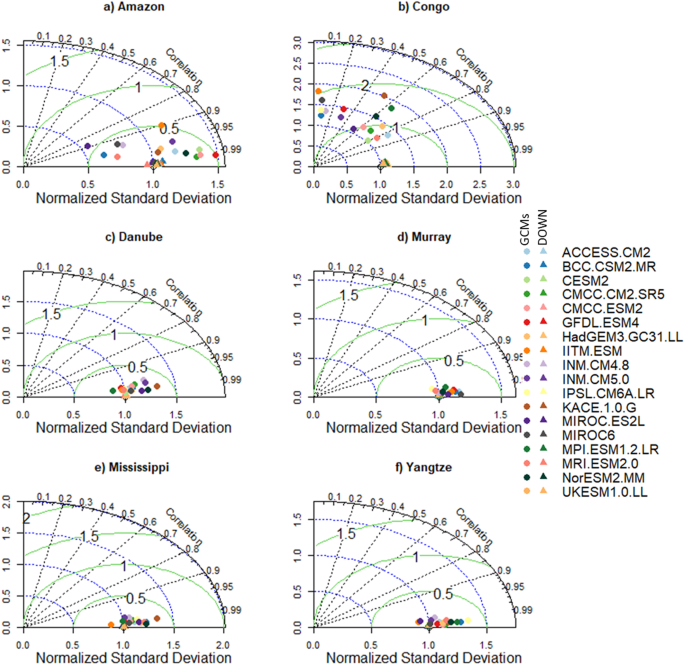

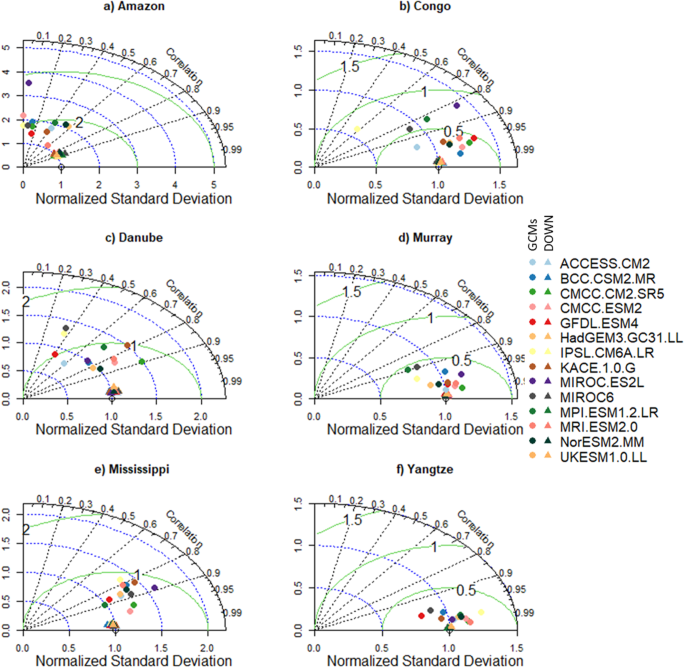

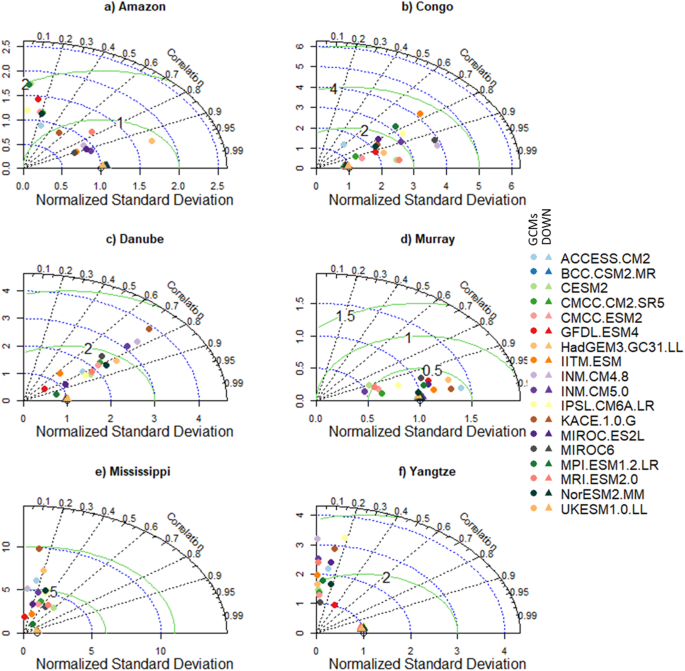

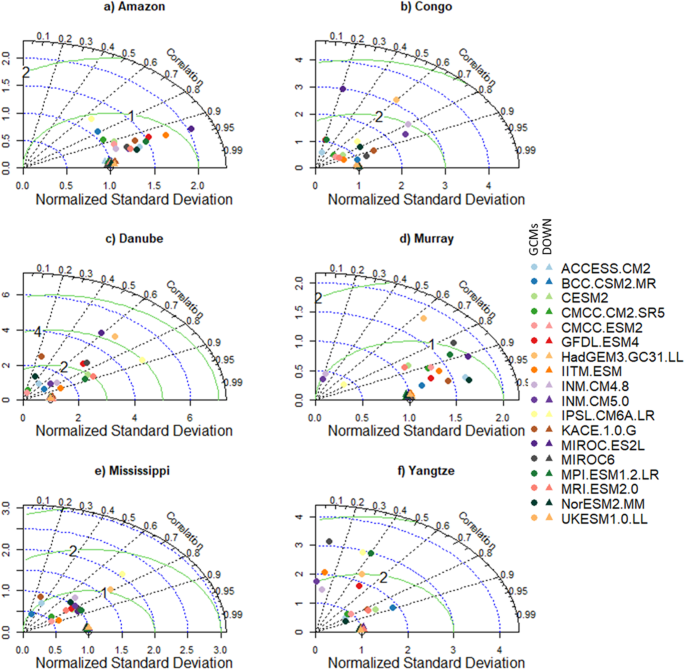

To highlight the need for bias correction and spatial downscaling, and the utility of our new dataset, we selected six morpho-climatologically diverse river basins from around the world: the Amazon, Congo, Danube, Murray–Darling (Murray), Mississippi and Yangtze. For each basin, the climatological averages of the seven variables from the downscaled data and the GCMs are compared against the reference dataset. The comparison for all variables and selected basins is summarised in Figs. 3–7. For climatological average pr, most of the downscaled models show a correlation higher than 0.95 and a standard deviation (SD) similar to that of the reference datasets (Fig. 3). The GCMs, however, show a lower correlation and a higher SD and centred RMSE (cRMSE) than the downscaled data in all the basins. Among all the basins, the downscaled data show the lowest correlation (0.4–0.92) in the Murray, although this is still considerably better than the GCMs. For tas, the downscaled data show a higher correlation than the GCMs in all the basins (Fig. 4). In addition to their lower correlation, the GCMs show a higher SD and cRMSE than the downscaled data. For example, in the Congo the GCMs show correlations between 0.1 and 0.8 with a mean of 0.42, whereas the downscaled data show correlations higher than 0.98. The better performance of the downscaled data is also clear for air pressure (ps, Fig. 5), relative humidity (hurs, Fig. 6) and wind speed (sfcWind, Fig. 7), which show a higher correlation, an SD similar to that of the reference data, and a lower cRMSE. In general, the downscaled data are more accurate than the GCMs in terms of correlation, bias and errors.
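
The text does not state how basin averages were computed. One common approach on a regular latitude-longitude grid, assumed here, weights each cell by the cosine of its latitude (proportional to cell area) and averages the cells inside a basin mask. The mask and the function name in this Python sketch are hypothetical.

```python
import math


def basin_mean(lats, field, mask):
    """cos(latitude)-weighted mean of field[i][j] over cells where mask[i][j] is truthy."""
    num = den = 0.0
    for i, lat in enumerate(lats):
        w = math.cos(math.radians(lat))  # relative cell area on a regular lat-lon grid
        for value, inside in zip(field[i], mask[i]):
            if inside:
                num += w * value
                den += w
    if den == 0.0:
        raise ValueError("the basin mask selects no cells")
    return num / den


# Cells at 60 degrees latitude carry half the weight of equatorial cells.
print(basin_mean([0.0, 60.0], [[1.0, 1.0], [4.0, 4.0]], [[True, True], [True, True]]))
```

An unweighted mean would give 2.5 for the example above; the cosine weighting gives approximately 2.0 because the high-latitude cells cover less area.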

Comparison of Statistically Downscaled (DOWN, triangles) and raw GCMs (GCMs, circles) climatological precipitation for (a) Amazon, (b) Congo, (c) Danube, (d) Murray-Darling (Murray), (e) Mississippi, and (f) Yangtze.

Comparison of Statistically Downscaled (DOWN, triangles) and raw GCMs (GCMs, circles) climatological average temperature for (a) Amazon, (b) Congo, (c) Danube, (d) Murray-Darling (Murray), (e) Mississippi, and (f) Yangtze.

Comparison of Statistically Downscaled (DOWN, triangles) and raw GCMs (GCMs, circles) climatological average surface air pressure (ps) for (a) Amazon, (b) Congo, (c) Danube, (d) Murray-Darling (Murray), (e) Mississippi, and (f) Yangtze.

Comparison of Statistically Downscaled (DOWN, triangles) and raw GCMs (GCMs, circles) climatological average relative humidity (hurs) for (a) Amazon, (b) Congo, (c) Danube, (d) Murray-Darling (Murray), (e) Mississippi, and (f) Yangtze.

Comparison of Statistically Downscaled (DOWN, triangles) and raw GCMs (GCMs, circles) climatological average wind speed (sfcWind) for (a) Amazon, (b) Congo, (c) Danube, (d) Murray-Darling (Murray), (e) Mississippi, and (f) Yangtze.

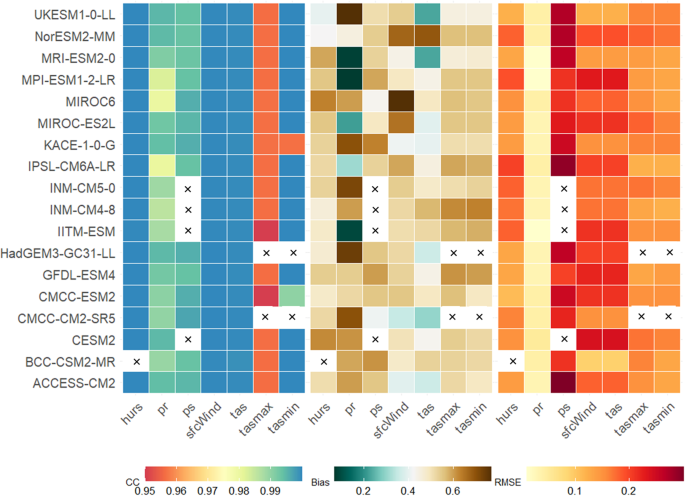

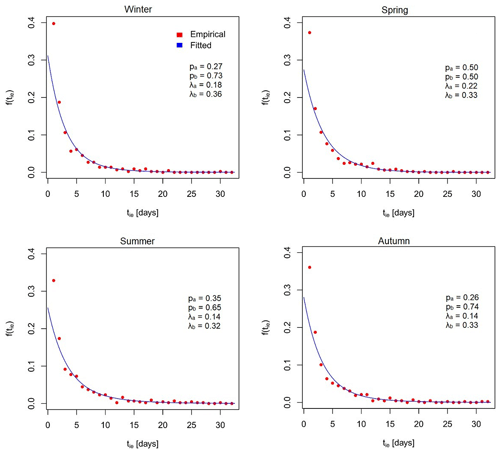

The spatial average correlation (CC), bias and RMSE between the reference data and the downscaled models for climatological average hurs, pr, ps, sfcWind, tas, tasmax and tasmin. The bias and RMSE are each normalised using their minimum and maximum values; a normalised value of 0.5 means that the bias or RMSE lies midway between the minimum and maximum values in the dataset. An X indicates that downscaled data are not available.
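
Assuming the standard min-max form, the normalisation described in this caption can be written as follows, where x_k is the bias (or RMSE) for entry k and the minimum and maximum are taken over all entries being normalised together; exactly which entries are pooled is not stated in the caption:

```latex
\tilde{x}_k = \frac{x_k - \min_j x_j}{\max_j x_j - \min_j x_j}, \qquad 0 \le \tilde{x}_k \le 1
```

so that 0 marks the smallest value, 1 the largest, and 0.5 the midpoint between them.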

Global comparison of downscaled and reference datasets

The comparison between the downscaled data and the GCMs clearly shows the advantage of the downscaling method in removing biases and errors from GCMs and in producing high-resolution climate datasets to drive impact models. Here we focus on assessing how well the downscaling model reproduces the climatology of the reference dataset. Figure 8 shows the global average (averaged over all grid cells) correlation, bias and RMSE for all models and variables. Based on the global average correlation, all models perform very well for hurs, sfcWind, tas and tasmin, with correlations higher than 0.98. Correlations are slightly lower (>0.95) for pr and tasmax. In addition to the high correlations, the average bias and RMSE are very low for all variables. For example, the average bias and RMSE are 0.06 °C and 0.1 °C, respectively, for tas, and 0.25 mm and 5.1 mm, respectively, for pr.

To characterise the performance of the downscaled data for all models and variables, spatial correlation (SFigs. 1–5) and RMSE (SFigs. 6–10) maps are provided in the supplementary material. The global maps show that the CC exceeds 0.8 for all variables in most parts of the world. This high correlation suggests that the downscaling model has performed well, although it may also mean that any biases in the reference dataset are reproduced. Wind speed (SFig. 2), temperature (SFig. 3) and relative humidity (SFig. 4) all have a CC greater than 0.9 in all parts of the world, whereas correlations are typically slightly lower for precipitation (SFig. 1). For precipitation, the downscaled MPI-ESM1-2-LR and IITM-ESM GCMs show a lower CC (as low as −0.6) in parts of Central Africa, but perform similarly to the other downscaled GCMs (typical correlations >0.8) elsewhere in the world.

Similarly, the downscaled data show a low RMSE in most of the world (SFigs. 6–10). For precipitation, IPSL-CM6A-LR, INM-CM4-8 and INM-CM5-0 show the highest RMSE, up to 120 mm, in South America (SFig. 6). The downscaled sfcWind data also show a lower RMSE over land than over the oceans, where errors reach up to 0.3 m/s (SFig. 7). Compared with the other models, BCC-CSM2-MR shows the highest RMSE over the Arctic Ocean (~0.3 m/s). Most of the downscaled models show a similar error pattern for tas (up to 0.98 °C), particularly over the temperate zone and the Arctic Ocean (SFig. 8). The average RMSE of hurs across all models is between 0.2% and 0.4% (SFig. 9). Unlike sfcWind, hurs shows a higher RMSE over land than over the oceans. Compared with the other models, BCC-CSM2-MR, IPSL-CM6A-LR, MRI-ESM2-0 and NorESM2-MM show a higher RMSE (up to 0.12 kPa) for ps over the Arctic Ocean (SFig. 10). However, most of the models show a smaller RMSE for ps over land. Overall, the climatology of the downscaled data from all models and variables agrees well with the observed data.

Time series of global average downscaled and reference datasets

The global average annual pr, sfcWind, tas, hurs and ps are also well reproduced by the downscaling model (SFigs. 11–15). The global average covers both land and ocean at all longitudes between 60°S and 85°N. The global average annual pr from the reference dataset (MSWEP) during 1981–2010 is 1083 mm, with a standard deviation (SD) of 13 mm (SFig. 11). All downscaled models reproduce a similar annual average pr, with SDs between 9.9 mm and 31.3 mm. Although the models reproduce the global average annual precipitation very well, some models, such as CMCC-CM2-SR5, IPSL-CM6A-LR and NorESM2-MM, show a higher interannual variability, with an SD of about 31 mm. ACCESS-CM2, CMCC-ESM2, MPI-ESM1-2-LR and MRI-ESM2-0 show an SD of about 21 mm. The multi-model mean (MMM) of all models gives an average precipitation of 1082.7 mm.
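
The global averages and standard deviations quoted here are statistics of annual values. A minimal Python sketch, assuming a daily series that has already been averaged over the domain, aggregates each calendar year (a sum for precipitation, a mean for the other variables) and reports the mean and standard deviation of the annual values. Whether the authors used the sample or population standard deviation is not stated; the sample version is assumed, and the function names are hypothetical.

```python
import statistics
from collections import defaultdict


def annual_values(years, daily_values, how="sum"):
    """Aggregate a daily series by calendar year; years[k] is the year of day k."""
    by_year = defaultdict(list)
    for year, v in zip(years, daily_values):
        by_year[year].append(v)
    return [sum(by_year[y]) if how == "sum" else sum(by_year[y]) / len(by_year[y])
            for y in sorted(by_year)]


def interannual_stats(years, daily_values, how="sum"):
    """Mean and sample standard deviation of the annual values."""
    annual = annual_values(years, daily_values, how)
    return statistics.mean(annual), statistics.stdev(annual)


years = [2001] * 365 + [2002] * 365 + [2003] * 365
pr = [1.0] * 365 + [2.0] * 365 + [3.0] * 365  # mm/day, illustrative values
print(interannual_stats(years, pr))  # annual totals 365, 730 and 1095 mm
```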

The global average annual tas from the reference dataset is 16.52 °C (SD = 0.2 °C), and this is well reproduced by all models (16.50–16.52 °C) and by the MMM (16.51 °C) (SFig. 12). Compared with the other models, BCC-CSM2-MR, CMCC-CM2-SR5, HadGEM3-GC31-LL, IPSL-CM6A-LR and UKESM1 show a higher interannual variability (SD between 0.3 and 0.34 °C). Similarly, the average annual sfcWind is well reproduced by all models (SFig. 13). The global average sfcWind from the reference data, all models and the MMM is 5.99 m/s. Compared with the individual models (SD = 0.3 m/s) and the MMM (SD = 0.1 m/s), the reference data show a higher interannual variability (SD = 0.6 m/s). Like sfcWind, the average annual hurs is accurately reproduced by all models and the MMM (SFig. 14). Based on the reference data and all models, the global average annual hurs is 74.9%. The SD of all models is 0.1%, whereas that of the reference dataset is 0.2%. Further, the downscaled GCMs accurately represent the average annual ps of the reference dataset (SFig. 15). The global average ps from the reference data, the individual models and the MMM is 99.17 kPa, with a similar SD of 0.1 kPa, except for BCC-CSM2-MR and MRI-ESM2-0, which show an SD of 0.2 kPa.

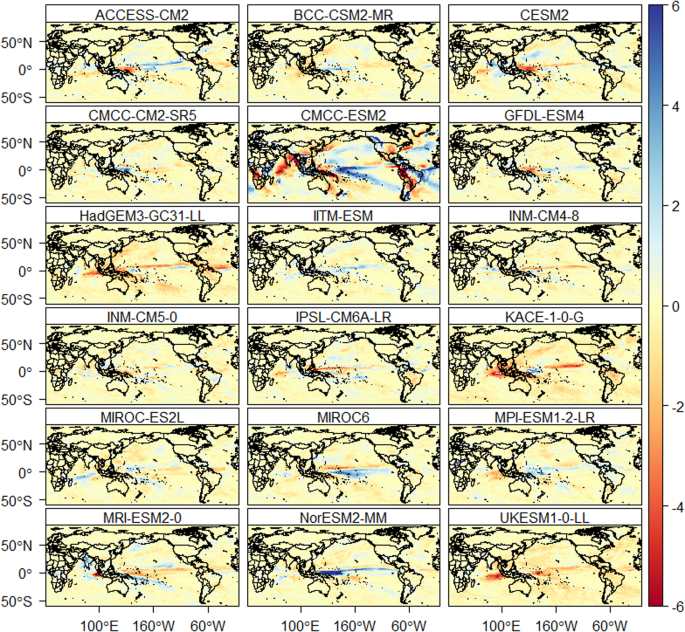

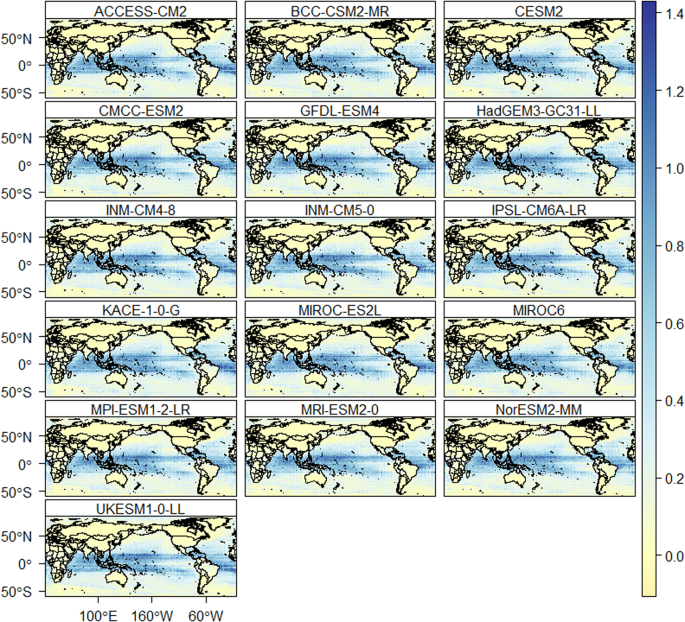

Daily climate extremes

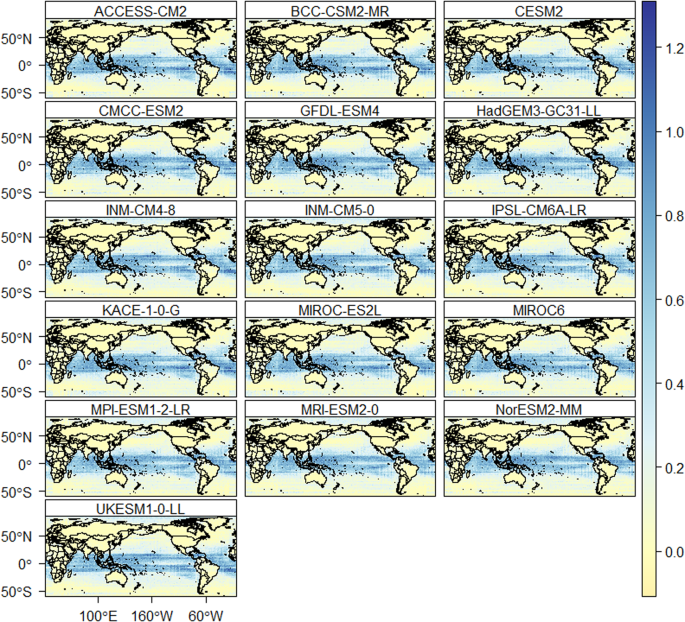

The downscaled data are also assessed for daily extreme events. The number of heavy precipitation days is well reproduced by all models (Fig. 9), which shows the difference in the average annual number of heavy precipitation days between each model and the reference data. Most of the models represent the number of heavy precipitation days more accurately over land than over the oceans. Based on the reference data, the average number of heavy precipitation days is 25 days per year. Compared with the other models, CMCC-ESM2 shows a larger difference from the reference data (±6 days per year) in regions such as South America, Asia and Africa. The average percentage of very warm days based on the reference data is 8.5% of the reference period. All models reproduce the percentage of very warm days very well over land, except in parts of South East Asia (Indonesia and Thailand) and Central America (Fig. 10). However, all models show a higher percentage of very warm days (up to 1.4% higher than the reference data) over the oceans (Pacific, Indian and Atlantic). Similarly, the average percentage of very cold days based on the reference dataset is 8.5%. All the models represent the percentage of very cold days better over land than over the oceans (Fig. 11). The percentage of wet days is overestimated by up to 1.3% over the oceans and in a few areas of South East Asia and Central America.
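
The three indices can be sketched for a single grid cell as follows. This Python illustration is simplified relative to the ETCCDI definitions, which compute percentile thresholds per calendar day from a 5-day window with bootstrapping over the base period; here one threshold is computed from a reference-period series and passed in. The text describes heavy precipitation days as "greater than 10 mm", while ETCCDI's R10mm counts days with at least 10 mm; the sketch follows ETCCDI, which only matters for days of exactly 10 mm. The function names are hypothetical.

```python
def percentile(values, q):
    """q-th percentile (0-100) with linear interpolation between order statistics."""
    s = sorted(values)
    pos = q / 100 * (len(s) - 1)
    lo = int(pos)
    hi = min(lo + 1, len(s) - 1)
    return s[lo] + (pos - lo) * (s[hi] - s[lo])


def heavy_precip_days_per_year(years, pr, threshold=10.0):
    """Mean annual count of days with pr >= threshold (as in ETCCDI R10mm)."""
    counts = {}
    for year, p in zip(years, pr):
        counts[year] = counts.get(year, 0) + (1 if p >= threshold else 0)
    return sum(counts.values()) / len(counts)


def percent_days_above(tx, threshold):
    """Percentage of days with daily maximum temperature above the threshold (very warm)."""
    return 100.0 * sum(t > threshold for t in tx) / len(tx)


def percent_days_below(tx, threshold):
    """Percentage of days with daily maximum temperature below the threshold (very cold)."""
    return 100.0 * sum(t < threshold for t in tx) / len(tx)


tx_ref = list(range(1, 101))  # illustrative reference-period daily maxima
p90 = percentile(tx_ref, 90)
print(p90, percent_days_above(tx_ref, p90))  # about 90.1 and 10.0
```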

The difference in the average annual number of heavy precipitation days (days/year) between the downscaled models and the reference data. Red and blue indicate underestimation and overestimation of heavy precipitation days, respectively.

The difference in percentage of very warm days (%) between the downscaled models and the reference data. The blue colour indicates an overestimation of the percentage of very warm days (%).

The difference in percentage of very cold days (%) between the downscaled models and reference data. The blue colour indicates an overestimation of the percentage of very cold days (%).

In summary, the downscaled data accurately reproduce the observed data over the historical period for most areas. The high correlations and accurate representation of the global annual and climatological averages of all variables suggest that the downscaling model may also reproduce any biases in the reference dataset. Even though we used the most comprehensive high-resolution historical climate datasets available to calibrate and downscale the GCMs, any errors in these datasets may propagate into, and add uncertainty to, the downscaled historical and future climate. Specific to precipitation, a key driver of global hydrological simulations, MSWEP has been evaluated globally and used in various hydro-climate studies 50 , 51 . In a recent evaluation 52 , MSWEP outperformed 22 other global and quasi-global precipitation datasets, including the European Centre for Medium-Range Weather Forecasts ReAnalysis Interim (ERA-Interim) 53 , the Japanese 55-year ReAnalysis (JRA-55) 54 and the National Centers for Environmental Prediction (NCEP) Climate Forecast System Reanalysis (NCEP-CFSR) 55 . In addition, MSWEP was found to capture extreme events better than other satellite-based precipitation datasets 42 . Finally, alongside the uncertainties in the reference climate datasets, it is important to consider the assumptions made in statistical downscaling models (e.g., the assumption of stationarity). These uncertainties notwithstanding, we are confident that our new downscaled high-resolution climate data can be used in global, regional and local-scale impact assessment studies with higher accuracy than raw GCMs.

Code availability

The BCCAQ code used to downscale the CMIP6 GCMs can be found at the Pacific Climate Impacts Consortium (PCIC, https://pacificclimate.org/resources/software-library ) page and on the R Package Documentation ( https://rdrr.io/cran/ClimDown/ ).
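BCCAQ combines constructed analogues with quantile mapping. The snippet below is a minimal sketch of plain empirical quantile mapping only, to illustrate the bias-correction idea. It is not BCCAQ and not the ClimDown implementation: the full method also involves the constructed-analogue step and a reordering of the quantile-mapped values, which are not shown. Function and variable names and the synthetic data are hypothetical.

```python
import numpy as np


def quantile_map(gcm_hist, obs_hist, gcm_values, n_quantiles=101):
    """Empirical quantile mapping of GCM values onto the observed distribution.

    Each value is assigned the observed quantile at the same non-exceedance
    probability it has in the GCM's calibration-period distribution. Values
    outside the calibration range are clamped to the end quantiles by np.interp.
    """
    probs = np.linspace(0.0, 1.0, n_quantiles)
    gcm_q = np.quantile(gcm_hist, probs)   # knots of the transfer function
    obs_q = np.quantile(obs_hist, probs)   # matching observed quantiles
    return np.interp(gcm_values, gcm_q, obs_q)


# Synthetic example: the GCM is 2 degrees too warm over the calibration period.
rng = np.random.default_rng(42)
obs = rng.normal(15.0, 3.0, size=5000)
gcm = rng.normal(17.0, 3.0, size=5000)
corrected = quantile_map(gcm, obs, gcm)
print(round(corrected.mean() - obs.mean(), 2))   # should be close to zero
```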

Gebrechorkos, S. H., Hülsmann, S. & Bernhofer, C. Statistically downscaled climate dataset for East Africa. Scientific Data 6 , 31 (2019).


IPCC. Climate Change 2013: The Physical Science Basis (eds Stocker et al.) 1535 http://www.ipcc.ch/report/ar5/wg1/ (2013).

IPCC. Managing the Risks of Extreme Events and Disasters to Advance Climate Change Adaptation (eds Field, C. B. et al.) https://www.ipcc.ch/report/managing-the-risks-of-extreme-events-and-disasters-to-advance-climate-change-adaptation/ (2012).

Gutmann, E. D. et al . A Comparison of Statistical and Dynamical Downscaling of Winter Precipitation over Complex Terrain. J. Climate 25 , 262–281 (2012).


Meenu, R., Rehana, S. & Mujumdar, P. P. Assessment of hydrologic impacts of climate change in Tunga–Bhadra river basin, India with HEC-HMS and SDSM. Hydrological Processes 27 , 1572–1589 (2013).

Gebrechorkos, S. H., Hülsmann, S. & Bernhofer, C. Regional climate projections for impact assessment studies in East Africa. Environ. Res. Lett. 14 , 044031 (2019).

Navarro-Racines, C., Tarapues, J., Thornton, P., Jarvis, A. & Ramirez-Villegas, J. High-resolution and bias-corrected CMIP5 projections for climate change impact assessments. Sci Data 7 , 7 (2020).

Lutz, A. F. et al . Selecting representative climate models for climate change impact studies: an advanced envelope-based selection approach. Int. J. Climatol. 36 , 3988–4005 (2016).


Joetzjer, E., Douville, H., Delire, C. & Ciais, P. Present-day and future Amazonian precipitation in global climate models: CMIP5 versus CMIP3. Clim Dyn 41 , 2921–2936 (2013).

Knutti, R. & Sedláček, J. Robustness and uncertainties in the new CMIP5 climate model projections. Nature Climate Change 3 , 369–373 (2013).

Challinor, A. J. et al . Methods and Resources for Climate Impacts Research: Achieving Synergy. Bulletin of the American Meteorological Society 90 , 836–848 (2009).

Coulibaly, P., Dibike, Y. B. & Anctil, F. Downscaling Precipitation and Temperature with Temporal Neural Networks. J. Hydrometeor. 6 , 483–496 (2005).

Wilby, R. L. & Dawson, C. W. The Statistical DownScaling Model: insights from one decade of application. Int. J. Climatol. 33 , 1707–1719 (2013).

Giorgi, F., Jones, C. & Asrar, G. Addressing climate information needs at the regional level: The CORDEX framework. WMO Bull 53 , (2009).

Hamlet, A. F., Salathé, E. P. & Carrasco, P. Statistical downscaling techniques for global climate model simulations of temperature and precipitation with application to water resources planning studies. https://doi.org/10.6069/bjoayxkb (2010).

Brown, C., Greene, A. M., Block, P. J. & Giannini, A. Review of Downscaling Methodologies for Africa Climate Applications . http://academiccommons.columbia.edu/catalog/ac:126383 (2008).

Gebrechorkos, S. H., Hülsmann, S. & Bernhofer, C. Evaluation of multiple climate data sources for managing environmental resources in East Africa. Hydrology and Earth System Sciences 22 , 4547–4564 (2018).

Maraun, D. Bias Correction, Quantile Mapping, and Downscaling: Revisiting the Inflation Issue. Journal of Climate 26 , 2137–2143 (2013).

Wilby, R. L. & Dawson, C. W. sdsm — a decision support tool for the assessment of regional climate change impacts. Environmental Modelling & Software 17 , 145–157 (2004).

Tavakol‐Davani, H., Nasseri, M. & Zahraie, B. Improved statistical downscaling of daily precipitation using SDSM platform and data‐mining methods. International Journal of Climatology 33 , 2561–2578 (2012).

Khan, M. S. & Coulibaly, P. Assessing Hydrologic Impact of Climate Change with Uncertainty Estimates: Bayesian Neural Network Approach. J. Hydrometeor. 11 , 482–495 (2009).

Gebrechorkos, S. H., Bernhofer, C. & Hülsmann, S. Impacts of projected change in climate on water balance in basins of East Africa. Science of The Total Environment https://doi.org/10.1016/j.scitotenv.2019.05.053 (2019).

Gebrechorkos, S. H., Bernhofer, C. & Hülsmann, S. Climate change impact assessment on the hydrology of a large river basin in Ethiopia using a local-scale climate modelling approach. Science of The Total Environment 742 , 140504 (2020).


Gebrechorkos, S. H., Taye, M. T., Birhanu, B., Solomon, D. & Demissie, T. Future Changes in Climate and Hydroclimate Extremes in East Africa. Earth’s Future 11 , e2022EF003011 (2023).

Cannon, A. J., Sobie, S. R. & Murdock, T. Q. Bias Correction of GCM Precipitation by Quantile Mapping: How Well Do Methods Preserve Changes in Quantiles and Extremes? Journal of Climate 28 , 6938–6959 (2015).

Werner, A. T. & Cannon, A. J. Hydrologic extremes – an intercomparison of multiple gridded statistical downscaling methods. Hydrology and Earth System Sciences 20 , 1483–1508 (2016).

Wood, A. W., Leung, L. R., Sridhar, V. & Lettenmaier, D. P. Hydrologic Implications of Dynamical and Statistical Approaches to Downscaling Climate Model Outputs. Climatic Change 62 , 189–216 (2004).

Hunter, R. D. & Meentemeyer, R. K. Climatologically Aided Mapping of Daily Precipitation and Temperature. Journal of Applied Meteorology and Climatology 44 , 1501–1510 (2005).

Yang, X. et al . Bias Correction of Historical and Future Simulations of Precipitation and Temperature for China from CMIP5 Models. Journal of Hydrometeorology 19 , 609–623 (2018).

Sobie, S. R. & Murdock, T. Q. High-Resolution Statistical Downscaling in Southwestern British Columbia. Journal of Applied Meteorology and Climatology 56 , 1625–1641 (2017).

Maurer, E. P., Hidalgo, H. G., Das, T., Dettinger, M. D. & Cayan, D. R. The utility of daily large-scale climate data in the assessment of climate change impacts on daily streamflow in California. Hydrology and Earth System Sciences 14 , 1125–1138 (2010).

Pirani, F., Bakhtiari, S., Najafi, M., Shrestha, R. & Nouri, M. S. The Effects of Climate Change on Flood-Generating Mechanisms in the Assiniboine and Red River Basins. 2021 , NH15F-0514 (2021).

Gebrechorkos, S. H., Leyland, J., Darby, S. & Parsons, D. High-resolution daily global climate dataset of BCCAQ statistically downscaled CMIP6 models for the EVOFLOOD project. NERC EDS Centre for Environmental Data Analysis. https://doi.org/10.5285/C107618F1DB34801BB88A1E927B82317 (2022).

Malevich, B. et al . ClimateImpactLab/downscaleCMIP6. Zenodo https://doi.org/10.5281/zenodo.6403794 (2022).

Thrasher, B. et al . NASA Global Daily Downscaled Projections, CMIP6. Sci Data 9 , 262 (2022).

Liang, X., Lettenmaier, D. P., Wood, E. F. & Burges, S. J. A simple hydrologically based model of land surface water and energy fluxes for general circulation models. Journal of Geophysical Research: Atmospheres 99 , 14415–14428 (1994).

Cohen, S., Kettner, A. J., Syvitski, J. P. M. & Fekete, B. M. WBMsed, a distributed global-scale riverine sediment flux model: Model description and validation. Computers & Geosciences 53 , 80–93 (2013).

Beck, H. E. et al . MSWX: Global 3-Hourly 0.1° Bias-Corrected Meteorological Data Including Near-Real-Time Updates and Forecast Ensembles. Bulletin of the American Meteorological Society 103 , E710–E732 (2022).

Karger, D. N. et al . Climatologies at high resolution for the earth’s land surface areas. Sci Data 4 , 170122 (2017).

Beck, H. E. et al . MSWEP: 3-hourly 0.25° global gridded precipitation (1979–2015) by merging gauge, satellite, and reanalysis data. Hydrology and Earth System Sciences 21 , 589–615 (2017).

Beck, H. E. et al . MSWEP V2 Global 3-Hourly 0.1° Precipitation: Methodology and Quantitative Assessment. Bulletin of the American Meteorological Society 100 , 473–500 (2019).

Tang, X., Zhang, J., Gao, C., Ruben, G. B. & Wang, G. Assessing the Uncertainties of Four Precipitation Products for Swat Modeling in Mekong River Basin. Remote Sensing 11 , 304 (2019).

Kikstra, J. et al . The IPCC Sixth Assessment Report WGIII climate assessment of mitigation pathways: from emissions to global temperatures . https://doi.org/10.5194/egusphere-2022-471 (2022).

O’Neill, B. C. et al . The Scenario Model Intercomparison Project (ScenarioMIP) for CMIP6. Geoscientific Model Development 9 , 3461–3482 (2016).

Hausfather, Z. & Peters, G. P. Emissions – the ‘business as usual’ story is misleading. Nature 577 , 618–620 (2020).

Hiebert, J., Cannon, A. J., Murdock, T., Sobie, S. & Werner, A. ClimDown: Climate Downscaling in R. Journal of Open Source Software 3 , 360 (2018).

Schulzweida, U., Kornblueh, L. & Quast, R. CDO - Climate Data Operators - Project Management Service (2009).

Taylor, K. E. Summarizing multiple aspects of model performance in a single diagram. Journal of Geophysical Research: Atmospheres 106 , 7183–7192 (2001).

Karl, T. R., Nicholls, N. & Ghazi, A. CLIVAR/GCOS/WMO Workshop on Indices and Indicators for Climate Extremes Workshop Summary. in Weather and Climate Extremes: Changes, Variations and a Perspective from the Insurance Industry (eds. Karl, T. R., Nicholls, N. & Ghazi, A.) 3–7, https://doi.org/10.1007/978-94-015-9265-9_2 (Springer Netherlands, 1999).

Gebrechorkos, S. H. et al . Variability and changes in hydrological drought in the Volta Basin, West Africa. Journal of Hydrology: Regional Studies 42 , 101143 (2022).

Gebrechorkos, S. H. et al . Global High-Resolution Drought Indices for 1981-2022. Earth System Science Data Discussions 1–28, https://doi.org/10.5194/essd-2023-276 (2023).

Beck, H. E. et al . Global-scale evaluation of 22 precipitation datasets using gauge observations and hydrological modeling. Hydrology and Earth System Sciences 21 , 6201–6217 (2017).


Dee, D. P. et al . The ERA-Interim reanalysis: configuration and performance of the data assimilation system. Quarterly Journal of the Royal Meteorological Society 137 , 553–597 (2011).

Kobayashi, S. et al . The JRA-55 Reanalysis: General Specifications and Basic Characteristics. Journal of the Meteorological Society of Japan. Ser. II 93 , 5–48 (2015).

Saha, S. et al . The NCEP Climate Forecast System Reanalysis. Bull. Amer. Meteor. Soc. 91 , 1015–1058 (2010).

Bi, D. et al . Configuration and spin-up of ACCESS-CM2, the new generation Australian Community Climate and Earth System Simulator Coupled Model. JSHESS 70 , 225–251 (2020).

Wu, T. et al . The Beijing Climate Center Climate System Model (BCC-CSM): the main progress from CMIP5 to CMIP6. Geoscientific Model Development 12 , 1573–1600 (2019).

Hurrell, J. W. et al . The Community Earth System Model: A Framework for Collaborative Research. Bulletin of the American Meteorological Society 94 , 1339–1360 (2013).

Cherchi, A. et al . Global Mean Climate and Main Patterns of Variability in the CMCC-CM2 Coupled Model. Journal of Advances in Modeling Earth Systems 11 , 185–209 (2019).

Delworth, T. L. et al . GFDL’s CM2 Global Coupled Climate Models. Part I: Formulation and Simulation Characteristics. Journal of Climate 19 , 643–674 (2006).

Collins, W. J. et al . Development and evaluation of an Earth-System model – HadGEM2. Geoscientific Model Development 4 , 1051–1075 (2011).

Raghavan, K. et al . The IITM Earth System Model (IITM ESM) . (2021).

Volodin, E. M., Dianskii, N. A. & Gusev, A. V. Simulating present-day climate with the INMCM4.0 coupled model of the atmospheric and oceanic general circulations. Izv. Atmos. Ocean. Phys. 46 , 414–431 (2010).

Dufresne, J.-L. et al . Climate change projections using the IPSL-CM5 Earth System Model: from CMIP3 to CMIP5. Clim Dyn 40 , 2123–2165 (2013).

Lee, J. et al . Evaluation of the Korea Meteorological Administration Advanced Community Earth-System model (K-ACE). Asia-Pacific J Atmos Sci 56 , 381–395 (2020).

Watanabe, S. et al . MIROC-ESM 2010: model description and basic results of CMIP5-20c3m experiments. Geoscientific Model Development 4 , 845–872 (2011).

Reick, C. H., Raddatz, T., Brovkin, V. & Gayler, V. Representation of natural and anthropogenic land cover change in MPI-ESM. Journal of Advances in Modeling Earth Systems 5 , 459–482 (2013).

Yukimoto, S. et al . A New Global Climate Model of the Meteorological Research Institute: MRI-CGCM3 —Model Description and Basic Performance—. Journal of the Meteorological Society of Japan. Ser. II 90A , 23–64 (2012).

Iversen, T. et al . The Norwegian Earth System Model, NorESM1-M – Part 2: Climate response and scenario projections. Geoscientific Model Development 6 , 389–415 (2013).

Senior, C. A. et al . U.K. Community Earth System Modeling for CMIP6. Journal of Advances in Modeling Earth Systems 12 , e2019MS002004 (2020).



Acknowledgements

This work is part of the Evolution of Global Flood Hazard and Risk (EVOFLOOD) project [NE/S015817/1] supported by the Natural Environment Research Council (NERC). We acknowledge the Centre for Environmental Data Analysis (CEDA) for storing the downscaled data. We thank JASMIN (UK’s data analysis facility for environmental science), University of Southampton (IRIDIS) and the University of Oxford (ARC) and their team members for providing access to the High-Performance Computing (HPC) systems that were used to perform the downscaling process undertaken herein.

Author information

Authors and affiliations.

School of Geography and Environmental Science, University of Southampton, Southampton, SO17 1BJ, UK

Solomon Gebrechorkos, Julian Leyland, Andrew J. Tatem & Stephen E. Darby

School of Geography and the Environment, University of Oxford, Oxford, UK

Solomon Gebrechorkos, Louise Slater & Michel Wortmann

School of Applied Sciences, University of Brighton, Sussex, BN2 4AT, Brighton, UK

Philip J. Ashworth

Department of Geography, Faculty of Environment, Science and Economy, University of Exeter, Exeter, EX4 4RJ, UK

Georgina L. Bennett, Andrew Nicholas & Ellie Vahidi

School of Geographical & Earth Sciences, University of Glasgow, Glasgow, UK

Richard Boothroyd

Geography and Environmental Science, University of Reading, Reading, UK

Hannah Cloke & Helen Griffith

Energy and Environment Institute, University of Hull, Hull, UK

Pauline Delorme, Stuart McLelland & Daniel R. Parsons

Department of Geography, Durham University, Lower Mountjoy, South Road, Durham, DH1 3LE, UK

Richard Hardy

School of Geographical Sciences, University of Bristol, Bristol, BS8 1SS, UK

Laurence Hawker & Jeffrey Neal


Contributions

J.L. and S.G. conceived the study, with input from all co-authors. S.G. led the work and performed the downscaling and data processing, and J.L. & L.S. assisted with computing resources and data storage. S.G. carried out the testing and technical validation of the downscaled data. All co-authors contributed to the development (writing and editing) of the manuscript.

Corresponding author

Correspondence to Solomon Gebrechorkos .

Ethics declarations

Competing interests.

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.


Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/ .


About this article

Cite this article.

Gebrechorkos, S., Leyland, J., Slater, L. et al. A high-resolution daily global dataset of statistically downscaled CMIP6 models for climate impact analyses. Sci Data 10 , 611 (2023). https://doi.org/10.1038/s41597-023-02528-x


Received : 14 February 2023

Accepted : 31 August 2023

Published : 11 September 2023

DOI : https://doi.org/10.1038/s41597-023-02528-x


Original research article: Spatio-temporal downscaling of climate data using convolutional and error-predicting neural networks

- 1 Department of Computer Science, ETH Zurich, Zurich, Switzerland

- 2 Department of Computer Science, Friedrich-Alexander-Universität Erlangen-Nürnberg, Erlangen, Germany

- 3 Department of Atmospheric and Cryospheric Science, University of Innsbruck, Innsbruck, Austria

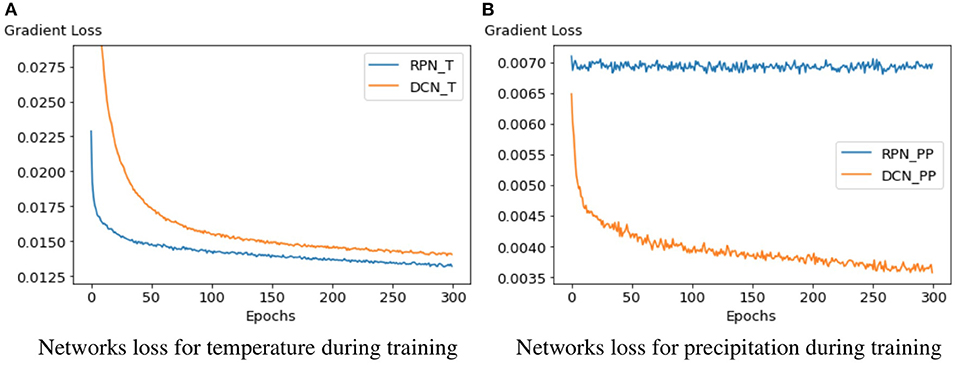

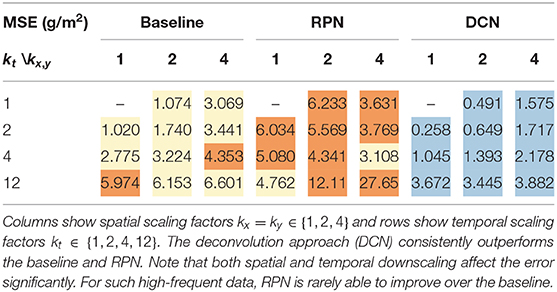

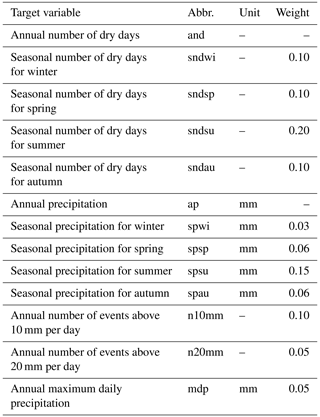

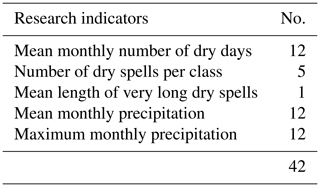

Numerical weather and climate simulations nowadays produce terabytes of data, and the data volume continues to increase rapidly, since an increase in resolution greatly benefits the simulation of weather and climate. In practice, however, data is often available only at lower resolution, for many practical reasons, such as data coarsening to meet memory constraints, limited computational resources, favoring multiple low-resolution ensemble simulations over a few high-resolution simulations, and the limits of sensing instruments in observations. To enable more insightful analysis, we investigate the capabilities of neural networks to reconstruct high-resolution data from given low-resolution simulations. For this, we phrase the data reconstruction as a super-resolution problem from multiple data sources, tailored toward meteorological and climatological data. We therefore investigate supervised machine learning using multiple deep convolutional neural network architectures to test the limits of data reconstruction for various spatial and temporal resolutions, low-frequency and high-frequency input data, and the generalization to numerical and observed data. Once such downscaling networks are trained, they serve two purposes: first, legacy low-resolution simulations can be downscaled to reconstruct high-resolution detail; second, past observations that were taken at lower resolutions can be increased to higher resolutions, opening new analysis possibilities. For the downscaling of high-frequency fields like precipitation, we show that error-predicting networks are far less suitable than deconvolutional neural networks due to their poor learning performance.
We demonstrate that deep convolutional downscaling has the potential to become a building block of modern weather and climate analysis in both research and operational forecasting, and show that the ideal choice of the network architecture depends on the type of data to predict, i.e., there is no single best architecture for all variables.

1. Introduction

A universal challenge of modern scientific computing is the rapid growth of data. For example, numerical weather and climate simulations are nowadays run at kilometer-scale resolution on global and regional domains (Prein et al., 2015), producing a data avalanche of hundreds of terabytes (Schär et al., 2020). In practice, however, data is often available only at lower resolution, for many practical reasons. For example, older archived simulations were computed at lower resolution or were reduced due to memory capacity constraints. Also, when allocating the computational budget, running multiple low-resolution ensemble simulations might be favored over a few high-resolution simulations. The loss of high-resolution information is a serious problem that must be addressed for two critical reasons. First, the loss of data limits any form of post-hoc data analysis, sacrificing valuable information. Second, an in-situ data analysis (Ma, 2009), i.e., the processing of the data on the simulation cluster, is not reproducible by the scientific community, since the original raw data has never been stored. Even if a large amount of computing resources is available for re-running entire simulations and outputting higher-frequency data for analysis, doing so still requires reproducible code, which is cumbersome to maintain due to changes in supercomputing architectures (Schär et al., 2020). For these reasons, algorithms that reconstruct data from partial information are a promising research direction to improve data analysis and reproducibility. The reconstruction of higher spatial and temporal resolutions is valuable not only for numerical simulations: meteorological observations are also only available at certain temporal resolutions and suffer from sparse observational networks.
In many applications, such as hydrology, higher temporal resolutions are urgently needed, for example to inform urban planners in the design of infrastructure that can cope with future precipitation amounts (Mailhot and Duchesne, 2010).

In climate science, deep learning has recently been applied to a number of different problems, including microphysics (Seifert and Rasp, 2020), radiative transfer (Min et al., 2020), convection (O'Gorman and Dwyer, 2018), forecasting (Roesch and Günther, 2019; Selbesoglu, 2019; Weyn et al., 2019), and empirical-statistical downscaling (Baño-Medina et al., 2020). For example, Yuval et al. (2021) applied deep learning to the parametrization of subgrid-scale atmospheric processes such as convection. They trained neural networks on high-resolution data and applied them as a parametrization in coarse-resolution simulations, demonstrating that this could decrease the computational cost without affecting the quality of the simulations.

In computer vision, the problem of increasing the resolution of an image is referred to as the single-image super-resolution problem (Yang et al., 2014). The super-resolution problem is inherently ill-posed, since infinitely many high-resolution images look identical after coarsening. The recovery of a higher resolution therefore usually requires assumptions and priors, which are nowadays learned from examples via deep learning, an approach that, in the context of climate data, has been shown to outperform simple linear baselines (Baño-Medina et al., 2020). For single-image super-resolution, Dong et al. (2015) introduced a convolutional neural network (CNN) architecture. Their method receives as input an image that was already downscaled with a conventional method, such as bicubic interpolation, and then predicts an improved result. The CNN is applied to patches of the image, which are combined into the final image. The prior selection of an interpolation method is not necessarily optimal, as it imposes assumptions and alters the data. Thus, both Mao et al. (2016) and Lu and Chen (2019) proposed variants that take the low-resolution image as input. Their architectures build on top of the well-known U-Net by Ronneberger et al. (2015). The networks learn, in an encoder-decoder fashion, a sub-pixel convolution filter or a deconvolution filter, respectively, which were shown to be equivalent by Shi et al. (2016). A multi-scale reconstruction of multiple resolutions has been proposed by Wang et al. (2019). Further, Wang et al. (2018) explored the use of generative adversarial networks (GANs). A GAN models the data distribution and samples one potential explanation rather than finding a blurred compromise of multiple explanations. These generative networks hallucinate plausible detail, which is easy to mistake for real information.
Despite the suitability of generative methods in light of perceptual quality metrics, the presence of possibly false information is a problem for scientific data analysis that has not yet been fully explored. For a single-image super-resolution benchmark in computer vision, we refer to Yang et al. (2019).
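The sub-pixel convolution mentioned above ends with a fixed rearrangement step: a convolution on the low-resolution grid produces r² feature maps per output channel, which are then interleaved into one map with r times the resolution along each axis. The sketch below shows only that rearrangement in NumPy (the learned convolution is omitted), assuming a channels-first (C·r², H, W) layout; the function name is ours.

```python
import numpy as np


def pixel_shuffle(features, r):
    """Periodic shuffle used by sub-pixel convolution (after Shi et al., 2016).

    Rearranges a low-resolution stack of C*r*r feature maps, shape
    (C*r*r, H, W), into C high-resolution maps of shape (C, H*r, W*r):
    out[c, h*r + dy, w*r + dx] = features[c*r*r + dy*r + dx, h, w].
    """
    c_r2, h, w = features.shape
    if c_r2 % (r * r) != 0:
        raise ValueError("number of feature maps must be divisible by r*r")
    c = c_r2 // (r * r)
    x = features.reshape(c, r, r, h, w)   # split the channel axis into (c, dy, dx)
    x = x.transpose(0, 3, 1, 4, 2)        # reorder to (c, h, dy, w, dx)
    return x.reshape(c, h * r, w * r)     # merge (h, dy) and (w, dx)


# Four 1x1 feature maps become one 2x2 high-resolution map.
feats = np.arange(4.0).reshape(4, 1, 1)
print(pixel_shuffle(feats, 2)[0])
```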

Next, we revisit deep learning-based downscaling in meteorology and climate science (cf. Baño-Medina et al., 2020). Rodrigues et al. (2018) took a supervised deep learning approach using CNNs to combine and spatially downscale multiple ensemble runs. Their approach is most promising in situations where the ensemble runs deviate only slightly from each other. In very diverse situations, a standard CNN gives blurry results, since it finds a least-squares compromise among the many possible explanations that fit the statistical variations. In computer vision terms, this approach can be considered a multi-view super-resolution problem, whereas we investigate the more challenging single-image super-resolution. Höhlein et al. (2020) studied multiple architectures for spatial downscaling of wind velocity data, including a U-Net-based architecture and deep networks with residual learning. The latter resulted in the best performance on the wind velocity fields that they studied. Following Vandal et al. (2017), they included additional variables, such as geopotential height and forecast surface roughness, as well as static high-resolution fields, such as the land-sea mask and topography. They demonstrated that the learning overhead of such a network is justified when considering the computation time difference between a low-resolution and a high-resolution simulation. Later, we show that residual networks do not generally outperform direct convolutional approaches on our data, since the best choice of network is data-dependent; we also include temporal downscaling in our experiments. Pouliot et al. (2018) studied the super-resolution enhancement of Landsat data. Vandal et al. (2017, 2019) stacked multiple CNNs to learn multiple higher spatial resolutions from a given precipitation field. Cheng et al. (2020) proposed a convolutional architecture with residual connections to downscale precipitation spatially.
In contrast, we also focus on the temporal downscaling of precipitation data, which is a more challenging problem due to motion and temporal variation. Toderici et al. (2017) solved the compression problem for high-resolution data and did not consider the downscaling problem. In principle, instead of storing a coarsened version of the high-resolution data (which our pipeline supports), one could store the compressed latent space as encoded by the network (as done by Toderici et al., 2017). The latter requires keeping the encoding/decoding code alongside the data and has the potential downside that many (old) codes have to be maintained, which could prove impractical in operational scenarios. Instead, we investigate a pipeline that starts from coarsened data. It is clear, however, that a learned encoder could provide better compression than simple coarsening. CNNs tend to produce oversmoothed results, as they produce a compromise among the possible explanations that satisfy the incomplete data. Different approaches have been tested to improve spatial detail, including relevance vector machines (Sachindra et al., 2018) and (conditioned) generative neural networks (Singh et al., 2019; Han and Wang, 2020; Stengel et al., 2020). While the latter improve visual quality, it is not yet clear how much the inherent hallucination impedes the interpretability of the result.
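Why a least-squares CNN produces a blurred compromise can be seen in a toy case. Assuming two equally likely high-resolution explanations of the same coarse value (a hypothetical two-cell example, not data from this study), the prediction that minimizes the expected squared error is their mean, a flat field that resembles neither explanation:

```python
import numpy as np

# Two equally likely high-resolution fields that both average to the same
# coarse value of 0.5: a sharp peak in the left cell or in the right cell.
explanations = np.array([[1.0, 0.0],
                         [0.0, 1.0]])


def expected_mse(prediction):
    """Mean squared error of one prediction, averaged over all explanations."""
    return np.mean((explanations - prediction) ** 2)


# The minimizer of the expected squared error is the mean of the explanations:
# a flat field that matches neither of them.
mse_optimal = explanations.mean(axis=0)
for candidate in (mse_optimal, explanations[0], explanations[1]):
    print(candidate, expected_mse(candidate))
```

A sharp prediction is penalized heavily whenever the other explanation is the true one, so the squared-error objective favors the smooth average.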

When considering the various meteorological variables at play, we observe large differences in the rates at which structures in the data evolve temporally, in how they correlate with spatial location (for example, convection near complex topography), and in how much spatial variation they exhibit. For this reason, we investigate and evaluate meteorological fields from both ends of the spectrum: low-frequency and high-frequency signals. Fundamentally, two different approaches are conceivable. A deep neural network could either predict a high-resolution field directly, or learn to correct the error of a strong baseline approach, thereby utilizing the strengths of contemporary methods. The success of the error-predicting approach thus depends on the quality of the baseline. We explore both types of architecture in light of the underlying signal frequency, as we hypothesize that for high-frequency data the baseline might not reach the quality needed to be useful for the error-predicting network. To avoid over-smoothing of the results, we augment the loss function to enforce the preservation of derivatives. Further, numerically simulated data and measured data have different signal-specific characteristics in terms of smoothness, occurrence of noise, and differentiability. As both domains, simulation and observation, benefit greatly from highly resolved data, we investigate spatial and temporal downscaling on both simulated and observed data.
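The derivative-preserving loss is described here only in words. One plausible form, assumed for illustration rather than taken from the paper, adds the squared error of forward finite differences along every space and time axis to the ordinary squared error. The NumPy version below only evaluates the loss; in training, the same expression would have to be written in the deep learning framework so that it can be differentiated. The `weight` parameter is hypothetical.

```python
import numpy as np


def derivative_preserving_loss(pred, target, weight=1.0):
    """Squared error on values plus squared error on finite differences.

    Forward differences are taken along every axis (space and time), so a
    smooth prediction that matches the values on average but misses sharp
    gradients is penalized. `weight` balances the two terms (assumed).
    """
    value_term = np.mean((pred - target) ** 2)
    grad_term = sum(
        np.mean((np.diff(pred, axis=a) - np.diff(target, axis=a)) ** 2)
        for a in range(pred.ndim)
    )
    return value_term + weight * grad_term


target = np.array([0.0, 1.0, 0.0])   # a sharp peak
smooth = np.full(3, 1.0 / 3.0)       # same mean, no gradients
print(derivative_preserving_loss(smooth, target, weight=0.0))  # value term only
print(derivative_preserving_loss(smooth, target, weight=1.0))  # plus derivative term
```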

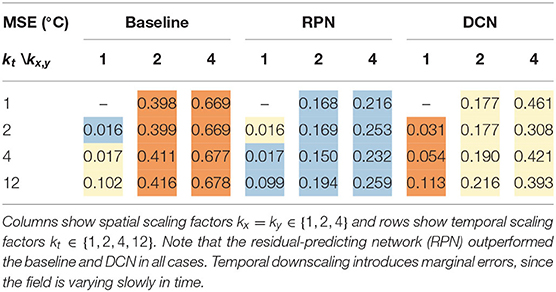

2. Method and Data

Formally, we aim to downscale a time-dependent meteorological scalar field $s(x, y, t)$ from a low number of grid points $X \times Y \times T$ to a higher number of grid points $\bar{X} \times \bar{Y} \times \bar{T}$, with $\bar{X} = k_x X$, $\bar{Y} = k_y Y$, and $\bar{T} = k_t T$. Here, $k_x$, $k_y$, and $k_t$ are called the downscaling factors. We approach the problem through supervised deep learning, i.e., at training time we carefully prepare ground-truth pairs of low-resolution and high-resolution scalar field patches. A patch is a randomly cropped space-time region from the meteorological data. Convolutional neural networks are then trained to recover the high-resolution patch from a given low-resolution patch. Using patches enables direct control over the batch size, which is an important hyper-parameter during training, as it influences loss convergence. Since our network architectures are convolutional, the networks can later be applied to full domains, i.e., cropping into patches is not necessary at inference time. We follow prior network architectures based on the U-Net by Ronneberger et al. (2015): one, called UnetSR by Lu and Chen (2019), is an end-to-end network that directly predicts the downscaled output; the other, called REDNet by Mao et al. (2016), is a residual prediction network. Both networks receive trivially downscaled data as input and have an encoder-decoder architecture in which skip connections link the feature maps of the encoder to their mirrored counterparts in the decoder. In the following, we refer to our residual predicting network as RPN and to the end-to-end deconvolution approach as DCN. Before explaining the network architectures in detail, we introduce the data and explain the coarsening of high-resolution data to obtain ground-truth pairs for the training process.
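The construction of training pairs can be sketched as follows. The snippet crops a random space-time patch from a high-resolution field, coarsens it by the downscaling factors k_x, k_y, k_t, and forms the targets of the two network types. Block averaging as the coarsening operator and nearest-neighbor repetition as the "trivial" downscaling baseline are assumptions made for this sketch, and all function names are hypothetical.

```python
import numpy as np


def coarsen(patch, kx, ky, kt):
    """Block-average a (Xbar, Ybar, Tbar) patch by integer downscaling factors."""
    xb, yb, tb = patch.shape
    x, y, t = xb // kx, yb // ky, tb // kt
    blocks = patch[: x * kx, : y * ky, : t * kt].reshape(x, kx, y, ky, t, kt)
    return blocks.mean(axis=(1, 3, 5))


def trivial_upsample(low, kx, ky, kt):
    """Nearest-neighbor repetition back onto the high-resolution grid."""
    return low.repeat(kx, axis=0).repeat(ky, axis=1).repeat(kt, axis=2)


def sample_pair(field, patch_shape, factors, rng):
    """Crop a random space-time patch and return (low-res, high-res) patches."""
    px, py, pt = patch_shape          # high-resolution patch size
    nx, ny, nt = field.shape
    i = rng.integers(0, nx - px + 1)
    j = rng.integers(0, ny - py + 1)
    k = rng.integers(0, nt - pt + 1)
    high = field[i : i + px, j : j + py, k : k + pt]
    return coarsen(high, *factors), high


rng = np.random.default_rng(0)
field = rng.normal(size=(64, 64, 48))     # synthetic stand-in for s(x, y, t)
factors = (4, 4, 3)                       # k_x, k_y, k_t
low, high = sample_pair(field, (16, 16, 12), factors, rng)
baseline = trivial_upsample(low, *factors)
residual_target = high - baseline         # what an RPN-style network would learn
direct_target = high                      # what a DCN-style network would learn
print(low.shape, high.shape, baseline.shape)
```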

Here we describe the two data sets on which we apply and test the method. The data originates from two sources: climate model simulations and observations.

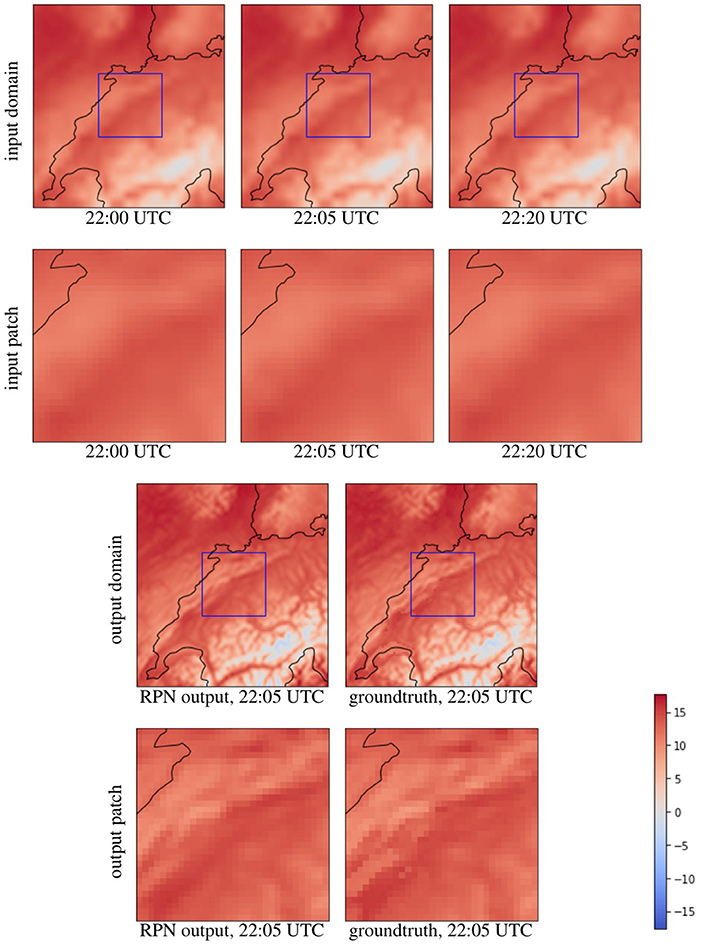

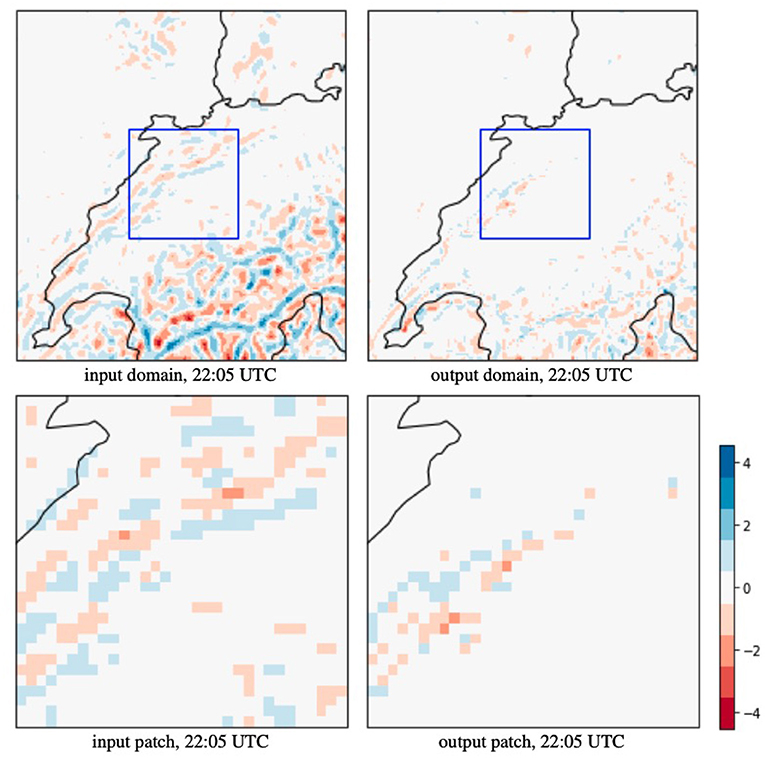

2.1.1. Climate Model Data

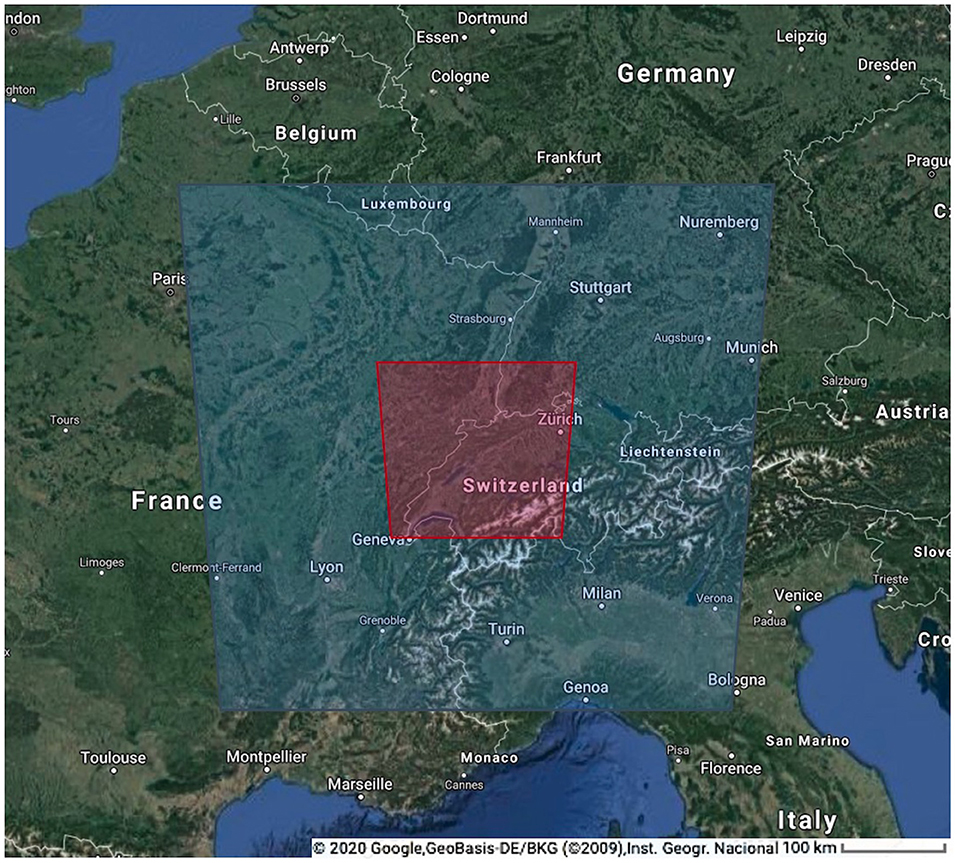

The method is tested on climate data produced by the regional climate model COSMO (Consortium for Small-scale Modeling). COSMO is a non-hydrostatic, limited-area atmospheric model designed for applications from the meso-β to the meso-γ scales (Steppeler et al., 2003). The data has been produced by a version of COSMO that is capable of running on GPUs (Fuhrer et al., 2014), and has been presented and evaluated in Hentgen et al. (2019). The climate simulation was conducted with a horizontal grid spacing of 2.2 km (see Leutwyler et al., 2017; Hentgen et al., 2019). The red box in Figure 1 shows the domain that we use for the temperature predictions. Since precipitation can be close to zero in many regions of the domain, we expanded the domain to the blue box for the precipitation experiments. We used temperature and precipitation fields available every 5 min for June and July 2008.

Figure 1. The analysis region over central Europe used in this study, indicated by the red box (temperature) and the blue box (precipitation).

2.1.2. Observations

The observational data set used in this study is a gridded precipitation dataset for the year 2004 covering Switzerland. Its horizontal grid spacing is 1 km (Wüest et al., 2010), and it is available at hourly frequency. It is generated by combining station data with radar-based disaggregation. The data are often used for climate model evaluation (see, e.g., Ban et al., 2014).

2.2. Supervised Machine Learning for Downscaling of Meteorological Data

Let $\mathbf{X}$ be a coarse patch with $X \times Y \times T$ regular grid points, and let $\mathbf{Y}$ be the corresponding downscaled patch with $\bar{X} \times \bar{Y} \times \bar{T}$ grid points. Further, let $f(\mathbf{Y})$ be a map that coarsens a high-resolution patch $\mathbf{Y}$ into its corresponding low-resolution patch $\mathbf{X}$:

$$\mathbf{X} = f(\mathbf{Y})$$

The inverse problem $f^{-1}$, i.e., the downscaling problem, is usually ill-posed, since the map $f$ is not bijective: while any high-resolution patch can be turned into a unique low-resolution patch via coarsening, the reverse has multiple possible solutions, i.e., $f$ is surjective but not injective.
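To make the non-injectivity concrete, here is a tiny NumPy example, assuming block averaging as the coarsening map $f$ (the averaging choice is discussed in section 2.2.2; the helper `coarsen_mean` is hypothetical). Two different high-resolution patches coarsen to the same low-resolution value, so the coarse patch alone cannot tell them apart.

```python
import numpy as np


def coarsen_mean(field, k):
    """Block-average a 2D field by an integer factor k along both axes."""
    ny, nx = field.shape
    return field.reshape(ny // k, k, nx // k, k).mean(axis=(1, 3))


# Two distinct 2 x 2 high-resolution patches ...
y_uniform = np.array([[1.0, 1.0],
                      [1.0, 1.0]])
y_checker = np.array([[0.0, 2.0],
                      [2.0, 0.0]])

# ... map to the identical 1 x 1 low-resolution patch [[1.0]].
x_uniform = coarsen_mean(y_uniform, 2)
x_checker = coarsen_mean(y_checker, 2)
```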

However, not every possible solution to $\mathbf{Y} = f^{-1}(\mathbf{X})$ is physically meaningful and realizable in real-world data. It therefore makes sense to construct the inverse map $f^{-1}$ in a data-driven manner from real-world data, so that it only includes mappings that have actually been seen during training, which is the key idea behind supervised machine learning. The inverse map is parameterized by a composition of layers, each of which computes weighted sums of its inputs and passes them through a non-linear function. This composition, a deep neural network, is a differentiable, highly non-linear mapping between the input and output spaces and can be trained iteratively via gradient descent.

The success of a deep neural network hinges on three key criteria:

1. the architecture of the neural network combines low-frequent and high-frequent information, and the gradients $\partial \mathbf{Y} / \partial \mathbf{X}$ are well defined to facilitate the training process;

2. the training data are of high quality and expressive, i.e., they explore the space of possible mappings sufficiently and the mappings are sufficiently distinct; and

3. the loss function expresses the desired goal well, and its energy landscape is well-behaved to allow stable (stochastic) gradient descent.

In the following subsections, we elaborate on the network architectures in section 2.2.1, the training data generation in section 2.2.2, and the training procedure and loss function in section 2.2.3.

2.2.1. Network Architecture

Meteorological variables such as temperature and precipitation differ vastly in their spatial and temporal variability. While temperature varies slowly in space and time, i.e., it is a comparatively low-frequent signal, precipitation is far more localized and varies faster, i.e., it is a high-frequent signal that is harder to predict with conventional downscaling techniques. To account for these characteristics, we design two separate convolutional neural networks to represent the inverse mapping $f^{-1}$.

2.2.1.1. Low-Frequent Data: Residual-Predicting Network (RPN)

If the data have low spatial and temporal variability, a conventional downscaling technique may already come close to the desired solution. Rather than learning the entire downscaling process, it is then easier to correct the conventional downscaling method, which is the core concept of residual learning (cf. Dong et al., 2015). Let $\hat{f}^{-1}$ be an existing downscaling technique, such as trilinear interpolation in space-time. Then, the inverse $f^{-1}(\mathbf{X})$ can be formed by:

$$f^{-1}(\mathbf{X}) = \hat{f}^{-1}(\mathbf{X}) + r\left(\hat{f}^{-1}(\mathbf{X})\right)$$

where our neural network only learns to predict the residual $r(\hat{f}^{-1}(\mathbf{X}))$ of the trilinear downscaling method. For this, we follow the architecture of Mao et al. (2016), who used an encoder-decoder architecture, detailed further below. The advantage of this approach is that improving over the existing trilinear baseline is comparatively easier than learning a downscaling method from scratch. If $\hat{f}^{-1}(\mathbf{X})$ performs poorly, for example because the scalar field exhibits too much temporal variability, the approach described next performs better.
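A minimal NumPy sketch of this residual formulation, assuming separable linear interpolation as the baseline $\hat{f}^{-1}$, with each coarse value placed at the center of its block of fine cells. The function names and the `residual_net` callable (a stand-in for the trained network $r$) are hypothetical. `np.interp` holds values constant beyond the outermost coarse samples, so the baseline never leaves the value range of its input.

```python
import numpy as np


def linear_upsample_axis(a, k, axis):
    """Linearly interpolate `a` by an integer factor k along one axis.

    Coarse sample i is placed at the center of fine cells i*k .. i*k + k - 1.
    np.interp clamps beyond the outermost samples, so no new extrema appear.
    """
    n = a.shape[axis]
    coarse_pos = (np.arange(n) + 0.5) * k - 0.5
    fine_pos = np.arange(n * k)
    return np.apply_along_axis(lambda v: np.interp(fine_pos, coarse_pos, v), axis, a)


def trilinear_upsample(x, kt, ky, kx):
    """Baseline f_hat^{-1}: separable linear interpolation in (t, y, x)."""
    for axis, k in zip((0, 1, 2), (kt, ky, kx)):
        x = linear_upsample_axis(x, k, axis)
    return x


def rpn_training_target(x_coarse, y_high, kt, ky, kx):
    """The residual the network is trained to predict: Y - f_hat^{-1}(X)."""
    return y_high - trilinear_upsample(x_coarse, kt, ky, kx)


def rpn_predict(x_coarse, residual_net, kt, ky, kx):
    """f^{-1}(X) = f_hat^{-1}(X) + r(f_hat^{-1}(X))."""
    baseline = trilinear_upsample(x_coarse, kt, ky, kx)
    return baseline + residual_net(baseline)
```

During training, the network would be fitted to `rpn_training_target`; at inference, `rpn_predict` adds its output back onto the baseline.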

2.2.1.2. High-Frequent Data: Deconvolutional Network (DCN)

Consider a case in which too much motion occurs between time steps, e.g., a cloud is transported to a new location that does not overlap with its previous location. Then, trilinear downscaling might interpolate two weaker clouds in the time step in between, at the original and at the final location, rather than a single translating cloud in the middle. Unlike in the previous case, linear downscaling in time might not come close enough to the solution to benefit from residual prediction. In such cases, where a conventional temporal downscaling method is not helpful, we learn the partial mapping $p(\tilde{f}^{-1}(\mathbf{X}))$ from spatially downscaled data to the high resolution:

$$f^{-1}(\mathbf{X}) = p\left(\tilde{f}^{-1}(\mathbf{X})\right)$$

where $\tilde{f}^{-1}(\mathbf{X})$ performs only spatial downscaling using bilinear interpolation, but no temporal downscaling, and where $p(\tilde{f}^{-1}(\mathbf{X}))$ performs the temporal downscaling and improves over the result of $\tilde{f}^{-1}$. Since $\tilde{f}^{-1}(\mathbf{X})$ does not interpolate information in time, a residual prediction is no longer applicable. Hence, the high-resolution data are predicted directly. For the network architecture, we follow a typical U-Net architecture (Ronneberger et al., 2015), which is a general design not limited to downscaling problems. In our downscaling setting, the input data are spatially downscaled with a bilinear baseline method, as proposed by Lu and Chen (2019) for image super-resolution. In the following, we explain how the networks are structured and which modifications improved the performance for meteorological downscaling problems.
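The ghosting problem that motivates the DCN can be reproduced in one dimension. This toy NumPy example uses a hypothetical 16-cell grid and an idealized box-shaped "cloud" that moves eight cells between two coarse time steps; linear interpolation at the midpoint produces two half-intensity copies instead of one cloud halfway along its path.

```python
import numpy as np

nx = 16
frame0 = np.zeros(nx)
frame0[2:4] = 1.0          # cloud occupies cells 2-3 at the first time step
frame1 = np.zeros(nx)
frame1[10:12] = 1.0        # cloud has moved to cells 10-11 at the next time step

t = 0.5                    # relative time of the frame to be reconstructed
linear_mid = (1.0 - t) * frame0 + t * frame1   # linear temporal interpolation

true_mid = np.zeros(nx)
true_mid[6:8] = 1.0        # a cloud translating at constant speed would sit here
```

The total amount is conserved, but the spatial structure is wrong, which is why the DCN receives the coarse temporal resolution and learns the temporal downscaling itself.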

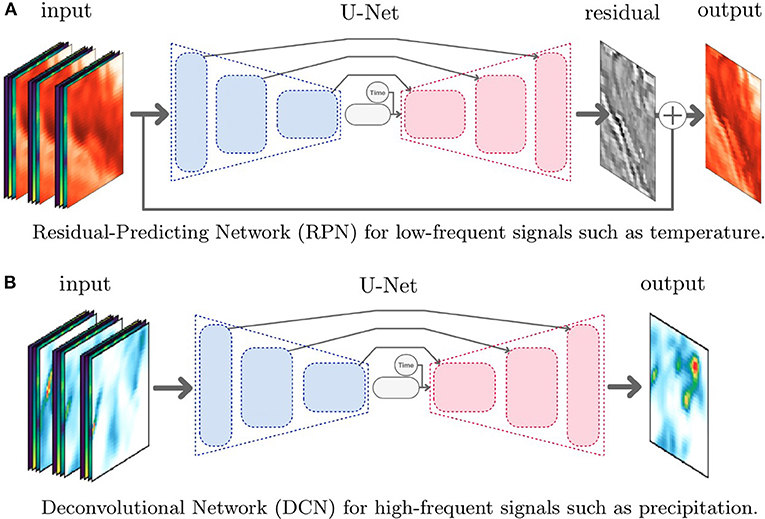

2.2.1.3. Layers and Skip Connections

The neural network architectures are illustrated in Figure 2. Both architectures consist of convolutional layers only. Among recent convolutional neural network architectures, U-Nets (Ronneberger et al., 2015) are often the most promising approach. A U-Net extracts both low-frequency and high-frequency features from the data by repeatedly performing feature extraction and coarsening. In the so-called contraction phase, we successively apply two convolutional layers followed by a max-pooling layer to extract features and then reduce the resolution. To handle convolutions at the image boundaries, we use zero-padding and apply the convolutions with a stride of 1, i.e., every pixel of the input is the center of a convolution kernel exactly once. We repeat this structure four times, omitting the pooling layer the last time. Starting with 64 feature maps, we double the number of feature maps at each level until 512 feature maps are reached in the last level. These feature maps contain the information available to the subsequent step: the synthesis of the output in the expansion phase. In the expansion phase, the goal is to reconstruct a high-resolution image from all previously extracted features by iteratively increasing the number of grid points until the target resolution is reached. We do this with so-called UpSampling layers, which repeat each value to fill a larger data block, each followed by three convolutional layers. The key to success is to provide, at each level of the U-Net, the feature maps that were previously extracted at the same resolution during contraction. This is achieved by skip connections from the contraction to the expansion phase. Adding skip connections as in the U-Net of Ronneberger et al. (2015) has two positive effects. First, skip connections have been shown to smooth the loss landscape (cf. Li et al., 2018), which makes gradient descent during training easier. Second, they give access to the high-frequency information of earlier layers, which greatly helps to construct high-frequent outputs.
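The contraction-phase bookkeeping described above can be sketched as a small helper that lists the spatial size and number of feature maps per level. It assumes a pooling factor of 2 (not stated in the text) and tracks only the two spatial dimensions; `unet_contraction_shapes` is a hypothetical name.

```python
def unet_contraction_shapes(height, width, base_features=64, levels=4, pool=2):
    """Return (height, width, n_features) at each level of the contraction phase.

    Each level applies two zero-padded, stride-1 convolutions, which keep the
    spatial size, and then (except at the last level) a max-pooling layer that
    divides the spatial size by `pool`; the feature count doubles per level.
    """
    shapes = []
    h, w, features = height, width, base_features
    for level in range(levels):
        shapes.append((h, w, features))
        if level < levels - 1:  # no pooling after the last level
            h, w, features = h // pool, w // pool, features * 2
    return shapes


# For a 32 x 32 training patch, prints:
# [(32, 32, 64), (16, 16, 128), (8, 8, 256), (4, 4, 512)]
print(unet_contraction_shapes(32, 32))
```

The expansion phase walks this list in reverse; in the original U-Net, each skip connection concatenates the contraction feature maps of matching size onto the upsampled maps.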

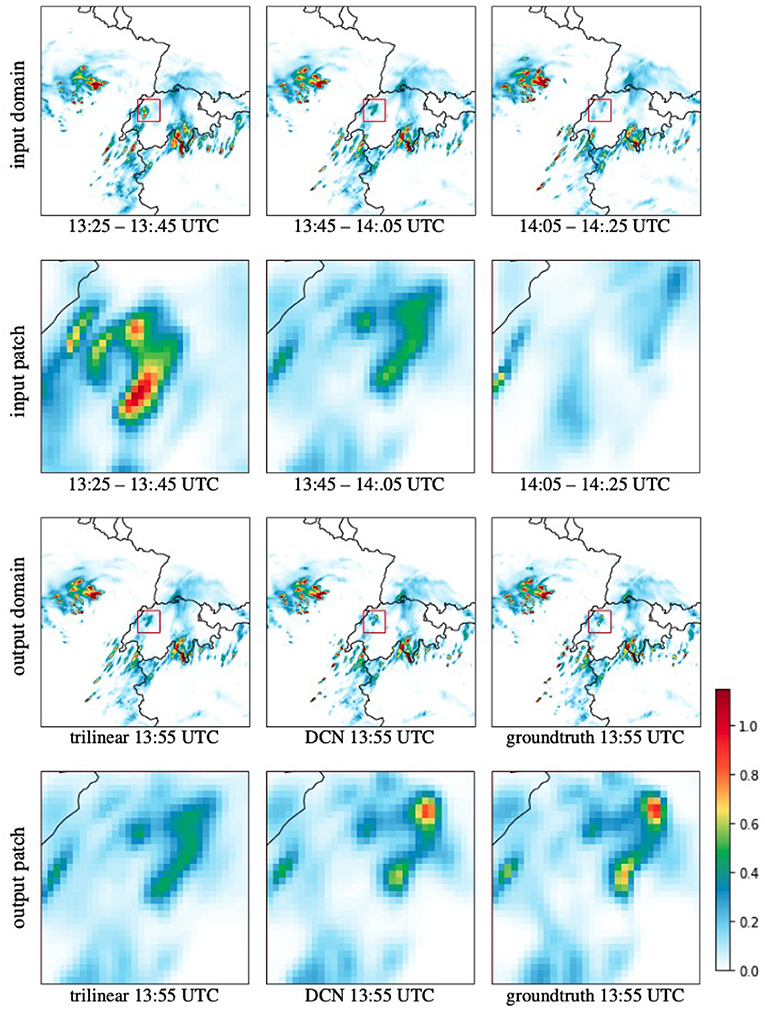

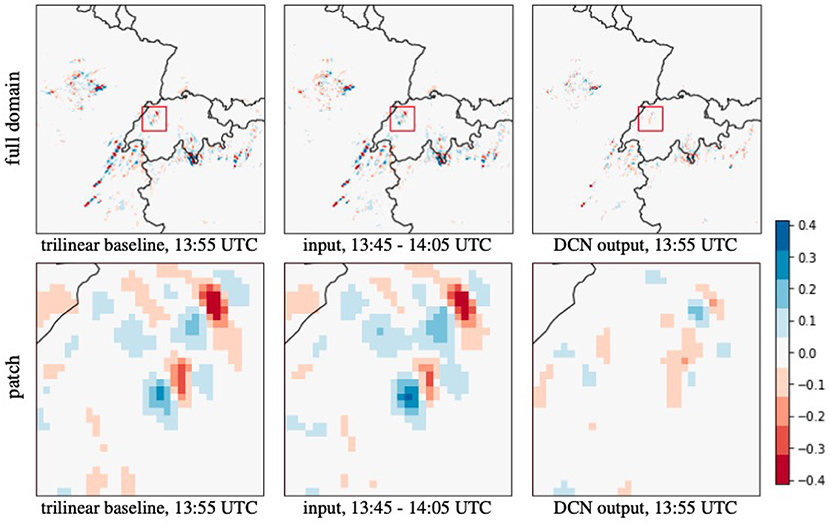

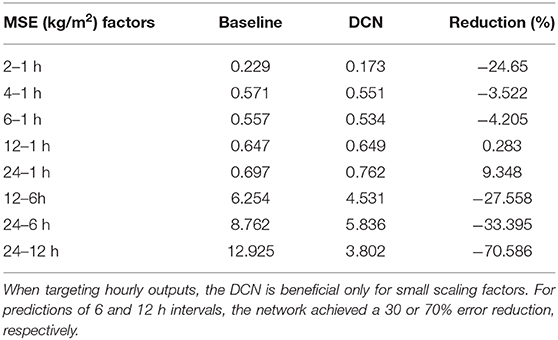

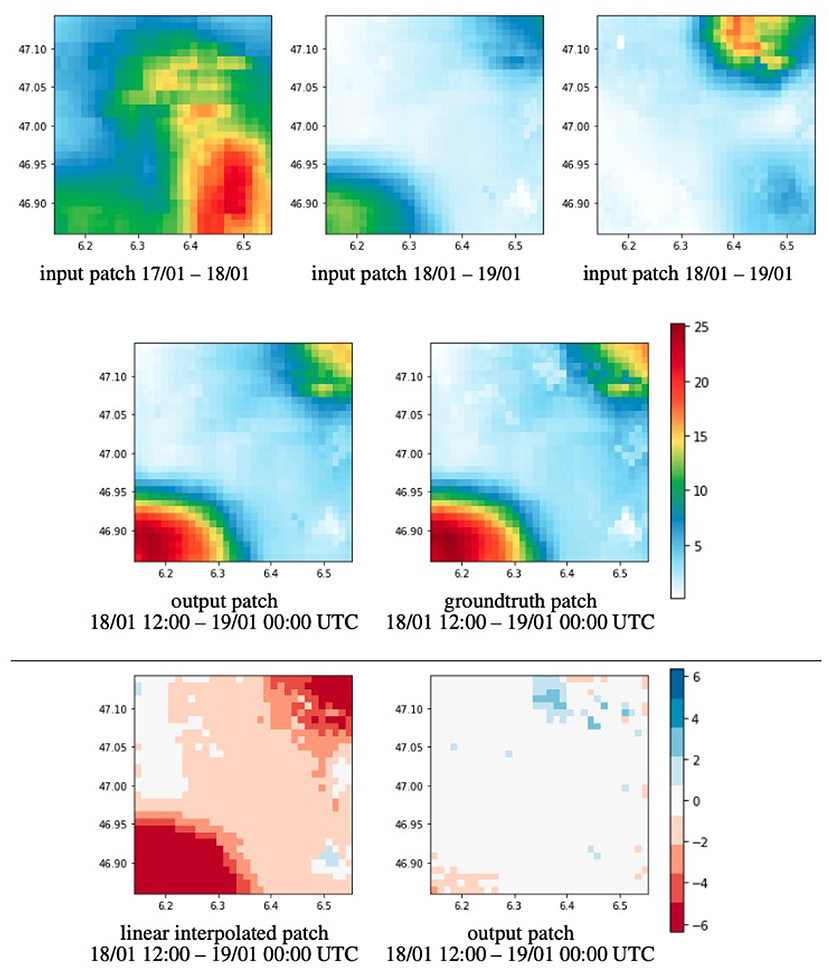

Figure 2. Illustrations of the convolutional neural network architectures for downscaling of low-frequent and high-frequent meteorological variables. Both architectures receive three time steps of the scalar field to predict (temperature or precipitation) and additional fields (longitude, latitude, surface height) as input. For RPN, the input data are downscaled conventionally in both space and time. For DCN, the input data are downscaled conventionally only in space. In both networks, the time variable is appended in the latent space and indicates the relative time between the input frames at which the output should be produced. (A) Residual-predicting network (RPN) for low-frequent signals, such as temperature. (B) Deconvolutional network (DCN) for high-frequent signals, such as precipitation.

2.2.1.4. Inputs and Outputs

Since we intend to downscale data in both space and time, we provide the network with both spatial and temporal information. Thus, the input to the model is a 4D data block: one dimension holds the time steps, two hold the spatial information, and the last holds the so-called channels. Unlike the conventional U-Net, we experimented with additional data channels that provide more information to resolve ambiguities in the inverse map $f^{-1}$. The effectiveness of additional data channels for downscaling was already demonstrated by Vandal et al. (2017) and Höhlein et al. (2020). These additional channels include latitude and longitude, so that the network can learn regional weather patterns, and altitude, to include dependencies on the topography. For example, we observed that adding these fields improved the residual by 7.3% for a precipitation downscaling with $k_x = k_y = k_t = 4$. In addition, we provide the network with the relative time of the output between the adjacent known time steps. Since this time variable is a constant rather than a spatially varying map, unlike all the other additional inputs, we append it to the latent space, i.e., the feature map at the end of the feature extraction phase. Other ways to include the time are conceivable, but including it as a separate constant slice in the input would increase the network size, which is why we opted for appending it to the latent space. Our data cover a single season; adding the day of the year as an additional variable to learn seasonal effects would be straightforward.
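A minimal NumPy sketch of how such an input block and the latent-space time channel could be assembled. It assumes a channels-last layout, the target variable as channel 0, and the static fields repeated for each input time step; the function names are hypothetical, and tiling the scalar time over the latent grid is one plausible way to "append" it, not necessarily the authors' exact implementation.

```python
import numpy as np


def build_input(field_steps, lon, lat, height):
    """Assemble the 4D input block (time, y, x, channels).

    field_steps: (T, Y, X) time steps of the variable to downscale.
    lon, lat, height: (Y, X) static maps, repeated for every time step.
    Returns an array of shape (T, Y, X, 4).
    """
    n_t = field_steps.shape[0]
    static = np.stack([lon, lat, height], axis=-1)            # (Y, X, 3)
    static = np.broadcast_to(static, (n_t,) + static.shape)   # (T, Y, X, 3)
    return np.concatenate([field_steps[..., None], static], axis=-1)


def append_time_to_latent(latent, rel_time):
    """Append the scalar relative time as one extra, spatially constant channel.

    latent: feature block at the end of the contraction phase, channels last.
    """
    t_channel = np.full(latent.shape[:-1] + (1,), rel_time, dtype=latent.dtype)
    return np.concatenate([latent, t_channel], axis=-1)
```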

The output of the network depends on the chosen architecture. As described above, the RPN predicts the error residual for low-frequent data such as the temperature field, whereas the DCN directly predicts the high-resolution output for high-frequent data such as precipitation. Since both networks are fully convolutional, they can be applied at inference time to the full input domain at once.

2.2.2. Training Data Generation

Supervised machine learning requires groundtruth pairs of low-resolution and corresponding high-resolution patches. In the following, we describe how these groundtruth pairs are generated from the given high-resolution meteorological data. The coarsening operation depends on the units of the data. When the units remain the same (e.g., absolute temperature in K), we only average. When the units change (e.g., total precipitation depends on the time step), we average and convert the units afterwards; for precipitation, coarsening in time thus amounts to accumulating the precipitation values. Generally, we recommend using an averaging operation for coarsening, since a max operation or simple subsampling would cause aliasing artifacts that would not be present if the data had been simulated or measured at lower resolution.

For the residual-predicting network (RPN), we downscale the low-resolution data with conventional trilinear interpolation and feed the result to the network, which predicts the residual (cf. section 2.2.1.1). We use linear interpolation to avoid extrapolating beyond the minima and maxima of the input; any other existing downscaling method, such as cubic interpolation, could in principle be used instead. For the DCN, the network receives spatially downscaled input, as for the RPN. In the temporal direction, however, we input the coarse resolution, since linear interpolation would cause blending and ghosting artifacts that the network would have to learn to undo.

During training, we randomly crop patches of 32 × 32 grid points from the high-resolution and the (conventionally downscaled) low-resolution data. We separate the time sequence into a training period and a testing period to ensure that the training and testing sets are independent, using the last 10% of the time range for testing.
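The coarsening used to generate groundtruth pairs can be sketched with non-overlapping block averaging. For variables given as an amount per time step (such as precipitation), the temporal mean is multiplied by $k_t$, which equals accumulating over each coarse step. This minimal NumPy sketch assumes array dimensions divisible by the downscaling factors; `coarsen` is a hypothetical helper.

```python
import numpy as np


def coarsen(field, kt, ky, kx, accumulate_time=False):
    """Coarsen a (T, Y, X) field by non-overlapping block averaging.

    accumulate_time=True converts per-fine-step amounts (e.g. precipitation in
    mm per 5 min) to per-coarse-step amounts by multiplying the mean by kt,
    which equals summing over each block of kt time steps.
    """
    n_t, n_y, n_x = field.shape
    if n_t % kt or n_y % ky or n_x % kx:
        raise ValueError("field dimensions must be divisible by the downscaling factors")
    blocks = field.reshape(n_t // kt, kt, n_y // ky, ky, n_x // kx, kx)
    coarse = blocks.mean(axis=(1, 3, 5))
    if accumulate_time:
        coarse = coarse * kt
    return coarse
```

Averaging, rather than picking one sample per block, acts as a simple low-pass filter, which is what avoids the aliasing artifacts mentioned above.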

Since the input fields (temperature or precipitation, and longitude, latitude, and surface height) have different value ranges, we normalize all fields globally across the entire data set to the unit interval [0, 1], a common preprocessing step for neural networks. The scaling factors are stored so that the network outputs can be scaled back to physical units later.
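A minimal sketch of this global min-max normalization and its inverse, assuming one (lo, hi) pair per field computed once over the whole data set and a non-constant field (hi > lo); the function names are hypothetical.

```python
import numpy as np


def fit_minmax(data):
    """Global minimum and maximum over the entire data set (the stored scaling factors)."""
    return float(np.min(data)), float(np.max(data))


def normalize(data, lo, hi):
    """Map values linearly to [0, 1]."""
    return (data - lo) / (hi - lo)


def denormalize(data01, lo, hi):
    """Invert `normalize`, e.g. to bring network outputs back to physical units."""
    return data01 * (hi - lo) + lo
```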

2.2.3. Training Procedure and Loss

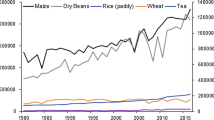

As the loss function, we measure the difference between the predicted result $\mathbf{Y}$ and the groundtruth $\bar{\mathbf{Y}}$. Convolutional neural networks are known to oversmooth their output. Hence, we measure the difference with an $L_1$ norm combined with a gradient loss, which penalizes differences not only in the values but also in the derivatives and thereby aids the reconstruction of higher-frequency details. We refer to Kim and Günther (2019) and Kim et al. (2019) for a discussion of the norms and weights of the gradient loss.
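One way to write such a combined loss, as a minimal NumPy sketch: the mean absolute error of the values plus the mean absolute error of forward finite differences along every axis of the patch. Taking differences along all axes (including time) and the single weight `grad_weight` are assumptions, since the exact norms and weights are deferred to the cited works; in training, the same expression would be written in the deep-learning framework so that it can be differentiated.

```python
import numpy as np


def l1_gradient_loss(pred, target, grad_weight=1.0):
    """L1 loss on values plus L1 loss on finite-difference gradients.

    grad_weight is a hypothetical weighting between the two terms.
    """
    value_term = np.mean(np.abs(pred - target))
    grad_term = 0.0
    for axis in range(pred.ndim):
        # Forward differences approximate the derivative along this axis.
        grad_term += np.mean(np.abs(np.diff(pred, axis=axis) - np.diff(target, axis=axis)))
    return value_term + grad_weight * grad_term
```

A constant offset is penalized only by the value term, whereas a prediction that is smoother than the target also incurs the gradient term, which counteracts oversmoothing.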