Hypothesis Testing - Chi Squared Test

Lisa Sullivan, PhD

Professor of Biostatistics

Boston University School of Public Health

Introduction

This module will continue the discussion of hypothesis testing, where a specific statement or hypothesis is generated about a population parameter, and sample statistics are used to assess the likelihood that the hypothesis is true. The hypothesis is based on available information and the investigator's belief about the population parameters. The specific tests considered here are called chi-square tests and are appropriate when the outcome is discrete (dichotomous, ordinal or categorical). For example, in some clinical trials the outcome is a classification such as hypertensive, pre-hypertensive or normotensive. We could use the same classification in an observational study such as the Framingham Heart Study to compare men and women in terms of their blood pressure status - again using the classification of hypertensive, pre-hypertensive or normotensive status.

The technique to analyze a discrete outcome uses what is called a chi-square test. Specifically, the test statistic follows a chi-square probability distribution. We will consider chi-square tests here with one, two and more than two independent comparison groups.

Learning Objectives

After completing this module, the student will be able to:

- Perform chi-square tests by hand

- Appropriately interpret results of chi-square tests

- Identify the appropriate hypothesis testing procedure based on type of outcome variable and number of samples

Tests with One Sample, Discrete Outcome

Here we consider hypothesis testing with a discrete outcome variable in a single population. Discrete variables take on two or more distinct responses or categories, and the responses can be ordered or unordered (i.e., the outcome can be ordinal or categorical). The procedure we describe here can be used for dichotomous (exactly 2 response options), ordinal or categorical discrete outcomes, and the objective is to compare the distribution of responses, or the proportions of participants in each response category, to a known distribution. The known distribution is derived from another study or report, and it is again important in setting up the hypotheses that the comparator distribution specified in the null hypothesis is a fair comparison. The comparator is sometimes called an external or a historical control.

In one sample tests for a discrete outcome, we set up our hypotheses against an appropriate comparator. We select a sample and compute descriptive statistics on the sample data. Specifically, we compute the sample size (n) and the proportions of participants in each response category.

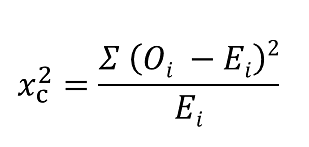

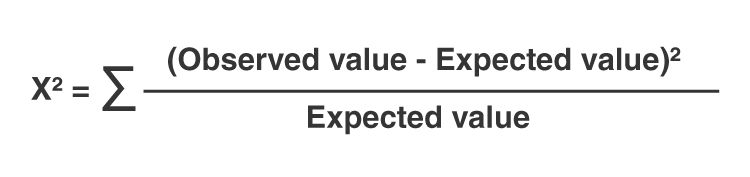

Test Statistic for Testing $H_0: p_1 = p_{10},\ p_2 = p_{20},\ \ldots,\ p_k = p_{k0}$

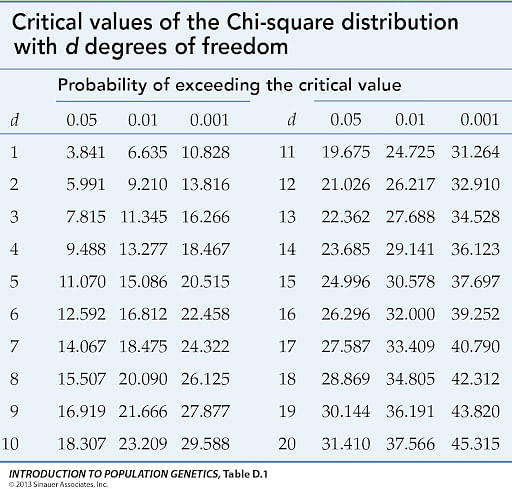

We find the critical value in a table of probabilities for the chi-square distribution with degrees of freedom (df) = k-1. In the test statistic, O = observed frequency and E = expected frequency in each of the response categories. The observed frequencies are those observed in the sample and the expected frequencies are computed as described below. $\chi^2$ (chi-square) is another probability distribution and ranges from 0 to ∞. The test statistic formula above is appropriate for large samples, defined as expected frequencies of at least 5 in each of the response categories.

When we conduct a $\chi^2$ test, we compare the observed frequencies in each response category to the frequencies we would expect if the null hypothesis were true. These expected frequencies are determined by allocating the sample to the response categories according to the distribution specified in $H_0$. This is done by multiplying the observed sample size (n) by the proportions specified in the null hypothesis ($p_{10}, p_{20}, \ldots, p_{k0}$). To ensure that the sample size is appropriate for the use of the test statistic above, we need to check that $\min(np_{10}, np_{20}, \ldots, np_{k0}) \geq 5$.
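The allocation described above can be sketched in a few lines of Python. This is a minimal illustration, not part of the original module: the function names and the sample values (n = 200 with proportions 0.5, 0.3, 0.2) are hypothetical, chosen only to show the arithmetic.

```python
def expected_counts(n, null_props):
    """Expected frequency in each category if H0 is true: E_i = n * p_i0."""
    if abs(sum(null_props) - 1.0) > 1e-9:
        raise ValueError("null proportions must sum to 1")
    return [n * p for p in null_props]


def sample_size_adequate(expected, minimum=5):
    """Large-sample condition: every expected frequency is at least `minimum`."""
    return min(expected) >= minimum


# Hypothetical illustration (not data from this module)
expected = expected_counts(200, [0.5, 0.3, 0.2])
print([round(e, 1) for e in expected])   # expected frequencies per category
print(sample_size_adequate(expected))    # True when every expected count >= 5
```

Checking the condition on the expected counts, rather than on the observed counts, matches the definition of a large sample given above.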

The test of hypothesis with a discrete outcome measured in a single sample, where the goal is to assess whether the distribution of responses follows a known distribution, is called the χ 2 goodness-of-fit test. As the name indicates, the idea is to assess whether the pattern or distribution of responses in the sample "fits" a specified population (external or historical) distribution. In the next example we illustrate the test. As we work through the example, we provide additional details related to the use of this new test statistic.

A University conducted a survey of its recent graduates to collect demographic and health information for future planning purposes as well as to assess students' satisfaction with their undergraduate experiences. The survey revealed that a substantial proportion of students were not engaging in regular exercise, many felt their nutrition was poor and a substantial number were smoking. In response to a question on regular exercise, 60% of all graduates reported getting no regular exercise, 25% reported exercising sporadically and 15% reported exercising regularly as undergraduates. The next year the University launched a health promotion campaign on campus in an attempt to increase healthy behaviors among undergraduates. The program included modules on exercise, nutrition and smoking cessation. To evaluate the impact of the program, the University again surveyed graduates and asked the same questions. The survey was completed by 470 graduates and the following data were collected on the exercise question:

- No Regular Exercise: 255

- Sporadic Exercise: 125

- Regular Exercise: 90

- Total: 470

Based on the data, is there evidence of a shift in the distribution of responses to the exercise question following the implementation of the health promotion campaign on campus? Run the test at a 5% level of significance.

In this example, we have one sample and a discrete (ordinal) outcome variable (with three response options). We specifically want to compare the distribution of responses in the sample to the distribution reported the previous year (i.e., 60%, 25%, 15% reporting no, sporadic and regular exercise, respectively). We now run the test using the five-step approach.

- Step 1. Set up hypotheses and determine level of significance.

The null hypothesis again represents the "no change" or "no difference" situation. If the health promotion campaign has no impact then we expect the distribution of responses to the exercise question to be the same as that measured prior to the implementation of the program.

H 0 : p 1 =0.60, p 2 =0.25, p 3 =0.15, or equivalently H 0 : Distribution of responses is 0.60, 0.25, 0.15

H 1 : H 0 is false. α =0.05

Notice that the research hypothesis is written in words rather than in symbols. The research hypothesis as stated captures any difference in the distribution of responses from that specified in the null hypothesis. We do not specify a specific alternative distribution; instead, we are testing whether the sample data "fit" the distribution in $H_0$ or not. With the $\chi^2$ goodness-of-fit test there is no upper or lower tailed version of the test.

- Step 2. Select the appropriate test statistic.

The test statistic is: $\chi^2 = \sum \frac{(O - E)^2}{E}$

We must first assess whether the sample size is adequate. Specifically, we need to check that $\min(np_{10}, np_{20}, \ldots, np_{k0}) \geq 5$. The sample size here is n=470 and the proportions specified in the null hypothesis are 0.60, 0.25 and 0.15. Thus, min(470(0.60), 470(0.25), 470(0.15)) = min(282, 117.5, 70.5) = 70.5. The sample size is more than adequate so the formula can be used.

- Step 3. Set up decision rule.

The decision rule for the χ 2 test depends on the level of significance and the degrees of freedom, defined as degrees of freedom (df) = k-1 (where k is the number of response categories). If the null hypothesis is true, the observed and expected frequencies will be close in value and the χ 2 statistic will be close to zero. If the null hypothesis is false, then the χ 2 statistic will be large. Critical values can be found in a table of probabilities for the χ 2 distribution. Here we have df=k-1=3-1=2 and a 5% level of significance. The appropriate critical value is 5.99, and the decision rule is as follows: Reject H 0 if χ 2 > 5.99.

- Step 4. Compute the test statistic.

We now compute the expected frequencies using the sample size and the proportions specified in the null hypothesis. We then substitute the sample data (observed frequencies) and the expected frequencies into the formula for the test statistic identified in Step 2. The computations can be organized as follows.

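Notice that the expected frequencies are taken to one decimal place and that the sum of the observed frequencies is equal to the sum of the expected frequencies. With observed frequencies of 255, 125 and 90 and expected frequencies of 282, 117.5 and 70.5, the test statistic is computed as follows:

$$\chi^2 = \frac{(255-282)^2}{282} + \frac{(125-117.5)^2}{117.5} + \frac{(90-70.5)^2}{70.5} = 2.59 + 0.48 + 5.39 = 8.46$$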

- Step 5. Conclusion.

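We reject $H_0$ because 8.46 > 5.99. We have statistically significant evidence at α=0.05 to show that $H_0$ is false, or that the distribution of responses is not 0.60, 0.25, 0.15. The p-value is approximately 0.015: with df=2, the statistic 8.46 falls between the table values 7.38 (upper-tail area 0.025) and 9.21 (upper-tail area 0.01), so 0.01 < p < 0.025.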
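The five steps of this example can be reproduced with a short Python sketch. It is an illustration under stated assumptions rather than the module's own procedure: the observed counts 255 and 90 are quoted in the discussion of this example, and the sporadic-exercise count is taken as the remainder 470 − 255 − 90 = 125. The closed-form p-value `exp(-x/2)` is used only because it is exact for df = 2; it does not apply to other degrees of freedom.

```python
import math

observed = [255, 125, 90]        # no, sporadic, regular exercise (125 = 470 - 255 - 90)
null_props = [0.60, 0.25, 0.15]  # distribution under H0 (prior year's survey)

n = sum(observed)
expected = [n * p for p in null_props]          # 282, 117.5, 70.5
chi_sq = sum((o - e) ** 2 / e for o, e in zip(observed, expected))
df = len(observed) - 1

critical_value = 5.99            # chi-square table, df = 2, alpha = 0.05
reject_h0 = chi_sq > critical_value

# For df = 2 only, the upper-tail probability has the closed form exp(-x/2).
p_value = math.exp(-chi_sq / 2)

print(round(chi_sq, 2), df, reject_h0, round(p_value, 3))
```

The statistic rounds to 8.46, matching the hand computation, and the decision agrees with the rule in Step 3.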

In the $\chi^2$ goodness-of-fit test, we conclude either that the distribution specified in $H_0$ is false (when we reject $H_0$) or that we do not have sufficient evidence to show that the distribution specified in $H_0$ is false (when we fail to reject $H_0$). Here, we reject $H_0$ and conclude that the distribution of responses to the exercise question following the implementation of the health promotion campaign was not the same as the distribution prior to the campaign. The test itself does not provide details of how the distribution has shifted. A comparison of the observed and expected frequencies will provide some insight into the shift (when the null hypothesis is rejected). Does it appear that the health promotion campaign was effective?

Consider the following:

If the null hypothesis were true (i.e., no change from the prior year) we would have expected more students to fall in the "No Regular Exercise" category and fewer in the "Regular Exercise" category. In the sample, 255/470 = 54% reported no regular exercise and 90/470 = 19% reported regular exercise. Thus, there is a shift toward more regular exercise following the implementation of the health promotion campaign. There is evidence of a statistical difference, but is this a meaningful difference? Is there room for improvement?

The National Center for Health Statistics (NCHS) provided data on the distribution of weight (in categories) among Americans in 2002. The distribution was based on specific values of body mass index (BMI) computed as weight in kilograms over height in meters squared. Underweight was defined as BMI< 18.5, Normal weight as BMI between 18.5 and 24.9, overweight as BMI between 25 and 29.9 and obese as BMI of 30 or greater. Americans in 2002 were distributed as follows: 2% Underweight, 39% Normal Weight, 36% Overweight, and 23% Obese. Suppose we want to assess whether the distribution of BMI is different in the Framingham Offspring sample. Using data from the n=3,326 participants who attended the seventh examination of the Offspring in the Framingham Heart Study we created the BMI categories as defined and observed the following:

- Step 1. Set up hypotheses and determine level of significance.

H 0 : p 1 =0.02, p 2 =0.39, p 3 =0.36, p 4 =0.23 or equivalently

H 0 : Distribution of responses is 0.02, 0.39, 0.36, 0.23

H 1 : H 0 is false. α=0.05

- Step 2. Select the appropriate test statistic.

The formula for the test statistic is: $\chi^2 = \sum \frac{(O - E)^2}{E}$

We must assess whether the sample size is adequate. Specifically, we need to check that $\min(np_{10}, np_{20}, \ldots, np_{k0}) \geq 5$. The sample size here is n=3,326 and the proportions specified in the null hypothesis are 0.02, 0.39, 0.36 and 0.23. Thus, min(3326(0.02), 3326(0.39), 3326(0.36), 3326(0.23)) = min(66.5, 1297.1, 1197.4, 765.0) = 66.5. The sample size is more than adequate, so the formula can be used.

- Step 3. Set up decision rule.

Here we have df=k-1=4-1=3 and a 5% level of significance. The appropriate critical value is 7.81 and the decision rule is as follows: Reject $H_0$ if $\chi^2$ > 7.81.

- Step 4. Compute the test statistic.

We now compute the expected frequencies using the sample size and the proportions specified in the null hypothesis. We then substitute the sample data (observed frequencies) and the expected frequencies into the formula for the test statistic identified in Step 2. We organize the computations in the following table.

The test statistic is computed as follows:

- Step 5. Conclusion.

We reject $H_0$ because 233.53 > 7.81. We have statistically significant evidence at α=0.05 to show that $H_0$ is false or that the distribution of BMI in Framingham is different from the national data reported in 2002, p < 0.005.

Again, the $\chi^2$ goodness-of-fit test allows us to assess whether the distribution of responses "fits" a specified distribution. Here we show that the distribution of BMI in the Framingham Offspring Study is different from the national distribution. To understand the nature of the difference we can compare observed and expected frequencies or observed and expected proportions (or percentages). Because the frequencies are large (a consequence of the large sample size), percentages are easier to compare. The observed percentages of participants in the Framingham sample are as follows: 0.6% underweight, 28% normal weight, 41% overweight and 30% obese. In the Framingham Offspring sample there are higher percentages of overweight and obese persons (41% and 30% in Framingham as compared to 36% and 23% in the national data), and lower percentages of underweight and normal weight persons (0.6% and 28% in Framingham as compared to 2% and 39% in the national data). Are these meaningful differences?

In the module on hypothesis testing for means and proportions, we discussed hypothesis testing applications with a dichotomous outcome variable in a single population. We presented a test using a test statistic Z to test whether an observed (sample) proportion differed significantly from a historical or external comparator. The chi-square goodness-of-fit test can also be used with a dichotomous outcome and the results are mathematically equivalent.

In the prior module, we considered the following example. Here we show the equivalence to the chi-square goodness-of-fit test.

The NCHS report indicated that in 2002, 75% of children aged 2 to 17 saw a dentist in the past year. An investigator wants to assess whether use of dental services is similar in children living in the city of Boston. A sample of 125 children aged 2 to 17 living in Boston are surveyed and 64 reported seeing a dentist over the past 12 months. Is there a significant difference in use of dental services between children living in Boston and the national data?

We presented the following approach to the test using a Z statistic.

- Step 1. Set up hypotheses and determine level of significance

H 0 : p = 0.75

H 1 : p ≠ 0.75 α=0.05

We must first check that the sample size is adequate. Specifically, we need to check that $\min(np_0, n(1-p_0)) \geq 5$. Here, min(125(0.75), 125(1-0.75)) = min(93.75, 31.25) = 31.25. The sample size is more than adequate, so the Z statistic for a single proportion can be used.

This is a two-tailed test, using a Z statistic and a 5% level of significance. Reject H 0 if Z < -1.960 or if Z > 1.960.

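We now substitute the sample data into the formula for the test statistic identified in Step 2. The sample proportion is $\hat{p} = 64/125 = 0.512$, and the test statistic is $Z = \frac{\hat{p} - p_0}{\sqrt{p_0(1-p_0)/n}} = \frac{0.512 - 0.75}{\sqrt{0.75(0.25)/125}} = -6.15$.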

We reject $H_0$ because -6.15 < -1.960. We have statistically significant evidence at α=0.05 to show that there is a difference in the use of dental services by children living in Boston as compared to the national data (p < 0.0001).

We now conduct the same test using the chi-square goodness-of-fit test. First, we summarize our sample data as follows:

H 0 : p 1 =0.75, p 2 =0.25 or equivalently H 0 : Distribution of responses is 0.75, 0.25

We must assess whether the sample size is adequate. Specifically, we need to check that $\min(np_{10}, np_{20}, \ldots, np_{k0}) \geq 5$. The sample size here is n=125 and the proportions specified in the null hypothesis are 0.75, 0.25. Thus, min(125(0.75), 125(0.25)) = min(93.75, 31.25) = 31.25. The sample size is more than adequate so the formula can be used.

Here we have df=k-1=2-1=1 and a 5% level of significance. The appropriate critical value is 3.84, and the decision rule is as follows: Reject $H_0$ if $\chi^2$ > 3.84. (Note that $1.96^2 = 3.84$, where 1.96 was the critical value used in the Z test for proportions shown above.)

(Note that $(-6.15)^2 = 37.8$, where -6.15 was the value of the Z statistic in the test for proportions shown above.)

We reject $H_0$ because 37.8 > 3.84. We have statistically significant evidence at α=0.05 to show that there is a difference in the use of dental services by children living in Boston as compared to the national data (p < 0.0001). This is the same conclusion we reached when we conducted the test using the Z test above. With a dichotomous outcome, $Z^2 = \chi^2$. In statistics, there are often several approaches that can be used to test hypotheses.
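The equivalence can be checked numerically with the dental-services data (64 of 125 children, null proportion 0.75). The sketch below is illustrative only; it assumes the usual one-sample Z statistic for a proportion, with the standard error computed under the null hypothesis, which is the form that reproduces the value -6.15 quoted above.

```python
import math

n, saw_dentist, p0 = 125, 64, 0.75

# One-sample Z test for a proportion (standard error uses the null value p0)
p_hat = saw_dentist / n
z = (p_hat - p0) / math.sqrt(p0 * (1 - p0) / n)

# Chi-square goodness-of-fit test on the same data (two categories)
observed = [saw_dentist, n - saw_dentist]       # 64 saw a dentist, 61 did not
expected = [n * p0, n * (1 - p0)]               # 93.75 and 31.25
chi_sq = sum((o - e) ** 2 / e for o, e in zip(observed, expected))

print(round(z, 2), round(chi_sq, 1), math.isclose(z ** 2, chi_sq))
```

The two statistics agree up to floating-point error, which is why the critical values also satisfy 1.96² = 3.84.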

Tests for Two or More Independent Samples, Discrete Outcome

Here we extend the application of the chi-square test to the case with two or more independent comparison groups. Specifically, the outcome of interest is discrete with two or more responses and the responses can be ordered or unordered (i.e., the outcome can be dichotomous, ordinal or categorical). We now consider the situation where there are two or more independent comparison groups and the goal of the analysis is to compare the distribution of responses to the discrete outcome variable among several independent comparison groups.

The test is called the χ 2 test of independence and the null hypothesis is that there is no difference in the distribution of responses to the outcome across comparison groups. This is often stated as follows: The outcome variable and the grouping variable (e.g., the comparison treatments or comparison groups) are independent (hence the name of the test). Independence here implies homogeneity in the distribution of the outcome among comparison groups.

The null hypothesis in the χ 2 test of independence is often stated in words as: H 0 : The distribution of the outcome is independent of the groups. The alternative or research hypothesis is that there is a difference in the distribution of responses to the outcome variable among the comparison groups (i.e., that the distribution of responses "depends" on the group). In order to test the hypothesis, we measure the discrete outcome variable in each participant in each comparison group. The data of interest are the observed frequencies (or number of participants in each response category in each group). The formula for the test statistic for the χ 2 test of independence is given below.

Test Statistic for Testing $H_0$: Distribution of outcome is independent of groups

$$\chi^2 = \sum \frac{(O - E)^2}{E}$$

and we find the critical value in a table of probabilities for the chi-square distribution with df=(r-1)*(c-1).

Here O = observed frequency, E=expected frequency in each of the response categories in each group, r = the number of rows in the two-way table and c = the number of columns in the two-way table. r and c correspond to the number of comparison groups and the number of response options in the outcome (see below for more details). The observed frequencies are the sample data and the expected frequencies are computed as described below. The test statistic is appropriate for large samples, defined as expected frequencies of at least 5 in each of the response categories in each group.

The data for the χ 2 test of independence are organized in a two-way table. The outcome and grouping variable are shown in the rows and columns of the table. The sample table below illustrates the data layout. The table entries (blank below) are the numbers of participants in each group responding to each response category of the outcome variable.

Table - Possible outcomes are listed in the columns; the groups being compared are listed in the rows.

In the table above, the grouping variable is shown in the rows of the table; r denotes the number of independent groups. The outcome variable is shown in the columns of the table; c denotes the number of response options in the outcome variable. Each combination of a row (group) and column (response) is called a cell of the table. The table has r*c cells and is sometimes called an r x c ("r by c") table. For example, if there are 4 groups and 5 categories in the outcome variable, the data are organized in a 4 X 5 table. The row and column totals are shown along the right-hand margin and the bottom of the table, respectively. The total sample size, N, can be computed by summing the row totals or the column totals. Similar to ANOVA, N does not refer to a population size here but rather to the total sample size in the analysis. The sample data can be organized into a table like the above. The numbers of participants within each group who select each response option are shown in the cells of the table and these are the observed frequencies used in the test statistic.

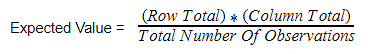

The test statistic for the χ 2 test of independence involves comparing observed (sample data) and expected frequencies in each cell of the table. The expected frequencies are computed assuming that the null hypothesis is true. The null hypothesis states that the two variables (the grouping variable and the outcome) are independent. The definition of independence is as follows:

Two events, A and B, are independent if P(A|B) = P(A), or equivalently, if P(A and B) = P(A) P(B).

The second statement indicates that if two events, A and B, are independent then the probability of their intersection can be computed by multiplying the probability of each individual event. To conduct the χ 2 test of independence, we need to compute expected frequencies in each cell of the table. Expected frequencies are computed by assuming that the grouping variable and outcome are independent (i.e., under the null hypothesis). Thus, if the null hypothesis is true, using the definition of independence:

P(Group 1 and Response Option 1) = P(Group 1) P(Response Option 1).

The above states that the probability that an individual is in Group 1 and their outcome is Response Option 1 is computed by multiplying the probability that person is in Group 1 by the probability that a person is in Response Option 1. To conduct the $\chi^2$ test of independence, we need expected frequencies and not expected probabilities. To convert the above probability to a frequency, we multiply by N. Consider the following small example.

The data shown above are measured in a sample of size N=150. The frequencies in the cells of the table are the observed frequencies. If Group and Response are independent, then we can compute the probability that a person in the sample is in Group 1 and Response category 1 using:

P(Group 1 and Response 1) = P(Group 1) P(Response 1),

P(Group 1 and Response 1) = (25/150) (62/150) = 0.069.

Thus if Group and Response are independent we would expect 6.9% of the sample to be in the top left cell of the table (Group 1 and Response 1). The expected frequency is 150(0.069) = 10.4. We could do the same for Group 2 and Response 1:

P(Group 2 and Response 1) = P(Group 2) P(Response 1),

P(Group 2 and Response 1) = (50/150) (62/150) = 0.138.

The expected frequency in Group 2 and Response 1 is 150(0.138) = 20.7.

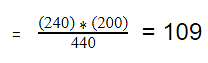

Thus, the formula for determining the expected cell frequencies in the χ 2 test of independence is as follows:

Expected Cell Frequency = (Row Total * Column Total)/N.

The above computes the expected frequency in one step rather than computing the expected probability first and then converting to a frequency.
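As a quick check of the one-step formula, the sketch below recomputes the two expected frequencies from the small example, using only the marginal totals quoted in the text (row totals 25 and 50, column total 62, N = 150). The function name is hypothetical.

```python
def expected_cell(row_total, col_total, n):
    """Expected frequency under independence: (row total * column total) / N."""
    return row_total * col_total / n


N = 150
group1_resp1 = expected_cell(25, 62, N)   # Group 1, Response 1
group2_resp1 = expected_cell(50, 62, N)   # Group 2, Response 1
print(round(group1_resp1, 1), round(group2_resp1, 1))
```

Computed in one step, the Group 1 value is 10.33, which rounds to 10.3. The 10.4 obtained earlier comes from rounding the probability to 0.069 before multiplying by 150, so the one-step formula also avoids that intermediate rounding.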

In a prior example we evaluated data from a survey of university graduates which assessed, among other things, how frequently they exercised. The survey was completed by 470 graduates. In the prior example we used the χ 2 goodness-of-fit test to assess whether there was a shift in the distribution of responses to the exercise question following the implementation of a health promotion campaign on campus. We specifically considered one sample (all students) and compared the observed distribution to the distribution of responses the prior year (a historical control). Suppose we now wish to assess whether there is a relationship between exercise on campus and students' living arrangements. As part of the same survey, graduates were asked where they lived their senior year. The response options were dormitory, on-campus apartment, off-campus apartment, and at home (i.e., commuted to and from the university). The data are shown below.

Based on the data, is there a relationship between exercise and students' living arrangements? Do you think where a person lives affects their exercise status? Here we have four independent comparison groups (living arrangement) and a discrete (ordinal) outcome variable with three response options. We specifically want to test whether living arrangement and exercise are independent. We will run the test using the five-step approach.

- Step 1. Set up hypotheses and determine level of significance.

$H_0$: Living arrangement and exercise are independent

H 1 : H 0 is false. α=0.05

The null and research hypotheses are written in words rather than in symbols. The research hypothesis is that the grouping variable (living arrangement) and the outcome variable (exercise) are dependent or related.

- Step 2. Select the appropriate test statistic.

The condition for appropriate use of the above test statistic is that each expected frequency is at least 5. In Step 4 we will compute the expected frequencies and we will ensure that the condition is met.

- Step 3. Set up decision rule.

The decision rule depends on the level of significance and the degrees of freedom, defined as df = (r-1)(c-1), where r and c are the numbers of rows and columns in the two-way data table. The row variable is the living arrangement and there are 4 arrangements considered, thus r=4. The column variable is exercise and 3 responses are considered, thus c=3. For this test, df=(4-1)(3-1)=3(2)=6. Again, with $\chi^2$ tests there are no separate upper, lower or two-tailed versions of the test. If the null hypothesis is true, the observed and expected frequencies will be close in value and the $\chi^2$ statistic will be close to zero. If the null hypothesis is false, then the $\chi^2$ statistic will be large. The rejection region for the $\chi^2$ test of independence is always in the upper (right-hand) tail of the distribution. For df=6 and a 5% level of significance, the appropriate critical value is 12.59 and the decision rule is as follows: Reject $H_0$ if $\chi^2$ > 12.59.

- Step 4. Compute the test statistic.

We now compute the expected frequencies using the formula,

Expected Frequency = (Row Total * Column Total)/N.

The computations can be organized in a two-way table. The top number in each cell of the table is the observed frequency and the bottom number is the expected frequency. The expected frequencies are shown in parentheses.

Notice that the expected frequencies are taken to one decimal place and that the sums of the observed frequencies are equal to the sums of the expected frequencies in each row and column of the table.

Recall in Step 2 a condition for the appropriate use of the test statistic was that each expected frequency is at least 5. This is true for this sample (the smallest expected frequency is 9.6) and therefore it is appropriate to use the test statistic.

- Step 5. Conclusion.

We reject $H_0$ because 60.5 > 12.59. We have statistically significant evidence at α=0.05 to show that $H_0$ is false or that living arrangement and exercise are not independent (i.e., they are dependent or related), p < 0.005.
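The computation in Steps 3 and 4 follows the same pattern for any r x c table, so it can be sketched as one small function. This is an illustrative sketch, not the module's own code. The table below is hypothetical (it is NOT the living-arrangement survey data), and the function name is an assumption.

```python
def chi_square_independence(table):
    """Return (statistic, df, expected) for an r x c table of observed counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    # Expected cell frequency = (row total * column total) / N
    expected = [[r * c / n for c in col_totals] for r in row_totals]
    statistic = sum(
        (o - e) ** 2 / e
        for obs_row, exp_row in zip(table, expected)
        for o, e in zip(obs_row, exp_row)
    )
    df = (len(row_totals) - 1) * (len(col_totals) - 1)
    return statistic, df, expected


# Hypothetical 2 x 3 table: rows are groups, columns are response options
table = [[20, 30, 50],
         [30, 30, 40]]
statistic, df, expected = chi_square_independence(table)
min_expected = min(min(row) for row in expected)   # large-sample check: need >= 5
print(round(statistic, 2), df, min_expected >= 5)
```

For this hypothetical table the statistic is about 3.11 with df = 2, which is below the 5% critical value of 5.99, so the null hypothesis of independence would not be rejected.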

Again, the χ 2 test of independence is used to test whether the distribution of the outcome variable is similar across the comparison groups. Here we rejected H 0 and concluded that the distribution of exercise is not independent of living arrangement, or that there is a relationship between living arrangement and exercise. The test provides an overall assessment of statistical significance. When the null hypothesis is rejected, it is important to review the sample data to understand the nature of the relationship. Consider again the sample data.

Because there are different numbers of students in each living situation, it makes the comparisons of exercise patterns difficult on the basis of the frequencies alone. The following table displays the percentages of students in each exercise category by living arrangement. The percentages sum to 100% in each row of the table. For comparison purposes, percentages are also shown for the total sample along the bottom row of the table.

From the above, it is clear that higher percentages of students living in dormitories and in on-campus apartments reported regular exercise (31% and 23%) as compared to students living in off-campus apartments and at home (10% each).

Test Yourself

Pancreaticoduodenectomy (PD) is a procedure that is associated with considerable morbidity. A study was recently conducted on 553 patients who had a successful PD between January 2000 and December 2010 to determine whether their Surgical Apgar Score (SAS) is related to 30-day perioperative morbidity and mortality. The table below gives the number of patients experiencing no, minor, or major morbidity by SAS category.

Question: What would be an appropriate statistical test to examine whether there is an association between Surgical Apgar Score and patient outcome? Using 14.13 as the value of the test statistic for these data, carry out the appropriate test at a 5% level of significance. Show all parts of your test.

In the module on hypothesis testing for means and proportions, we discussed hypothesis testing applications with a dichotomous outcome variable and two independent comparison groups. We presented a test using a test statistic Z to test for equality of independent proportions. The chi-square test of independence can also be used with a dichotomous outcome and the results are mathematically equivalent.

In the prior module, we considered the following example. Here we show the equivalence to the chi-square test of independence.

A randomized trial is designed to evaluate the effectiveness of a newly developed pain reliever designed to reduce pain in patients following joint replacement surgery. The trial compares the new pain reliever to the pain reliever currently in use (called the standard of care). A total of 100 patients undergoing joint replacement surgery agreed to participate in the trial. Patients were randomly assigned to receive either the new pain reliever or the standard pain reliever following surgery and were blind to the treatment assignment. Before receiving the assigned treatment, patients were asked to rate their pain on a scale of 0-10 with higher scores indicative of more pain. Each patient was then given the assigned treatment and after 30 minutes was again asked to rate their pain on the same scale. The primary outcome was a reduction in pain of 3 or more scale points (defined by clinicians as a clinically meaningful reduction). The following data were observed in the trial.

We tested whether there was a significant difference in the proportions of patients reporting a meaningful reduction (i.e., a reduction of 3 or more scale points) using a Z statistic, as follows.

H 0 : p 1 = p 2

H 1 : p 1 ≠ p 2 α=0.05

Here the new or experimental pain reliever is group 1 and the standard pain reliever is group 2.

We must first check that the sample size is adequate. Specifically, we need to ensure that we have at least 5 successes and 5 failures in each comparison group or that:

In this example, we have

Therefore, the sample size is adequate, so the following formula can be used:

Reject H 0 if Z < -1.960 or if Z > 1.960.

We now substitute the sample data into the formula for the test statistic identified in Step 2. We first compute the overall proportion of successes:

We now substitute to compute the test statistic.

- Step 5. Conclusion.

We now conduct the same test using the chi-square test of independence.

H0: Treatment and outcome (meaningful reduction in pain) are independent

H1: H0 is false. α = 0.05

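The formula for the test statistic is: χ² = Σ (O - E)² / E, where O is the observed frequency and E is the expected frequency in each cell of the table.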

For this test, df = (2-1)(2-1) = 1. At a 5% level of significance, the appropriate critical value is 3.84 and the decision rule is as follows: Reject H0 if χ² > 3.84. (Note that 1.96² = 3.84, where 1.96 was the critical value used in the Z test for proportions shown above.)

We now compute the expected frequencies using: expected frequency = (row total × column total) / N, where N is the total sample size.

The computations can be organized in a two-way table. The top number in each cell of the table is the observed frequency and the bottom number is the expected frequency. The expected frequencies are shown in parentheses.

A condition for the appropriate use of the test statistic was that each expected frequency is at least 5. This is true for this sample (the smallest expected frequency is 22.0) and therefore it is appropriate to use the test statistic.

(Note that (2.53)² = 6.4, where 2.53 was the value of the Z statistic in the test for proportions shown above.)
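The agreement between the two tests can be checked numerically. The sketch below is a minimal illustration in Python. The counts (23 of 50 and 11 of 50 patients with a meaningful reduction) are assumed for illustration, because the trial's data table is not reproduced here, and the function names are hypothetical.

```python
import math

def pooled_z(x1, n1, x2, n2):
    """Z statistic for H0: p1 = p2, using the pooled proportion of successes."""
    p1, p2 = x1 / n1, x2 / n2
    p = (x1 + x2) / (n1 + n2)  # overall proportion of successes
    se = math.sqrt(p * (1 - p) * (1 / n1 + 1 / n2))
    return (p1 - p2) / se

def chi_square_2x2(x1, n1, x2, n2):
    """Chi-square statistic for the same data arranged as a 2 x 2 table."""
    observed = [[x1, n1 - x1], [x2, n2 - x2]]
    row_totals = [n1, n2]
    col_totals = [x1 + x2, (n1 - x1) + (n2 - x2)]
    total = n1 + n2
    stat = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / total
            stat += (observed[i][j] - expected) ** 2 / expected
    return stat

# Assumed counts, for illustration only
z = pooled_z(23, 50, 11, 50)
chi2 = chi_square_2x2(23, 50, 11, 50)
print(round(z, 2), round(chi2, 2), round(z ** 2, 2))
```

With these assumed counts, Z is about 2.53 and both Z² and χ² are about 6.42, which is why the two tests lead to the same decision for a 2 x 2 table.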

Chi-Squared Tests in R

The video below by Mike Marin demonstrates how to perform chi-squared tests in the R programming language.

Answer to Problem on Pancreaticoduodenectomy and Surgical Apgar Scores

We have 3 independent comparison groups (Surgical Apgar Score) and a categorical outcome variable (morbidity/mortality). We can run a Chi-Squared test of independence.

H0: Apgar scores and patient outcome are independent of one another.

HA: Apgar scores and patient outcome are not independent.

Chi-squared = 14.3

Since 14.3 is greater than 9.49 (the critical value of χ² with df = 4 at α = 0.05), we reject H0.

There is an association between Apgar scores and patient outcome. The lowest Apgar score group (0 to 4) experienced the highest percentage of major morbidity or mortality (16 out of 57=28%) compared to the other Apgar score groups.

Chi-Square (Χ²) Test & How To Calculate Formula Equation

Benjamin Frimodig

Science Expert

B.A., History and Science, Harvard University

Ben Frimodig is a 2021 graduate of Harvard College, where he studied the History of Science.

Saul Mcleod, PhD

Editor-in-Chief for Simply Psychology

BSc (Hons) Psychology, MRes, PhD, University of Manchester

Saul Mcleod, PhD., is a qualified psychology teacher with over 18 years of experience in further and higher education. He has been published in peer-reviewed journals, including the Journal of Clinical Psychology.

Chi-square (χ2) is used to test hypotheses about the distribution of observations into categories with no inherent ranking.

What Is a Chi-Square Statistic?

The Chi-square test (pronounced Kai) looks at the pattern of observations and will tell us if certain combinations of the categories occur more frequently than we would expect by chance, given the total number of times each category occurred.

It looks for an association between the variables. We cannot use a correlation coefficient to look for the patterns in this data because the categories often do not form a continuum.

There are three main types of Chi-square tests: the test of goodness of fit, the test of independence, and the test for homogeneity. All three tests rely on the same formula to compute a test statistic.

These tests function by deciphering relationships between observed sets of data and theoretical or “expected” sets of data that align with the null hypothesis.

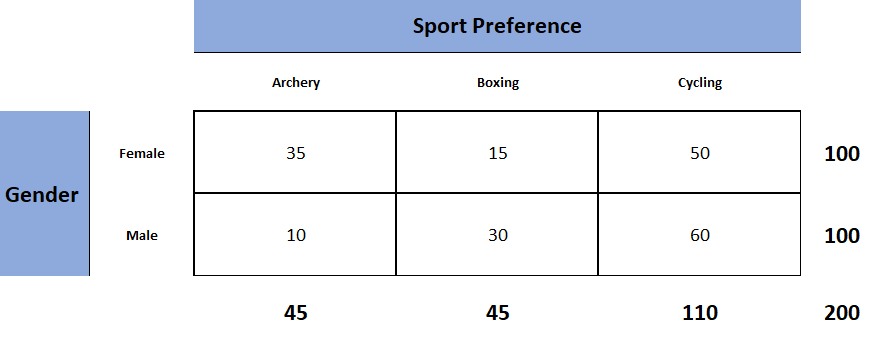

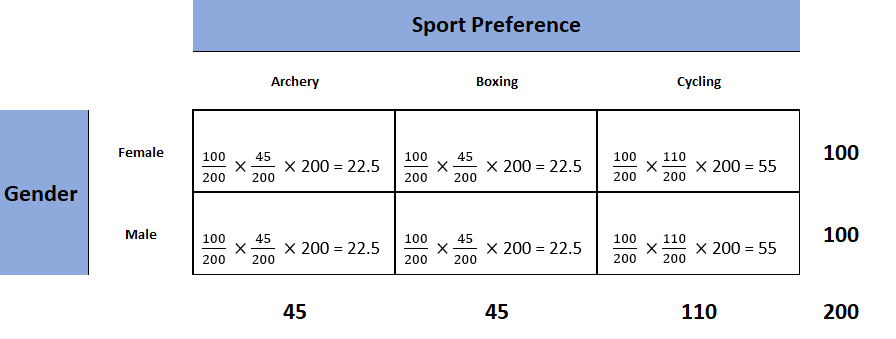

What is a Contingency Table?

Contingency tables (also known as two-way tables) are grids in which Chi-square data is organized and displayed. They provide a basic picture of the interrelation between two variables and can help find interactions between them.

In contingency tables, one variable and each of its categories are listed vertically, and the other variable and each of its categories are listed horizontally.

Additionally, including column and row totals, also known as “marginal frequencies,” will help facilitate the Chi-square testing process.

In order for the Chi-square test to be considered trustworthy, each cell of your expected contingency table must have a value of at least five.

Each Chi-square test will have one contingency table representing observed counts (see Fig. 1) and one contingency table representing expected counts (see Fig. 2).

Figure 1. Observed table (which contains the observed counts).

To obtain the expected frequencies for any cell in any cross-tabulation in which the two variables are assumed independent, multiply the row and column totals for that cell and divide the product by the total number of cases in the table.

Figure 2. Expected table (what we expect the two-way table to look like if the two categorical variables are independent).

To decide if our calculated value for χ2 is significant, we also need to work out the degrees of freedom for our contingency table using the following formula: df= (rows – 1) x (columns – 1).
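As a minimal sketch of the two calculations just described, the Python snippet below builds the expected table from the row and column totals and computes the degrees of freedom. The observed counts and the function names are hypothetical and serve only as an illustration.

```python
def expected_counts(observed):
    """Expected count per cell: row total x column total / grand total."""
    row_totals = [sum(row) for row in observed]
    col_totals = [sum(col) for col in zip(*observed)]
    n = sum(row_totals)
    return [[r * c / n for c in col_totals] for r in row_totals]

def degrees_of_freedom(observed):
    """df = (rows - 1) x (columns - 1)."""
    return (len(observed) - 1) * (len(observed[0]) - 1)

# Hypothetical 2 x 2 table of observed counts
observed = [[20, 30],
            [30, 20]]

expected = expected_counts(observed)
df = degrees_of_freedom(observed)

# Rule of thumb from the text: every expected count should be at least five
all_at_least_five = all(e >= 5 for row in expected for e in row)

print(expected, df, all_at_least_five)
```

For this hypothetical table every expected count is 25 and df is 1, so the rule of thumb on expected counts is satisfied.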

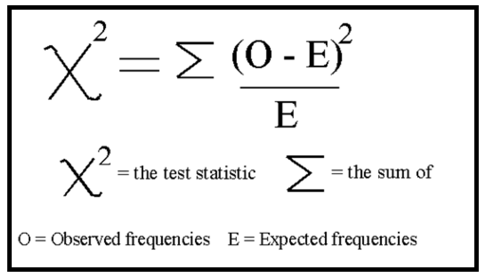

Formula Calculation

Calculate the chi-square statistic (χ2) by completing the following steps:

- Calculate the expected frequencies and the observed frequencies.

- For each observed number in the table, subtract the corresponding expected number (O — E).

- Square the difference (O —E)².

- Divide the squares obtained for each cell in the table by the expected number for that cell (O – E)² / E.

- Sum all the values for (O – E)² / E. This is the chi-square statistic.

- Calculate the degrees of freedom for the contingency table using the following formula; df= (rows – 1) x (columns – 1).

Once we have calculated the degrees of freedom (df) and the chi-squared value (χ2), we can use the χ2 table (often at the back of a statistics book) to check if our value for χ2 is higher than the critical value given in the table. If it is, then our result is significant at the level given.
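The steps above can be sketched in Python as follows. This is a minimal illustration, not a replacement for a statistics package: the 2 x 3 table of counts is hypothetical, the function name is an assumption, and the small dictionary of 5% critical values stands in for the printed χ2 table.

```python
# Critical values of the chi-square distribution at the 5% level
CRITICAL_05 = {1: 3.841, 2: 5.991, 3: 7.815, 4: 9.488}

def chi_square_statistic(observed):
    """Return (chi-square statistic, degrees of freedom) for a two-way table."""
    row_totals = [sum(row) for row in observed]
    col_totals = [sum(col) for col in zip(*observed)]
    n = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(observed):
        for j, o in enumerate(row):
            e = row_totals[i] * col_totals[j] / n  # expected frequency
            stat += (o - e) ** 2 / e               # (O - E)^2 / E
    df = (len(observed) - 1) * (len(observed[0]) - 1)
    return stat, df

# Hypothetical observed counts (2 rows, 3 columns)
observed = [[10, 20, 30],
            [20, 20, 20]]

stat, df = chi_square_statistic(observed)
significant = stat > CRITICAL_05[df]
print(round(stat, 3), df, significant)
```

Here the statistic is about 5.333 with 2 degrees of freedom, which is below the 5% critical value of 5.991, so this hypothetical result would not be significant at that level.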

Interpretation

The chi-square statistic tells you how much difference exists between the observed count in each table cell and the count you would expect if there were no relationship at all in the population.

Small Chi-Square Statistic: If the chi-square statistic is small and the p-value is large (usually greater than 0.05), this often indicates that the observed frequencies in the sample are close to what would be expected under the null hypothesis.

The null hypothesis usually states no association between the variables being studied or that the observed distribution fits the expected distribution.

In theory, if the observed and expected values were equal (no difference), then the chi-square statistic would be zero — but this is unlikely to happen in real life.

Large Chi-Square Statistic : If the chi-square statistic is large and the p-value is small (usually less than 0.05), then the conclusion is often that the data does not fit the model well, i.e., the observed and expected values are significantly different. This often leads to the rejection of the null hypothesis.

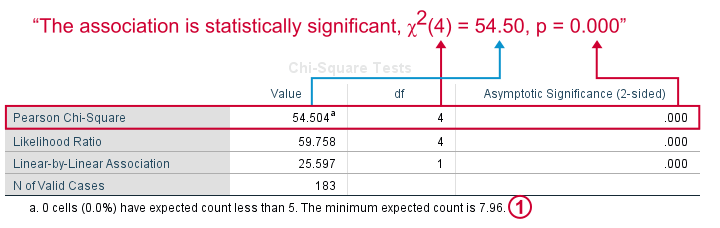

How to Report

To report a chi-square output in an APA-style results section, always rely on the following template:

χ2 (degrees of freedom, N = sample size) = chi-square statistic value, p = p value.

In the case of the above example, the results would be written as follows:

A chi-square test of independence showed that there was a significant association between gender and post-graduation education plans, χ2 (4, N = 101) = 54.50, p < .001.
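For readers who generate their results in code, the template can be filled in programmatically. The Python sketch below is one possible way to do it; the function names are hypothetical, three decimals are used for exact p values, and the very small p value passed in the example is an assumed stand-in because only "p < .001" is reported above.

```python
def format_p(p):
    """Format a p value without a leading zero; values below .001 become 'p < .001'."""
    if p < 0.001:
        return "p < .001"
    return "p = " + f"{p:.3f}".lstrip("0")

def apa_chi_square(chi2, df, n, p):
    """Fill in the reporting template for a chi-square test."""
    return f"χ2 ({df}, N = {n}) = {chi2:.2f}, {format_p(p)}"

# Values from the example above; the exact p value is an assumption
report = apa_chi_square(54.50, 4, 101, 1e-7)
print(report)
```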

APA Style Rules

- Do not use a zero before a decimal when the statistic cannot be greater than 1 (proportion, correlation, level of statistical significance).

- Report exact p values to two or three decimals (e.g., p = .006, p = .03).

- However, report p values less than .001 as “ p < .001.”

- Put a space before and after a mathematical operator (e.g., minus, plus, greater than, less than, equals sign).

- Do not repeat statistics in both the text and a table or figure.

p-value Interpretation

You test whether a given χ2 is statistically significant by comparing it against a table of chi-square distributions, according to the number of degrees of freedom: the number of categories minus 1 for a goodness-of-fit test, or (rows – 1) x (columns – 1) for a contingency table. The chi-square test assumes an expected count of at least 5 in each category.

If you are using SPSS, the output will include the p-value.

For a chi-square test, a p-value that is less than or equal to the .05 significance level indicates that the observed values are different to the expected values.

Thus, low p-values (p< .05) indicate a likely difference between the theoretical population and the collected sample. You can conclude that a relationship exists between the categorical variables.

Remember that p -values do not indicate the odds that the null hypothesis is true but rather provide the probability that one would obtain the sample distribution observed (or a more extreme distribution) if the null hypothesis was true.
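As a rough illustration of where such a p-value comes from, the sketch below computes the upper-tail probability of a chi-square distribution using only Python's standard library. The function name chi2_sf is hypothetical, and the closed-form series used here is valid only for whole-number degrees of freedom; in practice a statistics package would normally be used instead.

```python
import math

def chi2_sf(x, df):
    """P(X >= x) for a chi-square variable with integer df >= 1."""
    if x <= 0:
        return 1.0
    if df % 2 == 0:
        # Even df: exp(-x/2) * sum_{k=0}^{df/2 - 1} (x/2)^k / k!
        term, total = 1.0, 1.0
        for k in range(1, df // 2):
            term *= (x / 2) / k
            total += term
        return math.exp(-x / 2) * total
    # Odd df: a normal-tail term plus a finite series in sqrt(x)
    root = math.sqrt(x)
    term, total = root, 0.0
    for r in range(1, (df - 1) // 2 + 1):
        if r > 1:
            term *= x / (2 * r - 1)
        total += term
    tail = math.erfc(root / math.sqrt(2))
    return tail + math.sqrt(2 / math.pi) * math.exp(-x / 2) * total

# The familiar 5% critical values should give p-values close to 0.05
for df, critical in [(1, 3.841), (2, 5.991), (3, 7.815), (4, 9.488)]:
    print(df, round(chi2_sf(critical, df), 3))
```

A statistic larger than the critical value gives a p-value below .05, which matches the table-based decision rule.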

A level of confidence necessary to accept the null hypothesis can never be reached. Therefore, the conclusion is either to fail to reject the null hypothesis or to reject it in favor of the alternative hypothesis, depending on the calculated p-value.

The six steps below show you how to analyze your data using a chi-square goodness-of-fit test in SPSS. Steps 4 to 6 are needed only when the expected proportions are not all equal.

Step 1 : Analyze > Nonparametric Tests > Legacy Dialogs > Chi-square… on the top menu as shown below:

Step 2 : Move the variable indicating categories into the “Test Variable List:” box.

Step 3 : If you want to test the hypothesis that all categories are equally likely, click “OK” and skip the remaining steps.

Step 4 : Otherwise, specify the expected count for each category by first clicking the “Values” button under “Expected Values.”

Step 5 : Then, in the box to the right of “Values,” enter the expected count for category one and click the “Add” button. Now enter the expected count for category two and click “Add.” Continue in this way until all expected counts have been entered.

Step 6 : Then click “OK.”

The five steps below show you how to analyze your data using a chi-square test of independence in SPSS Statistics.

Step 1 : Open the Crosstabs dialog (Analyze > Descriptive Statistics > Crosstabs).

Step 2 : Select the variables you want to compare using the chi-square test. Click one variable in the left window and then click the arrow at the top to move the variable. Select the row variable and the column variable.

Step 3 : Click Statistics (a new pop-up window will appear). Check Chi-square, then click Continue.

Step 4 : (Optional) Check the box for Display clustered bar charts.

Step 5 : Click OK.

Goodness-of-Fit Test

The Chi-square goodness of fit test is used to compare a randomly collected sample containing a single, categorical variable to a larger population.

This test is most commonly used to compare a random sample to the population from which it was potentially collected.

The test begins with the creation of a null and alternative hypothesis. In this case, the hypotheses are as follows:

Null Hypothesis (Ho) : The observed frequencies are the same (except for chance variation) as the expected frequencies. The collected data is consistent with the population distribution.

Alternative Hypothesis (Ha) : The collected data is not consistent with the population distribution.

The next step is to create a contingency table that represents how the data would be distributed if the null hypothesis were exactly correct.

The sample’s overall deviation from this theoretical/expected data will allow us to draw a conclusion, with a more severe deviation resulting in smaller p-values.

Test for Independence

The Chi-square test for independence looks for an association between two categorical variables within the same population.

Unlike the goodness of fit test, the test for independence does not compare a single observed variable to a theoretical population but rather two variables within a sample set to one another.

The hypotheses for a Chi-square test of independence are as follows:

Null Hypothesis (Ho) : There is no association between the two categorical variables in the population of interest.

Alternative Hypothesis (Ha) : There is an association between the two categorical variables in the population of interest.

The next step is to create a contingency table of expected values that reflects how a data set that perfectly aligns with the null hypothesis would appear.

The simplest way to do this is to calculate the marginal frequencies of each row and column; the expected frequency of each cell is equal to the product of the row and column marginal frequencies that correspond to that cell in the observed contingency table, divided by the total sample size.

Test for Homogeneity

The Chi-square test for homogeneity is organized and executed exactly the same as the test for independence.

The main difference to remember between the two is that the test for independence looks for an association between two categorical variables within the same population, while the test for homogeneity determines if the distribution of a variable is the same in each of several populations (thus allocating population itself as the second categorical variable).

Null Hypothesis (Ho) : There is no difference in the distribution of a categorical variable for several populations or treatments.

Alternative Hypothesis (Ha) : There is a difference in the distribution of a categorical variable for several populations or treatments.

The difference between these two tests can be a bit tricky to determine, especially in the practical applications of a Chi-square test. A reliable rule of thumb is to determine how the data was collected.

If the data consists of only one random sample with the observations classified according to two categorical variables, it is a test for independence. If the data consists of more than one independent random sample, it is a test for homogeneity.

What is the chi-square test?

The Chi-square test is a non-parametric statistical test used to determine if there’s a significant association between two or more categorical variables in a sample.

It works by comparing the observed frequencies in each category of a cross-tabulation with the frequencies expected under the null hypothesis, which assumes there is no relationship between the variables.

This test is often used in fields like biology, marketing, sociology, and psychology for hypothesis testing.

What does chi-square tell you?

The Chi-square test informs whether there is a significant association between two categorical variables. If the calculated Chi-square value is above the critical value from the Chi-square distribution, it suggests a significant relationship between the variables, and the null hypothesis of no association is rejected.

How to calculate chi-square?

To calculate the Chi-square statistic, follow these steps:

1. Create a contingency table of observed frequencies for each category.

2. Calculate expected frequencies for each category under the null hypothesis.

3. Compute the Chi-square statistic using the formula: Χ² = Σ [ (O_i – E_i)² / E_i ], where O_i is the observed frequency and E_i is the expected frequency.

4. Compare the calculated statistic with the critical value from the Chi-square distribution to draw a conclusion.

S.4 Chi-Square Tests

Chi-Square Test of Independence

Do you remember how to test the independence of two categorical variables? This test is performed by using a Chi-square test of independence.

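Recall that we can summarize two categorical variables within a two-way table, also called an r × c contingency table, where r = number of rows, c = number of columns. Our question of interest is “Are the two variables independent?” This question is set up using the following hypothesis statements:

- Null hypothesis: the two categorical variables are independent.

- Alternative hypothesis: the two categorical variables are dependent.

The chi-square test statistic is calculated as

\[\chi^2=\sum\frac{(O-E)^2}{E}\]

where \(O\) is the observed frequency and \(E\) is the expected frequency under the null hypothesis, computed by

\[E=\frac{\text{row total}\times\text{column total}}{\text{sample size}}\]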

We will compare the value of the test statistic to the critical value of \(\chi_{\alpha}^2\) with the degree of freedom = ( r - 1) ( c - 1), and reject the null hypothesis if \(\chi^2 \gt \chi_{\alpha}^2\).

Example S.4.1 Section

Is gender independent of education level? A random sample of 395 people was surveyed and each person was asked to report the highest education level they obtained. The data that resulted from the survey are summarized in the following table:

Question : Are gender and education level dependent at a 5% level of significance? In other words, given the data collected above, is there a relationship between the gender of an individual and the level of education that they have obtained?

Here's the table of expected counts:

So, working this out, \(\chi^2= \dfrac{(60-50.886)^2}{50.886} + \cdots + \dfrac{(57-48.132)^2}{48.132} = 8.006\)

The critical value of \(\chi^2\) with 3 degrees of freedom is 7.815. Since 8.006 > 7.815, we reject the null hypothesis and conclude that the education level depends on gender at a 5% level of significance.

JMP | Statistical Discovery.™ From SAS.

Statistics Knowledge Portal

A free online introduction to statistics

The Chi-Square Test

What is a Chi-square test?

A Chi-square test is a hypothesis testing method. Two common Chi-square tests involve checking if observed frequencies in one or more categories match expected frequencies.

Is a Chi-square test the same as a χ² test?

Yes, χ is the Greek symbol Chi.

What are my choices?

If you have a single categorical variable, you use a Chi-square goodness of fit test. If you have two categorical variables, you use a Chi-square test of independence. There are other Chi-square tests, but these two are the most common.

Types of Chi-square tests

You use a Chi-square test for hypothesis tests about whether your data is as expected. The basic idea behind the test is to compare the observed values in your data to the expected values that you would see if the null hypothesis is true.

There are two commonly used Chi-square tests: the Chi-square goodness of fit test and the Chi-square test of independence . Both tests involve variables that divide your data into categories. As a result, people can be confused about which test to use. The table below compares the two tests.

Visit the individual pages for each type of Chi-square test to see examples along with details on assumptions and calculations.

Table 1: Choosing a Chi-square test

How to perform a Chi-square test

For both the Chi-square goodness of fit test and the Chi-square test of independence , you perform the same analysis steps, listed below. Visit the pages for each type of test to see these steps in action.

- Define your null and alternative hypotheses before collecting your data.

- Decide on the alpha value. This involves deciding the risk you are willing to take of drawing the wrong conclusion. For example, suppose you set α=0.05 when testing for independence. Here, you have decided on a 5% risk of concluding the two variables are not independent when in reality they are.

- Check the data for errors.

- Check the assumptions for the test. (Visit the pages for each test type for more detail on assumptions.)

- Perform the test and draw your conclusion.

Both Chi-square tests in the table above involve calculating a test statistic. The basic idea behind the tests is that you compare the actual data values with what would be expected if the null hypothesis is true. The test statistic involves finding the squared difference between actual and expected data values, and dividing that squared difference by the expected data value. You do this for each category (or each cell of the table) and add up the values.

Then, you compare the test statistic to a theoretical value from the Chi-square distribution . The theoretical value depends on both the alpha value and the degrees of freedom for your data. Visit the pages for each test type for detailed examples.

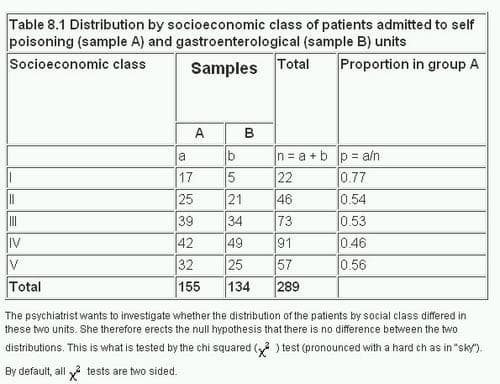

11.2: Chi-Square One-Sample Goodness-of-Fit Tests

Learning Objectives

- To understand how to use a chi-square test to judge whether a sample fits a particular population well.

Suppose we wish to determine if an ordinary-looking six-sided die is fair, or balanced, meaning that every face has probability \(1/6\) of landing on top when the die is tossed. We could toss the die dozens, maybe hundreds, of times and compare the actual number of times each face landed on top to the expected number, which would be \(1/6\) of the total number of tosses. We wouldn’t expect each number to be exactly \(1/6\) of the total, but it should be close. To be specific, suppose the die is tossed \(n=60\) times with the results summarized in Table \(\PageIndex{1}\). For ease of reference we add a column of expected frequencies, which in this simple example is simply a column of \(10s\). The result is shown as Table \(\PageIndex{2}\). In analogy with the previous section we call this an “updated” table. A measure of how much the data deviate from what we would expect to see if the die really were fair is the sum of the squares of the differences between the observed frequency \(O\) and the expected frequency \(E\) in each row, or, standardizing by dividing each square by the expected number, the sum

\[\sum \frac{(O-E)^2}{E} \nonumber \]

If we formulate the investigation as a test of hypotheses, the test is

\[H_0: \text{The die is fair}\\ vs.\\ H_a: \text{The die is not fair} \nonumber \]

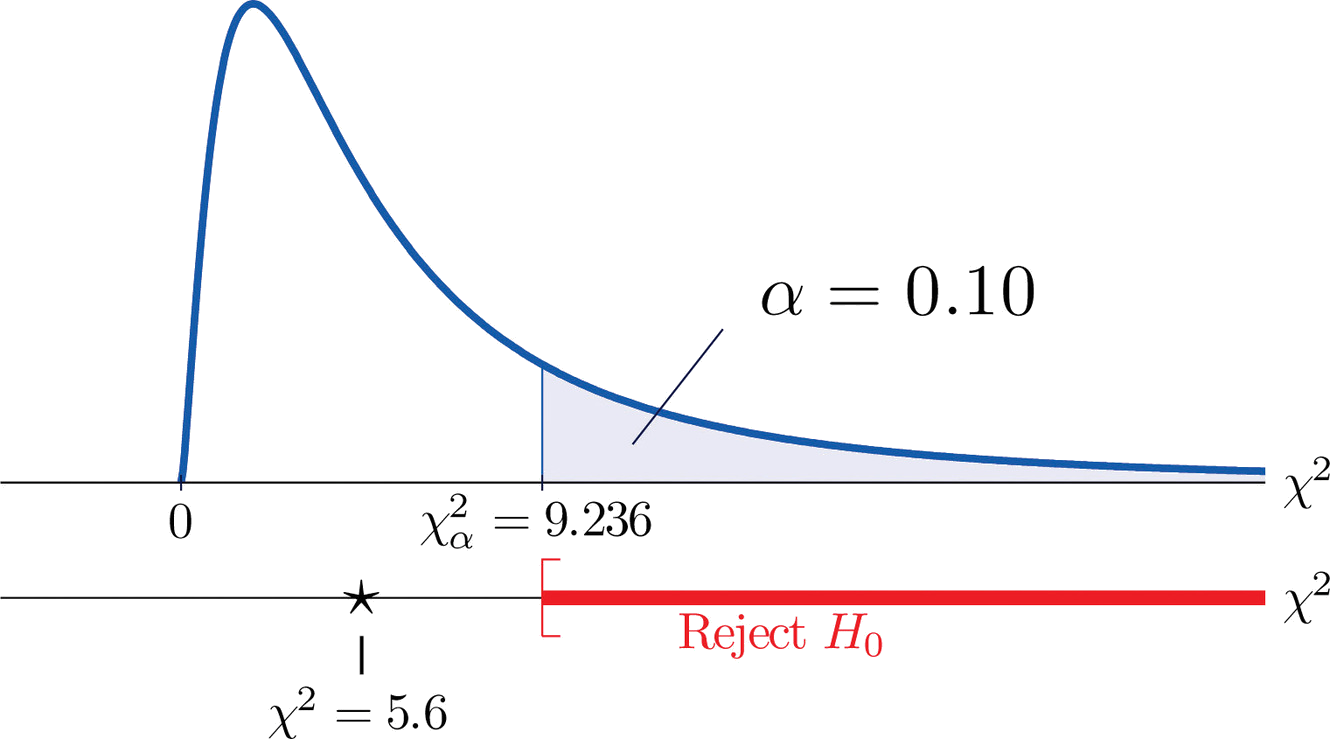

We would reject the null hypothesis that the die is fair only if the number \(\sum \frac{(O-E)^2}{E}\) is large, so the test is right-tailed. In this example the random variable \(\sum \frac{(O-E)^2}{E}\) has the chi-square distribution with five degrees of freedom. If we had decided at the outset to test at the \(10\%\) level of significance, the critical value defining the rejection region would be, reading from Figure 7.1.6, \(\chi _{\alpha }^{2}=\chi _{0.10}^{2}=9.236\), so that the rejection region would be the interval \([9.236,\infty )\). When we compute the value of the standardized test statistic using the numbers in the last two columns of Table \(\PageIndex{2}\), we obtain

\[\begin{align*} \sum \frac{(O-E)^2}{E} &= \frac{(-1)^2}{10}+\frac{(5)^2}{10}+\frac{(-1)^2}{10}+\frac{(-2)^2}{10}+\frac{(-4)^2}{10}+\frac{(3)^2}{10}\\ &= 0.1+2.5+0.1+0.4+1.6+0.9\\ &= 5.6 \end{align*} \nonumber \]

Since \(5.6<9.236\) the decision is not to reject \(H_0\). See Figure \(\PageIndex{1}\). The data do not provide sufficient evidence, at the \(10\%\) level of significance, to conclude that the die is loaded.
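The arithmetic above can be reproduced in a few lines of Python. In this sketch the observed counts are reconstructed from the differences \(O-E\) shown in the computation (each expected count is 10); the order in which they are assigned to the faces of the die is an assumption, and the variable names are hypothetical.

```python
# Observed counts reconstructed as E + (O - E), with E = 10 for every face
differences = [-1, 5, -1, -2, -4, 3]
expected = [10] * 6
observed = [e + d for e, d in zip(expected, differences)]

# Chi-square statistic: sum of (O - E)^2 / E over the six faces
chi2 = sum((o - e) ** 2 / e for o, e in zip(observed, expected))

critical_value = 9.236  # chi-square, 5 degrees of freedom, 10% level
reject_h0 = chi2 >= critical_value

print(sum(observed), round(chi2, 1), reject_h0)
```

The reconstructed counts add up to the 60 tosses, the statistic comes out at 5.6, and the null hypothesis is not rejected, in agreement with the conclusion above.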

In the general situation we consider a discrete random variable that can take \(I\) different values, \(x_1,\: x_2,\cdots ,x_I\), for which the default assumption is that the probability distribution is

\[\begin{array}{c|c c c c} x & x_1 & x_2 & \cdots & x_I \\ \hline P(x) &p_1 &p_2 &\cdots &p_I\\ \end{array} \nonumber \]

We wish to test the hypotheses:

\[H_0: \text{The assumed probability distribution for X is valid}\\ vs.\\ H_a: \text{The assumed probability distribution for X is not valid} \nonumber \]

We take a sample of size \(n\) and obtain a list of observed frequencies. This is shown in Table \(\PageIndex{3}\). Based on the assumed probability distribution we also have a list of expected frequencies, each of which is defined and computed by the formula

\[E_i=n\times p_i \nonumber \]

Table \(\PageIndex{3}\) is updated to Table \(\PageIndex{4}\) by adding the expected frequency for each value of \(X\). To simplify the notation we drop indices for the observed and expected frequencies and represent Table \(\PageIndex{4}\) by Table \(\PageIndex{5}\).

Here is the test statistic for the general hypothesis based on Table \(\PageIndex{5}\), together with the conditions under which it follows a chi-square distribution.

Test Statistic for Testing Goodness of Fit to a Discrete Probability Distribution

\[\chi ^2 =\sum \frac{(O-E)^2}{E} \nonumber \]

where the sum is over all the rows of the table (one for each value of \(X\)).

If

- the true probability distribution of \(X\) is as assumed, and

- the observed count \(O\) of each cell in Table \(\PageIndex{5}\) is at least \(5\),

then \(\chi ^2\) approximately follows a chi-square distribution with \(df=I-1\) degrees of freedom.

The test is known as a goodness-of-fit \(\chi ^2\) test since it tests the null hypothesis that the sample fits the assumed probability distribution well. It is always right-tailed, since deviation from the assumed probability distribution corresponds to large values of \(\chi ^2\).

Testing is done using either of the usual five-step procedures.

Example \(\PageIndex{1}\)

Table \(\PageIndex{6}\) shows the distribution of various ethnic groups in the population of a particular state based on a decennial U.S. census. Five years later a random sample of \(2,500\) residents of the state was taken, with the results given in Table \(\PageIndex{7}\) (along with the probability distribution from the census year). Test, at the \(1\%\) level of significance, whether there is sufficient evidence in the sample to conclude that the distribution of ethnic groups in this state five years after the census had changed from that in the census year.

We test using the critical value approach.

- Step 1 . The hypotheses of interest in this case can be expressed as \[H_0: \text{The distribution of ethnic groups has not changed}\\ vs.\\ H_a: \text{The distribution of ethnic groups has changed} \nonumber \]

- Step 2 . The distribution is chi-square.

- Step 3 . To compute the value of the test statistic we must first compute the expected number for each row of Table \(\PageIndex{7}\). Since \(n=2500\), using the formula \(E_i=n\times p_i\) and the values of \(p_i\) from either Table \(\PageIndex{6}\) or Table \(\PageIndex{7}\), \[E_1=2500\times 0.743=1857.5\\ E_2=2500\times 0.216=540\\ E_3=2500\times 0.012=30\\ E_4=2500\times 0.012=30\\ E_5=2500\times 0.008=20\\ E_6=2500\times 0.009=22.5 \nonumber \]

Table \(\PageIndex{7}\) is updated to Table \(\PageIndex{8}\).

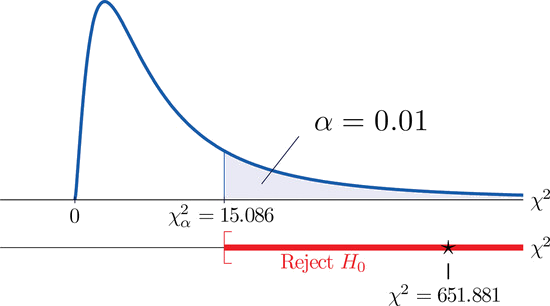

The value of the test statistic is

\[\begin{align*} \chi ^2 &= \sum \frac{(O-E)^2}{E}\\ &= \frac{(1732-1857.5)^2}{1857.5}+\frac{(538-540)^2}{540}+\frac{(32-30)^2}{30}+\frac{(42-30)^2}{30}+\frac{(133-20)^2}{20}+\frac{(23-22.5)^2}{22.5}\\ &= 651.881 \end{align*} \nonumber \]

Since the random variable takes six values, \(I=6\). Thus the test statistic follows the chi-square distribution with \(df=6-1=5\) degrees of freedom.

Since the test is right-tailed, the critical value is \(\chi _{0.01}^{2}\). Reading from Figure 7.1.6, \(\chi _{0.01}^{2}=15.086\), so the rejection region is \([15.086,\infty )\).

Since \(651.881>15.086\) the decision is to reject the null hypothesis. See Figure \(\PageIndex{2}\). The data provide sufficient evidence, at the \(1\%\) level of significance, to conclude that the ethnic distribution in this state has changed in the five years since the U.S. census.
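The same five-step calculation can be sketched as a small Python function. The observed counts and assumed probabilities are taken from the computations above; the function name goodness_of_fit is hypothetical, and the critical value is simply the tabled figure quoted in the example.

```python
def goodness_of_fit(observed, probabilities):
    """Return (chi-square statistic, df) for observed counts against assumed probabilities."""
    if len(observed) != len(probabilities):
        raise ValueError("observed and probabilities must have the same length")
    if abs(sum(probabilities) - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    n = sum(observed)
    expected = [n * p for p in probabilities]  # E_i = n * p_i
    stat = sum((o - e) ** 2 / e for o, e in zip(observed, expected))
    return stat, len(observed) - 1             # df = I - 1

observed = [1732, 538, 32, 42, 133, 23]
probabilities = [0.743, 0.216, 0.012, 0.012, 0.008, 0.009]

chi2, df = goodness_of_fit(observed, probabilities)
critical_value = 15.086  # chi-square, 5 degrees of freedom, 1% level
reject_h0 = chi2 >= critical_value

print(round(chi2, 3), df, reject_h0)
```

Almost all of the statistic comes from the fifth category, where 133 residents were observed against an expected 20.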

Key Takeaway

- The chi-square goodness-of-fit test can be used to evaluate the hypothesis that a sample is taken from a population with an assumed specific probability distribution.

Chi-Square Test of Independence

SPSS Tutorials: Chi-Square Test of Independence

Sample Data Files

Our tutorials reference a dataset called "sample" in many examples. If you'd like to download the sample dataset to work through the examples, choose one of the files below:

- Data definitions (*.pdf)

- Data - Comma delimited (*.csv)

- Data - Tab delimited (*.txt)

- Data - Excel format (*.xlsx)

- Data - SAS format (*.sas7bdat)

- Data - SPSS format (*.sav)

- SPSS Syntax (*.sps) Syntax to add variable labels, value labels, set variable types, and compute several recoded variables used in later tutorials.

- SAS Syntax (*.sas) Syntax to read the CSV-format sample data and set variable labels and formats/value labels.

The Chi-Square Test of Independence determines whether there is an association between categorical variables (i.e., whether the variables are independent or related). It is a nonparametric test.

This test is also known as:

- Chi-Square Test of Association.

This test utilizes a contingency table to analyze the data. A contingency table (also known as a cross-tabulation, crosstab, or two-way table) is an arrangement in which data is classified according to two categorical variables. The categories for one variable appear in the rows, and the categories for the other variable appear in the columns. Each variable must have two or more categories. Each cell reflects the total count of cases for a specific pair of categories.

There are several tests that go by the name "chi-square test" in addition to the Chi-Square Test of Independence. Look for context clues in the data and research question to determine which form of the chi-square test is being used.

Common Uses

The Chi-Square Test of Independence is commonly used to test the following:

- Statistical independence or association between two categorical variables.

The Chi-Square Test of Independence can only compare categorical variables. It cannot make comparisons between continuous variables or between categorical and continuous variables. Additionally, the Chi-Square Test of Independence only assesses associations between categorical variables, and cannot provide any inferences about causation.

If your categorical variables represent "pre-test" and "post-test" observations, then the chi-square test of independence is not appropriate. This is because the assumption of the independence of observations is violated. In this situation, McNemar's Test is appropriate.

Data Requirements

Your data must meet the following requirements:

- Two categorical variables.

- Two or more categories (groups) for each variable.

- There is no relationship between the subjects in each group.

- The categorical variables are not "paired" in any way (e.g. pre-test/post-test observations).

- Expected frequencies for each cell are at least 1.

- Expected frequencies should be at least 5 for the majority (80%) of the cells.
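The two expected-frequency requirements can be checked before running the test. The following is a minimal sketch in plain Python, not part of SPSS; the function names and the example counts are hypothetical and chosen only for illustration.

```python
def expected_counts(observed):
    """Expected cell counts under independence: row total * column total / grand total."""
    row_totals = [sum(row) for row in observed]
    col_totals = [sum(col) for col in zip(*observed)]
    grand_total = sum(row_totals)
    return [[r * c / grand_total for c in col_totals] for r in row_totals]


def check_expected_counts(observed):
    """Return (all cells >= 1, share of cells >= 5) for the expected counts."""
    cells = [e for row in expected_counts(observed) for e in row]
    all_at_least_1 = all(e >= 1 for e in cells)
    share_at_least_5 = sum(e >= 5 for e in cells) / len(cells)
    return all_at_least_1, share_at_least_5


# Hypothetical 3x2 table of observed counts (not from the sample dataset).
table = [[30, 25], [12, 18], [3, 2]]
ok_min, share = check_expected_counts(table)

# Both requirements must hold: every expected count >= 1 and at least 80% >= 5.
requirements_met = ok_min and share >= 0.80
print(ok_min, round(share, 3), requirements_met)
```

In this made-up table the third row is sparse, so only four of the six expected counts reach 5 and the 80% guideline is not satisfied.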

The null hypothesis (\(H_0\)) and alternative hypothesis (\(H_1\)) of the Chi-Square Test of Independence can be expressed in two different but equivalent ways:

\(H_0\): "[Variable 1] is independent of [Variable 2]"

\(H_1\): "[Variable 1] is not independent of [Variable 2]"

or, equivalently,

\(H_0\): "[Variable 1] is not associated with [Variable 2]"

\(H_1\): "[Variable 1] is associated with [Variable 2]"

Test Statistic

The test statistic for the Chi-Square Test of Independence is denoted \(\chi^{2}\), and is computed as:

$$ \chi^{2} = \sum_{i=1}^{R}{\sum_{j=1}^{C}{\frac{(o_{ij} - e_{ij})^{2}}{e_{ij}}}} $$

where \(R\) is the number of rows and \(C\) is the number of columns in the table, and

\(o_{ij}\) is the observed cell count in the \(i\)th row and \(j\)th column of the table

\(e_{ij}\) is the expected cell count in the \(i\)th row and \(j\)th column of the table, computed as

$$ e_{ij} = \frac{\textrm{row } i \textrm{ total} \times \textrm{col } j \textrm{ total}}{\textrm{grand total}} $$

The quantity \((o_{ij} - e_{ij})\) is sometimes referred to as the residual of cell \((i, j)\), denoted \(r_{ij}\).

The calculated \(\chi^{2}\) value is then compared to the critical value from the \(\chi^{2}\) distribution table with degrees of freedom \(df = (R - 1)(C - 1)\) and the chosen significance level. If the calculated \(\chi^{2}\) value is greater than the critical \(\chi^{2}\) value, then we reject the null hypothesis.
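To make the formula concrete, here is a small sketch in plain Python that computes the expected counts, the test statistic, and the degrees of freedom for a table of observed counts. The function name and the 2x3 table are hypothetical; the critical value 5.991 is the standard table value for df = 2 at the 0.05 significance level.

```python
def chi_square_statistic(observed):
    """Return (chi-square statistic, degrees of freedom) for a table of counts."""
    row_totals = [sum(row) for row in observed]
    col_totals = [sum(col) for col in zip(*observed)]
    grand_total = sum(row_totals)

    chi2 = 0.0
    for i, row in enumerate(observed):
        for j, o_ij in enumerate(row):
            # Expected count: row i total * col j total / grand total
            e_ij = row_totals[i] * col_totals[j] / grand_total
            chi2 += (o_ij - e_ij) ** 2 / e_ij

    df = (len(row_totals) - 1) * (len(col_totals) - 1)
    return chi2, df


# Hypothetical 2x3 table of observed counts (illustration only).
observed = [[20, 30, 50], [30, 30, 40]]
chi2, df = chi_square_statistic(observed)

# Critical value from a chi-square table for df = 2 and alpha = 0.05.
critical_value = 5.991
reject_null = chi2 > critical_value
print(round(chi2, 3), df, reject_null)
```

For this made-up table the statistic is below the critical value, so the null hypothesis of independence would not be rejected.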

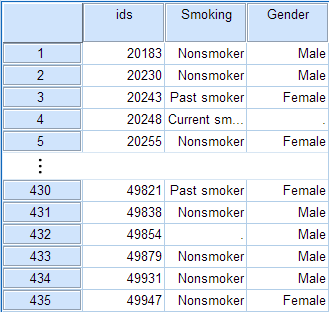

Data Set-Up

There are two different ways in which your data may be set up initially. The format of the data will determine how to proceed with running the Chi-Square Test of Independence. At minimum, your data should include two categorical variables (represented in columns) that will be used in the analysis. The categorical variables must include at least two groups. Your data may be formatted in either of the following ways:

If you have the raw data (each row is a subject):

- Cases represent subjects, and each subject appears once in the dataset. That is, each row represents an observation from a unique subject.

- The dataset contains at least two nominal categorical variables (string or numeric). The categorical variables used in the test must have two or more categories.

If you have frequencies (each row is a combination of factors):

An example of using the chi-square test for this type of data can be found in the Weighting Cases tutorial.

- Each row in the dataset represents a distinct combination of the categories.

- The value in the "frequency" column for a given row is the number of unique subjects with that combination of categories.

- You should have three variables: one for each of the two categorical variables, and a third representing the number of occurrences of that particular combination of categories.

- Before running the test, you must activate Weight Cases, and set the frequency variable as the weight.
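The two layouts carry the same information. The sketch below, in plain Python rather than SPSS, builds the same contingency table from each one. All of the data values and names here are hypothetical and exist only to illustrate the idea; summing the frequency column plays the role that Weight Cases plays in SPSS.

```python
from collections import Counter

# Hypothetical raw data: one (smoking, gender) pair per subject.
raw_rows = [
    ("Nonsmoker", "Male"), ("Nonsmoker", "Female"), ("Nonsmoker", "Female"),
    ("Past smoker", "Male"),
    ("Current smoker", "Female"), ("Current smoker", "Female"),
]

# Hypothetical frequency data: one row per combination, plus a count.
freq_rows = [
    ("Nonsmoker", "Male", 1),
    ("Nonsmoker", "Female", 2),
    ("Past smoker", "Male", 1),
    ("Current smoker", "Female", 2),
]

# Raw layout: count how often each combination occurs.
counts_from_raw = Counter(raw_rows)

# Frequency layout: add up the frequency column for each combination.
counts_from_freq = Counter()
for row_cat, col_cat, freq in freq_rows:
    counts_from_freq[(row_cat, col_cat)] += freq


def to_table(counts, row_levels, col_levels):
    """Arrange the counts as a rows-by-columns table; missing cells become 0."""
    return [[counts[(r, c)] for c in col_levels] for r in row_levels]


row_levels = ["Nonsmoker", "Past smoker", "Current smoker"]
col_levels = ["Male", "Female"]
table_raw = to_table(counts_from_raw, row_levels, col_levels)
table_freq = to_table(counts_from_freq, row_levels, col_levels)
print(table_raw == table_freq)
```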

Run a Chi-Square Test of Independence

In SPSS, the Chi-Square Test of Independence is an option within the Crosstabs procedure. Recall that the Crosstabs procedure creates a contingency table or two-way table, which summarizes the distribution of two categorical variables.

To create a crosstab and perform a chi-square test of independence, click Analyze > Descriptive Statistics > Crosstabs.

A Row(s): One or more variables to use in the rows of the crosstab(s). You must enter at least one Row variable.

B Column(s): One or more variables to use in the columns of the crosstab(s). You must enter at least one Column variable.

Also note that if you specify one row variable and two or more column variables, SPSS will print crosstabs for each pairing of the row variable with the column variables. The same is true if you have one column variable and two or more row variables, or if you have multiple row and column variables. A chi-square test will be produced for each table. Additionally, if you include a layer variable, chi-square tests will be run for each pair of row and column variables within each level of the layer variable.

C Layer: An optional "stratification" variable. If you have turned on the chi-square test results and have specified a layer variable, SPSS will subset the data with respect to the categories of the layer variable, then run chi-square tests between the row and column variables. (This is not equivalent to testing for a three-way association, or testing for an association between the row and column variable after controlling for the layer variable.)

D Statistics: Opens the Crosstabs: Statistics window, which contains fifteen different inferential statistics for comparing categorical variables.

To run the Chi-Square Test of Independence, make sure that the Chi-square box is checked.

E Cells: Opens the Crosstabs: Cell Display window, which controls which output is displayed in each cell of the crosstab. (Note: in a crosstab, the cells are the inner sections of the table. They show the number of observations for a given combination of the row and column categories.) There are three options in this window that are useful (but optional) when performing a Chi-Square Test of Independence:

1 Observed: The actual number of observations for a given cell. This option is enabled by default.

2 Expected: The expected number of observations for that cell (see the test statistic formula).

3 Unstandardized Residuals: The "residual" value, computed as observed minus expected.

F Format: Opens the Crosstabs: Table Format window, which specifies how the rows of the table are sorted.

Example: Chi-square Test for 3x2 Table

Problem statement.

In the sample dataset, respondents were asked their gender and whether or not they were a cigarette smoker. There were three answer choices: Nonsmoker, Past smoker, and Current smoker. Suppose we want to test for an association between smoking behavior (nonsmoker, current smoker, or past smoker) and gender (male or female) using a Chi-Square Test of Independence (we'll use α = 0.05).

Before the Test

Before we test for "association", it is helpful to understand what an "association" and a "lack of association" between two categorical variables looks like. One way to visualize this is using clustered bar charts. Let's look at the clustered bar chart produced by the Crosstabs procedure.

This is the chart that is produced if you use Smoking as the row variable and Gender as the column variable (running the syntax later in this example):

The "clusters" in a clustered bar chart are determined by the row variable (in this case, the smoking categories). The color of the bars is determined by the column variable (in this case, gender). The height of each bar represents the total number of observations in that particular combination of categories.

This type of chart emphasizes the differences within the categories of the row variable. Notice how within each smoking category, the heights of the bars (i.e., the number of males and females) are very similar. That is, there are an approximately equal number of male and female nonsmokers; approximately equal number of male and female past smokers; approximately equal number of male and female current smokers. If there were an association between gender and smoking, we would expect these counts to differ between groups in some way.

Running the Test

- Open the Crosstabs dialog (Analyze > Descriptive Statistics > Crosstabs).

- Select Smoking as the row variable, and Gender as the column variable.

- Click Statistics. Check Chi-square, then click Continue.

- (Optional) Check the box for Display clustered bar charts.

The first table is the Case Processing summary, which tells us the number of valid cases used for analysis. Only cases with nonmissing values for both smoking behavior and gender can be used in the test.

The next tables are the crosstabulation and chi-square test results.

The key result in the Chi-Square Tests table is the Pearson Chi-Square.

- The value of the test statistic is 3.171.

- The footnote for this statistic pertains to the expected cell count assumption (i.e., expected cell counts are all greater than 5): no cells had an expected count less than 5, so this assumption was met.

- Because the test statistic is based on a 3x2 crosstabulation table, the degrees of freedom (df) for the test statistic is \(df = (R - 1)(C - 1) = (3 - 1)(2 - 1) = 2 \times 1 = 2\).

- The corresponding p-value of the test statistic is p = 0.205.

Decision and Conclusions

Since the p-value is greater than our chosen significance level ( α = 0.05), we do not reject the null hypothesis. Rather, we conclude that there is not enough evidence to suggest an association between gender and smoking.

Based on the results, we can state the following:

- No association was found between gender and smoking behavior (\(\chi^{2}(2) = 3.171\), \(p = 0.205\)).
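The reported p-value can be reproduced by hand from the test statistic. The sketch below relies on a special property of the chi-square distribution with exactly 2 degrees of freedom: the probability of exceeding a value \(x\) is \(e^{-x/2}\). This shortcut is an assumption specific to df = 2 and does not carry over to other degrees of freedom; the variable names are illustrative.

```python
import math

chi2 = 3.171   # Pearson Chi-Square value from the output described above
df = 2         # (3 - 1) * (2 - 1)
alpha = 0.05   # chosen significance level

# Closed form valid only for df = 2: P(X > x) = exp(-x / 2).
p_value = math.exp(-chi2 / 2)

# Inverting the same formula gives the critical value for df = 2.
critical_value = -2 * math.log(alpha)

reject_null = chi2 > critical_value
print(round(p_value, 3), round(critical_value, 3), reject_null)
```

Comparing the statistic to the critical value and comparing the p-value to α are two views of the same decision, and both lead to not rejecting the null hypothesis here.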

Example: Chi-square Test for 2x2 Table

Let's continue the row and column percentage example from the Crosstabs tutorial, which described the relationship between the variables RankUpperUnder (upperclassman/underclassman) and LivesOnCampus (lives on campus/lives off-campus). Recall that the column percentages of the crosstab appeared to indicate that upperclassmen were less likely than underclassmen to live on campus:

- The proportion of underclassmen who live off campus is 34.8%, or 79/227.

- The proportion of underclassmen who live on campus is 65.2%, or 148/227.

- The proportion of upperclassmen who live off campus is 94.4%, or 152/161.

- The proportion of upperclassmen who live on campus is 5.6%, or 9/161.

Suppose that we want to test the association between class rank and living on campus using a Chi-Square Test of Independence (using α = 0.05).
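As a hand check on what the test will compute, the counts behind the four proportions above can be run through the test statistic formula. This is a sketch in plain Python, not SPSS output. It computes the uncorrected Pearson statistic, and the p-value line uses a shortcut that holds only for df = 1, namely \(P(\chi^{2}_{1} > x) = \operatorname{erfc}(\sqrt{x/2})\).

```python
import math

# Observed counts taken from the proportions listed above.
# Rows: underclassmen, upperclassmen. Columns: off campus, on campus.
observed = [[79, 148], [152, 9]]

row_totals = [sum(row) for row in observed]        # [227, 161]
col_totals = [sum(col) for col in zip(*observed)]  # [231, 157]
grand_total = sum(row_totals)                      # 388

# Expected counts under independence: row total * column total / grand total.
expected = [[r * c / grand_total for c in col_totals] for r in row_totals]

# Pearson chi-square: sum of (observed - expected)^2 / expected over all cells.
chi2 = sum(
    (o - e) ** 2 / e
    for obs_row, exp_row in zip(observed, expected)
    for o, e in zip(obs_row, exp_row)
)
df = (len(row_totals) - 1) * (len(col_totals) - 1)

# Valid for df = 1 only: a chi-square(1) variable is a squared standard normal.
p_value = math.erfc(math.sqrt(chi2 / 2))

print(round(chi2, 3), df, p_value < 0.05)
```

The smallest expected count in this table is well above 5, so the expected-frequency requirements are comfortably met.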

The clustered bar chart from the Crosstabs procedure can act as a complement to the column percentages above. Let's look at the chart produced by the Crosstabs procedure for this example:

The height of each bar represents the total number of observations in that particular combination of categories. The "clusters" are formed by the row variable (in this case, class rank). This type of chart emphasizes the differences within the underclassmen and upperclassmen groups. Here, the difference between the number of students living on campus and the number living off campus is much starker within the class rank groups.

- Select RankUpperUnder as the row variable, and LiveOnCampus as the column variable.