The Future of AI Research: 20 Thesis Ideas for Undergraduate Students in Machine Learning and Deep Learning for 2023!

A comprehensive guide for crafting an original and innovative thesis in the field of AI.

By Aarafat Islam on 2023-01-11

“The beauty of machine learning is that it can be applied to any problem you want to solve, as long as you can provide the computer with enough examples.” — Andrew Ng

This article provides a list of 20 potential thesis ideas for an undergraduate program in machine learning and deep learning in 2023. Each thesis idea includes an introduction, which presents a brief overview of the topic and the research objectives. The ideas cover different areas of machine learning and deep learning, such as computer vision, natural language processing, robotics, finance, drug discovery, and more. The article also includes explanations, examples, and conclusions for each thesis idea, which can help guide the research and provide a clear understanding of the potential contributions and outcomes of the proposed research. It also emphasizes the importance of originality and the need for proper citation in order to avoid plagiarism.

1. Investigating the use of Generative Adversarial Networks (GANs) in medical imaging: A deep learning approach to improve the accuracy of medical diagnoses.

Introduction: Medical imaging is an important tool in the diagnosis and treatment of various medical conditions. However, accurately interpreting medical images can be challenging, especially for less experienced doctors. This thesis aims to explore the use of GANs in medical imaging, in order to improve the accuracy of medical diagnoses.

2. Exploring the use of deep learning in natural language generation (NLG): An analysis of the current state-of-the-art and future potential.

Introduction: Natural language generation is an important field in natural language processing (NLP) that deals with creating human-like text automatically. Deep learning has shown promising results in NLP tasks such as machine translation, sentiment analysis, and question-answering. This thesis aims to explore the use of deep learning in NLG and analyze the current state-of-the-art models, as well as potential future developments.

3. Development and evaluation of deep reinforcement learning (RL) for robotic navigation and control.

Introduction: Robotic navigation and control are challenging tasks, which require a high degree of intelligence and adaptability. Deep RL has shown promising results in various robotics tasks, such as robotic arm control, autonomous navigation, and manipulation. This thesis aims to develop and evaluate a deep RL-based approach for robotic navigation and control and evaluate its performance in various environments and tasks.

4. Investigating the use of deep learning for drug discovery and development.

Introduction: Drug discovery and development is a time-consuming and expensive process, which often involves high failure rates. Deep learning has been used to improve various tasks in bioinformatics and biotechnology, such as protein structure prediction and gene expression analysis. This thesis aims to investigate the use of deep learning for drug discovery and development and examine its potential to improve the efficiency and accuracy of the drug development process.

5. Comparison of deep learning and traditional machine learning methods for anomaly detection in time series data.

Introduction: Anomaly detection in time series data is a challenging task, which is important in various fields such as finance, healthcare, and manufacturing. Deep learning methods have been used to improve anomaly detection in time series data, while traditional machine learning methods have been widely used as well. This thesis aims to compare deep learning and traditional machine learning methods for anomaly detection in time series data and examine their respective strengths and weaknesses.
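As a rough illustration of what a traditional baseline in such a comparison might look like, here is a minimal sketch of a rolling z-score detector. The function name, window size, and threshold are illustrative assumptions, not a recommended configuration; a deep learning model would be evaluated against a baseline of this kind.

```python
from statistics import mean, stdev


def rolling_zscore_anomalies(series, window=5, threshold=3.0):
    """Return indices whose value deviates strongly from the preceding window.

    window and threshold are hypothetical defaults chosen for illustration.
    """
    anomalies = []
    for i in range(window, len(series)):
        history = series[i - window:i]
        mu, sigma = mean(history), stdev(history)
        # Skip flat windows to avoid dividing by zero.
        if sigma > 0 and abs(series[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies


readings = [10, 11, 10, 12, 11, 10, 50, 11, 10, 12]
flagged = rolling_zscore_anomalies(readings)
print(flagged)
```

Note that the spike at index 6 inflates the statistics of the following windows, which is one of the weaknesses a thesis could examine.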

6. Use of deep transfer learning in speech recognition and synthesis.

Introduction: Speech recognition and synthesis are areas of natural language processing that focus on converting spoken language to text and vice versa. Transfer learning has been widely used in deep learning-based speech recognition and synthesis systems to improve their performance by reusing the features learned from other tasks. This thesis aims to investigate the use of transfer learning in speech recognition and synthesis and how it improves the performance of the system in comparison to traditional methods.

7. The use of deep learning for financial prediction.

Introduction: Financial prediction is a challenging task that requires a high degree of intelligence and adaptability, especially in the field of stock market prediction. Deep learning has shown promising results in various financial prediction tasks, such as stock price prediction and credit risk analysis. This thesis aims to investigate the use of deep learning for financial prediction and examine its potential to improve the accuracy of financial forecasting.

8. Investigating the use of deep learning for computer vision in agriculture.

Introduction: Computer vision has the potential to revolutionize the field of agriculture by improving crop monitoring, precision farming, and yield prediction. Deep learning has been used to improve various computer vision tasks, such as object detection, semantic segmentation, and image classification. This thesis aims to investigate the use of deep learning for computer vision in agriculture and examine its potential to improve the efficiency and accuracy of crop monitoring and precision farming.

9. Development and evaluation of deep learning models for generative design in engineering and architecture.

Introduction: Generative design is a powerful tool in engineering and architecture that can help optimize designs and reduce human error. Deep learning has been used to improve various generative design tasks, such as design optimization and form generation. This thesis aims to develop and evaluate deep learning models for generative design in engineering and architecture and examine their potential to improve the efficiency and accuracy of the design process.

10. Investigating the use of deep learning for natural language understanding.

Introduction: Natural language understanding is a complex task of natural language processing that involves extracting meaning from text. Deep learning has been used to improve various NLP tasks, such as machine translation, sentiment analysis, and question-answering. This thesis aims to investigate the use of deep learning for natural language understanding and examine its potential to improve the efficiency and accuracy of natural language understanding systems.

11. Comparing deep learning and traditional machine learning methods for image compression.

Introduction: Image compression is an important task in image processing and computer vision. It enables faster data transmission and storage of image files. Deep learning methods have been used to improve image compression, while traditional machine learning methods have been widely used as well. This thesis aims to compare deep learning and traditional machine learning methods for image compression and examine their respective strengths and weaknesses.

12. Using deep learning for sentiment analysis in social media.

Introduction: Sentiment analysis in social media is an important task that can help businesses and organizations understand their customers’ opinions and feedback. Deep learning has been used to improve sentiment analysis in social media, by training models on large datasets of social media text. This thesis aims to use deep learning for sentiment analysis in social media, and evaluate its performance against traditional machine learning methods.

13. Investigating the use of deep learning for image generation.

Introduction: Image generation is a task in computer vision that involves creating new images from scratch or modifying existing images. Deep learning has been used to improve various image generation tasks, such as super-resolution, style transfer, and face generation. This thesis aims to investigate the use of deep learning for image generation and examine its potential to improve the quality and diversity of generated images.

14. Development and evaluation of deep learning models for anomaly detection in cybersecurity.

Introduction: Anomaly detection in cybersecurity is an important task that can help detect and prevent cyber-attacks. Deep learning has been used to improve various anomaly detection tasks, such as intrusion detection and malware detection. This thesis aims to develop and evaluate deep learning models for anomaly detection in cybersecurity and examine their potential to improve the efficiency and accuracy of cybersecurity systems.

15. Investigating the use of deep learning for natural language summarization.

Introduction: Natural language summarization is an important task in natural language processing that involves creating a condensed version of a text that preserves its main meaning. Deep learning has been used to improve various natural language summarization tasks, such as document summarization and headline generation. This thesis aims to investigate the use of deep learning for natural language summarization and examine its potential to improve the efficiency and accuracy of natural language summarization systems.

16. Development and evaluation of deep learning models for facial expression recognition.

Introduction: Facial expression recognition is an important task in computer vision and has many practical applications, such as human-computer interaction, emotion recognition, and psychological studies. Deep learning has been used to improve facial expression recognition, by training models on large datasets of images. This thesis aims to develop and evaluate deep learning models for facial expression recognition and examine their performance against traditional machine learning methods.

17. Investigating the use of deep learning for generative models in music and audio.

Introduction: Music and audio synthesis is an important task in audio processing, which has many practical applications, such as music generation and speech synthesis. Deep learning has been used to improve generative models for music and audio, by training models on large datasets of audio data. This thesis aims to investigate the use of deep learning for generative models in music and audio and examine its potential to improve the quality and diversity of generated audio.

18. Study the comparison of deep learning models with traditional algorithms for anomaly detection in network traffic.

Introduction: Anomaly detection in network traffic is an important task that can help detect and prevent cyber-attacks. Deep learning models have been used for this task, and traditional methods such as clustering and rule-based systems are widely used as well. This thesis aims to compare deep learning models with traditional algorithms for anomaly detection in network traffic and analyze the trade-offs between the models in terms of accuracy and scalability.

19. Investigating the use of deep learning for improving recommender systems.

Introduction: Recommender systems are widely used in many applications such as online shopping, music streaming, and movie streaming. Deep learning has been used to improve the performance of recommender systems, by training models on large datasets of user-item interactions. This thesis aims to investigate the use of deep learning for improving recommender systems and compare its performance with traditional content-based and collaborative filtering approaches.

20. Development and evaluation of deep learning models for multi-modal data analysis.

Introduction: Multi-modal data analysis is the task of analyzing and understanding data from multiple sources such as text, images, and audio. Deep learning has been used to improve multi-modal data analysis, by training models on large datasets of multi-modal data. This thesis aims to develop and evaluate deep learning models for multi-modal data analysis and analyze their potential to improve performance in comparison to single-modal models.

I hope that this article has provided you with a useful guide for your thesis research in machine learning and deep learning. Remember to conduct a thorough literature review and to include proper citations in your work, as well as to be original in your research to avoid plagiarism. I wish you all the best of luck with your thesis and your research endeavors!

A list of completed theses and new thesis topics from the Computer Vision Group.

Are you about to start a BSc or MSc thesis? Please read our instructions for preparing and delivering your work.

Below we list possible thesis topics for Bachelor and Master students in the areas of Computer Vision, Machine Learning, Deep Learning and Pattern Recognition. The project descriptions leave plenty of room for your own ideas. If you would like to discuss a topic in detail, please contact the supervisor listed below and Prof. Paolo Favaro to schedule a meeting. Note that for MSc students in Computer Science it is required that the official advisor is a professor in CS.

AI deconvolution of light microscopy images

Level: master.

Background Light microscopy has become an indispensable tool in life sciences research. Deconvolution is an important image processing step that improves the quality of microscopy images by removing out-of-focus light, increasing resolution, and improving the signal-to-noise ratio. Classical deconvolution methods, such as regularisation or blind deconvolution, are currently implemented in numerous commercial software packages and widely used in research. AI deconvolution algorithms have recently been introduced and are under active development, as they have shown high application potential.

Aim Adaptation of available AI algorithms for deconvolution of microscopy images. Validation of these methods against state-of-the-art commercially available deconvolution software.

Material and Methods The student will implement and further develop available AI deconvolution methods and acquire test microscopy images of different modalities. The performance of the developed AI algorithms will be validated against available commercial deconvolution software.

- AI algorithm development and implementation: 50%.

- Data acquisition: 10%.

- Comparison of performance: 40%.

Requirements

- Interest in imaging.

- Solid knowledge of AI.

- Good programming skills.

Supervisors Paolo Favaro, Guillaume Witz, Yury Belyaev.

Institutes Computer Vision Group, Digital Science Lab, Microscopy Imaging Center.

Contact Yury Belyaev, Microscopy Imaging Center, [email protected] , +41 78 899 0110.

Instance segmentation of cryo-ET images

Level: bachelor/master.

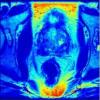

In the 1600s, a pioneering Dutch scientist named Antonie van Leeuwenhoek embarked on a remarkable journey that would forever transform our understanding of the natural world. Armed with a simple yet ingenious invention, the light microscope, he delved into uncharted territory, peering through its lens to reveal the hidden wonders of microscopic structures. Fast forward to today, where cryo-electron tomography (cryo-ET) has emerged as a groundbreaking technique, allowing researchers to study proteins within their natural cellular environments. Proteins, functioning as vital nano-machines, play crucial roles in life and understanding their localization and interactions is key to both basic research and disease comprehension. However, cryo-ET images pose challenges due to inherent noise and a scarcity of annotated data for training deep learning models.

Credit: S. Albert et al./PNAS (CC BY 4.0)

To address these challenges, this project aims to develop a self-supervised pipeline utilizing diffusion models for instance segmentation in cryo-ET images. By leveraging the power of diffusion models, which iteratively diffuse information to capture underlying patterns, the pipeline aims to refine and accurately segment cryo-ET images. Self-supervised learning, which relies on unlabeled data, reduces the dependence on extensive manual annotations. Successful implementation of this pipeline could revolutionize the field of structural biology, facilitating the analysis of protein distribution and organization within cellular contexts. Moreover, it has the potential to alleviate the limitations posed by limited annotated data, enabling more efficient extraction of valuable information from cryo-ET images and advancing biomedical applications by enhancing our understanding of protein behavior.

Methods The segmentation pipeline for cryo-electron tomography (cryo-ET) images consists of two stages: training a diffusion model for image generation and training an instance segmentation U-Net using synthetic and real segmentation masks.

1. Diffusion Model Training:
   a. Data Collection: Collect and curate cryo-ET image datasets from the EMPIAR database (https://www.ebi.ac.uk/empiar/).
   b. Architecture Design: Select an appropriate architecture for the diffusion model.
   c. Model Evaluation: Cryo-ET experts will help assess image quality and fidelity through visual inspection and quantitative measures.

2. Building the Segmentation Dataset:
   a. Synthetic and real mask generation: Use the trained diffusion model to generate synthetic cryo-ET images. The diffusion process will be seeded from either a real or a synthetic segmentation mask. This will yield pairs of cryo-ET images and segmentation masks.

3. Instance Segmentation U-Net Training:
   a. Architecture Design: Choose an appropriate instance segmentation U-Net architecture.
   b. Model Evaluation: Evaluate the trained U-Net using precision, recall, and F1 score metrics.

By combining the diffusion model for cryo-ET image generation and the instance segmentation U-Net, this pipeline provides an efficient and accurate approach to segment structures in cryo-ET images, facilitating further analysis and interpretation.
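The evaluation metrics named in the last step can be sketched as follows. This is a simplified, pixel-wise version on flattened binary masks, which is an assumption made for brevity: an instance segmentation evaluation would normally first match predicted instances to reference instances and then count matches. The function name is hypothetical.

```python
def precision_recall_f1(pred, target):
    """Pixel-wise precision, recall and F1 for two flat binary masks.

    Simplifying assumption: masks are compared pixel by pixel, not
    instance by instance.
    """
    tp = sum(1 for p, t in zip(pred, target) if p and t)
    fp = sum(1 for p, t in zip(pred, target) if p and not t)
    fn = sum(1 for p, t in zip(pred, target) if not p and t)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


# One true positive, one false positive, one false negative.
scores = precision_recall_f1([1, 1, 0, 0], [1, 0, 1, 0])
print(scores)
```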

References 1. Kwon, Diana. "The secret lives of cells-as never seen before." Nature 598.7882 (2021): 558-560. 2. Moebel, Emmanuel, et al. "Deep learning improves macromolecule identification in 3D cellular cryo-electron tomograms." Nature methods 18.11 (2021): 1386-1394. 3. Rice, Gavin, et al. "TomoTwin: generalized 3D localization of macromolecules in cryo-electron tomograms with structural data mining." Nature Methods (2023): 1-10.

Contacts Prof. Thomas Lemmin Institute of Biochemistry and Molecular Medicine Bühlstrasse 28, 3012 Bern ( [email protected] )

Prof. Paolo Favaro Institute of Computer Science Neubrückstrasse 10 3012 Bern ( [email protected] )

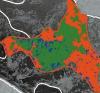

Adding and removing multiple sclerosis lesions in imaging with diffusion networks

Background Multiple sclerosis (MS) lesions are the result of demyelination: they appear as dark spots on T1-weighted MRI imaging and as bright spots on FLAIR MRI imaging. Image analysis for MS patients requires both the accurate detection of new and enhancing lesions, and the assessment of atrophy via local thickness and/or volume changes in the cortex. Detection of new and growing lesions is possible using deep learning, but is made difficult by the relative lack of training data. Meanwhile, cortical morphometry can be affected by the presence of lesions, meaning that removing lesions prior to morphometry may be more robust. Existing ‘lesion filling’ methods are rather crude, yielding unrealistic-appearing brains where the borders of the removed lesions are clearly visible.

Aim: Denoising diffusion networks are the current gold standard in MRI image generation [1]. We aim to leverage this technology to remove and add lesions to existing MRI images. This will allow us to create realistic synthetic MRI images for training and validating MS lesion segmentation algorithms, and for investigating the sensitivity of morphometry software to the presence of MS lesions at a variety of lesion load levels.

Materials and Methods: A large, annotated, heterogeneous dataset of MRI data from MS patients, as well as images of healthy controls without white matter lesions, will be available for developing the method. The student will work in a research group with a long track record in applying deep learning methods to neuroimaging data, as well as experience training denoising diffusion networks.

Nature of the Thesis:

Literature review: 10%

Replication of Blob Loss paper: 10%

Implementation of the sliding window metrics: 10%

Training on MS lesion segmentation task: 30%

Extension to other datasets: 20%

Results analysis: 20%

Fig. Results of an existing lesion filling algorithm, showing inadequate performance

Requirements:

Interest/Experience with image processing

Python programming knowledge (Pytorch bonus)

Interest in neuroimaging

Supervisor(s):

PD. Dr. Richard McKinley

Institutes: Diagnostic and Interventional Neuroradiology

Center for Artificial Intelligence in Medicine (CAIM), University of Bern

References: [1] Brain Imaging Generation with Latent Diffusion Models , Pinaya et al, Accepted in the Deep Generative Models workshop @ MICCAI 2022 , https://arxiv.org/abs/2209.07162

Contact : PD Dr Richard McKinley, Support Centre for Advanced Neuroimaging ( [email protected] )

Improving metrics and loss functions for targets with imbalanced size: sliding window Dice coefficient and loss.

Background The Dice coefficient is the most commonly used metric for segmentation quality in medical imaging, and a differentiable version of the coefficient is often used as a loss function, in particular for small target classes such as multiple sclerosis lesions. The Dice coefficient has the benefit that it is applicable in instances where the target class is in the minority (for example, when segmenting small lesions). However, if lesion sizes are mixed, the loss and the metric are biased towards performance on large lesions, causing smaller lesions to be missed and harming overall lesion detection. A recently proposed loss function (blob loss [1]) aims to combat this by treating each connected component of a lesion mask separately, and claims improvements over Dice loss on lesion detection scores in a variety of tasks.

Aim: The aim of this thesis is twofold. First, to benchmark blob loss against a simple, potentially superior loss for instance detection: sliding window Dice loss, in which the Dice loss is calculated over a sliding window across the area/volume of the medical image. Second, we will investigate whether a sliding window Dice coefficient is better correlated with lesion-wise detection metrics than the Dice coefficient and may serve as an alternative metric capturing both global and instance-wise detection.
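To make the idea concrete, here is a minimal sketch of a sliding window Dice coefficient on 2D binary masks. The window size, the stride, the plain averaging over windows, and the choice to skip windows that are empty in both masks are all assumptions made for illustration; the project description does not fix these details. A loss would be one minus this value, computed on soft predictions so that it stays differentiable.

```python
def dice(pred, target, eps=1e-6):
    """Dice coefficient of two equally sized 2D binary masks."""
    inter = sum(p * t for pr, tr in zip(pred, target) for p, t in zip(pr, tr))
    total = sum(map(sum, pred)) + sum(map(sum, target))
    return (2 * inter + eps) / (total + eps)


def crop(mask, top, left, size):
    """Cut a size x size window out of a 2D mask."""
    return [row[left:left + size] for row in mask[top:top + size]]


def sliding_window_dice(pred, target, size=2, stride=2):
    """Mean Dice over windows; windows empty in both masks are skipped
    (an assumption, so that background does not dominate the average)."""
    scores = []
    height, width = len(target), len(target[0])
    for top in range(0, height - size + 1, stride):
        for left in range(0, width - size + 1, stride):
            p = crop(pred, top, left, size)
            t = crop(target, top, left, size)
            if sum(map(sum, p)) + sum(map(sum, t)) == 0:
                continue
            scores.append(dice(p, t))
    return sum(scores) / len(scores) if scores else 1.0


# One large lesion (found) and one single-pixel lesion (missed).
target = [[1, 1, 0, 0], [1, 1, 0, 0], [0, 0, 0, 0], [0, 0, 0, 1]]
pred = [[1, 1, 0, 0], [1, 1, 0, 0], [0, 0, 0, 0], [0, 0, 0, 0]]
global_score = dice(pred, target)
window_score = sliding_window_dice(pred, target)
print(round(global_score, 3), round(window_score, 3))
```

In this toy case the global Dice stays high even though half of the lesions are missed, while the windowed score drops to roughly one half, which is the bias described in the background above.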

Materials and Methods: A large, annotated, heterogeneous dataset of MRI data from MS patients will be available for benchmarking the method, as well as our existing codebases for MS lesion segmentation. Extension of the method to other diseases and datasets (such as covered in the blob loss paper) will make the method more plausible for publication. The student will work alongside clinicians and engineers carrying out research in multiple sclerosis lesion segmentation, in particular in the context of our running project supported by the CAIM grant.

Fig. An annotated MS lesion case, showing the variety of lesion sizes

References: [1] blob loss: instance imbalance aware loss functions for semantic segmentation, Kofler et al, https://arxiv.org/abs/2205.08209

Idempotent and partial skull-stripping in multispectral MRI imaging

Background Skull stripping (or brain extraction) refers to the masking of non-brain tissue from structural MRI imaging. Since 3D MRI sequences allow reconstruction of facial features, many data providers supply data only after skull-stripping, making this a vital tool in data sharing. Furthermore, skull-stripping is an important pre-processing step in many neuroimaging pipelines, even in the deep-learning era: while many methods could now operate on data with skull present, they have been trained only on skull-stripped data and therefore produce spurious results on data with the skull present.

High-quality skull-stripping algorithms based on deep learning are now widely available: the most prominent example is HD-BET [1]. A major downside of HD-BET is its behaviour on datasets to which skull-stripping has already been applied: in this case the algorithm falsely identifies brain tissue as skull and masks it. A skull-stripping algorithm F not exhibiting this behaviour would be idempotent: F(F(x)) = F(x) for any image x. Furthermore, legacy datasets from before the availability of high-quality skull-stripping algorithms may still contain images which have been inadequately skull-stripped: currently the only solution to improve the skull-stripping on this data is to go back to the original datasource or to manually correct the skull-stripping, which is time-consuming and prone to error.
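The idempotence property F(F(x)) = F(x) can be checked mechanically. The sketch below uses two toy one-dimensional "strippers" that are purely hypothetical stand-ins (they are not HD-BET and not a real brain extraction method): one removes an outer layer on every call, loosely mimicking the failure described above, and one is idempotent by construction.

```python
def strip_outer_layer(signal):
    """Toy non-idempotent stripper: zeroes the first and last nonzero
    samples on every call, so repeated calls keep eating into the data."""
    out = list(signal)
    nonzero = [i for i, v in enumerate(out) if v != 0]
    if nonzero:
        out[nonzero[0]] = 0
        out[nonzero[-1]] = 0
    return out


def strip_bright(signal, threshold=5):
    """Toy idempotent stripper: zeroes samples at or above a threshold
    (the threshold value is an arbitrary illustration)."""
    return [0 if v >= threshold else v for v in signal]


def is_idempotent(f, x):
    """Check F(F(x)) == F(x) for a single input x."""
    once = f(x)
    return f(once) == once


scan = [9, 2, 3, 2, 9]  # bright 'skull' samples around darker 'brain' samples
print(is_idempotent(strip_bright, scan))
print(is_idempotent(strip_outer_layer, scan))
```

A check of this kind on a held-out set of already-stripped volumes could serve as one evaluation criterion, although a single passing input does not prove idempotence in general.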

Aim: In this project, the student will develop an idempotent skull-stripping network which can also handle partially skull-stripped inputs. In the best case, the network will operate well on a large subset of the data we work with (e.g. structural MRI, diffusion-weighted MRI, Perfusion-weighted MRI, susceptibility-weighted MRI, at a variety of field strengths) to maximize the future applicability of the network across the teams in our group.

Materials and Methods: Multiple datasets, both publicly available and internal (encompassing thousands of 3D volumes) will be available. Silver standard reference data for standard sequences at 1.5T and 3T can be generated using existing tools such as HD-BET: for other sequences and field strengths semi-supervised learning or methods improving robustness to domain shift may be employed. Robustness to partial skull-stripping may be induced by a combination of learning theory and model-based approaches.

Dataset curation: 10%

Idempotent skull-stripping model building: 30%

Modelling of partial skull-stripping: 10%

Extension of model to handle partial skull: 30%

Results analysis: 10%

Fig. An example of failed skull-stripping requiring manual correction

References: [1] Isensee, F, Schell, M, Pflueger, I, et al. Automated brain extraction of multisequence MRI using artificial neural networks. Hum Brain Mapp . 2019; 40: 4952– 4964. https://doi.org/10.1002/hbm.24750

Automated leaf detection and leaf area estimation (for Arabidopsis thaliana)

Correlating plant phenotypes such as leaf area or number of leaves to the genotype (i.e. changes in DNA) is a common goal for plant breeders and molecular biologists. Such data can not only help to understand fundamental processes in nature, but also can help to improve ecotypes, e.g., to perform better under climate change, or reduce fertiliser input. However, collecting data for many plants is very time consuming and automated data acquisition is necessary.

The project aims at building a machine learning model to automatically detect plants in top-view images (see examples below), segment their leaves (see Fig C) and to estimate the leaf area. This information will then be used to determine the leaf area of different Arabidopsis ecotypes. The project will be carried out in collaboration with researchers of the Institute of Plant Sciences at the University of Bern. It will also involve the design and creation of a dataset of plant top-views with the corresponding annotation (provided by experts at the Institute of Plant Sciences).
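Once leaves have been segmented, the leaf count and area estimate reduce to counting labelled pixels. The following is a minimal sketch under stated assumptions: the segmentation is given as an integer label mask with 0 as background and one label per leaf, and the physical area of a pixel (`mm2_per_pixel`, a hypothetical calibration value) is known from the camera setup.

```python
from collections import Counter


def leaf_areas(label_mask, mm2_per_pixel):
    """Map each leaf label to its area in square millimetres.

    Assumes 0 is background and every other integer labels one leaf.
    """
    counts = Counter(v for row in label_mask for v in row if v != 0)
    return {leaf: n * mm2_per_pixel for leaf, n in sorted(counts.items())}


# Toy 3x3 mask containing two leaves of three pixels each.
mask = [
    [0, 1, 1],
    [2, 1, 0],
    [2, 2, 0],
]
areas = leaf_areas(mask, mm2_per_pixel=0.25)
print(len(areas), sum(areas.values()))
```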

Contact: Prof. Dr. Paolo Favaro ( [email protected] )

Master Projects at the ARTORG Center

The Gerontechnology and Rehabilitation group at the ARTORG Center for Biomedical Engineering is offering multiple MSc thesis projects to students who are interested in working with real patient data, artificial intelligence and machine learning algorithms. The goal of these projects is to transfer the findings to the clinic in order to solve today’s healthcare problems and thus improve the quality of life of patients.

- Assessment of Digital Biomarkers at Home by Radar. [PDF]

- Comparison of Radar, Seismograph and Ballistocardiography to Monitor Sleep at Home. [PDF]

- Sentimental Analysis in Speech. [PDF]

Contact: Dr. Stephan Gerber ( [email protected] )

Internship in Computational Imaging at Prophesee

A 6-month internship at Prophesee, Grenoble is offered to a talented Master's student.

The topic of the internship is burst imaging, following the work of Sam Hasinoff, and exploring ways to improve it using event-based vision.

A compensation to cover the expenses of living in Grenoble is offered. Only students that have legal rights to work in France can apply.

Anyone interested can send an email with the CV to Daniele Perrone ( [email protected] ).

Using machine learning applied to wearables to predict mental health

This Master’s project lies at the intersection of psychiatry and computer science and aims to use machine learning techniques to improve health. Using sensors to detect sleep and waking behavior has as-yet unexplored potential to reveal insights into health. In this study, we make use of a watch-like device, called an actigraph, which tracks motion to quantify sleep behavior and waking activity. Participants in the study are healthy and depressed adolescents who wear actigraphs for a year, during which time we query their mental health status monthly using online questionnaires. For this Master’s thesis we aim to use machine learning methods to predict mental health based on the data from the actigraph. The ability to predict mental health crises based on sleep and wake behavior would provide an opportunity for intervention, significantly impacting the lives of patients and their families. This Master’s thesis is a collaboration between Professor Paolo Favaro at the Institute of Computer Science ( [email protected] ) and Dr Leila Tarokh at the Universitäre Psychiatrische Dienste (UPD) ( [email protected] ). We are looking for a highly motivated individual interested in bridging disciplines.

Bachelor or Master Projects at the ARTORG Center

The Gerontechnology and Rehabilitation group at the ARTORG Center for Biomedical Engineering is offering multiple BSc and MSc thesis projects to students who are interested in working with real patient data, artificial intelligence and machine learning algorithms. The goal of these projects is to transfer the findings to the clinic in order to solve today’s healthcare problems and thus improve the quality of life of patients.

- Machine Learning Based Gait-Parameter Extraction by Using Simple Rangefinder Technology. [PDF]

- Detection of Motion in Video Recordings [PDF]

- Home-Monitoring of Elderly by Radar [PDF]

- Gait feature detection in Parkinson's Disease [PDF]

- Development of an arthroscopic training device using virtual reality [PDF]

Contact: Dr. Stephan Gerber ( [email protected] ), Michael Single ( [email protected]. ch )

Dynamic Transformer

Level: bachelor.

Visual Transformers have obtained state-of-the-art classification accuracies [ViT, DeiT, T2T, BoTNet]. Mixture of experts (MoE) can be used to increase the capacity of a neural network by learning instance-dependent execution pathways through the network [MoE]. In this research project we aim to push transformers to their limit by combining their dynamic attention with MoEs. In contrast to the Switch Transformer [Switch], we will use a much more efficient formulation of mixing [CondConv, DynamicConv], and we will apply this idea in the attention part of the transformer rather than in the fully connected layer.

- Input dependent attention kernel generation for better transformer layers.
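As a minimal sketch of what input-dependent kernel generation could look like, the NumPy example below mixes several expert projection matrices with a per-input gate before applying them in a single-head attention layer. The softmax gate, the mean-token routing, the shapes, and all names (`dynamic_projection`, `dynamic_attention`, `router`, `experts`) are assumptions made for illustration, not the project's actual design.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def dynamic_projection(x, experts, router):
    """Mix expert weight matrices per input, then apply the mixed matrix.

    x:       (tokens, dim) token embeddings of one image
    experts: (n_experts, dim, dim) candidate projection matrices
    router:  (dim, n_experts) routing weights (hypothetical name)
    """
    # One gate vector per input, computed from the mean token (an assumption).
    gate = softmax(x.mean(axis=0) @ router)        # (n_experts,)
    # Mix the expert weights first, so only one matmul touches the tokens.
    w = np.tensordot(gate, experts, axes=1)        # (dim, dim)
    return x @ w, gate

def dynamic_attention(x, wq, wk, wv, router):
    """Single-head self-attention whose Q/K/V projections depend on the input."""
    q, _ = dynamic_projection(x, wq, router)
    k, _ = dynamic_projection(x, wk, router)
    v, _ = dynamic_projection(x, wv, router)
    scores = softmax(q @ k.T / np.sqrt(x.shape[1]))
    return scores @ v

rng = np.random.default_rng(0)
tokens, dim, n_experts = 5, 8, 4
x = rng.normal(size=(tokens, dim))
wq, wk, wv = (rng.normal(size=(n_experts, dim, dim)) for _ in range(3))
router = rng.normal(size=(dim, n_experts))
out = dynamic_attention(x, wq, wk, wv, router)
```

Because the expert weights are mixed before the matrix product, the tokens are multiplied by a single matrix regardless of the number of experts, which is the efficiency argument behind this style of mixing.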

Publication Opportunity: Dynamic Neural Networks Meets Computer Vision (a CVPR 2021 Workshop)

Extensions:

- The same idea could be extended to other ViT/Transformer based models [DETR, SETR, LSTR, TrackFormer, BERT]

Related Papers:

- Visual Transformers: Token-based Image Representation and Processing for Computer Vision [ViT]

- DeiT: Data-efficient Image Transformers [DeiT]

- Bottleneck Transformers for Visual Recognition [BoTNet]

- Tokens-to-Token ViT: Training Vision Transformers from Scratch on ImageNet [T2TViT]

- Outrageously Large Neural Networks: The Sparsely-Gated Mixture-of-Experts Layer [MoE]

- Switch Transformers: Scaling to Trillion Parameter Models with Simple and Efficient Sparsity [Switch]

- CondConv: Conditionally Parameterized Convolutions for Efficient Inference [CondConv]

- Dynamic Convolution: Attention over Convolution Kernels [DynamicConv]

- End-to-End Object Detection with Transformers [DETR]

- Rethinking Semantic Segmentation from a Sequence-to-Sequence Perspective with Transformers [SETR]

- End-to-end Lane Shape Prediction with Transformers [LSTR]

- TrackFormer: Multi-Object Tracking with Transformers [TrackFormer]

- BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding [BERT]

Contact: Sepehr Sameni

Visual Transformers have obtained state-of-the-art classification accuracies for 2D images [ViT, DeiT, T2TViT, BoTNet]. In this project, we aim to extend the same ideas to 3D data (videos), which requires a more efficient attention mechanism [Performer, Axial, Linformer]. To accelerate the training process, we could use the [Multigrid] technique.

- Better video understanding by attention blocks.

Publication Opportunity: LOVEU (a CVPR workshop) , Holistic Video Understanding (a CVPR workshop) , ActivityNet (a CVPR workshop)

- Rethinking Attention with Performers [Performer]

- Axial Attention in Multidimensional Transformers [Axial]

- Linformer: Self-Attention with Linear Complexity [Linformer]

- A Multigrid Method for Efficiently Training Video Models [Multigrid]

GIRAFFE is a newly introduced GAN that can generate scenes via composition with minimal supervision [GIRAFFE]. Generative methods can implicitly learn interpretable representation as can be seen in GAN image interpretations [GANSpace, GanLatentDiscovery]. Decoding GIRAFFE could give us per-object interpretable representations that could be used for scene manipulation, data augmentation, scene understanding, semantic segmentation, pose estimation [iNeRF], and more.

In order to invert a GIRAFFE model, we will first train the generative model on Clevr and CompCars datasets, then we add a decoder to the pipeline and train this autoencoder. We can make the task easier by knowing the number of objects in the scene and/or knowing their positions.

Goals:

Scene Manipulation and Decomposition by Inverting the GIRAFFE

Publication Opportunity: DynaVis 2021 (a CVPR workshop on Dynamic Scene Reconstruction)

Related Papers:

- GIRAFFE: Representing Scenes as Compositional Generative Neural Feature Fields [GIRAFFE]

- Neural Scene Graphs for Dynamic Scenes

- pixelNeRF: Neural Radiance Fields from One or Few Images [pixelNeRF]

- NeRF: Representing Scenes as Neural Radiance Fields for View Synthesis [NeRF]

- Neural Volume Rendering: NeRF And Beyond

- GANSpace: Discovering Interpretable GAN Controls [GANSpace]

- Unsupervised Discovery of Interpretable Directions in the GAN Latent Space [GanLatentDiscovery]

- Inverting Neural Radiance Fields for Pose Estimation [iNeRF]

Quantized ViT

Visual Transformers have obtained state-of-the-art classification accuracies [ViT, CLIP, DeiT], but the best ViT models are extremely compute-heavy, and running them is expensive even for inference alone (without backpropagation). Running transformers cheaply through quantization is not a new problem; it has been tackled before for BERT [BERT] in NLP [Q-BERT, Q8BERT, TernaryBERT, BinaryBERT]. In this project we will try to quantize pretrained ViT models.

Quantizing ViT models for faster inference and smaller models without losing accuracy
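To make the idea of weight quantization concrete, here is a minimal sketch of symmetric per-tensor 8-bit quantization in NumPy. It is only one of many possible schemes and is not the method of any of the cited papers; the function names and the random stand-in for a pretrained weight matrix are assumptions.

```python
import numpy as np

def quantize_int8(w):
    """Symmetric per-tensor quantization of a float array to int8."""
    max_abs = float(np.abs(w).max())
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = np.clip(np.round(w / scale), -127, 127).astype(np.int8)
    return q, scale

def dequantize(q, scale):
    """Map int8 codes back to approximate float32 weights."""
    return q.astype(np.float32) * scale

# Random stand-in for one pretrained weight matrix (illustrative only).
rng = np.random.default_rng(0)
w = rng.normal(scale=0.02, size=(64, 64)).astype(np.float32)

q, scale = quantize_int8(w)
w_hat = dequantize(q, scale)
max_err = float(np.abs(w - w_hat).max())  # bounded by about scale / 2
```

The int8 tensor takes a quarter of the memory of the float32 original, and the rounding error per weight is at most about half a quantization step. Whether accuracy survives depends on the model and would have to be measured.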

Publication Opportunity: Binary Networks for Computer Vision 2021 (a CVPR workshop)

Extensions:

- Having a fast pipeline for image inference with ViT will allow us to dig deep into the attention of ViT and analyze it. We might be able to prune some attention heads or replace them with static patterns (like local convolution or dilated patterns). We might even be able to replace the transformer with a performer and increase the throughput further [Performer].

- The same idea could be extended to other ViT based models [DETR, SETR, LSTR, TrackFormer, CPTR, BoTNet, T2TViT]

- Learning Transferable Visual Models From Natural Language Supervision [CLIP]

- Visual Transformers: Token-based Image Representation and Processing for Computer Vision [ViT]

- DeiT: Data-efficient Image Transformers [DeiT]

- BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding [BERT]

- Q-BERT: Hessian Based Ultra Low Precision Quantization of BERT [Q-BERT]

- Q8BERT: Quantized 8Bit BERT [Q8BERT]

- TernaryBERT: Distillation-aware Ultra-low Bit BERT [TernaryBERT]

- BinaryBERT: Pushing the Limit of BERT Quantization [BinaryBERT]

- Rethinking Attention with Performers [Performer]

- End-to-End Object Detection with Transformers [DETR]

- Rethinking Semantic Segmentation from a Sequence-to-Sequence Perspective with Transformers [SETR]

- End-to-end Lane Shape Prediction with Transformers [LSTR]

- TrackFormer: Multi-Object Tracking with Transformers [TrackFormer]

- CPTR: Full Transformer Network for Image Captioning [CPTR]

- Bottleneck Transformers for Visual Recognition [BoTNet]

- Tokens-to-Token ViT: Training Vision Transformers from Scratch on ImageNet [T2TViT]

Multimodal Contrastive Learning

Recently, contrastive learning has gained a lot of attention for self-supervised image representation learning [SimCLR, MoCo]. Contrastive learning can be extended to multimodal data, such as videos (images and audio) [CMC, CoCLR]. Most contrastive methods require large batch sizes (or large memory pools), which makes them expensive to train. In this project we are going to use contrastive methods that do not depend on the batch size [SwAV, BYOL, SimSiam] to train multimodal representation extractors.

Our main goal is to compare the proposed method with the CMC baseline, so we will be working with STL10, ImageNet, UCF101, HMDB51, and NYU Depth-V2 datasets.

Inspired by the recent works on smaller datasets [ConVIRT, CPD], to accelerate the training speed, we could start with two pretrained single-modal models and finetune them with the proposed method.
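As an illustration of a loss that needs no negative pairs, and therefore no large batch, the sketch below computes a SimSiam-style symmetric negative cosine similarity between two modalities. The naming (`p_img`, `z_audio`, and so on) and the random stand-in features are assumptions; a real implementation would use an autograd framework and apply a stop-gradient to the `z` arguments.

```python
import numpy as np

def negative_cosine(p, z):
    """Mean negative cosine similarity between matching rows of p and z."""
    p = p / np.linalg.norm(p, axis=1, keepdims=True)
    z = z / np.linalg.norm(z, axis=1, keepdims=True)
    return float(-(p * z).sum(axis=1).mean())

def simsiam_loss(p_a, z_a, p_b, z_b):
    """Symmetric loss; z_a and z_b would be detached (stop-gradient) in training."""
    return 0.5 * negative_cosine(p_a, z_b) + 0.5 * negative_cosine(p_b, z_a)

# Stand-ins for encoder outputs (z) and predictor outputs (p) of two modalities.
rng = np.random.default_rng(0)
batch, dim = 4, 16
z_img, z_audio = rng.normal(size=(batch, dim)), rng.normal(size=(batch, dim))
p_img, p_audio = rng.normal(size=(batch, dim)), rng.normal(size=(batch, dim))

loss = simsiam_loss(p_img, z_img, p_audio, z_audio)
```

Each sample's loss depends only on its own pair of views, so the batch loss is just the average of per-sample losses, unlike InfoNCE-style objectives that compare every sample against the rest of the batch.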

- Extending SwAV to multimodal datasets

- Gaining a better understanding of BYOL

Publication Opportunity: MULA 2021 (a CVPR workshop on Multimodal Learning and Applications)

- Most knowledge distillation methods for contrastive learners also use large batch sizes (or memory pools) [CRD, SEED]; the proposed method could be extended to knowledge distillation.

- One could easily extend this idea to multiview learning. For example, two different networks could process the same input and be trained with contrastive learning; this may lead to better models [DeiT] through the exchange of inductive biases across models.

- Self-supervised Co-training for Video Representation Learning [CoCLR]

- Learning Spatiotemporal Features via Video and Text Pair Discrimination [CPD]

- Audio-Visual Instance Discrimination with Cross-Modal Agreement [AVID-CMA]

- Self-Supervised Learning by Cross-Modal Audio-Video Clustering [XDC]

- Contrastive Multiview Coding [CMC]

- Contrastive Learning of Medical Visual Representations from Paired Images and Text [ConVIRT]

- A Simple Framework for Contrastive Learning of Visual Representations [SimCLR]

- Momentum Contrast for Unsupervised Visual Representation Learning [MoCo]

- Bootstrap your own latent: A new approach to self-supervised Learning [BYOL]

- Exploring Simple Siamese Representation Learning [SimSiam]

- Unsupervised Learning of Visual Features by Contrasting Cluster Assignments [SwAV]

- Contrastive Representation Distillation [CRD]

- SEED: Self-supervised Distillation For Visual Representation [SEED]

Robustness of Neural Networks

Neural Networks have been found to achieve surprising performance in several tasks such as classification, detection and segmentation. However, they are also very sensitive to small (controlled) changes to the input. It has been shown that some changes to an image that are not visible to the naked eye may lead the network to output an incorrect label. This thesis will focus on studying recent progress in this area and aim to build a procedure for a trained network to self-assess its reliability in classification or one of the popular computer vision tasks.
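The sensitivity to small, controlled input changes can be illustrated with the fast gradient sign method (FGSM) on a toy logistic-regression "network". This is a minimal sketch: the model, its random weights, and the constructed input are assumptions chosen so that the effect is easy to see, not results from any real classifier.

```python
import numpy as np

def sigmoid(t):
    return 1.0 / (1.0 + np.exp(-t))

def fgsm(x, y, w, b, eps):
    """Return x + eps * sign(dL/dx) for the binary cross-entropy loss."""
    p = sigmoid(x @ w + b)
    grad_x = (p - y) * w          # gradient of the loss with respect to the input
    return x + eps * np.sign(grad_x)

rng = np.random.default_rng(0)
w = rng.normal(size=784)          # toy weights for a 28x28 input (illustrative)
b = 0.0
x = 0.004 * np.sign(w)            # clean input that the model assigns to class 1
y = 1.0
eps = 0.01                        # maximum change allowed per input dimension

x_adv = fgsm(x, y, w, b, eps)
p_clean = sigmoid(x @ w + b)      # confidence for class 1 on the clean input
p_adv = sigmoid(x_adv @ w + b)    # confidence after the perturbation
```

No input dimension changes by more than `eps`, yet the predicted class flips, because the small per-dimension changes all push the logit in the same direction.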

Contact: Paolo Favaro

Masters projects at sitem center

The Personalised Medicine Research Group at the sitem Center for Translational Medicine and Biomedical Entrepreneurship is offering multiple MSc thesis projects to the biomed eng MSc students that may also be of interest to the computer science students.

- Automated quantification of cartilage quality for hip treatment decision support

- Automated quantification of massive rotator cuff tears from MRI

- Deep learning-based segmentation and fat fraction analysis of the shoulder muscles using quantitative MRI

- Unsupervised Domain Adaption for Cross-Modality Hip Joint Segmentation

Contact: Dr. Kate Gerber

Internships/Master thesis @ Chronocam

3-6 month internships on event-based computer vision. Chronocam is a rapidly growing startup developing event-based technology, with more than 15 PhDs working on problems like tracking, detection, classification, SLAM, etc. Event-based computer vision has the potential to solve many long-standing problems in traditional computer vision, and this is an exciting time, as this potential is becoming more and more tangible in many real-world applications. For next year we are looking for motivated Master and PhD students with good software engineering skills (C++ and/or Python), and preferably a good computer vision and deep learning background. PhD internships will be more research focused and may lead to a publication. For each intern we offer compensation to cover the expenses of living in Paris. List of some of the topics we want to explore:

- Photo-realistic image synthesis and super-resolution from event-based data (PhD)

- Self-supervised representation learning (PhD)

- End-to-end Feature Learning for Event-based Data

- Bio-inspired Filtering using Spiking Networks

- On-the-fly Compression of Event-based Streams for Low-Power IoT Cameras

- Tracking of Multiple Objects with a Dual-Frequency Tracker

- Event-based Autofocus

- Stabilizing an Event-based Stream using an IMU

- Crowd Monitoring for Low-power IoT Cameras

- Road Extraction from an Event-based Camera Mounted in a Car for Autonomous Driving

- Sign detection from an Event-based Camera Mounted in a Car for Autonomous Driving

- High-frequency Eye Tracking

To apply, send an email with your CV attached to Daniele Perrone.

Contact: Daniele Perrone

Object Detection in 3D Point Clouds

Today we have many 3D scanning techniques that allow us to capture the shape and appearance of objects. It is easier than ever to scan real 3D objects and transform them into a digital model for further processing, such as modeling, rendering or animation. However, the output of a 3D scanner is often a raw point cloud with little to no annotations. The unstructured nature of the point cloud representation makes it difficult to process, e.g. for surface reconstruction. One application is the detection and segmentation of an object of interest. In this project, the student is challenged to design a system that takes a point cloud (a 3D scan) as input and outputs the names of objects contained in the scan. This output can then be used to eliminate outliers or points that belong to the background. The approach involves collecting a large dataset of 3D scans and training a neural network on it.

Contact: Adrian Wälchli

Shape Reconstruction from a Single RGB Image or Depth Map

A photograph accurately captures the world in a moment of time and from a specific perspective. Since it is a projection of the 3D space to a 2D image plane, the depth information is lost. Is it possible to restore it, given only a single photograph? In general, the answer is no. This problem is ill-posed, meaning that many different plausible depth maps exist, and there is no way of telling which one is the correct one. However, if we cover one of our eyes, we are still able to recognize objects and estimate how far away they are. This motivates the exploration of an approach where prior knowledge can be leveraged to reduce the ill-posedness of the problem. Such a prior could be learned by a deep neural network, trained with many images and depth maps.

CNN Based Deblurring on Mobile

Deblurring finds many applications in our everyday life. It is particularly useful when taking pictures on handheld devices (e.g. smartphones) where camera shake can degrade important details. Therefore, it is desired to have a good deblurring algorithm implemented directly in the device. In this project, the student will implement and optimize a state-of-the-art deblurring method based on a deep neural network for deployment on mobile phones (Android). The goal is to reduce the number of network weights in order to reduce the memory footprint while preserving the quality of the deblurred images. The result will be a camera app that automatically deblurs the pictures, giving the user a choice of keeping the original or the deblurred image.

Depth from Blur

If an object in front of the camera or the camera itself moves while the aperture is open, the region of motion becomes blurred because the incoming light is accumulated in different positions across the sensor. If there is camera motion, there is also parallax. Thus, a motion-blurred image contains depth information. In this project, the student will tackle the problem of recovering a depth map from a motion-blurred image. This includes the collection of a large dataset of blurred and sharp images or videos using a pair or triplet of GoPro action cameras. Two cameras will be used in stereo to estimate the depth map, and the third captures the blurred frames. This data is then used to train a convolutional neural network that will predict the depth map from the blurry image.

Unsupervised Clustering Based on Pretext Tasks

The idea of this project is that we have two types of neural networks that work together: There is one network A that assigns images to k clusters and k (simple) networks of type B perform a self-supervised task on those clusters. The goal of all the networks is to make the k networks of type B perform well on the task. The assumption is that clustering in semantically similar groups will help the networks of type B to perform well. This could be done on the MNIST dataset with B being linear classifiers and the task being rotation prediction.

Adversarial Data-Augmentation

The student designs a data augmentation network that transforms training images in such a way that image realism is preserved (e.g. with a constrained spatial transformer network) and the transformed images are more difficult to classify (trained via adversarial loss against an image classifier). The model will be evaluated for different data settings (especially in the low data regime), for example on the MNIST and CIFAR datasets.

Unsupervised Learning of Lip-reading from Videos

People with sensory impairment (hearing, speech, vision) depend heavily on assistive technologies to communicate and navigate in everyday life. The mass production of media content today makes it impossible to manually translate everything into a common language for assistive technologies, e.g. captions or sign language. In this project, the student employs a neural network to learn a representation for lip-movement in videos in an unsupervised fashion, possibly with an encoder-decoder structure where the decoder reconstructs the audio signal. This requires collecting a large dataset of videos (e.g. from YouTube) of speakers or conversations where lip movement is visible. The outcome will be a neural network that learns an audio-visual representation of lip movement in videos, which can then be leveraged to generate captions for hearing impaired persons.

Learning to Generate Topographic Maps from Satellite Images

Satellite images have many applications, e.g. in meteorology, geography, education, cartography and warfare. They are an accurate and detailed depiction of the surface of the earth from above. Although it is relatively simple to collect many satellite images in an automated way, challenges arise when processing them for use in navigation and cartography. The idea of this project is to automatically convert an arbitrary satellite image, e.g. of a city, to a map of simple 2D shapes (streets, houses, forests) and label them with colors (semantic segmentation). The student will collect a dataset of satellite images and topographic maps and train a deep neural network that learns to map from one domain to the other. The data could be obtained from a Google Maps database or similar.

Optimization of OmniMotion, a tracking algorithm

Martí Farré Farrús · June 2024

This thesis presents Quasi-OmniFastTrack, an improved version of the OmniMotion algorithm for long-term pixel tracking in videos. The key contribution is reducing the computational expense and training time of OmniMotion while maintaining comparable tracking performance. The main bottleneck in OmniMotion was identified to be the NeRF network used for 3D scene representation. Quasi-OmniFastTrack replaces this with a pre-trained depth estimation model, significantly reducing training time, based on the work introduced in OmniFastTrack, hence the name. The invertible neural network for mapping between local and canonical coordinates is retained, but optimized depths are used to lift 2D pixels to 3D. Experiments show that Quasi-OmniFastTrack reduces training time by over 50% compared to OmniMotion while achieving similar qualitative tracking results on sequences with occlusions. Performance degrades somewhat on fast-moving scenes. The ablation studies demonstrate the importance of optimizing the initial depth estimates during training. While not matching OmniMotion's robustness in all scenarios, Quasi-OmniFastTrack offers a compelling speed-accuracy tradeoff, enabling long-term tracking on more videos in practical timeframes. Future work on incorporating other modifications introduced in OmniFastTrack, like long-term semantic features, could further improve tracking consistency.

New Variables of Brain Morphometry: the Potential and Limitations of CNN Regression

Timo Blattner · Sept. 2022

The calculation of variables of brain morphology is computationally very expensive and time-consuming. A previous work showed the feasibility of extracting the variables directly from T1-weighted brain MRI images using a convolutional neural network. We used significantly more data and extended their model to a new set of neuromorphological variables, which could become interesting biomarkers in the future for the diagnosis of brain diseases. The model shows for nearly all subjects a less than 5% mean relative absolute error. This high relative accuracy can be attributed to the low morphological variance between subjects and the ability of the model to predict the cortical atrophy age trend. The model however fails to capture all the variance in the data and shows large regional differences. We attribute these limitations in part to the moderate to poor reliability of the ground truth generated by FreeSurfer. We further investigated the effects of training data size and model complexity on this regression task and found that the size of the dataset had a significant impact on performance, while deeper models did not perform better. Lack of interpretability and dependence on a silver ground truth are the main drawbacks of this direct regression approach.

Home Monitoring by Radar

Lars Ziegler · Sept. 2022

Detection and tracking of humans via UWB radars is a promising and continuously evolving field with great potential for medical technology. This contactless method of acquiring data on a patient's movement patterns is ideal for in-home application. As irregularities in a patient's movement patterns are an indicator of various health problems, including neurodegenerative diseases, the insight this data could provide may enable earlier detection of such problems. In this thesis a signal processing pipeline is presented with which a person's movement is modeled. During an experiment, 142 measurements were recorded by two separate radar systems and one lidar system, each of which consisted of multiple sensors. The models that the signal processing pipeline calculated from these measurements were used to predict the times when a person stood up or sat down. The predictions showed an accuracy of 72.2%.

Revisiting non-learning based 3D reconstruction from multiple images

Aaron Sägesser · Oct. 2021

Arthroscopy consists of challenging tasks and requires skills that, even today, young surgeons still train directly during surgery. Existing simulators are expensive and rarely available. Through the growing potential of virtual reality (VR) head-mounted devices for simulation and their applicability in the medical context, these devices have become a promising alternative that would be orders of magnitude cheaper and could be made widely available. Building a VR-based training device for arthroscopy is the overall aim of our project, as this would be of great benefit and might even be applicable in other minimally invasive surgery (MIS). This thesis marks a first step of the project, with a focus on exploring and comparing well-known algorithms in a multi-view stereo (MVS) based 3D reconstruction with respect to imagery acquired by an arthroscopic camera. Simultaneously with this reconstruction, we aim to gain essential measures to compare the VR environment to the real world, as validation of the realism of future VR tasks. We evaluate 3 different feature extraction algorithms with 3 different matching techniques and 2 different algorithms for the estimation of the fundamental (F) matrix. The evaluation of these 18 different setups is made with a reconstruction pipeline embedded in a Jupyter notebook implemented in Python based on common computer vision libraries, and compared with imagery generated with a mobile phone as well as with the reconstruction results of the state-of-the-art (SOTA) structure-from-motion (SfM) software COLMAP and Multi-View Environment (MVE). Our comparative analysis highlights the challenges of heavy distortion, the fish-eye shape and weak image quality of arthroscopic imagery, as all results are substantially worse using this data. However, there are huge differences between the setups. Scale Invariant Feature Transform (SIFT) and Oriented FAST and Rotated BRIEF (ORB) in combination with k-Nearest Neighbour (kNN) matching and Least Median of Squares (LMedS) present the most promising results. Overall, the 3D reconstruction pipeline is a useful tool to foster the process of gaining measurements from the arthroscopic exploration device and to complement the comparative research in this context.

Examination of Unsupervised Representation Learning by Predicting Image Rotations

Eric Lagger · Sept. 2020

In recent years deep convolutional neural networks have made a lot of progress. Training such a network requires a lot of data, and supervised learning algorithms additionally require the data to be labeled. Labeling data takes a lot of human work, time and money. To avoid these inconveniences we would like to find systems that do not need labeled data, that is, unsupervised learning algorithms. This is why unsupervised algorithms are important, even though their results are not yet on the same qualitative level as those of supervised algorithms. In this thesis we discuss one such approach and compare the results to other papers. A deep convolutional neural network is trained to learn the rotations that have been applied to a picture. We take a large number of images and apply some simple rotations, and the task of the network is to discover in which direction each image has been rotated. The data does not need to be labeled with any category or anything else. As long as all the pictures are upside down we hope to find some high dimensional patterns for the network to learn.
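The rotation pretext task described above generates its own labels. The following sketch shows one way to build such a training batch with NumPy; the choice of four rotations (0, 90, 180 and 270 degrees), the square image size, and the function name are assumptions, and the network that would be trained on the result is omitted.

```python
import numpy as np

def make_rotation_batch(images):
    """Rotate every image by 0, 90, 180 and 270 degrees and label the rotation.

    images: (n, H, W, C) array with H == W so rotated copies keep their shape.
    Returns (4n, H, W, C) rotated images and (4n,) labels in {0, 1, 2, 3}.
    """
    rotated, labels = [], []
    for img in images:
        for k in range(4):
            rotated.append(np.rot90(img, k=k, axes=(0, 1)))
            labels.append(k)          # the label is the number of quarter turns
    return np.stack(rotated), np.array(labels)

# Random stand-in for unlabeled images (illustrative only).
rng = np.random.default_rng(0)
images = rng.random(size=(3, 32, 32, 3))
x_rot, y_rot = make_rotation_batch(images)
```

A classifier trained to predict `y_rot` from `x_rot` never sees a human-provided category label; the supervision comes entirely from the transformation applied to each image.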

StitchNet: Image Stitching using Autoencoders and Deep Convolutional Neural Networks

Maurice Rupp · Sept. 2019

This thesis explores the prospect of artificial neural networks for image processing tasks. More specifically, it aims to stitch multiple overlapping images to form a bigger, panoramic picture. Until now, this task has been approached solely with “classical”, hardcoded algorithms, while deep learning is at most used for specific subtasks. This thesis introduces a novel end-to-end neural network approach to image stitching called StitchNet, which uses a pre-trained autoencoder and deep convolutional networks. In addition to presenting several new datasets for the task of supervised image stitching, each with 120’000 training and 5’000 validation samples, this thesis also conducts various experiments with different kinds of existing networks designed for image superresolution and image segmentation, adapted to the task of image stitching. StitchNet outperforms most of the adapted networks in both quantitative and qualitative results.

Facial Expression Recognition in the Wild

Luca Rolshoven · Sept. 2019

The idea of inferring the emotional state of a subject by looking at their face is nothing new. Neither is the idea of automating this process using computers. Researchers used to computationally extract handcrafted features from face images that had proven themselves to be effective and then used machine learning techniques to classify the facial expressions using these features. Recently, there has been a trend towards using deep learning and especially Convolutional Neural Networks (CNNs) for the classification of these facial expressions. Researchers were able to achieve good results on images that were taken in laboratories under the same or at least similar conditions. However, these models do not perform very well on more arbitrary face images with different head poses and illumination. This thesis aims to show the challenges of Facial Expression Recognition (FER) in this wild setting. It presents the currently used datasets and the present state-of-the-art results on one of the biggest facial expression datasets currently available. The contributions of this thesis are twofold. Firstly, I analyze three famous neural network architectures and their effectiveness on the classification of facial expressions. Secondly, I present two modifications of one of these networks that lead to the proposed STN-COV model. While this model does not outperform all of the current state-of-the-art models, it does beat several of them.

A Study of 3D Reconstruction of Varying Objects with Deformable Parts Models

Raoul Grossenbacher · July 2019

This work covers a new approach to 3D reconstruction. In traditional 3D reconstruction one uses multiple images of the same object to calculate a 3D model by taking information gained from the differences between the images, like camera position, illumination of the images, rotation of the object and so on, to compute a point cloud representing the object. The characteristic trait shared by all these approaches is that one can change almost everything about the image, but it is not possible to change the object itself, because one needs to find correspondences between the images. To be able to use different instances of the same object, we used a 3D DPM model that can find different parts of an object in an image, thereby detecting the correspondences between the different pictures, which we can then use to calculate the 3D model. To put this theory into practice, we gave a 3D DPM model, which was trained to detect cars, pictures of different car brands, where no pair of images showed the same vehicle, and used the detected correspondences and the Factorization Method to compute the 3D point cloud. This technique leads to a completely new approach in 3D reconstruction, because changing the object itself was never done before.

Motion deblurring in the wild replication and improvements

Alvaro Juan Lahiguera · Jan. 2019

Coma Outcome Prediction with Convolutional Neural Networks

Stefan Jonas · Oct. 2018

Automatic Correction of Self-Introduced Errors in Source Code

Sven Kellenberger · Aug. 2018

Neural Face Transfer: Training a Deep Neural Network to Face-Swap

Till Nikolaus Schnabel · July 2018

This thesis explores the field of artificial neural networks with realistic looking visual outputs. It aims at morphing face pictures of a specific identity to look like another individual by only modifying key features, such as eye color, while leaving identity-independent features unchanged. Prior works have covered the topic of symmetric translation between two specific domains but failed to optimize it on faces where only parts of the image may be changed. This work applies a face masking operation to the output at training time, which forces the image generator to preserve colors while altering the face, fitting it naturally inside the unmorphed surroundings. Various experiments are conducted including an ablation study on the final setting, decreasing the baseline identity switching performance from 81.7% to 75.8% whilst improving the average χ² color distance from 0.551 to 0.434. The provided code-based software gives users easy access to apply this neural face swap to images and videos of arbitrary crop and brings Computer Vision one step closer to replacing Computer Graphics in this specific area.

A Study of the Importance of Parts in the Deformable Parts Model

Sammer Puran · June 2017

Self-Similarity as a Meta Feature

Lucas Husi · April 2017

A Study of 3D Deformable Parts Models for Detection and Pose-Estimation

Simon Jenni · March 2015

Accelerated Federated Learning on Client Silos with Label Noise: RHO Selection in Classification and Segmentation

Irakli Kelbakiani · May 2024

Federated Learning has recently gained more research interest. This increased attention is caused by factors including the growth of decentralized data, privacy concerns, and new privacy regulations. In Federated Learning, remote servers keep training a model on local datasets independently, and subsequently, local models are aggregated into a global model, which achieves better overall performance. Sending local model weights instead of the entire dataset is a significant advantage of Federated Learning over centralized classical machine learning algorithms. Federated learning involves uploading and downloading model parameters multiple times, so there are multiple communication rounds between the global server and remote client servers, which imposes challenges. The high number of necessary communication rounds not only increases high-cost communication overheads but is also a critical limitation for servers with low network bandwidth, which leads to latency and a higher probability of training failures caused by communication breakdowns. To mitigate these challenges, we aim to provide a fast-convergent Federated Learning training methodology that decreases the number of necessary communication rounds. We found a paper about Reducible Holdout Loss Selection (RHO-Loss) batch selection methodology, which ”selects low-noise, task-relevant, non-redundant points for training” [1]; we hypothesize, if client silos employ RHO-Loss methodology and successfully avoid training their local models on noisy and non-relevant samples, clients may offer stable and consistent updates to the global server, which could lead to faster convergence of the global model. Our contribution focuses on investigating the RHO-Loss method in a simulated federated setting for the Clothing1M dataset. We also examine its applicability to medical datasets and check its effectiveness in a simulated federated environment. 
Our experimental results show a promising outcome, specifically a reduction in communication rounds for the Clothing1M dataset. However, as the success of the RHO-Loss selection method depends on the availability of sufficient training data for the target RHO model and for the Irreducible RHO model, we emphasize that our contribution applies to those Federated Learning scenarios where client silos hold enough training data to successfully train and benefit from their RHO model on their local dataset.
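The selection and aggregation steps described in this abstract can be illustrated with a minimal sketch. It assumes that the reducible holdout loss of a sample is its current training loss minus its loss under the separately trained irreducible (holdout) model, and that the server combines client models with a size-weighted average. The abstract does not specify the aggregation rule, so `federated_average` is an assumption, and both function names are hypothetical.

```python
def rho_loss_select(train_losses, irreducible_losses, k):
    """Return the indices of the k samples with the largest reducible holdout loss.

    Reducible loss = loss under the current model minus loss under the
    irreducible (holdout) model. Noisy samples have a high irreducible loss and
    already-learned samples have a low training loss, so both rank low.
    """
    if len(train_losses) != len(irreducible_losses):
        raise ValueError("loss lists must have the same length")
    reducible = [t - i for t, i in zip(train_losses, irreducible_losses)]
    ranked = sorted(range(len(reducible)), key=lambda j: reducible[j], reverse=True)
    return sorted(ranked[:k])


def federated_average(client_weights, client_sizes):
    """Size-weighted average of client weight vectors (assumed aggregation rule)."""
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(w[d] * n for w, n in zip(client_weights, client_sizes)) / total
        for d in range(dim)
    ]


# Sample 0 is likely noisy (high loss under both models); sample 1 is already learned.
selected = rho_loss_select([2.0, 0.1, 1.5, 3.0], [1.9, 0.05, 0.2, 1.0], k=2)
global_weights = federated_average([[1.0, 2.0], [3.0, 4.0]], client_sizes=[1, 3])
```

In this toy batch the two retained samples are the ones whose loss the current model can still reduce, which is the behavior the hypothesis relies on for stable client updates.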

Amodal Leaf Segmentation

Nicolas maier · nov. 2023.

Plant phenotyping is the process of measuring and analyzing various traits of plants. It provides essential information on how genetic and environmental factors affect plant growth and development. Manual phenotyping is highly time-consuming; therefore, many computer vision and machine learning based methods have been proposed in the past years to perform this task automatically based on images of the plants. However, the publicly available datasets (in particular, of Arabidopsis thaliana) are limited in size and diversity, making them unsuitable to generalize to new unseen environments. In this work, we propose a complete pipeline able to automatically extract traits of interest from an image of Arabidopsis thaliana. Our method uses a minimal amount of existing annotated data from a source domain to generate a large synthetic dataset adapted to a different target domain (e.g., different backgrounds, lighting conditions, and plant layouts). In addition, unlike the source dataset, the synthetic one provides ground-truth annotations for the occluded parts of the leaves, which are relevant when measuring some characteristics of the plant, e.g., its total area. This synthetic dataset is then used to train a model to perform amodal instance segmentation of the leaves to obtain the total area, leaf count, and color of each plant. To validate our approach, we create a small dataset composed of manually annotated real images of Arabidopsis thaliana, which is used to assess the performance of the models.

Assessment of movement and pose in a hospital bed by ambient and wearable sensor technology in healthy subjects

Tony licata · sept. 2022.

Automated systems that describe human motion have become feasible in various domains. Most proposed systems are designed for people moving around in a standing position. Because such systems could be valuable in a medical environment, we propose in this work a pipeline that can effectively predict the motion of people lying in bed. The pipeline is tested on a dataset of 41 participants executing 7 predefined tasks in a bed. The participants' motion is measured with video cameras, accelerometers, and a pressure mat. Various experiments are carried out with the information retrieved from the dataset, and two approaches for combining the data from the different measurement technologies are explored. The performance of each experiment is measured, and the proposed pipeline is assembled from the components that provide the best results. Finally, we show that the proposed pipeline only needs the video cameras, which makes the proposed setup easier to implement in real-life situations.

Machine Learning Based Prediction of Mental Health Using Wearable-measured Time Series

Seyedeh sharareh mirzargar · sept. 2022.

Depression is the second major cause of years spent in disability and has a growing prevalence in adolescents. The recent Covid-19 pandemic has intensified the situation and limited in-person patient monitoring due to distancing measures. Recent advances in wearable devices have made it possible to record the rest/activity cycle remotely with high precision and in real-world contexts. We aim to use machine learning methods to predict an individual's mental health based on wearable-measured sleep and physical activity. Predicting an impending mental health crisis of an adolescent allows for prompt intervention, detection of depression onset or its recurrence, and remote monitoring. To achieve this goal, we train three primary forecasting models (linear regression, random forest, and light gradient boosted machine (LightGBM)) and two deep learning models (block recurrent neural network (block RNN) and temporal convolutional network (TCN)) on Actigraph measurements to forecast mental health in terms of depression, anxiety, sleepiness, stress, sleep quality, and behavioral problems. Our models achieve high forecasting performance, with the random forest performing best and reaching an accuracy of 98% when forecasting trait anxiety. We perform extensive experiments to evaluate the models' performance in accuracy, generalization, and feature utilization, using a naive forecaster as the baseline. Our analysis shows minimal mental health changes over two months, which makes the prediction task easy to achieve. Because of these minimal changes, the models tend to rely primarily on the historical values of the mental health evaluation instead of the Actigraph features. At the time of this master thesis, the data acquisition step is still in progress. In future work, we plan to train the models on the complete dataset using a longer forecasting horizon to increase the level of mental health changes, and to perform transfer learning to compensate for the small dataset size.
This interdisciplinary project demonstrates the opportunities and challenges in machine learning based prediction of mental health, paving the way toward using the same techniques to forecast other mental disorders such as internalizing disorder, Parkinson's disease, Alzheimer's disease, etc. and improving the quality of life for individuals who have some mental disorder.
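The role of the naive baseline mentioned in this abstract can be sketched as follows. The abstract does not say which naive forecaster was used, so this sketch assumes the common last-value variant, and the score values and function names are hypothetical. When the target barely changes over the horizon, this baseline is already hard to beat, which is consistent with the observation that the models lean on historical mental health values.

```python
def naive_forecast(history, horizon):
    """Repeat the last observed value for every step of the forecasting horizon."""
    if not history:
        raise ValueError("history must not be empty")
    return [history[-1]] * horizon


def mean_absolute_error(actual, predicted):
    """Average absolute difference between actual and predicted values."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


# Hypothetical questionnaire scores that change very little over time.
past_scores = [12, 13, 12, 12]
future_scores = [12, 12, 13]

baseline = naive_forecast(past_scores, horizon=len(future_scores))
baseline_error = mean_absolute_error(future_scores, baseline)
```

Any learned model would need an error clearly below `baseline_error` on held-out data before its use of wearable features could be considered informative.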

CNN Spike Detector: Detection of Spikes in Intracranial EEG using Convolutional Neural Networks

Stefan jonas · oct. 2021.

The detection of interictal epileptiform discharges in the visual analysis of electroencephalography (EEG) is an important but very difficult, tedious, and time-consuming task. There have been decades of research on computer-assisted detection algorithms, most recently focused on using Convolutional Neural Networks (CNNs). In this thesis, we present the CNN Spike Detector, a convolutional neural network to detect spikes in intracranial EEG. Our dataset of 70 intracranial EEG recordings from 26 subjects with epilepsy introduces new challenges in this research field. We report cross-validation results with a mean AUC of 0.926 (± 0.04), an area under the precision-recall curve (AUPRC) of 0.652 (± 0.10), and 12.3 (± 7.47) false positive epochs per minute at a sensitivity of 80%. A visual examination of false positive segments is performed to understand the model behavior leading to a relatively high false detection rate. We notice issues with the evaluation measures and highlight a major limitation of the common approach of detecting spikes using short segments, namely that the network is not capable of considering the greater context of a segment with regard to its origin. For this reason, we present the Context Model, an extension in which the CNN Spike Detector is supplied with additional information about the channel. Results show promising but limited performance improvements. This thesis provides important findings about the spike detection task for intracranial EEG and lays out promising future research directions to develop a network capable of assisting experts in real-world clinical applications.
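The "false positive epochs per minute at a sensitivity of 80%" figure can be illustrated with a small sketch. It assumes per-epoch scores and binary labels, picks the highest score threshold that reaches the target sensitivity, and divides the false positives by the total recording time. The epoch length, the scores, and the function name are hypothetical, and the thesis may define the measure differently.

```python
def false_positives_per_minute(scores, labels, epoch_seconds, target_sensitivity=0.8):
    """Return (threshold, false positive epochs per minute) at the target sensitivity."""
    positives = sum(labels)
    if positives == 0:
        raise ValueError("need at least one positive epoch")
    minutes = len(scores) * epoch_seconds / 60.0
    # Lower the threshold step by step until enough true spikes are detected.
    for threshold in sorted(set(scores), reverse=True):
        true_pos = sum(1 for s, y in zip(scores, labels) if y == 1 and s >= threshold)
        if true_pos / positives >= target_sensitivity:
            false_pos = sum(1 for s, y in zip(scores, labels) if y == 0 and s >= threshold)
            return threshold, false_pos / minutes
    raise ValueError("target sensitivity must not exceed 1.0")


# Ten hypothetical 6-second epochs (one minute of recording), five containing a spike.
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.3, 0.2, 0.1, 0.05, 0.01]
labels = [1, 1, 0, 1, 1, 0, 0, 1, 0, 0]
threshold, fp_rate = false_positives_per_minute(scores, labels, epoch_seconds=6)
```

Because spikes are rare relative to background epochs, even a small false positive fraction translates into many false alarms per minute, which is why this measure complements AUC.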

PolitBERT - Deepfake Detection of American Politicians using Natural Language Processing

Maurice rupp · april 2021.

This thesis explores the application of modern Natural Language Processing techniques to the detection of artificially generated videos of popular American politicians. Instead of focusing on detecting anomalies and artifacts in images and sounds, this thesis focuses on detecting irregularities and inconsistencies in the words themselves, opening up a new possibility to detect fake content. A novel, domain-adapted, pre-trained version of the language model BERT, combined with several mechanisms to overcome severe dataset imbalances, yielded the best quantitative as well as qualitative results. In addition to creating the biggest publicly available dataset of English-speaking politicians, consisting of 1.5 M sentences from over 1000 persons, this thesis conducts various experiments with different kinds of text classification and sequence processing algorithms applied to the political domain. Furthermore, multiple ablations to manage severe data imbalance are presented and evaluated.

A Study on the Inversion of Generative Adversarial Networks

Ramona beck · march 2021.

The desire to use generative adversarial networks (GANs) for real-world tasks such as object segmentation or image manipulation is increasing as synthesis quality improves, which has given rise to an emerging research area called GAN inversion that focuses on exploring methods for embedding real images into the latent space of a GAN. In this work, we investigate different GAN inversion approaches using an existing generative model architecture that takes a completely unsupervised approach to object segmentation and is based on StyleGAN2. In particular, we propose and analyze algorithms for embedding real images into the different latent spaces Z, W, and W+ of StyleGAN following an optimization-based inversion approach, while also investigating a novel approach that allows fine-tuning of the generator during the inversion process. Furthermore, we investigate a hybrid and a learning-based inversion approach, where in the former we train an encoder with embeddings optimized by our best optimization-based inversion approach, and in the latter we define an autoencoder, consisting of an encoder and the generator of our generative model as a decoder, and train it to map an image into the latent space. We demonstrate the effectiveness of our methods as well as their limitations through a quantitative comparison with existing inversion methods and by conducting extensive qualitative and quantitative experiments with synthetic data as well as real images from a complex image dataset. We show that we achieve qualitatively satisfying embeddings in the W and W+ spaces with our optimization-based algorithms, that fine-tuning the generator during the inversion process leads to qualitatively better embeddings in all latent spaces studied, and that the learning-based approach also benefits from a variable generator as well as a pre-training with our hybrid approach. 
Furthermore, we evaluate our approaches on the object segmentation task and show that our optimization-based, hybrid, and learning-based methods are all able to generate meaningful embeddings that achieve reasonable object segmentations. Overall, our proposed methods illustrate the potential of GAN inversion and its application to real-world tasks, especially in the relaxed version of GAN inversion where the weights of the generator are allowed to vary.
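The structure of optimization-based inversion can be shown with a toy sketch: starting from an initial latent code, gradient descent adjusts the code until the generator output matches the target image. The generator here is a hypothetical linear map standing in for StyleGAN2, and the loss is a plain squared error. The actual thesis uses a nonlinear generator and its own loss and optimizer, which this sketch does not reproduce.

```python
def generate(weights, z):
    """Toy linear 'generator': maps a latent vector z to an output vector."""
    return [sum(w * zj for w, zj in zip(row, z)) for row in weights]


def invert(weights, target, steps=500, learning_rate=0.05):
    """Find a latent z whose generated output is close to target (squared error)."""
    z = [0.0] * len(weights[0])
    for _ in range(steps):
        residual = [g - t for g, t in zip(generate(weights, z), target)]
        # Gradient of sum(residual^2) with respect to each latent coordinate.
        gradient = [
            2.0 * sum(weights[i][j] * residual[i] for i in range(len(weights)))
            for j in range(len(z))
        ]
        z = [zj - learning_rate * gj for zj, gj in zip(z, gradient)]
    return z


# Hypothetical generator weights and a target produced from a known latent code.
toy_weights = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
true_latent = [0.5, -1.0]
target_image = generate(toy_weights, true_latent)
recovered_latent = invert(toy_weights, target_image)
```

The relaxed variant discussed in the abstract would additionally update `toy_weights` during the loop, which corresponds to fine-tuning the generator while inverting.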

Multi-scale Momentum Contrast for Self-supervised Image Classification

Zhao xueqi · dec. 2020.

As supervised learning has matured, research focus has gradually shifted toward self-supervised learning. “Momentum Contrast” (MoCo) proposes a new self-supervised learning method and raises the accuracy of self-supervised learning to a new level. Inspired by the article “Representation Learning by Learning to Count”, we hypothesize that dividing a picture into four parts and passing each through a neural network can further improve the accuracy of MoCo. Unlike the original MoCo, this variant (Multi-scale MoCo) does not pass the augmented image directly through the encoder. Instead, Multi-scale MoCo crops and resizes the augmented image, and the resulting four parts are each passed through the encoder and then summed (the upsampled version does not resize the input but resizes the contrastive samples instead). This cropping is applied not only to queue q but also to the comparison queue k; otherwise, the weights of queue k might be damaged during the momentum update. This is discussed further in the experiments chapter, which compares the downsampled Multi-scale version with the Multi-scale version that downsamples both input and contrast samples. Humans recognize objects on a similar principle: when people see something familiar, they can still guess the object with high probability even if it is not fully displayed. Multi-scale MoCo applies this idea to the pretext part of MoCo, hoping to obtain better feature extraction. This thesis presents three versions of Multi-scale MoCo: one with downsampled input samples, one with downsampled input and contrast samples, and one with upsampled input samples. The differences between these versions will be described in more detail later. The compared neural network architectures include ResNet50, and the tested dataset is STL-10.
The weights obtained in the pretext stage are transferred to the self-supervised learning stage, in which all layers except the final linear layer are frozen (these weights come from the pretext stage).
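The two mechanisms named in this abstract, the four-part split with summed features and the momentum update, can be sketched in a few lines. The encoder below is a hypothetical stand-in for ResNet50 that only scales the mean pixel value, the resize step is omitted, and the image is a nested list. The momentum rule follows the standard MoCo formulation, where the key weights move slowly toward the query weights.

```python
def split_into_quadrants(image):
    """Split a 2D image (list of rows) into its four quadrants."""
    half_h, half_w = len(image) // 2, len(image[0]) // 2
    return [
        [row[:half_w] for row in image[:half_h]],
        [row[half_w:] for row in image[:half_h]],
        [row[:half_w] for row in image[half_h:]],
        [row[half_w:] for row in image[half_h:]],
    ]


def toy_encoder(patch, weights):
    """Hypothetical encoder: scales the mean pixel value by each weight."""
    pixels = [p for row in patch for p in row]
    mean = sum(pixels) / len(pixels)
    return [w * mean for w in weights]


def multi_scale_feature(image, weights):
    """Encode each quadrant separately and sum the resulting feature vectors."""
    features = [toy_encoder(q, weights) for q in split_into_quadrants(image)]
    return [sum(values) for values in zip(*features)]


def momentum_update(key_weights, query_weights, momentum=0.999):
    """MoCo-style update: key weights drift slowly toward the query weights."""
    return [momentum * k + (1.0 - momentum) * q for k, q in zip(key_weights, query_weights)]


feature = multi_scale_feature([[1, 2], [3, 4]], weights=[1.0, 2.0])
new_key_weights = momentum_update([1.0], [0.0], momentum=0.5)
```

Applying the same split on both the query and key branches keeps their inputs comparable, which is the motivation the abstract gives for protecting the key weights during the momentum update.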

Self-Supervised Learning Using Siamese Networks and Binary Classifier

Dušan mihajlov · march 2020.