Automated Scoring of Writing

- Open Access

- First Online: 15 September 2023

- Stephanie Link ORCID: orcid.org/0000-0002-5586-1495 8 &

- Svetlana Koltovskaia 9

For decades, automated essay scoring (AES) has operated behind the scenes of major standardized writing assessments to provide summative scores of students’ writing proficiency (Dikli in J Technol Learn Assess 5(1), 2006). Today, AES systems are increasingly used in low-stakes assessment contexts and as a component of instructional tools in writing classrooms. Despite substantial debate regarding their use, including concerns about writing construct representation (Condon in Assess Writ 18:100–108, 2013; Deane in Assess Writ 18:7–24, 2013), AES has attracted the attention of school administrators, educators, testing companies, and researchers and is now commonly used in an attempt to reduce human effort and improve consistency in assessing writing (Ramesh and Sanampudi in Artif Intell Rev 55:2495–2527, 2021). This chapter introduces the affordances and constraints of AES for writing assessment, surveys research on AES effectiveness in classroom practice, and emphasizes implications for writing theory and practice.

- Automated essay scoring

- Summative assessment

Automated essay scoring (AES) is used internationally to rapidly assess writing and provide summative holistic scores and score descriptors for formal and informal assessments. The ease of using AES for response to writing is especially attractive for large-scale essay evaluation, and it also provides a low-cost supplement to human scoring and feedback provision. Additionally, intended benefits of AES include the elimination of human bias arising from, for example, rater fatigue, expertise, severity/leniency, inconsistency, and the halo effect. While AES developers also commonly suggest that their engines perform as reliably as human scorers (e.g., Burstein & Chodorow, 2010; Riordan et al., 2017; Rudner et al., 2006), AES is not free of critique. Automated scoring is frequently under scrutiny for use with university-level composition students in the United States (Condon, 2013) and second language writers (Crusan, 2010), with some writing practitioners discouraging its replacement of adequate literacy education because of its inability to evaluate meaning from a humanistic, socially-situated perspective (Deane, 2013; NCTE, 2013). AES also suffers from biases of its own, such as imperfections in the quality and representativeness of the training data used to develop the systems and inform feedback generation. These biases call the fairness of AES into question (Loukina et al., 2019), especially if scores are modeled on data that do not adequately represent a user population, which is a particular concern for use of AES with minoritized populations.

Despite reservations, the utility of AES in writing practices has increased significantly in recent years (Ramesh & Sanampudi, 2021), partially due to its integration into classroom-based tools (see Cotos, “Automated Feedback on Writing,” for a review of automated writing evaluation). Thus, the affordances of AES for language testing are now readily available to writing practitioners and researchers, and the time is ripe for better understanding its potential impact on the pedagogical approaches to writing studies by first better understanding the history that drives AES development.

Dating back to the 1960s, AES started with the advent of Project Essay Grade (Page, 1966). Since then, automated scoring has advanced into leading technologies, including e-rater by the Educational Testing Service (ETS) (Attali & Burstein, 2006), Intelligent Essay Assessor (IEA) by Knowledge Analysis Technologies (Landauer et al., 2003), IntelliMetric by Vantage Learning (Elliot, 2003), and a large number of prospective newcomers (e.g., Nguyen & Dery, 2016; Riordan et al., 2017). These AES engines are used for tests like the Test of English as a Foreign Language (TOEFL iBT), the Graduate Management Admission Test (GMAT), and the Pearson Test of English (PTE). In such tests, AES researchers not only found the scores reliable, but some argued that they also allowed for reproducibility, tractability, consistency, objectivity, item specification, granularity, and efficiency (William et al., 1999), characteristics that human raters can lack (Williamson et al., 2012).

The immediate AES response to writing is without much question a salient feature of automated scoring for testing contexts. In addition, research on classroom-based implementation has suggested that instructors can utilize the AES feedback to flag students’ writing that requires teachers’ special attention (Li et al., 2014), highlighting its potential for constructing individual development plans or conducting analysis of students’ writing needs. AES also provides constant, individualized feedback to lighten instructors’ feedback load (Kellogg et al., 2010), enhance student autonomy (Wang et al., 2013), and stimulate editing and revision (Li et al., 2014).

2 Core Idea of the Technology

Automated essay scoring involves automatic assessment of a student’s written work, usually in response to a writing prompt. This assessment generally includes (1) a holistic score of the student’s performance, knowledge, and/or skill and (2) a score descriptor on how the student can improve the text. For example, e-rater by ETS (2013) scores essays on a scale from 0 to 6. A score of 6 may include the following feedback:

Score of 6: Excellent

Looks at the topic from a number of angles and responds to all aspects.

Responds thoughtfully and insightfully to the issues in the topic.

Develops with a superior structure and apt reasons or examples.

Uses sentence styles and language that have impact and energy.

Demonstrates that you know the mechanics of correct sentence structure.

AES engine developers over the years have undertaken a core goal of making the assessment of writing accurate, unbiased, and fair (Madnani & Cahill, 2018). The differences in score generation, however, are stark given the variation in philosophical foundations, intended purposes, extraction of features for scoring writing, and criteria used to test the systems (Yang et al., 2002). To this end, it is important to understand the prescribed use of automated systems so that they are not implemented inappropriately. For instance, if a system is meant to measure students’ writing proficiency, the system should not be used to assess students’ aptitude. Thus, scoring models for developing AES engines are valuable and effective in distinct ways and for their specific purposes.

Because each engine may be designed to assess different levels, genres, and/or skills of writing, developers utilize different natural language processing (NLP) techniques for establishing construct validity, or the extent to which an AES scoring engine measures what it intends to measure, which is a common concern for AES critics (Condon, 2013; Perelman, 2014, 2020). NLP helps computers understand human input (text and speech) by starting with human and/or computer analysis of textual features so that a computer can process the textual input and offer reliable output (e.g., a holistic score and score descriptor) on new text. These features may include statistical features (e.g., essay length, word co-occurrences also known as n-grams), style-based features (e.g., sentence structure, grammar, part-of-speech), and content-based features (e.g., cohesion, semantics, prompt relevance) (see Ramesh & Sanampudi, 2021, for an overview of features). Construct validity should thus be interpreted in relation to feature extraction of a given AES system to adequately appreciate (or challenge) the capabilities that system offers writing studies.
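To make the feature categories concrete, the following is a minimal Python sketch of how a few such features might be computed from raw text. It is an illustration under stated assumptions, not the feature set of any named engine: the function names, the regular-expression tokenizer, and the use of vocabulary overlap as a stand-in for prompt relevance are all hypothetical simplifications.

```python
import re
from collections import Counter

def tokenize(text):
    """Lowercase the text and split it into word tokens (a crude, assumed tokenizer)."""
    return re.findall(r"[a-z']+", text.lower())

def ngrams(tokens, n):
    """Return all contiguous n-grams in a token sequence as tuples."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

def extract_features(essay, prompt):
    """Compute a few illustrative features of the kinds described in the text."""
    tokens = tokenize(essay)
    # Split on sentence-final punctuation; real systems use a proper sentence splitter.
    sentences = [s for s in re.split(r"[.!?]+", essay) if s.strip()]
    prompt_vocab = set(tokenize(prompt))
    shared = sum(1 for t in tokens if t in prompt_vocab)
    return {
        # Statistical features: essay length and word co-occurrences (bigrams).
        "essay_length": len(tokens),
        "bigram_counts": Counter(ngrams(tokens, 2)),
        # A very rough style-based feature: mean sentence length in words.
        "mean_sentence_length": len(tokens) / len(sentences) if sentences else 0.0,
        # A very rough content-based feature: share of tokens that also occur in the prompt.
        "prompt_overlap": shared / len(tokens) if tokens else 0.0,
    }

features = extract_features("Cats sleep a lot. Cats sleep all day.", "Why do cats sleep?")
print(features["essay_length"], features["prompt_overlap"])
```

Even this toy version shows why construct validity depends on feature choice: an engine can only reward what its features are able to measure.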

In addition to a focus on a variety of textual features, AES developers have utilized varied machine learning (ML) techniques to establish construct validity and efficient score modeling. Machine learning is a category of artificial intelligence (AI) that helps computers recognize patterns in data and continuously learn from the data to make accurate holistic score predictions and adjustments without further programming (IBM, 2020). Early AES research utilized standard multiple regression analysis to predict holistic scores based on a set of rater-defined textual features. This approach was utilized in the 1960s for developing Project Essay Grade by Page (1966), but it has been criticized for its bias in favor of longer texts (Hearst, 2000) and its disregard for content and domain knowledge (Ramesh & Sanampudi, 2021).
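The regression idea, and the length bias it can produce, can be sketched in a few lines of Python. This is a deliberately reduced illustration: it fits ordinary least squares with a single predictor (word count) rather than the multiple predictors a real system would use, and the training data are invented for the example.

```python
def fit_simple_regression(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares (one predictor)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

def predict(slope, intercept, x):
    """Predict a holistic score from a feature value."""
    return slope * x + intercept

# Hypothetical training data: essay word counts and human holistic scores.
word_counts = [100, 200, 300, 400, 500]
human_scores = [1, 2, 3, 4, 5]

slope, intercept = fit_simple_regression(word_counts, human_scores)

# A longer essay receives a higher predicted score whatever it actually says,
# which is the kind of length bias the criticism above refers to.
print(predict(slope, intercept, 250), predict(slope, intercept, 450))
```

Because the model sees only the surface feature, padding an essay with irrelevant text would raise its predicted score in this sketch.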

In subsequent years, classification models, such as the bag of words approach (BOW), were common (e.g., Chen et al., 2010; Leacock & Chodorow, 2003). BOW models extract features in writing using NLP by counting the occurrences and co-occurrences of words within and across texts. Texts with multiple shared word strings are classified into similar holistic score categories (e.g., low, medium, high) (Chen et al., 2010; Zhang et al., 2010). E-rater by ETS is a good example of this approach. The aforementioned approaches are human-labor intensive. Latent semantic analysis (LSA) is advantageous in this regard; it is also strong in evaluating semantics. In LSA, the semantic representation of a text is compared to the semantic representation of other similarly scored responses. This analysis is done by training the computer on specific corpora that mimic a given writing prompt. Landauer et al. (2003) used LSA in Intelligent Essay Assessor.
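A bag-of-words classifier of the kind described can be approximated with word counts and a similarity measure. The sketch below assigns a new essay the score category of its most similar previously scored essay, using cosine similarity over word counts. The nearest-neighbor rule and cosine similarity are assumptions chosen for brevity; they are not claimed to be the method used by e-rater or any other engine, and the sample essays are invented.

```python
import math
from collections import Counter

def bag_of_words(text):
    """Represent a text as a multiset of lowercased, whitespace-separated words."""
    return Counter(text.lower().split())

def cosine_similarity(a, b):
    """Cosine of the angle between two word-count vectors (0.0 if either is empty)."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def classify(essay, scored_essays):
    """Return the score category of the most similar previously scored essay."""
    target = bag_of_words(essay)
    _, best_label = max(
        scored_essays,
        key=lambda pair: cosine_similarity(target, bag_of_words(pair[0])),
    )
    return best_label

# Hypothetical scored essays: (text, holistic score category).
scored = [
    ("the cat sat", "low"),
    ("renewable energy reduces carbon emissions and costs", "high"),
]
print(classify("solar energy reduces emissions", scored))
```

Word order and meaning are ignored entirely here, which is the gap that semantic approaches such as LSA aim to narrow.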

Advances in NLP and progress in ML have motivated AES researchers to move away from statistical regression-based modeling and classification approaches to advanced models involving neural network approaches (Dong et al., 2017; Kumar & Boulanger, 2020; Riordan et al., 2017). To develop these AES models, data undergo a process of supervised learning, where the computer is provided with labeled data that enable it to produce a score as a human would. The supervised learning process often starts with a training set: a large corpus of representative, unbiased writing that is typically human- or auto-coded for specific linguistic features, with each text receiving a holistic score. Models are then generated to teach a computer to identify and extract these features and provide a holistic score that correlates with the human rating. The models are evaluated on a testing set that the computer has never seen previously. Accuracy of algorithms is then evaluated by using testing set scores and human scores to determine human–computer consistency and reliability. Common evaluation metrics are quadratic weighted kappa, mean absolute error, and the Pearson correlation coefficient.
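The three evaluation statistics named above can be computed directly from paired human and machine scores on a testing set. The following Python sketch uses their standard textbook definitions (kappa with quadratic disagreement weights, mean absolute error, and Pearson's r); the function names and the sample scores are hypothetical, and the kappa function assumes integer scores on a known scale with at least two score points.

```python
import math

def mean_absolute_error(human, machine):
    """Average absolute difference between paired scores."""
    return sum(abs(h - m) for h, m in zip(human, machine)) / len(human)

def pearson(human, machine):
    """Pearson correlation coefficient between paired scores."""
    n = len(human)
    mean_h = sum(human) / n
    mean_m = sum(machine) / n
    cov = sum((h - mean_h) * (m - mean_m) for h, m in zip(human, machine))
    var_h = sum((h - mean_h) ** 2 for h in human)
    var_m = sum((m - mean_m) ** 2 for m in machine)
    return cov / math.sqrt(var_h * var_m)

def quadratic_weighted_kappa(human, machine, min_score, max_score):
    """Chance-corrected agreement in which larger disagreements are penalized quadratically."""
    k = max_score - min_score + 1
    n = len(human)
    # Observed confusion matrix: rows are human scores, columns are machine scores.
    observed = [[0] * k for _ in range(k)]
    for h, m in zip(human, machine):
        observed[h - min_score][m - min_score] += 1
    hist_h = [sum(row) for row in observed]
    hist_m = [sum(observed[i][j] for i in range(k)) for j in range(k)]
    weighted_observed = 0.0
    weighted_expected = 0.0
    for i in range(k):
        for j in range(k):
            weight = (i - j) ** 2 / (k - 1) ** 2
            # Expected count if human and machine scores were independent.
            expected = hist_h[i] * hist_m[j] / n
            weighted_observed += weight * observed[i][j]
            weighted_expected += weight * expected
    return 1.0 - weighted_observed / weighted_expected

# Hypothetical testing-set scores on a 1-4 scale.
human = [1, 2, 3, 4]
machine = [1, 2, 4, 4]
print(mean_absolute_error(human, machine))
print(pearson(human, machine))
print(quadratic_weighted_kappa(human, machine, 1, 4))
```

The metrics answer different questions: mean absolute error reports the typical size of a disagreement, Pearson's r reports whether the two sets of scores rise and fall together, and weighted kappa corrects agreement for chance while treating a three-point disagreement as far worse than a one-point disagreement.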

Once accuracy results meet an industry standard (Powers et al., 2015), which varies across disciplines (Weigle, 2013), the algorithms are made public through user-friendly interfaces for testing contexts (i.e., to provide summative feedback, formal assessments to assess students’ performance or proficiency) and direct classroom use (i.e., informal assessments to improve students’ learning). For the classroom, teachers should be active in evaluating the feedback to determine whether it is reasonably accurate in assessing a learning goal, does not lead students away from the goal, and encourages students to engage in different ways with their text and/or the course content. Effective evaluation of AES should start with an awareness of AES affordances that can impact writing practice and then continue with the training of students in the utility of these affordances.

3 Functional Specifications

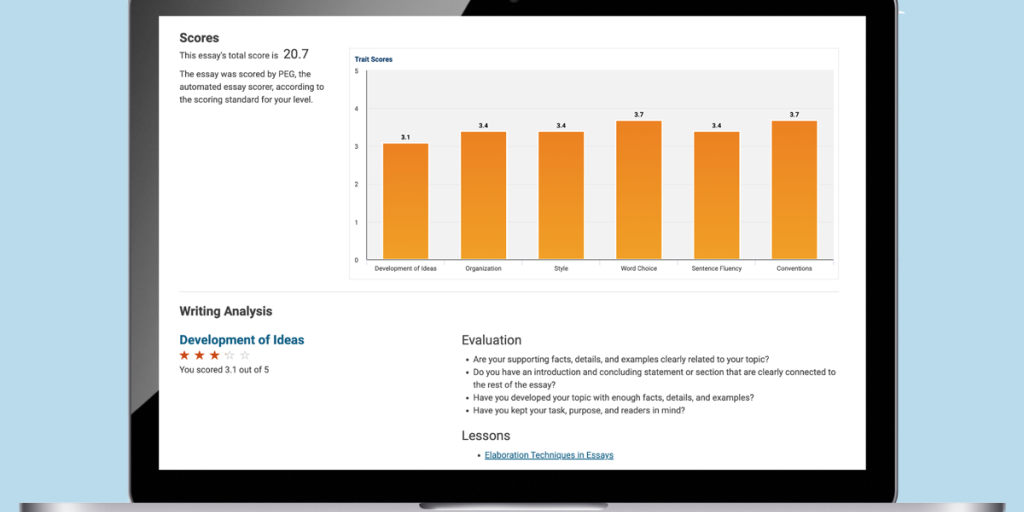

The overall functionality of AES for classroom use is to provide summative assessment of writing quality. AES accomplishes this through two key affordances: a holistic score and score descriptor.

Holistic score: The summative score provides an overall, generic assessment of writing quality. For example, Grammarly provides a holistic score or “performance” score out of 100%. The score represents the quality of writing (as determined by features such as word count, readability statistics, and vocabulary usage). If a student receives a score below 60–70%, this means that the text could be understood by a reader who has a 9th grade education. For the text to be readable by 80% of English speakers, Grammarly suggests getting at least 60–70%.

Score descriptor: The holistic score is typically accompanied by a descriptor that indicates what the score represents. This characterization of the score meaning can be used to interpret the feedback, evaluate the feedback, and make decisions regarding editing and revising.

That is, these key affordances can be utilized to complete several main activities.

Interpreting feedback: Once students receive the holistic score along with the descriptor, they should interpret the score. Information provided for adequate score interpretation varies across AES systems, so students may need help in interpreting the meaning of this feedback.

Evaluating feedback: After interpreting the score and the descriptor, students need to think critically about how the feedback applies to their writing. That is, students need to determine whether the computer feedback is an adequate representation of their writing weaknesses. Evaluating feedback thus entails noticing the gap or problem found in one’s own writing and becoming consciously aware of how the feedback might be used to increase the quality of writing through self-editing (Ferris, 2011).

Making a decision about action: Once students evaluate their writing based on a given score and descriptor, they then need to decide whether to address the issues highlighted in the descriptor or seek additional feedback. Making and executing a revision plan can ensure that the student is being critical towards the feedback rather than accepting it outright.

Revising/editing: The student then revises the paper and resubmits it to the system to see if the score improves, which is an indicator of higher quality writing. If needed, the student can repeat the above actions or move on to editing of surface-level writing concerns.

4 Research on AES

AES research can be categorized along two lines: system-centric research that evaluates the system itself and user-centric research that evaluates use/impact of a system on learning. From a system-centric perspective, various studies have been conducted to validate AES-system-generated scores for the testing context. The majority have focused on reliability, or the extent to which results can be considered consistent or stable (Brown, 2005). They often evaluate reliability based on agreement between human and computer scoring (e.g., Burstein & Chodorow, 1999; Elliot, 2003; Streeter et al., 2011). (See Table 1 for a summary of reliability statistics from three major AES developers.)
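Agreement between human and computer scoring is often summarized with simple proportions. The Python sketch below computes two commonly reported ones, exact agreement (identical scores) and adjacent agreement (scores within one point of each other). The choice of these two statistics and the sample scores are assumptions made for illustration; the studies cited above may report other statistics.

```python
def agreement_rates(human, machine):
    """Return (exact, adjacent) agreement as proportions of all scored essays.

    Exact agreement counts identical scores; adjacent agreement counts
    scores that differ by at most one scale point (exact matches included).
    """
    n = len(human)
    exact = sum(1 for h, m in zip(human, machine) if h == m) / n
    adjacent = sum(1 for h, m in zip(human, machine) if abs(h - m) <= 1) / n
    return exact, adjacent

# Hypothetical scores for four essays on a 0-6 scale.
human = [3, 4, 2, 5]
machine = [3, 5, 4, 5]
exact, adjacent = agreement_rates(human, machine)
print(exact, adjacent)
```

Adjacent agreement is always at least as high as exact agreement, so it helps to know which of the two a reported figure refers to.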

The process of establishing validity should not start and stop with inter-coder reliability, however; automated scoring presents some distinctive validity challenges, such as “the potential to under- or misrepresent the construct of interest, vulnerability to cheating, impact on examinee behavior, and score users’ interpretation and use of scores” (Williamson et al., 2012, p. 3). Thus, some researchers have also demonstrated reliability by using alternative measures, such as the association with independent measures (Attali et al., 2010) and the generalizability of scores (Attali et al., 2010). Others have gone a step further and suggested a unified approach to AES validation (Weigle, 2013; Williamson et al., 2012). In general, results reveal promising developments in AES with modest correlations between AES and external criteria, such as independent proficiency assessments (Attali et al., 2010; Powers et al., 2015), suggesting that automated scores can relate in a similar manner to select assessment criteria and that both have the potential to reflect similar constructs, although results across AES systems can vary, and not all data are readily available to the public.

While much research has focused on reliability of AES, little is known about the quality of holistic scores in testing or classroom contexts as well as teachers’ and students’ use and perceptions of automatically generated scores. In a testing context, James (2006) compared the IntelliMetric scores of the ACCUPLACER OnLine WritePlacer Plus test to the scores of “untrained” faculty raters. Results revealed a relatively high level of correspondence between the two. In a similar study with a group of developmental writing students in a two-year college in South Texas, Wang and Brown (2007) found a significant difference between the overall holistic mean scores assigned by IntelliMetric and by human raters, indicating that IntelliMetric tends to assign higher scores than human raters do. Li et al. (2014) investigated the correlation between Criterion’s numeric scores and English as a second language instructors’ numeric grades and analytic ratings for classroom-based assessment. The results showed low to moderate positive correlations between Criterion’s scores and instructors’ scores and analytic ratings. Taken together, these studies suggest limited consistency of findings on AES reliability across tools.

Results of multiple studies demonstrate varied uses for holistic scores and varied teachers’ and students’ perceptions toward the scores. For example, Li et al. (2014) found that Criterion’s holistic scores in the English as a second language classroom were used in three ways. First, instructors used the scores as a forewarning. That is, the scores alerted instructors to problematic writing. Second, the scores were used as a pre-submission benchmark. That is, the students were required to obtain a certain score before submitting a final draft to their teacher. Finally, Criterion’s scores were utilized as an assessment tool, with scores forming part of course grading. Similar findings were reported in Chen and Cheng’s (2008) study that focused on EFL Taiwanese teachers’ and students’ use and perception of My Access! While one teacher used My Access! as a pre-submission benchmark, another used it for both formative and summative assessment, heavily relying on the scores to assess writing performance. The third teacher did not make My Access! a requirement and asked the students to use it if they needed to.

In terms of teachers’ perceptions, holistic scores seem to be motivators for promoting student revision (Li et al., 2014; Scharber et al., 2008), although a few teachers in Maeng (2010) commented that the score caused some stress even though it was still helpful for facilitating the feedback process (i.e., for providing sample writing and revising). Teachers also tend to have mixed confidence in holistic scores (Chen & Cheng, 2008; Li et al., 2014). For example, in Li et al.’s (2014) study, English as a second language instructors had high trust in Criterion’s low holistic scores as the essays Criterion scored low were, in fact, poor essays. However, instructors possessed low levels of trust when Criterion assigned high scores to writing, as instructors judged such writing lower.

Students also tend to have low trust in holistic scores (Chen & Cheng, 2008; Scharber et al., 2008). For example, Chen and Cheng (2008) found that EFL Taiwanese students’ low level of trust in holistic scores was influenced by teachers’ low level of trust in the scores as well as discrepancies in teachers’ scores and holistic scores of My Access! that students noticed. Similar findings were reported in Scharber et al.’s (2008) study that focused on Educational Theory into Practice Software’s (ETIPS) automated scorer implemented in a post-baccalaureate program at a large public Midwestern US university. The students in their study experienced negative emotions due to discrepancies in teachers’ and ETIPS’ holistic scores. ETIPS scores were one point lower than teachers’ scores. Additionally, the students found holistic scores with the short descriptor insufficient in guiding them as to how to actually improve their essays.

5 Implications of This Technology for Writing Theory and Practice

The rapid advancement of NLP and ML approaches to automated scoring lends itself well to theoretical contributions that help to (re-)define traditional notions of how learning takes place and the phenomena that underlie language development. Social- and cognitive-based approaches to writing studies can be expanded with the integration of AES technology by offering new, socially-situated learning opportunities in online environments that can impact how students respond to feedback. These digitally-rich learning opportunities can thus significantly impact the writing process, offering a new mode of feedback that can be meaningful, constant, timely, and manageable while addressing individual learner needs. From a traditional pen-and-paper approach, these benefits are known to contribute significantly to writing accuracy (Hartshorn et al., 2010), and so the addition of rapid technology has the potential to add new knowledge to writing development research.

AES research can also contribute to practice. Due to its instantaneous nature, AES holistic scores could be used for placement purposes (e.g., by using ACCUPLACER) at schools, colleges, and universities. However, relying on the AES holistic score alone may not be adequate. Therefore, just like in large-scale tests, it is important that students’ writing is double-rated to enhance reliability, with a third rater used if there is a discrepancy in AES holistic score and a human rater’s score. Similarly, AES holistic scores could be used for diagnostic assessment. Diagnostic assessment is given prior to or at the start of the semester/course to get information about students’ language proficiency as well as their strengths and weaknesses in writing. Finally, AES scoring could be used for summative classroom assessment. For example, teachers could use AES scores as a pre-submission benchmark and require students to revise their essays until they get a predetermined score, or teachers could use the AES score for partial (rather than sole) assessment of goal attainment (Li et al., 2014; Weigle, 2013). Overall, in order to avoid pitfalls such as students focusing too intensively on obtaining high scores without actually improving their writing skills, teachers and students need to be trained or seek training on the different merits and demerits of a selected AES scoring system.
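The double-rating procedure with a third rater can be expressed as a small decision rule. The Python sketch below is one possible reading of that procedure, not a documented policy of any testing program: the one-point discrepancy threshold (`max_gap`), the averaging of the two scores, and the rule for combining the third rating with the closer of the first two are all assumptions introduced for illustration.

```python
def resolve_score(aes_score, human_score, third_rater=None, max_gap=1):
    """Combine an AES score and a human score, calling a third rater on discrepancy.

    max_gap is an assumed threshold: scores that differ by more than this
    many points count as discrepant and trigger a third rating.
    """
    if abs(aes_score - human_score) <= max_gap:
        # Scores agree closely enough: report their average.
        return (aes_score + human_score) / 2
    if third_rater is None:
        raise ValueError("discrepant scores require a third rating")
    third = third_rater()
    # Assumed resolution rule: average the third rating with whichever
    # of the two original scores lies closer to it.
    closer = min((aes_score, human_score), key=lambda s: abs(s - third))
    return (third + closer) / 2

# Hypothetical usage: the AES and the human rater disagree by three points,
# so a third (human) rating of 4 is requested.
print(resolve_score(4, 5))
print(resolve_score(2, 5, third_rater=lambda: 4))
```

A program adopting such a rule would need to set the threshold and the resolution method locally and explain both to teachers and students.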

6 Concluding Remarks

While traditional approaches to written corrective feedback are still leading writing studies research, the ever-changing digitalization of the writing process shines light on new opportunities for enhancing the nature of feedback provision. The evolution of AI will undoubtedly expand the affordances of AES so that writing in digital spaces can be supplemented by computer-based feedback that is increasingly accurate and reliable. For now, these technologies are only foregrounding what can come from technological advancements, and in the meantime, it is the task of researchers and practitioners to cast a critical eye while also remaining open to the potential for AES technologies to promote autonomous, lifelong learning and writing development.

7 Tool List

List of well-known Automated Essay Scoring (AES) Tools

References

Attali, Y., Bridgeman, B., & Trapani, C. (2010). Performance of a generic approach in automated essay scoring. Journal of Technology, Learning, and Assessment, 10(3). http://www.jtla.org

Attali, Y., & Burstein, J. (2006). Automated essay scoring with e-rater V.2. Journal of Technology, Learning, and Assessment, 4(3), 1–30.

Brown, J. D. (2005). Testing in language programs. A comprehensive guide to English language assessment. McGraw Hill.

Burstein, J., & Chodorow, M. (1999). Automated essay scoring for nonnative English speakers. Proceedings of the ACL99 Workshop on Computer-Mediated Language Assessment and Evaluation of Natural Language Processing. http://www.ets.org/Media/Research/pdf/erater_acl99rev.pdf

Burstein, J., & Chodorow, M. (2010). Progress and new directions in technology for automated essay evaluation. In R. Kaplan (Ed.), The Oxford handbook of applied linguistics (2nd ed., pp. 487–497). Oxford University Press.

Chen, C., & Cheng, W. (2008). Beyond the design of automated writing evaluation: Pedagogical practices and perceived learning effectiveness in EFL writing classes. Language Learning & Technology, 12(2), 94–112.

Chen, Y. Y., Liu, C. L., Chang, T. H., & Lee, C. H. (2010). An unsupervised automated essay scoring system. IEEE Intelligent Systems, 25(5), 61–67. https://doi.org/10.1109/MIS.2010.3

Condon, W. (2013). Large-scale assessment, locally-developed measures, and automated scoring of essays: Fishing for red herrings? Assessing Writing, 18, 100–108. https://doi.org/10.1016/j.asw.2012.11.001

Crusan, D. (2010). Assessment in the second language writing classroom. University of Michigan Press.

Deane, P. (2013). On the relation between automated essay scoring and modern views of the writing construct. Assessing Writing, 18, 7–24.

Dexter, S. (2007). Educational theory into practice software. In D. Gibson, C. Aldrich, & M. Prensky (Eds.), Games and simulations in online learning: Research and development frameworks (pp. 223–238). IGI Global. https://doi.org/10.4018/978-1-59904-304-3.ch011

Dikli, S. (2006). An overview of automated scoring of essays. The Journal of Technology, Learning and Assessment, 5(1). https://ejournals.bc.edu/index.php/jtla/article/view/1640

Dong, F., Zhang, Y., & Yang, J. (2017). Attention-based recurrent convolutional neural network for automatic essay scoring. Proceedings of the 21st Conference on Computational Natural Language Learning (CoNLL 2017). https://aclanthology.org/K17-1017.pdf

Elliot, S. (2003). IntelliMetric: From here to validity. In M. D. Shermis & J. C. Burstein (Eds.), Automatic essay scoring: A cross-disciplinary perspective (pp. 71–86). Lawrence Erlbaum Associates.

ETS. (2013). Criterion scoring guide. Retrieved September 27, 2013, from http://www.ets.org/Media/Products/Criterion/topics/co-1s.htm

Ferris, D. R. (2011). Treatment of errors in second language student writing (2nd ed.). The University of Michigan Press.

Hartshorn, K. J., Evans, N. W., Merrill, P. F., Sudweeks, R. R., Strong-Krause, D., & Anderson, N. J. (2010). Effects of dynamic corrective feedback on ESL writing accuracy. TESOL Quarterly, 44, 84–109.

Hearst, M. (2000). The debate on automated essay grading. IEEE Intelligent Systems and their Applications, 15(5), 22–37. https://doi.org/10.1109/5254.889104

IBM. (2020). Machine learning. IBM Cloud Education. https://www.ibm.com/cloud/learn/machine-learning

James, C. (2006). Validating a computerized scoring system for assessing writing and placing students in composition courses. Assessing Writing, 11(3), 167–178.

Kellogg, R., Whiteford, A., & Quinlan, T. (2010). Does automated feedback help students learn to write? Journal of Educational Computing Research, 42, 173–196.

Kumar, V., & Boulanger, D. (2020). Explainable automated essay scoring: Deep learning really has pedagogical value. Frontiers in Education (Lausanne), 5. https://doi.org/10.3389/feduc.2020.572367

Landauer, T. K., Laham, D., & Foltz, P. (2003). Automatic essay assessment. Assessment in Education, 10(3), 295–308.

Leacock, C., & Chodorow, M. (2003). C-rater: Automated scoring of short-answer questions. Computers and the Humanities, 37, 389–405.

Li, Z., Link, S., Ma, H., Yang, H., & Hegelheimer, V. (2014). The role of automated writing evaluation holistic scores in the ESL classroom. System, 44, 66–78. https://doi.org/10.1016/j.system.2014.02.007

Loukina, A., et al. (2019). The many dimensions of algorithmic fairness in educational applications. BEA@ACL.

Madnani, N., & Cahill, A. (2018). Automated scoring: Beyond natural language processing. COLING.

Maeng, U. (2010). The effect and teachers’ perception of using an automated essay scoring system in L2 writing. English Language and Linguistics, 16(1), 247–275.

NCTE. (2013, April 20). NCTE position statement on machine scoring. National Council of Teachers of English. https://ncte.org/statement/machine_scoring/

Nguyen, H., & Dery, L. (2016). Neural networks for automated essay grading (pp. 1–11). CS224d Stanford Reports.

Page, E. B. (1966). The imminence of grading essays by computer. Phi Delta Kappan, 48, 238–243.

Perelman, L. (2014). When “the state of the art” is counting words. Assessing Writing, 21, 104–111.

Perelman, L. (2020). The BABEL generator and E-rater: 21st century writing constructs and automated essay scoring (AES). Journal of Writing Assessment, 13(1).

Powers, D. E., Escoffery, D. S., & Duchnowski, M. P. (2015). Validating automated essay scoring: A (modest) refinement of the “gold standard.” Applied Measurement in Education, 28(2), 130–142. https://doi.org/10.1080/08957347.2014.1002920

Ramesh, D., & Sanampudi, S. K. (2021). An automated essay scoring systems: A systematic literature review. The Artificial Intelligence Review, 55(3), 2495–2527. https://doi.org/10.1007/s10462-021-10068-2

Riordan, B., Horbach, A., Cahill, A., Zesch, T., & Lee, C. M. (2017). Investigating neural architectures for short answer scoring. Proceedings of the 12th Workshop on Innovative Use of NLP for Building Educational Applications. https://aclanthology.org/W17-5017.pdf

Rudner, L., Garcia, V., & Welch, C. (2006). An evaluation of IntelliMetric™ essay scoring system. Journal of Technology, Learning, and Assessment, 4(4). http://escholarship.bc.edu/ojs/index.php/jtla/article/view/1651/1493

Scharber, C., Dexter, S., & Riedel, E. (2008). Students’ experiences with an automated essay scorer. Journal of Technology, Learning and Assessment, 7(1), 1–45. https://ejournals.bc.edu/index.php/jtla/article/view/1628

Streeter, L., Bernstein, J., Foltz, P., & DeLand, D. (2011). Pearson’s automated scoring of writing, speaking, and mathematics. White Paper. http://images.pearsonassessments.com/images/tmrs/PearsonsAutomatedScoringofWritingSpeakingandMathematics.pdf

Wang, J., & Brown, M. S. (2007). Automated essay scoring versus human scoring: A comparative study. Journal of Technology, Learning, and Assessment, 6(2). http://www.jtla.org

Wang, Y., Shang, H., & Briody, P. (2013). Exploring the impact of using automated writing evaluation in English as a foreign language university students’ writing. Computer Assisted Language Learning, 26(3), 1–24.

Weigle, S. C. (2013). English as a second language writing and automated essay evaluation. In M. D. Shermis & J. C. Burstein (Eds.), Handbook of automated essay evaluation: Current applications and new directions (pp. 36–54). Routledge.

William, D. M., Bejar, I. I., & Hone, A. S. (1999). ‘Mental model’ comparison of automated and human scoring. Journal of Educational Measurement, 35(2), 158–184.

Williamson, D., Xi, X., & Breyer, F. J. (2012). A framework for evaluation and use of automated scoring. Educational Measurement: Issues and Practice, 31(1), 2–13.

Yang, Y., Buckendahl, C. W., Juszkiewicz, P. J., & Bhola, D. S. (2002). A review of strategies for validating computer-automated scoring. Applied Measurement in Education, 15(4), 391–412. https://doi.org/10.1207/S15324818AME1504_04

Zhang, Y., Jin, R., & Zhou, Z. H. (2010). Understanding bag-of-words model: A statistical framework. International Journal of Machine Learning and Cybernetics, 1, 43–52.

Author information

Authors and affiliations.

Oklahoma State University, 205 Morrill Hall, Stillwater, OK, 74078, USA

Stephanie Link

Department of Languages and Literature, Northeastern State University, Tahlequah, OK, 74464, USA

Svetlana Koltovskaia


Corresponding author

Correspondence to Stephanie Link .

Editor information

Editors and affiliations.

School of Applied Linguistics, Zurich University of Applied Sciences, Winterthur, Switzerland

School of Management and Law, Center for Innovative Teaching and Learning, Zurich University of Applied Sciences, Winterthur, Switzerland

Christian Rapp

North Carolina State University, Raleigh, NC, USA

Chris M. Anson

TECFA, Faculty of Psychology and Educational Sciences, University of Geneva, Geneva, Switzerland

Kalliopi Benetos

English Department, Iowa State University, Ames, IA, USA

Elena Cotos

School of Education, Trinity College Dublin, Dublin, Ireland

TD School, University of Technology Sydney, Sydney, NSW, Australia

Antonette Shibani

Rights and permissions

Open Access This chapter is licensed under the terms of the Creative Commons Attribution 4.0 International License ( http://creativecommons.org/licenses/by/4.0/ ), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made.

The images or other third party material in this chapter are included in the chapter's Creative Commons license, unless indicated otherwise in a credit line to the material. If material is not included in the chapter's Creative Commons license and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder.


Copyright information

© 2023 The Author(s)

About this chapter

Link, S., Koltovskaia, S. (2023). Automated Scoring of Writing. In: Kruse, O., et al. Digital Writing Technologies in Higher Education . Springer, Cham. https://doi.org/10.1007/978-3-031-36033-6_21


DOI : https://doi.org/10.1007/978-3-031-36033-6_21

Published : 15 September 2023

Publisher Name : Springer, Cham

Print ISBN : 978-3-031-36032-9

Online ISBN : 978-3-031-36033-6

eBook Packages : Education Education (R0)



Project Score: Write Like a Historian

Build structured, rubric-based support into your writing instruction. Provide additional support where needed based on Score, the OER Project’s automated essay-scoring service.

Every history teacher deserves a writing assistant that helps identify and focus on areas for student improvement. Project Score includes lessons, tools, prompts, and an automated essay-scoring service proven to help students write better and improve their historical writing and literacy skills.

Why Teach with Project Score?

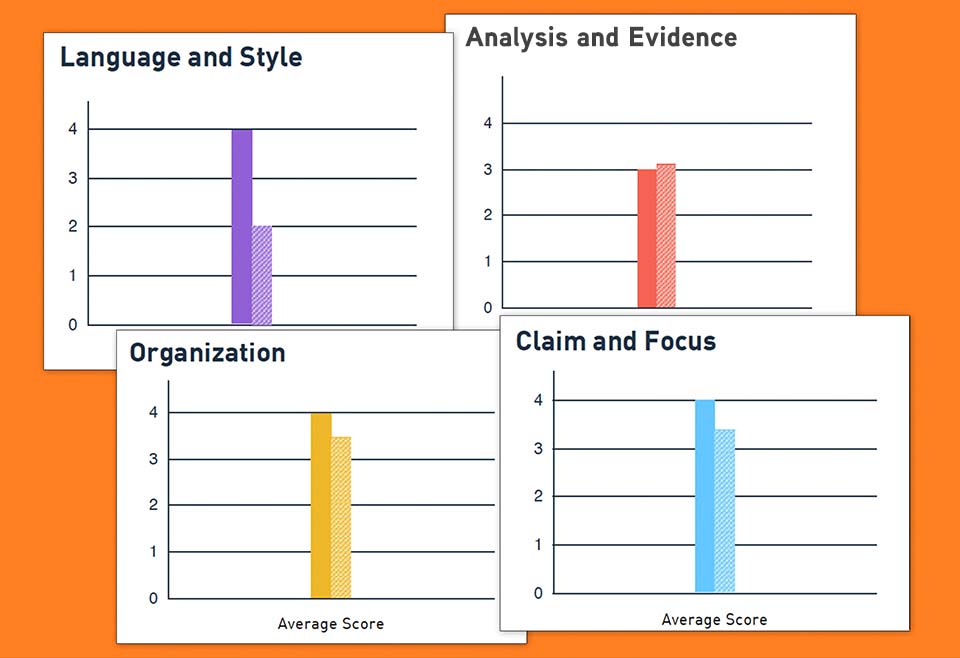

This course features Score, the free OER Project essay-scoring service. When combined with writing prompts and scaffolded pre- and post-writing activities, this powerful tool provides consistent formative feedback and loads of data to inform instruction and discussion.

Prompts: A variety of writing prompts address enduring historical questions about our planet, life, and humanity.

Prewriting: Scaffolded warm-up activities help prepare students for writing by introducing them to key elements of the Score Writing Rubric and giving them ample opportunity to prepare for successful writing.

Postwriting: Getting students to revise their writing can be a challenge. Scaffolded revision activities, focused on the Score Writing Rubric, give students the guidance and structure they need.

Consistent, instant feedback: Students can independently check their writing and receive immediate feedback to help inform their writing.

Informs instruction: Teachers receive individual and class data to help identify who needs extra help or where a class is struggling.

Sparks discussion: Score is not a grading tool—it’s a conversation starter for you and your students and a way for you to make data-driven instructional decisions.

Flexible. Ready to Use. Fully Supported.

Project Score can be taught as a two-week course, or you can use the activities anywhere within the Big History Project or World History Project courses for additional writing instruction. Find comprehensive support from the OER Project team and our vibrant teacher community.

Help yourself to everything our free, online history courses have to offer: mind-blowing content balanced with critical skills development and lots of support.

Get started building student writing capabilities—with confidence.

Not sure how you will use this in the classroom? Connect with fellow teachers and scholars in the OER Project Online Teacher Community to get your questions answered.

Extend Student Learning with our Supplemental Units

Try an OER Project supplemental unit to boost skill development in your classroom. These extension materials are the perfect supplement to an existing history or language arts curriculum.

Climate Project Extension

A supplemental unit that starts with evidence and ends with student-developed plans to reach net carbon zero by 2050.

Help students understand and use data to confront urgent world topics such as poverty, democracy, and climate. This 2-week supplement is designed for high-school students and includes 10 data-exploration exercises that lead up to a final class presentation.

Access this course

Join OER Project to get instant access to the Project Score course for FREE with no hidden catches.


An automated essay scoring systems: a systematic literature review

Dadi Ramesh

1 School of Computer Science and Artificial Intelligence, SR University, Warangal, TS India

2 Research Scholar, JNTU, Hyderabad, India

Suresh Kumar Sanampudi

3 Department of Information Technology, JNTUH College of Engineering, Nachupally, Kondagattu, Jagtial, TS India

Associated Data

Assessment plays a significant role in the education system for judging student performance. At present, evaluation relies on human assessment. As the student-to-teacher ratio gradually increases, manual evaluation becomes harder to sustain: it is time-consuming and can lack reliability. In this connection, online examination systems evolved as an alternative to pen-and-paper methods. Present computer-based evaluation systems work only for multiple-choice questions; there is no proper evaluation system for grading essays and short answers. Many researchers have worked on automated essay grading and short answer scoring over the last few decades, but assessing an essay by considering all parameters, such as the relevance of the content to the prompt, development of ideas, cohesion, and coherence, remains a big challenge. Few researchers have focused on content-based evaluation, while many have addressed style-based assessment. This paper provides a systematic literature review of automated essay scoring systems. We studied the Artificial Intelligence and Machine Learning techniques used for automatic essay scoring and analyzed the limitations of current studies and research trends. We observed that essay evaluation is generally not done based on the relevance of the content and coherence.

Supplementary Information

The online version contains supplementary material available at 10.1007/s10462-021-10068-2.

Introduction

Due to the COVID-19 outbreak, online education has become inevitable. At present, almost all educational institutions, from schools to colleges, have adopted online education systems. Assessment plays a significant role in measuring a student's learning ability. Automated evaluation is mostly available for multiple-choice questions, but assessing short answers and essays remains a challenge. The education system is shifting to online mode, with computer-based exams and automatic evaluation. Automated essay evaluation is a crucial application in the education domain that uses natural language processing (NLP) and Machine Learning techniques. Essays cannot be evaluated with simple programming techniques such as pattern matching and basic language processing. The problem is that a single question elicits many responses from students, each with a different explanation, so all the answers need to be evaluated with respect to the question.

Automated essay scoring (AES) is a computer-based assessment system that automatically scores or grades student responses by considering appropriate features. AES research started in 1966 with the Project Essay Grader (PEG) by Ajay et al. (1973). PEG evaluates writing characteristics such as grammar, diction, and construction to grade the essay. A modified version of PEG by Shermis et al. (2001) was later released, which focuses on grammar checking and reports the correlation between human evaluators and the system. Foltz et al. (1999) introduced the Intelligent Essay Assessor (IEA), which evaluates content using latent semantic analysis to produce an overall score. E-rater (Powers et al. 2002), IntelliMetric (Rudner et al. 2006), and the Bayesian Essay Test Scoring System (BETSY) (Rudner and Liang 2002) use natural language processing (NLP) techniques that focus on style and content to obtain the score of an essay. The vast majority of essay scoring systems in the 1990s followed traditional approaches such as pattern matching and statistical methods. Over the last decade, essay grading systems started using regression-based and natural language processing techniques. AES systems developed from 2014 onward, such as Dong et al. (2017), use deep learning techniques that induce syntactic and semantic features, yielding better results than earlier systems.

Ohio, Utah, and most US states use AES systems in school education, such as the Utah Compose tool and the Ohio standardized test (an updated version of PEG), evaluating millions of student responses every year. These systems serve both formative and summative assessment and give students feedback on their essays. Utah provides basic essay evaluation rubrics covering six characteristics of essay writing: development of ideas, organization, style, word choice, sentence fluency, and conventions. Educational Testing Service (ETS) has been conducting significant research on AES for more than a decade and has designed an algorithm to evaluate essays in different domains, giving test-takers an opportunity to improve their writing skills. In addition, its current research addresses content-based evaluation.

The evaluation of essays and short answers should consider the relevance of the content to the prompt, development of ideas, cohesion, coherence, and domain knowledge. How well these parameters are assessed determines the accuracy of the evaluation system. However, the parameters do not play an equal role in essay scoring and short answer scoring. Short answer evaluation requires domain knowledge; for example, the meaning of "cell" differs between physics and biology. Essay evaluation requires assessing how ideas are developed with respect to the prompt. The system should also assess the completeness of the responses and provide feedback.

Several studies have examined AES systems, from the earliest to the latest. Blood (2011) provided a literature review of PEG from 1984 to 2010. It covered only general aspects of AES systems, such as ethical aspects and system performance. It did not cover implementation, was not a comparative study, and did not discuss the actual challenges of AES systems.

Burrows et al. (2015) reviewed AES systems on six dimensions: dataset, NLP techniques, model building, grading models, evaluation, and effectiveness of the model. They did not cover feature extraction techniques or the challenges of feature extraction, and they covered Machine Learning models only briefly. The review also lacks a comparative analysis of AES systems in terms of feature extraction, model building, and the level of relevance, cohesion, and coherence.

Ke et al. (2019) provided a state-of-the-art survey of AES systems but covered very few papers, did not list all challenges, and offered no comparative study of AES models. Hussein et al. (2019), on the other hand, studied two categories of AES systems, with four papers using handcrafted features and four papers using neural network approaches. They discussed a few challenges but did not cover feature extraction techniques or the performance of AES models in detail.

Klebanov et al. (2020) reviewed 50 years of AES systems and listed and categorized all the essential features that need to be extracted from essays. However, they did not provide a comparative analysis of the work or discuss the challenges.

This paper aims to provide a systematic literature review (SLR) of automated essay grading systems. An SLR is an evidence-based systematic review that summarizes existing research. It critically evaluates and integrates the findings of all relevant studies and addresses specific research questions in the research domain. Our research methodology follows the guidelines given by Kitchenham et al. (2009) for conducting the review process, which provide a well-defined approach to identifying gaps in current research and suggesting further investigation.

We describe our research method, research questions, and selection process in Sect. 2, and discuss the results for the research questions in Sect. 3. The synthesis of all the research questions is addressed in Sect. 4. The conclusion and possible future work are discussed in Sect. 5.

Research method

We framed the research questions with PICOC criteria.

Population (P): Student essay and answer evaluation systems.

Intervention (I): Evaluation techniques, data sets, feature extraction methods.

Comparison (C): Comparison of various approaches and results.

Outcomes (O): Estimate of the accuracy of AES systems.

Context (C): NA.

Research questions

To collect and provide research evidence from the available studies in the domain of automated essay grading, we framed the following research questions (RQ):

RQ1 What are the datasets available for research on automated essay grading?

The answer to this question provides a list of the available datasets, their domains, and how to access them. It also provides the number of essays and the corresponding prompts.

RQ2 What are the features extracted for the assessment of essays?

The answer to this question provides insight into the features extracted so far and the libraries used to extract them.

RQ3 Which evaluation metrics are available for measuring the accuracy of algorithms?

The answer provides the evaluation metrics used to measure the accuracy of each Machine Learning approach and identifies the most commonly used measurement techniques.

RQ4 What are the Machine Learning techniques used for automatic essay grading, and how are they implemented?

The answer provides insight into Machine Learning techniques, such as regression models, classification models, and neural networks, for implementing essay grading systems. It also shows the different assessment approaches used in automated essay grading systems.

RQ5 What are the challenges/limitations in the current research?

The answer to this question identifies the limitations of existing research approaches with respect to cohesion, coherence, completeness, and feedback.

Search process

We conducted an automated search of well-known computer science repositories, namely ACL, ACM, IEEE Xplore, Springer, and Science Direct, for the SLR. We considered papers published from 2010 to 2020, as much of the work during these years focused on advanced technologies like deep learning and natural language processing for automated essay grading systems. The availability of free data sets such as Kaggle (2012) and the Cambridge Learner Corpus-First Certificate in English exam (CLC-FCE) by Yannakoudakis et al. (2011) also spurred research in this domain.

Search Strings : We used search strings like “Automated essay grading” OR “Automated essay scoring” OR “short answer scoring systems” OR “essay scoring systems” OR “automatic essay evaluation” and searched on metadata.

Selection criteria

After collecting all relevant documents from the repositories, we prepared selection criteria for the inclusion and exclusion of documents. Inclusion and exclusion criteria make the review more accurate and specific.

Inclusion criteria 1 We work with datasets composed of essays written in English. We excluded essays written in other languages.

Inclusion criteria 2 We included papers that implement an AI approach and excluded traditional methods from the review.

Inclusion criteria 3 The study is on essay scoring systems, so we included only research carried out on text datasets and excluded other datasets such as image or speech.

Exclusion criteria We removed review papers, survey papers, and state-of-the-art papers.

Quality assessment

In addition to the inclusion and exclusion criteria, we assessed each paper with quality assessment questions to ensure the article's quality. We included the documents that clearly explained the approach they used, the result analysis, and the validation.

The quality checklist questions are framed based on the guidelines from Kitchenham et al. (2009). Each quality assessment question was graded as either 1 or 0, so the final score of a study ranges from 0 to 3. The cutoff score is 2 points: papers that scored 2 or 3 points are included in the final evaluation, and papers that scored lower are excluded. We framed the following quality assessment questions for the final study.

Quality Assessment 1: Internal validity.

Quality Assessment 2: External validity.

Quality Assessment 3: Bias.
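The scoring rule described above can be sketched in a few lines. This is only an illustration of the arithmetic (three questions graded 0 or 1, cutoff of 2 points); the function names, the paper identifiers, and the example answers are hypothetical and do not come from the review.

```python
def quality_score(internal_validity: bool, external_validity: bool, bias_ok: bool) -> int:
    """Sum the three quality assessment questions, each graded 1 (yes) or 0 (no)."""
    return int(internal_validity) + int(external_validity) + int(bias_ok)


def is_included(score: int, cutoff: int = 2) -> bool:
    """A paper stays in the review when it reaches the cutoff (2 or 3 points)."""
    return score >= cutoff


# Hypothetical papers and answers, for illustration only.
papers = {
    "paper_a": (True, True, False),   # 2 points
    "paper_b": (True, False, False),  # 1 point
    "paper_c": (True, True, True),    # 3 points
}

included = [name for name, answers in papers.items()
            if is_included(quality_score(*answers))]
print(included)
```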

Two reviewers reviewed each paper to select the final list of documents. We used the Quadratic Weighted Kappa score to measure the final agreement between the two reviewers. The average kappa score is 0.6942, a substantial agreement between the reviewers. The results of the evaluation criteria are shown in Table 1. After quality assessment, the final list of papers for review is shown in Table 2. The complete selection process is shown in Fig. 1. The number of selected papers per year is shown in Fig. 2.

Table 1: Quality assessment analysis

Table 2: Final list of papers

Fig. 1: Selection process

Fig. 2: Year-wise publications

RQ1 What are the datasets available for research on automated essay grading?

Working on a problem in the Machine Learning and deep learning domain requires a considerable amount of data to train the models. To answer this question, we listed all the datasets used for training and testing automated essay grading systems. Yannakoudakis et al. (2011) developed the Cambridge Learner Corpus-First Certificate in English exam (CLC-FCE) corpus, which contains 1244 essays and ten prompts. This corpus evaluates whether a student can write relevant English sentences without grammatical and spelling mistakes. This type of corpus helps to test models built for GRE- and TOEFL-type exams. It gives scores between 1 and 40.

Bailey and Meurers (2008) created a dataset (CREE reading comprehension) for language learners and automated short answer scoring systems. The corpus consists of 566 responses from intermediate students. Mohler and Mihalcea (2009) created a dataset for the computer science domain consisting of 630 responses to data structure assignment questions. The scores range from 0 to 5 and were given by two human raters.

Dzikovska et al. (2012) created a Student Response Analysis (SRA) corpus. It consists of two sub-groups. The BEETLE corpus consists of 56 questions and approximately 3000 student responses in the electrical and electronics domain. The SCIENTSBANK (SemEval-2013) corpus (Dzikovska et al. 2013a; b) consists of 10,000 responses to 197 prompts in various science domains. The student responses are labeled "correct, partially correct incomplete, contradictory, irrelevant, non-domain."

The Kaggle (2012) competition released three types of corpora of essays and short answers for the Automated Student Assessment Prize (ASAP1) (“ https://www.kaggle.com/c/asap-sas/ ”). It has nearly 17,450 essays, with up to 3000 essays for each prompt. It has eight prompts that test 7th to 10th grade US students. Depending on the prompt, scores range from [0–3] to [0–60]. The limitations of these corpora are: (1) the score range differs between prompts, and (2) essays are evaluated using statistical features such as named entity extraction and lexical features of words. ASAP++ is one more dataset from Kaggle. It has six prompts, each with more than 1000 responses, for a total of 10,696 responses from 8th-grade students. Another corpus contains ten prompts from the science and English domains and a total of 17,207 responses. Two human graders evaluated all these responses.
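Because the score range differs between prompts, a model trained across prompts usually needs the scores on a common scale. The following is a minimal sketch of min-max normalization, one common way to handle this; it is an assumption for illustration and not a procedure described in the reviewed papers. The function names are hypothetical, and the two ranges used in the example are the [0–3] and [0–60] ranges mentioned above.

```python
def normalize_score(score: float, low: float, high: float) -> float:
    """Map a raw score from the prompt's range [low, high] onto [0, 1]."""
    if high <= low:
        raise ValueError("high must be greater than low")
    return (score - low) / (high - low)


def denormalize_score(value: float, low: float, high: float) -> int:
    """Map a normalized prediction back to the prompt's integer score range."""
    return round(value * (high - low) + low)


# A score of 30 on a 0-60 prompt lands at the same point as 1.5 on a 0-3 prompt.
print(normalize_score(30, 0, 60))
print(normalize_score(1.5, 0, 3))
print(denormalize_score(0.5, 0, 60))
```

Normalizing per prompt keeps the regression target comparable across prompts; predictions are mapped back to the original range before agreement with human scores is computed.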

Correnti et al. (2013) created a Response-to-Text Assessment (RTA) dataset used to check student writing skills along several dimensions, such as style, mechanics, and organization. The responses were written by students in grades 4–8. Basu et al. (2013) created a power grading dataset with 700 responses to ten different prompts from US immigration exams. It consists entirely of short answers for assessment.

The TOEFL11 corpus (Blanchard et al. 2013) contains 1100 essays evenly distributed over eight prompts. It is used to test the English language skills of candidates taking the TOEFL exam. It scores the language proficiency of a candidate as low, medium, or high.

Granger et al. (2009) built the International Corpus of Learner English (ICLE), a corpus of 3663 essays covering different dimensions. It has 12 prompts with 1003 essays that test the organizational skill of essay writing, and 13 prompts, each with 830 essays, that examine thesis clarity and prompt adherence.

Stab and Gurevych (2014) developed the Argument Annotated Essays (AAE) corpus, which contains 102 essays with 101 prompts taken from the essayforum2 site. It tests the persuasive nature of student essays. The SCIENTSBANK corpus used by Sakaguchi et al. (2015), available on GitHub, contains 9804 answers to 197 questions in 15 science domains. Table 3 lists all datasets related to AES systems.

Table 3: All types of datasets used in automatic scoring systems

RQ2 What are the features extracted for the assessment of essays?

Features play a major role in neural network and other supervised Machine Learning approaches. Automatic essay grading systems score student essays based on different types of features, which play a prominent role in training the models. Based on their syntax and semantics, the features are categorized into three groups: (1) statistical-based features (Contreras et al. 2018; Kumar et al. 2019; Mathias and Bhattacharyya 2018a; b), (2) style-based (syntax) features (Cummins et al. 2016; Darwish and Mohamed 2020; Ke et al. 2019), and (3) content-based features (Dong et al. 2017). A good set of features combined with appropriate models yields better AES systems. The vast majority of researchers use regression models when the features are statistical-based. For neural network models, researchers use both style-based and content-based features. Table 4 lists the features used for essay grading in existing AES systems.

Table 4: Types of features

We studied all the NLP libraries used for feature extraction in the papers, as shown in Fig. 3. NLTK is an NLP tool used to retrieve statistical features such as POS, word count, and sentence count. With NLTK alone, the essay's semantic features can be missed. To capture semantic features, Word2Vec (Mikolov et al. 2013) and GloVe (Pennington et al. 2014) are the most used libraries. Some systems train the model directly with word embeddings to find the score. Fig. 4 shows that non-content-based feature extraction is more common than content-based extraction.

Fig. 3: Usage of tools

Fig. 4: Number of papers on content-based features
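To make the notion of statistical features concrete, the sketch below computes a few of them (word count, sentence count, average word and sentence length) with the Python standard library only. It is a simplified stand-in for what toolkits such as NLTK provide: the regular-expression tokenization is an assumption made here for self-containment, and the function name and sample text are hypothetical.

```python
import re


def statistical_features(essay: str) -> dict:
    """Compute simple surface statistics of an essay.

    Sentences are split on runs of '.', '!' or '?', and words are runs of
    letters or apostrophes. This is a rough approximation of a real tokenizer.
    """
    sentences = [s for s in re.split(r"[.!?]+", essay) if s.strip()]
    words = re.findall(r"[A-Za-z']+", essay)
    word_count = len(words)
    sentence_count = len(sentences)
    return {
        "word_count": word_count,
        "sentence_count": sentence_count,
        "avg_word_length": sum(len(w) for w in words) / word_count if word_count else 0.0,
        "avg_sentence_length": word_count / sentence_count if sentence_count else 0.0,
    }


features = statistical_features("Essays vary. Some are short!")
print(features)
```

Features of this kind say nothing about meaning, which is why the semantic representations mentioned above are needed for content-based scoring.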

RQ3 Which evaluation metrics are available for measuring the accuracy of algorithms?

Most AES systems use three evaluation metrics: (1) quadratic weighted kappa (QWK), (2) Mean Absolute Error (MAE), and (3) the Pearson Correlation Coefficient (PCC) (Shehab et al. 2016). QWK measures the agreement between the human score and the system score; a value of 1 indicates perfect agreement, 0 indicates agreement no better than chance, and negative values indicate agreement worse than chance. MAE is the average absolute difference between the human-rated score and the system-generated score. The mean squared error (MSE) is the average squared difference between the human-rated and system-generated scores; it is never negative. PCC measures the linear correlation between two variables and ranges from −1 to 1. A value of 0 means that the human-rated and system scores are not linearly related, a value of 1 means that the two scores increase together, and a value of −1 indicates a negative relationship between the two scores.
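The metrics above can be written out directly. The following is a minimal standard-library sketch using the textbook definitions of QWK, MAE, MSE, and PCC; the function names and the toy score lists are assumptions for illustration, and the reviewed systems may rely on library implementations instead.

```python
import math


def quadratic_weighted_kappa(human, system, min_rating, max_rating):
    """QWK = 1 - sum(w * observed) / sum(w * expected), with w = (i - j)^2 / (n - 1)^2.

    Assumes integer ratings, at least two rating categories, and that the
    raters do not both assign the same single rating to every essay.
    """
    n = max_rating - min_rating + 1
    observed = [[0] * n for _ in range(n)]
    for h, s in zip(human, system):
        observed[h - min_rating][s - min_rating] += 1
    total = len(human)
    hist_h = [sum(row) for row in observed]
    hist_s = [sum(observed[i][j] for i in range(n)) for j in range(n)]
    numerator = denominator = 0.0
    for i in range(n):
        for j in range(n):
            weight = (i - j) ** 2 / (n - 1) ** 2
            numerator += weight * observed[i][j]
            denominator += weight * hist_h[i] * hist_s[j] / total
    return 1.0 - numerator / denominator


def mean_absolute_error(human, system):
    return sum(abs(h - s) for h, s in zip(human, system)) / len(human)


def mean_squared_error(human, system):
    return sum((h - s) ** 2 for h, s in zip(human, system)) / len(human)


def pearson_correlation(human, system):
    n = len(human)
    mean_h, mean_s = sum(human) / n, sum(system) / n
    cov = sum((h - mean_h) * (s - mean_s) for h, s in zip(human, system))
    var_h = sum((h - mean_h) ** 2 for h in human)
    var_s = sum((s - mean_s) ** 2 for s in system)
    return cov / math.sqrt(var_h * var_s)


human = [0, 1, 2, 3]          # toy human scores on a 0-3 scale
reversed_scores = [3, 2, 1, 0]  # a system that inverts the ranking
print(quadratic_weighted_kappa(human, human, 0, 3))
print(quadratic_weighted_kappa(human, reversed_scores, 0, 3))
print(pearson_correlation(human, reversed_scores))
```

The reversed example shows why QWK and PCC are not confined to the range 0 to 1: a system that systematically inverts the human ranking receives a negative value on both.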

RQ4 What are the Machine Learning techniques being used for automatic essay grading, and how are they implemented?

After scrutinizing all documents, we categorized the techniques used in automated essay grading systems into four groups: (1) regression techniques, (2) classification models, (3) neural networks, and (4) ontology-based approaches.

All the AES systems developed in the last ten years employ supervised learning techniques. Researchers using supervised methods view AES as either a regression or a classification task. The goal of the regression task is to predict the score of an essay. The goal of the classification task is to classify an essay by its relevance to the question's topic (low, medium, or high). Over the last three years, most AES systems have been built on neural networks.

Regression based models

Mohler and Mihalcea (2009) proposed text-to-text semantic similarity to assign a score to student essays. They used two kinds of text similarity measures: knowledge-based measures and corpus-based measures. They evaluated eight knowledge-based measures. Shortest-path similarity is determined by the length of the shortest path between two concepts. Leacock & Chodorow find the similarity based on the length of the shortest path between two concepts using node-counting. The Lesk similarity finds the overlap between the corresponding definitions, and the Wu & Palmer algorithm finds the similarity based on the depth of two given concepts in the WordNet taxonomy. Resnik, Lin, Jiang & Conrath, and Hirst & St-Onge find the similarity based on different parameters such as the concept, probability, normalization factor, and lexical chains. The corpus-based measures are LSA trained on the BNC, LSA trained on Wikipedia, and ESA trained on Wikipedia; the LSA model trained on Wikipedia has broad domain knowledge. Among all similarity measures, LSA Wikipedia achieved the highest correlation with human scores. However, these similarity measures do not use more advanced NLP concepts. These pre-2010 models serve as basic concept models on which later research on automated essay grading builds, with updated algorithms based on neural networks and content-based features.
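As an illustration of how a knowledge-based measure works, the sketch below computes the Wu & Palmer similarity, 2 · depth(LCS) / (depth(a) + depth(b)), where LCS is the deepest common ancestor of the two concepts. The small taxonomy is a hypothetical stand-in for WordNet, the root is assigned depth 1, and all names are assumptions made for this example.

```python
# Hypothetical toy taxonomy (child -> parent); it stands in for WordNet here.
PARENT = {
    "entity": None,
    "animal": "entity",
    "plant": "entity",
    "dog": "animal",
    "cat": "animal",
    "tree": "plant",
}


def path_to_root(concept: str) -> list:
    """Return the chain of concepts from the given concept up to the root."""
    path = []
    while concept is not None:
        path.append(concept)
        concept = PARENT[concept]
    return path


def depth(concept: str) -> int:
    """Depth counted in nodes, so the root has depth 1."""
    return len(path_to_root(concept))


def lowest_common_subsumer(a: str, b: str) -> str:
    """Deepest concept that is an ancestor of (or equal to) both a and b."""
    ancestors_a = set(path_to_root(a))
    for node in path_to_root(b):  # walks upward, so the first hit is the deepest
        if node in ancestors_a:
            return node
    raise ValueError("concepts do not share a root")


def wu_palmer(a: str, b: str) -> float:
    lcs = lowest_common_subsumer(a, b)
    return 2 * depth(lcs) / (depth(a) + depth(b))


print(wu_palmer("dog", "cat"))   # share the ancestor "animal"
print(wu_palmer("dog", "tree"))  # share only the root "entity"
```

Concepts that meet deep in the taxonomy score higher than concepts that meet only at the root, which is the intuition behind using such measures to compare words in a student response with words in a reference answer.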

Adamson et al. (2014) proposed a statistical approach to automatic essay grading. They retrieved features such as POS, character count, word count, sentence count, misspelled words, and n-gram representations of words to prepare an essay vector. They formed a matrix from these vectors and applied LSA to it to score each essay. Because it is a statistical approach, it does not consider the semantics of the essay. The accuracy they reported, comparing the human rater's score with the system's, is 0.532.

Cummins et al. (2016) proposed a Timed Aggregate Perceptron vector model to rank all the essays and later converted the ranking algorithm to predict essay scores. The model was trained with features such as word unigrams, bigrams, POS, essay length, grammatical relations, maximum word length, and sentence length. It is a multi-task learning approach that both ranks the essays and predicts a score for each essay. The performance, evaluated through QWK, is 0.69, a substantial agreement between the human rater and the system.

Sultan et al. (2016) proposed a Ridge regression model for short answer scoring with Question Demoting. Question Demoting is a new concept included in the final assessment to eliminate duplicate words from the response. The extracted features are as follows. Text Similarity is the similarity between the student response and the reference answer. Question Demoting is the number of repeats in a student response. Term weights were assigned using inverse document frequency. Sentence Length Ratio, based on the number of words in the student response, is another feature. A Ridge regression model trained on these features achieved an accuracy of 0.887.

Contreras et al. (2018) proposed an ontology-based text mining model that scores essays in phases. In phase I, they generated ontologies with OntoGen and SVM to find the concepts and similarity in the essay. In phase II, they retrieved features from the ontologies, such as essay length, word counts, correctness, vocabulary, types of words used, and domain information. After retrieving the statistical data, they used a linear regression model to find the score of the essay. The average accuracy score is 0.5.

Darwish and Mohamed (2020) proposed a fusion of fuzzy ontology with LSA. They retrieve two types of features: syntax features and semantic features. For the syntax features, they perform lexical analysis with tokens and construct a parse tree. If the parse tree is broken, the essay is inconsistent, and a separate grade is assigned to the essay for its syntax features. The semantic features include similarity analysis and spatial data analysis. Similarity analysis finds duplicate sentences; spatial data analysis finds the Euclidean distance between the center and part. Later, they combine the syntax feature and morphological feature scores into the final score. The accuracy they achieved with the multiple linear regression model is 0.77, mostly on statistical features.

Süzen Neslihan et al. ( 2020 ) proposed a text mining approach for short answer grading. First, they compare model answers with the student response by calculating the distance between the two sentences. From this comparison, they determine the completeness of the response and provide feedback. In this approach, the model vocabulary plays a vital role in grading: it is used to assign a grade to the student's response and to provide feedback. The correlation between the student answer and the model answer is 0.81.

Classification based Models

Persing and Ng ( 2013 ) used a support vector machine to score the essay. The features extracted to train the model are POS, n-grams, and semantic text, and keywords identified from the essay are used to give the final score.

Sakaguchi et al. ( 2015 ) proposed two methods: response-based and reference-based. In response-based scoring, the extracted features are response length, an n-gram model, and syntactic elements, which are used to train a support vector regression model. In reference-based scoring, features such as sentence similarity computed with word2vec are used: the cosine similarity between the sentences serves as the final score of the response. The scores were first computed individually, and the two were later combined to produce a final score. Combining the scores gave a remarkable increase in performance.
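The reference-based idea can be sketched with cosine similarity between averaged word vectors. This is a simplified illustration: the three-dimensional `TOY_VECTORS` table is hypothetical and stands in for pre-trained word2vec embeddings, and averaging word vectors is an assumed way of forming a sentence vector.

```python
import math

# Hypothetical toy vectors; a real system would load pre-trained word2vec embeddings.
TOY_VECTORS = {
    "plants": [1.0, 0.0, 0.0],
    "light": [0.0, 1.0, 0.0],
    "energy": [0.0, 0.0, 1.0],
    "sun": [0.0, 0.9, 0.1],
}

def sentence_vector(words, vectors):
    """Average the vectors of the known words; None if no word is known."""
    known = [vectors[w] for w in words if w in vectors]
    if not known:
        return None
    dim = len(known[0])
    return [sum(v[k] for v in known) / len(known) for k in range(dim)]

def cosine(u, v):
    """Cosine similarity of two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)

def reference_based_score(response_words, reference_words, vectors):
    """Similarity between a response and a reference answer, in [-1, 1]."""
    r = sentence_vector(response_words, vectors)
    g = sentence_vector(reference_words, vectors)
    if r is None or g is None:
        return 0.0
    return cosine(r, g)
```

A response that shares related vocabulary with the reference (for example "sun" versus "light") still receives partial credit, which a plain word-overlap measure would miss.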

Mathias and Bhattacharyya ( 2018a ; b ) proposed the Automated Essay Grading Dataset with Essay Attribute Scores. Feature selection depends on the essay type, and the common attributes are Content, Organization, Word Choice, Sentence Fluency, and Conventions. In this system, each attribute is scored individually, with the strength of each attribute identified. They used a random forest classifier to assign scores to individual attributes. The QWK they obtained is 0.74 for prompt 1 of the ASAS dataset ( https://www.kaggle.com/c/asap-sas/ ).

Ke et al. ( 2019 ) used a support vector machine to find the response score. This method uses features such as Agreeability, Specificity, Clarity, Relevance to prompt, Conciseness, Eloquence, Confidence, Direction of development, Justification of opinion, and Justification of importance. The individual parameter scores are obtained first and later combined to give a final response score. The features are also used in a neural network to determine whether a sentence is relevant to the topic.

Salim et al. ( 2019 ) proposed an XGBoost machine learning classifier to assess essays. The algorithm was trained on features such as word count, POS, parse tree depth, and coherence in the articles with sentence similarity percentage; both cohesion and coherence are considered for training. They implemented K-fold cross-validation, and the average accuracy after the validations is 68.12.
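K-fold cross-validation, as used above to report an average accuracy, can be sketched as follows. The helper names are illustrative, and the split is a plain contiguous partition without shuffling, which is an assumption made for brevity.

```python
def k_fold_indices(n_items, k):
    """Yield (train_indices, test_indices) for k contiguous folds over n_items samples."""
    if not 2 <= k <= n_items:
        raise ValueError("k must be between 2 and the number of items")
    # Distribute the remainder so that fold sizes differ by at most one.
    fold_sizes = [n_items // k + (1 if i < n_items % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n_items))
        yield train, test
        start += size

def mean_accuracy(fold_accuracies):
    """Average of the per-fold accuracies, which is the figure typically reported."""
    return sum(fold_accuracies) / len(fold_accuracies)
```

Each essay appears in exactly one test fold, so the averaged accuracy reflects performance on essays the model did not see during training.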

Neural network models

Shehab et al. ( 2016 ) proposed a neural network method that used learning vector quantization to train on human-scored essays. After training, the network can provide a score to ungraded essays. First, the essay is spell-checked, and then preprocessing steps such as document tokenization, stop-word removal, and stemming are applied before it is submitted to the neural network. Finally, the model provides feedback on whether the essay is relevant to the topic. The correlation coefficient between human rater and system score is 0.7665.
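The preprocessing steps named above (tokenization, stop-word removal, stemming) can be sketched as below. The stop-word list and the suffix-stripping stemmer are deliberately tiny, hypothetical stand-ins; a production system would typically use a full stop-word list and an established stemmer such as Porter's.

```python
import re

# Hypothetical, abbreviated stop-word list for illustration only.
STOP_WORDS = {"the", "a", "an", "is", "are", "of", "and", "to", "in"}

# Suffixes are tried in order, longest first.
SUFFIXES = ("ing", "ed", "es", "s")

def naive_stem(word):
    """Strip one common suffix if at least three characters remain."""
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word

def preprocess(essay):
    """Tokenize, drop stop words, and stem the remaining tokens."""
    words = re.findall(r"[a-z]+", essay.lower())
    return [naive_stem(w) for w in words if w not in STOP_WORDS]

print(preprocess("The students are writing essays"))
# prints ['student', 'writ', 'essay']
```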

Kopparapu and De ( 2016 ) proposed the Automatic Ranking of Essays using Structural and Semantic Features. This approach constructs a super-essay from all the responses, and each student essay is then ranked against the super-essay. The derived structural and semantic features help to obtain the scores. For each paragraph, 15 structural features, such as the average number of sentences, the average length of sentences, and the counts of words, nouns, verbs, adjectives, etc., are used to obtain a syntactic score. A similarity score is used as the semantic feature to calculate the overall score.
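A few structural features of the kind listed above can be computed with simple text statistics. This sketch covers only four illustrative features rather than the 15 used in the study, and the punctuation-based sentence splitter is an assumption; counts of nouns, verbs, and adjectives would additionally require a POS tagger.

```python
import re

def structural_features(text):
    """Return a small dictionary of surface statistics for an essay or paragraph."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    sentence_count = len(sentences)
    word_count = len(words)
    return {
        "sentence_count": sentence_count,
        "word_count": word_count,
        "avg_sentence_length": word_count / sentence_count if sentence_count else 0.0,
        "avg_word_length": sum(len(w) for w in words) / word_count if word_count else 0.0,
    }
```

For the text "Cats sleep. Dogs bark loudly!" this yields two sentences, five words, and an average sentence length of 2.5 words.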

Dong and Zhang ( 2016 ) proposed a hierarchical CNN model. The model uses word embeddings as the first layer to represent the words. The second layer is a word convolution layer with max-pooling to find word vectors. The next layer is a sentence-level convolution layer with max-pooling to find the sentence's content and synonyms. A fully connected dense layer produces an output score for an essay. The hierarchical CNN model resulted in an average QWK of 0.754.
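The convolution-plus-max-pooling step that recurs in these architectures can be sketched in plain Python for a single filter. This is a didactic simplification: real models learn many filters with a deep learning framework, and the ReLU activation and the hand-set kernel values here are assumptions for illustration.

```python
def conv1d_max_pool(vectors, kernel, bias=0.0):
    """Slide one filter over consecutive windows of word vectors and keep the largest activation.

    vectors: list of word vectors (one list of floats per word)
    kernel:  list of weight vectors, one per position in the window
    """
    window = len(kernel)
    if len(vectors) < window:
        raise ValueError("the sequence is shorter than the filter window")
    activations = []
    for start in range(len(vectors) - window + 1):
        total = bias
        for offset in range(window):
            total += sum(w * x for w, x in zip(kernel[offset], vectors[start + offset]))
        activations.append(max(0.0, total))  # ReLU non-linearity
    return max(activations)  # max-pooling over all window positions

word_vectors = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
bigram_filter = [[1.0, 0.0], [0.0, 1.0]]  # responds to dimension 0 followed by dimension 1
feature = conv1d_max_pool(word_vectors, bigram_filter)
```

In a hierarchical model, the same operation is applied first over words to obtain sentence representations and then over sentences to obtain an essay representation.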

Taghipour and Ng ( 2016 ) proposed a first neural approach for essay scoring, in which convolutional and recurrent neural network concepts help in scoring an essay. The network uses a lookup table with the one-hot representation of the word vector of an essay. The final network model with LSTM resulted in an average QWK of 0.708.

Dong et al. ( 2017 ) proposed an attention-based scoring system with CNN + LSTM to score an essay. For the CNN, the input parameters were character embeddings and word embeddings, obtained with NLTK, and the CNN has attention pooling layers. Its output is a sentence vector, which provides the sentence weight. After the CNN, an LSTM layer with an attention pooling layer produces the final score of the responses. The average QWK score is 0.764.
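Attention pooling replaces a plain average or maximum with a weighted sum whose weights are computed from the inputs themselves. The sketch below uses simple dot-product scoring against a `query` vector, which is an assumed simplification; the cited model learns its attention parameters during training.

```python
import math

def attention_pool(vectors, query):
    """Return (pooled_vector, weights), where weights are a softmax over dot-product scores."""
    scores = [sum(x * q for x, q in zip(v, query)) for v in vectors]
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]  # subtract the maximum for numerical stability
    total = sum(exps)
    weights = [e / total for e in exps]
    dim = len(vectors[0])
    pooled = [sum(w * v[k] for w, v in zip(weights, vectors)) for k in range(dim)]
    return pooled, weights
```

With a zero query every input receives the same weight and the result is an ordinary average; a query aligned with one input concentrates nearly all the weight on it.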

Riordan et al. ( 2017 ) proposed a neural network with CNN and LSTM layers. Word embeddings are given as input to the neural network. An LSTM network layer retrieves the window features and delivers them to the aggregation layer. The aggregation layer is a superficial layer that takes a correct window of words and passes it to successive layers to predict the answer's score. The neural network resulted in a QWK of 0.90.

Zhao et al. ( 2017 ) proposed a new concept called the Memory-Augmented Neural Network with four layers: an input representation layer, a memory addressing layer, a memory reading layer, and an output layer. The input layer represents all essays in vector form based on essay length. After conversion to word vectors, the memory addressing layer takes a sample of the essay and weighs all the terms. The memory reading layer takes the input from the memory addressing segment and finds the content to finalize the score. Finally, the output layer provides the final score of the essay. The accuracy of essay scores is 0.78, which is far better than the LSTM neural network.

Mathias and Bhattacharyya ( 2018a ; b ) proposed deep learning networks using LSTM with a CNN layer and GloVe pre-trained word embeddings. For this, they retrieved features such as the sentence count of essays, word count per sentence, number of OOVs in the sentence, language model score, and the text's perplexity. The network predicted the goodness score of each essay: the higher the goodness score, the higher the rank, and vice versa.

Nguyen and Dery ( 2016 ) proposed Neural Networks for Automated Essay Grading. This method uses a single-layer bi-directional LSTM that accepts word vectors as input. GloVe vectors used in this method resulted in an accuracy of 90%.

Ruseti et al. ( 2018 ) proposed a recurrent neural network that is capable of memorizing the text and generating a summary of an essay. A Bi-GRU network with a max-pooling layer is built on the word embeddings of each document. It scores the essay by comparing it with a summary of the essay from another Bi-GRU network. The result obtained an accuracy of 0.55.

Wang et al. ( 2018a ; b ) proposed an automatic scoring system with a bi-LSTM recurrent neural network model and retrieved the features using the word2vec technique. This method generated word embeddings from the essay words using the skip-gram model. The word embeddings are later used to train the neural network to find the final score. The softmax layer in the LSTM obtains the importance of each word. This method achieved a QWK score of 0.83.
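The skip-gram model learns embeddings by predicting the words surrounding each center word. The sketch below shows only how the (center, context) training pairs are generated; the function name and the default window size are illustrative, and the subsequent embedding training is omitted.

```python
def skip_gram_pairs(tokens, window=2):
    """Return (center, context) pairs for every token and its neighbors within the window."""
    pairs = []
    for i, center in enumerate(tokens):
        lo = max(0, i - window)
        hi = min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                pairs.append((center, tokens[j]))
    return pairs

print(skip_gram_pairs(["essays", "need", "evidence"], window=1))
# prints [('essays', 'need'), ('need', 'essays'), ('need', 'evidence'), ('evidence', 'need')]
```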

Dasgupta et al. ( 2018 ) proposed a technique for essay scoring that augments textual qualitative features. It extracted three types of features associated with a text document: linguistic, cognitive, and psychological. The linguistic features are Part of Speech (POS), Universal Dependency relations, Structural Well-formedness, Lexical Diversity, Sentence Cohesion, Causality, and Informativeness of the text. The psychological features are derived from the Linguistic Inquiry and Word Count (LIWC) tool. They implemented a convolutional recurrent neural network that takes as input word embeddings and sentence vectors retrieved from GloVe word vectors. The second layer is a convolution layer to find local features. The next layer is a recurrent neural network (LSTM) to find correspondences within the text. This method resulted in an average QWK of 0.764.

Liang et al. ( 2018 ) proposed a symmetrical neural network AES model with Bi-LSTM. They extract features from sample essays and student essays and prepare an embedding layer as input. The embedding layer output is transferred to the convolution layer, from which the LSTM is trained. Here the LSTM model has a self-feature extraction layer, which finds the essay's coherence. The average QWK score of SBLSTMA is 0.801.

Liu et al. ( 2019 ) proposed two-stage learning. In the first stage, they assign a score based on semantic data from the essay. The second-stage scoring is based on handcrafted features such as grammar correction, essay length, and number of sentences. The average score of the two stages is 0.709.

Pedro Uria Rodriguez et al. ( 2019 ) proposed a sequence-to-sequence learning model for automatic essay scoring. They used BERT (Bidirectional Encoder Representations from Transformers), which extracts the semantics of a sentence from both directions, and the XLNet sequence-to-sequence learning model to extract features such as the next sentence in an essay. With these pre-trained models, they captured coherence from the essay to give the final score. The average QWK score of the model is 75.5.

Xia et al. ( 2019 ) proposed a two-layer bi-directional LSTM neural network for the scoring of essays. The features were extracted with word2vec to train the LSTM, and the model achieved an average QWK of 0.870.

Kumar et al. ( 2019 ) proposed AutoSAS for short answer scoring. It used pre-trained Word2Vec and Doc2Vec models, trained on the Google News corpus and a Wikipedia dump, respectively, to retrieve the features. First, they tagged every word with its POS and found weighted words from the response. It also computed prompt overlap to observe how relevant the answer is to the topic, and they defined lexical overlaps such as noun overlap, argument overlap, and content overlap. This method used statistical features such as word frequency, difficulty, diversity, number of unique words in each response, type-token ratio, statistics of the sentence, word length, and logical operator-based features. A random forest model is trained on the dataset, which contains sample responses with their associated scores. The model retrieves features from both graded and ungraded short answers along with their questions. The QWK of AutoSAS is 0.78. It works on any topic, such as Science, Arts, Biology, and English.
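Two of the statistical features mentioned above, type-token ratio and prompt overlap, can be sketched as follows. The definitions are common textbook forms and are assumptions here; in particular, prompt overlap is taken as the share of distinct response words that also occur in the prompt, which may differ from the measure used in AutoSAS.

```python
import re

def words(text):
    """Lowercase word tokens."""
    return re.findall(r"[a-z']+", text.lower())

def type_token_ratio(response):
    """Distinct words divided by total words, a simple lexical diversity measure."""
    w = words(response)
    return len(set(w)) / len(w) if w else 0.0

def prompt_overlap(response, prompt):
    """Assumed definition: fraction of distinct response words that also occur in the prompt."""
    response_words = set(words(response))
    if not response_words:
        return 0.0
    return len(response_words & set(words(prompt))) / len(response_words)
```

Features like these are cheap to compute and can be concatenated with embedding-based features before training a model such as a random forest.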

Jiaqi Lun et al. ( 2020 ) proposed automatic short answer scoring with BERT. This approach compares student responses with a reference answer and assigns scores. Data augmentation is done with a neural network, and with one correct answer from the dataset, the remaining responses are classified as correct or incorrect.

Zhu and Sun ( 2020 ) proposed a multimodal machine learning approach for automated essay scoring. First, they compute a grammar score with the spaCy library, along with numerical counts such as the number of words and sentences, using the same library. With this input, they trained single and Bi-LSTM neural networks to find the final score. For the LSTM model, they prepared sentence vectors with GloVe and word embeddings with NLTK. The Bi-LSTM checks each sentence in both directions to find semantics from the essay. The average QWK score with multiple models is 0.70.

Ontology based approach

Mohler et al. ( 2011 ) proposed a graph-based method to find semantic similarity in short answer scoring. For the ranking of answers, they used the support vector regression model. The bag of words is the main feature extracted in the system.

Ramachandran et al. ( 2015 ) also proposed a graph-based approach to find lexical-based semantics. The identified phrase patterns and text patterns are the features used to train a random forest regression model to score the essays. The QWK of the model is 0.78.

Zupanc et al. ( 2017 ) proposed sentence similarity networks to find the essay's score. Ajetunmobi and Daramola ( 2017 ) recommended an ontology-based information extraction approach and domain-based ontology to find the score.

Speech response scoring