New review says ineffective ‘learning styles’ theory persists in education around the world

A new review by Swansea University reveals there is widespread belief around the world in a teaching method that is not only ineffective but may actually be harmful to learners.

For decades educators have been advised to match their teaching to the supposed ‘learning styles’ of students. There are more than 70 different classification systems, but the most well-known (VARK) sees individuals being categorised as visual, auditory, read-write or kinesthetic learners.

However, a new paper by Professor Phil Newton, of Swansea University Medical School, highlights that this ineffective approach is still believed by teachers and calls for a more evidence-based approach to teacher-training.

He explained that various reviews, carried out since the mid-2000s, have concluded there is no evidence that matching instructional methods to a student’s supposed learning style improves learning.

Professor Newton said: “This apparent widespread belief in an ineffective teaching method that is also potentially harmful has caused concern among the education community.”

His review, carried out with Swansea University student Atharva Salvi, found that a substantial majority of educators, almost 90 per cent, from samples all over the world and in all types of education, reported that they believe in the efficacy of learning styles.

But the study points out that a learner could be at risk of being pigeonholed and of losing motivation as a result.

He said: “For example, a student categorized as an auditory learner may end up thinking there is no point in pursuing studies in visual subjects such as art, or written subjects like journalism, and then be demotivated during those classes.”

An additional concern is the creation of unwarranted and unrealistic expectations among educators.

Professor Newton said: “If students do not achieve the academic grades they expect, or do not enjoy their learning; if students are not taught in a way that matches their supposed learning style, then they may attribute these negative experiences to a lack of matching and be further demotivated for future study.”

He added: “Spending time trying to match a student to a learning style could be a waste of valuable time and resources.”

The paper points out that there are many other teaching methods which demonstrably promote learning and are simple and easy to learn, such as use of practice tests, or the spacing of instruction, and it would be better to focus on promoting them instead.

In the paper, published in the journal Frontiers in Education, the researchers detail how they conducted a review of relevant studies to see if the data does suggest there is confusion.

They found 89.1 per cent of 15,045 educators believed that individuals learn better when they receive information in their preferred learning style.
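As a rough check on what that percentage means in headcount terms, the arithmetic can be sketched as below. This is only an illustration: the function names are hypothetical, and because the published figure is rounded to one decimal place, the exact number of respondents can only be bounded, not recovered.

```python
def implied_count(percent_tenths, total):
    """Closest whole number of respondents for a percentage given in tenths.

    percent_tenths=891 stands for 89.1 per cent (the figure quoted above).
    """
    return round(percent_tenths * total / 1000)


def count_bounds(percent_tenths, total):
    """Smallest and largest head counts that would still round to the
    reported percentage (assumes ordinary rounding to one decimal place)."""
    # Integer arithmetic avoids floating-point surprises at the boundaries.
    low = -(-(percent_tenths * 10 - 5) * total // 10000)   # ceiling division
    high = (percent_tenths * 10 + 5) * total // 10000      # floor division
    return low, high


believers = implied_count(891, 15045)
low, high = count_bounds(891, 15045)
print(f"about {believers} of 15045 educators (between {low} and {high})")
```

On these numbers, the reported 89.1 per cent corresponds to roughly 13,405 of the 15,045 educators sampled.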

He said: “Perhaps the most concerning finding is that there is no evidence that this belief is decreasing.”

Professor Newton suggests history is repeating itself: “If educators are themselves screened using learning styles instruments as students then it seems reasonable that they would then enter teacher-training with a view that the use of learning styles is a good thing, and so the cycle of belief would be self-perpetuating.”

The study concludes that belief in matching instruction to learning styles remains high.

He said: “There is no sign that this is declining, despite many years of work, in the academic literature and popular press, highlighting this lack of evidence.”

However, he also cautioned against over-reaction to the data, much of which was derived from studies where it may not be clear that educators were asked about specific learning styles instruments, rather than individual preferences for learning or other interpretations of the theory.

“To understand this fully, future work should focus on the objective behaviour of educators. How many of us actually match instruction to the individual learning styles of students, and what are the consequences when we do? Should we instead focus on promoting effective approaches rather than debunking myths?”

- Wednesday 6 January 2021 12:45 GMT

- Kathy Thomas

- 01792 604290

Learning Styles Debunked: There is No Evidence Supporting Auditory and Visual Learning, Psychologists Say

Are you a verbal learner or a visual learner? Chances are, you’ve pegged yourself or your children as either one or the other and rely on study techniques that suit your individual learning needs. And you’re not alone: for more than 30 years, the notion that teaching methods should match a student’s particular learning style has exerted a powerful influence on education. The long-standing popularity of the learning styles movement has in turn created a thriving commercial market amongst researchers, educators, and the general public.

The wide appeal of the idea that some students will learn better when material is presented visually and that others will learn better when the material is presented verbally, or even in some other way, is evident in the vast number of learning-style tests and teaching guides available for purchase and used in schools. But does scientific research really support the existence of different learning styles, or the hypothesis that people learn better when taught in a way that matches their own unique style?

Unfortunately, the answer is no, according to a major report published in Psychological Science in the Public Interest, a journal of the Association for Psychological Science. The report was authored by a team of eminent researchers in the psychology of learning: Hal Pashler (University of California, San Diego), Mark McDaniel (Washington University in St. Louis), Doug Rohrer (University of South Florida), and Robert Bjork (University of California, Los Angeles). It reviews the existing literature on learning styles and finds that although numerous studies have purported to show the existence of different kinds of learners (such as “auditory learners” and “visual learners”), those studies have not used the type of randomized research designs that would make their findings credible.

Nearly all of the studies that purport to provide evidence for learning styles fail to satisfy key criteria for scientific validity. Any experiment designed to test the learning-styles hypothesis would need to classify learners into categories and then randomly assign the learners to use one of several different learning methods, and the participants would need to take the same test at the end of the experiment. If there is truth to the idea that learning styles and teaching styles should mesh, then learners with a given style, say visual-spatial, should learn better with instruction that meshes with that style. The authors found that of the very large number of studies claiming to support the learning-styles hypothesis, very few used this type of research design. Of those that did, some provided evidence flatly contradictory to this meshing hypothesis, and the few findings in line with the meshing idea did not assess popular learning-style schemes.
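The design described above (classify learners, assign them to a method at random, give everyone the same test, then look for a matching advantage) can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the report's own procedure: the function names, the two-category scheme and any scores passed in are hypothetical, and the check is purely descriptive, with no significance testing.

```python
import random
from collections import defaultdict
from statistics import mean


def assign_randomly(learner_styles, methods, seed=0):
    """Pair each classified learner with a method drawn at random.

    The method is chosen independently of the learner's style, which is
    the key requirement of the design.
    """
    rng = random.Random(seed)
    return [(style, rng.choice(methods)) for style in learner_styles]


def cell_means(records):
    """Mean score on the common final test for each (style, method) cell."""
    cells = defaultdict(list)
    for style, method, score in records:
        cells[(style, method)].append(score)
    return {cell: mean(scores) for cell, scores in cells.items()}


def meshing_pattern(records):
    """True only if every style scores best under its own matching method.

    Anything else (one method best for everyone, or a reversed pattern)
    is not in line with the meshing hypothesis.
    """
    means = cell_means(records)
    for style in {s for s, _ in means}:
        own = means.get((style, style))
        others = [m for (s, method), m in means.items()
                  if s == style and method != style]
        if own is None or not others or own <= max(others):
            return False
    return True
```

Note that a method that is simply better for everyone does not count: if visual instruction beats verbal instruction for both groups, `meshing_pattern` returns `False`, because the hypothesis requires the best method to differ by learner type.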

No fewer than 71 different models of learning styles have been proposed over the years. Most have no doubt been created with students’ best interests in mind, and to create more suitable environments for learning. But psychological research has not found that people learn differently, at least not in the ways learning-styles proponents claim. Given the lack of scientific evidence, the authors argue that the currently widespread use of learning-style tests and teaching tools is a wasteful use of limited educational resources.

Could you please direct me to the source material for this? Thank you.

I found the study here: https://www.psychologicalscience.org/journals/pspi/PSPI_9_3.pdf

The study is here: http://www.psychologicalscience.org/journals/pspi/PSPI_9_3.pdf

I doubt a valid study could be created. There are too many variables. I expect we learn by a combination of all inputs. How could a study overcome the issues of quality of the teachers’ presentation, quality of visuals used compared to quality of auditory materials?

Larry, speaking as a statistics student, I’ll propose an answer to the issue of how a “valid study” can be designed. Feel free to call me out if there is an inherent flaw with my proposal.

I will be referring to American students specifically since this is an issue debated for the American school system. I assume the author is talking about the same thing, but I’ll admit I don’t know if this teaching idea is prevalent in other countries. For the sake of this argument, it really doesn’t matter anyways as this variable is easily changed.

The sample is the most difficult part here. I expect there to be a lot of chosen students whose parents do not wish their children to be a part of the study for some reason or another. It would also have to be conducted locally, or over a short period of time, though doing it locally would have a greater chance of acceptance among chosen participants. The greatest effort should be made to account for demographics, but, again, this would be difficult. (^Not a great way to start, apologies, but I’m sure a seasoned statistician could come up with the solution that I’m afraid I can’t.)

Now, you have your grouping of students, say 1,500, a reasonable number that would provide a relatively small margin of error. Split these students into groups of 500, and assign them to 25-student-per-teacher classrooms that are each taught only through auditory, visual, or “hands-on” learning. The students are specifically instructed not to take notes. For this example, let’s say they are learning the properties of liquids. The visual classes are taught through packets that each student is given. The “hands-on” class is given a sheet instructing them how to perform a lab and giving them blanks to fill in. Obviously, for this one, a teacher will tell them how to properly handle equipment, and said equipment will be protected against the children hurting themselves inadvertently (i.e., no Bunsen burners, but maybe a low-heat burner with students only able to turn it on/off and not touch the hot surface). The hearing group will be given a lecture on the subject, with questions being allowed afterward. After a few days learning this way, every student in every class would be given the same test. Then they would all switch, this time learning about the properties of a solid through the same methods, before being tested on it. Lastly, they would switch to learning and testing on the properties of a gas. As a control, through the same selection process, 500 students could be selected to be taught using all three of the described methods in the same timeframe. That is, instead of a packet, a lecture, or a lab, they could receive a lecture while being shown a PowerPoint, followed by a lab.

To prevent previous learning bias, I would suggest all students in the sampling population be the same age, while having not received formal education prior. Also, every student should be taught to use the equipment before the experiment so that the “hands-on” group wouldn’t be at an initial disadvantage.

I’m not a teacher, a psychologist, or a professional statistician. This is just my proposal using my current knowledge of statistics. Take it with a grain of salt and form your own opinions, this is simply being put forth in the effort to show that such an experiment seems to be viable given the proper infrastructure and coordination.
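For readers who want to see the logistics of the design proposed in the comment above, here is a small sketch. It uses the comment's own numbers (1,500 students, three methods, 25 students per classroom, three topics); the particular rotation order and all function names are assumptions made for illustration, since the comment only says that the groups "switch".

```python
METHODS = ["visual packet", "hands-on lab", "lecture"]
TOPICS = ["liquids", "solids", "gases"]


def classrooms_per_method(total_students=1500, class_size=25):
    """Number of classrooms needed for each teaching method."""
    students_per_method = total_students // len(METHODS)
    return students_per_method // class_size


def rotation_schedule():
    """Assign a method to each group for each topic, rotating each round.

    Assumed rotation: group g uses method (g + round) mod 3, so every
    group meets every method once and every topic is taught by every
    method (a Latin-square layout).
    """
    schedule = {}
    for group in range(len(METHODS)):
        schedule[group] = [
            (topic, METHODS[(group + round_no) % len(METHODS)])
            for round_no, topic in enumerate(TOPICS)
        ]
    return schedule


if __name__ == "__main__":
    print(classrooms_per_method(), "classrooms per method")
    for group, rounds in rotation_schedule().items():
        print("group", group, rounds)
```

Rotating the methods this way means that differences between groups, and differences in how hard each topic is, are spread evenly across the three methods rather than being tied to one of them.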

Of course, your method makes sense, but it borders on unethical because it is wrong to teach a child anything in a way that they will not understand. I’m not saying you are unethical, but that any scheme that teaches inappropriately (“don’t take notes”) for more than 5 minutes is unethical.

What a bunch of arrogant people to think that they know if there exists one learning style…!? The only learning style we know is the one in our head. How can you say that there is no other creative ways of learning? What about Autistic people? What about Blind people? What about Deaf people? And Bipolar people? And what about Dyslexic people? And people who have a part of verbal speech comprehensions damages in their brain???? Why give so much importance to a little psychology paper? Any body can do a 3 year psychology degree and then write a paper claiming blabla bla

That’s not what they’re saying at all. They’re saying that there are no categories, or boxes, that people can be put in based on their learning style. They’re not saying there is just one way to learn. No need to get so worked up. People with damage to specific parts of their brains or sensory organs are obviously the outlier. Obviously they are going to be radically different.

And publishing a paper in an esteemed journal takes a _little_ bit more than a 3 year BSc in psychology. It’s that comment that really reveals the depth of your ignorance.

As someone diagnosed with high-functioning autism and currently in a concurrent education course, it is much more dangerous to tell someone they should be okay with only learning in one way rather than teaching them to be flexible and learn to absorb information from all sorts of mediums. So I’m gonna assume you’re blind, dyslexic, and autistic because you’ve assumed you can speak for all of them, yes? Your example of someone being blind also helps to further disprove learning theory — which implies nature over nurture — because clearly the ‘visual’ learners who are rendered blind must learn to learn in a different way (which statistically is shown to affect their learning no differently).

…SOMEBODY doesn’t at all understand the scientific method, reasoning or science in general.

I hope that we can finally move past these always dubious “sensory” learning styles. They’re really “modes,” different ways of learning. I’ve long argued that anyone who feels weak at using any of them needs to practice using that mode more, not less. But another old branch of learning styles based on differing neurotransmitter biases seemed to have better prospects, even if I’ve seen little done with it for decades now. I hope we don’t toss out the entire learning style baby with the dirty “sensory style” bathwater. With our updated technology, we could probably go much farther with it. For background, see dated and rather poorly written but better reasoned explanatory work by Jane Gear.

“I’ve long argued that anyone who feels weak at using any of them needs to practice using that mode more, not less.” As a kid already struggling through school with learning disabilities and the resulting long term stress and exhaustion the last thing I needed was to make things more difficult.

Allow me to state categorically that there are learning styles of which to speak specific to learners. To get the issue on hand, the methods proposed by these researchers as a way to disregard the widespread validity or to invalidate the validity of learning proclivities as a concept is not only inapposite, but also akin to saying that every learner approaches the universe of learning in the exact same way. If that is the measure of what we are to agree on as what constitutes scientific efficacy on any issue, then all forms of research are mitigatable and a suspect in the sense of their nature, methods, outcomes, and overall usefulness.

Such a view to research pieces is clearly misguided, ill-informed and half-scientific … even from a commonsense perspective. It serves no social and scientific utility, but for the interest of the investigators.

Mind you, we are not referring to the efficacy of styles presumptive of or correlative to bettering grade acquisition; rather that it should be argued that there are humane, less torturous, comfortable, less arduous and even naturalistic way of teaching students by emphasizing their uniquely preferred styles, wherever determinable.

Even where indeterminable, instructors are to be encouraged to vary their teaching methods to accommodate the learning needs of their captive audience, in this case, their students, and especially not to think that students learn essentially in the very same way as, for example, their instructors.

To think that all learners learn the same way whether in styles or approaches and to even suppose that instruction is a form of a “straight-jacket” and should work with all “body sizes” is in itself a form of miseducation, misrepresentation and/or a type of stiff recalcitrance that should not ever conduce to the mind of an educator, much less a group of psychologists.

Cronbach and Snow’s work [in the 60’s] on learning differences, along with findings affecting Trait/Factor analysis, are some of the few materials that may well serve as enviable pivots for the current exchange.

When it comes to research concerning learning styles…the human dynamics of learning is so complex that attempting to isolate independent variables that may affect learning is like trying to determine the direction of an automobile by studying petroleum chemistry.

The big problem of understanding this is that people don’t focus on the clear and precise language being used, and don’t understand how experimental science works.

What is being said is that “learning styles” theories which denote specific “auditory” and “visual” learning styles do not have any scientific evidence for them. Those who are evaluated to be predominantly “auditory” in terms of a “learning style” do not in fact perform better or differently when taught “visually” and vice versa.

This is important, because while it seems intuitively true that some people might learn better with a specific medium, there is no evidence for it. What there is evidence for is the superiority of multi-modal or multi-media instruction, in terms of learning outcomes.

The main point is don’t waste time on something that has no evidence to support it. See a ranking of effect size on educational reforms to see what is most important, and what is least: https://visible-learning.org/hattie-ranking-influences-effect-sizes-learning-achievement/

I am currently studying to be an ESL teacher and have come across these “learning styles” within the course. I do have a rather concerning view about them.

I can see that many minds are put behind how we are going to teach and get the “message” across to learners, but sadly I feel like there is an overdose of ego on “who has the better way to teach”. I know that’s a pretty heavy assumption but I can’t conclude much else except maybe there is a fear that the future generation may not learn correctly, which, if this is the case, manifests into overthinking techniques and dividing the ways in which individuals learn. I do however believe that segregating ways in which people learn is crazy and an over-analysed attempt.

As I was studying this I couldn’t help but scrunch my nose in confusion when a lot of the individual “learning styles” were something that I have as a “whole” and as an “individual”. I strongly believe that everything works hand in hand.

If I was to simply hold up a picture of someone playing golf and not attach a word or action to it, they would simply know what it looks like but not know what to call it or how it works. Auditory and kinaesthetic would be eliminated and the student would be deprived. But what concerns me is that I would be compelled to put action to something like this (in a teacher’s mind) and tell them what we call it (golf). So to be segregating “learning styles” you must be going against a law within your conscience as to how we ALL “learn”. This seriously is a no-brainer for me.

I must say though, not everything is based on science; simply using your brain can solve many complications. I say that encouragingly, not as a rivalry. Hope this was helpful.

Teaching golf would integrate all 4 learning styles. Why not use kinesthetic methods to complement visual, auditory, and logical when appropriate?

Howdy folks! I’d like to understand this a little better. So these guys invalidated all of the studies because they didn’t meet their standard and for that reason they declare that everyone has the same learning style? Is that what they are saying? I don’t see that they set up and carried out a scientific study that meets their own criteria to prove their hypothesis that we all have the same learning style. Did I miss something there? Let’s just say the science wasn’t good enough as they say, then that only means that the science hasn’t proved anything. If the science isn’t good enough to prove it right…. then I’m thinking that it doesn’t prove it wrong either. Wouldn’t that just mean that the hypothesis just remains unproven? I wonder too if someone can explain what learning style I’m using when I’m learning how to play my drums? So I’m trying to learn a double stroke roll and feeling the stick bounce and snapping my fingers and wrist at the right moment… it’s all about the feel. To me that’s my kinesthetic learning channel. Programming my “muscle memory” is yet another frame for explaining it. Does their conclusion invalidate this learning channel? When it comes to learning songs, I listen by sound. I listen and repeat. I have friends who can only play along with sheet music. They read and play. I didn’t carry out a study to figure this out. I just talk to other drummers and there’s clearly 2 sets of learning styles right there. Many drummers can only sight read. I can’t. I ask then… how is this possible if we all have the same learning style? And the argument is that we should stop wasting money trying to make education better? Really? I think I’ll disengage my gullible learning style and turn on my critical thinking style. …or does that not exist either?

You have to learn to read sheet music, just like reading a book, but it takes effort, and once you learn, it is good. Learning by ear is more natural and maybe you will be more creative because music is audio. The Beatles could not read music. They seem to be saying it has not been determined if audio or visual learning styles exist, not whether one is better than the other, and if we don’t know if they exist then why spend money behind them? You could invent many other plausible teaching methods and theories and spend a lot of money, but maybe the best money spent is on things we know make a difference.

I don’t know what most people in here are even talking about. Scientific research? In the end it comes down to enjoyment.

Individuals differ from one another in both appearance and behaviour. It has not been proven that learning styles are debunked, only that on review by some eminent scientists, a shadow of doubt challenges the premise. Thus if we are diverse creatures it follows we will take in the world in diverse ways: some of us will have more developed auditory facilities, some hardwiring may mean visuals are easier. This is not a study but a fact. We as humans do everything differently than others; perhaps the universal categories should not be bandied about carelessly. But in education in particular, we certainly do take our world in in many and varied forms, construct how we see it and enact a life we see fit, all embedded in our social environment.

I was encouraged that the psychologists got put in their place by those of us (teachers) who understand that all children learn differently. Why would you want to frustrate any child with visual learning material that leads to nothing but failure, when the same child can find success with teaching methods that match the child’s learning style?

Byron Thorne author of Toward A Failure-Proof Methodology for Learning To Read.

I love the article and the follow up debate. As a long time educator and student of education it is positive to see all the different perspectives. How to make things manageable for learners? Multi-modal presentations with options for showing one’s understanding and learning. Any teaching can be presented in various ways concurrently as long as we give the students what they need to have access to. My question would be more about how to best engage students so they would be engaged and self-motivated. Love the conversation.

I don’t agree or believe you! I am a visual and auditory learner. It works for me! I was a teacher and everyone has preferred learning styles. Some people do better with a snack. Some are tactile. Your study may be flawed but your conclusions are wrong. C Nance

They can say what they like, but I have seen very different foci in various individuals, with the same-system adherents failing miserably, more often than not, relative to more flexible instructors. I myself cannot grasp complex ideas without first having, or mentally generating, a visual reference.

May 29, 2018

The Problem with "Learning Styles"

There is little scientific support for this fashionable idea—and stronger evidence for other learning strategies

By Cindi May

When it comes to home projects, I am a step-by-step kind of girl. I read the instructions from start to finish, and then reread and execute each step. My husband, on the other hand, prefers to study the diagrams and then jump right in. Think owner’s manual versus IKEA instructions. This preference for one approach over another when learning new information is not uncommon. Indeed the notion that people learn in different ways is such a pervasive belief in American culture that there is a thriving industry dedicated to identifying learning styles and training teachers to meet the needs of different learners.

Just because a notion is popular, however, doesn’t make it true. A recent review of the scientific literature on learning styles found scant evidence to clearly support the idea that outcomes are best when instructional techniques align with individuals’ learning styles. In fact, there are several studies that contradict this belief. It is clear that people have a strong sense of their own learning preferences (e.g., visual, kinesthetic, intuitive), but it is less clear that these preferences matter.

Research by Polly Hussman and Valerie Dean O’Loughlin at Indiana University takes a new look at this important question. Most previous investigations on learning styles focused on classroom learning, and assessed whether instructional style impacted outcomes for different types of learners. But is the classroom really where most of the serious learning occurs? Some might argue that, in this era of flipped classrooms and online course materials, students master more of the information on their own. That might explain why instructional style in the classroom matters little. It also raises the possibility that learning styles do matter—perhaps a match between students’ individual learning styles and their study strategies is the key to optimal outcomes.

To explore this possibility, Hussman and O’Loughlin asked students enrolled in an anatomy class to complete an online learning styles assessment and answer questions about their study strategies. More than 400 students completed the VARK (visual, auditory, reading/writing, kinesthetic) learning styles evaluation and reported details about the techniques they used for mastering material outside of class (e.g., flash cards, review of lecture notes, anatomy coloring books). Researchers also tracked their performance in both the lecture and lab components of the course.

Scores on the VARK suggested that most students used multiple learning styles (e.g., visual + kinesthetic or reading/writing + visual + auditory), but that no particular style (or combination of styles) resulted in better outcomes than another. The focus in this study, however, was not on whether a particular learning style was more advantageous. Instead, the research addressed two primary questions: First, do students who take the VARK questionnaire to identify their personal learning style adopt study strategies that align with that style? Second, are the learning outcomes better for students whose strategies match their VARK profile than for students whose strategies do not?

Despite knowing their own, self-reported learning preferences, nearly 70% of students failed to employ study techniques that supported those preferences. Most visual learners did not rely heavily on visual strategies (e.g., diagrams, graphics), nor did most reading/writing learners rely predominantly on reading strategies (e.g., review of notes or textbook), and so on. Given the prevailing belief that learning styles matter, and the fact many students blame poor academic performance on the lack of a match between their learning style and teachers’ instructional methods, one might expect students to rely on techniques that support their personal learning preferences when working on their own.

Perhaps the best students do. Nearly a third of the students in the study did choose strategies that were consistent with their reported learning style. Did that pay off? In a word, no. Students whose study strategies aligned with their VARK scores performed no better in either the lecture or lab component of the course.

So most students are not employing study strategies that mesh with self-reported learning preferences, and the minority who do show no academic benefit. Although students believe that learning preferences influence performance , this research affirms the mounting evidence that they do not, even when students are mastering information on their own. These findings suggest a general lack of student awareness about the processes and behaviors that support effective learning. Consistent with this notion, Hussman and O’Loughlin also found negative correlations between many of the common study strategies reported by students (e.g., making flashcards, use of outside websites) and course performance. Thus regardless of individual learning style or the alignment of the style with study techniques, many students are adopting strategies that simply do not support comprehension and retention of information.

Fortunately, cognitive science has identified a number of methods to enhance knowledge acquisition, and these techniques have fairly universal benefit. Students are more successful when they space out their study sessions over time, experience the material in multiple modalities, test themselves on the material as part of their study practices, and elaborate on material to make meaningful connections rather than engaging in activities that involve simple repetition of information (e.g., making flashcards or recopying notes). These effective strategies were identified decades ago and have convincing and significant empirical support. Why then, do we persist in our belief that learning styles matter, and ignore these tried and true techniques?

The popularity of the learning styles mythology may stem in part from the appeal of finding out what “type of person” you are, along with the desire to be treated as an individual within the education system. In contrast, the notion that universal strategies may enhance learning for all belies the idea that we are unique, individual learners. In addition, most empirically-supported techniques involve planning (e.g., scheduling study sessions over a series of days) and significant effort (e.g., taking practice tests in advance of a classroom assessment), and let’s face it, we don’t want to work that hard.

Cindi May is a professor of psychology at the College of Charleston. She explores avenues for improving cognitive function and outcomes in college students, older adults and individuals who are neurodiverse.

Center for Teaching

Learning styles, what are learning styles, why are they so popular.

The term learning styles is widely used to describe how learners gather, sift through, interpret, organize, come to conclusions about, and “store” information for further use. As spelled out in VARK (one of the most popular learning styles inventories), these styles are often categorized by sensory approaches: visual, aural, verbal [reading/writing], and kinesthetic. Many of the models that don’t resemble the VARK’s sensory focus are reminiscent of Felder and Silverman’s Index of Learning Styles, with a continuum of descriptors for how learners process and organize information: active-reflective, sensing-intuitive, verbal-visual, and sequential-global.

There are well over 70 different learning styles schemes (Coffield, 2004), most of which are supported by “a thriving industry devoted to publishing learning-styles tests and guidebooks” and “professional development workshops for teachers and educators” (Pashler, et al., 2009, p. 105).

Despite the variation in categories, the fundamental idea behind learning styles is the same: that each of us has a specific learning style (sometimes called a “preference”), and we learn best when information is presented to us in this style. For example, visual learners would learn any subject matter best if given graphically or through other kinds of visual images, kinesthetic learners would learn more effectively if they could involve bodily movements in the learning process, and so on. The message thus given to instructors is that “optimal instruction requires diagnosing individuals’ learning style[s] and tailoring instruction accordingly” (Pashler, et al., 2009, p. 105).

Despite the popularity of learning styles and inventories such as the VARK, it’s important to know that there is no evidence to support the idea that matching activities to one’s learning style improves learning. It’s not simply a matter of “the absence of evidence doesn’t mean the evidence of absence.” On the contrary, for years researchers have tried to make this connection through hundreds of studies.

In 2009, Psychological Science in the Public Interest commissioned cognitive psychologists Harold Pashler, Mark McDaniel, Doug Rohrer, and Robert Bjork to evaluate the research on learning styles to determine whether there is credible evidence to support using learning styles in instruction. They came to a startling but clear conclusion: “Although the literature on learning styles is enormous,” they “found virtually no evidence” supporting the idea that “instruction is best provided in a format that matches the preference of the learner.” Many of those studies suffered from weak research design, rendering them far from convincing. Others with an effective experimental design “found results that flatly contradict the popular” assumptions about learning styles (p. 105). In sum,

“The contrast between the enormous popularity of the learning-styles approach within education and the lack of credible evidence for its utility is, in our opinion, striking and disturbing” (p. 117).

Pashler and his colleagues point to some reasons why learning styles have gained, and kept, such traction, aside from the enormous industry that supports the concept. First, people like to identify themselves and others by “type.” Such categories help order the social environment and offer quick ways of understanding each other. Second, the approach appeals to the idea that learners should be recognized as “unique individuals” (or, more precisely, that differences among students should be acknowledged) rather than treated as a number in a crowd or a faceless class of students (p. 107). Carried further, teaching to different learning styles suggests that “all people have the potential to learn effectively and easily if only instruction is tailored to their individual learning styles” (p. 107).

There may be another reason why learning styles are so widely accepted: they very loosely resemble the concept of metacognition, or the process of thinking about one’s thinking. For instance, having your students describe which study strategies and conditions for their last exam worked for them and which didn’t is likely to improve their studying on the next exam (Tanner, 2012). Integrating such metacognitive activities into the classroom, unlike learning styles, is supported by a wealth of research (e.g., Askell-Williams, Lawson, & Murray-Harvey, 2007; Bransford, Brown, & Cocking, 2000; Butler & Winne, 1995; Isaacson & Fujita, 2006; Nelson & Dunlosky, 1991; Tobias & Everson, 2002).

Importantly, metacognition is focused on planning, monitoring, and evaluating any kind of thinking about thinking and does nothing to connect one’s identity or abilities to any singular approach to knowledge. (For more information about metacognition, see CFT Assistant Director Cynthia Brame’s “Thinking about Metacognition” blog post, and stay tuned for a Teaching Guide on metacognition this spring.)

There is, however, something you can take away from these different approaches to learning, based not on the learner but on the content being learned. To explore the persistence of the belief in learning styles, CFT Assistant Director Nancy Chick interviewed Dr. Bill Cerbin, Professor of Psychology and Director of the Center for Advancing Teaching and Learning at the University of Wisconsin-La Crosse and former Carnegie Scholar with the Carnegie Academy for the Scholarship of Teaching and Learning. He points out that the differences identified by the labels “visual, auditory, kinesthetic, and reading/writing” are more appropriately connected to the nature of the discipline:

“There may be evidence that indicates that there are some ways to teach some subjects that are just better than others, despite the learning styles of individuals…. If you’re thinking about teaching sculpture, I’m not sure that long tracts of verbal descriptions of statues or of sculptures would be a particularly effective way for individuals to learn about works of art. Naturally, these are physical objects and you need to take a look at them, you might even need to handle them.” (Cerbin, 2011, 7:45-8:30)

Pashler and his colleagues agree: “An obvious point is that the optimal instructional method is likely to vary across disciplines” (p. 116). In other words, it makes disciplinary sense to include kinesthetic activities in sculpture and anatomy courses, reading/writing activities in literature and history courses, visual activities in geography and engineering courses, and auditory activities in music, foreign language, and speech courses. Obvious or not, it aligns teaching and learning with the contours of the subject matter, without limiting the potential abilities of the learners.

- Askell-Williams, H., Lawson, M., & Murray-Harvey, R. (2007). ‘What happens in my university classes that helps me to learn?’: Teacher education students’ instructional metacognitive knowledge. International Journal of the Scholarship of Teaching and Learning, 1, 1-21.

- Bransford, J. D., Brown, A. L., & Cocking, R. R. (Eds.). (2000). How people learn: Brain, mind, experience, and school (Expanded Edition). Washington, D.C.: National Academy Press.

- Butler, D. L., & Winne, P. H. (1995). Feedback and self-regulated learning: A theoretical synthesis. Review of Educational Research, 65, 245-281.

- Cerbin, William. (2011). Understanding learning styles: A conversation with Dr. Bill Cerbin. Interview with Nancy Chick. UW Colleges Virtual Teaching and Learning Center.

- Coffield, F., Moseley, D., Hall, E., & Ecclestone, K. (2004). Learning styles and pedagogy in post-16 learning: A systematic and critical review. London: Learning and Skills Research Centre.

- Isaacson, R. M., & Fujita, F. (2006). Metacognitive knowledge monitoring and self-regulated learning: Academic success and reflections on learning. Journal of the Scholarship of Teaching and Learning, 6, 39-55.

- Nelson, T. O., & Dunlosky, J. (1991). The delayed-JOL effect: When delaying your judgments of learning can improve the accuracy of your metacognitive monitoring. Psychological Science, 2, 267-270.

- Pashler, Harold, McDaniel, M., Rohrer, D., & Bjork, R. (2008). Learning styles: Concepts and evidence. Psychological Science in the Public Interest, 9(3), 103-119.

- Tobias, S., & Everson, H. (2002). Knowing what you know and what you don’t: Further research on metacognitive knowledge monitoring. College Board Report No. 2002-3. College Board, NY.

- Research article

- Open access

- Published: 01 October 2021

Adaptive e-learning environment based on learning styles and its impact on development students' engagement

- Hassan A. El-Sabagh (ORCID: orcid.org/0000-0001-5463-5982)

International Journal of Educational Technology in Higher Education, volume 18, Article number: 53 (2021)

Adaptive e-learning is viewed as a stimulus that supports learning and improves student engagement, so designing appropriate adaptive e-learning environments contributes to personalizing instruction and reinforcing learning outcomes. The purpose of this paper is to design an adaptive e-learning environment based on students' learning styles and to study the impact of that environment on students’ engagement. The research also outlines the proposed adaptive e-learning environment and compares it with a conventional e-learning approach. The paper uses mixed research methods: a development method for designing the adaptive e-learning environment and a quasi-experimental design for conducting the research experiment. The student engagement scale is used to measure the following affective and behavioral factors of engagement: skills, participation/interaction, performance, and emotional. The results revealed that students in the experimental group scored statistically significantly higher than those in the control group. These experimental results imply the potential of an adaptive e-learning environment to engage students in learning. Several practical recommendations follow from this paper: how to design a base for adaptive e-learning based on learning styles and their implementation; how to increase the impact of adaptive e-learning in education; and how to raise the cost efficiency of education. The proposed adaptive e-learning approach and the results can help e-learning institutes design and develop more customized and adaptive e-learning environments to reinforce student engagement.

Introduction

In recent years, educational technology has advanced at a rapid rate. Once learning experiences are customized, e-learning content becomes richer and more diverse (El-Sabagh & Hamed, 2020; Yang et al., 2013). E-learning produces constructive learning outcomes, as it allows students to actively participate in learning at any time and in any place (Chen et al., 2010; Lee et al., 2019). Recently, adaptive e-learning has become an approach that is widely implemented by higher education institutions. The adaptive e-learning environment (ALE) is an emerging research field that deals with developing approaches to accommodate students' learning styles by adapting the learning environment within the learning management system (LMS), changing the way e-content is delivered. Adaptive e-learning is a learning process in which the content is taught or adapted based on students' responses and their learning styles or preferences (Normadhi et al., 2019; Oxman & Wong, 2014). By offering customized content, adaptive e-learning environments improve the quality of online learning. The customized environment should be adaptable to the needs and learning styles of each student in the same course (Franzoni & Assar, 2009; Kolekar et al., 2017). Adaptive e-learning changes the level of instruction dynamically based on student learning styles and personalizes instruction to enhance or accelerate a student's success. Directing instruction to each student's strengths and content needs can minimize course dropout rates and increase both student outcomes and the speed at which they are accomplished. The personalized learning approach focuses on providing an effective, customized, and efficient path of learning so that every student can participate in the learning process (Hussein & Al-Chalabi, 2020). Learning styles, on the other hand, represent an important issue in learning in the twenty-first century, with students expected to participate actively in developing self-understanding as well as their engagement with their environment (Klasnja-Milicevic et al., 2011; Nuankaew et al., 2019; Truong, 2016).

In conventional e-learning environments, instruction has traditionally followed a “one style fits all” approach, which means that all students are exposed to the same learning procedures. This type of learning does not take into account the different learning styles and preferences of students. More recently, the development of e-learning systems has accommodated and supported personalized learning, in which instruction is fitted to a student's individual needs and learning styles (Beldagli & Adiguzel, 2010; Benhamdi et al., 2017; Pashler et al., 2008). Some personalized approaches let students choose content that matches their personality (Hussein & Al-Chalabi, 2020). The delivery of course materials is an important issue in personalized learning. Moreover, designing an effective adaptive e-learning system is a challenge because of the complexity of adapting to the different needs of learners (Alshammari, 2016). Nevertheless, it is claimed that shifting from conventional e-learning to adaptive e-learning environments can reinforce students' engagement. However, a learning environment cannot be considered adaptive if it is not flexible enough to accommodate students' learning styles (Ennouamani & Mahani, 2017).

Student engagement, meanwhile, has become a central issue in learning; it is also an indicator of educational quality and of whether active learning occurs in classes (Lee et al., 2019; Nkomo et al., 2021; Robinson & Hullinger, 2008). Veiga et al. (2014) suggest that there is a need for further research on engagement because students’ engagement is a predictor of learning and academic progress. It is important to clarify the distinction between causal factors such as the learning environment and outcome factors such as achievement. Accordingly, student engagement is an important research topic because it affects a student's final grade and the course dropout rate (Staikopoulos et al., 2015).

The Umm Al-Qura University (UQU) strategic plan, through the common first-year deanship, has focused on best practices that increase students' higher-order skills. These skills include communication skills, problem-solving skills, research skills, and creative thinking skills. Although the UQU action plan involves improving these skills through common first-year academic programs, students' learning skills need to be encouraged and engaged more (Umm Al-Qura University Agency, 2020). From the author's experience, the conventional methods of instruction in the "learning skills" course present the content to all students in one style, which depends on understanding the content regardless of the diversity of their learning styles.

According to some studies (Alshammari & Qtaish, 2019; Lee & Kim, 2012; Shih et al., 2008; Verdú et al., 2008; Yalcinalp & Avc, 2019), little attention is paid to the needs and preferences of individual learners, and as a result, all learners are treated in the same way. More research into the impact of educational technologies on developing skills and performance among different learners is recommended. This “one-style-fits-all” approach implies that all learners are expected to use the same learning style as prescribed by the e-learning environment. Subsequently, a review of the literature revealed that an adaptive e-learning environment can affect learning outcomes and so fill the identified gap. In conclusion, adaptive e-learning environments rely on the learner's preferences and learning style as the reference for adaptation.

To confirm the above, the author conducted an exploratory study via an open interview with a sample of 50 students in the learning skills department of the common first year. The questions asked about the difficulties they face when studying the "learning skills" course and about their preferred way of receiving course content. Most students (88%) agreed that the way content is presented does not differ according to their individual differences and that they suffer from a lack of personalized learning that is compatible with their style of work. Most students (82%) agreed that they lack adaptive educational content that helps them engage in the learning process. The author addressed the research problem accordingly.

This research supplements the existing body of knowledge on the subject. It is considered significant because it improves understanding of the challenges involved in designing adaptive environments based on the learning-styles parameter. The paper is structured as follows: the next section presents the related work cited in the literature, followed by the research methodology, data collection, results, and discussion; finally, some conclusions and future trends are discussed.

Theoretical framework

This section reviews the literature on adaptive e-learning environments based on learning styles.

Adaptive e-learning environments based on learning styles

The adoption of adaptive e-learning in higher education has been slow to evolve, and the challenges that led to the slow implementation still exist. The learning management system offers the same tools to all learners, although individual learners need different details based on learning style and preferences (Beldagli & Adiguzel, 2010; Kolekar et al., 2017). An interactive e-learning environment requires evaluating the learner's desired learning style either before course delivery, for example through an online quiz, or during course delivery, for example by tracking student reactions (DeCapua & Marshall, 2015).

In e-learning environments, adaptation is built on a series of well-designed processes for fitting the instructional materials. The adaptive e-learning framework attempts to match instructional content to learners' needs and styles. According to Qazdar et al. (2015), adaptive e-learning (AEL) environments rely on constructing a model of each learner's needs, preferences, and styles. It is well recognized that such adaptive behavior can increase learners' development and performance, thus enriching the quality of the learning experience (Shi et al., 2013). Adaptive e-learning environments can be characterized by the following features: diversity, interactivity, adaptability, feedback, performance, and predictability. Although the taxonomy and characteristics of adaptive frameworks involve various elements, adaptive learning includes at least three: a model of the structure of the content to be learned, with detailed learning outcomes (a content model); a measure of the student's expertise based on success, together with a method of interpreting student strengths (a learner model); and a method of matching the instructional materials and their delivery to the learner in a customized way (an instructional model) (Ali et al., 2019). The number of adaptive e-learning studies has increased over the last few years. Adaptive e-learning is likely to increase at an accelerating pace at all levels of instruction (Hussein & Al-Chalabi, 2020; Oxman & Wong, 2014).
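As a rough illustration of how the three elements named above (content model, learner model, instructional model) might fit together, the following Python sketch is offered under stated assumptions. The class names, fields, and the matching rule are hypothetical and are not taken from Ali et al. (2019) or from the environment described in this paper.

```python
from dataclasses import dataclass, field


@dataclass
class ContentItem:
    """Content model entry (hypothetical): one material for one learning outcome."""
    outcome: str  # the learning outcome this material addresses
    fmt: str      # presentation format, e.g. "video" or "text"


@dataclass
class LearnerModel:
    """Learner model (hypothetical): preferences plus outcomes already mastered."""
    name: str
    preferred_formats: list
    mastered: set = field(default_factory=set)


def select_materials(content, learner):
    """Instructional model (assumed rule): for each outcome not yet mastered,
    pick a material in a preferred format, else fall back to the first one listed."""
    chosen = {}
    for item in content:
        if item.outcome in learner.mastered:
            continue
        current = chosen.get(item.outcome)
        preferred = item.fmt in learner.preferred_formats
        if current is None or (preferred and current.fmt not in learner.preferred_formats):
            chosen[item.outcome] = item
    return list(chosen.values())


content = [
    ContentItem("note-taking", "text"),
    ContentItem("note-taking", "video"),
    ContentItem("time management", "text"),
]
learner = LearnerModel("student-1", preferred_formats=["video"])
for item in select_materials(content, learner):
    print(item.outcome, "->", item.fmt)
```

The fallback to the first available material is a design choice of this sketch, so that a learner is never left without content when no material exists in a preferred format.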

Many studies have affirmed the power of adaptive e-learning to deliver e-content to learners in a way that fits their needs and learning styles, which helps improve students' acquisition of knowledge and experiences and develops their higher thinking skills (Ali et al., 2019; Behaz & Djoudi, 2012; Chun-Hui et al., 2017; Daines et al., 2016; Dominic et al., 2015; Mahnane et al., 2013; Vassileva, 2012). Learning style is recognized as an important student characteristic and a vital influence on learning, and it is frequently used as a foundation for generating personalized learning experiences (Alshammari & Qtaish, 2019; El-Sabagh & Hamed, 2020; Hussein & Al-Chalabi, 2020; Klasnja-Milicevic et al., 2011; Normadhi et al., 2019; Ozyurt & Ozyurt, 2015).

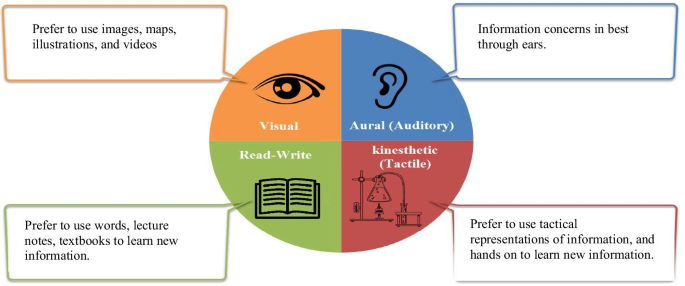

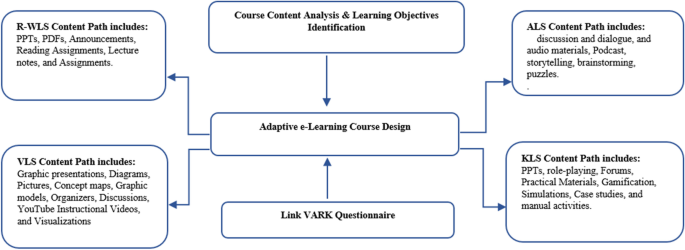

The learning style is a parameter in designing adaptive e-learning environments. Individuals differ in their learning styles when interacting with the content presented to them, and many studies have emphasized the relationship between e-learning and learning styles in motivating learners in learning situations and consequently improving learning outcomes (Ali et al., 2019; Alshammari, 2016; Alzain et al., 2018a, b; Liang, 2012; Mahnane et al., 2013; Nainie et al., 2010; Velázquez & Assar, 2009). The term "learning style" refers to the process by which the learner organizes, processes, represents, and combines information and stores it in his cognitive source, then retrieves the information and experiences in the style that reflects his technique of communicating them (Fleming & Baume, 2006; Jaleel & Thomas, 2019; Jonassen & Grabowski, 2012; Klasnja-Milicevic et al., 2011; Nuankaew et al., 2019; Pashler et al., 2008; Willingham et al., 2015; Zhang, 2017). The concept of learning style is based on the fact that students vary in their styles of receiving knowledge and thinking, which help them recognize and combine information in their minds as well as acquire experiences and skills (Naqeeb, 2011). The extensive scholarly literature on learning styles contains few strong experimental findings (Truong, 2016) and few findings on the effect of adapting instruction to learning style. There are many models of learning styles (Aldosarim et al., 2018; Alzain et al., 2018a, 2018b; Cletus & Eneluwe, 2020; Franzoni & Assar, 2009; Willingham et al., 2015), including the VARK model, which is one of the most well-known models used to classify learning styles. The VARK questionnaire offers better insight into information processing preferences (Johnson, 2009). Fleming and Baume (2006) developed the VARK model, which consists of four preferred learning types. The letter "V" stands for the visual style, "A" for the auditory style, "R" for the reading/writing style, and "K" for the kinesthetic (practical) style. Moreover, VARK further divides the visual category into graphical and textual, or visual and read/write, learners (Murphy et al., 2004; Leung et al., 2014; Willingham et al., 2015). The four categories of the VARK Learning Style Inventory are shown in Fig. 1 below.

Fig. 1 VARK learning styles

According to the VARK model, learners are classified into four groups representing basic learning styles based on their responses to a 16-item questionnaire. Each question has four potential responses, and each response corresponds to one of the extremes of the dimension (Hussain, 2017; Silva, 2020; Zhang, 2017); the results support instructors who use the model to create effective courses for students. According to Fleming and Baume (2006), visual learners prefer to receive instructional materials and submit assignments using tools such as maps, graphs, images, and other symbols. Read/write learners prefer written textual learning materials; they use glossaries, handouts, textbooks, and lecture notes. Aural learners, on the other hand, prefer to learn through spoken materials, dialogue, lectures, and discussions. Kinesthetic learners prefer direct practice and learning by doing (Becker et al., 2007; Fleming & Baume, 2006; Willingham et al., 2015). As a result, this research work aims to provide a comprehensive discussion of how these individual parameters can be applied in adaptive e-learning environment practices. Dominic et al. (2015) presented a framework for an adaptive educational system that personalized learning content based on student learning styles (the Felder-Silverman learning model) and other factors such as learners' competency level in the subject. This framework allowed students to follow their own adaptive learning content paths after filling in the ILS questionnaire. It also provided a customized framework that can automatically respond to students' learning styles and suggest online activities with complete personalization. Similarly, El Bachari et al. (2011) attempted to determine a student's unique learning style and then adapt instruction to that individual's interests. According to Alshammari et al. (2015), adaptive e-learning focused on learner experience and learning style has a higher degree of perceived usability than a non-adaptive e-learning system. This can also improve learners' satisfaction, engagement, and motivation, thus improving their learning.
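The classification step described above (16 questions, four possible responses each, every response tied to one style) can be sketched as a simple tally. This is a minimal sketch under assumptions: it assumes exactly one response per question and that the styles with the highest count are reported as the learner's preference. It is not the official VARK scoring procedure, and the function names are hypothetical.

```python
STYLES = ("V", "A", "R", "K")  # visual, aural, read/write, kinesthetic


def tally_vark(responses):
    """Count how many chosen responses map to each style.

    `responses` holds one style code per answered question (assumed format).
    """
    counts = {style: 0 for style in STYLES}
    for choice in responses:
        if choice not in counts:
            raise ValueError(f"unknown style code: {choice!r}")
        counts[choice] += 1
    return counts


def dominant_styles(counts):
    """Return every style tied for the highest count (ties give a multimodal profile)."""
    top = max(counts.values())
    return [style for style in STYLES if counts[style] == top]


# Hypothetical answers to the 16 questions.
answers = ["V"] * 6 + ["K"] * 6 + ["A"] * 3 + ["R"] * 1
counts = tally_vark(answers)
print(counts)                   # {'V': 6, 'A': 3, 'R': 1, 'K': 6}
print(dominant_styles(counts))  # ['V', 'K']
```

Returning all tied styles rather than a single label reflects the possibility of a mixed profile, such as visual plus kinesthetic.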

According to the findings of Akbulut and Cardak (2012), Alshammari and Qtaish (2019), Alzain et al. (2018a, b), Shi et al. (2013), and Truong (2016), adaptation based on a combination of learning style and information level yields significantly better learning gains. Researchers have recently begun to focus on how to personalize e-learning experiences using personal characteristics such as the student's preferred learning style. Adaptive learning programs address personal learning challenges by providing learners with courses that fit their specific needs, such as their learning styles.

Student engagement

Previous research has emphasized that student participation is a key factor in overcoming academic problems such as poor academic performance, isolation, and high dropout rates (Fredricks et al., 2004). Student participation is vital to student learning, especially in an online environment where students may feel isolated and disconnected (Dixson, 2015). Student engagement is the degree to which students consciously engage with a course's materials, other students, and the instructor. It is significant for keeping students involved in the course and, as a result, in their learning (Barkley & Major, 2020; Lee et al., 2019; Rogers-Stacy et al., 2017). Extensive research has investigated the degree of student engagement in web-based learning systems and traditional education systems. For instance, Hussain et al. (2018) used a variety of methods and input features to test the relationship between student data and student participation. Guo et al. (2014) examined students' participation while they watched videos. The input features of that study were based on how long students watched the videos and how often they responded to the assessment.

Atherton et al. (2017) found a correlation between the use of course materials and student performance; greater use of course content is more likely to lead to better grades. Pardo et al. (2016) found that students' interaction with interactive learning activities has a significant impact on their test scores. According to previous research, course results are positively correlated with student participation. For example, Atherton et al. (2017) explained that students who regularly accessed learning materials online and took exams obtained higher test scores. Other studies have shown that students with higher levels of participation in questionnaires and course performance tend to perform well (Mutahi et al., 2017).

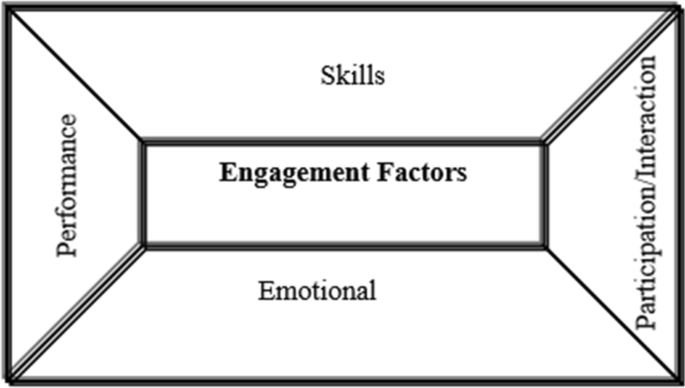

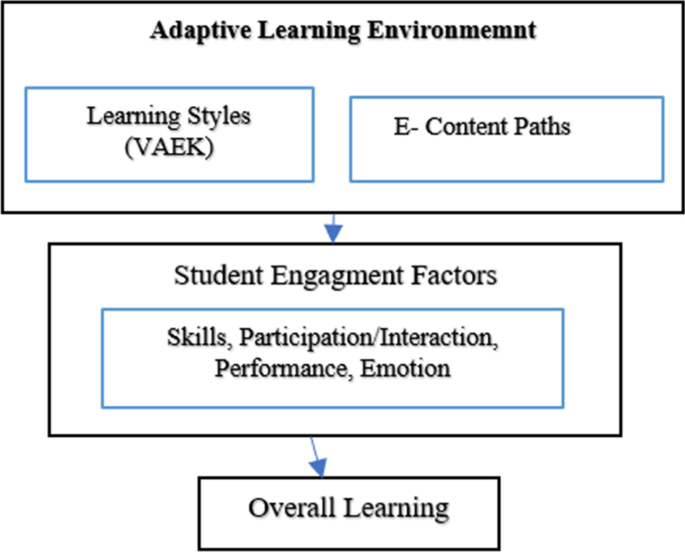

According to Dixson (2015), skills, emotion, participation, and performance are factors in online learning engagement. Skills are a type of learning that includes things like practicing on a daily basis, paying attention while listening and reading, and taking notes. Emotion refers to how the learner feels about learning, such as how much the learner wants to learn. Participation refers to how the learner acts in a class, such as in chat, discussion, or conversation. Performance is a result, such as a good grade or a good test score. In general, engagement means that students spend time and energy on learning materials and skills, interact constructively with others in the classroom, and participate at least to some degree in emotional learning (that is, they are motivated by an idea and willing to learn and interact). Student engagement is produced through personal attitudes, thoughts, behaviors, and communication with others, and it involves investing thought, effort, and feelings to a certain level when studying. Therefore, the student engagement scale attempts to measure what students are doing (thinking actively), how they relate to their learning, and how they relate to content, faculty members, and other learners, through the factors shown in Fig. 2 (skills, participation/interaction, performance, and emotions). Hence, previous research has moved beyond comparing online and face-to-face classes to investigating ways to improve online learning (Dixson, 2015; Gaytan & McEwen, 2007; Lévy & Wakabayashi, 2008; Mutahi et al., 2017). According to reviews of previous research on student engagement, learning effort, involvement in activities, interaction, and learning satisfaction are significant measures of student engagement in learning environments (Dixson, 2015; Evans et al., 2017; Lee et al., 2019; Mutahi et al., 2017; Rogers-Stacy et al., 2017). These results point to several features of e-learning environments that can be used as measures of student participation. Successful and engaged online learners learn actively, have the psychological motivation to learn, make good use of prior experience, and make successful use of online technology. Furthermore, they have excellent communication abilities and are adept at both cooperative and self-directed learning (Dixson, 2015; Hong, 2009; Nkomo et al., 2021).

Engagement factors

Overview of designing the adaptive e-learning environment

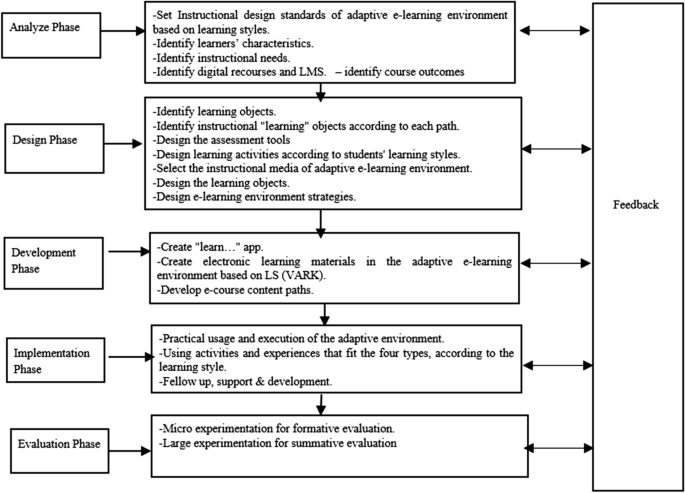

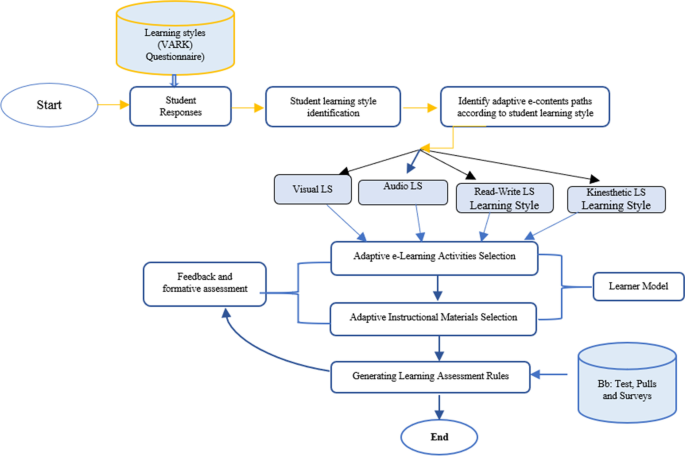

To answer the first research question, the paper follows the ADDIE instructional design model: analysis, design, development, implementation, and evaluation. The adaptive learning environment offers an interactive decentralized media environment that takes into account individual differences among students. Moreover, the environment can spread the culture of self-learning, attract students, and increase their engagement in learning.

Any learning environment that is intended to accomplish a specific goal should be consistent in order to increase students' motivation to learn, so that students receive content personalized to their specific requirements rather than one-size-fits-all content. As a result, a set of instructional design standards for designing an adaptive e-learning framework based on learning styles was developed according to the following diagram (Fig. 3).

The ID (model) of the adaptive e-learning environment

According to Fig. 3, the analysis phase included identifying the course materials and learning tools (syllabus and course plan modules) used for the study. The learning objectives were set at the higher cognitive levels (C4-C6: analysis, synthesis, evaluation).

The design phase included writing SMART objectives and writing the learning materials within the modules plan. To support adaptive learning, four content paths were identified, and learning models, processes, and evaluation were chosen. Course structure and navigation were planned. The adaptive structural design identified the relationships between the different components, such as introduction units, learning materials, and quizzes, and determined the materials for the four paths. The course instructional materials were identified as shown in Fig. 4.

Adaptive e-course design

The development phase included preparing and selecting the media for the e-course according to each content path in the adaptive e-learning environment. During this process, the author completed the storyboard and the media to be included on each page of the storyboard. A category was developed for the instructional media for each path (Fig. 5).

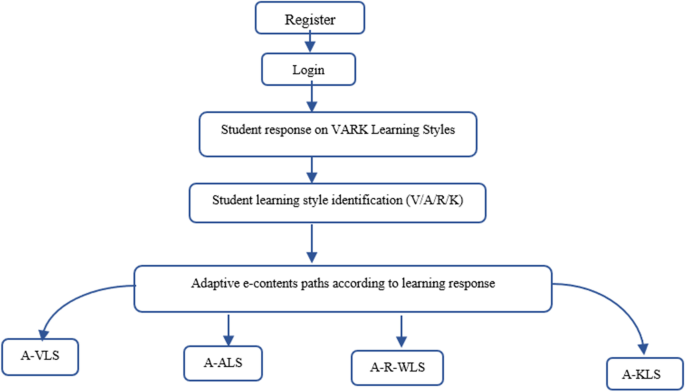

Roles and deployment diagram of the adaptive e-learning environment

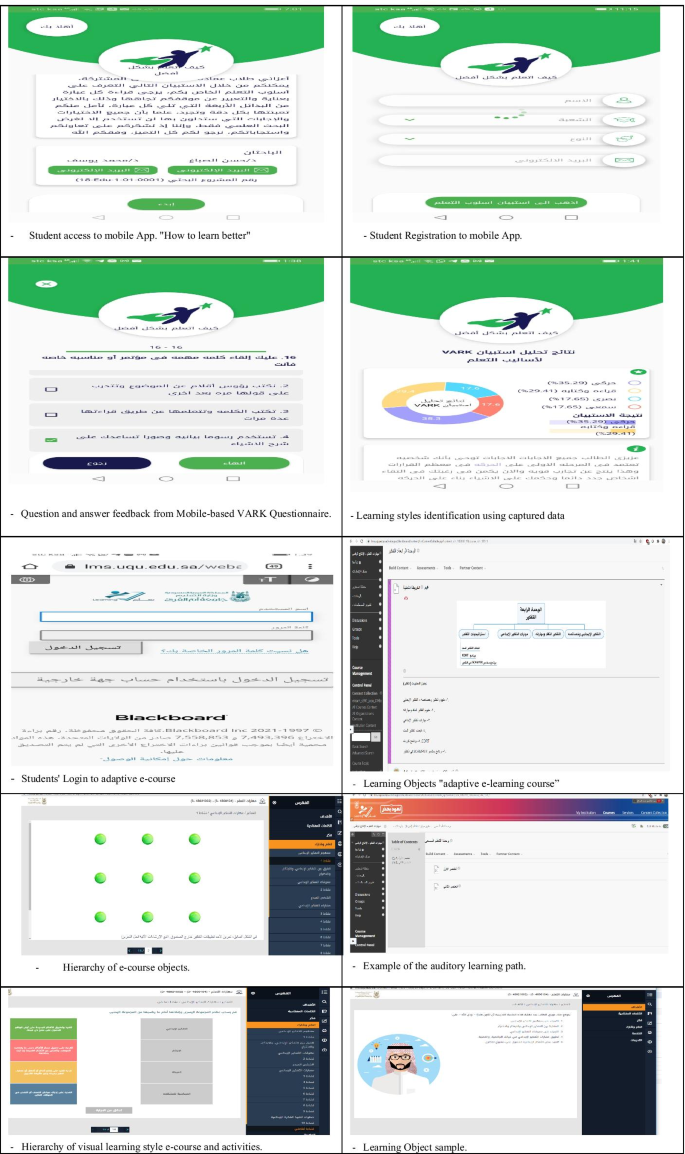

The author developed a learning styles questionnaire delivered via a mobile app (https://play.google.com/store/apps/details?id=com.pointability.vark). The students then accessed the adaptive e-course modules based on their learning styles.

The implementation phase involved the professional validation of the course instructional materials. Expert validation was used to evaluate the consistency of the course materials (syllabi and modules). The validation covered student learning activities, learning implementation capability, and student reactions to the modules. Data gathered about each learner's behaviors, errors, navigation, and learning process are used continuously to improve that learner's modules.

In the evaluation phase, five e-learning specialists reviewed the adaptive e-learning environment. The framework was then revised based on expert recommendations and feedback. The evaluation covered content assessment, media evaluation in three forms, instructional design, interface design, and usage design. Adaptive learners also checked the proposed framework. This check was divided into two sections. In pilot testing, the proposed environment was tested by ten learners who represented the sample in the first phase. Each learner's behavior was observed, questions were answered, and learning control, media access, and time spent learning were all verified.

Research methodology

Research purpose and questions

This research aims to investigate the impact of designing an adaptive e-learning environment on the development of students' engagement. The research conceptual framework is illustrated in Fig. 6. The main research question is: "What is the impact of an adaptive e-learning environment based on (VARK) learning styles on developing students' engagement?" Accordingly, there are two sub-questions: a) "What is the instructional design of the adaptive e-learning environment?" b) "What is the impact of adaptive e-learning based on (VARK) learning styles on the development of students' engagement (skills, participation, performance, emotional) in comparison with conventional e-learning?"

The conceptual framework (model) of the research questions

Research hypotheses

The research aims to verify the validity of the following hypotheses:

There is no statistically significant difference between the students' mean scores of the experimental group that was exposed to the adaptive e-learning environment and the scores of the control group that was exposed to the conventional e-learning environment in the pre-application of the students' engagement scale.

There is a statistically significant difference at the level of (0.05) between the students' mean scores of the experimental group (adaptive e-learning) and the scores of the control group (conventional e-learning) in post-application of students' engagement factors in favor of the experimental group.

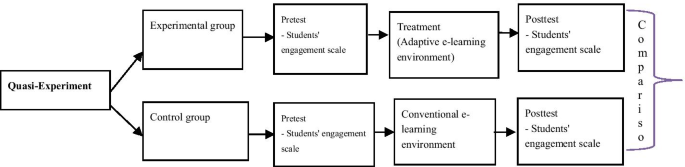

Research design

This research used a quasi-experimental pretest-posttest design. The independent and dependent research variables are shown in Fig. 7.

Research "Experimental" design

Both groups were informed of the learning activity tracks. The experimental group was instructed to use the adaptive learning environment to accomplish the learning goals, whereas the control group was exposed to the conventional e-learning environment without the adaptive e-learning parameters.

Research participants

The population of the study was students aged 17–18 studying the "learning skills" course in the common first-year deanship. All participants were chosen in the first term of the academic year 2019–2020 and were taught by the same instructors. The research sample included two classes (118 students), selected randomly from the learning skills department. One class was randomly assigned as the control group (N = 58, 31 males and 27 females), and the other as the experimental group (N = 60, 36 males and 24 females). Table 1 shows the distribution of the student sample (demographic data).

The instructional materials had not been presented to the students before. The control group attended the conventional e-learning class, where the "learning skills" course was delivered in a learning environment without the adaptive e-learning parameters based on learning styles. The experimental group used adaptive e-learning based on learning styles to learn the same course instructional materials within the e-course. Moreover, all student participants were required to read the guidelines and to indicate, with permission, their readiness to participate in the research experiment.

Research instruments

In this research, the measuring tools included the VARK questionnaire and the students' engagement scale including the following factors (skills, participation/interaction, performance, emotional). To begin, the pre-post scale was designed to assess the level of student engagement related to the "learning skills" course before and after participating in the experiment.

VARK questionnaire

Questionnaires are a common method for collecting data in education research (McMillan & Schumacher, 2006). The VARK questionnaire was organized electronically, distributed to the students through the developed mobile app, and registered on the UQU system. The questionnaire consisted of 16 multiple-choice items classified into four main factors (kinesthetic, auditory, visual, and R/W).
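The classification of a student into one of the four factors can be illustrated with a minimal sketch. It assumes one selected option per item and a fixed tie-break order, neither of which is specified in the paper; the style codes and the function name `dominant_style` are hypothetical.

```python
from collections import Counter

# Hypothetical style codes; the fixed order doubles as the tie-break rule.
STYLES = ("V", "A", "R", "K")


def dominant_style(answers):
    """Return the style chosen most often across the questionnaire items."""
    if not answers:
        raise ValueError("no answers given")
    unknown = set(answers) - set(STYLES)
    if unknown:
        raise ValueError(f"unexpected style codes: {sorted(unknown)}")
    counts = Counter(answers)
    # max() keeps the first maximal element, so ties resolve in STYLES order.
    return max(STYLES, key=lambda style: counts[style])


# 16 made-up responses, one style code per item.
answers = ["V"] * 6 + ["A"] * 4 + ["R"] * 3 + ["K"] * 3
print(dominant_style(answers))
```

A real deployment might keep multimodal profiles instead of forcing a single style; the single-style rule here is only one possible way to pick a content path.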

Reliability and validity of the VARK questionnaire

For reliability analysis, Cronbach's alpha was used to evaluate internal consistency. Internal consistency was calculated from the correlation of each item with the factor to which it belongs and the correlation among the other factors. Values of 0.70 and above are normally recognized as indicating high reliability (Hinton et al., 2014). The Cronbach's alpha coefficient for the VARK questionnaire was 0.83, indicating that the questionnaire was reliable and suitable for further research.

Students' engagement scale

The engagement scale was developed after a review of the literature on the topic of student engagement. The Dixson scale was used to measure student engagement. The scale consisted of four major factors (skills, participation/interaction, performance, emotional). The author adapted the original Dixson scale in the following steps. The Dixson scale, which consisted of 48 statements, was translated into Arabic and adapted by the author. After consultation with experts, the instrument was reduced to 27 items to suit the university learning environment. The scale is rated on a 5-point scale.

The final version of the engagement scale comprised four factors. Skills engagement included ten items on keeping up with and reading instructional materials and exerting effort. Participation/interaction engagement included five items measuring having fun and regularly engaging in group discussion. Performance engagement included five items measuring test performance and receiving a successful score. Emotional engagement included seven items on whether or not the course was interesting. Students can access and respond to the engagement scale at the following link: http://bit.ly/2PXGvvD . Consequently, the objective of the scale is to measure common first-year students' possession of the basic engagement factors before and after instruction with adaptive e-learning compared to conventional e-learning.
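The structure of the 27-item scale can be sketched as a per-factor scoring routine. The item counts (10, 5, 5, 7) and the 5-point range come from the text; the assumption that items are stored contiguously in factor order, the use of the mean as the subscale score, and all names in the code are hypothetical.

```python
from statistics import mean

# Item counts per factor as reported for the final scale (27 items in total).
FACTOR_SIZES = {"skills": 10, "participation": 5, "performance": 5, "emotional": 7}


def subscale_means(ratings):
    """Average one student's 5-point ratings within each engagement factor."""
    expected = sum(FACTOR_SIZES.values())
    if len(ratings) != expected:
        raise ValueError(f"expected {expected} ratings, got {len(ratings)}")
    if any(not 1 <= rating <= 5 for rating in ratings):
        raise ValueError("ratings must lie on the 1-5 scale")
    scores = {}
    start = 0
    # Assumes items are ordered factor by factor (an assumption, see lead-in).
    for factor, size in FACTOR_SIZES.items():
        scores[factor] = mean(ratings[start:start + size])
        start += size
    return scores


# Made-up ratings for one student.
ratings = [5] * 10 + [4] * 5 + [3] * 5 + [2] * 7
print(subscale_means(ratings))
```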

Reliability and validity of the engagement scale

The alpha coefficients of the scale factor scores were calculated. All four subscales have a strong degree of internal consistency (0.80–0.87), indicating strong reliability. The overall reliability of the instruments used in this study was calculated using Cronbach's alpha, with an alpha value of 0.81, meaning that the instruments were reliable. The instruments used in this research demonstrated strong validity and reliability, allowing for an accurate assessment of students' engagement in learning. The scale was applied to a pilot sample of 20 students, not including the experimental sample. In addition, the instrument had correlation coefficients of 0.74–0.82, indicating a degree of validity that enables the instrument's use. Table 2 shows the correlation coefficient and Cronbach's alpha based on the interaction scale.

In addition, to verify content validity, the scale was submitted to specialists for their views on the clarity of the linguistic formulation and its suitability for measuring students' engagement, and for any modifications they deemed appropriate.

Research procedures

To check the homogeneity and equivalence of the two groups, the first hypothesis was examined: "There is no statistically significant difference between the students' mean scores of the experimental group that was exposed to the adaptive e-learning environment and the scores of the control group that was exposed to the conventional e-learning environment in the pre-application of the students' engagement scale." The author applied the engagement scale to both groups beforehand, and the pre-application scores were examined to verify the equivalence of the two groups (experimental and control) in terms of students' engagement.

The t-test of independent samples was calculated for the engagement scale to confirm the homogeneity of the two classes before the experiment. The t-values were not significant at the level of significance = 0.05, meaning that the two groups were homogeneous in terms of students' engagement scale before the experiment.

The findings presented in Table 3 showed no significant difference in mean scores (p > 0.05) between the experimental and control groups in engagement as a whole or in each student engagement factor separately. The two classes were therefore similar before the start of the research experiment.
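The equivalence check rests on an independent-samples t-test. A minimal sketch of the pooled-variance (Student) version follows; whether the authors used the pooled or the Welch variant is not stated, so the pooled form is an assumption, and the function name and toy scores are illustrative only. The resulting statistic is compared with a two-tailed critical value for the given degrees of freedom (roughly 1.98 at the 0.05 level for df = 116, the value implied by two groups totalling 118 students).

```python
from math import sqrt
from statistics import mean, variance


def pooled_t(group_a, group_b):
    """Return (t statistic, degrees of freedom) for a pooled-variance t-test."""
    n_a, n_b = len(group_a), len(group_b)
    if n_a < 2 or n_b < 2:
        raise ValueError("each group needs at least two scores")
    df = n_a + n_b - 2
    pooled_var = ((n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)) / df
    standard_error = sqrt(pooled_var * (1 / n_a + 1 / n_b))
    if standard_error == 0:
        raise ValueError("both groups have zero variance")
    return (mean(group_a) - mean(group_b)) / standard_error, df


# Toy pre-test scores (not the study's data).
control = [1, 2, 3, 4, 5]
experimental = [2, 3, 4, 5, 6]
t_stat, df = pooled_t(control, experimental)
print(t_stat, df)
```

If the absolute t value stays below the critical value, the difference is not significant at that level, which is the outcome the paper reports for the pre-application scores.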

Learner content path in adaptive e-learning environment

The previous well-designed processes are the foundation for adaptation in e-learning environments. Entries for accommodating materials were identified, including classification by learning style: kinesthetic, auditory, visual, and R/W. The present study covered the first semester of the 2019/2020 academic year. The course was divided into modules that concentrated on various topics; eleven of the modules included the adaptive learning exercise. The exercises and quizzes were assigned to specific textbook modules. To reduce irrelevant variation, all objects of the course covered the same content, had equal learning outcomes, and were taught by the same instructor.

The students in the experimental group were asked to bring smartphones. The adaptive learning application was downloaded, and a special account was created for each student, followed by access to the designed channel through the application. The students were provided with instructions and training on how to enter the application with the appropriate default element of the developed learning objects. The control group used the variety of instructional materials in the same course.

In this adaptive e-course, students in the experimental group are presented with a questionnaire and asked to answer its questions via the developed mobile app. Each question offers four choices. The correct answer is shown with the results of the students' responses, but the learning module is marked as incomplete. If a student chooses to respond to a question, the correct answer is shown immediately, regardless of the student's response.