- school Campus Bookshelves

- menu_book Bookshelves

- perm_media Learning Objects

- login Login

- how_to_reg Request Instructor Account

- hub Instructor Commons

Margin Size

- Download Page (PDF)

- Download Full Book (PDF)

- Periodic Table

- Physics Constants

- Scientific Calculator

- Reference & Cite

- Tools expand_more

- Readability

selected template will load here

This action is not available.

8.1: Samples, Populations and Sampling

- Last updated

- Save as PDF

- Page ID 36115

- Danielle Navarro

- University of New South Wales

\( \newcommand{\vecs}[1]{\overset { \scriptstyle \rightharpoonup} {\mathbf{#1}} } \)

\( \newcommand{\vecd}[1]{\overset{-\!-\!\rightharpoonup}{\vphantom{a}\smash {#1}}} \)

\( \newcommand{\id}{\mathrm{id}}\) \( \newcommand{\Span}{\mathrm{span}}\)

( \newcommand{\kernel}{\mathrm{null}\,}\) \( \newcommand{\range}{\mathrm{range}\,}\)

\( \newcommand{\RealPart}{\mathrm{Re}}\) \( \newcommand{\ImaginaryPart}{\mathrm{Im}}\)

\( \newcommand{\Argument}{\mathrm{Arg}}\) \( \newcommand{\norm}[1]{\| #1 \|}\)

\( \newcommand{\inner}[2]{\langle #1, #2 \rangle}\)

\( \newcommand{\Span}{\mathrm{span}}\)

\( \newcommand{\id}{\mathrm{id}}\)

\( \newcommand{\kernel}{\mathrm{null}\,}\)

\( \newcommand{\range}{\mathrm{range}\,}\)

\( \newcommand{\RealPart}{\mathrm{Re}}\)

\( \newcommand{\ImaginaryPart}{\mathrm{Im}}\)

\( \newcommand{\Argument}{\mathrm{Arg}}\)

\( \newcommand{\norm}[1]{\| #1 \|}\)

\( \newcommand{\Span}{\mathrm{span}}\) \( \newcommand{\AA}{\unicode[.8,0]{x212B}}\)

\( \newcommand{\vectorA}[1]{\vec{#1}} % arrow\)

\( \newcommand{\vectorAt}[1]{\vec{\text{#1}}} % arrow\)

\( \newcommand{\vectorB}[1]{\overset { \scriptstyle \rightharpoonup} {\mathbf{#1}} } \)

\( \newcommand{\vectorC}[1]{\textbf{#1}} \)

\( \newcommand{\vectorD}[1]{\overrightarrow{#1}} \)

\( \newcommand{\vectorDt}[1]{\overrightarrow{\text{#1}}} \)

\( \newcommand{\vectE}[1]{\overset{-\!-\!\rightharpoonup}{\vphantom{a}\smash{\mathbf {#1}}}} \)

In the prelude to Part I discussed the riddle of induction, and highlighted the fact that all learning requires you to make assumptions. Accepting that this is true, our first task to come up with some fairly general assumptions about data that make sense. This is where sampling theory comes in. If probability theory is the foundations upon which all statistical theory builds, sampling theory is the frame around which you can build the rest of the house. Sampling theory plays a huge role in specifying the assumptions upon which your statistical inferences rely. And in order to talk about “making inferences” the way statisticians think about it, we need to be a bit more explicit about what it is that we’re drawing inferences from (the sample) and what it is that we’re drawing inferences about (the population).

In almost every situation of interest, what we have available to us as researchers is a sample of data. We might have run experiment with some number of participants; a polling company might have phoned some number of people to ask questions about voting intentions; etc. Regardless: the data set available to us is finite, and incomplete. We can’t possibly get every person in the world to do our experiment; a polling company doesn’t have the time or the money to ring up every voter in the country etc. In our earlier discussion of descriptive statistics (Chapter 5, this sample was the only thing we were interested in. Our only goal was to find ways of describing, summarising and graphing that sample. This is about to change.

Defining a population

A sample is a concrete thing. You can open up a data file, and there’s the data from your sample. A population , on the other hand, is a more abstract idea. It refers to the set of all possible people, or all possible observations, that you want to draw conclusions about, and is generally much bigger than the sample. In an ideal world, the researcher would begin the study with a clear idea of what the population of interest is, since the process of designing a study and testing hypotheses about the data that it produces does depend on the population about which you want to make statements. However, that doesn’t always happen in practice: usually the researcher has a fairly vague idea of what the population is and designs the study as best he/she can on that basis.

Sometimes it’s easy to state the population of interest. For instance, in the “polling company” example that opened the chapter, the population consisted of all voters enrolled at the a time of the study – millions of people. The sample was a set of 1000 people who all belong to that population. In most situations the situation is much less simple. In a typical a psychological experiment, determining the population of interest is a bit more complicated. Suppose I run an experiment using 100 undergraduate students as my participants. My goal, as a cognitive scientist, is to try to learn something about how the mind works. So, which of the following would count as “the population”:

- All of the undergraduate psychology students at the University of Adelaide?

- Undergraduate psychology students in general, anywhere in the world?

- Australians currently living?

- Australians of similar ages to my sample?

- Anyone currently alive?

- Any human being, past, present or future?

- Any biological organism with a sufficient degree of intelligence operating in a terrestrial environment?

- Any intelligent being?

Each of these defines a real group of mind-possessing entities, all of which might be of interest to me as a cognitive scientist, and it’s not at all clear which one ought to be the true population of interest. As another example, consider the Wellesley-Croker game that we discussed in the prelude. The sample here is a specific sequence of 12 wins and 0 losses for Wellesley. What is the population?

- All outcomes until Wellesley and Croker arrived at their destination?

- All outcomes if Wellesley and Croker had played the game for the rest of their lives?

- All outcomes if Wellseley and Croker lived forever and played the game until the world ran out of hills?

- All outcomes if we created an infinite set of parallel universes and the Wellesely/Croker pair made guesses about the same 12 hills in each universe?

Again, it’s not obvious what the population is.

Irrespective of how I define the population, the critical point is that the sample is a subset of the population, and our goal is to use our knowledge of the sample to draw inferences about the properties of the population. The relationship between the two depends on the procedure by which the sample was selected. This procedure is referred to as a sampling method , and it is important to understand why it matters.

To keep things simple, let’s imagine that we have a bag containing 10 chips. Each chip has a unique letter printed on it, so we can distinguish between the 10 chips. The chips come in two colours, black and white. This set of chips is the population of interest, and it is depicted graphically on the left of Figure 10.1. As you can see from looking at the picture, there are 4 black chips and 6 white chips, but of course in real life we wouldn’t know that unless we looked in the bag. Now imagine you run the following “experiment”: you shake up the bag, close your eyes, and pull out 4 chips without putting any of them back into the bag. First out comes the a chip (black), then the c chip (white), then j (white) and then finally b (black). If you wanted, you could then put all the chips back in the bag and repeat the experiment, as depicted on the right hand side of Figure 10.1. Each time you get different results, but the procedure is identical in each case. The fact that the same procedure can lead to different results each time, we refer to it as a random process. 147 However, because we shook the bag before pulling any chips out, it seems reasonable to think that every chip has the same chance of being selected. A procedure in which every member of the population has the same chance of being selected is called a simple random sample . The fact that we did not put the chips back in the bag after pulling them out means that you can’t observe the same thing twice, and in such cases the observations are said to have been sampled without replacement .

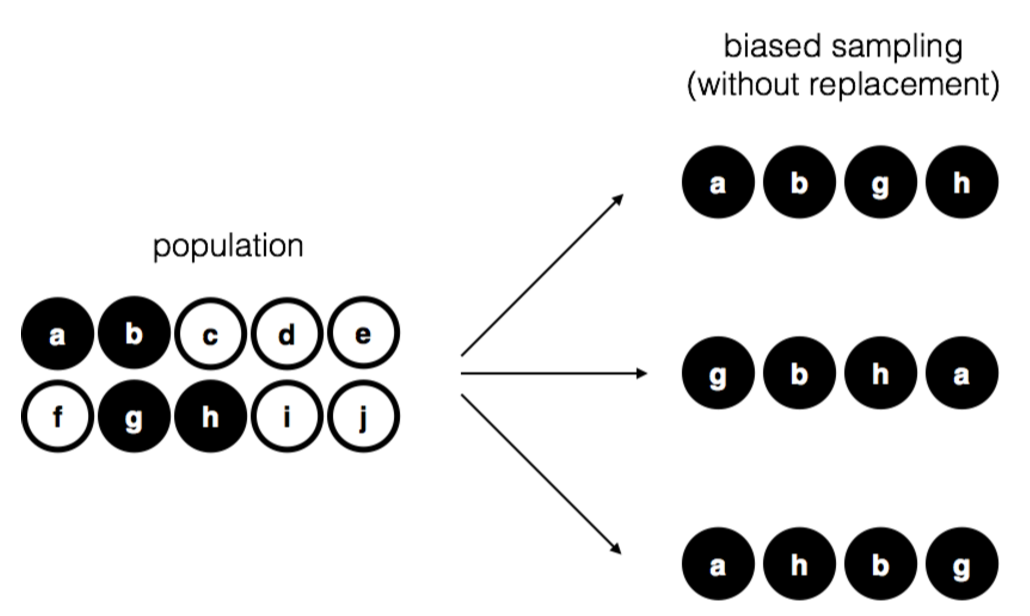

To help make sure you understand the importance of the sampling procedure, consider an alternative way in which the experiment could have been run. Suppose that my 5-year old son had opened the bag, and decided to pull out four black chips without putting any of them back in the bag. This biased sampling scheme is depicted in Figure 10.2. Now consider the evidentiary value of seeing 4 black chips and 0 white chips. Clearly, it depends on the sampling scheme, does it not? If you know that the sampling scheme is biased to select only black chips, then a sample that consists of only black chips doesn’t tell you very much about the population! For this reason, statisticians really like it when a data set can be considered a simple random sample, because it makes the data analysis much easier.

A third procedure is worth mentioning. This time around we close our eyes, shake the bag, and pull out a chip. This time, however, we record the observation and then put the chip back in the bag. Again we close our eyes, shake the bag, and pull out a chip. We then repeat this procedure until we have 4 chips. Data sets generated in this way are still simple random samples, but because we put the chips back in the bag immediately after drawing them it is referred to as a sample with replacement . The difference between this situation and the first one is that it is possible to observe the same population member multiple times, as illustrated in Figure 10.3.

In my experience, most psychology experiments tend to be sampling without replacement, because the same person is not allowed to participate in the experiment twice. However, most statistical theory is based on the assumption that the data arise from a simple random sample with replacement. In real life, this very rarely matters. If the population of interest is large (e.g., has more than 10 entities!) the difference between sampling with- and without- replacement is too small to be concerned with. The difference between simple random samples and biased samples, on the other hand, is not such an easy thing to dismiss.

Most samples are not simple random samples

As you can see from looking at the list of possible populations that I showed above, it is almost impossible to obtain a simple random sample from most populations of interest. When I run experiments, I’d consider it a minor miracle if my participants turned out to be a random sampling of the undergraduate psychology students at Adelaide university, even though this is by far the narrowest population that I might want to generalise to. A thorough discussion of other types of sampling schemes is beyond the scope of this book, but to give you a sense of what’s out there I’ll list a few of the more important ones:

- Stratified sampling . Suppose your population is (or can be) divided into several different subpopulations, or strata . Perhaps you’re running a study at several different sites, for example. Instead of trying to sample randomly from the population as a whole, you instead try to collect a separate random sample from each of the strata. Stratified sampling is sometimes easier to do than simple random sampling, especially when the population is already divided into the distinct strata. It can also be more efficient that simple random sampling, especially when some of the subpopulations are rare. For instance, when studying schizophrenia it would be much better to divide the population into two 148 strata (schizophrenic and not-schizophrenic), and then sample an equal number of people from each group. If you selected people randomly, you would get so few schizophrenic people in the sample that your study would be useless. This specific kind of of stratified sampling is referred to as oversampling because it makes a deliberate attempt to over-represent rare groups.

- Snowball sampling is a technique that is especially useful when sampling from a “hidden” or hard to access population, and is especially common in social sciences. For instance, suppose the researchers want to conduct an opinion poll among transgender people. The research team might only have contact details for a few trans folks, so the survey starts by asking them to participate (stage 1). At the end of the survey, the participants are asked to provide contact details for other people who might want to participate. In stage 2, those new contacts are surveyed. The process continues until the researchers have sufficient data. The big advantage to snowball sampling is that it gets you data in situations that might otherwise be impossible to get any. On the statistical side, the main disadvantage is that the sample is highly non-random, and non-random in ways that are difficult to address. On the real life side, the disadvantage is that the procedure can be unethical if not handled well, because hidden populations are often hidden for a reason. I chose transgender people as an example here to highlight this: if you weren’t careful you might end up outing people who don’t want to be outed (very, very bad form), and even if you don’t make that mistake it can still be intrusive to use people’s social networks to study them. It’s certainly very hard to get people’s informed consent before contacting them, yet in many cases the simple act of contacting them and saying “hey we want to study you” can be hurtful. Social networks are complex things, and just because you can use them to get data doesn’t always mean you should.

- Convenience sampling is more or less what it sounds like. The samples are chosen in a way that is convenient to the researcher, and not selected at random from the population of interest. Snowball sampling is one type of convenience sampling, but there are many others. A common example in psychology are studies that rely on undergraduate psychology students. These samples are generally non-random in two respects: firstly, reliance on undergraduate psychology students automatically means that your data are restricted to a single subpopulation. Secondly, the students usually get to pick which studies they participate in, so the sample is a self selected subset of psychology students not a randomly selected subset. In real life, most studies are convenience samples of one form or another. This is sometimes a severe limitation, but not always.

much does it matter if you don’t have a simple random sample?

Okay, so real world data collection tends not to involve nice simple random samples. Does that matter? A little thought should make it clear to you that it can matter if your data are not a simple random sample: just think about the difference between Figures 10.1 and 10.2. However, it’s not quite as bad as it sounds. Some types of biased samples are entirely unproblematic. For instance, when using a stratified sampling technique you actually know what the bias is because you created it deliberately, often to increase the effectiveness of your study, and there are statistical techniques that you can use to adjust for the biases you’ve introduced (not covered in this book!). So in those situations it’s not a problem.

More generally though, it’s important to remember that random sampling is a means to an end, not the end in itself. Let’s assume you’ve relied on a convenience sample, and as such you can assume it’s biased. A bias in your sampling method is only a problem if it causes you to draw the wrong conclusions. When viewed from that perspective, I’d argue that we don’t need the sample to be randomly generated in every respect: we only need it to be random with respect to the psychologically-relevant phenomenon of interest. Suppose I’m doing a study looking at working memory capacity. In study 1, I actually have the ability to sample randomly from all human beings currently alive, with one exception: I can only sample people born on a Monday. In study 2, I am able to sample randomly from the Australian population. I want to generalise my results to the population of all living humans. Which study is better? The answer, obviously, is study 1. Why? Because we have no reason to think that being “born on a Monday” has any interesting relationship to working memory capacity. In contrast, I can think of several reasons why “being Australian” might matter. Australia is a wealthy, industrialised country with a very well-developed education system. People growing up in that system will have had life experiences much more similar to the experiences of the people who designed the tests for working memory capacity. This shared experience might easily translate into similar beliefs about how to “take a test”, a shared assumption about how psychological experimentation works, and so on. These things might actually matter. For instance, “test taking” style might have taught the Australian participants how to direct their attention exclusively on fairly abstract test materials relative to people that haven’t grown up in a similar environment; leading to a misleading picture of what working memory capacity is.

There are two points hidden in this discussion. Firstly, when designing your own studies, it’s important to think about what population you care about, and try hard to sample in a way that is appropriate to that population. In practice, you’re usually forced to put up with a “sample of convenience” (e.g., psychology lecturers sample psychology students because that’s the least expensive way to collect data, and our coffers aren’t exactly overflowing with gold), but if so you should at least spend some time thinking about what the dangers of this practice might be.

Secondly, if you’re going to criticise someone else’s study because they’ve used a sample of convenience rather than laboriously sampling randomly from the entire human population, at least have the courtesy to offer a specific theory as to how this might have distorted the results. Remember, everyone in science is aware of this issue, and does what they can to alleviate it. Merely pointing out that “the study only included people from group BLAH” is entirely unhelpful, and borders on being insulting to the researchers, who are of course aware of the issue. They just don’t happen to be in possession of the infinite supply of time and money required to construct the perfect sample. In short, if you want to offer a responsible critique of the sampling process, then be helpful . Rehashing the blindingly obvious truisms that I’ve been rambling on about in this section isn’t helpful.

Population parameters and sample statistics

Okay. Setting aside the thorny methodological issues associated with obtaining a random sample and my rather unfortunate tendency to rant about lazy methodological criticism, let’s consider a slightly different issue. Up to this point we have been talking about populations the way a scientist might. To a psychologist, a population might be a group of people. To an ecologist, a population might be a group of bears. In most cases the populations that scientists care about are concrete things that actually exist in the real world. Statisticians, however, are a funny lot. On the one hand, they are interested in real world data and real science in the same way that scientists are. On the other hand, they also operate in the realm of pure abstraction in the way that mathematicians do. As a consequence, statistical theory tends to be a bit abstract in how a population is defined. In much the same way that psychological researchers operationalise our abstract theoretical ideas in terms of concrete measurements (Section 2.1, statisticians operationalise the concept of a “population” in terms of mathematical objects that they know how to work with. You’ve already come across these objects in Chapter 9: they’re called probability distributions.

The idea is quite simple. Let’s say we’re talking about IQ scores. To a psychologist, the population of interest is a group of actual humans who have IQ scores. A statistician “simplifies” this by operationally defining the population as the probability distribution depicted in Figure ?? . IQ tests are designed so that the average IQ is 100, the standard deviation of IQ scores is 15, and the distribution of IQ scores is normal. These values are referred to as the population parameters because they are characteristics of the entire population. That is, we say that the population mean μ is 100, and the population standard deviation σ is 15.

Now suppose I run an experiment. I select 100 people at random and administer an IQ test, giving me a simple random sample from the population. My sample would consist of a collection of numbers like this:

Each of these IQ scores is sampled from a normal distribution with mean 100 and standard deviation 15. So if I plot a histogram of the sample, I get something like the one shown in Figure 10.4b. As you can see, the histogram is roughly the right shape, but it’s a very crude approximation to the true population distribution shown in Figure 10.4a. When I calculate the mean of my sample, I get a number that is fairly close to the population mean 100 but not identical. In this case, it turns out that the people in my sample have a mean IQ of 98.5, and the standard deviation of their IQ scores is 15.9. These sample statistics are properties of my data set, and although they are fairly similar to the true population values, they are not the same. In general, sample statistics are the things you can calculate from your data set, and the population parameters are the things you want to learn about. Later on in this chapter I’ll talk about how you can estimate population parameters using your sample statistics (Section 10.4 and how to work out how confident you are in your estimates (Section 10.5 but before we get to that there’s a few more ideas in sampling theory that you need to know about.

Introduction to Research Methods

7 samples and populations.

So you’ve developed your research question, figured out how you’re going to measure whatever you want to study, and have your survey or interviews ready to go. Now all your need is other people to become your data.

You might say ‘easy!’, there’s people all around you. You have a big family tree and surely them and their friends would have happy to take your survey. And then there’s your friends and people you’re in class with. Finding people is way easier than writing the interview questions or developing the survey. That reaction might be a strawman, maybe you’ve come to the conclusion none of this is easy. For your data to be valuable, you not only have to ask the right questions, you have to ask the right people. The “right people” aren’t the best or the smartest people, the right people are driven by what your study is trying to answer and the method you’re using to answer it.

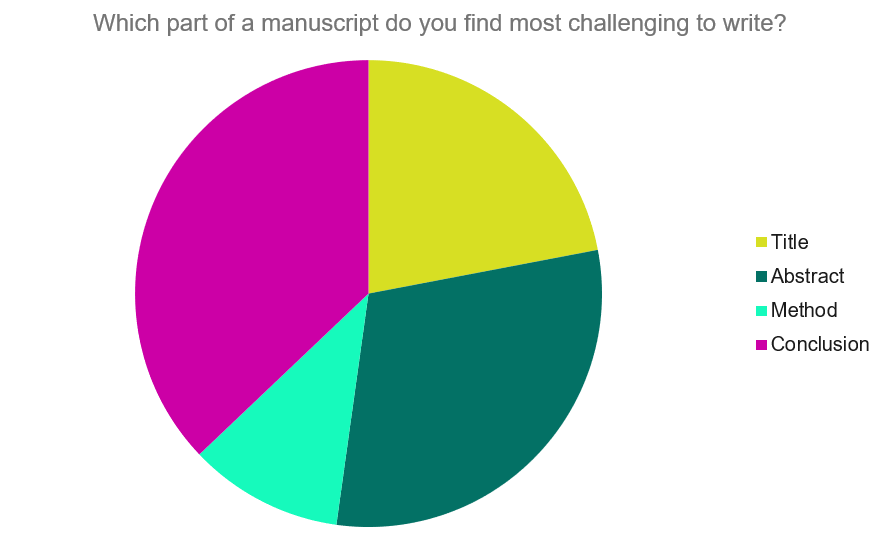

Remember way back in chapter 2 when we looked at this chart and discussed the differences between qualitative and quantitative data.

One of the biggest differences between quantitative and qualitative data was whether we wanted to be able to explain something for a lot of people (what percentage of residents in Oklahoma support legalizing marijuana?) versus explaining the reasons for those opinions (why do some people support legalizing marijuana and others not?). The underlying differences there is whether our goal is explain something about everyone, or whether we’re content to explain it about just our respondents.

‘Everyone’ is called the population . The population in research is whatever group the research is trying to answer questions about. The population could be everyone on planet Earth, everyone in the United States, everyone in rural counties of Iowa, everyone at your university, and on and on. It is simply everyone within the unit you are intending to study.

In order to study the population, we typically take a sample or a subset. A sample is simply a smaller number of people from the population that are studied, which we can use to then understand the characteristics of the population based on that subset. That’s why a poll of 1300 likely voters can be used to guess at who will win your states Governor race. It isn’t perfect, and we’ll talk about the math behind all of it in a later chapter, but for now we’ll just focus on the different types of samples you might use to study a population with a survey.

If correctly sampled, we can use the sample to generalize information we get to the population. Generalizability , which we defined earlier, means we can assume the responses of people to our study match the responses everyone would have given us. We can only do that if the sample is representative of the population, meaning that they are alike on important characteristics such as race, gender, age, education. If something makes a large difference in people’s views on a topic in your research and your sample is not balanced, you’ll get inaccurate results.

Generalizability is more of a concern with surveys than with interviews. The goal of a survey is to explain something about people beyond the sample you get responses from. You’ll never see a news headline saying that “53% of 1250 Americans that responded to a poll approve of the President”. It’s only worth asking those 1250 people if we can assume the rest of the United States feels the same way overall. With interviews though we’re looking for depth from their responses, and so we are less hopefully that the 15 people we talk to will exactly match the American population. That doesn’t mean the data we collect from interviews doesn’t have value, it just has different uses.

There are two broad types of samples, with several different techniques clustered below those. Probability sampling is associated with surveys, and non-probability sampling is often used when conducting interviews. We’ll first describe probability samples, before discussing the non-probability options.

The type of sampling you’ll use will be based on the type of research you’re intending to do. There’s no sample that’s right or wrong, they can just be more or less appropriate for the question you’re trying to answer. And if you use a less appropriate sampling strategy, the answer you get through your research is less likely to be accurate.

7.1 Types of Probability Samples

So we just hinted at the idea that depending on the sample you use, you can generalize the data you collect from the sample to the population. That will depend though on whether your sample represents the population. To ensure that your sample is representative of the population, you will want to use a probability sample. A representative sample refers to whether the characteristics (race, age, income, education, etc) of the sample are the same as the population. Probability sampling is a sampling technique in which every individual in the population has an equal chance of being selected as a subject for the research.

There are several different types of probability samples you can use, depending on the resources you have available.

Let’s start with a simple random sample . In order to use a simple random sample all you have to do is take everyone in your population, throw them in a hat (not literally, you can just throw their names in a hat), and choose the number of names you want to use for your sample. By drawing blindly, you can eliminate human bias in constructing the sample and your sample should represent the population from which it is being taken.

However, a simple random sample isn’t quite that easy to build. The biggest issue is that you have to know who everyone is in order to randomly select them. What that requires is a sampling frame , a list of all residents in the population. But we don’t always have that. There is no list of residents of New York City (or any other city). Organizations that do have such a list wont just give it away. Try to ask your university for a list and contact information of everyone at your school so you can do a survey? They wont give it to you, for privacy reasons. It’s actually harder to think of popultions you could easily develop a sample frame for than those you can’t. If you can get or build a sampling frame, the work of a simple random sample is fairly simple, but that’s the biggest challenge.

Most of the time a true sampling frame is impossible to acquire, so researcher have to settle for something approximating a complete list. Earlier generations of researchers could use the random dial method to contact a random sample of Americans, because every household had a single phone. To use it you just pick up the phone and dial random numbers. Assuming the numbers are actually random, anyone might be called. That method actually worked somewhat well, until people stopped having home phone numbers and eventually stopped answering the phone. It’s a fun mental exercise to think about how you would go about creating a sampling frame for different groups though; think through where you would look to find a list of everyone in these groups:

Plumbers Recent first-time fathers Members of gyms

The best way to get an actual sampling frame is likely to purchase one from a private company that buys data on people from all the different websites we use.

Let’s say you do have a sampling frame though. For instance, you might be hired to do a survey of members of the Republican Party in the state of Utah to understand their political priorities this year, and the organization could give you a list of their members because they’ve hired you to do the reserach. One method of constructing a simple random sample would be to assign each name on the list a number, and then produce a list of random numbers. Once you’ve matched the random numbers to the list, you’ve got your sample. See the example using the list of 20 names below

and the list of 5 random numbers.

Systematic sampling is similar to simple random sampling in that it begins with a list of the population, but instead of choosing random numbers one would select every kth name on the list. What the heck is a kth? K just refers to how far apart the names are on the list you’re selecting. So if you want to sample one-tenth of the population, you’d select every tenth name. In order to know the k for your study you need to know your sample size (say 1000) and the size of the population (75000). You can divide the size of the population by the sample (75000/1000), which will produce your k (750). As long as the list does not contain any hidden order, this sampling method is as good as the random sampling method, but its only advantage over the random sampling technique is simplicity. If we used the same list as above and wanted to survey 1/5th of the population, we’d include 4 of the names on the list. It’s important with systematic samples to randomize the starting point in the list, otherwise people with A names will be oversampled. If we started with the 3rd name, we’d select Annabelle Frye, Cristobal Padilla, Jennie Vang, and Virginia Guzman, as shown below. So in order to use a systematic sample, we need three things, the population size (denoted as N ), the sample size we want ( n ) and k , which we calculate by dividing the population by the sample).

N= 20 (Population Size) n= 4 (Sample Size) k= 5 {20/4 (kth element) selection interval}

We can also use a stratified sample , but that requires knowing more about the population than just their names. A stratified sample divides the study population into relevant subgroups, and then draws a sample from each subgroup. Stratified sampling can be used if you’re very concerned about ensuring balance in the sample or there may be a problem of underrepresentation among certain groups when responses are received. Not everyone in your sample is equally likely to answer a survey. Say for instance we’re trying to predict who will win an election in a county with three cities. In city A there are 1 million college students, in city B there are 2 million families, and in City C there are 3 million retirees. You know that retirees are more likely than busy college students or parents to respond to a poll. So you break the sample into three parts, ensuring that you get 100 responses from City A, 200 from City B, and 300 from City C, so the three cities would match the population. A stratified sample provides the researcher control over the subgroups that are included in the sample, whereas simple random sampling does not guarantee that any one type of person will be included in the final sample. A disadvantage is that it is more complex to organize and analyze the results compared to simple random sampling.

Cluster sampling is an approach that begins by sampling groups (or clusters) of population elements and then selects elements from within those groups. A researcher would use cluster sampling if getting access to elements in an entrie population is too challenging. For instance, a study on students in schools would probably benefit from randomly selecting from all students at the 36 elementary schools in a fictional city. But getting contact information for all students would be very difficult. So the researcher might work with principals at several schools and survey those students. The researcher would need to ensure that the students surveyed at the schools are similar to students throughout the entire city, and greater access and participation within each cluster may make that possible.

The image below shows how this can work, although the example is oversimplified. Say we have 12 students that are in 6 classrooms. The school is in total 1/4th green (3/12), 1/4th yellow (3/12), and half blue (6/12). By selecting the right clusters from within the school our sample can be representative of the entire school, assuming these colors are the only significant difference between the students. In the real world, you’d want to match the clusters and population based on race, gender, age, income, etc. And I should point out that this is an overly simplified example. What if 5/12s of the school was yellow and 1/12th was green, how would I get the right proportions? I couldn’t, but you’d do the best you could. You still wouldn’t want 4 yellows in the sample, you’d just try to approximiate the population characteristics as best you can.

7.2 Actually Doing a Survey

All of that probably sounds pretty complicated. Identifying your population shouldn’t be too difficult, but how would you ever get a sampling frame? And then actually identifying who to include… It’s probably a bit overwhelming and makes doing a good survey sound impossible.

Researchers using surveys aren’t superhuman though. Often times, they use a little help. Because surveys are really valuable, and because researchers rely on them pretty often, there has been substantial growth in companies that can help to get one’s survey to its intended audience.

One popular resource is Amazon’s Mechanical Turk (more commonly known as MTurk). MTurk is at its most basic a website where workers look for jobs (called hits) to be listed by employers, and choose whether to do the task or not for a set reward. MTurk has grown over the last decade to be a common source of survey participants in the social sciences, in part because hiring workers costs very little (you can get some surveys completed for penny’s). That means you can get your survey completed with a small grant ($1-2k at the low end) and get the data back in a few hours. Really, it’s a quick and easy way to run a survey.

However, the workers aren’t perfectly representative of the average American. For instance, researchers have found that MTurk respondents are younger, better educated, and earn less than the average American.

One way to get around that issue, which can be used with MTurk or any survey, is to weight the responses. Because with MTurk you’ll get fewer responses from older, less educated, and richer Americans, those responses you do give you want to count for more to make your sample more representative of the population. Oversimplified example incoming!

Imagine you’re setting up a pizza party for your class. There are 9 people in your class, 4 men and 5 women. You only got 4 responses from the men, and 3 from the women. All 4 men wanted peperoni pizza, while the 3 women want a combination. Pepperoni wins right, 4 to 3? Not if you assume that the people that didn’t respond are the same as the ones that did. If you weight the responses to match the population (the full class of 9), a combination pizza is the winner.

Because you know the population of women is 5, you can weight the 3 responses from women by 5/3 = 1.6667. If we weight (or multiply) each vote we did receive from a woman by 1.6667, each vote for a combination now equals 1.6667, meaning that the 3 votes for combination total 5. Because we received a vote from every man in the class, we just weight their votes by 1. The big assumption we have to make is that the people we didn’t hear from (the 2 women that didn’t vote) are similar to the ones we did hear from. And if we don’t get any responses from a group we don’t have anything to infer their preferences or views from.

Let’s go through a slightly more complex example, still just considering one quality about people in the class. Let’s say your class actually has 100 students, but you only received votes from 50. And, what type of pizza people voted for is mixed, but men still prefer peperoni overall, and women still prefer combination. The class is 60% female and 40% male.

We received 21 votes from women out of the 60, so we can weight their responses by 60/21 to represent the population. We got 29 votes out of the 40 for men, so their responses can be weighted by 40/29. See the math below.

53.8 votes for combination? That might seem a little odd, but weighting isn’t a perfect science. We can’t identify what a non-respondent would have said exactly, all we can do is use the responses of other similar people to make a good guess. That issue often comes up in polling, where pollsters have to guess who is going to vote in a given election in order to project who will win. And we can weight on any characteristic of a person we think will be important, alone or in combination. Modern polls weight on age, gender, voting habits, education, and more to make the results as generalizable as possible.

There’s an appendix later in this book where I walk through the actual steps of creating weights for a sample in R, if anyone actually does a survey. I intended this section to show that doing a good survey might be simpler than it seemed, but now it might sound even more difficult. A good lesson to take though is that there’s always another door to go through, another hurdle to improve your methods. Being good at research just means being constantly prepared to be given a new challenge, and being able to find another solution.

7.3 Non-Probability Sampling

Qualitative researchers’ main objective is to gain an in-depth understanding on the subject matter they are studying, rather than attempting to generalize results to the population. As such, non-probability sampling is more common because of the researchers desire to gain information not from random elements of the population, but rather from specific individuals.

Random selection is not used in nonprobability sampling. Instead, the personal judgment of the researcher determines who will be included in the sample. Typically, researchers may base their selection on availability, quotas, or other criteria. However, not all members of the population are given an equal chance to be included in the sample. This nonrandom approach results in not knowing whether the sample represents the entire population. Consequently, researchers are not able to make valid generalizations about the population.

As with probability sampling, there are several types of non-probability samples. Convenience sampling , also known as accidental or opportunity sampling, is a process of choosing a sample that is easily accessible and readily available to the researcher. Researchers tend to collect samples from convenient locations such as their place of employment, a location, school, or other close affiliation. Although this technique allows for quick and easy access to available participants, a large part of the population is excluded from the sample.

For example, researchers (particularly in psychology) often rely on research subjects that are at their universities. That is highly convenient, students are cheap to hire and readily available on campuses. However, it means the results of the study may have limited ability to predict motivations or behaviors of people that aren’t included in the sample, i.e., people outside the age of 18-22 that are going to college.

If I ask you to get find out whether people approve of the mayor or not, and tell you I want 500 people’s opinions, should you go stand in front of the local grocery store? That would be convinient, and the people coming will be random, right? Not really. If you stand outside a rural Piggly Wiggly or an urban Whole Foods, do you think you’ll see the same people? Probably not, people’s chracteristics make the more or less likely to be in those locations. This technique runs the high risk of over- or under-representation, biased results, as well as an inability to make generalizations about the larger population. As the name implies though, it is convenient.

Purposive sampling , also known as judgmental or selective sampling, refers to a method in which the researcher decides who will be selected for the sample based on who or what is relevant to the study’s purpose. The researcher must first identify a specific characteristic of the population that can best help answer the research question. Then, they can deliberately select a sample that meets that particular criterion. Typically, the sample is small with very specific experiences and perspectives. For instance, if I wanted to understand the experiences of prominent foreign-born politicians in the United States, I would purposefully build a sample of… prominent foreign-born politicians in the United States. That would exclude anyone that was born in the United States or and that wasn’t a politician, and I’d have to define what I meant by prominent. Purposive sampling is susceptible to errors in judgment by the researcher and selection bias due to a lack of random sampling, but when attempting to research small communities it can be effective.

When dealing with small and difficult to reach communities researchers sometimes use snowball samples , also known as chain referral sampling. Snowball sampling is a process in which the researcher selects an initial participant for the sample, then asks that participant to recruit or refer additional participants who have similar traits as them. The cycle continues until the needed sample size is obtained.

This technique is used when the study calls for participants who are hard to find because of a unique or rare quality or when a participant does not want to be found because they are part of a stigmatized group or behavior. Examples may include people with rare diseases, sex workers, or a child sex offenders. It would be impossible to find an accurate list of sex workers anywhere, and surveying the general population about whether that is their job will produce false responses as people will be unwilling to identify themselves. As such, a common method is to gain the trust of one individual within the community, who can then introduce you to others. It is important that the researcher builds rapport and gains trust so that participants can be comfortable contributing to the study, but that must also be balanced by mainting objectivity in the research.

Snowball sampling is a useful method for locating hard to reach populations but cannot guarantee a representative sample because each contact will be based upon your last. For instance, let’s say you’re studying illegal fight clubs in your state. Some fight clubs allow weapons in the fights, while others completely ban them; those two types of clubs never interreact because of their disagreement about whether weapons should be allowed, and there’s no overlap between them (no members in both type of club). If your initial contact is with a club that uses weapons, all of your subsequent contacts will be within that community and so you’ll never understand the differences. If you didn’t know there were two types of clubs when you started, you’ll never even know you’re only researching half of the community. As such, snowball sampling can be a necessary technique when there are no other options, but it does have limitations.

Quota Sampling is a process in which the researcher must first divide a population into mutually exclusive subgroups, similar to stratified sampling. Depending on what is relevant to the study, subgroups can be based on a known characteristic such as age, race, gender, etc. Secondly, the researcher must select a sample from each subgroup to fit their predefined quotas. Quota sampling is used for the same reason as stratified sampling, to ensure that your sample has representation of certain groups. For instance, let’s say that you’re studying sexual harassment in the workplace, and men are much more willing to discuss their experiences than women. You might choose to decide that half of your final sample will be women, and stop requesting interviews with men once you fill your quota. The core difference is that while stratified sampling chooses randomly from within the different groups, quota sampling does not. A quota sample can either be proportional or non-proportional . Proportional quota sampling refers to ensuring that the quotas in the sample match the population (if 35% of the company is female, 35% of the sample should be female). Non-proportional sampling allows you to select your own quota sizes. If you think the experiences of females with sexual harassment are more important to your research, you can include whatever percentage of females you desire.

7.4 Dangers in sampling

Now that we’ve described all the different ways that one could create a sample, we can talk more about the pitfalls of sampling. Ensuring a quality sample means asking yourself some basic questions:

- Who is in the sample?

- How were they sampled?

- Why were they sampled?

A meal is often only as good as the ingredients you use, and your data will only be as good as the sample. If you collect data from the wrong people, you’ll get the wrong answer. You’ll still get an answer, it’ll just be inaccurate. And I want to reemphasize here wrong people just refers to inappropriate for your study. If I want to study bullying in middle schools, but I only talk to people that live in a retirement home, how accurate or relevant will the information I gather be? Sure, they might have grandchildren in middle school, and they may remember their experiences. But wouldn’t my information be more relevant if I talked to students in middle school, or perhaps a mix of teachers, parents, and students? I’ll get an answer from retirees, but it wont be the one I need. The sample has to be appropriate to the research question.

Is a bigger sample always better? Not necessarily. A larger sample can be useful, but a more representative one of the population is better. That was made painfully clear when the magazine Literary Digest ran a poll to predict who would win the 1936 presidential election between Alf Landon and incumbent Franklin Roosevelt. Literary Digest had run the poll since 1916, and had been correct in predicting the outcome every time. It was the largest poll ever, and they received responses for 2.27 million people. They essentially received responses from 1 percent of the American population, while many modern polls use only 1000 responses for a much more populous country. What did they predict? They showed that Alf Landon would be the overwhelming winner, yet when the election was held Roosevelt won every state except Maine and Vermont. It was one of the most decisive victories in Presidential history.

So what went wrong for the Literary Digest? Their poll was large (gigantic!), but it wasn’t representative of likely voters. They polled their own readership, which tended to be more educated and wealthy on average, along with people on a list of those with registered automobiles and telephone users (both of which tended to be owned by the wealthy at that time). Thus, the poll largely ignored the majority of Americans, who ended up voting for Roosevelt. The Literary Digest poll is famous for being wrong, but led to significant improvements in the science of polling to avoid similar mistakes in the future. Researchers have learned a lot in the century since that mistake, even if polling and surveys still aren’t (and can’t be) perfect.

What kind of sampling strategy did Literary Digest use? Convenience, they relied on lists they had available, rather than try to ensure every American was included on their list. A representative poll of 2 million people will give you more accurate results than a representative poll of 2 thousand, but I’ll take the smaller more representative poll than a larger one that uses convenience sampling any day.

7.5 Summary

Picking the right type of sample is critical to getting an accurate answer to your reserach question. There are a lot of differnet options in how you can select the people to participate in your research, but typically only one that is both correct and possible depending on the research you’re doing. In the next chapter we’ll talk about a few other methods for conducting reseach, some that don’t include any sampling by you.

- USC Libraries

- Research Guides

Organizing Your Social Sciences Research Paper

- 9. The Conclusion

- Purpose of Guide

- Design Flaws to Avoid

- Independent and Dependent Variables

- Glossary of Research Terms

- Reading Research Effectively

- Narrowing a Topic Idea

- Broadening a Topic Idea

- Extending the Timeliness of a Topic Idea

- Academic Writing Style

- Applying Critical Thinking

- Choosing a Title

- Making an Outline

- Paragraph Development

- Research Process Video Series

- Executive Summary

- The C.A.R.S. Model

- Background Information

- The Research Problem/Question

- Theoretical Framework

- Citation Tracking

- Content Alert Services

- Evaluating Sources

- Primary Sources

- Secondary Sources

- Tiertiary Sources

- Scholarly vs. Popular Publications

- Qualitative Methods

- Quantitative Methods

- Insiderness

- Using Non-Textual Elements

- Limitations of the Study

- Common Grammar Mistakes

- Writing Concisely

- Avoiding Plagiarism

- Footnotes or Endnotes?

- Further Readings

- Generative AI and Writing

- USC Libraries Tutorials and Other Guides

- Bibliography

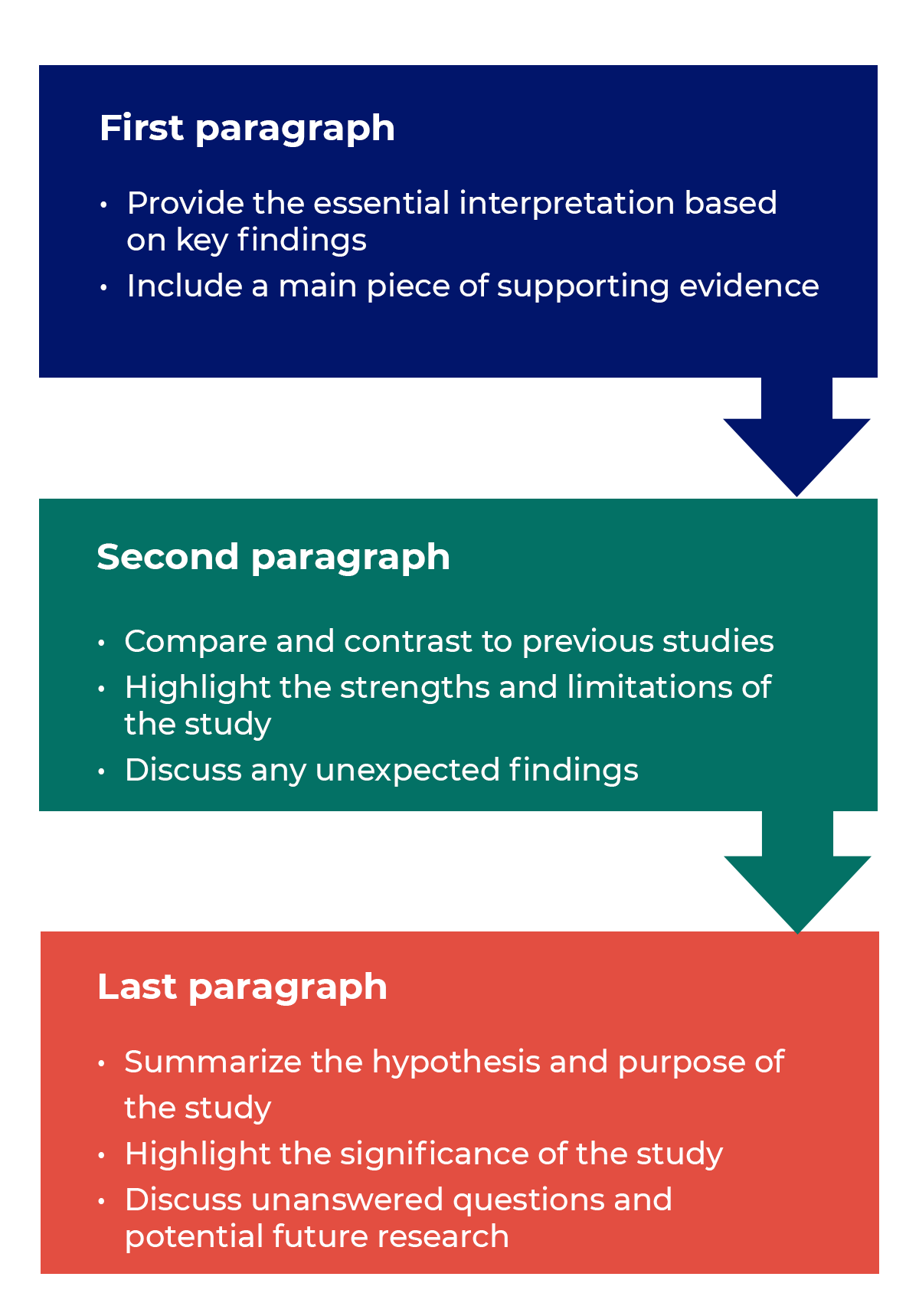

The conclusion is intended to help the reader understand why your research should matter to them after they have finished reading the paper. A conclusion is not merely a summary of the main topics covered or a re-statement of your research problem, but a synthesis of key points derived from the findings of your study and, if applicable, where you recommend new areas for future research. For most college-level research papers, two or three well-developed paragraphs is sufficient for a conclusion, although in some cases, more paragraphs may be required in describing the key findings and their significance.

Conclusions. The Writing Center. University of North Carolina; Conclusions. The Writing Lab and The OWL. Purdue University.

Importance of a Good Conclusion

A well-written conclusion provides you with important opportunities to demonstrate to the reader your understanding of the research problem. These include:

- Presenting the last word on the issues you raised in your paper . Just as the introduction gives a first impression to your reader, the conclusion offers a chance to leave a lasting impression. Do this, for example, by highlighting key findings in your analysis that advance new understanding about the research problem, that are unusual or unexpected, or that have important implications applied to practice.

- Summarizing your thoughts and conveying the larger significance of your study . The conclusion is an opportunity to succinctly re-emphasize your answer to the "So What?" question by placing the study within the context of how your research advances past research about the topic.

- Identifying how a gap in the literature has been addressed . The conclusion can be where you describe how a previously identified gap in the literature [first identified in your literature review section] has been addressed by your research and why this contribution is significant.

- Demonstrating the importance of your ideas . Don't be shy. The conclusion offers an opportunity to elaborate on the impact and significance of your findings. This is particularly important if your study approached examining the research problem from an unusual or innovative perspective.

- Introducing possible new or expanded ways of thinking about the research problem . This does not refer to introducing new information [which should be avoided], but to offer new insight and creative approaches for framing or contextualizing the research problem based on the results of your study.

Bunton, David. “The Structure of PhD Conclusion Chapters.” Journal of English for Academic Purposes 4 (July 2005): 207–224; Conclusions. The Writing Center. University of North Carolina; Kretchmer, Paul. Twelve Steps to Writing an Effective Conclusion. San Francisco Edit, 2003-2008; Conclusions. The Writing Lab and The OWL. Purdue University; Assan, Joseph. "Writing the Conclusion Chapter: The Good, the Bad and the Missing." Liverpool: Development Studies Association (2009): 1-8.

Structure and Writing Style

I. General Rules

The general function of your paper's conclusion is to restate the main argument . It reminds the reader of the strengths of your main argument(s) and reiterates the most important evidence supporting those argument(s). Do this by clearly summarizing the context, background, and necessity of pursuing the research problem you investigated in relation to an issue, controversy, or a gap found in the literature. However, make sure that your conclusion is not simply a repetitive summary of the findings. This reduces the impact of the argument(s) you have developed in your paper.

When writing the conclusion to your paper, follow these general rules:

- Present your conclusions in clear, concise language. Re-state the purpose of your study, then describe how your findings differ or support those of other studies and why [i.e., what were the unique, new, or crucial contributions your study made to the overall research about your topic?].

- Do not simply reiterate your findings or the discussion of your results. Provide a synthesis of arguments presented in the paper to show how these converge to address the research problem and the overall objectives of your study.

- Indicate opportunities for future research if you haven't already done so in the discussion section of your paper. Highlighting the need for further research provides the reader with evidence that you have an in-depth awareness of the research problem but that further investigations should take place beyond the scope of your investigation.

Consider the following points to help ensure your conclusion is presented well:

- If the argument or purpose of your paper is complex, you may need to summarize the argument for your reader.

- If, prior to your conclusion, you have not yet explained the significance of your findings or if you are proceeding inductively, use the end of your paper to describe your main points and explain their significance.

- Move from a detailed to a general level of consideration that returns the topic to the context provided by the introduction or within a new context that emerges from the data [this is opposite of the introduction, which begins with general discussion of the context and ends with a detailed description of the research problem].

The conclusion also provides a place for you to persuasively and succinctly restate the research problem, given that the reader has now been presented with all the information about the topic . Depending on the discipline you are writing in, the concluding paragraph may contain your reflections on the evidence presented. However, the nature of being introspective about the research you have conducted will depend on the topic and whether your professor wants you to express your observations in this way. If asked to think introspectively about the topics, do not delve into idle speculation. Being introspective means looking within yourself as an author to try and understand an issue more deeply, not to guess at possible outcomes or make up scenarios not supported by the evidence.

II. Developing a Compelling Conclusion

Although an effective conclusion needs to be clear and succinct, it does not need to be written passively or lack a compelling narrative. Strategies to help you move beyond merely summarizing the key points of your research paper may include any of the following:

- If your essay deals with a critical, contemporary problem, warn readers of the possible consequences of not attending to the problem proactively.

- Recommend a specific course or courses of action that, if adopted, could address a specific problem in practice or in the development of new knowledge leading to positive change.

- Cite a relevant quotation or expert opinion already noted in your paper in order to lend authority and support to the conclusion(s) you have reached [a good source would be from your literature review].

- Explain the consequences of your research in a way that elicits action or demonstrates urgency in seeking change.

- Restate a key statistic, fact, or visual image to emphasize the most important finding of your paper.

- If your discipline encourages personal reflection, illustrate your concluding point by drawing from your own life experiences.

- Return to an anecdote, an example, or a quotation that you presented in your introduction, but add further insight derived from the findings of your study; use your interpretation of results from your study to recast it in new or important ways.

- Provide a "take-home" message in the form of a succinct, declarative statement that you want the reader to remember about your study.

III. Problems to Avoid

Failure to be concise Your conclusion section should be concise and to the point. Conclusions that are too lengthy often have unnecessary information in them. The conclusion is not the place for details about your methodology or results. Although you should give a summary of what was learned from your research, this summary should be relatively brief, since the emphasis in the conclusion is on the implications, evaluations, insights, and other forms of analysis that you make. Strategies for writing concisely can be found here .

Failure to comment on larger, more significant issues In the introduction, your task was to move from the general [the field of study] to the specific [the research problem]. However, in the conclusion, your task is to move from a specific discussion [your research problem] back to a general discussion framed around the implications and significance of your findings [i.e., how your research contributes new understanding or fills an important gap in the literature]. In short, the conclusion is where you should place your research within a larger context [visualize your paper as an hourglass--start with a broad introduction and review of the literature, move to the specific analysis and discussion, conclude with a broad summary of the study's implications and significance].

Failure to reveal problems and negative results Negative aspects of the research process should never be ignored. These are problems, deficiencies, or challenges encountered during your study. They should be summarized as a way of qualifying your overall conclusions. If you encountered negative or unintended results [i.e., findings that are validated outside the research context in which they were generated], you must report them in the results section and discuss their implications in the discussion section of your paper. In the conclusion, use negative results as an opportunity to explain their possible significance and/or how they may form the basis for future research.

Failure to provide a clear summary of what was learned In order to be able to discuss how your research fits within your field of study [and possibly the world at large], you need to summarize briefly and succinctly how it contributes to new knowledge or a new understanding about the research problem. This element of your conclusion may be only a few sentences long.

Failure to match the objectives of your research Often research objectives in the social and behavioral sciences change while the research is being carried out. This is not a problem unless you forget to go back and refine the original objectives in your introduction. As these changes emerge they must be documented so that they accurately reflect what you were trying to accomplish in your research [not what you thought you might accomplish when you began].

Resist the urge to apologize If you've immersed yourself in studying the research problem, you presumably should know a good deal about it [perhaps even more than your professor!]. Nevertheless, by the time you have finished writing, you may be having some doubts about what you have produced. Repress those doubts! Don't undermine your authority as a researcher by saying something like, "This is just one approach to examining this problem; there may be other, much better approaches that...." The overall tone of your conclusion should convey confidence to the reader about the study's validity and realiability.

Assan, Joseph. "Writing the Conclusion Chapter: The Good, the Bad and the Missing." Liverpool: Development Studies Association (2009): 1-8; Concluding Paragraphs. College Writing Center at Meramec. St. Louis Community College; Conclusions. The Writing Center. University of North Carolina; Conclusions. The Writing Lab and The OWL. Purdue University; Freedman, Leora and Jerry Plotnick. Introductions and Conclusions. The Lab Report. University College Writing Centre. University of Toronto; Leibensperger, Summer. Draft Your Conclusion. Academic Center, the University of Houston-Victoria, 2003; Make Your Last Words Count. The Writer’s Handbook. Writing Center. University of Wisconsin Madison; Miquel, Fuster-Marquez and Carmen Gregori-Signes. “Chapter Six: ‘Last but Not Least:’ Writing the Conclusion of Your Paper.” In Writing an Applied Linguistics Thesis or Dissertation: A Guide to Presenting Empirical Research . John Bitchener, editor. (Basingstoke,UK: Palgrave Macmillan, 2010), pp. 93-105; Tips for Writing a Good Conclusion. Writing@CSU. Colorado State University; Kretchmer, Paul. Twelve Steps to Writing an Effective Conclusion. San Francisco Edit, 2003-2008; Writing Conclusions. Writing Tutorial Services, Center for Innovative Teaching and Learning. Indiana University; Writing: Considering Structure and Organization. Institute for Writing Rhetoric. Dartmouth College.

Writing Tip

Don't Belabor the Obvious!

Avoid phrases like "in conclusion...," "in summary...," or "in closing...." These phrases can be useful, even welcome, in oral presentations. But readers can see by the tell-tale section heading and number of pages remaining that they are reaching the end of your paper. You'll irritate your readers if you belabor the obvious.

Assan, Joseph. "Writing the Conclusion Chapter: The Good, the Bad and the Missing." Liverpool: Development Studies Association (2009): 1-8.

Another Writing Tip

New Insight, Not New Information!

Don't surprise the reader with new information in your conclusion that was never referenced anywhere else in the paper. This why the conclusion rarely has citations to sources. If you have new information to present, add it to the discussion or other appropriate section of the paper. Note that, although no new information is introduced, the conclusion, along with the discussion section, is where you offer your most "original" contributions in the paper; the conclusion is where you describe the value of your research, demonstrate that you understand the material that you’ve presented, and position your findings within the larger context of scholarship on the topic, including describing how your research contributes new insights to that scholarship.

Assan, Joseph. "Writing the Conclusion Chapter: The Good, the Bad and the Missing." Liverpool: Development Studies Association (2009): 1-8; Conclusions. The Writing Center. University of North Carolina.

- << Previous: Limitations of the Study

- Next: Appendices >>

- Last Updated: May 18, 2024 11:38 AM

- URL: https://libguides.usc.edu/writingguide

- school Campus Bookshelves

- menu_book Bookshelves

- perm_media Learning Objects

- login Login

- how_to_reg Request Instructor Account

- hub Instructor Commons

Margin Size

- Download Page (PDF)

- Download Full Book (PDF)

- Periodic Table

- Physics Constants

- Scientific Calculator

- Reference & Cite

- Tools expand_more

- Readability

selected template will load here

This action is not available.

15.2: Inferences from Samples to Populations

- Last updated

- Save as PDF

- Page ID 95149

- Jason Southworth & Chris Swoyer

- Fort Hays State & University University of Oklahoma

\( \newcommand{\vecs}[1]{\overset { \scriptstyle \rightharpoonup} {\mathbf{#1}} } \)

\( \newcommand{\vecd}[1]{\overset{-\!-\!\rightharpoonup}{\vphantom{a}\smash {#1}}} \)

\( \newcommand{\id}{\mathrm{id}}\) \( \newcommand{\Span}{\mathrm{span}}\)

( \newcommand{\kernel}{\mathrm{null}\,}\) \( \newcommand{\range}{\mathrm{range}\,}\)

\( \newcommand{\RealPart}{\mathrm{Re}}\) \( \newcommand{\ImaginaryPart}{\mathrm{Im}}\)

\( \newcommand{\Argument}{\mathrm{Arg}}\) \( \newcommand{\norm}[1]{\| #1 \|}\)

\( \newcommand{\inner}[2]{\langle #1, #2 \rangle}\)

\( \newcommand{\Span}{\mathrm{span}}\)

\( \newcommand{\id}{\mathrm{id}}\)

\( \newcommand{\kernel}{\mathrm{null}\,}\)

\( \newcommand{\range}{\mathrm{range}\,}\)

\( \newcommand{\RealPart}{\mathrm{Re}}\)

\( \newcommand{\ImaginaryPart}{\mathrm{Im}}\)

\( \newcommand{\Argument}{\mathrm{Arg}}\)

\( \newcommand{\norm}[1]{\| #1 \|}\)

\( \newcommand{\Span}{\mathrm{span}}\) \( \newcommand{\AA}{\unicode[.8,0]{x212B}}\)

\( \newcommand{\vectorA}[1]{\vec{#1}} % arrow\)

\( \newcommand{\vectorAt}[1]{\vec{\text{#1}}} % arrow\)

\( \newcommand{\vectorB}[1]{\overset { \scriptstyle \rightharpoonup} {\mathbf{#1}} } \)

\( \newcommand{\vectorC}[1]{\textbf{#1}} \)

\( \newcommand{\vectorD}[1]{\overrightarrow{#1}} \)

\( \newcommand{\vectorDt}[1]{\overrightarrow{\text{#1}}} \)

\( \newcommand{\vectE}[1]{\overset{-\!-\!\rightharpoonup}{\vphantom{a}\smash{\mathbf {#1}}}} \)

We frequently use sample statistics to draw inductive inferences about population parameters. When a newspaper conducts a poll to see how many people think President Trump should be impeached, they check with a sample, say 2,000 adults across the U. S. and draw a conclusion about what American adults in general think. Their results would be more accurate if they checked with everyone, but when a population is large, it simply isn’t practical to examine all its members. We have no choice but to rely on a sample from the population and make an inference based on it. When scientists engage in such inferences, they are said to be using inferential statistics . But all of us draw inferences from samples to populations many times every day.

Sampling in Everyday Life

Inferences based on samples are common in medical research, the social sciences, and polling. In these settings, scientists use what are called inferential statistics to move from claims about samples to conclusions about populations.

But we all draw similar inferences many times each day. You are driving through Belleville, KS for the first time and trying to decide where to eat. You have had good experiences at McDonalds restaurants in the past (the set of McDonald’s restaurants where you have eaten in the past at constitutes your sample). So, you might conclude that all McDonald’s restaurants (the population) are likely to be good and decide to sample the culinary delights of the one in Belleville. Or suppose you know six people (this is your sample) who have dated Wilbur, and all of them found him boring. You may well conclude that almost everyone (this is the population) would find him boring.

Whenever you make a generalization based on several (but not all) of the cases, you used sampling. You are drawing a conclusion about some larger group based on what you’ve observed about one of its subgroups.

Most learning from experience involves drawing inferences about unobserved cases (populations) from information about a limited number of cases that we have observed (our samples). You know what strategies worked in the cases you have experienced (your sample) for getting a date, quitting smoking, getting your car running when the battery seems dead, or doing well on an exam. And you then draw conclusions about the relevant populations based on this knowledge.

An examination is really a sampling procedure to determine how much you have learned. When your calculus professor makes up an examination, they hope to sample items from the population of things you have learned in the course and to use your grade as an indicator of how much information you have acquired.

Samples and Inference

We often infer a conclusion about a population from a description of a sample that was drawn from it. When we do:

- Our premises are claims about the sample .

- Our conclusion is a claim about the population .

.png?revision=1&size=bestfit&width=264&height=268)

For example, we might draw a conclusion about the divorce rate of people living in Florida from premises describing the divorce rates among 800 couples that our school’s Human Relations Department sampled.

In such a case, our inference is not deductively valid. It involves an inductive leap. The conclusion goes beyond the information in the argument’s premises, because it contains information about the entire population , while the premises only contain information about the sample. But if we are careful, our inference can still be inductively strong. This means that if we begin with true premises (which in this case means a correct description of the sample), we are likely to arrive at a true conclusion (about the entire population).

Good Samples

A good inductive inference from a sample to a population requires:

- A large enough sample.

- A representative (unbiased) sample.

We would need to delve more deeply into probability to say exactly how large is large enough, but we won’t worry about that here. The important point is that in everyday life we very often rely on samples that are clearly too small.

We also need a sample that is as representative of the entire population as possible. A sample that is not representative is said to be biased. An unbiased sample is typical of the population. By contrast, in a biased sample, some portions of the population are overrepresented and others are underrepresented .

The problem with a very small sample is that it is not likely to be representative. Other things being equal, a bigger sample will be more representative. But there are costs to gathering information—a price in time, dollars, and energy—so it is rarely feasible to get huge samples.

We can never be certain that a sample is unbiased, but we can strive to avoid any non-trivial biases we can discover. With some thought, the worst biases are often obvious. Suppose, for example, that we want to know what the U.S. adult public (our population) thinks about the consumption of alcohol. We would clearly get a biased sample if we distributed questionnaires only at a pool hall (we would have an overrepresentation of drinkers and an underrepresentation of those favoring temperance), or only at the local meetings of MADD (here, the biases would be reversed).

A classic example of a biased sample occurred in 1936, when a magazine, The Literary Digest , conducted a poll using names in telephone directories and on car registration lists. Most of the people they sampled favored Alf Landon over Franklin Roosevelt in that year’s Presidential election, but when election day rolled around, Roosevelt won in a landslide. What went wrong? News organizations now use telephone polling routinely, but in 1936, a relatively small percentage of people had telephones and cars, and most of them were affluent. These were the people most likely to vote for the Republican candidate, Landon, and so the sample was not representative of all voters.

There are other cases where bias is likely, even though it won’t be this blatant. For example, any time members of the sample volunteer, e.g., by returning a questionnaire in the mail, we are likely to have a biased sample. People willing to return a questionnaire are likely to differ in various ways from people who are not. Or, to take an example closer to home, tests that focus on only some of the material covered in class are likely to elicit a biased, unrepresentative sample of what you have learned. They aren’t a fair sample of what you know. Unfortunately, in some cases biases may be difficult to detect, and it may require a good deal of expertise to find it at all.

Random Sampling

The best way to obtain an unbiased sample is to use random sampling. A random sample is a method of sampling in which each member of the population has an equally good chance of being chosen for the sample. Random sampling does not guarantee a representative sample—nothing short of checking the entire population can guarantee that—but it does make it more likely. Random sampling avoids the biases involved with many other methods of sampling.

We can rarely get a truly random sample, but under some conditions, typically in carefully conducted studies and surveys, we can come reasonably close. But even in daily life we can use samples that are much less biased than those we often rely on.

Random Digit Dialing (RDD)

Modern technology now allows national polling organizations with large resources to approach the ideal of random sampling. Polls like the New York Times/CBS News poll use what is called Random Digit Dialing (RDD). The goal here is to give every residential phone number an equal chance of being called for an interview. Nowadays, almost all major polls use some form of RDD. For example, the New York Times/CBS News poll uses the GENESYS system, which employs a database of over 42,000 residential telephone numbers throughout the U.S. that is updated every few months. The system also employs software that draws a random sample of phone numbers from this database and then randomly makes up the last four digits of the number to be called. Of course, some sorts of people are harder to reach on the phone than others, and some sorts are more willing to volunteer information over the phone (the elderly tend to be more available, more willing to share, and more likely to answer an unknown number, for instance). But RDD constitutes an impressive step in the direction of randomization.

Stratified Random Sampling

Scientists sometimes go a step further and use a stratified random sample . Here, the aim is to ensure that there is a certain percentage of members of various subpopulations in our sample (e.g., an equal number of men and of women). They separate the population into relevant categories or “strata” before sampling (e.g., into the categories or subpopulations of men and of women). Then they sample randomly within each category. The thinking here is that results will be more accurate than mere random sampling because random sampling might accidentally over-weigh some group (because they were sampled at a higher rate through variance). So, rather than doing a random statewide poll about who is favored in the gubernatorial election, a poll using stratified random polling would make sure to poll all counties in proportion to turnout expectations (they might also stratify further based on gender, race, education, etc.).

A growing practical problem in recent years has been the decline in the public’s participation in polls. Pollsters are getting more and more “nonresponses.” Some of these result from the difficulty in contacting people by phone (people are at work, unwillingness to answer unknown numbers, etc.). But those contacted are also less willing to participate than they were in the past. The reasons for this aren’t completely clear, but growing disillusionment with politics and lack of patience for unsolicited calls resulting from the increase in telemarketing may be part of the reason.

The use of push polling also leads to a wariness about polls. Push polling is not really polling at all. Instead, an organization, e.g., the campaign organization of a candidate running for Senate, calls thousands of homes. The caller says they are conducting a poll, but in fact no results are collected and instead a damaging— and often false—claim about the other side is implanted in what is presented as a neutral question. For example, the caller might ask, “Do you agree with Candidate X’s goal to cut social security payments over the next six years?” Such deceptive uses of polling are likely to make the public more cynical about polls.

Sampling Variability