
Null and Alternative Hypotheses | Definitions & Examples

Published on 5 October 2022 by Shaun Turney. Revised on 6 December 2022.

The null and alternative hypotheses are two competing claims that researchers weigh evidence for and against using a statistical test:

- Null hypothesis (H₀): There’s no effect in the population.

- Alternative hypothesis (Hₐ): There’s an effect in the population.

The effect is usually the effect of the independent variable on the dependent variable.


The null and alternative hypotheses offer competing answers to your research question. When the research question asks “Does the independent variable affect the dependent variable?”, the null hypothesis (H₀) answers “No, there’s no effect in the population.” On the other hand, the alternative hypothesis (Hₐ) answers “Yes, there is an effect in the population.”

The null and alternative are always claims about the population. That’s because the goal of hypothesis testing is to make inferences about a population based on a sample. Often, we infer whether there’s an effect in the population by looking at differences between groups or relationships between variables in the sample.

You can use a statistical test to decide whether the evidence favors the null or alternative hypothesis. Each type of statistical test comes with a specific way of phrasing the null and alternative hypotheses. However, the hypotheses can also be phrased in a general way that applies to any test.

The null hypothesis is the claim that there’s no effect in the population.

If the sample provides enough evidence against the claim that there’s no effect in the population (p ≤ α), then we can reject the null hypothesis. Otherwise, we fail to reject the null hypothesis.
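The decision rule can be written as a tiny function. This is a minimal sketch: the name `hypothesis_decision` and the default α = 0.05 are illustrative assumptions, and the p-value is assumed to come from whatever statistical test you ran. Note that the function never returns “accept”, matching the wording advice that follows.

```python
def hypothesis_decision(p_value: float, alpha: float = 0.05) -> str:
    """Return the conventional wording for a test result.

    Hypothetical helper: alpha = 0.05 is a common default, not a rule.
    """
    if not 0.0 <= p_value <= 1.0:
        raise ValueError("p_value must lie in [0, 1]")
    # Enough evidence against H0 (p <= alpha): reject it.
    if p_value <= alpha:
        return "reject the null hypothesis"
    # Otherwise we never "accept" or "prove" H0; we only fail to reject it.
    return "fail to reject the null hypothesis"


print(hypothesis_decision(0.03))  # reject the null hypothesis
print(hypothesis_decision(0.20))  # fail to reject the null hypothesis
```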

Although “fail to reject” may sound awkward, it’s the only wording that statisticians accept. Be careful not to say you “prove” or “accept” the null hypothesis.

Null hypotheses often include phrases such as “no effect”, “no difference”, or “no relationship”. When written in mathematical terms, they always include an equality (usually =, but sometimes ≥ or ≤).

Examples of null hypotheses

The table below gives examples of research questions and null hypotheses. There’s always more than one way to answer a research question, but these null hypotheses can help you get started.

*Note that some researchers prefer to always write the null hypothesis in terms of “no effect” and “=”. It would be fine to say that daily meditation has no effect on the incidence of depression and p₁ = p₂ (the proportion of people with depression is the same in both groups).
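For the meditation example, a null hypothesis of the form p₁ = p₂ is commonly tested with a two-proportion z-test. The sketch below is illustrative only: the function name and the counts are hypothetical, and it relies on the normal approximation, which is reasonable only when each group has enough cases and non-cases. It uses only Python’s standard library.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(x1: int, n1: int, x2: int, n2: int) -> tuple[float, float]:
    """Two-sided z-test of H0: p1 = p2 against HA: p1 != p2.

    x1, x2 are counts of cases (e.g. people with depression); n1, n2 are group sizes.
    Returns (z statistic, two-sided p-value) using the normal approximation.
    """
    p1, p2 = x1 / n1, x2 / n2
    # Under H0 both groups share one proportion, so pool the data to estimate it.
    pooled = (x1 + x2) / (n1 + n2)
    se = sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical data: 10 of 100 meditators vs 30 of 100 non-meditators develop depression.
z, p = two_proportion_z_test(10, 100, 30, 100)
print(f"z = {z:.2f}, p = {p:.4f}")
```

With these made-up counts the p-value is well below 0.05, so H₀ would be rejected; with identical proportions in both groups, z is 0 and the p-value is 1.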

The alternative hypothesis (Hₐ) is the other answer to your research question. It claims that there’s an effect in the population.

Often, your alternative hypothesis is the same as your research hypothesis. In other words, it’s the claim that you expect or hope will be true.

The alternative hypothesis is the complement to the null hypothesis. Null and alternative hypotheses are exhaustive, meaning that together they cover every possible outcome. They are also mutually exclusive, meaning that only one can be true at a time.

Alternative hypotheses often include phrases such as “an effect”, “a difference”, or “a relationship”. When alternative hypotheses are written in mathematical terms, they always include an inequality (usually ≠, but sometimes > or <). As with null hypotheses, there are many acceptable ways to phrase an alternative hypothesis.
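Written in symbols, the pairing looks like this for a comparison of two population means μ₁ and μ₂ (symbols chosen here for illustration). The first pair is two-sided; the second and third are one-sided. In each pair, H₀ contains the equality and the alternative covers every remaining possibility, which is what makes the two hypotheses exhaustive and mutually exclusive.

```latex
\begin{aligned}
&H_0: \mu_1 = \mu_2   &&\text{vs.}\quad H_A: \mu_1 \neq \mu_2 \\
&H_0: \mu_1 \le \mu_2 &&\text{vs.}\quad H_A: \mu_1 > \mu_2 \\
&H_0: \mu_1 \ge \mu_2 &&\text{vs.}\quad H_A: \mu_1 < \mu_2
\end{aligned}
```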

Examples of alternative hypotheses

The table below gives examples of research questions and alternative hypotheses to help you get started with formulating your own.

Null and alternative hypotheses are similar in some ways:

- They’re both answers to the research question

- They both make claims about the population

- They’re both evaluated by statistical tests

However, there are important differences between the two types of hypotheses, summarized in the following table.

To help you write your hypotheses, you can use the template sentences below. If you know which statistical test you’re going to use, you can use the test-specific template sentences. Otherwise, you can use the general template sentences.

The only things you need to know to use these general template sentences are your dependent and independent variables. To write your research question, null hypothesis, and alternative hypothesis, fill in the following sentences with your variables:

Does independent variable affect dependent variable?

- Null hypothesis (H₀): Independent variable does not affect dependent variable.

- Alternative hypothesis (Hₐ): Independent variable affects dependent variable.
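If you are drafting hypotheses for several variable pairs, the general templates can be filled in programmatically. This is a hypothetical helper for illustration, not a Scribbr tool; the example variables (daily meditation and the incidence of depression) are borrowed from the note on null hypotheses.

```python
def general_hypotheses(independent: str, dependent: str) -> dict[str, str]:
    """Fill the general template sentences with an independent and a dependent variable."""
    def capitalize_first(text: str) -> str:
        # Capitalize only the first character so the rest of the phrase is untouched.
        return text[:1].upper() + text[1:]

    return {
        "research question": f"Does {independent} affect {dependent}?",
        "H0": f"{capitalize_first(independent)} does not affect {dependent}.",
        "HA": f"{capitalize_first(independent)} affects {dependent}.",
    }


for label, sentence in general_hypotheses("daily meditation", "the incidence of depression").items():
    print(f"{label}: {sentence}")
```

Like the template sentences, this assumes a singular independent variable; a plural subject would need “affect” instead of “affects” in the alternative hypothesis.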

Test-specific

Once you know the statistical test you’ll be using, you can write your hypotheses in a more precise and mathematical way specific to the test you chose. The table below provides template sentences for common statistical tests.

Note: The template sentences above assume that you’re performing two-tailed tests. Two-tailed tests are appropriate for most studies.
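To see why the choice of tails matters, the sketch below converts a z statistic into one-tailed and two-tailed p-values using the standard normal distribution. The value z = 1.8 is hypothetical: it gives a one-tailed p-value of roughly 0.036 and a two-tailed p-value of roughly 0.072, so the same data fall below α = 0.05 with one test but not the other. The two-tailed p-value is always twice the one-tailed one for a symmetric null distribution like this.

```python
from statistics import NormalDist


def p_values_from_z(z: float) -> dict[str, float]:
    """One- and two-tailed p-values for a z statistic under a standard normal null."""
    std_normal = NormalDist()  # mean 0, standard deviation 1
    return {
        # HA: parameter > null value (upper tail only)
        "one-tailed (upper)": 1 - std_normal.cdf(z),
        # HA: parameter != null value (both tails)
        "two-tailed": 2 * (1 - std_normal.cdf(abs(z))),
    }


for test, p in p_values_from_z(1.8).items():
    print(f"{test}: p = {p:.4f}")
```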

The null hypothesis is often abbreviated as H₀. When the null hypothesis is written using mathematical symbols, it always includes an equality symbol (usually =, but sometimes ≥ or ≤).

The alternative hypothesis is often abbreviated as Hₐ or H₁. When the alternative hypothesis is written using mathematical symbols, it always includes an inequality symbol (usually ≠, but sometimes < or >).

A research hypothesis is your proposed answer to your research question. The research hypothesis usually includes an explanation (‘x affects y because …’).

A statistical hypothesis, on the other hand, is a mathematical statement about a population parameter. Statistical hypotheses always come in pairs: the null and alternative hypotheses. In a well-designed study, the statistical hypotheses correspond logically to the research hypothesis.

Cite this Scribbr article


Turney, S. (2022, December 06). Null and Alternative Hypotheses | Definitions & Examples. Scribbr. Retrieved 14 May 2024, from https://www.scribbr.co.uk/stats/null-and-alternative-hypothesis/


Publication bias: Why null results are not necessarily ‘dull’ results

Any result is a good result

Negative results are sometimes hidden discoveries

The publication of research findings is crucial to scientific progress. It is one of the primary ways scientists share their research with their peers and contemporaries. Indeed, by preserving a record of their work that lights the way for future discoveries, it enables scientists to share their knowledge with, and inspire, future generations.

Nonetheless:

“To light a candle is to cast a shadow” —Ursula Le Guin, A Wizard of Earthsea.

While the publication of research ‘lights a candle’ by illuminating discoveries and allowing researchers to learn of, and build on, each other’s work, publication bias potentially casts a shadow over a trail of valuable hidden discoveries and research.

Publication bias typically describes the phenomenon whereby positive results that support a hypothesis are more likely to be published than negative or null results; as a result, some discoveries remain hidden from view.

This has led to the potential distortion of scientific literature, from the view that bilingualism confers certain cognitive advantages, to the exaggeration of neurological sex differences.
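A small simulation makes this kind of distortion concrete. The sketch below assumes many identical studies of a modest true effect (a standardized difference of 0.2, with 20 participants per group), treats only statistically significant positive results as “published”, and compares the average published effect with the average across all studies. All numbers are arbitrary illustrations, the standard error uses a common large-sample approximation, and nothing here reproduces the specific literatures mentioned above.

```python
import random
import statistics


def simulate_publication_bias(true_effect=0.2, n_per_group=20, n_studies=5000,
                              z_crit=1.96, seed=1):
    """Compare the mean estimated effect across all studies vs 'published' studies only.

    Illustrative assumptions: every study estimates the same standardized mean
    difference, estimates are normally distributed around the true effect, and
    only significant positive results (z > z_crit) get published.
    """
    rng = random.Random(seed)
    se = (2 / n_per_group) ** 0.5  # approximate SE of a standardized mean difference
    all_estimates, published = [], []
    for _ in range(n_studies):
        estimate = rng.gauss(true_effect, se)
        all_estimates.append(estimate)
        if estimate / se > z_crit:  # significant in the hoped-for direction
            published.append(estimate)
    return statistics.mean(all_estimates), statistics.mean(published), len(published)


mean_all, mean_published, n_published = simulate_publication_bias()
print(f"true effect 0.2 | mean of all studies {mean_all:.2f} | "
      f"mean of {n_published} published studies {mean_published:.2f}")
```

Because only large positive estimates clear the significance threshold, the “published” average lands well above the true effect, even though every simulated study was conducted honestly.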

Publication bias isn't just a matter of academic rigour or some niche, pretentious academic concern; it has real consequences, and null results do matter.

Misleading estimates of drug efficacy due to publication bias in antidepressant drug trials could cause doctors to undertake distorted risk-benefit analyses when prescribing drugs for patients.

Moreover, publication bias can misdirect research efforts, wasting time, effort, resources, and funding and ultimately slowing scientific progress. For example, overstating the success of drug candidates in animal trials could lead to ineffective candidates being brought to clinical trial.

Furthermore, publication bias can skew the results of meta-analyses when it is not accounted for—thus compromising the effectiveness of a powerful scientific tool.

This is significant; meta-analyses are designed to account for variations and errors between studies by systematically synthesising and statistically analysing their combined findings.
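The arithmetic behind that skew is simple. A basic fixed-effect meta-analysis pools study estimates with inverse-variance weights, w = 1/SE². The five studies below are invented for illustration: with all of them included, the pooled effect is 0.2; if the three non-significant ones stay in the file drawer, the pooled effect rises to 0.475. Real meta-analyses often use random-effects models, so treat this as a sketch of the principle rather than a recommended analysis.

```python
def fixed_effect_pool(studies):
    """Inverse-variance weighted mean of (effect, standard_error) pairs."""
    weights = [1 / se ** 2 for _, se in studies]
    return sum(w * effect for w, (effect, _) in zip(weights, studies)) / sum(weights)


# Hypothetical studies: (estimated effect, standard error)
studies = [(0.50, 0.2), (0.45, 0.2), (0.05, 0.2), (-0.10, 0.2), (0.10, 0.2)]

# Keep only studies that are significant at the two-sided 5% level (|z| > 1.96).
significant = [(e, se) for e, se in studies if abs(e / se) > 1.96]

print(f"all studies:      {fixed_effect_pool(studies):.3f}")
print(f"significant only: {fixed_effect_pool(significant):.3f}")
```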

Clearly, null results are important in science.

Publication bias can arise from a researcher’s decision not to publish negative results due to a combination of personal, financial, and professional pressures to publish ‘exciting’ results.

For example, the results may be contrary to existing research or the researcher’s beliefs; they may deem the results to be ‘null and dull’ and unlikely to attract further funding; and negative results may even be omitted to save page space in a journal and better highlight positive results from a study.

Some may even re-analyse their negative results or aggregate outcomes into a new endpoint to extract positive results for publication.

Researchers may also fail to submit a study showing negative results due to their perception that journal editors and peer-reviewers are biased against such results.

Publication bias can also arise from decisions of journal editors and peer reviewers to reject studies with null results because: “negative results have never made riveting reading”; the study may be similar to the peer-reviewer’s own work; they may be biased against research in different fields; or because studies with null results tend to be scrutinised more than positive ones.

Organisational and business conflicts of interest can also produce publication bias.

For example, a business may not publish negative results because they could endanger profits by diminishing the perceived effectiveness of a product or test.

Science is a rigorous and dynamic discipline that is attempting to implement strategies to mitigate publication bias.

This includes methods to detect and assess the extent of publication bias; statistical methods to reduce its impact in meta-analyses [10]; the pre-registration of experiment design [1]; and certain journals requesting and publishing null results.
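As one example of a method for assessing publication bias (named here as an illustration; the article does not single it out), Egger’s regression test checks for funnel-plot asymmetry. Each study’s standardized effect (effect / SE) is regressed on its precision (1 / SE); when small, imprecise studies report systematically larger effects, as selective publication tends to produce, the intercept moves away from zero. The sketch computes only the intercept by ordinary least squares; a full test would also compute the intercept’s standard error and a t-test. The study data are hypothetical.

```python
def egger_intercept(effects, standard_errors):
    """Intercept of Egger's regression: (effect / SE) regressed on (1 / SE) by OLS."""
    z = [e / se for e, se in zip(effects, standard_errors)]  # standardized effects
    precision = [1 / se for se in standard_errors]            # predictor
    n = len(z)
    mean_x = sum(precision) / n
    mean_z = sum(z) / n
    sxx = sum((x - mean_x) ** 2 for x in precision)
    sxz = sum((x - mean_x) * (zi - mean_z) for x, zi in zip(precision, z))
    slope = sxz / sxx                # estimates the underlying effect
    return mean_z - slope * mean_x   # near 0 when the funnel plot is symmetric


ses = [0.05, 0.10, 0.20, 0.30, 0.40]

# Same effect in every study: no small-study effect, intercept near 0.
print(egger_intercept([0.3] * 5, ses))

# Less precise studies report bigger effects: intercept clearly positive.
print(egger_intercept([0.3 + 1.0 * se for se in ses], ses))
```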

So next time you peruse a scientific journal, spare a thought for the null results that never made it onto the page and the importance of these hidden discoveries.

- Chase, J. M., The Shadow of Bias. PLoS Biol. 2013, 11 (7), e1001608.

- Eliot, L., You don’t have a male or female brain – the more brains scientists study, the weaker the evidence for sex differences. The Conversation, April 22, 2021.

- Bialystok, E.; Kroll, J. F.; Green, D. W.; MacWhinney, B.; Craik, F. I., Publication Bias and the Validity of Evidence: What's the Connection? Psychol. Sci. 2015, 26 (6), 944-6.

- Turner, E. H.; Matthews, A. M.; Linardatos, E.; Tell, R. A.; Rosenthal, R., Selective Publication of Antidepressant Trials and Its Influence on Apparent Efficacy. N. Engl. J. Med. 2008, 358 (3), 252-260.

- Ropovik, I.; Adamkovic, M.; Greger, D., Neglect of publication bias compromises meta-analyses of educational research. PLoS One 2021, 16 (6), e0252415.

- Murad, M. H.; Chu, H.; Lin, L.; Wang, Z., The effect of publication bias magnitude and direction on the certainty in evidence. BMJ. Evid. Based. Med. 2018, 23 (3), 84-86.

- Kepes, S.; Banks, G. C.; Oh, I.-S., Avoiding Bias in Publication Bias Research: The Value of “Null” Findings. J. Bus. Psychol. 2014, 29 (2), 183-203.

- Thornton, A.; Lee, P., Publication bias in meta-analysis: its causes and consequences. J. Clin. Epidemiol. 2000, 53 (2), 207-216.

- Roest, A.; Williams, C., Does publication bias make antidepressants seem more effective at treating anxiety than they really are? The Conversation, May 6, 2015.

- Stanley, T. D.; Doucouliagos, H.; Ioannidis, J. P. A., Finding the power to reduce publication bias. Stat. Med. 2017, 36 (10), 1580-1598.

- DeVito, N. J.; Goldacre, B., Catalogue of bias: publication bias. BMJ. Evid. Based. Med. 2019, 24 (2), 53-54.


Written by Louis Casey, third-year student and Dalyell Scholar, Bachelor of Science and Advanced Studies, The University of Sydney.


Filling in the Scientific Record: The Importance of Negative and Null Results

PLOS strives to publish scientific research with transparency, openness, and integrity. Whether that means giving authors the choice to preregister their study or publish peer review comments, or diversifying publishing outputs, we’re here to support researchers as they work to uncover and communicate discoveries that advance scientific progress. Negative and null results are an important part of this process.

This is something we agree on across our journal portfolio (the most recent updates from PLOS Biology are one example), and it’s something we care about especially at PLOS ONE. Our journal’s mission is to provide researchers with a quality, peer-reviewed and Open Access venue for all rigorously conducted research, regardless of novelty or impact. Our role in the publishing ecosystem is to provide a complete, transparent view of the scientific literature to enable discovery. While negative and null results are often overlooked, by authors and publishers alike, publishing them is as important as publishing positive outcomes and can help fill critical gaps in the scientific record.

We encourage researchers to share their negative and null results.

To provide checks and balances for emerging research

Positive results are often viewed as more impactful. Authors, editors, and publishers alike tend to favor the publication of positive results over negative ones and, yes, there is evidence to suggest that positive results are more frequently cited by other researchers.

Negative results, however, are crucial to providing a system of checks and balances against similar positive findings. Studies have attempted to determine to what extent the lack of negative results in scientific literature has inflated the efficacy of certain treatments or allowed false positives to remain unchecked.

The effect is particularly dramatic in meta-analyses, which are typically undertaken on the assumption that the retrieved studies are representative of all conducted studies:

“However, it is clear that a positive bias is introduced when studies with negative results remain unreported, thereby jeopardizing the validity of meta-analysis (25, 26). This is potentially harmful as the false positive outcome of meta-analysis misinforms researchers, doctors, policymakers and greater scientific community, specifically when the wrong conclusions are drawn on the benefit of the treatment.” (Mlinarić et al., 2017, Dealing with the positive publication bias: Why you should really publish your negative results, Biochem Med (Zagreb) 27(3): 030201)

As important as it is to report on studies that show a positive effect, it is equally vital to document instances where the same processes were not effective. We should be actively reporting, evaluating, and sharing negative and null results with the same emphasis we give to positive outcomes.

To reduce time and resources needed for researchers to continue investigation

Regardless of the outcome, new research requires time and financial resources to complete. At the end of the process, something is learned, even if the answer is unexpected or less clear than you had hoped. These efforts can therefore provide valuable insights to other research groups.

If you’re seeking the answer to a particular scientific question, chances are that another research group is looking for that answer as well, either as a main focus or to provide additional background for a different study. Independent verification of results through replication studies is also an important part of solidifying the foundation for future research, and this can only happen when researchers have a complete record of previous results to work from.

By making more findings available, we can help increase efficiencies and advance scientific discovery faster.

To fill in the scientific record and increase reproducibility

It’s difficult to draw reliable conclusions from a set of data that we know is incomplete, and this lack of information affects the entire scientific ecosystem. Readers are often unaware that negative results for a particular study exist at all, and it can be more difficult for researchers to replicate studies when pieces of the data have been left out of the published record.

Some researchers opt to obtain specific null and negative results from outside the published literature, from non-peer-reviewed repositories, or by requesting data directly from the authors. Including this “grey literature” can improve accuracy, but the additional time and effort needed to obtain and verify this information is prohibitive for many.

This is where publishers can play a pivotal role: ensuring not only that authors feel welcome to submit and publish negative results, but also that those efforts are properly recognized and credited. Published, peer-reviewed results allow for a more complete analysis of all available data and increase trust in the scientific record.

We know it’s difficult to get into the lab right now and many researchers are having to rethink the way that they work or focus on other projects. We encourage anyone with previously unpublished negative and null results to submit their work to PLOS ONE and help fill in the gaps of the scientific record, or consider doing so in the future.



Members of the CoGDeV lab share their research findings, research experiences, news and events summaries.

The value of null results.

Until very recently I had never heard of Open Research and what it meant. I came across an article in The Conversation whose headline caught my attention: ‘Medical research is broken: here’s how to fix it’. Reading it, I learned about the reproducibility crisis. I was astounded to read that as much as 85% of medical research is ‘wasted’ and that about 50% of medical research is not published (Lloyd & Bradley, 2020). No domain is immune to the reproducibility crisis. In psychology, for example, when the Open Science Collaboration attempted to reproduce the findings of 100 studies, only 36% of them could be reproduced (Gilbert & Strohminger, 2015). When another researcher cannot replicate an experiment, we naturally question the validity of the original results. This has serious implications for research and science, as replication is crucial for verifying findings, advancing knowledge, and building a robust evidence base.

So how could that be? The relatively high prevalence of questionable research practices (QRPs), such as selectively reporting hypotheses and excluding data post hoc (John et al., 2012), as well as the non-publication of failed studies (‘null results’), all seem to play a role in maintaining the reproducibility crisis. QRPs such as excluding data after looking at how doing so affects the results can substantially increase the probability of finding evidence in support of a hypothesis, making it harder to replicate the study later (John et al., 2012). QRPs, although problematic and widespread, are not unequivocally unacceptable, but they call for greater transparency in the research process.

The factor I am most intrigued by is the non-publication of null results. A null result is a result that does not support the experimental hypothesis; such results are difficult to publish because many perceive them as less interesting.
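The claim that excluding data after seeing its effect on the results raises the chance of a ‘positive’ finding can be checked with a short simulation. In the sketch below, both groups are drawn from the same population, so the null hypothesis is true and every significant result is a false positive. A ‘strict’ analyst runs one pre-planned test; a ‘flexible’ analyst who misses significance removes observations more than 2 SD from the group mean and tests again. The 2 SD rule, the sample sizes, and the normal approximation to the test statistic are illustrative assumptions, not the specific practices measured by John et al. (2012).

```python
import random
import statistics
from statistics import NormalDist


def two_sided_p(a, b):
    """Two-sided p-value for a difference in means (normal approximation to Welch's t)."""
    se = (statistics.variance(a) / len(a) + statistics.variance(b) / len(b)) ** 0.5
    z = (statistics.mean(a) - statistics.mean(b)) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def drop_outliers(sample, k=2.0):
    """Hypothetical post hoc rule: drop values more than k SDs from the sample mean."""
    m, s = statistics.mean(sample), statistics.stdev(sample)
    return [x for x in sample if abs(x - m) <= k * s]


def false_positive_rates(n=30, sims=2000, alpha=0.05, seed=7):
    rng = random.Random(seed)
    strict = flexible = 0
    for _ in range(sims):
        # Both groups come from the same population, so H0 is true by construction.
        a = [rng.gauss(0, 1) for _ in range(n)]
        b = [rng.gauss(0, 1) for _ in range(n)]
        if two_sided_p(a, b) <= alpha:
            strict += 1
            flexible += 1
        elif two_sided_p(drop_outliers(a), drop_outliers(b)) <= alpha:
            flexible += 1  # "significant" only after excluding data post hoc
    return strict / sims, flexible / sims


strict_rate, flexible_rate = false_positive_rates()
print(f"strict: {strict_rate:.3f}  flexible: {flexible_rate:.3f}  (nominal alpha 0.05)")
```

Because the flexible analyst gets a second chance whenever the first test fails, their false-positive rate can never be lower than the strict analyst’s and is typically higher.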

Journal editors tend to publish studies with statistically significant results, which are more likely to be cited and therefore raise the reach and impact of their journals (Fanelli, 2010). Journal editors thus unwittingly contribute to a vicious cycle: researchers are more likely to engage in QRPs to obtain a positive result, so that their research has a higher chance of being accepted for publication and cited (Wenzel, 2016). Unfortunately, this unhelpful pattern works against the publication of many rigorously conducted studies that yield a null result.

Publishing only successful studies falsely gives the impression that experiments almost always work and that research gets it right every time. Research is usually, by nature, exploratory; some studies will yield positive results, but null results form an essential part of the process of exploration. Many researchers would be willing to spend time and effort publishing a result that does not support their hypothesis, but they struggle to find a journal willing to publish it. Many journals and editors steer away from null findings because they are cited less frequently (Mlinarić, Horvat, & Šupak Smolčić, 2017). The result is a publication bias against null results, whereby successful studies are more likely to be published. Because null results often do not support what we expected to find, they can be difficult to interpret; nevertheless, they are an integral part of scientific research. The endeavour of research involves keeping track of our unsuccessful attempts and learning from them. As researchers, we reflect on and review what worked and what did not, make the necessary amendments, and try again. Just as it takes many attempts to refine and master a new skill, many trials are often necessary in science to understand our findings.

Unfortunately, the non-publication of null results keeps useful data out of our collective knowledge. Publishing null results helps the scientific community by allowing researchers to build on previous studies, and it has the potential to save other researchers’ time. Without it, a new researcher may unknowingly use a protocol similar to that of an unpublished null study and end up with the same null result.

I also wonder about the influence of researchers’ experience and belief systems on their willingness to publish null results. It is conceivable that some researchers fear exposing that their theory had to change in light of null findings, which could undermine their previous papers. This may be particularly true at the start of a career, when the desire to be published in a renowned journal is highly enticing and may encourage researchers to hold back from sharing novel hypotheses or to engage in QRPs. Moreover, researchers who publish a null result that challenges the current theoretical understanding of an issue may fear the consequences of questioning the status quo. It takes time and resources to publish results that do not support a carefully thought-out hypothesis, and with little incentive to do so, it is understandable that researchers are reluctant to publish their null results. Furthermore, established and new researchers alike may wish to preserve their status by not publishing a null result that contradicts previous findings and may attract ‘the wrath’ of the original researcher (Nature editorial, 2017).

In the field of psychology, progress would not have happened if researchers had not carefully considered why their results went against their predictions. That is, null findings have the potential to move theory forward and challenge current theoretical understanding. For instance, a comprehensive study of candidate genes for depression challenged previous studies that had seemingly demonstrated an association between these genes and major depression. The comprehensive study yielded a null result, and because it had been rigorously conducted, it suggested that there was no significant association between the historical candidate genes and major depression (Border et al., 2019). More recently, the null findings of a study on approximate arithmetic training challenged the claim, supported by several training studies with positive results, that such training improves symbolic arithmetic fluency in adults (Szkudlarek, Park & Brannon, 2020).

Commitment to open research practices by universities, funders, and publishers is starting to have an impact on this issue by raising the visibility of null findings among students and researchers. The Registered Report, a publishing model based on ‘in-principle acceptance’ (a study is accepted for publication before its outcome is known), can help alleviate publication bias. In-principle acceptance is likely to increase the publication of null results, because the criterion for publication is the quality of the research rather than the result itself (Soderberg et al., 2020).

To challenge publication bias, a group of editors from prestigious journals (European Journal of Work and Organizational Psychology, Journal of Business & Psychology, etc.) have committed to publishing null results from rigorously conducted research, as part of a new initiative to enhance the integrity and quality of research (Wenzel, 2016). Another way to encourage the scientific community to share null results might be to publish summaries of all such studies in an online ‘null results’ journal, making them accessible to other researchers. An additional solution could be a mandatory section in every paper summarising previously known null results, what was learned from them, and how they led to the conclusive study. Pre-registration, a time-stamped record of a study design and analysis plan created before data collection (https://www.ukrn.org/primers/; Farran, 2020), could also help by encouraging researchers to be transparent about their protocol, data analysis, and outcome expectations from the outset.

It takes a level of confidence to admit publicly that, after rigorously conducting a study, our results do not support our experimental hypothesis. I wonder if that ‘admission’ could be eased by re-affirming that research is often exploratory: it would be unrealistic to always expect a positive result. Changing the narrative of null results into a story of learning and progress may encourage researchers to share their null results with the wider scientific community. By shifting the focus of research from the results to the quality of the study protocol and the rigour with which a study is conducted, I believe we can reduce the prevalence of QRPs and give null findings their rightful place in scientific research.

I believe that increased co-operation could also help. Publishing null results carries the risk that other researchers using a similar protocol obtain a positive outcome and take credit away from the original researcher. A cultural shift in which collaboration is valued above competition may help here. We also need a common framework for replicating studies, with standardised protocols that include the publication and/or consideration of null results.

Null findings tend not to be published, which I feel is a waste of resources. Publishing these findings would benefit the wider scientific community. Research at its best is a collective effort, with collaboration between organisations, increased transparency, and less emphasis on competition and the protection of resources.

Border, R., Johnson, E. C., Evans, L. M., Smolen, A., Berley, N., Sullivan, P. F., & Keller, M. C. (2019). No Support for Historical Candidate Gene or Candidate Gene-by-Interaction Hypotheses for Major Depression Across Multiple Large Samples. The American Journal of Psychiatry, 176(5), 376–387. https://doi.org/10.1176/appi.ajp.2018.18070881

Fanelli, D. (2010). “Positive” results increase down the Hierarchy of the Sciences. PLoS ONE, 5(4), e10068. https://doi.org/10.1371/journal.pone.0010068

Farran, E. K. (2020). Is pre-registration for you? Retrieved from https://blogs.surrey.ac.uk/doctoralcollege/2020/01/06/guest-blog-prof-emily-farran-is-pre-registration-for-you/

Gilbert, E., & Strohminger, N. (2015). We found only one-third of published psychology research is reliable – now what? The Conversation. https://theconversation.com/we-found-only-one-third-of-published-psychology-research-is-reliable-now-what-46596

John, L. K., Loewenstein, G., & Prelec, D. (2012). Measuring the Prevalence of Questionable Research Practices With Incentives for Truth Telling. Psychological Science, 23(5), 524–532. https://doi.org/10.1177/0956797611430953

Lloyd, K. E., & Bradley, S. (2020). Medical research is broken: here’s how we can fix it. The Conversation. https://theconversation.com/medical-research-is-broken-heres-how-we-can-fix-it-145281

Mlinarić, A., Horvat, M., & Šupak Smolčić, V. (2017). Dealing with the positive publication bias: Why you should really publish your negative results. Biochemia Medica, 27(3), 030201. https://doi.org/10.11613/BM.2017.030201

Rewarding negative results keeps science on track [Editorial]. (2017). Nature, 551, 414. https://doi.org/10.1038/d41586-017-07325-2

Soderberg, C. K., Errington, T. M., Schiavone, S. R., Bottesini, J. G., Singleton Thorn, F., Vazire, S., … Nosek, B. A. (2020, November 16). Initial Evidence of Research Quality of Registered Reports Compared to the Traditional Publishing Model. https://doi.org/10.31222/osf.io/7x9vy

Szkudlarek, E., Park, J., & Brannon, E. (2020). Failure to replicate the benefit of approximate arithmetic training for symbolic arithmetic fluency in adults. Cognition, 207, 104521. https://doi.org/10.1016/j.cognition.2020.104521

Wenzel, R. (2016). Business journals to tackle publication bias, will publish ‘null’ results. The Conversation. https://theconversation.com/business-journals-to-tackle-publication-bias-will-publish-null-results-52818

Written by Badri Bechlem


© University of Surrey, Guildford, Surrey, GU2 7XH, United Kingdom. +44 (0)1483 300800


5 tips for dealing with non-significant results


It might look like failure, but don’t let go just yet.

16 September 2019


When researchers fail to find a statistically significant result, it’s often treated as exactly that – a failure. Non-significant results are difficult to publish in scientific journals and, as a result, researchers often choose not to submit them for publication.

This means that the evidence published in scientific journals is biased towards studies that find effects.

A study published in Science by a Stanford University team, which investigated 221 survey-based experiments funded by the National Science Foundation, found that nearly two-thirds of the social science experiments that produced null results were filed away, never to be published.

By comparison, 96% of the studies with statistically strong results were written up.

“These biases imperil the robustness of scientific evidence,” says David Mehler, a psychologist at the University of Münster in Germany. “But they also harm early career researchers in particular who depend on building up a track record.”

Mehler is the co-author of a recent article published in the Journal of European Psychology Students about appreciating the significance of non-significant findings.

So, what can researchers do to avoid unpublishable results?

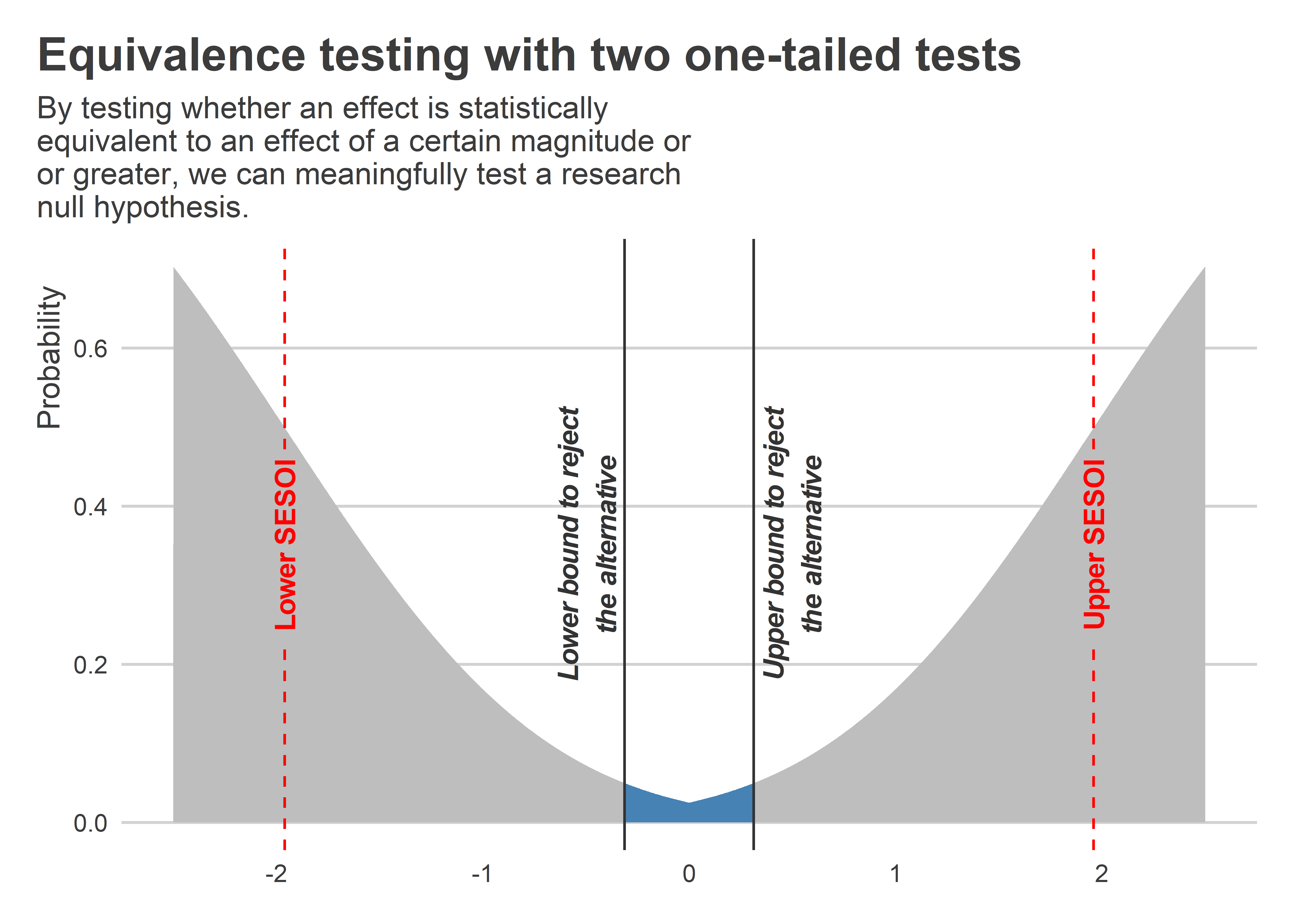

#1 Perform an equivalence test

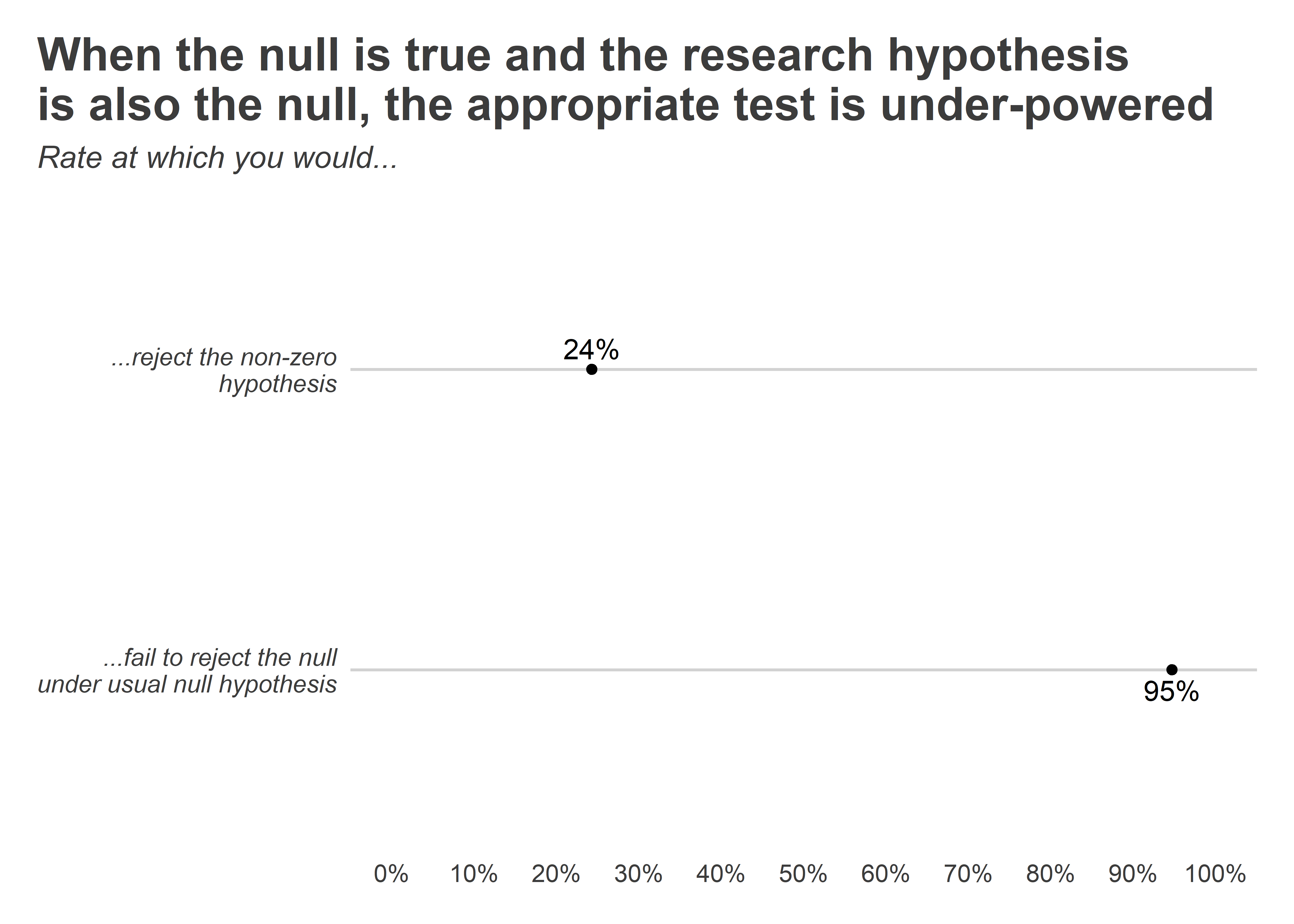

The problem with a non-significant result is that it’s ambiguous, explains Daniël Lakens, a psychologist at Eindhoven University of Technology in the Netherlands.

It could mean that the null hypothesis is true – there really is no effect. But it could also indicate that the data are inconclusive either way.

Lakens says performing an ‘equivalence test’ can help you distinguish between these two possibilities. It can’t tell you that there is no effect, but it can tell you that an effect – if it exists – is likely to be of negligible practical or theoretical significance.

Bayesian statistics offer an alternative way of performing this test, and in Lakens’ experience, “either is better than current practice”.
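One common way to run an equivalence test is the two one-sided tests (TOST) procedure. The sketch below is a minimal Python version for two independent group means. It assumes a large-sample normal (z) approximation rather than the t distribution a full implementation would use, and the function name `tost_two_means`, the equivalence bound, and the example numbers are all hypothetical. The idea is to test separately whether the difference is reliably above −bound and reliably below +bound; only if both one-sided tests are significant can you conclude that any true effect is likely smaller than the bound.

```python
from statistics import NormalDist

_Z = NormalDist()  # standard normal distribution


def tost_two_means(mean1, mean2, sd1, sd2, n1, n2, bound, alpha=0.05):
    """Two one-sided tests (TOST) for equivalence of two independent means.

    Assumes a large-sample z approximation. `bound` is the smallest raw
    difference still considered meaningful (a hypothetical choice that the
    researcher must justify in advance). Returns (p_tost, equivalent).
    """
    diff = mean1 - mean2
    se = (sd1 ** 2 / n1 + sd2 ** 2 / n2) ** 0.5
    # Test 1 -- H0: diff <= -bound versus H1: diff > -bound
    p_lower = _Z.cdf(-(diff + bound) / se)
    # Test 2 -- H0: diff >= +bound versus H1: diff < +bound
    p_upper = _Z.cdf((diff - bound) / se)
    # Equivalence is claimed only if BOTH one-sided tests reject
    p_tost = max(p_lower, p_upper)
    return p_tost, p_tost < alpha


# Same observed difference (0.1 units), two different sample sizes
print(tost_two_means(10.1, 10.0, 2.0, 2.0, 400, 400, bound=0.5))  # equivalent
print(tost_two_means(10.1, 10.0, 2.0, 2.0, 20, 20, bound=0.5))    # inconclusive
```

With 400 participants per group, the hypothetical difference is declared equivalent to zero (p ≈ .002). With 20 per group, the same difference is neither significant in an ordinary test nor shown to be equivalent, which is the "inconclusive" case Lakens describes.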

#2 Collaborate to collect more data

Equivalence tests and Bayesian analyses can be helpful, but if you don’t have enough data, their results are likely to be inconclusive.

“The root problem remains that researchers want to conduct confirmatory hypothesis tests for effects that their studies are mostly underpowered to detect,” says Mehler.

This, he adds, is a particular problem for students and early career researchers, whose limited resources often constrain them to small sample sizes.

One solution is to collaborate with other researchers to collect more data. In psychology, the StudySwap website is one way for researchers to team up and combine resources.
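To see why small samples are a problem, the sketch below estimates how many participants per group a two-group comparison needs for 80% power. It uses the standard normal-approximation formula n ≈ 2 × ((z(1 − α/2) + z(1 − β)) / d)² for a two-sided test at α = .05, where d is Cohen's d; exact t-based calculations give slightly larger numbers. The function name and defaults are assumptions for illustration.

```python
import math
from statistics import NormalDist


def n_per_group(d, alpha=0.05, power=0.80):
    """Approximate sample size per group for a two-sided, two-sample z test."""
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)  # critical value, about 1.96 for alpha = .05
    z_beta = z.inv_cdf(power)           # about 0.84 for 80% power
    return math.ceil(2 * ((z_alpha + z_beta) / d) ** 2)


for d in (0.2, 0.5, 0.8):
    print(f"d = {d}: about {n_per_group(d)} participants per group")
```

Under these assumptions a small effect (d = 0.2) needs roughly 393 people per group, a medium one (d = 0.5) about 63, and a large one (d = 0.8) about 25, which helps explain why pooling data across labs can make the difference between an informative and an inconclusive study.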

#3 Use directional tests to increase statistical power

If resources are scarce, it’s important to use them as efficiently as possible. Lakens suggests a number of ways in which researchers can tweak their research design to increase statistical power – the likelihood of finding an effect if it really does exist.

In some circumstances, he says, researchers should consider ‘directional’ or ‘one-sided’ tests.

For example, if your hypothesis clearly states that patients receiving a new drug should have better outcomes than those receiving a placebo, it makes sense to test that prediction rather than looking for a difference between the groups in either direction.

“It’s basically free statistical power just for making a prediction,” says Lakens.
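A rough way to see what Lakens means is to compare the power of a one-sided and a two-sided test for the same design. The sketch below uses a normal approximation for two equal groups, with a hypothetical true effect of d = 0.5 and 50 participants per group; the function is illustrative and not taken from the article.

```python
from statistics import NormalDist

Z = NormalDist()


def power_two_groups(d, n_per_group, alpha=0.05, one_sided=False):
    """Approximate power of a two-sample z test for a true effect of size d.

    For the one-sided test, d > 0 is assumed to be the predicted direction.
    """
    shift = d * (n_per_group / 2) ** 0.5  # expected z statistic under H1
    if one_sided:
        crit = Z.inv_cdf(1 - alpha)       # all of alpha in one tail (about 1.645)
        return Z.cdf(shift - crit)
    crit = Z.inv_cdf(1 - alpha / 2)       # alpha split over two tails (about 1.96)
    return Z.cdf(shift - crit) + Z.cdf(-shift - crit)


print(round(power_two_groups(0.5, 50), 3))                  # two-sided
print(round(power_two_groups(0.5, 50, one_sided=True), 3))  # one-sided
```

Here power rises from roughly 0.70 to roughly 0.80 without a single extra participant. The trade-off is that a one-sided test cannot detect an effect in the opposite direction, so the direction has to be specified before the data are seen.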

#4 Perform sequential analyses to improve data collection efficiency

Efficiency can also be increased by conducting sequential analyses, whereby data collection is terminated if there is already enough evidence to support the hypothesis, or it’s clear that further data will not lead to it being supported.

This approach is often taken in clinical trials where it might be unethical to test patients beyond the point that the efficacy of the treatment can already be determined.

A common concern is that performing multiple analyses increases the probability of finding an effect that doesn’t exist. However, this can be addressed by adjusting the threshold for statistical significance, Lakens explains.
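The small simulation below illustrates both halves of this point. It assumes the null hypothesis is true (population mean 0, known standard deviation 1), that the data are checked after each of five equal batches, and that collection stops at the first p value below the threshold. Testing every look at .05 pushes the false-positive rate well above 5%; a stricter per-look threshold of about .0158, the Pocock boundary for five equally spaced looks at an overall α of .05, brings it back to roughly 5%. Pocock is only one of several correction schemes (O'Brien–Fleming boundaries and alpha-spending functions are others), and the function name and settings are hypothetical.

```python
import random
from statistics import NormalDist

_Z = NormalDist()


def false_positive_rate(threshold, looks=5, n_per_look=10, sims=5_000, seed=1):
    """Share of simulated null studies that ever reach p < threshold.

    H0 is true by construction: every observation is drawn from N(0, 1).
    The researcher tests H0: mean = 0 after each batch and stops early
    as soon as a result is "significant".
    """
    rng = random.Random(seed)
    hits = 0
    for _ in range(sims):
        total, n = 0.0, 0
        for _ in range(looks):
            total += sum(rng.gauss(0.0, 1.0) for _ in range(n_per_look))
            n += n_per_look
            z = total / n ** 0.5     # z statistic (SD known to be 1)
            p = 2 * _Z.cdf(-abs(z))  # two-sided p value
            if p < threshold:
                hits += 1            # a false positive, because H0 is true
                break
    return hits / sims


print(false_positive_rate(0.05))    # naive: every look tested at .05
print(false_positive_rate(0.0158))  # Pocock-adjusted per-look threshold
```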

#5 Submit a Registered Report

Whichever approach is taken, it’s important to describe the sampling and analyses clearly to permit a fair evaluation by peer reviewers and readers, says Mehler.

Ideally, studies should be preregistered. This allows authors to demonstrate that the tests were determined before rather than after the results were known. In fact, Mehler argues, the best way to ensure that results are published is to submit a Registered Report.

In this format, studies are evaluated and provisionally accepted based on the methods and analysis plan. The paper is then guaranteed to be published if the researchers follow this preregistered plan – whatever the results.

In a recent investigation, Mehler and his colleague, Chris Allen from Cardiff University in the UK, found that Registered Reports led to a much higher rate of null results: 61% compared with 5 to 20% for traditional papers.


How to Write About Negative (Or Null) Results in Academic Research

Researchers are often disappointed when their work yields "negative" results, meaning that the null hypothesis cannot be rejected. However, negative results are essential for research to progress. Negative results tell researchers that they are on the wrong path, or that their current techniques are ineffective. This is a natural and necessary part of discovering something that was previously unknown. Solving problems that lead to negative results is an integral part of being an effective researcher. Publishing negative results that are the result of rigorous research contributes to scientific progress.

There are three main reasons for negative results:

- The original hypothesis was incorrect

- The findings of a published report cannot be replicated

- Technical problems

Here, we will discuss how to write about negative results, first focusing on the most common reason: technical problems.

Writing about technical problems

Technical problems might include faulty reagents, inappropriate study design, and insufficient statistical power. Most researchers would prefer to resolve technical problems before presenting their work, and focus instead on their convincing results. In reality, researchers often need to present their work at a conference or to a thesis committee before some problems can be resolved.

When presenting at a conference, the objective should be to clearly describe your overall research goal and why it is important, your preliminary results, the current problem, and how previously published work is informing the steps you are taking to resolve the problem. Here, you want to take advantage of the collective expertise at the conference. By being straightforward about your difficulties, you increase the chance that someone can help you find a solution.

When presenting to a thesis committee, much of what you discuss will be the same (overall research goal and why it is important, results, problem(s) and possible solutions). Your primary goal is to show that you are well prepared to move forward in your research career, despite the recent difficulties. The thesis defense is a defined stopping point, so most thesis students should write about solutions they would pursue if they were to continue the work. For example, "To resolve this problem, it would be advisable to increase the survey area by a factor of 4, and then…" In contrast, researchers who will be continuing their work should write about possible solutions using present and future tense. For example, "To resolve this problem, we are currently testing a wider variety of standards, and will then conduct preliminary experiments to determine…"

Putting the "re" in "research"

Whether you are presenting at a conference, defending a thesis, applying for funding, or simply trying to make progress in your research, you will often need to search through the academic literature to determine the best path forward. This is especially true when you get unexpected results, either positive or negative. When trying to resolve a technical problem, you will often find yourself carefully reading the materials and methods sections of papers that address similar research questions, or that used similar techniques to explore very different problems. For example, a single computer algorithm might be adapted to address research questions in many different fields.

In searching through published papers and less formal methods of communication—such as conference abstracts—you may come to appreciate the important details that good researchers will include when discussing technical problems or other negative results. For example, "We found that participants were more likely to complete the process when light refreshments were provided between the two sessions." By including this information, the authors may help other researchers save time and resources.

Thus, you are advised to be as thorough as possible in reviewing the relevant literature, to find the most promising solutions for technical problems. When presenting your work, show that you have carefully considered the possibilities, and have developed a realistic plan for moving forward. This will help a thesis committee view your efforts favorably, and can also convince possible collaborators or advisors to invest time in helping you.

Publishing negative results

Negative results due to technical problems may be acceptable for a conference presentation or a thesis at the undergraduate or master's degree level. However, they are not sufficient for publication, a Ph.D. dissertation, or tenure. In those situations, you will need to resolve the technical problem and generate high-quality results (either positive or negative) that stand up to rigorous analysis. Depending on the research field, high-quality negative results might include multiple readouts and narrow confidence intervals.
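As a rough illustration of why a narrow confidence interval makes a negative result convincing, the sketch below computes a 95% confidence interval for a difference between two group means from summary statistics. It assumes a large-sample normal approximation, and the function name and numbers are hypothetical. Two studies that both find no difference can carry very different information: the one with the narrower interval rules out all but small effects.

```python
from statistics import NormalDist


def diff_ci(mean1, mean2, sd1, sd2, n1, n2, level=0.95):
    """Approximate confidence interval for mean1 - mean2 (normal approximation)."""
    se = (sd1 ** 2 / n1 + sd2 ** 2 / n2) ** 0.5
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for a 95% interval
    diff = mean1 - mean2
    return diff - z * se, diff + z * se


# Both hypothetical studies observe exactly the same means
print(diff_ci(10.0, 10.0, 2.0, 2.0, 20, 20))      # wide: roughly +/- 1.24
print(diff_ci(10.0, 10.0, 2.0, 2.0, 2000, 2000))  # narrow: roughly +/- 0.12
```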

Researchers are often reluctant to publish negative results, especially if their data don't support an interesting alternative hypothesis. Traditionally, journals have been reluctant to publish negative results that are not paired with positive results, even if the study is well designed and has sufficient statistical power. This is starting to change, especially for medical research, but publishing negative results can still be an uphill battle.

Not publishing high-quality negative results is a disservice to the scientific community and the people who support it (including taxpayers), since other scientists may need to repeat the work. For studies involving animal research or human tissue samples, not publishing would squander significant sacrifices. For research involving medical treatments, especially studies that contradict a published report, not publishing negative results leads to an inaccurate understanding of treatment efficacy.

So how can researchers write about negative results in a way that reflects their importance? Let's consider a common reason for negative results: the original hypothesis was incorrect.

Writing about negative results when the original hypothesis was incorrect

Researchers should be comfortable with being wrong some of the time, such as when results don't support an initial hypothesis. After all, research wouldn't be necessary if we already knew the answer to every possible question. The next step is usually to revise the hypothesis after reconsidering the available data, reading through the relevant literature, and consulting with colleagues.

Ideally, a revised hypothesis will lead to results that allow you to reject a (revised) null hypothesis. The negative results can then be reported alongside the positive results, possibly bolstering the significance of both. For example, "The DNA mutations in region A had a significant effect on gene expression, while the mutations outside of region A had no effect." Don't forget to include important details about how you overcame technical problems, so that other researchers don't need to reinvent the wheel.

Unfortunately, it isn't always possible to pair negative results with related positive results. For example, imagine a year-long study on the effect of COVID-19 shelter-in-place orders on the mental health of avid video game players compared to people who don't play video games. Despite using well-established tools for measuring mental health, having a large sample size, and comparing multiple subpopulations (e.g. gamers who live alone vs. gamers who live with others), no significant differences were identified. There is no way to modify and repeat this study because the same shelter-in-place conditions no longer exist. So how can this research be presented effectively?

Writing when you only have negative results

When you write a scientific paper to report negative results, the sections will be the same as for any other paper: Introduction, Materials and Methods, Results and Discussion. In the introduction, you should prepare your reader for the possibility of negative results. You can highlight gaps or inconsistencies in past research, and point to data that could indicate an incomplete understanding of the situation.

In the example about video game players, you might highlight data showing that gamers are statistically very similar to large chunks of the population in terms of age, education, marital status, etc. You might discuss how the stigma associated with playing video games might be unfair and harmful to people in certain situations. You could discuss research showing the benefits of playing video games, and contrast gaming with engaging in social media, which is another modern hobby. Putting a positive spin on negative results can make the difference between a published manuscript and rejection.

In a paper that focuses on negative results—especially one that contradicts published findings—the research design and data analysis must be impeccable. You may need to collaborate with other researchers to ensure that your methods are sound, and apply multiple methods of data analysis.

As long as the research is rigorous, negative results should be used to inform and guide future experiments. This is how science improves our understanding of the world.


Chapter 13: Inferential Statistics

Understanding Null Hypothesis Testing

Learning Objectives

- Explain the purpose of null hypothesis testing, including the role of sampling error.

- Describe the basic logic of null hypothesis testing.

- Describe the role of relationship strength and sample size in determining statistical significance and make reasonable judgments about statistical significance based on these two factors.

The Purpose of Null Hypothesis Testing

As we have seen, psychological research typically involves measuring one or more variables for a sample and computing descriptive statistics for that sample. In general, however, the researcher’s goal is not to draw conclusions about that sample but to draw conclusions about the population that the sample was selected from. Thus researchers must use sample statistics to draw conclusions about the corresponding values in the population. These corresponding values in the population are called parameters. Imagine, for example, that a researcher measures the number of depressive symptoms exhibited by each of 50 clinically depressed adults and computes the mean number of symptoms. The researcher probably wants to use this sample statistic (the mean number of symptoms for the sample) to draw conclusions about the corresponding population parameter (the mean number of symptoms for clinically depressed adults).

Unfortunately, sample statistics are not perfect estimates of their corresponding population parameters. This is because there is a certain amount of random variability in any statistic from sample to sample. The mean number of depressive symptoms might be 8.73 in one sample of clinically depressed adults, 6.45 in a second sample, and 9.44 in a third, even though these samples are selected randomly from the same population. Similarly, the correlation (Pearson’s r) between two variables might be +.24 in one sample, −.04 in a second sample, and +.15 in a third, again even though these samples are selected randomly from the same population. This random variability in a statistic from sample to sample is called sampling error. (Note that the term error here refers to random variability and does not imply that anyone has made a mistake. No one “commits a sampling error.”)
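A short simulation makes sampling error concrete. The sketch below builds a hypothetical population of 100,000 scores (normally distributed with mean 8 and SD 2; these values are assumptions, not data from the text), then draws five random samples of 50 and compares each sample mean (a statistic) with the population mean (the parameter).

```python
import random
from statistics import fmean

rng = random.Random(42)  # fixed seed so the run is reproducible

# Hypothetical population of symptom scores
population = [rng.gauss(8, 2) for _ in range(100_000)]
parameter = fmean(population)  # the population mean

# Five random samples of 50 drawn from the SAME population
sample_means = [fmean(rng.sample(population, 50)) for _ in range(5)]

print(f"population mean: {parameter:.2f}")
for i, m in enumerate(sample_means, start=1):
    print(f"sample {i}: mean = {m:.2f} (error = {m - parameter:+.2f})")
```

Each sample mean differs from the parameter and from the other sample means, even though nothing went wrong: that spread is sampling error.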

One implication of this is that when there is a statistical relationship in a sample, it is not always clear that there is a statistical relationship in the population. A small difference between two group means in a sample might indicate that there is a small difference between the two group means in the population. But it could also be that there is no difference between the means in the population and that the difference in the sample is just a matter of sampling error. Similarly, a Pearson’s r value of −.29 in a sample might mean that there is a negative relationship in the population. But it could also be that there is no relationship in the population and that the relationship in the sample is just a matter of sampling error.

In fact, any statistical relationship in a sample can be interpreted in two ways:

- There is a relationship in the population, and the relationship in the sample reflects this.

- There is no relationship in the population, and the relationship in the sample reflects only sampling error.

The purpose of null hypothesis testing is simply to help researchers decide between these two interpretations.

The Logic of Null Hypothesis Testing

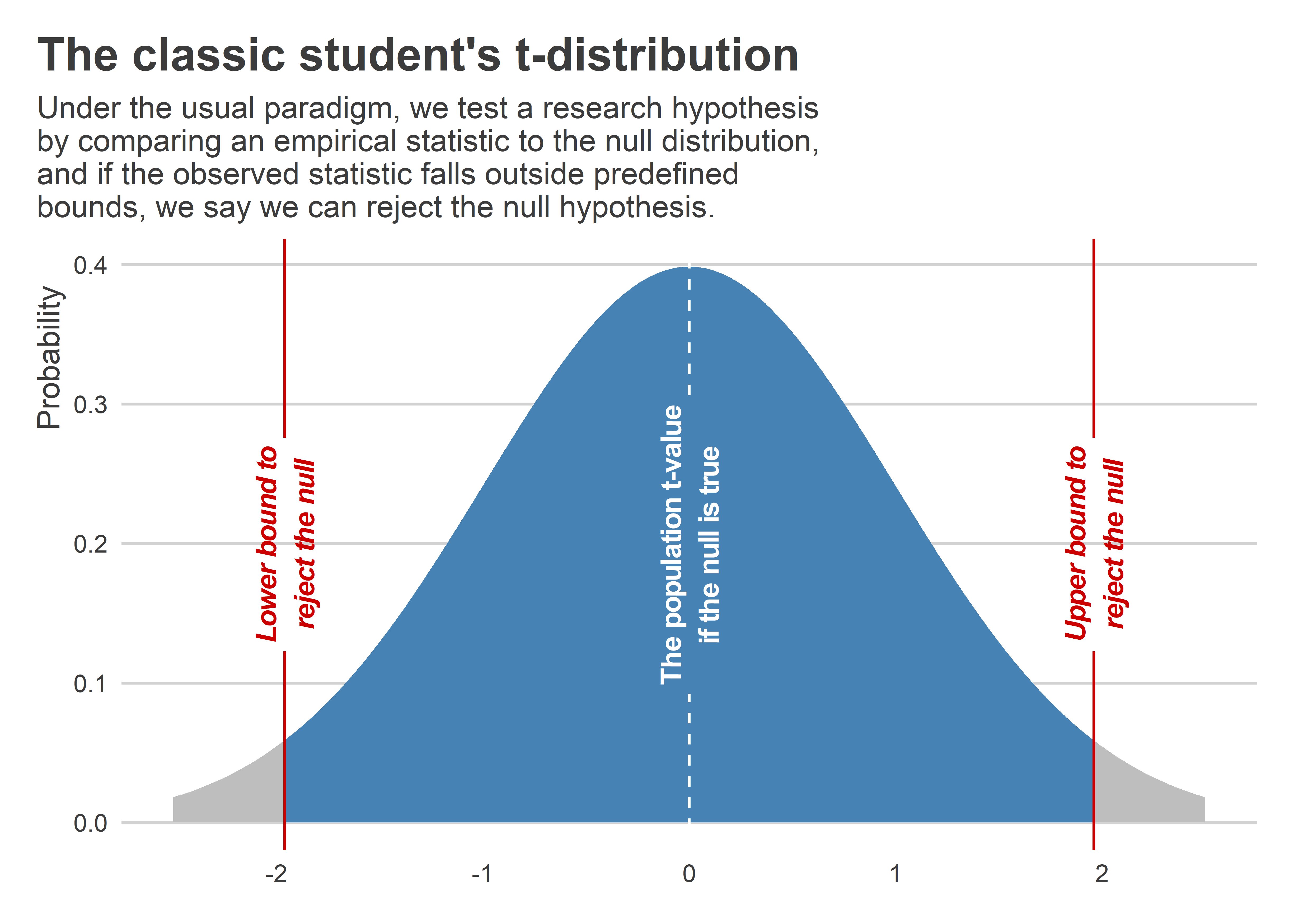

Null hypothesis testing is a formal approach to deciding between two interpretations of a statistical relationship in a sample. One interpretation is called the null hypothesis (often symbolized H₀ and read as “H-naught”). This is the idea that there is no relationship in the population and that the relationship in the sample reflects only sampling error. Informally, the null hypothesis is that the sample relationship “occurred by chance.” The other interpretation is called the alternative hypothesis (often symbolized as H₁). This is the idea that there is a relationship in the population and that the relationship in the sample reflects this relationship in the population.

Again, every statistical relationship in a sample can be interpreted in either of these two ways: It might have occurred by chance, or it might reflect a relationship in the population. So researchers need a way to decide between them. Although there are many specific null hypothesis testing techniques, they are all based on the same general logic. The steps are as follows:

- Assume for the moment that the null hypothesis is true. There is no relationship between the variables in the population.

- Determine how likely the sample relationship would be if the null hypothesis were true.

- If the sample relationship would be extremely unlikely, then reject the null hypothesis in favour of the alternative hypothesis. If it would not be extremely unlikely, then retain the null hypothesis.
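The three steps can be made concrete with a permutation test, one specific null hypothesis testing technique among many (it is not the method used in the studies discussed in this chapter). The sketch assumes two small hypothetical groups of scores. If the null hypothesis is true, the group labels are arbitrary, so repeatedly shuffling them shows how large a mean difference chance alone tends to produce.

```python
import random
from statistics import fmean


def permutation_test(group_a, group_b, n_perm=10_000, seed=0):
    """Two-sided permutation test for a difference between two group means."""
    rng = random.Random(seed)
    observed = abs(fmean(group_a) - fmean(group_b))
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    extreme = 0
    for _ in range(n_perm):
        # Step 1: assume H0, so any relabelling of the scores is equally likely
        rng.shuffle(pooled)
        diff = abs(fmean(pooled[:n_a]) - fmean(pooled[n_a:]))
        # Step 2: count how often chance alone is at least as extreme
        if diff >= observed:
            extreme += 1
    return (extreme + 1) / (n_perm + 1)  # p value (with +1 correction)


group_a = [12, 15, 14, 16, 13, 17, 15, 14]  # hypothetical scores
group_b = [10, 11, 9, 12, 10, 11, 13, 10]

p = permutation_test(group_a, group_b)
alpha = 0.05
# Step 3: reject H0 only if the result would be unlikely under H0
print(f"p = {p:.4f}:", "reject H0" if p < alpha else "retain H0")
```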

Following this logic, we can begin to understand why Mehl and his colleagues concluded that there is no difference in talkativeness between women and men in the population. In essence, they asked the following question: “If there were no difference in the population, how likely is it that we would find a small difference of d = 0.06 in our sample?” Their answer to this question was that this sample relationship would be fairly likely if the null hypothesis were true. Therefore, they retained the null hypothesis—concluding that there is no evidence of a sex difference in the population. We can also see why Kanner and his colleagues concluded that there is a correlation between hassles and symptoms in the population. They asked, “If the null hypothesis were true, how likely is it that we would find a strong correlation of +.60 in our sample?” Their answer to this question was that this sample relationship would be fairly unlikely if the null hypothesis were true. Therefore, they rejected the null hypothesis in favour of the alternative hypothesis—concluding that there is a positive correlation between these variables in the population.

A crucial step in null hypothesis testing is finding the likelihood of the sample result if the null hypothesis were true. This probability is called the p value. A low p value means that the sample result would be unlikely if the null hypothesis were true and leads to the rejection of the null hypothesis. A high p value means that the sample result would be likely if the null hypothesis were true and leads to the retention of the null hypothesis. But how low must the p value be before the sample result is considered unlikely enough to reject the null hypothesis? In null hypothesis testing, this criterion is called α (alpha) and is almost always set to .05. If there is less than a 5% chance of a result as extreme as the sample result if the null hypothesis were true, then the null hypothesis is rejected. When this happens, the result is said to be statistically significant. If there is greater than a 5% chance of a result as extreme as the sample result when the null hypothesis is true, then the null hypothesis is retained. This does not necessarily mean that the researcher accepts the null hypothesis as true, only that there is not currently enough evidence to reject it. Researchers often use the expression “fail to reject the null hypothesis” rather than “retain the null hypothesis,” but they never use the expression “accept the null hypothesis.”

The Misunderstood p Value

The p value is one of the most misunderstood quantities in psychological research (Cohen, 1994) [1]. Even professional researchers misinterpret it, and it is not unusual for such misinterpretations to appear in statistics textbooks!

The most common misinterpretation is that the p value is the probability that the null hypothesis is true, that is, that the sample result occurred by chance. For example, a misguided researcher might say that because the p value is .02, there is only a 2% chance that the result is due to chance and a 98% chance that it reflects a real relationship in the population. But this is incorrect. The p value is really the probability of a result at least as extreme as the sample result if the null hypothesis were true. So a p value of .02 means that if the null hypothesis were true, a sample result this extreme would occur only 2% of the time.

You can avoid this misunderstanding by remembering that the p value is not the probability that any particular hypothesis is true or false. Instead, it is the probability of obtaining a result at least as extreme as the sample result if the null hypothesis were true.
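The definition can be checked by simulation. The sketch below makes the null hypothesis true by construction (every score comes from a population with mean 0 and a known SD of 1), runs many hypothetical studies of 30 participants each, and counts how often a one-sample z test returns p ≤ .02. The share comes out close to 2%, which matches the definition above; the simulation says nothing about the probability that H₀ itself is true. The function name, study size, and seed are assumptions for illustration.

```python
import random
from statistics import NormalDist, fmean


def null_p_values(n_studies=20_000, n=30, seed=7):
    """p values from simulated studies in which H0 (mean = 0) is true."""
    rng = random.Random(seed)
    std_normal = NormalDist()
    p_values = []
    for _ in range(n_studies):
        sample = [rng.gauss(0.0, 1.0) for _ in range(n)]
        z = fmean(sample) * n ** 0.5                  # z statistic, SD known to be 1
        p_values.append(2 * std_normal.cdf(-abs(z)))  # two-sided p value
    return p_values


ps = null_p_values()
share = sum(p <= 0.02 for p in ps) / len(ps)
print(f"share of null studies with p <= .02: {share:.3f}")
```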

Role of Sample Size and Relationship Strength

Recall that null hypothesis testing involves answering the question, “If the null hypothesis were true, what is the probability of a sample result as extreme as this one?” In other words, “What is the p value?” It can be helpful to see that the answer to this question depends on just two considerations: the strength of the relationship and the size of the sample. Specifically, the stronger the sample relationship and the larger the sample, the less likely the result would be if the null hypothesis were true. That is, the lower the p value. This should make sense. Imagine a study in which a sample of 500 women is compared with a sample of 500 men in terms of some psychological characteristic, and Cohen’s d is a strong 0.50. If there were really no sex difference in the population, then a result this strong based on such a large sample should seem highly unlikely. Now imagine a similar study in which a sample of three women is compared with a sample of three men, and Cohen’s d is a weak 0.10. If there were no sex difference in the population, then a relationship this weak based on such a small sample should seem likely. And this is precisely why the null hypothesis would be rejected in the first example and retained in the second.
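The two thought experiments can be put into numbers with a rough approximation. For two equal groups of size n, the test statistic for Cohen's d is approximately z ≈ d·√(n/2), and the sketch below turns that into a two-sided p value. This normal approximation is an assumption made for illustration and is especially crude for tiny samples, where a t test would be used in practice.

```python
from statistics import NormalDist


def approx_p_from_d(d, n_per_group):
    """Rough two-sided p value for Cohen's d with two equal-sized groups."""
    z = abs(d) * (n_per_group / 2) ** 0.5
    return 2 * NormalDist().cdf(-z)


print(approx_p_from_d(0.50, 500))  # 500 women vs. 500 men, d = 0.50
print(approx_p_from_d(0.10, 3))    # 3 women vs. 3 men, d = 0.10
```

The first p value is vanishingly small, far below .05, while the second is about .90, in line with the intuition that the first result would be rejected and the second retained.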

Of course, sometimes the result can be weak and the sample large, or the result can be strong and the sample small. In these cases, the two considerations trade off against each other so that a weak result can be statistically significant if the sample is large enough and a strong relationship can be statistically significant even if the sample is small. Table 13.1 shows roughly how relationship strength and sample size combine to determine whether a sample result is statistically significant. The columns of the table represent the three levels of relationship strength: weak, medium, and strong. The rows represent four sample sizes that can be considered small, medium, large, and extra large in the context of psychological research. Thus each cell in the table represents a combination of relationship strength and sample size. If a cell contains the word Yes, then this combination would be statistically significant for both Cohen’s d and Pearson’s r. If it contains the word No, then it would not be statistically significant for either. There is one cell where the decision for d and r would be different and another where it might be different depending on some additional considerations, which are discussed in Section 13.2 “Some Basic Null Hypothesis Tests”.
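A rough normal approximation can generate a significance grid in the spirit of Table 13.1. This is not a reproduction of that table: the effect sizes (Cohen's conventional d = 0.20, 0.50, and 0.80 for weak, medium, and strong), the per-group sample sizes (10, 25, 50, and 250, i.e. totals of 20, 50, 100, and 500), and the approximation z ≈ d·√(n/2) are assumptions chosen for illustration, and only Cohen's d is covered.

```python
from statistics import NormalDist

STRENGTHS = {"weak": 0.20, "medium": 0.50, "strong": 0.80}  # Cohen's d benchmarks
SIZES = [10, 25, 50, 250]  # hypothetical participants per group


def significant(d, n_per_group, alpha=0.05):
    """True if the approximate two-sided p value is below alpha."""
    z = abs(d) * (n_per_group / 2) ** 0.5
    return 2 * NormalDist().cdf(-z) < alpha


print("n/group  " + "  ".join(f"{name:>6}" for name in STRENGTHS))
for n in SIZES:
    cells = ["Yes" if significant(d, n) else "No" for d in STRENGTHS.values()]
    print(f"{n:>7}  " + "  ".join(f"{c:>6}" for c in cells))
```

Under these assumptions, weak effects reach significance only in the largest samples, while strong effects are significant from 25 per group upward, which mirrors the pattern the text describes.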

Although Table 13.1 provides only a rough guideline, it shows very clearly that weak relationships based on medium or small samples are never statistically significant and that strong relationships based on medium or larger samples are always statistically significant. If you keep this lesson in mind, you will often know whether a result is statistically significant based on the descriptive statistics alone. It is extremely useful to be able to develop this kind of intuitive judgment. One reason is that it allows you to develop expectations about how your formal null hypothesis tests are going to come out, which in turn allows you to detect problems in your analyses. For example, if your sample relationship is strong and your sample is medium, then you would expect to reject the null hypothesis. If for some reason your formal null hypothesis test indicates otherwise, then you need to double-check your computations and interpretations. A second reason is that the ability to make this kind of intuitive judgment is an indication that you understand the basic logic of this approach in addition to being able to do the computations.

Statistical Significance Versus Practical Significance

Table 13.1 illustrates another extremely important point. A statistically significant result is not necessarily a strong one. Even a very weak result can be statistically significant if it is based on a large enough sample. This is closely related to Janet Shibley Hyde’s argument about sex differences (Hyde, 2007) [2]. The differences between women and men in mathematical problem solving and leadership ability are statistically significant. But the word significant can cause people to interpret these differences as strong and important, perhaps even important enough to influence the college courses they take or even who they vote for. As we have seen, however, these statistically significant differences are actually quite weak, perhaps even “trivial.”

This is why it is important to distinguish between the statistical significance of a result and the practical significance of that result. Practical significance refers to the importance or usefulness of the result in some real-world context. Many sex differences are statistically significant—and may even be interesting for purely scientific reasons—but they are not practically significant. In clinical practice, this same concept is often referred to as “clinical significance.” For example, a study on a new treatment for social phobia might show that it produces a statistically significant positive effect. Yet this effect still might not be strong enough to justify the time, effort, and other costs of putting it into practice—especially if easier and cheaper treatments that work almost as well already exist. Although statistically significant, this result would be said to lack practical or clinical significance.
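To see how statistical and practical significance can come apart, the sketch below uses hypothetical numbers: two groups of 50,000 people whose means on a test with an SD of 15 differ by only 0.3 points. It reports both Cohen's d and an approximate two-sided p value, using the rough normal approximation z ≈ d·√(n/2); the function name and values are assumptions for illustration.

```python
from statistics import NormalDist


def effect_and_p(mean1, mean2, sd, n_per_group):
    """Cohen's d and an approximate two-sided p value for two equal groups."""
    d = (mean1 - mean2) / sd
    z = abs(d) * (n_per_group / 2) ** 0.5
    return d, 2 * NormalDist().cdf(-z)


d, p = effect_and_p(100.3, 100.0, 15.0, 50_000)
print(f"d = {d:.2f}, p = {p:.4f}")
```

The result is statistically significant (p is well below .05), yet d = 0.02 is a trivially small difference. Whether it matters in practice is a question the p value cannot answer.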

Key Takeaways

- Null hypothesis testing is a formal approach to deciding whether a statistical relationship in a sample reflects a real relationship in the population or is just due to chance.

- The logic of null hypothesis testing involves assuming that the null hypothesis is true, finding how likely the sample result would be if this assumption were correct, and then making a decision. If the sample result would be unlikely if the null hypothesis were true, then it is rejected in favour of the alternative hypothesis. If it would not be unlikely, then the null hypothesis is retained.

- The probability of obtaining the sample result if the null hypothesis were true (the p value) is based on two considerations: relationship strength and sample size. Reasonable judgments about whether a sample relationship is statistically significant can often be made by quickly considering these two factors.

- Statistical significance is not the same as relationship strength or importance. Even weak relationships can be statistically significant if the sample size is large enough. It is important to consider relationship strength and the practical significance of a result in addition to its statistical significance.

- Discussion: Imagine a study showing that people who eat more broccoli tend to be happier. Explain for someone who knows nothing about statistics why the researchers would conduct a null hypothesis test.

- The correlation between two variables is r = −.78 based on a sample size of 137.

- The mean score on a psychological characteristic for women is 25 (SD = 5) and the mean score for men is 24 (SD = 5). There were 12 women and 10 men in this study.

- In a memory experiment, the mean number of items recalled by the 40 participants in Condition A was 0.50 standard deviations greater than the mean number recalled by the 40 participants in Condition B.

- In another memory experiment, the mean scores for participants in Condition A and Condition B came out exactly the same!

- A student finds a correlation of r = .04 between the number of units the students in his research methods class are taking and the students’ level of stress.
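For the correlation items, you can run a rough check with Fisher's z transformation. It converts r into an approximately normal test statistic for the null hypothesis that the population correlation is zero. This is a large-sample approximation; an exact test would use the t distribution with N − 2 degrees of freedom. The last item does not state a class size, so the N = 30 used for it below is a hypothetical assumption.

```python
import math


def correlation_p_value(r: float, n: int) -> float:
    """Approximate two-sided p value for H0: population correlation = 0.

    Uses Fisher's z transformation, z = atanh(r) * sqrt(n - 3), which is
    approximately standard normal under H0 for moderate-to-large n.
    """
    if not -1 < r < 1:
        raise ValueError("r must be strictly between -1 and 1")
    if n <= 3:
        raise ValueError("n must be greater than 3")
    z = math.atanh(r) * math.sqrt(n - 3)
    # Two-sided tail probability of a standard normal: erfc(|z| / sqrt(2))
    return math.erfc(abs(z) / math.sqrt(2))


if __name__ == "__main__":
    # Item: r = -.78 with a sample size of 137
    print(f"r = -.78, N = 137: p = {correlation_p_value(-0.78, 137):.2e}")
    # Item: r = .04; N = 30 is a HYPOTHETICAL class size (not given in the item)
    print(f"r = .04, N = 30:   p = {correlation_p_value(0.04, 30):.3f}")
```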

Long Descriptions

“Null Hypothesis” long description: A comic depicting a man and a woman talking in the foreground. In the background is a child working at a desk. The man says to the woman, “I can’t believe schools are still teaching kids about the null hypothesis. I remember reading a big study that conclusively disproved it years ago.”

“Conditional Risk” long description: A comic depicting two hikers beside a tree during a thunderstorm. A bolt of lightning goes “crack” in the dark sky as thunder booms. One of the hikers says, “Whoa! We should get inside!” The other hiker says, “It’s okay! Lightning only kills about 45 Americans a year, so the chances of dying are only one in 7,000,000. Let’s go on!” The comic’s caption says, “The annual death rate among people who know that statistic is one in six.”

Media Attributions

- Null Hypothesis by XKCD CC BY-NC (Attribution NonCommercial)

- Conditional Risk by XKCD CC BY-NC (Attribution NonCommercial)

- Cohen, J. (1994). The earth is round (p < .05). American Psychologist, 49, 997–1003.

- Hyde, J. S. (2007). New directions in the study of gender similarities and differences. Current Directions in Psychological Science, 16, 259–263.

Glossary

Parameters: Values in a population that correspond to variables measured in a study.

Sampling error: The random variability in a statistic from sample to sample.

Null hypothesis testing: A formal approach to deciding between two interpretations of a statistical relationship in a sample.

Null hypothesis: The idea that there is no relationship in the population and that the relationship in the sample reflects only sampling error.

Alternative hypothesis: The idea that there is a relationship in the population and that the relationship in the sample reflects this relationship in the population.

Reject the null hypothesis: The decision made when the relationship found in the sample would be extremely unlikely if the null hypothesis were true, so the idea that the relationship occurred “by chance” is rejected.

Retain the null hypothesis: The decision made when the relationship found in the sample could plausibly have occurred by chance, so the null hypothesis is not rejected.

p value: The probability that, if the null hypothesis were true, a result at least as extreme as the one found in the sample would occur.

α (alpha): How low the p value must be before the sample result is considered unlikely in null hypothesis testing.

Statistically significant: Describes a result for which there is less than a 5% chance (with α = .05) of obtaining a result at least as extreme if the null hypothesis were true, so the null hypothesis is rejected.

Research Methods in Psychology - 2nd Canadian Edition Copyright © 2015 by Paul C. Price, Rajiv Jhangiani, & I-Chant A. Chiang is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License , except where otherwise noted.


Researcher Alert! 5 Ways to Deal With Null, Inconclusive, or Insignificant Results

- Was your original hypothesis based on credible literature sources and background information?

- Do you need to re-run certain experiments or run additional experiments with other variables?

- Have you correctly assessed your data?

What if the Results are not Statistically Significant?

- Validate your methods for their sensitivity and specificity

- Contact the authors of original studies

- Illustration of the role of communication

- Collaborate with experts in your field

- Prevention is better than cure

- Authors may submit a detailed description of the intended study (objectives, sample size, methods, planned analyses) to a credible study registry such as the Open Science Framework.

- Authors may provide a complete account of their work (background literature, hypothesis and the rationale, objectives, methodologies and the planned statistical analyses and pilot data if applicable) to the target journal for peer review prior to data collection. This allows the referees to evaluate the theoretical basis and experimental design before beginning actual research.

An in-depth peer review analysis is performed to judge the quality of your proposed work. If found suitable, studies are provisionally accepted. Following the provisional acceptance, authors can proceed with their study. The feedback provided by the reviewers can help you to effectively plan and improve your study design, before embarking on actual experimental work. On completion of all the experiments, authors have to submit their final manuscript for a second round of peer review to confirm sensible interpretation of the results. If the manuscript passes this quality check, it is likely to receive acceptance, regardless of the results – negative, null or insignificant.



The career path of a scholar, regardless of field, usually begins with a passion to change the world in some way. That goal leads many researchers to pursue work with major, positive outcomes: a significant scientific result that reshapes their field. But while this does happen occasionally, researchers are more likely to conduct experiments that are mundane, with minimal effects or even “null” findings.

When the outcome has so little impact, or none at all, it’s tempting to write the work off as wasted time or a lesson learned and move on, rather than spend the next few months on a manuscript with no exciting conclusion. However, these findings are often just as important as some positive correlations, if not more so, and writing them up can be beneficial, even essential, to research progress.

What is a “Null” Finding?

The term “null,” as applied to research findings, comes from the null hypothesis used in statistical hypothesis testing. The null hypothesis is the neutral claim that there is no effect or relationship in the population. You control your variables as far as possible and then, through your study design and analysis, gather evidence that would allow you to reject the null hypothesis you initially set up. A null finding is one in which the data do not provide enough evidence to reject it.

Null results are common, because many hypothesized effects turn out to be absent or too small for a study to detect. However, many journals are reluctant to publish an experiment in which no positive outcome occurred, and this applies to both null findings and negative results. Positive findings are published more often, and faster, than either of the other outcomes. Yet reporting null results plays a crucial role in evidence-based research.

The Importance of Publishing Your Null Results

As a scholar, you understand the frustration of spending time on work only to find that the answer was already known. If that knowledge had been readily accessible, you could have based your next research step on it and saved yourself a great deal of time.

Null results might not have major implications for you, but they could be highly valuable to other researchers. Publishing them has benefits such as:

● Providing checks and balances for new research ideas. If only positive findings are published, there is no way to check whether they are all accurate and unbiased. False positives exist in science, and without null or negative results it is harder to see the whole picture of a treatment or outcome.

● Saving time and resources. Researchers often pursue the same questions. When a study has already been performed and its results are published, others do not need to repeat it: they can build on it instead, or verify their own results against it.

● Helping to address the replication crisis. A fuller record of null and negative findings supports meta-analyses and helps others replicate studies that were not thoroughly documented.
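The meta-analysis point can be illustrated by pooling hypothetical effect sizes with a fixed-effect, inverse-variance weighted average. This is one common meta-analytic model, but not the only one. The sketch compares two pooled estimates:

- three hypothetical positive studies on their own;
- the same three studies plus three hypothetical null studies.

The comparison shows how leaving out null results can inflate the apparent effect. All numbers are invented for illustration.

```python
import math


def fixed_effect_pool(estimates, standard_errors):
    """Fixed-effect (inverse-variance weighted) pooled estimate and its standard error."""
    if not estimates or len(estimates) != len(standard_errors):
        raise ValueError("need non-empty lists of equal length")
    weights = [1 / se ** 2 for se in standard_errors]  # more precise studies weigh more
    total_weight = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, estimates)) / total_weight
    pooled_se = math.sqrt(1 / total_weight)
    return pooled, pooled_se


if __name__ == "__main__":
    # HYPOTHETICAL (effect size, standard error) pairs, for illustration only
    positive_studies = [(0.45, 0.15), (0.50, 0.18), (0.40, 0.16)]
    null_studies = [(0.02, 0.12), (-0.05, 0.14), (0.08, 0.13)]

    for label, studies in [("positive only", positive_studies),
                           ("positive + null", positive_studies + null_studies)]:
        effects = [effect for effect, _ in studies]
        ses = [se for _, se in studies]
        est, se = fixed_effect_pool(effects, ses)
        low, high = est - 1.96 * se, est + 1.96 * se
        print(f"{label:>15}: pooled = {est:.3f}, 95% CI [{low:.3f}, {high:.3f}]")
```

A random-effects model, which allows true effects to vary between studies, is often preferred in practice. The fixed-effect version is used here only because it is the simplest to show.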

More journals are now recognizing the importance of null findings, and some focus primarily on publishing articles with null or negative outcomes. As a scholar, recognize that your null and negative experiments deserve as much attention as positive outcomes and should be written up and submitted in the same way.
