Software Defect-Based Prediction Using Logistic Regression: Review and Challenges

- Conference paper

- First Online: 19 October 2021

- Jayanti Goyal &

- Ripu Ranjan Sinha

Part of the book series: Advances in Intelligent Systems and Computing (AISC, volume 1235)

Developing software analysis and prediction systems is a significant and much-needed area of software testing within software engineering. Automatic software predictors analyze, predict, and classify a variety of errors, faults, and defects using different learning-based methods. Many research contributions have evolved in this direction. In recent years, however, they have faced challenges of software validation, imbalanced and unequal data, classifier selection, code size, code dependence, resources, accuracy, and performance. There is, therefore, a great need for an effective, efficient, and automated software defect-based prediction system that uses machine learning techniques. In this paper, a variety of such studies and systems are discussed and compared. Their measurement methods, such as metrics, features, parameters, classifiers, accuracy, and data sets, are identified and distinguished. In addition, their challenges, threats, and limitations are stated to put the effectiveness of these systems in context. The review found that 44% of such systems used NASA’s PROMISE data samples, 68.18% used software metrics, and 16% used the logistic regression method.

Author information

Authors and affiliations.

RTU, Kota, Rajasthan, India

Jayanti Goyal & Ripu Ranjan Sinha

Editor information

Editors and affiliations.

The PNG University of Technology, Lae, Papua New Guinea

Ashish Kumar Luhach

CHRIST (Deemed to be University), Bangalore, India

Ramesh Chandra Poonia

University of Eastern Finland, Kuopio, Finland

Xiao-Zhi Gao

Namibia University of Science and Technology, Windhoek, Namibia

Dharm Singh Jat

Copyright information

© 2022 The Author(s), under exclusive license to Springer Nature Singapore Pte Ltd.

About this paper

Cite this paper.

Goyal, J., Ranjan Sinha, R. (2022). Software Defect-Based Prediction Using Logistic Regression: Review and Challenges. In: Luhach, A.K., Poonia, R.C., Gao, XZ., Singh Jat, D. (eds) Second International Conference on Sustainable Technologies for Computational Intelligence. Advances in Intelligent Systems and Computing, vol 1235. Springer, Singapore. https://doi.org/10.1007/978-981-16-4641-6_20

DOI : https://doi.org/10.1007/978-981-16-4641-6_20

Published : 19 October 2021

Publisher Name : Springer, Singapore

Print ISBN : 978-981-16-4640-9

Online ISBN : 978-981-16-4641-6

eBook Packages : Intelligent Technologies and Robotics (R0)

Logistic Regression in Medical Research

Patrick Schober

From the * Department of Anesthesiology, Amsterdam UMC, Vrije Universiteit Amsterdam, Amsterdam, the Netherlands

Thomas R. Vetter

† Department of Surgery and Perioperative Care, Dell Medical School, University of Texas, Austin, Texas.

Logistic regression is used to estimate the relationship between one or more independent variables and a binary (dichotomous) outcome variable.

Related Article, see p 367

In this issue of Anesthesia & Analgesia , Park et al 1 report results of an observational study on the risk of hypoxemia (defined as a peripheral oxygen saturation <90%) during rapid sequence induction (RSI) versus a modified RSI technique in infants and neonates undergoing pyloromyotomy. The authors used logistic regression to analyze the association between the induction technique and the risk of hypoxemia while controlling for potential confounders.

Logistic regression is used to estimate the association of one or more independent (predictor) variables with a binary dependent (outcome) variable. 2 A binary (or dichotomous) variable is a categorical variable that can only take 2 different values or levels, such as “positive for hypoxemia versus negative for hypoxemia” or “dead versus alive.” A simple example with only one independent variable (X) is shown in the Figure, where the dependent variable can have a value of either 0 or 1. In this example, as the value of the independent variable increases, the probability that the dependent variable takes the value of 1 also seems to increase. More formally, logistic regression can be used to estimate the probability (or risk) of a particular outcome given the value(s) of the independent variable(s).

Relationship between a continuous independent variable X and a binary outcome that can take values 0 (eg, “no”) or 1 (eg, “yes”). As shown, the probability that the value of the outcome is 1 seems to increase with increasing values of X . A, Using a straight line to model the relationship of the independent variable with the probability provides a poor fit; results in estimated probabilities <0 and >1; and grossly violates the assumptions of linear regression. Logistic regression models a linear relationship of the independent variable with the natural logarithm (ln) of the odds of the outcome variable. B, This translates to a sigmoid relationship between the independent variable and the probability of the outcome being 1, with predicted probabilities appropriately constrained between 0 and 1.

Logistic regression is actually an extension of linear regression. 2 , 3 Rather than modeling a linear relationship between the independent variable (X) and the probability of the outcome (Figure A), which is unnatural since it would allow predicted probabilities outside the range of 0–1, it assumes a linear (straight line) relationship with the logit (the natural logarithm of the odds) of the outcome. The regression coefficients represent the intercept (b0) and slope (b1) of this line:

$$\ln\left(\frac{P}{1-P}\right) = b_0 + b_1 X$$

When solving this equation for the probability (P), the probability has a sigmoidal relationship with the independent variable (Figure B), and the estimated probabilities are now appropriately constrained between 0 and 1.
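As a minimal sketch of the relationship just described, ln(P / (1 - P)) = b0 + b1 * X, the snippet below converts between the logit and the probability. The coefficient values are hypothetical and chosen only for illustration; they do not come from the article.

```python
import math

def logit(p):
    """Natural logarithm of the odds p / (1 - p); defined for 0 < p < 1."""
    return math.log(p / (1.0 - p))

def predicted_probability(x, b0, b1):
    """Solve ln(P / (1 - P)) = b0 + b1 * x for P, giving the sigmoid curve."""
    return 1.0 / (1.0 + math.exp(-(b0 + b1 * x)))

# Hypothetical intercept and slope (assumptions, not estimates from any study).
b0, b1 = -3.0, 0.5

for x in (0, 6, 12):
    p = predicted_probability(x, b0, b1)
    # The logit of P is linear in x, while P itself stays between 0 and 1.
    print(f"x={x:>2}  P={p:.3f}  logit(P)={logit(p):+.2f}")
```

Whatever values are chosen for b0 and b1, the probability returned stays strictly between 0 and 1, which is the property a straight-line model of the probability lacks.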

As with linear regression, logistic regression can be easily extended to accommodate >1 independent (predictor) variable. Researchers can then study the relationship between each variable and the binary (dichotomous) outcome while holding constant the values of the other independent variables. This is particularly useful not only to understand the independent relationship of each variable with the outcome, but also, as done by Park et al, 1 to adjust the estimates for the effects of confounding variables 4 in observational research.

A major advantage of logistic regression compared to other similar approaches like probit regression (and therefore a reason for its popularity among medical researchers) is that the exponentiated logistic regression slope coefficient (e^b) can be conveniently interpreted as an odds ratio. The odds ratio indicates how much the odds of a particular outcome change for a 1-unit increase in the independent variable (for continuous independent variables) or versus a reference category (for categorical variables). For example, the adjusted odds ratio of 2.8 (95% confidence interval [CI], 1.5-5.3) reported by Park et al 1 indicates that the odds of hypoxemia are estimated to be almost 3 times higher with conventional RSI than with the modified technique (the reference category in their analysis), after controlling for the potential confounders.
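The link between a slope coefficient and its odds ratio can be sketched as follows. The coefficient and standard error below are not reported in the text: they are back-calculated from the quoted odds ratio of 2.8 and its 95% CI of 1.5 to 5.3, under the assumption that the interval is a Wald interval (coefficient plus or minus 1.96 standard errors on the log-odds scale).

```python
import math

def odds_ratio(b):
    """Exponentiate a logistic regression slope coefficient."""
    return math.exp(b)

def odds_ratio_ci(b, se, z=1.96):
    """Wald-type confidence interval, computed on the log-odds scale."""
    return math.exp(b - z * se), math.exp(b + z * se)

# Assumed values, back-calculated from the reported OR and CI (not from the study data).
b = math.log(2.8)
se = (math.log(5.3) - math.log(1.5)) / (2 * 1.96)

low, high = odds_ratio_ci(b, se)
print(f"b={b:.3f}  se={se:.3f}  OR={odds_ratio(b):.1f}  95% CI {low:.1f}-{high:.1f}")
```

The interval is symmetric around b on the log-odds scale but not around the odds ratio itself, which is why the reported bounds sit unevenly around 2.8.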

Valid inferences of logistic regression rely on its assumptions being met, which include:

- For simple logistic regression with a continuous independent variable, its relationship with the logit is assumed to be linear. This is basically also true for multivariable logistic regression, but the model can be specified to accommodate a curved relationship.

- Observations must be independent of each other (eg, must not be repeated measurements within the same subjects). Other techniques, like generalized linear mixed-effects models, are required for correlated data. 5

- The model needs to be specified correctly, as explained in more detail in the Statistical Minute on linear regression in the previous issue of Anesthesia & Analgesia . 3 Several methods are available to assess (a) the calibration (how closely the observed risk matches the predicted risk), commonly assessed with the Hosmer-Lemeshow goodness of fit test; and (b) the discrimination (how well the binary outcome can be predicted), commonly assessed by the area under the receiver operating characteristic curve (also referred to as the c-statistic) of the logistic regression model. 6
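Discrimination can be illustrated with a small sketch of the c-statistic, computed here as the share of (event, non-event) pairs in which the event received the higher predicted probability, with ties counted as one half. This pairwise form is equivalent to the area under the ROC curve; the outcomes and predicted probabilities below are hypothetical.

```python
def c_statistic(y_true, y_prob):
    """Proportion of concordant (event, non-event) pairs; ties count as 0.5."""
    events = [p for y, p in zip(y_true, y_prob) if y == 1]
    non_events = [p for y, p in zip(y_true, y_prob) if y == 0]
    if not events or not non_events:
        raise ValueError("both outcome classes must be present")
    concordant = 0.0
    for p_event in events:
        for p_non in non_events:
            if p_event > p_non:
                concordant += 1.0
            elif p_event == p_non:
                concordant += 0.5
    return concordant / (len(events) * len(non_events))

# Hypothetical observed outcomes and model-predicted probabilities.
outcomes = [0, 0, 1, 0, 1, 1]
predicted = [0.10, 0.30, 0.35, 0.60, 0.70, 0.90]
print(round(c_statistic(outcomes, predicted), 3))
```

A value of 0.5 corresponds to predictions no better than chance, and 1.0 to perfect separation of the two outcome groups.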

This Statistical Minute focuses on binary logistic regression, which is usually simply referred to as “logistic regression.” Additional techniques are available for categorical data (multinomial logistic regression) and ordinal data (ordinal logistic regression).

- Open access

- Published: 28 December 2018

A logistic regression investigation of the relationship between the Learning Assistant model and failure rates in introductory STEM courses

- Jessica L. Alzen ORCID: orcid.org/0000-0002-1706-2975 1 ,

- Laurie S. Langdon 1 &

- Valerie K. Otero 1

International Journal of STEM Education volume 5 , Article number: 56 ( 2018 ) Cite this article

Large introductory STEM courses historically have high failure rates, and failing such courses often leads students to change majors or even drop out of college. Instructional innovations such as the Learning Assistant model can influence this trend by changing institutional norms. In collaboration with faculty who teach large-enrollment introductory STEM courses, undergraduate learning assistants (LAs) use research-based instructional strategies designed to encourage active student engagement and elicit student thinking. These instructional innovations help students master the types of skills necessary for college success such as critical thinking and defending ideas. In this study, we use logistic regression with pre-existing institutional data to investigate the relationship between exposure to LA support in large introductory STEM courses and general failure rates in these same and other introductory courses at University of Colorado Boulder.

Our results indicate that exposure to LA support in any STEM gateway course is associated with a 63% reduction in odds of failure for males and a 55% reduction in odds of failure for females in subsequent STEM gateway courses.

Conclusions

The LA program appears related to lower course failure rates in introductory STEM courses, but each department involved in this study implements the LA program in different ways. We hypothesize that these differences may influence student experiences in ways that are not apparent in the current analysis, but more work is necessary to support this hypothesis. Despite this potential limitation, we see that the LA program is consistently associated with lower failure rates in introductory STEM courses. These results extend the research base regarding the relationship between the LA program and positive student outcomes.

Science, technology, engineering, and mathematics (STEM) departments at institutes of higher education historically offer introductory courses that can serve up to 1000 students per semester. Introductory courses of this size, often referred to as “gateway courses,” are cost-effective due to the number of students able to receive instruction in each semester, but they often lend themselves to lecture as the primary method of instruction. Thus, there are few opportunities for substantive interaction between the instructor and students or among students (Matz et al., 2017; Talbot, Hartley, Marzetta, & Wee, 2015). Further, these courses typically have high failure rates (Webb, Stade, & Grover, 2014) and lead many students who begin as STEM majors to either switch majors or drop out of college without a degree (Crisp, Nora, & Taggart, 2009). In efforts to address these issues, STEM departments across the nation now implement active engagement strategies in their classes such as peer instruction and interactive student response systems (i.e., clicker questions) during large lecture meetings (Caldwell, 2007; Chan & Bauer, 2015; Mitchell, Ippolito, & Lewis, 2012; Wilson & Varma-Nelson, 2016). In addition to classroom-specific active engagement interventions, there are programs designed to guide larger instructional innovations at the institution level, such as the Learning Assistant (LA) model.

The LA model was established at University of Colorado Boulder in 2001. The program represents an effort to change institutional values and practices through a low-stakes, bottom-up system of course assistance. The program supports faculty in facilitating increased learner-centered instruction in ways that are most valued by the individual faculty member. A key component of the LA model is undergraduate learning assistants (LAs). LAs are undergraduate students who, through guidance, encourage active engagement in classes. LAs facilitate discussions, help students manage course material, offer study tips, and motivate students. LAs also benefit as they develop content mastery, teaching, and leadership skills. LAs get a monthly stipend for working 10 hours per week, and they also receive training in teaching and learning theories by enrolling in a math and science education seminar taught by discipline-based education researchers. In addition, LAs meet with faculty members once a week to develop deeper understanding of the content, share insights about how students are learning, and prepare for future class meetings (Otero, 2015).

LAs are not peer tutors and typically do not work one-on-one with students. They do not provide direct answers to questions or systematically work out problems with students. Instead, LAs facilitate discussion about conceptual problems among groups of students and they focus on eliciting student thinking and helping students make connections between concepts. This is typically done both in the larger lecture section of the course as well as smaller meetings after the weekly lectures, often referred to as recitation. LAs guide students in learning specific content, but also in developing and defending ideas—important skills for higher-order learning in general. The model for training LAs and the design of the LA program at large are aimed at making a difference in the ways students think and learn in college overall and not just in specific courses. That is, we expect exposure to the program to influence student success in college generally.

Prior research indicates a positive relationship between exposure to LAs and course learning outcomes in STEM courses (Pollock, 2009 ; Talbot et al., 2015 ). Other research suggests that modifying instruction to be more learner-centered helps to address high failure rates (Cracolice & Deming, 2001 ; Close, Mailloux-Huberdeau, Close, & Donnelly, 2018 ; Webb et al., 2014 ). This study seeks to further understand the relationship between the LA program and probability of student success. Specifically, we answer the following research question: How do failure rates in STEM gateway courses compare for students who do and do not receive LA support in any STEM gateway course? We investigate this question because, as a model for institutional change, we expect that LAs help students develop skills and dispositions necessary for success in college such as higher-order thinking skills, navigating course content, articulating and defending ideas, and feelings of self-efficacy. Since skills such as these extend beyond a single course, we investigate the extent to which students exposed to the LA program have lower failure rates in STEM gateway courses generally than students who are not exposed to the program.

Literature review

The LA model is not itself a research-based instructional strategy. Instead, it is a model of social and structural organization that induces and supports the adoption of existing (or creation of new) research-based instructional strategies that require an increased teacher-student ratio. The LA program is, at its core, a faculty development program. However, it does not push specific reforms or try to change faculty directly. Instead, the opt-in program offers resources and structures that lead to changes in values and practices among faculty, departments, students, and the institution (Close et al., 2018; Sewell, 1992). Faculty members write proposals to receive LAs (these proposals must involve course innovation using active engagement and student collaboration), students apply to be LAs, and departments match funding for their faculty’s requests for LAs. Thus, the LA program has become a valued part of the campus community.

The body of research that documents the relationship between student outcomes and the LA program is growing. Pollock ( 2006 ) provided evidence regarding the relationship between instructional innovation including LAs and course outcomes in introductory physics courses at University of Colorado Boulder by comparing three different introductory physics course models (outlined in Table 1 ).

Pollock provided two sources of evidence related to student outcomes regarding the relative effectiveness of these three course models. First, he discussed average normalized learning gains on the force and motion concept evaluation (FMCE; Thornton & Sokoloff, 1998) generally. The FMCE is a concept inventory commonly used in undergraduate physics education to provide information about student learning on the topics of force and motion. Normalized learning gains are calculated by finding the difference between the average post-test and pre-test scores in a class and dividing that value by the difference between 100 and the average pre-test score. The result is conceptualized as the amount the students learned divided by the amount they could have learned (Hake, 1998).
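The normalized learning gain described above can be written as a short function. This is a minimal sketch: the function name and the class averages in the example are hypothetical, and scores are assumed to be percentages out of 100.

```python
def normalized_gain(pre_mean, post_mean, max_score=100.0):
    """(post - pre) / (max - pre): amount learned over amount that could be learned."""
    if pre_mean >= max_score:
        raise ValueError("pre-test average leaves no room for a gain")
    return (post_mean - pre_mean) / (max_score - pre_mean)

# Hypothetical class averages (percent correct), not data from any cited study.
gain = normalized_gain(pre_mean=40.0, post_mean=70.0)
print(f"normalized gain = {gain:.0%}")
```

Because the denominator shrinks as the pre-test average rises, the same raw improvement counts for more in a class that started with a higher score.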

Prior research suggests that traditional instructional strategies yield an average normalized learning gain of about 15%, while research-based instructional methods such as active engagement and collaborative learning yield average normalized learning gains of about 63% (Thornton, Kuhl, Cummings, & Marx, 2009). The approach using the University of Washington Tutorials with LAs saw a normalized learning gain of 66% on the FMCE from pre-test to post-test. Average normalized learning gains for the approach using Knight’s (2004) workbooks with TAs were about 59%, and average normalized learning gains for the traditional approach were about 45%. The average normalized learning gains for all three methods in Pollock’s study are much higher than what the literature would lead one to expect from traditional instruction, but the course model including LAs is aligned with what is expected from research-based instructional strategies. Second, Pollock further investigated the impact of the different course implementations on FMCE scores for higher and lower achieving students. To do this, he considered students with high pre-test scores (those with pre-test scores > 50%) and students with low pre-test scores (those with pre-test scores < 15%). For both groups of students, the course implementation that included recitation facilitated by trained TAs and LAs had the highest normalized learning gains as measured by the FMCE.

In a similar study at Florida International University, Goertzen et al. ( 2011 ) investigated the influence of instructional innovations through the LA program in introductory physics. As opposed to the University of Washington Tutorials in the Pollock ( 2006 ) study, the research-based curriculum materials used by Florida International University were Open Source Tutorials (Elby, Scherr, Goertzen, & Conlin, 2008 ) developed at University of Maryland, College Park. Goertzen et al. ( 2011 ) used the Force Concept Inventory (FCI; Hestenes, Wells, & Swackhamer, 1992 ) as the outcome of interest in their study. Despite the different curriculum from the Pollock ( 2006 ) context, Goertzen et al. found that those students exposed to the LA-supported courses had a 0.24 increase in mean raw gain in scores from pre-test to post-test while students in classes that did not include instructional innovations only saw raw gains of 0.16.

In an attempt to understand the broader relationship between the LA program and student outcomes, White et al. (2016) investigated the impacts of the LA model on student learning in physics across institutions. In their study, White et al. used paired pre-/post-tests from four concept inventories (FCI, FMCE, Brief Electricity and Magnetism Assessment [BEMA; Ding, Chabay, Sherwood, & Beichner, 2006], and Conceptual Survey of Electricity and Magnetism [CSEM]) at 17 different institutions. Researchers used data contributed to the Learning Assistant Alliance through their online assessment tool, Learning About STEM Student Outcomes (LASSO). This platform allows institutions to administer several common concept inventories, with data securely stored on a central database to make investigation across institutions possible (Learning Assistant Alliance, 2018). In order to identify differences in learning gains for students who did and did not receive LA support, White et al. tested differences in course mean effect sizes between the two groups using a two-sample t test. Across all of the concept inventories, White et al. found average Cohen’s d effect sizes 1.4 times higher for LA-supported courses compared to courses that did not receive LA support.
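For readers unfamiliar with the effect size mentioned above, the sketch below computes Cohen’s d using one common definition: the difference in means divided by the pooled sample standard deviation. Whether White et al. used exactly this variant is not stated here, so treat it as an assumption; the scores are hypothetical.

```python
import statistics

def cohens_d(group_a, group_b):
    """Difference in means (b minus a) divided by the pooled sample standard deviation."""
    n_a, n_b = len(group_a), len(group_b)
    var_a = statistics.variance(group_a)  # sample variance (n - 1 denominator)
    var_b = statistics.variance(group_b)
    pooled_sd = (((n_a - 1) * var_a + (n_b - 1) * var_b) / (n_a + n_b - 2)) ** 0.5
    return (statistics.mean(group_b) - statistics.mean(group_a)) / pooled_sd

# Hypothetical pre-test and post-test scores for one course.
pre_scores = [1, 2, 3]
post_scores = [3, 4, 5]
print(cohens_d(pre_scores, post_scores))
```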

The research about the LA model shows that students exposed to the model tend to have better outcomes than those in more traditional lecture-based learning environments. However, due to the design of the program and the goals of the LA model, there is a reason to expect that there are implications for more long-term outcomes. LAs are trained to help students develop skills such as developing and defending ideas, making connections between concepts, and solving conceptual problems. Prior research suggests that skills such as these develop higher-order thinking for students. Martin et al. ( 2007 ) compared learning outcomes and innovative problem-solving for biomedical engineering students in inquiry-based, active engagement and traditional lecture biotransport courses. They found that both groups reached similar learning gains but that the active engagement group showed greater improvement in innovative thinking abilities. In a similar study, Jensen and Lawson ( 2011 ) investigated achievement and reasoning gains for students in either inquiry-based, active engagement or lecture-based, didactic instruction in undergraduate biology. Results indicated that students in active engagement environments outperformed students in didactic environments on more cognitively demanding items, while the groups performed equally well on items requiring low levels of cognition. In addition, students in active engagement groups showed greater ability to transfer reasoning among contexts.

This research suggests that active engagement such as what is facilitated with the LA model may do more than help students gain knowledge in a particular discipline in a particular course. Over and above, active engagement helps learners grow in reasoning and transfer abilities generally. This increase in higher-order thinking may help students to develop skills that extend beyond the immediate course. However, there is only one study focused on the LA model that investigates long-term outcomes related to the program. Pollock ( 2009 ) investigated the potential long-term relationship between exposure to the LA program and conceptual understanding in physics. In this line of inquiry, Pollock compared BEMA assessment scores for those upper-division physics majors who did and did not receive LA support in their introductory Physics II course, the course in which electricity and magnetism is first covered. Pollock’s results indicate that those students who received LA support in Physics II had higher BEMA scores following upper-division physics courses than those students who did not receive LA support in Physics II. This research provides some evidence to the long-term relationship between exposure to the LA program and conceptual learning. In the current study, we continue this line of inquiry by investigating the relationship between receiving LA support in a gateway course and the potential relationship to course failure in subsequent gateway courses. This study also contributes to the literature on the LA program as no prior research attempts to examine the relationship between taking LA-supported courses and student outcomes while controlling for variables that may confound this relationship. This study thus represents an extension of the previous work regarding the LA model in terms of both the methodology and the outcome of interest.

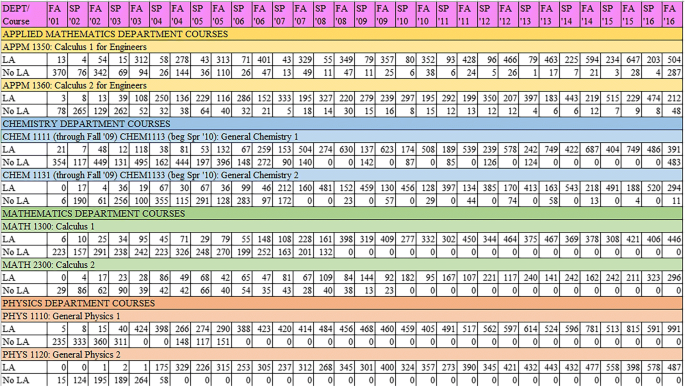

Data for this study come from administrative records at University of Colorado Boulder. We focus on 16 cohorts of students who entered the university as full-time freshmen for the first time each fall semester from 2001 to 2016 and took Physics I/II, General Chemistry I/II, Calculus I/II (Math department), and/or Calculus I/II for Engineers (Applied Math department). The dataset includes information for 32,071 unique students, 23,074 of whom took at least one of the above courses with LA support. Student-level data includes information such as race/ethnicity, gender, first-generation status, and whether a student ever received financial aid. Additional variables include number of credits upon enrollment, high school grade point average (GPA), and admissions test scores. We translate SAT total scores to ACT Composite Scores using a concordance table provided by the College Board to have a common admissions test score for all students (College Board, 2016 ). We exclude students with no admissions test scores (about 6% of the sample). We also have data on the instructor of record for each course. The outcome of interest in this study is failing an introductory STEM course. We define failing as receiving either a D or an F or withdrawing from the course altogether after the university drop date (i.e., “DFW”).
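The DFW outcome defined above amounts to a simple coding rule. The following is a minimal sketch in Python (the authors report using R). The grade labels, the treatment of plus and minus grades, and the function name `is_dfw` are assumptions made for illustration, since the source does not describe how grades are stored in the administrative records.

```python
# Hypothetical grade labels; the actual institutional coding is not given in the source.
FAILING_GRADES = {"D", "F", "W"}


def is_dfw(grade: str) -> int:
    """Return 1 if the grade counts as course failure (D, F, or withdrawal), else 0.

    Assumption: plus/minus modifiers (e.g. "D+") are collapsed onto the base letter.
    """
    base = grade.strip().upper().rstrip("+-")
    return 1 if base in FAILING_GRADES else 0


print([is_dfw(g) for g in ["A", "B+", "D", "F", "W"]])
```

Each course enrollment would receive its own indicator, which matches the later statement that students are counted every time they enroll in one of the courses.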

An important consideration in creating the data set for this study is the timing of receiving LA support relative to taking any STEM gateway course. The data begin with all students who took at least one of the courses included in this study. We keep all students who took all of their STEM gateway courses either with or without LA support. We also include all students who received LA support in the very first STEM gateway course they took, regardless of whether they had LA support in subsequent STEM gateway courses. We would exclude any student who took a STEM gateway course without LA support and then took another STEM gateway course in a subsequent semester with LA support.

This data limitation ensures that exposure to the LA program happened before or at the same time as the opportunity to fail any STEM gateway course. If it were the case that a student failed a STEM gateway course without LA support, say, in their first year and then took LA-supported courses in the second year, this student would be indicated as an LA student in the data, but the courses taken during the first year would not have been affected by the LA program. Students with experiences such as this would misrepresent the relationship between being exposed to the LA program and probability of course failure. Conveniently, there were not any students with this experience in the current dataset. In other words, for every student in our study who took more than one of the courses of interest, their first experience with any of the STEM gateway courses under consideration included LA support if there was ever exposure to the LA program. Although we did not have to exclude any students from our study for timing reasons, other institutions carrying out similar studies should carefully consider such cases when finalizing their data for analysis.
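For institutions that want to apply the same timing rule to their own records, the inclusion logic described above might be sketched as follows. This is an illustration only: the record layout (an integer term index plus a flag for LA support) and the function name `keep_student` are hypothetical. The sketch also assumes that LA support in any gateway course during a student's first gateway term satisfies the "before or at the same time" condition.

```python
from typing import List, Tuple

# Each record is (term_index, la_supported) for one gateway course enrollment.
# term_index is a hypothetical integer that increases with time.
Enrollment = Tuple[int, bool]


def keep_student(enrollments: List[Enrollment]) -> bool:
    """Keep students never exposed to LAs, or exposed in their first gateway term."""
    if not enrollments:
        return False  # no gateway course taken, so outside the study population
    ever_la = any(la for _, la in enrollments)
    if not ever_la:
        return True  # comparison group: never received LA support
    first_term = min(term for term, _ in enrollments)
    # Exposed students are kept only if exposure began in the first gateway term.
    return any(la for term, la in enrollments if term == first_term)


print(keep_student([(1, True), (2, False)]))   # exposure in first term
print(keep_student([(1, False), (2, True)]))   # exposure only after an unsupported course
```

A student flagged `False` by the second call is the case the text warns about: an early unsupported course could be failed before any LA exposure occurred.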

We provide Fig. 1 as a way for readers to gain a better understanding of the adoption of the LA program in each of the departments in this study. This figure also gives information regarding the number of students exposed to LAs or not in each department, course, and term in our study.

Fig. 1 Course enrollment over time by LA exposure

Ideally, we would design a controlled experiment to estimate the causal effect of LA exposure on the probability of failing introductory STEM courses. To do this, we would need two groups of students: first, those who were exposed to LA support in a STEM gateway course, and second, a comparable group, on average, that significantly differed only in that they were not exposed to LA support in any STEM gateway course. However, many institutions do not begin their LA programs with such studies in mind, so the available data do not come from a controlled experiment. Instead, we must rely on historical institutional data that was not gathered for this type of study. Thus, this study not only contributes to the body of literature regarding the relationship between LA exposure and student outcomes, but it also serves as a model for other institutions with LA programs that would like to use historical institutional data for similar investigations.

Selection bias

The ways students are assigned to receive LA support in each of the departments represented in this study are not random, and the ways LAs are used in each department are not identical. These characteristics of pre-existing institutional data manifest themselves as issues related to selection bias within a study. For example, in the chemistry department, LA support was only offered in the “on semester” sections of chemistry from 2008 to 2013. “On semester” indicates General Chemistry I in the fall and General Chemistry II in the spring. Thus, there were few opportunities for those students who took the sequence in the “off semester,” or General Chemistry I in the spring and General Chemistry II in the fall to receive LA support in these courses during the span of time covered in this analysis. The most typical reasons why students take classes in the “off semester” are that they simply prioritize other courses more in the fall semester, so there is insufficient space to take General Chemistry I; they do not feel prepared for General Chemistry I in the fall and take a more introductory chemistry class first; or they fail General Chemistry I the first time in the fall and re-take General Chemistry I in the spring. This method of assignment to receiving LA support may overstate the relationship between receiving LA support and course failure in this department. That is, it might be the case that those students who received LA support were those who were more likely to pass introductory chemistry to begin with. Our analysis includes prior achievement variables (described below) to attempt to address these selection bias issues.

In chemistry, LAs attend the weekly lecture meetings and assist small groups of students during activities such as answering clicker questions. Instructors present questions designed to elicit student levels of conceptual understanding. The questions are presented to the students; they discuss the questions in groups and then respond using individual clickers based on their selection from one of several multiple-choice options. LAs help students think about and answer these questions in the large lecture meetings. In addition, every student enrolled in General Chemistry I and II is also enrolled in a recitation section. Recitations are smaller group meetings of approximately 20 students. In these recitation sections, LAs work with graduate TAs to facilitate small group activities related to the weekly lecture material. The materials for these recitation sections are created by the lead instructor for the course and are designed to help students investigate common areas of confusion related to the weekly material.

In the physics and math departments, the introductory courses went from no LA support in any section in any semester to all sections in all semesters receiving LA support. This historical issue affects selection bias in a different way than the off-semester chemistry sequence. One interpretation of decreased course failure rates could be that LA support caused the difference. However, we could not rule out the possibility that failure rates decreased due to other factors that also changed over time. It could be that the university implemented other student supports in addition to the LA model at the same time or that the types of students who enrolled in STEM courses changed. There is no way to determine conclusively which of these (or other) factors may have caused changes in failure rates. Thus, causal estimates of the effect of LA support on failure rates would be threatened by any historic changes that occurred. We have no way of knowing if we might over or underestimate the relationship between LA exposure and course failure rates due to the ways students were exposed (or not) to the LA program in these departments. In order to address this issue, we control for student cohort. This adjustment, described below, attempts to account for differences that might exist among cohorts of students that might be related to probability of failing a course.

The use of LAs in the math department only occurs during weekly recitation meetings. During this weekly meeting, students work in small groups to complete carefully constructed activities designed to enhance conceptual understanding of the materials covered during the weekly lecture. An anomaly in the math department is that though Calculus I/II are considered gateway courses, the math department at this institution is committed to keeping course enrollment under 40. This means that LA support is tied to smaller class sizes in this department. However, since this condition is constant across the timeframe in our study, it does not influence selection bias.

Similar to the math department, the physics department only uses LAs in the weekly recitation meeting. An additional anomaly in physics is that, not incidentally, the switch to the LA model happened concurrently with the adoption of the University of Washington Tutorials in introductory physics (McDermott & Shaffer, 2002 ). LAs facilitate small group work with the materials in the University of Washington Tutorials during recitation meetings. In other words, it is not possible to separate the effects of the content presentation in the Tutorials from the LAs facilitating the learning of the content in this department. Thus, data from this department might overestimate the relationship between receiving LA support and course failure. However, it should be noted that the University of Washington Tutorials require a low student-teacher ratio, and proper implementation of this curriculum is not possible without the undergraduate LAs helping to make that ratio possible.

Finally, every student in every section of Calculus I and II in the applied math department had the opportunity to be exposed to LA support. This is because LAs are not used in lecture or required recitation meetings, but instead facilitate an additional weekly one-unit course, called workgroup, that is open to all students. Thus, students who sign up for workgroup not only gain exposure to LA support, but they also gain an additional 90 min of time each week formally engaging in calculus material. It is not possible to know if lower failure rates might be due to the additional time on task generally, or exposure to LAs during that time specifically. This might cause us to overestimate the relationship between LA support and course failure. Additionally, those students who are expected to struggle in calculus (based on placement scores on the Assessment and LEarning in Knowledge Spaces [ALEKS] assessment) or are not confident in their own math abilities are more strongly encouraged to sign up for the weekly meeting by their instructors and advisors. Thus, those students who sign up for LA support might be more likely to fail calculus. This might lead us to underestimate the relationship between LA exposure and course failure. Similar to the chemistry department, we use prior achievement variables (described below) to address this issue to the best of our abilities.

We mention one final assumption about the LA model before describing our methods of statistical adjustment. Our data span 32 semesters of 8 courses (see Fig. 1 ). Although it is surely the case that the LA model adapted and changed in some ways over the course of this time, we make the assumption that the program was relatively stable within department throughout the time period represented in this study.

Statistical adjustment

Although we do not have a controlled experiment that warrants causal claims, we desire to estimate a causal effect. The current study includes a control group, but it is not ideal because of the potential selection bias in each department described above. However, this study is warranted because it takes advantage of historical data. Our analytic approach is to control for some sources of selection bias. Specifically, we use R to control for standardized high school GPA, standardized admissions test scores, and standardized credits at entry to try and account for issues related to prior aptitude. This helps to address the selection bias issues in the chemistry and applied math departments. Additionally, we control for student cohort to account for some of the historical bias in the physics and math departments. We also control for instructor and course as well as gender (coded 1 = female; 0 = male), race/ethnicity (coded 1 = nonwhite; 0 = white), first-generation status (coded 1 = first-generation college student; 0 = not first-generation college student), and financial aid status (coded 1 = received financial aid ever; 0 = never received financial aid) to disentangle other factors that might bias our results in any department. Finally, we consider possible interaction effects between exposure to LA support and various student characteristics. Table 2 presents the successive model specifications explored in this study. Model 1 controls only for student characteristics. Model 2 adds course, cohort, and instructor factor variables. Model 3 adds an interaction between exposure to the LA program and gender to the model 2 specification.
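The three specifications can be summarized compactly. The notation below is an illustrative assumption rather than notation taken from the source: $i$ indexes course enrollments, $\mathrm{LA}_i$ is the exposure indicator, $\mathbf{x}_i$ collects the student characteristics listed above (gender, race/ethnicity, first-generation status, financial aid status, and the three standardized prior achievement measures), and the subscripted terms in model 2 are the course, cohort, and instructor factor effects.

```latex
\begin{aligned}
\text{Model 1:}\quad \operatorname{logit}\Pr(\mathrm{DFW}_i = 1)
  &= \beta_0 + \beta_1\,\mathrm{LA}_i + \boldsymbol{\gamma}^{\top}\mathbf{x}_i \\
\text{Model 2:}\quad \operatorname{logit}\Pr(\mathrm{DFW}_i = 1)
  &= \beta_0 + \beta_1\,\mathrm{LA}_i + \boldsymbol{\gamma}^{\top}\mathbf{x}_i
     + \alpha_{\mathrm{course}(i)} + \delta_{\mathrm{cohort}(i)} + \theta_{\mathrm{instructor}(i)} \\
\text{Model 3:}\quad \operatorname{logit}\Pr(\mathrm{DFW}_i = 1)
  &= \beta_0 + \beta_1\,\mathrm{LA}_i + \boldsymbol{\gamma}^{\top}\mathbf{x}_i
     + \alpha_{\mathrm{course}(i)} + \delta_{\mathrm{cohort}(i)} + \theta_{\mathrm{instructor}(i)}
     + \beta_2\,(\mathrm{LA}_i \times \mathrm{Female}_i)
\end{aligned}
```

Here $\operatorname{logit}(p) = \ln\!\big(p / (1 - p)\big)$, so each coefficient is a change in the log odds of course failure.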

The control variables in Table 2 help to account for the selection bias described above as well as other unobserved bias in our samples, but we are limited by the availability of observed covariates. Thus, the results presented here lie somewhere between “true” causal effects and correlations. We know that our results tell us more than simple correlations, but we also know that we are surely missing key control variables that are typically not collected by institutes of higher education such as a measure of student self-efficacy, social and emotional health, or family support. Thus, we anticipate weak model fit, and the results presented here are not direct causal effects. Instead, they provide information about the partial association between course failure and LA support.

We begin our analysis by providing raw counts of failure rates for the students who did and did not receive LA support in STEM gateway courses. Next, we describe the differences between those students who did and did not receive LA support with respect to available covariates. If it is the case that we see large differences in our covariates between the group of students who did and did not receive LA support, we expect that controlling for those factors in the regression analysis will affect our results in meaningful ways. Thus, we close with estimating logistic regression models to disentangle some of the relationship between LA-support and course failure. The variable of most interest in this analysis is the indicator for exposure to the LA program. A student received a “1” for this variable if they were exposed to the LA program either concurrently or prior to taking STEM gateway courses, and a 0 if they took any classes in the study but never had any LA support in those classes.

Table 3 includes raw pass and failure rates across all courses. Students are counted every time they enrolled in one of the courses included in our study. We see that those students who were exposed to the LA program in at least one STEM gateway course had 6% lower failure rates in concurrent or subsequent STEM gateway courses. We also provide the unadjusted odds ratio for ease of comparison with the logistic regression results. The odds ratio represents the odds that course failure will occur given exposure to the LA program, compared to the odds of course failure occurring without LA exposure. An odds ratio equal to 1.0 indicates that the odds of failure are the same for both groups. An odds ratio less than 1.0 indicates that exposure to LA support is associated with lower odds of failing, while an odds ratio greater than 1.0 indicates that exposure to LA support is associated with higher odds of failing. Thus, the odds ratio of 0.65 in Table 3 indicates lower odds of failure with LA exposure compared to no LA exposure.
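As a reminder of how an unadjusted odds ratio is obtained from a two-by-two table of counts, the calculation can be sketched as below. The counts in the example are invented purely for illustration and are not the Table 3 values; the function name `odds_ratio` is likewise hypothetical.

```python
def odds_ratio(fail_exposed: float, pass_exposed: float,
               fail_unexposed: float, pass_unexposed: float) -> float:
    """Odds of failure with exposure divided by odds of failure without exposure."""
    odds_exposed = fail_exposed / pass_exposed
    odds_unexposed = fail_unexposed / pass_unexposed
    return odds_exposed / odds_unexposed


# Invented counts for illustration only; these are NOT the Table 3 values.
example = odds_ratio(fail_exposed=20, pass_exposed=80,
                     fail_unexposed=40, pass_unexposed=60)
print(round(example, 3))
```

A value below 1.0, as in this invented example, corresponds to lower odds of failure in the exposed group.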

Although the raw data indicate that students exposed to LA support have lower course failure rates, these differences could be due, at least in part, to factors outside of LA support. To explore this possibility, we next examine demographic and academic achievement differences between the groups. In Table 4, we present the mean values for all of our predictor variables for students who did and did not receive LA support. The top panel presents all of the binary variables, so averages indicate the percentage of students who identify with the respective characteristics. The bottom panel shows the averages for the continuous variables. The p values are for the comparisons of means from a t test across the two groups for each variable. Table 4 indicates that students exposed to the LA program were more likely to be male, nonwhite, non-first-generation students who did not receive financial aid. They also had more credits at entry, higher high school GPAs, and higher admissions test scores. These higher prior achievement variables might lead us to think that students exposed to LA support are more likely to pass STEM gateway courses. If this is true, then the relationship between LA exposure and failure in Table 3 may overestimate the actual relationship between exposure to LAs and probability of course failure. Thus, we next use logistic regression to control for potentially confounding variables and investigate any resulting change in the odds ratio.

R calculates logistic regression estimates in logits, but these estimates are often expressed as odds ratios. We present abbreviated logit estimates in the Appendix and abbreviated odds ratio estimates in Table 5. Estimates for all factor variables (i.e., course, cohort, and instructor) are suppressed in these tables for ease of presentation. To convert logits to odds ratios, we exponentiated the logit estimates. For example, the logit estimate for exposure to LA in model 1 from the Appendix converts to the odds ratio estimate in Table 5 as exp(−1.41) = 0.24.
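The conversion from a logit estimate to an odds ratio is a single exponentiation. A minimal sketch in Python (the authors worked in R) reproduces the model 1 example quoted above; the function name is hypothetical.

```python
import math


def logit_to_odds_ratio(logit: float) -> float:
    """Exponentiate a logistic regression coefficient to obtain an odds ratio."""
    return math.exp(logit)


# Model 1 logit estimate for LA exposure, as quoted in the text.
print(round(logit_to_odds_ratio(-1.41), 2))  # 0.24
```

The same transformation applied to the lower and upper bounds of a confidence interval on the logit scale gives the confidence interval on the odds ratio scale.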

We start off by discussing the results for model 3 as it is the full model for this analysis. Discussion of models 1 and 2 is saved for the discussion of model fit below. The results in model 3 provide information about what we can expect, on average, across all courses and instructors in the sample. We include confidence intervals with the odds ratios. Confidence intervals that include 1.0 suggest results that are not statistically significant (Long, 1997). The odds ratio estimate in Table 5 for model 3 is 0.367 for LA exposure, with a confidence interval of 0.337–0.400. Since the odds ratio is less than 1.0, LA exposure is associated with lower odds of failing, on average, and the relationship is statistically significant because the confidence interval does not include 1.0. Compared to the odds ratio in Table 3 (0.65), these results indicate that covariate adjustment has a large impact on this odds ratio. Failure to adjust for possible confounding variables leads to an understatement of the “effect” of exposure to the LA program on course failure.

Our results show that LA exposure is associated with lower odds of failing STEM gateway courses. We also see that the interaction between exposure to the LA program and gender is statistically significant. The odds ratio of 0.37 for exposure to LA support in Table 5 is for male students. In order to find the relationship for female students, we must exponentiate the sum of the logit estimates for exposure to the LA program, female, and the interaction between the two variables (i.e., exp[−1.002 − 0.092 + 0.297] = 0.45; see the Appendix). This means that exposure to the LA program is associated with a slightly larger reduction in the odds of failing for male students than for female students. Recall that Table 3 illustrated that the raw odds ratio for failure when exposed to LA support was 0.65. Our results show that after controlling for possibly confounding variables, the relationship between LA support and odds of course failure is stronger for both male (0.37) and female (0.45) students.
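The arithmetic behind the two gender-specific figures can be checked with a short Python sketch. The three logit values are read from the expression in the paragraph above. Their signs, and the mapping of each value to a term (LA exposure, female, interaction), are assumptions inferred from the reported results of 0.367 and 0.45, since the Appendix is not reproduced here.

```python
import math

# Logit estimates as read from the text; signs and term labels are assumptions.
b_la = -1.002          # exposure to the LA program
b_female = -0.092      # female indicator
b_interaction = 0.297  # LA exposure x female

# Male students: only the LA exposure term applies.
or_male = math.exp(b_la)
# Female students: the calculation described in the text sums all three terms.
or_female = math.exp(b_la + b_female + b_interaction)

print(round(or_male, 3), round(or_female, 2))  # 0.367 0.45
```

Under these assumed signs, the sketch reproduces both the model 3 odds ratio for LA exposure (0.367) and the value reported for female students (0.45).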

Discussion and limitations

Throughout this paper, we have been upfront about the limitations of the current analysis. Secondary analysis of institutional data for longstanding programs is complex and difficult. In this penultimate section, we mention a few other limitations to the study as well as identify some ideas for future research that could potentially bolster the results found here or identify where this analysis may have gone astray.

First, and most closely related to the results presented above is model fit. The McFadden pseudo R-squared (Verbeek, 2008 ) values for the three models are 0.0708, 0.1793, and 0.1797 respectively. These values indicate two things: (1) that the data do not fit any of the models well and (2) that the addition of the interaction term does little to improve model fit. This is also seen in the comparison of AIC and log likelihood values in Table 5 . We spend significant time on the front end of this paper describing why these data are not ideal for understanding the relationship between exposure to the LA program and probability of failing, so we do not spend additional time here discussing this lack of goodness-of-fit. Instead, we acknowledge this as a limitation of the current analysis and reiterate the desire to conduct a similar type analysis to what is presented here with data more likely to fit the model. Such situations would include institutions that have the ability to compare, for example, large samples of students with and without LA exposure within the same semester, course, and instructor. Another way to improve such data would be to include a way to control for student confidence and feelings of self-efficacy. For example, the descriptions of selection bias above indicate that students in Applied Math might systematically be students who differ in terms of self-confidence. Data that could control for such factors would better facilitate understanding of the relationship between exposure to LA support and course failure. Alternatively, it may be more appropriate to consider the nested structure of the data (i.e., students nested within courses nested within departments) in a context with data better suited for such analysis. Hierarchical linear modeling might even be appropriate for a within-department study if it would be reasonable to consider students nested within classes if there was sufficient sample size at the instructor level.
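For readers unfamiliar with the fit statistics mentioned above, the standard definitions can be sketched as follows. McFadden's pseudo R-squared compares the log likelihood of the fitted model with that of an intercept-only model, and AIC penalizes the log likelihood by the number of estimated parameters. The function names and the log likelihood values in the example are hypothetical and are not taken from Table 5.

```python
def mcfadden_pseudo_r2(loglik_model: float, loglik_null: float) -> float:
    """McFadden's pseudo R-squared: 1 - lnL(fitted model) / lnL(intercept-only model)."""
    return 1.0 - loglik_model / loglik_null


def aic(loglik_model: float, n_params: int) -> float:
    """Akaike information criterion: 2k - 2 lnL."""
    return 2 * n_params - 2 * loglik_model


# Invented log likelihoods for illustration only; NOT the values from Table 5.
print(round(mcfadden_pseudo_r2(loglik_model=-80.0, loglik_null=-100.0), 3))
print(aic(loglik_model=-80.0, n_params=5))
```

Because the pseudo R-squared depends only on the ratio of the two log likelihoods, adding a term that barely changes the fitted log likelihood (as with the interaction in model 3) barely changes the statistic.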

Second, in addition to a measure of student self-efficacy, there are other variables that might be interesting to investigate such as transfer, out-of-state, or international student status; if students live on-campus; and a better measure of socioeconomic status than receiving financial aid. These are other important student characteristics that might uncover differential relationships between the LA program and particular types of students. Such analysis is important because persistence and retention in gateway courses—particularly for students from traditionally marginalized groups—are an important concern for institutions generally and STEM departments specifically. If we are to maintain and even build diversity in these departments, it is crucial we have solid and clear work in these areas.

Third, although this study controls for course- and instructor-level factors, there are surely complications introduced into this study due to the differential way the LA program is implemented in each department. A more careful study within department is another interesting and valuable approach to understanding the influence of the LA program but one that this data is not well-suited for. Again, there is a need for data which includes students exposed to the LA program and not exposed within the same term, course, and instructor to better disentangle the relationship. Due to the nature of the way the LA program was taken up at University of Colorado Boulder, we do not have the appropriate data for such an analysis.

Finally, an interesting consideration is the choice of outcome variable made in this analysis. Course failure rates are particularly important in gateway courses because failing such a course can lead students to switch majors or drop out of college. We do see a relationship between the LA model and lower failure rates in the current analysis. However, other approaches to course outcomes include course grades, pass rates, average GPA in other courses, and average grade anomaly (Freeman et al., 2014; Haak et al., 2011; Matz et al., 2017; Webb, Stade, & Grover, 2014). Similar investigations to what is presented here with other course outcomes are also of interest. For example, course grades would provide more nuanced information regarding how the LA model influences student outcomes. A measure such as Matz et al.’s (2017) average GPA in other courses could provide more information about how the LA program impacts courses other than the ones in which the LA exposure occurred. In either of these situations, it would be interesting to see if the LA program would continue to appear to have a greater impact for male students than for female students. In short, there are a wide variety of student outcomes that have yet to be fully investigated with data from the LA model, and more nuanced information would be a valuable contribution to the research literature.

In this study, we attempt to disentangle the relationship between LA support and course failure in introductory STEM courses. Our results indicate that failing to control for confounding variables leads to underestimating the relationship between exposure to the LA program and course failure. The results here extend the prior literature regarding the LA model by providing evidence to suggest that exposure to the program improves student outcomes in subsequent as well as current courses. Programs such as the LA model that facilitate instructional innovations where students are more likely to be successful can increase student retention.

Preliminary qualitative work suggests potential hypotheses for the relationship between LA support and student success. Observations of student-LA interactions indicate that LAs develop safe yet vulnerable environments necessary for learning. Undergraduates are more comfortable revealing their thinking to LAs than to TAs and instructors and are therefore better able to receive input about their ideas. Researchers find that LAs exhibit pedagogical skills introduced in the pedagogy course and course experience that promote deep understanding of relevant content as well as critical thinking and questioning needed in higher education (Top, Schoonraad, & Otero, 2018 ). Also, through their interactions with LAs, faculty seem to be learning how to embrace the diversity of student identities and structure educational experiences accordingly. Finally, institutional norms are changing as more courses adopt new ways of teaching students. For example, the applied math department provides additional time on task because of the LA program. Although we do not know if it is the additional time on task, the presence of LAs, or a combination of both that drives the relationship between LA exposure and lower course failure rates, both the additional time and LA exposure occur because of the LA program generally.

Further work is necessary to more fully understand the relationship between the LA program and student success. Although we controlled for several student-level variables, we surely missed key variables that contribute to these relationships. Despite this limitation, the regression analysis represents an improvement over unadjusted comparisons. We used the available institutional data to control for variables related to the selection bias present in each department’s method of assigning students to receive LA support. More research is needed to determine whether the emerging themes in the present study are apparent at other institutions. Additional research with data better suited to isolate potential causal effects is also needed to bolster the results presented here. Despite the limitations discussed here, the current findings are encouraging for further development and implementation of the LA program in STEM gateway courses. Identifying relationships between models for change and lower course failure rates is helpful for informing future decisions regarding those models.

For more information about joining LASSO and resources available to support LA programs, visit https://www.learningassistantalliance.org/

Abbreviations

BEMA: Brief Electricity and Magnetism Assessment

CSEM: Conceptual Survey of Electricity and Magnetism

FCI: Force Concept Inventory

FMCE: Force and Motion Concept Evaluation

LA: Learning Assistant model

LAs: Learning assistants

PLTL: Peer-led team learning

STEM: Science, technology, engineering, and mathematics

Caldwell, J. E. (2007). Clickers in the large classroom: current research and best-practice tips. CBE-Life Sci Educ, 6 (1), 9–20.


Chan, J. Y., & Bauer, C. F. (2015). Effect of peer-led team learning (PLTL) on student achievement, attitude, and self-concept in college general chemistry in randomized and quasi experimental designs. J Res Sci Teach, 52 (3), 319–346.

Close, E. W., Mailloux-Huberdeau, J. M., Close, H. G., & Donnelly, D. (2018). Characterization of time scale for detecting impacts of reforms in an undergraduate physics program. In L. Ding, A. Traxler, & Y. Cao (Eds.), AIP Conference Proceedings: 2017 Physics Education Research Conference.


College Board. (2016). Concordance tables. Retrieved from https://collegereadiness.collegeboard.org/pdf/higher-ed-brief-sat-concordance.pdf

Cracolice, M. S., & Deming, J. C. (2001). Peer-led team learning. Sci Teach, 68 (1), 20.

Crisp, G., Nora, A., & Taggart, A. (2009). Student characteristics, pre-college, college, and environmental factors as predictors of majoring in and earning a STEM degree: an analysis of students attending a Hispanic serving institution. Am Educ Res J, 46 (4), 924–942. Retrieved from http://www.jstor.org/stable/40284742

Ding, L., Chabay, R., Sherwood, B., & Beichner, R. (2006). Evaluating an electricity and magnetism assessment tool: brief electricity and magnetism assessment. Physical Rev Special Topics Physics Educ Res, 2 (1), 010105.

Elby, A., Scherr, R. E., Goertzen, R. M., & Conlin, L. (2008). Open-source tutorials in physics sense making. Retrieved from http://umdperg.pbworks.com/w/page/10511238/Tutorials%20from%20the%20UMd%20PERG

Freeman, S., Eddy, S. L., McDonough, M., Smith, M. K., Okoroafor, N., Jordt, H., & Wenderoth, M. P. (2014). Active learning increases student performance in science, engineering, and mathematics. Proc Nat Acad Sci, 111 (23), 8410–8415.

Goertzen, R. M., Brewe, E., Kramer, L. H., Wells, L., & Jones, D. (2011). Moving toward change: institutionalizing reform through implementation of the Learning Assistant model and Open Source Tutorials. Physical Rev Special Topics Physics Education Research, 7 (2), 020105.

Haak, D. C., HilleRisLambers, J., Pitre, E., & Freeman, S. (2011). Increased structure and active learning reduce the achievement gap in introductory biology. Science, 332 (6034), 1213–1216.

Hake, R. R. (1998). Interactive-engagement versus traditional methods: a six-thousand-student survey of mechanics test data for introductory physics courses. Am J Physics, 66 (1), 64–74.

Hestenes, D., Wells, M., & Swackhamer, G. (1992). Force concept inventory. Physics Teach, 30 (3), 141–158.

Jensen, J. L., & Lawson, A. (2011). Effects of collaborative group composition and inquiry instruction on reasoning gains and achievement in undergraduate biology. CBE-Life Sci Educ, 10 (1), 64–73.

Knight, R. (2004). Physics for scientists and engineers: A strategic approach. Upper Saddle River, NJ: Pearson/Addison Wesley.

Learning Assistant Alliance. (2018). About LASSO. Retrieved from https://www.learningassistantalliance.org/modules/public/lasso.php

Long, J. S. (1997). Advanced quantitative techniques in the social sciences series, Vol. 7. Regression models for categorical and limited dependent variables. Thousand Oaks, CA, US.

Martin, T., Rivale, S. D., & Diller, K. R. (2007). Comparison of student learning in challenge-based and traditional instruction in biomedical engineering. Annals of Biomedical Engineering, 35 (8), 1312–1323.

Matz, R. L., Koester, B. P., Fiorini, S., Grom, G., Shepard, L., Stangor, C. G., et al. (2017). Patterns of gendered performance differences in large introductory courses at five research universities. AERA Open, 3 (4), 2332858417743754.

McDermott, L. C., and Shaffer, P. S. (2002). Tutorials in introductory physics. Upper Saddle Ridge, New Jersey: Prentice Hall.

Mitchell, Y. D., Ippolito, J., & Lewis, S. E. (2012). Evaluating peer-led team learning across the two semester general chemistry sequence. Chemistry Education Research and Practice, 13 (3), 378–383.

Otero, V. K. (2015). Effective practices in preservice teacher education. In C. Sandifer & E. Brewe (Eds.), Recruiting and educating future physics teachers: case studies and effective practices (pp. 107–127). College Park: American Physical Society.

Pollock, S. J. (2006). Transferring transformations: Learning gains, student attitudes, and the impacts of multiple instructors in large lecture courses. In P. Heron, L. McCullough, & J. Marx (Eds.), Proceedings of 2005 Physics Education Research Conference (pp. 141–144). Salt Lake City, Utah.

Pollock, S. J. (2009). Longitudinal study of student conceptual understanding in electricity and magnetism. Physical Review Special Topics-Physics Education Research, 5 (2), 1–8.

Talbot, R. M., Hartley, L. M., Marzetta, K., & Wee, B. S. (2015). Transforming undergraduate science education with learning assistants: student satisfaction in large-enrollment courses. J College Sci Teach, 44 (5), 24–30.

Thornton, R. K., & Sokoloff, D. R. (1998). Assessing student learning of Newton’s laws: the force and motion conceptual evaluation and the evaluation of active learning laboratory and lecture curricula. Am J Physics, 66 (4), 338–352.

Thornton, R. K., Kuhl, D., Cummings, K., & Marx, J. (2009). Comparing the force and motion conceptual evaluation and the force concept inventory. Physical review special topics-Physics education research, 5(1), 010105.

Top, L., Schoonraad, S., & Otero, V. (2018). Development of pedagogical knowledge among learning assistants. Int J STEM Educ, 5 (1). https://doi.org/10.1186/s40594-017-0097-9 .

Verbeek, M. (2008). A guide to modern econometrics . West Sussex: Wiley.

Webb, D. C., Stade, E., & Grover, R. (2014). Rousing students’ minds in postsecondary mathematics: the undergraduate learning assistant model. J Math Educ Teach College, 5 (2).

White, J. S. S., Van Dusen, B., & Roualdes, E. A. (2016). The impacts of learning assistants on student learning of physics. arXiv preprint arXiv, 1607.07469 . Retrieved from https://arxiv.org/ftp/arxiv/papers/1607/1607.07469.pdf .

Wilson, S. B., & Varma-Nelson, P. (2016). Small groups, significant impact: a review of peer-led team learning research with implications for STEM education researchers and faculty. J Chem Educ, 93 (10), 1686–1702.

William H. Sewell, (1992) A Theory of Structure: Duality, Agency, and Transformation. American Journal of Sociology 98 (1):1–29

Download references

Acknowledgements

There is no funding for this study.

Availability of data and materials

The datasets generated and/or analyzed during the current study are available in the LAs and Subsequent Course Failure repository, https://github.com/jalzen/LAs-and-Subsequent-Course-Failure .

Author information

Authors and affiliations.

University of Colorado Boulder, 249 UCB, Boulder, CO, 80309, USA

Jessica L. Alzen, Laurie S. Langdon & Valerie K. Otero

Contributions

JLA managed the data collection and analysis. All authors participated in writing, revising, and approving the final manuscript.

Corresponding author

Correspondence to Jessica L. Alzen.

Ethics declarations

Ethics approval and consent to participate.

The IRB at University of Colorado Boulder (FWA 00003492) determined that this study did not involve human subjects research. The approval letter specifically stated the following:

The IRB determined that the proposed activity is not research involving human subjects as defined by DHHS and/or FDA regulations. IRB review and approval by this organization is not required. This determination applies only to the activities described in the IRB submission and does not apply should any changes be made. If changes are made and there are questions about whether these activities are research involving human subjects in which the organization is engaged, please submit a new request to the IRB for a determination.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License ( http://creativecommons.org/licenses/by/4.0/ ), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article.

Alzen, J.L., Langdon, L.S. & Otero, V.K. A logistic regression investigation of the relationship between the Learning Assistant model and failure rates in introductory STEM courses. IJ STEM Ed 5, 56 (2018). https://doi.org/10.1186/s40594-018-0152-1

Received: 29 August 2018

Accepted: 10 December 2018

Published: 28 December 2018

DOI: https://doi.org/10.1186/s40594-018-0152-1

- Learning assistant

- Underrepresented students

Logistic Regression Model Optimization and Case Analysis

Predicting 30-Day In-Hospital Mortality in Surgical Patients: A Logistic Regression Model Using Comprehensive Perioperative Data

- ORCID record for Jonathan Hofmann

- ORCID record for Andrew Bouras

- ORCID record for Dhruv Patel

- ORCID record for Nitin Chetla

Background: Accurate prediction of postoperative outcomes, particularly 30-day in-hospital mortality, is crucial for improving surgical planning, patient counseling, and resource allocation. This study aimed to develop and validate a logistic regression model to predict 30-day in-hospital mortality using comprehensive perioperative data from the INSPIRE dataset.

Methods: We conducted a retrospective analysis of the INSPIRE dataset, comprising approximately 130,000 surgical cases from Seoul National University Hospital between 2011 and 2020. The primary objective was to develop a logistic regression model using preoperative and intraoperative variables. Key predictors included demographic information, clinical variables, laboratory values, and the emergency status of the operation. Missing data were addressed through multiple imputation, and feature selection was performed using univariate analysis and clinical judgment. The model was validated using cross-validation, and its performance was assessed using ROC AUC and precision-recall AUC metrics.

Results: The logistic regression model demonstrated high predictive accuracy, with an ROC AUC of 0.978 and a precision-recall AUC of 0.958. Significant predictors of 30-day in-hospital mortality included emergency status of the operation (OR: 1.56), preoperative prothrombin time (PT/INR) (OR: 1.53), potassium levels (OR: 1.49), body mass index (BMI) (OR: 1.37), serum sodium (OR: 1.11), creatinine levels (OR: 1.04), and albumin levels (OR: 0.85).

Conclusion: This study successfully developed and validated a logistic regression model to predict 30-day in-hospital mortality using comprehensive perioperative data. The model's high predictive accuracy and reliance on routinely collected clinical and laboratory data enhance its feasibility for integration into existing clinical workflows, providing real-time risk assessments to healthcare providers. Future research should focus on external validation in diverse clinical settings and prospective studies to assess the practical impact of this predictive model.
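
The odds ratios reported in the Results are multiplicative changes in the odds of 30-day mortality, and each corresponds to a logistic regression coefficient through beta = ln(OR). The Python sketch below is an illustration only and is not the authors' code. It converts each reported odds ratio to its implied coefficient and shows how it would shift a baseline risk. The 1% baseline risk and the function names are hypothetical, and the abstract does not state the units or scaling of each predictor, so the shifted risks should be read as arithmetic examples and not as clinical estimates.

```python
import math

# Odds ratios as reported in the abstract. The units/scaling of each
# predictor are not stated there, so "per unit" is left unspecified.
REPORTED_ODDS_RATIOS = {
    "emergency_operation": 1.56,
    "pt_inr": 1.53,
    "potassium": 1.49,
    "bmi": 1.37,
    "sodium": 1.11,
    "creatinine": 1.04,
    "albumin": 0.85,
}

# HYPOTHETICAL baseline risk, chosen only for illustration (not from the study).
BASELINE_RISK = 0.01


def apply_odds_ratio(baseline_prob: float, odds_ratio: float) -> float:
    """Return the probability obtained by multiplying the baseline odds by odds_ratio."""
    if not 0.0 < baseline_prob < 1.0:
        raise ValueError("baseline_prob must be strictly between 0 and 1")
    odds = baseline_prob / (1.0 - baseline_prob)  # probability -> odds
    new_odds = odds * odds_ratio                  # an OR scales the odds
    return new_odds / (1.0 + new_odds)            # odds -> probability


def coefficient_from_odds_ratio(odds_ratio: float) -> float:
    """Return the logistic regression coefficient (log-odds change) implied by an OR."""
    return math.log(odds_ratio)


if __name__ == "__main__":
    for name, odds_ratio in REPORTED_ODDS_RATIOS.items():
        beta = coefficient_from_odds_ratio(odds_ratio)
        risk = apply_odds_ratio(BASELINE_RISK, odds_ratio)
        print(f"{name:20s} OR={odds_ratio:.2f} beta={beta:+.3f} risk={risk:.4f}")
```

An odds ratio above 1 (for example, emergency status at 1.56) raises the risk relative to the baseline, while an odds ratio below 1 (albumin at 0.85) lowers it. Because the transformation acts on odds, not on probabilities, the absolute change in risk depends on the baseline chosen.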

Competing Interest Statement

The authors have declared no competing interest.

Funding Statement

This study did not receive any funding.

Author Declarations

I confirm all relevant ethical guidelines have been followed, and any necessary IRB and/or ethics committee approvals have been obtained.

I confirm that all necessary patient/participant consent has been obtained and the appropriate institutional forms have been archived, and that any patient/participant/sample identifiers included were not known to anyone (e.g., hospital staff, patients or participants themselves) outside the research group so cannot be used to identify individuals.

I understand that all clinical trials and any other prospective interventional studies must be registered with an ICMJE-approved registry, such as ClinicalTrials.gov. I confirm that any such study reported in the manuscript has been registered and the trial registration ID is provided (note: if posting a prospective study registered retrospectively, please provide a statement in the trial ID field explaining why the study was not registered in advance).

I have followed all appropriate research reporting guidelines, such as any relevant EQUATOR Network research reporting checklist(s) and other pertinent material, if applicable.

Data Availability

All data produced in the present work are contained in the manuscript.

https://github.com/hofmannj0n/biomedical-research
