Tips to Create and Test a Value Hypothesis: A Step-by-Step Guide

Developing a robust value hypothesis is crucial as you bring a new product to market, guiding your startup toward answering a genuine market need. Constructing a verifiable value hypothesis anchors your product's development process in customer feedback and data-driven insight rather than assumptions.

This framework enables you to clarify the potential value your product offers and provides a foundation for testing and refining your approach, significantly reducing the risk of misalignment with your target market. To set the stage for success, employ logical structures and objective measures, such as creating a minimum viable product, to effectively validate your product's value proposition.

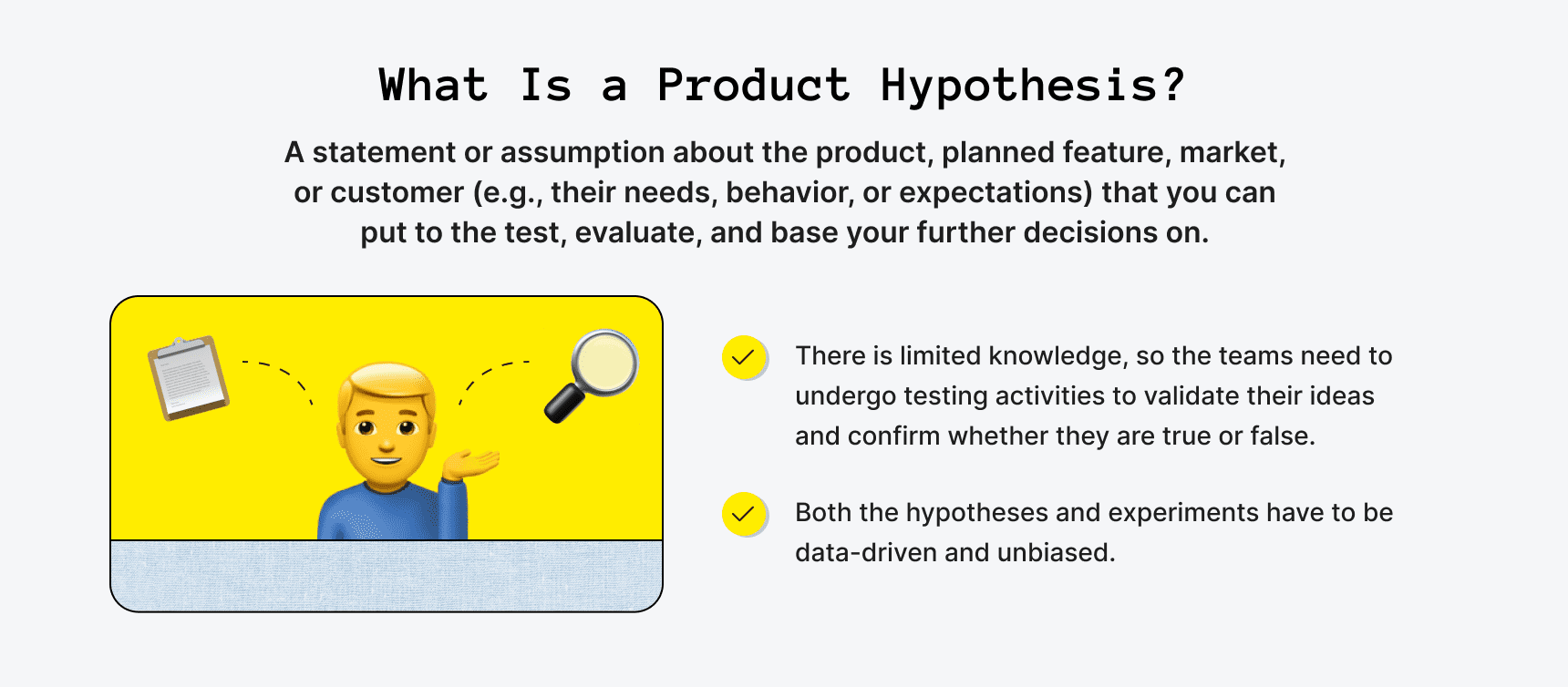

What Is a Verifiable Value Hypothesis?

A verifiable value hypothesis articulates your belief about how your product will deliver value to customers. It is a testable prediction aimed at demonstrating the expected outcomes for your target market.

To ensure that your value hypothesis is verifiable, it should adhere to the following conditions:

- Specific: Clearly defines the value proposition and the customer segment.

- Measurable: Includes metrics by which you can assess success or failure.

- Achievable: Realistic based on your resources and market conditions.

- Relevant: Directly addresses a significant customer need or desire.

- Time-Bound: Has a defined period for testing and validation.

When you create a value hypothesis, you're essentially forming the backbone of your business model. It goes beyond a mere assumption and relies on customer feedback data to inform its development. You also safeguard it with objective measures, such as a minimum viable product, to test the hypothesis in real life.

By articulating and examining a verifiable value hypothesis, you understand your product's potential impact and reduce the risk associated with new product development. It's about making informed decisions that increase your confidence in the product's potential success before committing significant resources.
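One way to keep the five conditions in view is to record each hypothesis as a small structured object and check it before testing begins. The sketch below is illustrative only: the class name, field names, and checks are assumptions made for this example, not part of any standard framework.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ValueHypothesis:
    """One value hypothesis; field names are hypothetical."""
    segment: str          # Specific: who the product is for
    value_claim: str      # Specific: what value they are expected to get
    metric: str           # Measurable: what you will measure
    target: float         # Measurable: threshold that counts as success
    test_days: int        # Time-bound: length of the test window
    addresses_need: bool  # Relevant: tied to a known customer need
    feasible: bool        # Achievable: realistic given your resources

    def missing_conditions(self) -> List[str]:
        """Return the names of the conditions this hypothesis fails."""
        problems = []
        if not (self.segment.strip() and self.value_claim.strip()):
            problems.append("specific")
        if not self.metric.strip() or self.target <= 0:
            problems.append("measurable")
        if not self.feasible:
            problems.append("achievable")
        if not self.addresses_need:
            problems.append("relevant")
        if self.test_days <= 0:
            problems.append("time-bound")
        return problems


# Illustrative values only.
hypothesis = ValueHypothesis(
    segment="small marketing teams",
    value_claim="shared task lists reduce missed deadlines",
    metric="weekly active teams",
    target=50,
    test_days=30,
    addresses_need=True,
    feasible=True,
)
print(hypothesis.missing_conditions())
```

An empty list means every condition has at least been stated; it does not mean the hypothesis is true, only that it is ready to be tested.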

Value Hypotheses vs. Growth Hypotheses

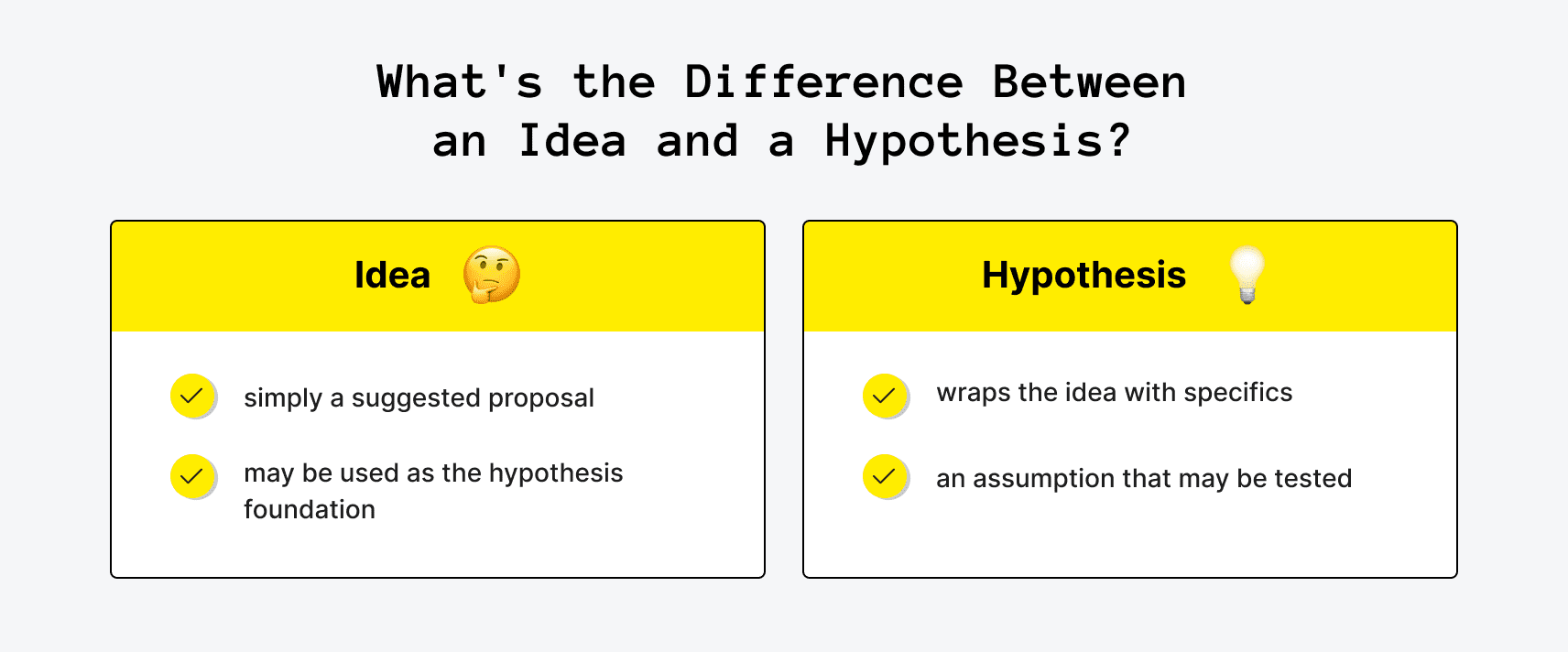

Value hypotheses and growth hypotheses are two distinct concepts often used in business, especially in the context of startups and product development.

Value Hypotheses: A value hypothesis is centered around the product itself. It focuses on whether the product truly delivers customer value. Key questions include whether the product meets a real need, how it compares to alternatives, and if customers are willing to pay for it. Validating a value hypothesis is crucial before a business scales its operations.

Growth Hypotheses: A growth hypothesis, on the other hand, deals with the scalability and marketing aspects of the business. It involves strategies and channels used to acquire new customers. The focus is on how to grow the customer base, the cost-effectiveness of growth strategies, and the sustainability of growth. Validating a growth hypothesis is typically the next step after confirming that the product has value to the customers.

In practice, both hypotheses are crucial for the success of a business. A value hypothesis ensures the product is desirable and needed, while a growth hypothesis ensures that the product can reach a larger market effectively.

Tips to Create and Test a Verifiable Value Hypothesis

Creating a value hypothesis is crucial for understanding what drives customer interest in your product. It's an educated guess that requires rigor to define and clarity to test. When developing a value hypothesis, you're attempting to validate assumptions about your product's value to customers. Here are concise tips to help you with this process:

1. Understanding Your Market and Customers

Before formulating a hypothesis, you need a deep understanding of your market and potential customers. You're looking to uncover their pain points and needs which your product aims to address.

Begin with thorough market research and collect customer feedback to ensure your idea is built upon a solid foundation of real-world insights. This understanding is pivotal as it sets the tone for a relevant and testable hypothesis.

- Define Your Value Proposition Clearly: Articulate your product's value to the user. What problem does it solve? How does it improve the user's life or work?

- Identify Your Target Audience: Determine who your ideal customers are. Understand their needs, pain points, and how they currently address the problem your product intends to solve.

2. Defining Clear Assumptions

The next step is to outline clear assumptions based on your idea that you believe will bring value to your customers. Each assumption should be an assertion that directly relates to how your customers will find your product valuable.

For example, if your product is a task management app, you might assume that sharing task lists with team members is a pain point for your potential customers. Remember, assumptions are not facts; they are educated guesses that need verification.

3. Identify Key Metrics for Your Hypothesis Test

Once you've defined your assumptions, delineate the framework for testing your value hypothesis. This involves designing experiments that validate or invalidate your assumptions with measurable outcomes, such as user engagement metrics, conversion rates, or customer satisfaction scores.

Determine what success looks like and define objective metrics that will show whether your product delivers value. Choosing the right metrics is essential for an accurate test. For instance, you might measure the increase in customer retention or the decrease in time spent on task organization with your app. Construct your test so that the results are unequivocal and actionable.
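As a rough illustration of turning these metrics into numbers, the sketch below computes a conversion rate and a retention rate and compares one of them to a success threshold. All counts and the 25% threshold are hypothetical values chosen for the example.

```python
def rate(successes: int, total: int) -> float:
    """Share of `total` that counted as a success (0.0 when total is 0)."""
    return successes / total if total else 0.0


# Hypothetical numbers for a task management app's MVP test.
signups = 400
activated = 120            # users who created at least one shared task list
still_active_day_30 = 54   # activated users still active after 30 days

conversion_rate = rate(activated, signups)
retention_rate = rate(still_active_day_30, activated)
success_threshold = 0.25   # assumed conversion target, fixed before the test

print(f"conversion: {conversion_rate:.1%}, retention: {retention_rate:.1%}")
print("conversion target met:", conversion_rate >= success_threshold)
```

Fixing the threshold before looking at the data is what makes the result hard to argue with afterwards.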

4. Construct a Testable Proposition

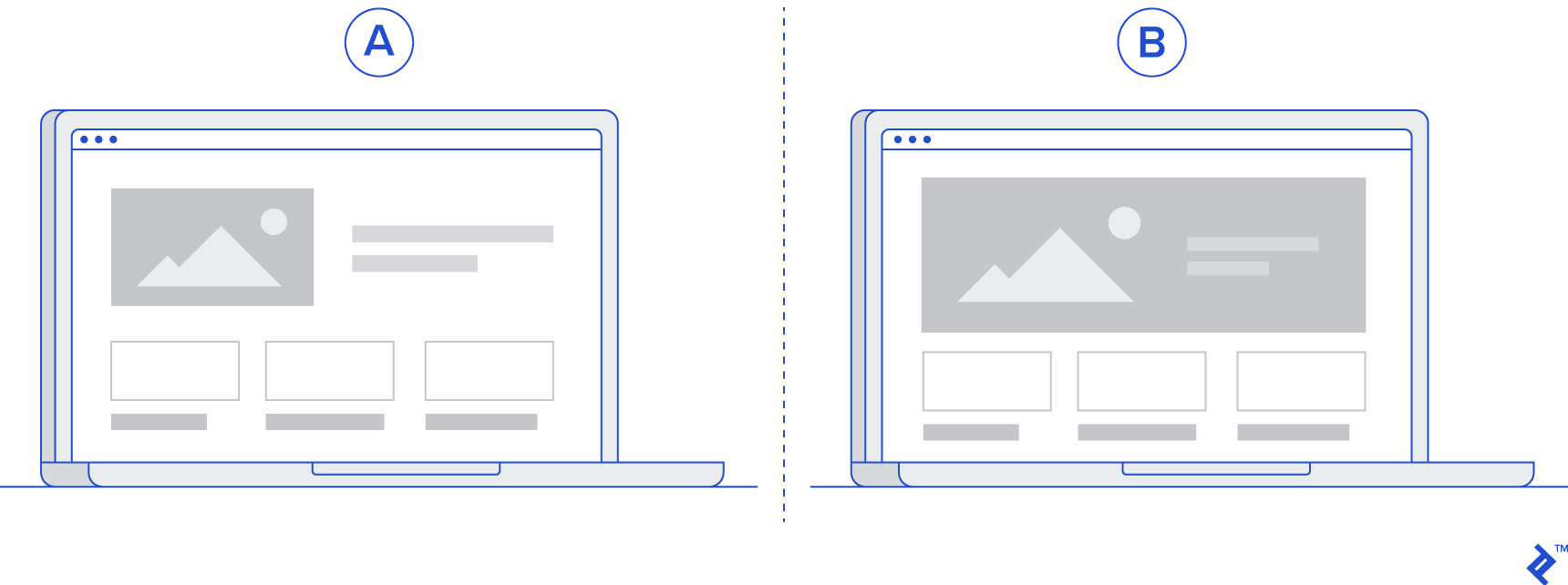

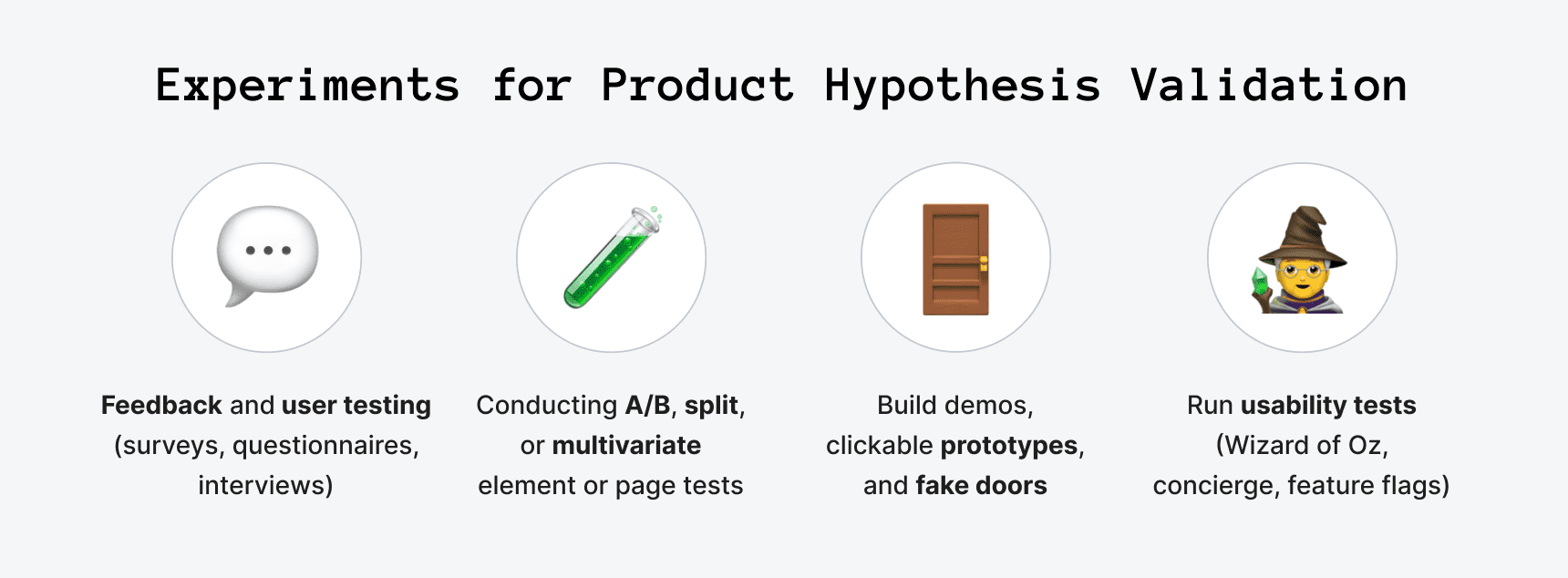

Formulate your hypothesis in a way that can be tested empirically. Use qualitative research methods such as interviews, surveys, and observation to gather data about your potential users. Formulate your value hypothesis based on insights from this research. Plan experiments that can validate or invalidate your value hypothesis. This might involve A/B testing, user testing sessions, or pilot programs.

A good example is to posit that "Introducing feature X will increase user onboarding by Y%." Avoid complexity by testing one variable at a time. This helps you identify which changes are actually making a difference.
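A proposition of the form "feature X will increase onboarding" can be checked with an A/B test. The sketch below uses a two-proportion z-test with a pooled standard error, which is one common choice rather than the only valid one. The function name and all counts are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """One-sided test that variant B's rate exceeds control A's.

    Returns (z, p_value) using the pooled-proportion normal approximation,
    which assumes reasonably large samples in both groups.
    """
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / std_err
    p_value = 1 - NormalDist().cdf(z)  # upper-tail probability
    return z, p_value


# Hypothetical counts: users who completed onboarding without (A)
# and with (B) the new feature.
z, p_value = two_proportion_z_test(conv_a=200, n_a=1000, conv_b=250, n_b=1000)
print(f"z = {z:.2f}, one-sided p-value = {p_value:.4f}")
```

Because only the feature differs between the two groups, a small p-value points at that one change rather than at a mix of changes.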

5. Applying Evidence to Innovation

When your data indicates a promising avenue for product development, it's imperative that you validate your value hypothesis through experimentation. Align your value proposition with the evidence at hand.

Develop a simplified version of your product that allows you to test the core value proposition with real users without investing in full-scale production. Start by crafting a minimum viable product (MVP) to begin testing in the market. This approach helps mitigate risk by not investing heavily in unproven ideas. Use analytics tools to collect data on how users interact with your MVP. Look for patterns that either support or contradict your value hypothesis.

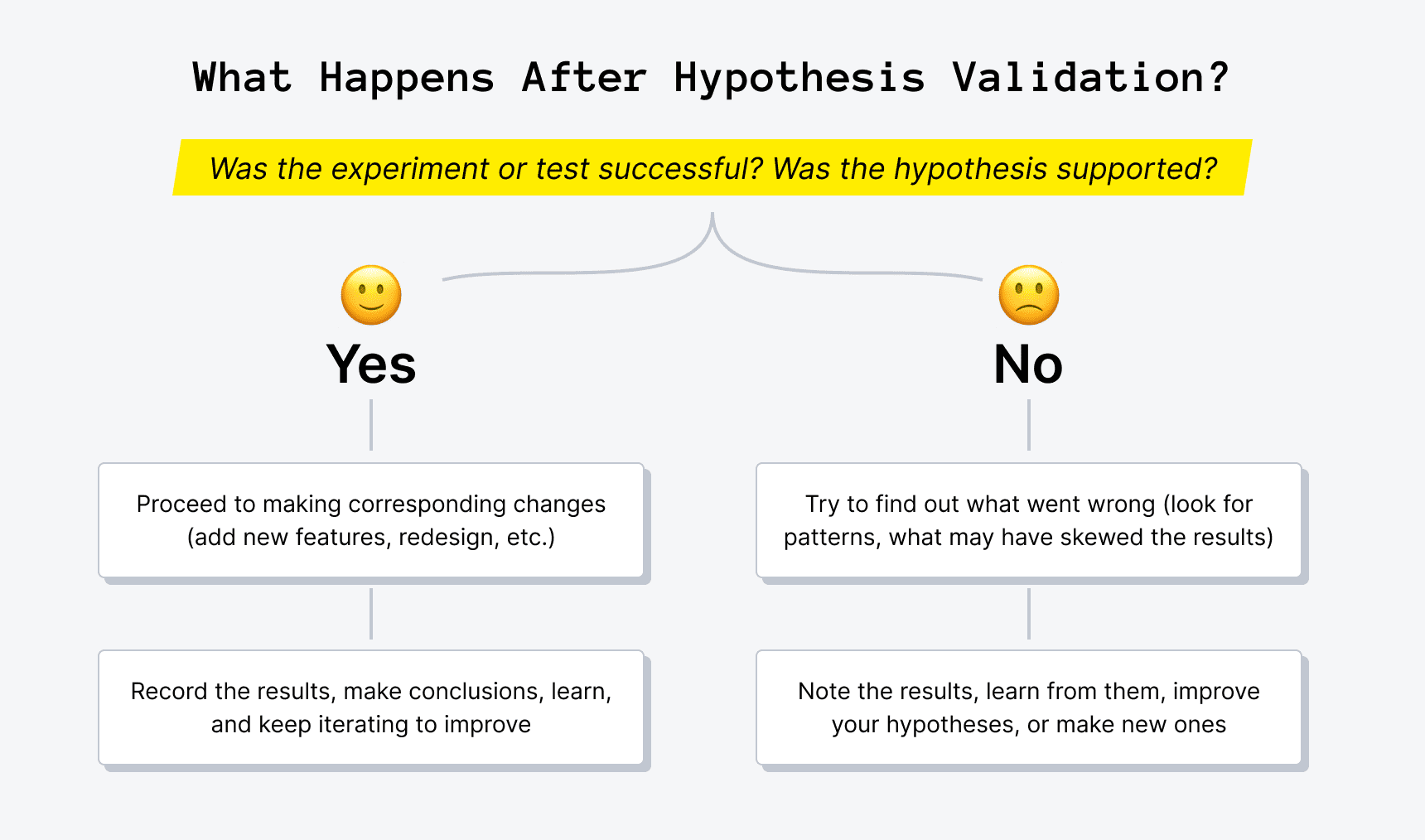

If the data suggests that your value hypothesis is wrong, be prepared to revise your hypothesis or pivot your product strategy accordingly.

6. Gather Customer Feedback

Integrating customer feedback into your product development process can create a more tailored value proposition. This step is crucial in refining your product to meet user needs and validate your hypotheses.

Use customer feedback tools to learn how users respond to your MVP, and look for patterns that either support or contradict your value hypothesis. Here are some ways to collect feedback effectively:

- Feedback portals

- User testing sessions

- In-app feedback

- Website widgets

- Direct interviews

- Focus groups

- Feedback forums

Create a centralized place for product feedback so you can keep track of different types of customer feedback and improve your product while listening to your customers. Rapidr helps companies be more customer-centric by consolidating feedback across different apps, prioritizing requests, having a discourse with customers, and closing the feedback loop.

7. Analyze and Iterate Quickly

Review the data and analyze customer feedback to see if it supports your hypothesis. If your hypothesis is not supported, iterate on your assumptions, and test again. Keep a detailed record of your hypotheses, experiments, and findings. This documentation will help you understand the evolution of your product and guide future decision-making.

Use the feedback and data from your tests to make quick iterations of your product and drive product development. This allows you to refine your value proposition and improve the fit with your target audience. Engage with your users throughout the process. Real-world feedback is invaluable and can provide insights that data alone cannot.

- Identify Patterns: What commonalities are present in the feedback?

- Implement Changes: Prioritize and make adjustments based on customer insights.

8. Align with Business Goals and Stay Customer-Focused

Ensure that your value hypothesis aligns with the broader goals of your business. The value provided should ultimately contribute to the success of the company. Remember that the ultimate goal of your value hypothesis is to deliver something that customers find valuable. Maintain a strong focus on customer needs and satisfaction throughout the process.

9. Communicate with Stakeholders and Update Them

Keep all stakeholders informed about your findings and the implications for the product. Clear communication helps ensure everyone is aligned and understands the rationale behind product decisions. Communicate and close the feedback loop with the help of a product changelog through which you can announce new changes and engage with customers.

Understanding and validating a value hypothesis is essential for any business, particularly startups. It involves deeply exploring whether a product or service meets customer needs and offers real value. This process ensures that resources are invested in desirable and useful products, and it's a critical step before considering scalability and growth.

By focusing on the value hypothesis, businesses can better align their offerings with market demand, leading to more sustainable success. Placing customer feedback at the center of the process of testing a value hypothesis helps you develop a product that meets your customers' needs and stands out in the market.


A Beginner’s Guide to Hypothesis Testing in Business

- 30 Mar 2021

Becoming a more data-driven decision-maker can bring several benefits to your organization, enabling you to identify new opportunities to pursue and threats to abate. Rather than allowing subjective thinking to guide your business strategy, backing your decisions with data can empower your company to become more innovative and, ultimately, profitable.

If you’re new to data-driven decision-making, you might be wondering how data translates into business strategy. The answer lies in generating a hypothesis and verifying or rejecting it based on what various forms of data tell you.

Below is a look at hypothesis testing and the role it plays in helping businesses become more data-driven.


What Is Hypothesis Testing?

To understand what hypothesis testing is, it’s important first to understand what a hypothesis is.

A hypothesis or hypothesis statement seeks to explain why something has happened, or what might happen, under certain conditions. It can also be used to understand how different variables relate to each other. Hypotheses are often written as if-then statements; for example, “If this happens, then this will happen.”

Hypothesis testing, then, is a statistical means of testing an assumption stated in a hypothesis. While the specific methodology leveraged depends on the nature of the hypothesis and data available, hypothesis testing typically uses sample data to extrapolate insights about a larger population.

Hypothesis Testing in Business

When it comes to data-driven decision-making, there’s a certain amount of risk that can mislead a professional. This could be due to flawed thinking or observations, incomplete or inaccurate data, or the presence of unknown variables. The danger in this is that, if major strategic decisions are made based on flawed insights, it can lead to wasted resources, missed opportunities, and catastrophic outcomes.

The real value of hypothesis testing in business is that it allows professionals to test their theories and assumptions before putting them into action. This essentially allows an organization to verify its analysis is correct before committing resources to implement a broader strategy.

As one example, consider a company that wishes to launch a new marketing campaign to revitalize sales during a slow period. Doing so could be an incredibly expensive endeavor, depending on the campaign’s size and complexity. The company, therefore, may wish to test the campaign on a smaller scale to understand how it will perform.

In this example, the hypothesis that’s being tested would fall along the lines of: “If the company launches a new marketing campaign, then it will translate into an increase in sales.” It may even be possible to quantify how much of a lift in sales the company expects to see from the effort. Pending the results of the pilot campaign, the business would then know whether it makes sense to roll it out more broadly.


Key Considerations for Hypothesis Testing

1. Alternative Hypothesis and Null Hypothesis

In hypothesis testing, the hypothesis that’s being tested is known as the alternative hypothesis. Often, it’s expressed as a correlation or statistical relationship between variables. The null hypothesis, on the other hand, is a statement that’s meant to show there’s no statistical relationship between the variables being tested. It’s typically the exact opposite of whatever is stated in the alternative hypothesis.

For example, consider a company’s leadership team that historically and reliably sees $12 million in monthly revenue. They want to understand if reducing the price of their services will attract more customers and, in turn, increase revenue.

In this case, the alternative hypothesis may take the form of a statement such as: “If we reduce the price of our flagship service by five percent, then we’ll see an increase in sales and realize revenues greater than $12 million in the next month.”

The null hypothesis, on the other hand, would indicate that revenues wouldn’t increase from the base of $12 million, or might even decrease.
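In symbols, the pair of hypotheses in this example can be written as follows. The symbol \(\mu\) is introduced here for illustration and stands for the expected monthly revenue, in millions of dollars, after the price reduction.

```latex
H_0:\ \mu \le 12
\qquad \text{versus} \qquad
H_1:\ \mu > 12
```

The two statements cover every possible outcome between them, which is why rejecting the null hypothesis counts as support for the alternative.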


2. Significance Level and P-Value

Statistically speaking, if you were to run the same scenario 100 times, you’d likely receive somewhat different results each time. If you were to plot these results in a distribution plot, you’d see the most likely outcome is at the tallest point in the graph, with less likely outcomes falling to the right and left of that point.

With this in mind, imagine you’ve completed your hypothesis test and have your results, which indicate there may be a correlation between the variables you were testing. To understand your results' significance, you’ll need to identify a p-value for the test, which quantifies how strong the evidence against the null hypothesis is.

In statistics, the p-value is the probability that, assuming the null hypothesis is correct, you would still observe results at least as extreme as the results of your hypothesis test. The smaller the p-value, the stronger the evidence against the null hypothesis and the greater the statistical significance of your results. In practice, you compare the p-value to a significance level chosen before the test (0.05 is a common choice) and reject the null hypothesis when the p-value falls below it.
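To make the p-value concrete, the sketch below returns to the $12 million revenue example. It is a simplified one-sided z-test under stated assumptions: the six monthly figures are invented, the month-to-month standard deviation is assumed to be known, and 0.05 is used as the significance level.

```python
from math import sqrt
from statistics import NormalDist, mean

baseline = 12.0  # $ millions per month, the historical level in the example
sigma = 0.5      # assumed known month-to-month standard deviation
alpha = 0.05     # significance level, chosen before running the test

# Hypothetical monthly revenues ($ millions) observed after the price cut.
observed = [12.4, 12.1, 12.6, 12.3, 12.2, 12.5]

# Standardized distance of the sample mean from the baseline.
z = (mean(observed) - baseline) / (sigma / sqrt(len(observed)))

# Probability of a result at least this high if the null were true.
p_value = 1 - NormalDist().cdf(z)

print(f"z = {z:.2f}, p-value = {p_value:.4f}")
print("reject null" if p_value < alpha else "fail to reject null")
```

With only a handful of observations and an estimated rather than known standard deviation, a t-test would be the more usual choice; the z-test is used here only to keep the example within the standard library.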

3. One-Sided vs. Two-Sided Testing

When it’s time to test your hypothesis, it’s important to leverage the correct testing method. The two most common hypothesis testing methods are one-sided and two-sided tests, also called one-tailed and two-tailed tests.

Typically, you’d leverage a one-sided test when you have a strong conviction about the direction of change you expect to see due to your hypothesis test. You’d leverage a two-sided test when you’re less confident in the direction of change.
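The practical difference shows up in the p-value. The sketch below computes both versions for the same test statistic under a standard normal distribution; the function name and the statistic of 1.8 are arbitrary choices for illustration.

```python
from statistics import NormalDist


def p_values(z: float):
    """Return (one_sided_upper, two_sided) p-values for a z statistic."""
    std_normal = NormalDist()
    one_sided = 1 - std_normal.cdf(z)             # "greater than" only
    two_sided = 2 * (1 - std_normal.cdf(abs(z)))  # "different from"
    return one_sided, two_sided


one_sided, two_sided = p_values(1.8)
print(f"one-sided: {one_sided:.3f}, two-sided: {two_sided:.3f}")
```

For a positive statistic, the two-sided p-value is twice the one-sided one, so the same data can fall below a 0.05 threshold in a one-sided test and above it in a two-sided test. That is why the direction should be decided before looking at the results.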

4. Sampling

To perform hypothesis testing in the first place, you need to collect a sample of data to be analyzed. Depending on the question you’re seeking to answer or investigate, you might collect samples through surveys, observational studies, or experiments.

A survey involves asking a series of questions to a random population sample and recording self-reported responses.

Observational studies involve a researcher observing a sample population and collecting data as it occurs naturally, without intervention.

Finally, an experiment involves dividing a sample into multiple groups, one of which acts as the control group. For each non-control group, the variable being studied is manipulated to determine how the data collected differs from that of the control group.
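For the experimental approach, group membership is usually assigned at random so that the groups differ only in the variable being studied. A minimal sketch, assuming a simple even split into one control and one treatment group (the function name and user IDs are hypothetical):

```python
import random


def assign_groups(user_ids, seed=0):
    """Shuffle users and split them evenly into control and treatment."""
    rng = random.Random(seed)  # seeded so the split is reproducible
    shuffled = list(user_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {"control": shuffled[:half], "treatment": shuffled[half:]}


groups = assign_groups(range(1, 101))
print(len(groups["control"]), len(groups["treatment"]))
```

Random assignment guards against accidentally putting, say, all of the most engaged customers in the group that receives the change.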

Learn How to Perform Hypothesis Testing

Hypothesis testing is a complex process involving different moving pieces that can allow an organization to effectively leverage its data and inform strategic decisions.

If you’re interested in better understanding hypothesis testing and the role it can play within your organization, one option is to complete a course that focuses on the process. Doing so can lay the statistical and analytical foundation you need to succeed.



Value Hypothesis 101: A Product Manager's Guide


Humans make assumptions every day. It’s our brain’s way of making sense of the world around us, but assumptions are only valuable if they're verifiable. That’s where a value hypothesis comes in as your starting point.

A good hypothesis goes a step beyond an assumption. It’s a verifiable and validated guess based on the value your product brings to your real-life customers. When you verify your hypothesis, you confirm that the product has real-world value, which gives you a higher chance of product success.

What Is a Verifiable Value Hypothesis?

A value hypothesis is an educated guess about the value proposition of your product. When you verify your hypothesis, you're using evidence to show that your assumption holds up. A hypothesis is verifiable if it can be tested and is either not proven false through experimentation or shown to have rational justification through data, experiments, observation, or tests.

The most significant benefit of verifying a hypothesis is that it helps you avoid product failure and helps you build your product to your customers’ (and potential customers’) needs.

Verifying your assumptions is all about collecting data. Without data obtained through experiments, observations, or tests, your hypothesis is unverifiable, and you can’t be sure there will be a market need for your product.

A Verifiable Value Hypothesis Minimizes Risk and Saves Money

When you verify your hypothesis, you’re less likely to release a product that doesn’t meet customer expectations—a waste of your company’s resources. Harvard Business School explains that verifying a business hypothesis “...allows an organization to verify its analysis is correct before committing resources to implement a broader strategy.”

If you verify your hypothesis upfront, you’ll lower risk and have time to work out product issues.

UserVoice Validation makes product validation accessible to everyone. Consider using its research feature to speed up your hypothesis verification process.

Value Hypotheses vs. Growth Hypotheses

Your value hypothesis focuses on the value of your product to customers. This type of hypothesis can apply to a product or company and is a building block of product-market fit.

A growth hypothesis is a guess at how your business idea may develop in the long term based on how potential customers may find your product. It’s meant for estimating business model growth rather than individual products.

Because your value hypothesis is really the foundation for your growth hypothesis, you should focus on value hypothesis tests first and complete growth hypothesis tests to estimate business growth as a whole once you have a viable product.

4 Tips to Create and Test a Verifiable Value Hypothesis

A verifiable hypothesis needs to be based on a logical structure, customer feedback data, and objective safeguards like creating a minimum viable product. Validating your value significantly reduces risk. You can prevent wasting money, time, and resources by verifying your hypothesis in early-stage development.

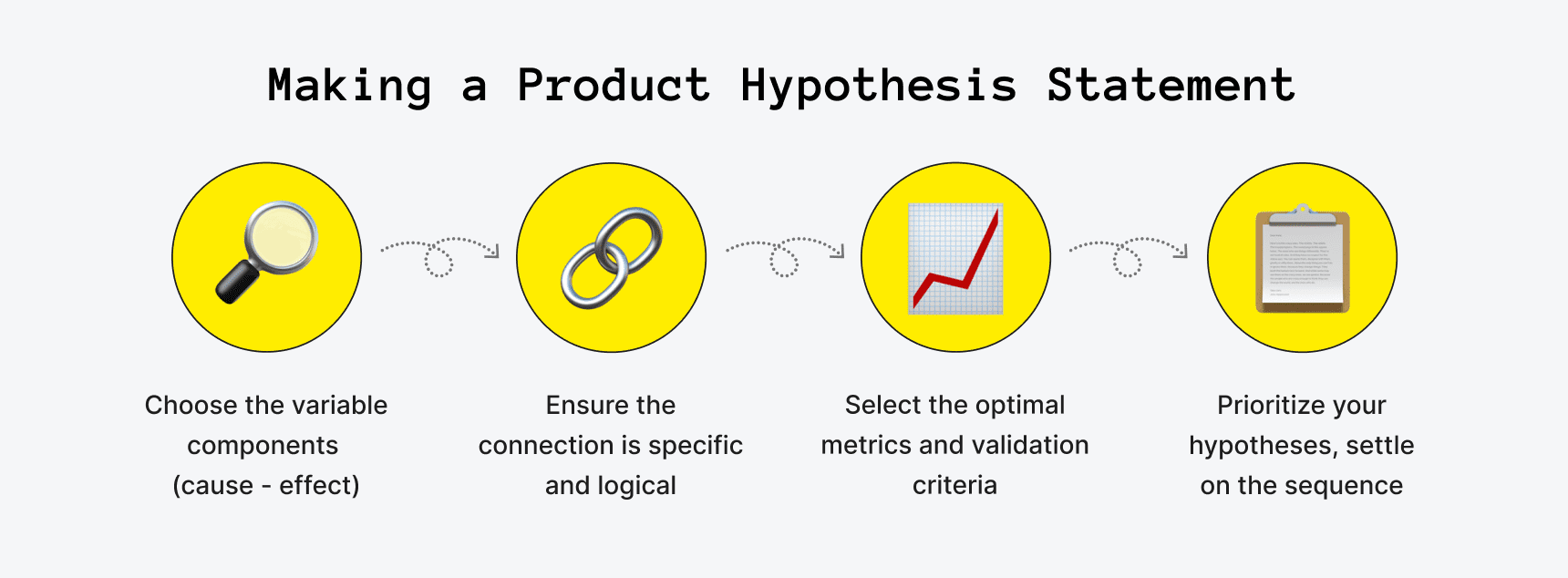

A good value hypothesis utilizes a framework (like the template below), data, and checks/balances to avoid bias.

1. Use a Template to Structure Your Value Hypothesis

By using a template structure, you can create an educated guess that includes the most important elements of a hypothesis—the who, what, where, when, and why. If you don’t structure your hypothesis correctly, you may only end up with a flimsy or leap-of-faith assumption that you can’t verify.

A true hypothesis uses a few guesses about your product and organizes them so that you can verify or falsify your assumptions. Using a template to structure your hypothesis can ensure that you’re not missing the specifics.

You can’t just throw a hypothesis together and think it will answer the question of whether your product is valuable or not. If you do, you could end up with faulty data informed by bias, a skewed significance level from polling the wrong people, or only a vague idea of what your customer would actually pay for your product.

A template will help keep your hypothesis on track by standardizing the structure of the hypothesis so that each new hypothesis always includes the specifics of your client personas, the cost of your product, and client or customer pain points.

A value hypothesis template might look like:

[Client] will spend [cost] to purchase and use our [title of product/service] to solve their [specific problem] OR help them overcome [specific obstacle].

An example of your hypothesis might look like:

B2B startups will spend $500/mo to purchase our resource planning software to solve resource over-allocation and employee burnout.

By organizing your ideas and the important elements (who, what, where, when, and why), you can come up with a hypothesis that actually answers the question of whether your product is useful and valuable to your ideal customer.
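If your team writes many hypotheses, the template can be enforced in code so that no field is silently left out. This is a minimal sketch that covers the "solve their [specific problem]" branch of the template; the function and field names are hypothetical.

```python
TEMPLATE = (
    "{client} will spend {cost} to purchase and use our {product} "
    "to solve their {problem}."
)


def build_hypothesis(client: str, cost: str, product: str, problem: str) -> str:
    """Fill in the value hypothesis template, rejecting empty fields."""
    fields = {
        "client": client,
        "cost": cost,
        "product": product,
        "problem": problem,
    }
    missing = [name for name, value in fields.items() if not value.strip()]
    if missing:
        raise ValueError("missing fields: " + ", ".join(missing))
    return TEMPLATE.format(**fields)


statement = build_hypothesis(
    client="B2B startups",
    cost="$500/mo",
    product="resource planning software",
    problem="resource over-allocation and employee burnout",
)
print(statement)
```

Raising an error on an empty field is a deliberate choice: a hypothesis with no stated customer or price is exactly the kind of leap-of-faith assumption the template is meant to prevent.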

2. Turn Customer Feedback into Data to Support Your Hypothesis

Once you have your hypothesis, it’s time to figure out whether it’s true—or, more accurately, prove that it’s valid. Since a hypothesis is never considered “100% proven,” it’s referred to as either valid or invalid based on the information you discover in your experiments or tests. Additionally, your results could lead to an alternative hypothesis, which is helpful in refining your core idea.

To support value hypothesis testing, you need data, and collecting customer feedback is how you get it. A customer feedback management tool can also make it easier for your team to access the feedback and create strategies to address customer concerns.

If you find that potential clients are not expressing pain points that could be solved with your product or you’re not seeing an interest in the features you hope to add, you can adjust your hypothesis and absorb a lower risk. Because you didn’t invest a lot of time and money into creating the product yet, you should have more resources to put toward the product once you work out the kinks.

On the other hand, if you find that customers are requesting features your product offers or pain points your product could solve, then you can move forward with product development, confident that your future customers will value (and spend money on) the product you’re creating.

A customer feedback management tool like UserVoice can empower you to challenge assumptions from your colleagues (often based on anecdotal information) that find their way into team decision-making. Having data to reevaluate an assumption helps with prioritization, and it confirms that you’re focusing on the right things as an organization.

3. Validate Your Product

Since you have a clear idea of who your ideal customer is at this point and have verified their need for your product, it’s time to validate your product and decide if it’s better than your competitors’.

At this point, simply asking your customers if they would buy your product (or spend more on your product) instead of a competitor’s isn’t enough confirmation that you should move forward, and customers may be biased or reluctant to provide critical feedback.

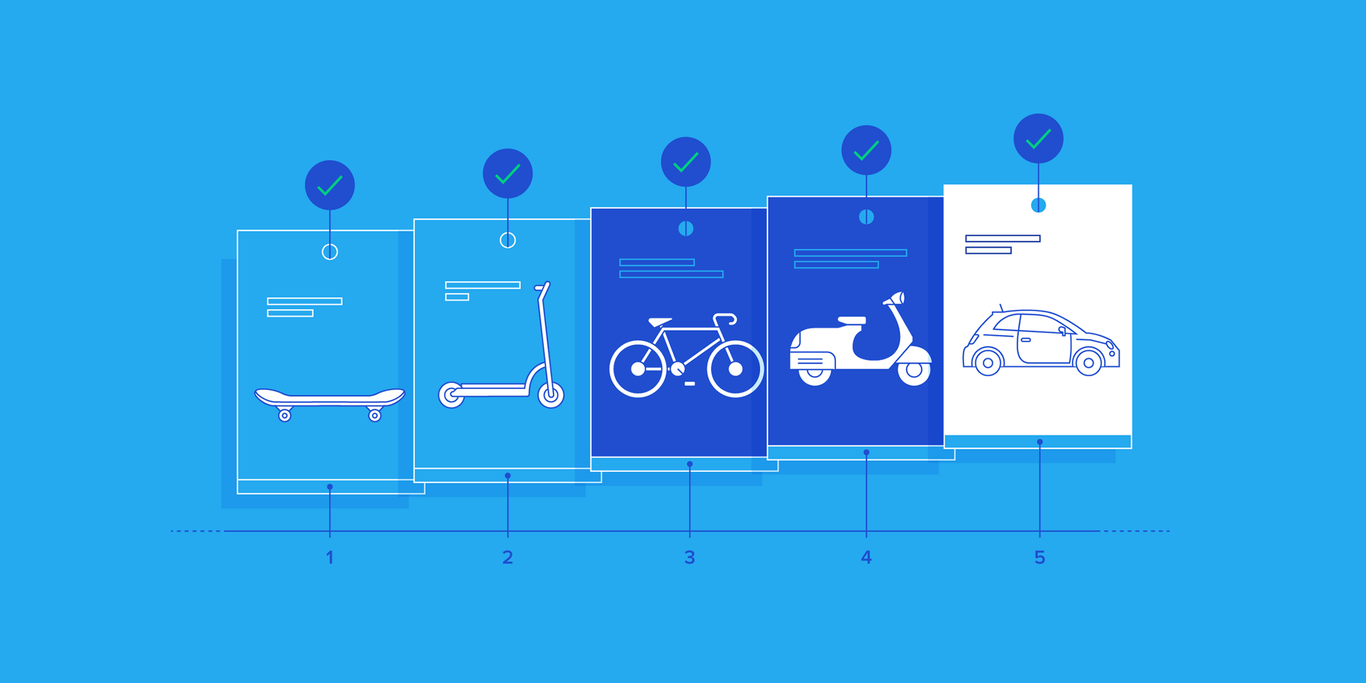

Instead, create a minimum viable product (MVP). An MVP is a working, bare-bones version of the product that you can test out without risking your whole budget. Hypothesis testing with an MVP simulates the product experience for customers and, based on their actions and usage, validates that the full product will generate revenue and be successful.

If you take the steps to first verify and then validate your hypothesis using data, your product is more likely to do well. Your focus will be on the aspect that matters most—whether your customer actually wants and would invest money in purchasing the product.

4. Use Safeguards to Remain Objective

One of the pitfalls of believing in your product and attempting to validate it is that you’re subject to confirmation bias. Because you want your product to succeed, you may pay more attention to the answers in the collected data that affirm the value of your product and gloss over the information that may lead you to conclude that your hypothesis is actually false. Confirmation bias could easily cloud your vision or skew your metrics without you even realizing it.

Since it’s hard to know when you’re engaging in confirmation bias, it’s good to have safeguards in place to keep you in check and aligned with the purpose of objectively evaluating your value hypothesis.

Safeguards include sharing your findings with third-party experts or simply putting yourself in the customer’s shoes.

Third-party experts are the business version of seeking a peer review. External parties don’t stand to benefit from the outcome of your verification and validation process, so your work is verified and validated objectively. You gain the benefit of knowing whether your hypothesis is valid in the eyes of the people who aren’t stakeholders without the risk of confirmation bias.

In addition to seeking out objective minds, look into potential counter-arguments, such as customer objections (explicit or imagined). What might your customer think about investing the time to learn how to use your product? Will they think the value is commensurate with the monetary cost of the product?

When running an experiment to validate your hypothesis, it’s important not to elevate the importance of your beliefs over the objective data you collect. While it can be exciting to push for the validity of your idea, it can lead to false assumptions and the acceptance of weak evidence.

Validation Is the Key to Product Success

With your new value hypothesis in hand, you can confidently move forward, knowing that there’s a true need, desire, and market for your product.

Because you’ve verified and validated your guesses, there’s less of a chance that you’re wrong about the value of your product, and there are fewer financial and resource risks for your company. With this strong foundation and the new information you’ve uncovered about your customers, you can add even more value to your product or use it to make more products that fit the market and user needs.

If you think customer feedback management software would be useful in your hypothesis validation process, consider opting into our free trial to see how UserVoice can help.

Heather Tipton


7.1: Introduction to Hypothesis Testing


Now we are down to the bread and butter work of the statistician: developing and testing hypotheses. It is important to put this material in a broader context so that the method by which a hypothesis is formed is understood completely. Using textbook examples often clouds the real source of statistical hypotheses.

Statistical testing is part of a much larger process known as the scientific method. This method was developed more than two centuries ago as the accepted way that new knowledge could be created. Until then, and unfortunately even today among some, "knowledge" could be created simply by some authority saying something was so (ipse dixit). Superstition and conspiracy theories were (are?) accepted uncritically.

Figure 1: You can use a hypothesis test to decide if a dog breeder’s claim that every Dalmatian has 35 spots is statistically sound. (Credit: Robert Neff)

The scientific method, briefly, states that only by following a careful and specific process can some assertion be included in the accepted body of knowledge. This process begins with a set of assumptions upon which a theory, sometimes called a model, is built. This theory, if it has any validity, will lead to predictions, which we call hypotheses.

As an example, in microeconomics the theory of consumer choice begins with certain assumptions concerning human behavior. From these assumptions followed a theory of how consumers make choices using indifference curves and the budget line. This theory gave rise to a very important prediction; namely, that there was an inverse relationship between price and quantity demanded. This relationship was known as the demand curve. The negative slope of the demand curve is really just a prediction, or a hypothesis, that can be tested with statistical tools.

Had hundreds and hundreds of statistical tests of this hypothesis not confirmed this relationship, the so-called Law of Demand would have been discarded years ago. This is the role of statistics: to test the hypotheses of various theories to determine if they should be admitted into the accepted body of knowledge, and how we understand our world. Once admitted, however, they may be later discarded if new theories come along that make better predictions.

Not long ago two scientists claimed that they could get more energy out of a process than was put in. This caused a tremendous stir for obvious reasons. They were on the cover of Time and were offered extravagant sums to bring their research work to private industry and any number of universities. It was not long until their work was subjected to the rigorous tests of the scientific method and found to be a failure. No other lab could replicate their findings. Consequently they have sunk into obscurity and their theory has been discarded. It may surface again when someone can pass the tests of the hypotheses required by the scientific method, but until then it is just a curiosity. Many pure frauds have been attempted over time, but most have been found out by applying the process of the scientific method.

This discussion is meant to show just where in this process statistics falls. Statistics and statisticians are not necessarily in the business of developing theories, but in the business of testing others' theories. Hypotheses come from these theories based upon an explicit set of assumptions and sound logic. The hypothesis comes first, before any data are gathered. Data do not create hypotheses; they are used to test them. If we bear this in mind as we study this section the process of forming and testing hypotheses will make more sense.

One job of a statistician is to make statistical inferences about populations based on samples taken from the population. Confidence intervals are one way to estimate a population parameter. Another way to make a statistical inference is to make a decision about the value of a specific parameter. For instance, a car dealer advertises that its new small truck gets 35 miles per gallon, on average. A tutoring service claims that its method of tutoring helps 90% of its students get an A or a B. A company says that women managers in their company earn an average of $60,000 per year.

A statistician will make a decision about these claims. This process is called "hypothesis testing". A hypothesis test involves collecting data from a sample and evaluating the data. Then, the statistician makes a decision as to whether or not there is sufficient evidence, based upon analyses of the data, to reject the null hypothesis.

In this chapter, you will conduct hypothesis tests on single means and single proportions. You will also learn about the errors associated with these tests.

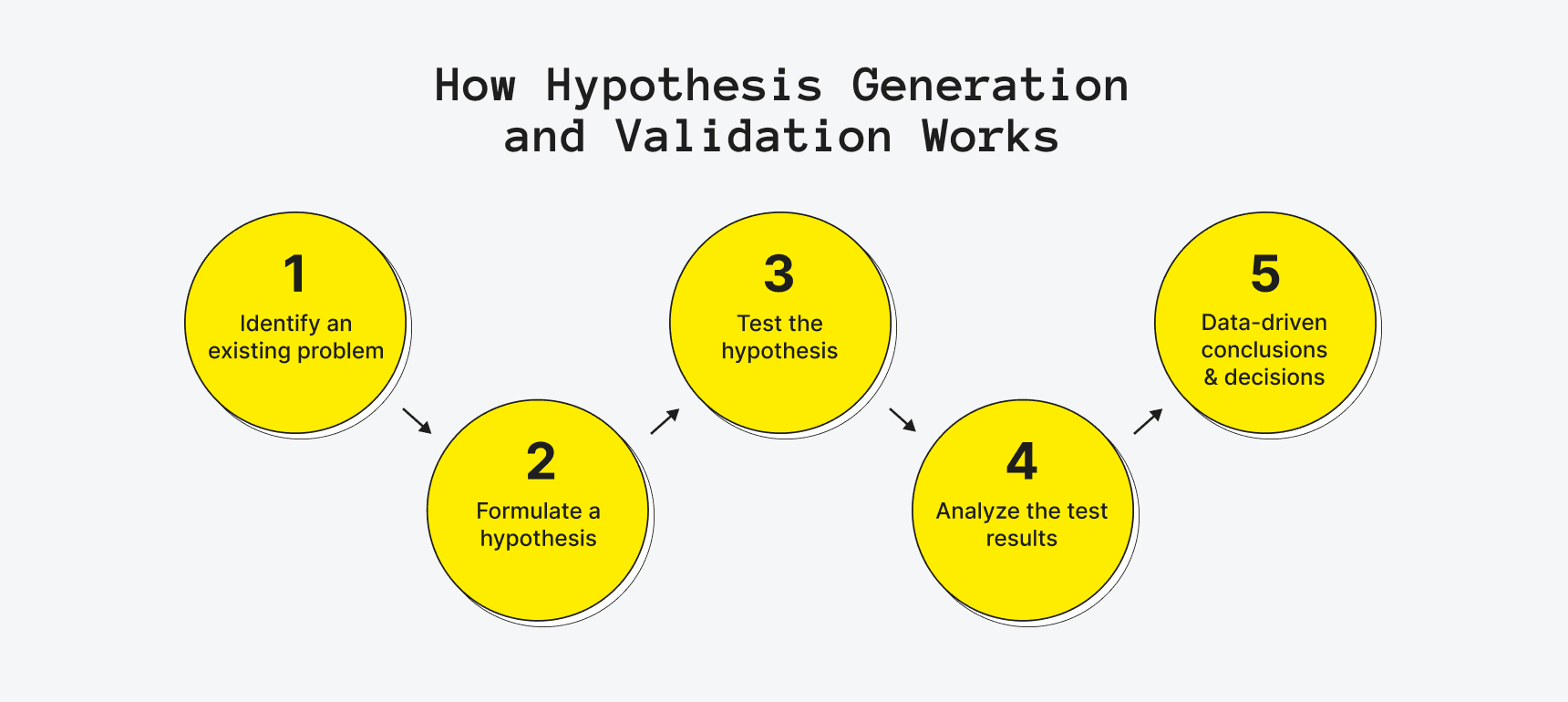

How to test your idea: start with the most critical hypotheses

To validate business ideas you need to perform many small experiments. At the centre of any one of these experiments should be a deep understanding of the most critical hypotheses and why you are testing them.

In the world of Lean Startup, the Build, Measure, Learn cycle is a means to an end to test the attractiveness of business ideas. Unfortunately, some innovators and entrepreneurs take the “Build” step too literally and immediately start building prototypes. However, at the centre of this cycle there is actually a step zero: shaping your idea and defining the most critical assumptions and hypotheses underlying it (Note: I’ll be interchanging between assumptions and hypotheses throughout the rest of the post).

Step 0 - think (& hypothesize)

Shape your idea (product, tech, market opportunity, etc.) into an attractive customer value proposition and prototype a potentially profitable and scalable business model. Use the Value Proposition & Business Model Canvas to do this. Then ask: What are the critical assumptions and hypotheses that need to be true for this to work? Define assumptions as to desirability (market risk: will customers want it?); feasibility (tech & implementation risk: can I build/execute it?); and viability (financial risk: can I earn more money from it than it will cost me to build?). To test these assumptions/hypotheses you will perform many, many experiments. With your hypotheses mapped out, you can now start to move through the steps of the Build, Measure, Learn cycle:

Step 1 - build

In this step you design and build the experiments that are best suited to test your assumptions. Ask: Which hypothesis will we test first and how? Ask: Which tests will yield the most valuable data and evidence?

Step 2 - measure

In this step you actually perform the experiments. That might be through interviews and talking to a series of customers and stakeholders, or by launching a landing page to see if people click on, sign up for, or even buy your value proposition (which does not exist yet, because it has not been implemented).

Step 3 - learn

In this step you analyze the data and gain insights. You systematically connect the evidence and data from experiments back to the initial hypotheses, how you tested them, and what you learned. This is where you identify if your initial hypotheses were right, wrong, or still unclear. You might learn that you have to reshape your idea, to pivot, to create new hypotheses, to continue testing, or you might prove with evidence that your idea has legs and you’re on the right track. At the centre of all testing should always be a deep understanding of the critical hypotheses underlying how you intend to create value for customers (Value Proposition Canvas) and how you hope to create value for your company (Business Model Canvas). I’ve seen too many innovators and entrepreneurs get lost in building experiments and lose sight of their initial hypotheses and the ultimate prize. At the end there’s only one thing that counts: Are you making progress in turning your initial idea into a profitable and scalable business model that creates value for customers?

About the speakers

Dr. Alexander (Alex) Osterwalder is one of the world’s most influential innovation experts, a leading author, entrepreneur and in-demand speaker whose work has changed the way established companies do business and how new ventures get started.


Statistical Analysis: Developing and Testing Hypotheses

Statistical hypothesis testing is sometimes known as confirmatory data analysis. It is a way of drawing inferences from data. In the process, you develop a hypothesis or theory about what you might see in your research. You then test that hypothesis against the data that you collect.

Hypothesis testing is generally used when you want to compare two groups, or compare a group against an idealised position.

Before You Start: Developing A Research Hypothesis

Before you can do any kind of research in social science fields such as management, you need a research question or hypothesis. Research is generally designed to either answer a research question or consider a research hypothesis. These two are closely linked, and generally one or the other is used, rather than both.

A research question is the question that your research sets out to answer. For example:

Do men and women like ice cream equally?

Do men and women like the same flavours of ice cream?

What are the main problems in the market for ice cream?

How can the market for ice cream be segmented and targeted?

Research hypotheses are statements of what you believe you will find in your research.

These are then tested statistically during the research to see if your belief is correct. Examples include:

Men and women like ice cream to different extents.

Men and women like different flavours of ice cream.

Men are more likely than women to like mint ice cream.

Women are more likely than men to like chocolate ice cream.

Both men and women prefer strawberry to vanilla ice cream.

Relationships vs Differences

Research hypotheses can be expressed in terms of differences between groups, or relationships between variables. However, these are two sides of the same coin: almost any hypothesis could be set out in either way.

For example:

There is a relationship between gender and liking ice cream OR

Men are more likely to like ice cream than women.

Testing Research Hypotheses

The purpose of statistical hypothesis testing is to use a sample to draw inferences about a population.

Testing research hypotheses requires a number of steps:

Step 1. Define your research hypothesis

The first step in any hypothesis testing is to identify your hypothesis, which you will then go on to test. How you define your hypothesis may affect the type of statistical testing that you do, so it is important to be clear about it. In particular, consider whether you are going to hypothesise simply that there is a relationship or speculate about the direction of the relationship.

Using the examples above:

‘There is a relationship between gender and liking ice cream’ is a non-directional hypothesis. You have simply specified that there is a relationship, not whether men or women like ice cream more.

However, ‘men are more likely to like ice cream than women’ is directional: you have specified which gender is more likely to like ice cream.

Generally, it is better not to specify direction unless you are moderately sure about it.

Step 2. Define the null hypothesis

The null hypothesis is basically a statement of what you are hoping to disprove: the opposite of your ‘guess’ about the relationship. For example, in the hypotheses above, the null hypothesis would be:

Men and women like ice cream equally, or

There is no relationship between gender and ice cream.

This also defines your ‘alternative hypothesis’, which is your ‘test hypothesis’ (men like ice cream more than women). Your null hypothesis is generally that there is no difference, because this is the simplest position.

The purpose of hypothesis testing is to see whether your data provide enough evidence to reject the null hypothesis. If they do not, you fail to reject it and continue to treat it as the working assumption. This is not the same as proving that it is correct.

Step 3. Develop a summary measure that describes your variable of interest for each group you wish to compare

Our page on Simple Statistical Analysis describes several summary measures, including two of the most common, mean and median.

The next step in your hypothesis testing is to develop a summary measure for each of your groups. For example, to test the gender differences in liking for ice cream, you might ask people how much they liked ice cream on a scale of 1 to 5. Alternatively, you might have data about the number of times that ice creams are consumed each week in the summer months.

You then need to produce a summary measure for each group, usually mean and standard deviation. These may be similar for each group, or quite different.
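As a minimal sketch of this step, the snippet below computes the mean and sample standard deviation for each group using Python's standard `statistics` module. The ratings are invented purely for illustration, and the helper name `summarise` is an assumption, not part of any library.

```python
from statistics import mean, stdev

# Hypothetical 1-to-5 ratings of liking for ice cream (invented data).
ratings = {
    "men": [4, 5, 3, 4, 2, 5, 4],
    "women": [3, 4, 4, 2, 3, 5, 3],
}

def summarise(values):
    """Return (mean, sample standard deviation, sample size) for one group."""
    return mean(values), stdev(values), len(values)

summary = {group: summarise(values) for group, values in ratings.items()}

for group, (m, sd, n) in summary.items():
    print(f"{group}: mean={m:.2f}, sd={sd:.2f}, n={n}")
```

The two means may look different, but the summary alone cannot say whether that difference is genuine. That is the job of the test statistic in the next step.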

Step 4. Choose a reference distribution and calculate a test statistic

To decide whether there is a genuine difference between the two groups, you have to use a reference distribution against which to measure the values from the two groups.

The most common source of reference distributions is a standard distribution such as the normal distribution or t-distribution. These two are similar in shape. The difference is that the normal distribution is used when the standard deviation of the population is known, whereas the t-distribution is used when it has to be estimated from the sample. There is more about this in our page on Statistical Distributions.

You then compare the summary data from the two groups by using them to calculate a test statistic. There is a standard formula for every test statistic and reference distribution. The test and reference distribution depend on your data and the purpose of your testing (see below).

The test that you use to compare your groups will depend on how many groups you have, the type of data that you have collected, and how reliable your data are. In general, you would use different tests for comparing two groups than you would for comparing three or more.

Our page Surveys and Survey Design explains that there are two types of answer scale, continuous and categorical. Age, for example, is a continuous scale, although it can also be grouped into categories. You may also find it helpful to read our page on Types of Data.

Gender is a category scale.

For a continuous scale, you can use the mean values of the two groups that you are comparing.

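For a category scale, the mean is not meaningful. If the categories have a natural order, you can use the median values. If they do not, compare the counts or proportions in each category instead.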

Source: Easterby-Smith, Thorpe and Jackson, Management Research 4th Edition

One- or Two-Tailed Test

The other thing that you have to decide is whether you use what is known as a ‘one-tailed’ or ‘two-tailed’ test.

This allows you to compare differences between groups in either one or both directions.

In practice, this boils down to whether your research hypothesis is expressed as ‘x is likely to be more than y’, or ‘x is likely to be different from y’. If you are confident of the direction of the difference (that is, you are sure that the only options are that ‘x is likely to be more than y’ or ‘x and y are the same’), then your test will be one-tailed. If not, it will be two-tailed.

If there is any doubt, it is better to use a two-tailed test.

You should only use a one-tailed test when you are certain about the direction of the difference, and it doesn’t matter if you are wrong.

The graph under Step 5 shows a two-tailed test.
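To make the difference concrete, here is a small sketch that turns one test statistic into both a one-tailed and a two-tailed probability. It assumes a standard normal reference distribution, and the value 1.8 is an arbitrary illustration rather than a result from real data. The function name `p_values` is hypothetical.

```python
from statistics import NormalDist

def p_values(z):
    """Return (one-tailed, two-tailed) p-values for a z statistic.

    The one-tailed value assumes the hypothesis 'x is more than y',
    so only large positive values of z count as evidence.
    """
    std_normal = NormalDist()  # mean 0, standard deviation 1
    one_tailed = 1 - std_normal.cdf(z)
    two_tailed = 2 * (1 - std_normal.cdf(abs(z)))
    return one_tailed, two_tailed

one, two = p_values(1.8)
print(f"one-tailed p = {one:.4f}, two-tailed p = {two:.4f}")
```

With these assumptions the same statistic falls below 5% on the one-tailed test but not on the two-tailed test, which is why the choice has to be made before looking at the data.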

If you are not very confident about the quality of the data collected, for example because the inputting was done quickly and cheaply, or because the data have not been checked, then you may prefer to use the median even if the data are continuous to avoid any problems with outliers. This makes the tests more robust, and the results more reliable.

Our page on correlations suggests that you may also want to plot a scattergraph before undertaking any further analysis. This will also help you to identify any outliers or potential problems with the data.

Calculating the Test Statistic

For each type of test, there is a standard formula for the test statistic. For example, for the t-test, it is:

(M1-M2)/SE(diff)

M1 is the mean of the first group

M2 is the mean of the second group

SE(diff) is the standard error of the difference, which is calculated from the standard deviation and the sample size of each group.

The formula for calculating the standard error of the difference between means is:

$\mathrm{SE(diff)} = \sqrt{\dfrac{sd^2}{n_a} + \dfrac{sd^2}{n_b}}$

where:

- $sd^2$ = the square of the standard deviation of the source population (i.e., the variance);

- $n_a$ = the size of sample A; and

- $n_b$ = the size of sample B.
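The calculation can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a replacement for a statistics package: the variance of the source population is not normally known, so the sketch estimates it with a pooled variance from the two samples, and the function name `t_statistic` and the ratings are invented for the example.

```python
from math import sqrt
from statistics import mean, variance

def t_statistic(group_a, group_b):
    """Compute (M1 - M2) / SE(diff) for two independent samples."""
    n_a, n_b = len(group_a), len(group_b)
    # Assumption: estimate the population variance by pooling both samples.
    pooled_var = (
        (n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)
    ) / (n_a + n_b - 2)
    # Standard error of the difference between the two means.
    se_diff = sqrt(pooled_var / n_a + pooled_var / n_b)
    return (mean(group_a) - mean(group_b)) / se_diff

# Hypothetical 1-to-5 ratings of liking for ice cream.
men = [4, 5, 3, 4, 2, 5, 4]
women = [3, 4, 4, 2, 3, 5, 3]
t = t_statistic(men, women)
print(f"t = {t:.3f}")
```

A value of t close to zero means the group means are close relative to the spread of the data. Whether it is far enough from zero to matter is decided in Step 5.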

Step 5. Identify Acceptance and Rejection Regions

The final part of the test is to see if your test statistic is significant: in other words, whether or not you are going to reject your null hypothesis. You first need to decide what level of significance is required. This is the probability you are prepared to accept of rejecting the null hypothesis when it is in fact true.

The significance level is usually set at either 5% or 1%, meaning that, if the null hypothesis were true, a result as extreme as yours would arise by chance no more than 5% or 1% of the time.

NOTE: a significant result is sometimes expressed as p < 0.05 or p < 0.01, where p (the p-value) is the probability of obtaining a result at least as extreme as yours if the null hypothesis is true.

For more about significance, you may like to read our page on Significance and Confidence Intervals .

The graph below shows a reference distribution (this one could be either the normal or the t-distribution) with the acceptance and rejection regions marked. It also shows the critical values. µ is the mean. For more about this, you may like to read our page on Statistical Distributions.

The critical values are identified from published statistical tables for your reference distribution, which are available for different levels of significance.

If your test statistic falls within either of the two rejection regions (that is, it is greater than the higher critical value, or less than the lower one), you will reject the null hypothesis. You can therefore accept your alternative hypothesis.
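Instead of looking up published tables, the critical values can also be computed. The sketch below assumes a two-tailed test against the standard normal distribution and uses Python's built-in `statistics.NormalDist`. The function names are hypothetical, and for a t-distribution you would need a statistics package or tables instead.

```python
from statistics import NormalDist

def critical_values(alpha):
    """Lower and upper critical values for a two-tailed test (standard normal)."""
    upper = NormalDist().inv_cdf(1 - alpha / 2)
    return -upper, upper

def in_rejection_region(test_statistic, alpha=0.05):
    """True if the statistic lies beyond either critical value."""
    lower, upper = critical_values(alpha)
    return test_statistic < lower or test_statistic > upper

for alpha in (0.05, 0.01):
    lower, upper = critical_values(alpha)
    print(f"alpha={alpha}: reject if statistic < {lower:.3f} or > {upper:.3f}")

print(in_rejection_region(2.3))   # beyond the upper critical value at 5%
print(in_rejection_region(-1.2))  # inside the acceptance region at 5%
```

Moving from 5% to 1% pushes the critical values further from the mean, so a more extreme test statistic is needed before the null hypothesis is rejected.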

Step 6. Draw Conclusions and Inferences

The final step is to draw conclusions.

If your test statistic fell within the rejection region, and you have rejected the null hypothesis, you can therefore conclude that there is a gender difference in liking for ice cream, using the example above.

Types of Error

There are four possible outcomes from statistical testing (see table):

The groups are different, and you conclude that they are different (correct result)

The groups are different, but you conclude that they are not (Type II error)

The groups are the same, but you conclude that they are different (Type I error)

The groups are the same, and you conclude that they are the same (correct result).

Type I errors are generally considered more important than Type II, because they have the potential to change the status quo.

For example, if you wrongly conclude that a new medical treatment is effective, doctors are likely to move to providing that treatment. Patients may receive the treatment instead of an alternative that could have fewer side effects, and pharmaceutical companies may stop looking for an alternative treatment.


There are statistical software packages available that will carry out all these tests for you. However, if you have never studied statistics, and you’re not very confident about what you’re doing, you are probably best off discussing it with a statistician or consulting a detailed statistical textbook.

Poorly executed statistical analysis can invalidate very good research. It is much better to find someone to help you. However, this page will help you to understand your friendly statistician!


8.1: Steps in Hypothesis Testing


CHAPTER OBJECTIVES

By the end of this chapter, the student should be able to:

- Differentiate between Type I and Type II Errors

- Describe hypothesis testing in general and in practice

- Conduct and interpret hypothesis tests for a single population mean, population standard deviation known.

- Conduct and interpret hypothesis tests for a single population mean, population standard deviation unknown.

- Conduct and interpret hypothesis tests for a single population proportion

One job of a statistician is to make statistical inferences about populations based on samples taken from the population. Confidence intervals are one way to estimate a population parameter. Another way to make a statistical inference is to make a decision about a parameter. For instance, a car dealer advertises that its new small truck gets 35 miles per gallon, on average. A tutoring service claims that its method of tutoring helps 90% of its students get an A or a B. A company says that women managers in their company earn an average of $60,000 per year.

A statistician will make a decision about these claims. This process is called "hypothesis testing." A hypothesis test involves collecting data from a sample and evaluating the data. Then, the statistician makes a decision as to whether or not there is sufficient evidence, based upon analysis of the data, to reject the null hypothesis. In this chapter, you will conduct hypothesis tests on single means and single proportions. You will also learn about the errors associated with these tests.

Hypothesis testing consists of two contradictory hypotheses or statements, a decision based on the data, and a conclusion. To perform a hypothesis test, a statistician will:

- Set up two contradictory hypotheses.

- Collect sample data (in homework problems, the data or summary statistics will be given to you).

- Determine the correct distribution to perform the hypothesis test.

- Analyze sample data by performing the calculations that ultimately will allow you to reject or decline to reject the null hypothesis.

- Make a decision and write a meaningful conclusion.
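The five steps can be traced in a short script. The sketch below uses the truck dealer's claim of 35 miles per gallon as the null hypothesis. The sample readings, the "known" population standard deviation of 1.5, and the 5% significance level are all invented assumptions for illustration.

```python
from math import sqrt
from statistics import NormalDist, mean

# Step 1: two contradictory hypotheses.
#   H0: the mean is 35 mpg (the dealer's claim).  Ha: the mean is not 35 mpg.
claimed_mean = 35.0

# Step 2: sample data (hypothetical fuel-economy readings, in mpg).
sample = [33.1, 34.8, 32.5, 35.2, 33.9, 34.1, 32.8, 34.4, 33.5, 34.7]

# Step 3: choose the distribution. Assuming the population standard
# deviation is known, the normal distribution applies.
population_sd = 1.5  # hypothetical value

# Step 4: analyze the data: test statistic and two-tailed p-value.
z = (mean(sample) - claimed_mean) / (population_sd / sqrt(len(sample)))
p_value = 2 * (1 - NormalDist().cdf(abs(z)))

# Step 5: make a decision and state a conclusion.
alpha = 0.05
decision = "reject H0" if p_value <= alpha else "do not reject H0"
print(f"z = {z:.3f}, p-value = {p_value:.4f}: {decision}")
```

With these made-up numbers the sample mean is far enough below 35 that the claim would be rejected at the 5% level. A meaningful conclusion would then say so in words: the sample gives sufficient evidence that the average is not 35 miles per gallon.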

To do the hypothesis test homework problems for this chapter and later chapters, make copies of the appropriate special solution sheets. See Appendix E .

- The desired confidence level.

- Information that is known about the distribution (for example, known standard deviation).

- The sample and its size.


Hypothesis Testing – A Deep Dive into Hypothesis Testing, The Backbone of Statistical Inference

- September 21, 2023

Explore the intricacies of hypothesis testing, a cornerstone of statistical analysis. Dive into methods, interpretations, and applications for making data-driven decisions.

In this Blog post we will learn:

- What is Hypothesis Testing?

- Steps in Hypothesis Testing

  - 2.1. Set up Hypotheses: Null and Alternative

  - 2.2. Choose a Significance Level (α)

  - 2.3. Calculate a test statistic and P-Value

  - 2.4. Make a Decision

- Example : Testing a new drug.

- Example in python

1. What is Hypothesis Testing?

In simple terms, hypothesis testing is a method used to make decisions or inferences about population parameters based on sample data. Imagine being handed a die and asked if it’s biased. By rolling it a few times and analyzing the outcomes, you’d be engaging in the essence of hypothesis testing.

Think of hypothesis testing as the scientific method of the statistics world. Suppose you hear claims like “This new drug works wonders!” or “Our new website design boosts sales.” How do you know if these statements hold water? Enter hypothesis testing.

2. Steps in Hypothesis Testing

- Set up Hypotheses : Begin with a null hypothesis (H0) and an alternative hypothesis (H1).

- Choose a Significance Level (α) : Typically 0.05, this is the probability of rejecting the null hypothesis when it’s actually true. Think of it as the chance of accusing an innocent person.

- Calculate Test statistic and P-Value : Gather evidence (data) and calculate a test statistic.

- p-value : This is the probability of observing data at least as extreme as yours, given that the null hypothesis is true. A small p-value (typically ≤ 0.05) suggests the data is inconsistent with the null hypothesis.

- Decision Rule : If the p-value is less than or equal to α, you reject the null hypothesis in favor of the alternative.

2.1. Set up Hypotheses: Null and Alternative

Before diving into testing, we must formulate hypotheses. The null hypothesis (H0) represents the default assumption, while the alternative hypothesis (H1) challenges it.

For instance, in drug testing, H0: “The new drug is no better than the existing one,” H1: “The new drug is superior.”

2.2. Choose a Significance Level (α)

You collect and analyze data to test the H0 and H1 hypotheses. Based on your analysis, you decide whether to reject the null hypothesis in favor of the alternative, or fail to reject the null hypothesis.

The significance level, often denoted by $α$, represents the probability of rejecting the null hypothesis when it is actually true.

In other words, it’s the risk you’re willing to take of making a Type I error (false positive).

Type I Error (False Positive) :

- Symbolized by the Greek letter alpha (α).

- Occurs when you incorrectly reject a true null hypothesis. In other words, you conclude that there is an effect or difference when, in reality, there isn’t.

- The probability of making a Type I error is denoted by the significance level of a test. Commonly, tests are conducted at the 0.05 significance level, which means there’s a 5% chance of making a Type I error when the null hypothesis is true.

- Commonly used significance levels are 0.01, 0.05, and 0.10, but the choice depends on the context of the study and the level of risk one is willing to accept.

Example : If a drug is not effective (truth), but a clinical trial incorrectly concludes that it is effective (based on the sample data), then a Type I error has occurred.
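One way to see what the 5% means is to simulate many experiments in which the null hypothesis really is true and count how often a test rejects it anyway. The sketch below is an illustration under assumptions: it draws samples from a population with mean 0 and known standard deviation 1, uses a two-tailed z-test, and fixes a random seed so the run is repeatable. The function name and all parameter values are hypothetical.

```python
import random
from math import sqrt
from statistics import NormalDist

def type_i_error_rate(alpha=0.05, trials=2000, n=30, seed=1):
    """Fraction of simulated experiments that wrongly reject a true H0."""
    rng = random.Random(seed)
    critical = NormalDist().inv_cdf(1 - alpha / 2)
    false_positives = 0
    for _ in range(trials):
        # H0 is true by construction: the population mean really is 0.
        sample = [rng.gauss(0, 1) for _ in range(n)]
        sample_mean = sum(sample) / n
        z = sample_mean / (1 / sqrt(n))  # (sample mean - 0) / standard error
        if abs(z) > critical:
            false_positives += 1
    return false_positives / trials

rate = type_i_error_rate()
print(f"Observed Type I error rate: {rate:.3f}")
```

The observed rate should land close to the chosen significance level, because α is by definition the long-run share of true null hypotheses that the test rejects.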

Type II Error (False Negative) :

- Symbolized by the Greek letter beta (β).

- Occurs when you fail to reject a false null hypothesis. This means you conclude there is no effect or difference when, in reality, there is.

- The probability of making a Type II error is denoted by β. The power of a test (1 – β) represents the probability of correctly rejecting a false null hypothesis.

Example : If a drug is effective (truth), but a clinical trial incorrectly concludes that it is not effective (based on the sample data), then a Type II error has occurred.

Balancing the Errors :

In practice, there’s a trade-off between Type I and Type II errors. Reducing the risk of one typically increases the risk of the other. For example, if you want to decrease the probability of a Type I error (by setting a lower significance level), you might increase the probability of a Type II error unless you compensate by collecting more data or making other adjustments.
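The trade-off can be put into numbers for a simple case. The sketch below assumes a one-sided z-test on a mean with known standard deviation, where the power is 1 minus the normal CDF evaluated at the critical value minus the standardized true effect. The effect size, standard deviation, and sample sizes are invented for illustration, and the function name is hypothetical.

```python
from math import sqrt
from statistics import NormalDist

def power_one_sided_z(alpha, effect, sd, n):
    """Power (1 - beta) of a one-sided z-test when the true mean shift is `effect`."""
    std_normal = NormalDist()
    critical = std_normal.inv_cdf(1 - alpha)  # rejection threshold under H0
    shift = effect * sqrt(n) / sd             # true effect in standard-error units
    return 1 - std_normal.cdf(critical - shift)

for alpha in (0.10, 0.05, 0.01):
    for n in (25, 100):
        beta = 1 - power_one_sided_z(alpha, effect=0.5, sd=2.0, n=n)
        print(f"alpha={alpha:.2f}, n={n:3d}: beta={beta:.3f}")
```

Reading down the output for a fixed sample size, a stricter α gives a larger β. Reading across sample sizes, collecting more data brings β back down, which is the compensation described above.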

It’s essential to understand the consequences of both types of errors in any given context. In some situations, a Type I error might be more severe, while in others, a Type II error might be of greater concern. This understanding guides researchers in designing their experiments and choosing appropriate significance levels.

2.3. Calculate a test statistic and P-Value

Test statistic : A test statistic is a single number that helps us understand how far our sample data is from what we’d expect under a null hypothesis (a basic assumption we’re trying to test against). Generally, the larger the test statistic, the more evidence we have against our null hypothesis. It helps us decide whether the differences we observe in our data are due to random chance or if there’s an actual effect.

P-value : The P-value tells us how likely we would be to get our observed results (or something more extreme) if the null hypothesis were true. It’s a value between 0 and 1.

- A smaller P-value (typically below 0.05) means that the observation is rare under the null hypothesis, so we might reject the null hypothesis.

- A larger P-value suggests that what we observed could easily happen by random chance, so we might not reject the null hypothesis.
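As a minimal sketch of both quantities, the snippet below runs a two-sided one-sample z-test. It assumes the population standard deviation is known, which is rarely true in practice; the sample values, the null mean of 3.0, and the function name are made up for illustration.

```python
from statistics import NormalDist, mean


def z_test(sample, mu0, sigma):
    """Two-sided one-sample z-test with a known population standard deviation."""
    n = len(sample)
    # Test statistic: distance of the sample mean from mu0, in standard errors.
    z = (mean(sample) - mu0) / (sigma / n ** 0.5)
    # P-value: probability of a statistic at least this extreme if H0 is true.
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical measurements; H0 says the true mean is 3.0.
sample = [2.1, 2.9, 2.4, 3.3, 1.8, 2.6, 2.2, 3.0, 2.5]
z, p_value = z_test(sample, mu0=3.0, sigma=1.0)
print(f"z = {z:.2f}, p-value = {p_value:.3f}")
```

The further the sample mean sits from the null value relative to its standard error, the larger the statistic in absolute value and the smaller the P-value.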

2.4. Make a Decision

Relationship between $\alpha$ and P-Value

When conducting a hypothesis test:

We first choose a significance level $\alpha$ (commonly 0.05) before looking at the data.

We then calculate the test statistic from our sample data and, from it, the p-value.

Finally, we compare the p-value to our chosen $\alpha$:

- If $p\text{-value} \le \alpha$: We reject the null hypothesis in favor of the alternative hypothesis. The result is said to be statistically significant.

- If $p\text{-value} > \alpha$: We fail to reject the null hypothesis. There isn’t enough statistical evidence to support the alternative hypothesis.

3. Example : Testing a new drug.

Imagine you are investigating whether a new drug relieves headaches faster than a placebo.

Setting Up the Experiment : You gather 100 people who suffer from headaches. Half of them (50 people) are given the new drug (let’s call this the ‘Drug Group’), and the other half are given a sugar pill, which doesn’t contain any medication (the ‘Placebo Group’).

- Set up Hypotheses : Before starting, you make a prediction:

- Null Hypothesis (H0): The new drug has no effect. Any difference in healing time between the two groups is just due to random chance.

- Alternative Hypothesis (H1): The new drug does have an effect. The difference in healing time between the two groups is significant and not just by chance.

Calculate Test statistic and P-Value : After the experiment, you analyze the data. The “test statistic” is a number that helps you understand the difference between the two groups in terms of standard units.

For instance, let’s say:

- The average healing time in the Drug Group is 2 hours.

- The average healing time in the Placebo Group is 3 hours.

The test statistic helps you understand how significant this 1-hour difference is. If the groups are large and the spread of healing times in each group is small, then this difference might be significant. But if there’s a huge variation in healing times, the 1-hour difference might not be so special.

Imagine the P-value as answering this question: “If the new drug had NO real effect, what’s the probability that I’d see a difference as extreme (or more extreme) as the one I found, just by random chance?”

For instance:

- P-value of 0.01 means there’s a 1% chance that the observed difference (or a more extreme difference) would occur if the drug had no effect. That’s pretty rare, so we might consider the drug effective.

- P-value of 0.5 means there’s a 50% chance you’d see a difference at least this large if the drug had no effect. That’s pretty high, so we might not be convinced the drug is doing much.

- If the P-value is less than $\alpha$ = 0.05: the results are “statistically significant,” and we reject the null hypothesis , concluding that the new drug has an effect.

- If the P-value is greater than $\alpha$ = 0.05: the results are not statistically significant, and we fail to reject the null hypothesis , remaining unsure whether the drug has a genuine effect.

4. Example in Python

For simplicity, let’s say we’re using a t-test (common for comparing means). Let’s dive into Python:
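One way this step might look is sketched below. The healing times are simulated (made-up numbers, not trial data), the helper names are illustrative, and the choice of Welch's variant of the two-sample t-test is an assumption, since the text only says "t-test". The sketch uses only the standard library and obtains the p-value by numerically integrating the t density; a statistics library such as SciPy provides the same test ready-made through `scipy.stats.ttest_ind`.

```python
import math
import random
import statistics


def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    log_norm = math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
    return math.exp(log_norm) / math.sqrt(df * math.pi) * (1 + x * x / df) ** (-(df + 1) / 2)


def two_sided_p(t_stat, df, steps=2000):
    """P(|T| >= |t_stat|), using Simpson's rule on [0, |t_stat|] (steps must be even)."""
    upper = abs(t_stat)
    if upper == 0:
        return 1.0
    h = upper / steps
    total = t_pdf(0, df) + t_pdf(upper, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    area = total * h / 3  # P(0 <= T <= |t_stat|)
    return max(0.0, 1 - 2 * area)


def welch_t_test(a, b):
    """Welch's two-sample t-test, which does not assume equal variances."""
    va = statistics.variance(a) / len(a)
    vb = statistics.variance(b) / len(b)
    t_stat = (statistics.mean(a) - statistics.mean(b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t_stat, df, two_sided_p(t_stat, df)


# Simulated healing times in hours (hypothetical data for illustration only).
rng = random.Random(42)
drug_group = [rng.gauss(2.0, 1.0) for _ in range(50)]
placebo_group = [rng.gauss(3.0, 1.0) for _ in range(50)]

alpha = 0.05
t_stat, df, p_value = welch_t_test(drug_group, placebo_group)
print(f"t = {t_stat:.2f}, df = {df:.1f}, p-value = {p_value:.4g}")
if p_value <= alpha:
    print("Statistically significant: reject the null hypothesis.")
else:
    print("Not statistically significant: fail to reject the null hypothesis.")
```

A negative t statistic here means the Drug Group's average healing time is shorter than the Placebo Group's.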

Making a Decision : If the p-value is below 0.05, we’d say, “The results are statistically significant! The drug seems to have an effect!” If not, we’d say, “Looks like the drug isn’t as miraculous as we thought.”

5. Conclusion

Hypothesis testing is an indispensable tool in data science, allowing us to make data-driven decisions with confidence. By understanding its principles, conducting tests properly, and considering real-world applications, you can harness the power of hypothesis testing to unlock valuable insights from your data.


© Machinelearningplus. All rights reserved.


Value Hypothesis & Growth Hypothesis: lean startup validation

Posted on September 16, 2021

You’ve come up with a fantastic idea for a startup, but you’re not sure whether it’s viable. What do you do next? It’s essential to test your idea before you start developing it. 95% of new products fail in their first year of launch. Or to put it another way, only one in twenty product ideas succeeds. In this article, we’ll look at why it’s so important to validate your startup idea before you spend a lot of time and money developing it. That’s where the Lean Startup validation process comes in, alongside the growth hypothesis and the value hypothesis. We’ll also look at the questions that you need to ask.

Table of contents

- The lean startup validation methodology

- The benefits of validating your startup idea

- The value hypothesis

- The growth hypothesis

- Recommendations and questions for creating and running a good hypothesis

- In conclusion: take the time to validate your product

What does it mean to validate a lean startup?

Validating your lean startup idea may sound like a complicated process, but it’s a lot simpler than you may think. It may be the case that you were already planning on carrying out some of the work.

Essentially, validating your startup means checking whether your idea solves a problem that your prospective customers have. You can do this by creating hypotheses and then carrying out research to see if these hypotheses are true or false.

The best startups have always been about finding a gap in the market and offering a product or service that solves the problem. For example, take Airbnb . Before Airbnb launched, people only had the option of staying in hotels. Airbnb opened up the hospitality industry, offering cheaper accommodation to people who could not afford to stay in expensive hotels.

“Don’t be in a rush to get big. Be in a rush to have a great product” – Eric Ries

Validation is a crucial part of the lean startup methodology, which was devised by entrepreneur Eric Ries. The lean startup methodology is all about optimizing the amount of time that is needed to ensure a product or service is viable.

Lean Startup Validation is a critical part of the lean startup process as it helps check that an idea is likely to succeed before time is spent developing the final product.

As an example of a failed idea where more validation could have helped, take Google Glass . It sounded like a good idea on paper, but the technology failed spectacularly. Customer research would have shown that $1,500 was too much money, that people were worried about health and safety, and most importantly… there was no apparent benefit to the product.

The key benefit of validating your lean startup idea is to make sure that the idea you have is a viable one before you start using resources to build and promote it.

There are other less obvious benefits too:

- It can help you fine-tune your idea. You may have wanted your idea to go in a particular direction, but user research may show that pivoting is the best thing to do.

- It can help you get funding. Investors may be more likely to invest in your startup idea if you have evidence that your idea is a viable one.

The value hypothesis and the growth hypothesis: two ways to validate your idea

“To grow a successful business, validate your idea with customers” – Chad Boyda

In his book ‘The Lean Startup’, Eric Ries identifies two different types of hypotheses that entrepreneurs can use to validate their startup idea – the growth hypothesis and the value hypothesis.

Let’s look at the two different ideas, how they compare, and how you can use them to see if your startup idea could work.

The value hypothesis tests whether your product or service provides customers with enough value and most importantly, whether they are prepared to pay for this value.

For example, let’s say that you want to develop a mobile app to help dog owners find people to help walk their dogs while they are at work. Before you start spending serious time and money developing the app, you’ll want to see if it is something of interest to your target audience.

Your value hypothesis could say, “we believe that 60% of dog owners aged between 30 and 40 would be willing to pay upwards of €10 a month for this service.”

You then find dog owners in this age range and ask them the question. You’re pleased to see that 75% say that they would be willing to pay this amount! Your hypothesis is supported. This means that you can focus your app and your advertising on this target audience.

If the data comes back and says your prospective target audience isn’t willing to pay, then it means you have to rethink and reframe your app before running another hypothesis. For example, you may want to focus on another demographic, or look at reducing the price of the subscription.
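How convincing a 75% result is depends on how many people you asked. The sketch below checks whether a survey gives strong evidence that the true share exceeds the 60% in the hypothesis, using an exact one-sided binomial test. The survey sizes, the 0.05 cut-off, and the function name are assumptions for illustration; the example above does not say how many dog owners were surveyed.

```python
from math import comb


def binomial_tail(successes, n, p):
    """P(X >= successes) when X ~ Binomial(n, p)."""
    return sum(comb(n, k) * p ** k * (1 - p) ** (n - k) for k in range(successes, n + 1))


threshold = 0.60  # share stated in the value hypothesis
alpha = 0.05      # assumed significance level

# Two hypothetical surveys; in both, 75% of respondents said yes.
for yes, surveyed in [(15, 20), (75, 100)]:
    # Chance of seeing at least this many "yes" answers if the true share were 60%.
    p_value = binomial_tail(yes, surveyed, threshold)
    verdict = "clearly above 60%" if p_value <= alpha else "inconclusive"
    print(f"{yes}/{surveyed} said yes: p-value = {p_value:.4f} -> {verdict}")
```

With only 20 respondents, 75% could plausibly arise from a true share of 60%, whereas the same percentage from 100 respondents is much stronger evidence. Stated willingness to pay is still only a proxy for actual purchases.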

Shoe retailer Zappos used a value hypothesis when starting out. Founder Nick Swinmurn went to local shoe stores, taking photos of the shoes and posting them on the Zappos website. Then, if customers bought the shoes, he’d buy them from the store and send them out to them. This allowed him to see if there was interest in his website, without having to spend lots of money on stock.

The growth hypothesis tests how your customers will find your product or service and shows how your potential product could grow over the years.

Let’s go back to the dog-walking app we talked about earlier. You think that 80% of app downloads will come from word-of-mouth recommendations.

You create a minimum viable product ( MVP for short ), a basic version of your app that may not contain all of the features just yet. You then upload it to the app stores and wait for people to start downloading it. When you have a baseline of customers, you send them an email asking them how they heard of your app.

When the feedback comes back, it shows that only 30% of downloads have come from word-of-mouth recommendations. This means that your growth hypothesis has not been successful in this scenario.

Does this mean that your idea is a bad one? Not necessarily. It just means that you may have to look at other ways of promoting your app. If you are relying on word-of-mouth recommendations to advertise it, then it could potentially fail.

Dropbox used growth hypotheses to its advantage when creating its software. The file-storage company constantly tweaked its website, running A/B tests to see which features and changes were most popular with customers, using them in the final product.

Like any good science experiment, there are things that you need to bear in mind when running your hypotheses. Here are our recommendations:

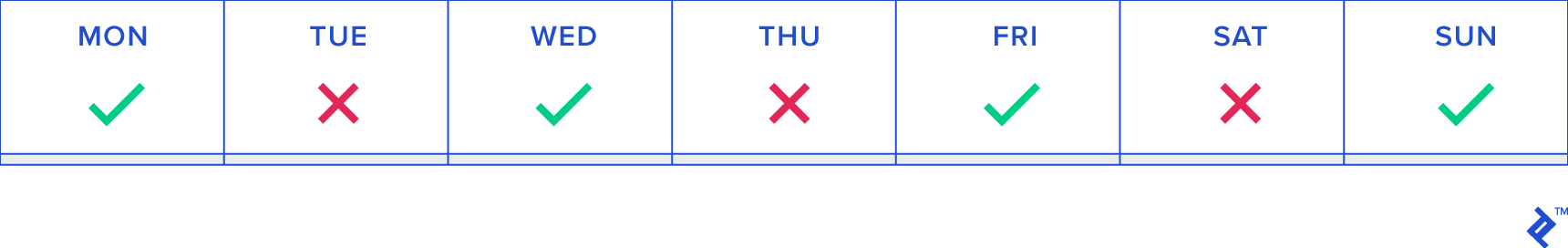

- You may be wondering which type of hypothesis you should carry out first – a growth hypothesis or a value hypothesis. Eric Ries recommends carrying out a value hypothesis first, as it makes sense to see if there is interest before seeing how many people are interested. However, the precise order may depend on the type of product or service you want to sell;

- You will probably need to run multiple hypotheses to validate your product or service. If you do this, be sure to only test one hypothesis at a time. If you end up testing multiple ones in one go, you may not be sure which hypothesis has had which result;

- Test your most critical assumption first – this is the one that you are most worried about and that could affect your idea the most. It may be that solving this issue makes your product or service a viable one;