Prediction – Definition, Types and Example

Definition:

Prediction is the process of making an educated guess or estimation about a future event or outcome based on available information and data. It involves analyzing past patterns and trends, as well as current conditions, to forecast what may happen in the future.

Types of Prediction

Types of Prediction are as follows:

Point Prediction

This type of prediction provides a specific estimate of the future outcome. For example, predicting that a stock will reach a certain price at a particular time.

Interval Prediction

This type of prediction provides a range of possible outcomes, usually together with a stated level of confidence. For example, predicting with 90% confidence that a hurricane will make landfall somewhere within a certain region during the next week.
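The difference between a point and an interval prediction can be illustrated with a short sketch. It assumes the past observations are independent and roughly normally distributed, and it uses made-up temperature values; the function name `point_and_interval` is hypothetical and chosen only for this illustration.

```python
import statistics

def point_and_interval(history, z=1.645):
    """Return a point prediction (the sample mean) and an approximate
    90% prediction interval for the next observation.

    Assumes independent, roughly normal observations; z=1.645 is the
    two-sided 90% quantile of the standard normal distribution.
    """
    mean = statistics.mean(history)
    sd = statistics.stdev(history)
    # The 1/n term reflects the uncertainty in the estimated mean itself.
    half_width = z * sd * (1 + 1 / len(history)) ** 0.5
    return mean, (mean - half_width, mean + half_width)

# Hypothetical daily high temperatures in degrees Celsius.
temps = [21.0, 23.5, 22.0, 24.0, 22.5, 23.0, 21.5]
point, (low, high) = point_and_interval(temps)
print(f"point prediction: {point:.1f}")
print(f"90% interval: {low:.1f} to {high:.1f}")
```

With only a handful of observations, a t quantile would be a more careful choice than the normal quantile used here.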

Categorical Prediction

This type of prediction assigns an outcome to one of a set of discrete categories rather than to a numerical value. For example, predicting whether or not a person will develop a certain disease, or whether a sports team will win, draw, or lose a game.

Long-term Prediction

This type of prediction involves forecasting events or trends that are expected to occur over a longer period, such as predicting climate change or population growth.

Short-term Prediction

This type of prediction involves forecasting events or trends that are expected to occur within a shorter period, such as predicting the weather or the stock market performance for the next day.

Qualitative Prediction

This type of prediction involves making subjective judgments or expert opinions based on non-quantifiable information, such as predicting the impact of a new technology on society.

Quantitative Prediction

This type of prediction involves using mathematical models and statistical methods to forecast future events or trends, such as predicting consumer demand for a new product.

Probabilistic Prediction

This type of prediction involves estimating the probability or likelihood of a future event or outcome occurring. For example, predicting the probability of a person surviving a medical procedure.

Deterministic Prediction

This type of prediction involves providing a definite outcome that follows from known information and fixed rules, with no random component. For example, predicting the date of a solar eclipse from orbital mechanics or the result of evaluating a mathematical equation.

Black box Prediction

This type of prediction involves using machine learning algorithms or other complex models to make predictions without necessarily understanding how the model arrived at its conclusion. This type of prediction is often used in applications such as fraud detection or image recognition.

Prediction Methods

Prediction Methods are as follows:

Statistical Methods

These methods involve analyzing historical data and using statistical models to identify patterns and trends that can be used to make predictions about future events or outcomes.

Machine Learning Methods

These methods involve training algorithms to learn patterns and relationships in data and using these models to make predictions about new data.

Expert Judgment

This method involves relying on the knowledge and expertise of individuals who have specialized knowledge in a particular area to make predictions.

Simulation Methods

These methods involve creating computer models that simulate real-world situations to predict outcomes. For example, simulating the spread of a virus in a population to predict the impact of different intervention strategies.

Rule-based Methods

These methods involve using a set of rules or decision trees to make predictions based on specific criteria. For example, using a set of rules to predict the likelihood of a loan being approved based on a person’s credit history and income.

Time-series Forecasting

This method involves analyzing historical data to identify patterns and trends over time and using these patterns to make predictions about future values in a series, such as predicting stock prices or demand for a product.

Neural Networks

This is a type of machine learning method that involves building networks of interconnected nodes that can learn to make predictions based on input data.

Examples of Prediction

There are numerous examples of predictions made in various fields, some of which include:

- Weather forecasting: Predicting the temperature, precipitation, and other weather conditions for a particular location and time.

- Stock market prediction: Predicting the performance of stocks, bonds, and other financial instruments based on market trends and other economic factors.

- Sports prediction: Predicting the outcomes of sporting events such as football games, horse races, and tennis matches.

- Healthcare prediction: Predicting the likelihood of a patient developing a particular disease or the effectiveness of a particular treatment.

- Natural disaster prediction: Predicting the occurrence and intensity of natural disasters such as hurricanes, earthquakes, and floods.

- Traffic prediction: Predicting traffic patterns and congestion in urban areas based on historical data and other factors.

- Retail prediction: Predicting consumer demand for products and services based on market trends, customer behavior, and other factors.

- Energy prediction: Predicting energy demand and supply based on historical data, weather patterns, and other factors.

Applications of Prediction

Predictive models and methods have numerous applications across a wide range of fields, some of which include:

- Business and finance: Predicting sales, customer behavior, and market trends to inform business planning and decision-making, and predicting stock prices and other financial market performance.

- Healthcare: Predicting disease diagnosis, treatment outcomes, and drug efficacy to inform patient care and medical research.

- Weather forecasting: Predicting weather patterns and conditions to inform emergency response planning, agriculture, and transportation.

- Transportation: Predicting traffic patterns and congestion to inform route planning and transportation infrastructure development.

- Sports: Predicting the outcomes of sporting events to inform sports betting and game strategy.

- Marketing: Predicting consumer behavior, preferences, and buying habits to inform marketing and advertising strategies.

- Education: Predicting student performance and outcomes to inform academic planning and intervention strategies.

- Energy and utilities: Predicting energy demand and supply to inform energy infrastructure planning and maintenance.

Purpose of Prediction

The purpose of prediction is to make informed decisions and take actions based on expected future outcomes. Predictions are used to estimate the likelihood of future events or outcomes, and to guide decision-making based on those estimates.

In many industries and fields, predictions are an essential tool for optimizing resources, managing risks, and improving outcomes. For example, in finance, stock market predictions are used to inform investment decisions, and in healthcare, disease prediction models are used to identify patients at risk of developing certain conditions and inform treatment decisions.

Predictions are also used to anticipate and prepare for potential future events or outcomes, such as natural disasters, epidemics, or economic downturns. By using predictions to prepare for these scenarios, businesses, governments, and organizations can reduce the impact of such events and improve their resilience.

When to Predict

Here are some common situations where predictions are made:

- Before making a decision: Predictions can be made before making a decision to inform the decision-making process. For example, predicting sales or market trends before launching a new product to help inform marketing and pricing decisions.

- During planning and forecasting: Predictions can be made during planning and forecasting processes to inform resource allocation and strategy development. For example, predicting demand for products or services to inform production and supply chain planning.

- In response to emerging situations: Predictions can be made in response to emerging situations, such as natural disasters, pandemics, or economic changes. For example, predicting the spread of a virus to inform public health interventions.

- To improve performance: Predictions can be made to identify areas for improvement and to optimize performance. For example, predicting equipment failures to inform maintenance schedules and reduce downtime.

Advantages of Prediction

Some of the advantages of prediction include:

- Improved decision-making: Predictions provide valuable insights into future outcomes, helping decision-makers to make more informed and effective decisions.

- Risk management: Predictions can help identify and manage risks by providing estimates of the likelihood and potential impact of future events.

- Resource optimization: Predictions can inform resource allocation and optimization, allowing businesses and organizations to use their resources more efficiently and effectively.

- Cost savings: Predictions can help identify opportunities to reduce costs and increase efficiency by identifying areas for improvement.

- Competitive advantage: Predictions can give businesses and organizations a competitive advantage by enabling them to anticipate market trends and respond quickly to changes.

- Improved outcomes: Predictions can lead to improved outcomes, whether in healthcare, finance, or other fields, by helping to identify high-risk individuals or optimizing treatment plans.

- Planning and forecasting: Predictions can inform planning and forecasting processes, enabling businesses and organizations to anticipate and prepare for future events and outcomes.

Disadvantages of Prediction

Here are some of the main disadvantages of prediction:

- Uncertainty: Predictions are inherently uncertain and are based on assumptions and data that may not always be accurate or complete. This can lead to errors and inaccuracies in the prediction.

- Over-reliance on predictions: Over-reliance on predictions can lead to complacency and a failure to consider other important factors or to adapt to changing circumstances.

- Ethical concerns: Predictions can raise ethical concerns, particularly when they involve sensitive topics such as healthcare or criminal justice. For example, using predictions to make decisions about medical treatment or criminal sentencing may be seen as unfair or discriminatory.

- Limited data availability: Predictions are only as good as the data that is available to support them. In some cases, there may be limited or incomplete data available, which can make it difficult to develop accurate predictions.

- Bias: Predictions may be biased if the data used to develop them is biased or if the algorithms used to generate the predictions have inherent biases.

- Unforeseen events: Predictions may not account for unforeseen events that can impact the outcome being predicted. For example, a natural disaster or other unexpected event could significantly alter the outcome being predicted.

About the author

Muhammad Hassan

Researcher, Academic Writer, Web developer

A guide to systematic review and meta-analysis of prediction model performance

- Thomas P A Debray , assistant professor 1 2 ,

- Johanna A A G Damen , PhD fellow 1 2 ,

- Kym I E Snell , research fellow 3 ,

- Joie Ensor , research fellow 3 ,

- Lotty Hooft , associate professor 1 2 ,

- Johannes B Reitsma , associate professor 1 2 ,

- Richard D Riley , professor 3 ,

- Karel G M Moons , professor 1 2

- 1 Cochrane Netherlands, University Medical Center Utrecht, PO Box 85500 Str 6.131, 3508 GA Utrecht, Netherlands

- 2 Julius Center for Health Sciences and Primary Care, University Medical Center Utrecht, PO Box 85500 Str 6.131, 3508 GA Utrecht, Netherlands

- 3 Research Institute for Primary Care and Health Sciences, Keele University, Staffordshire, UK

- Correspondence to: T P A Debray T.Debray{at}umcutrecht.nl

- Accepted 25 November 2016

Validation of prediction models is highly recommended and increasingly common in the literature. A systematic review of validation studies is therefore helpful, with meta-analysis needed to summarise the predictive performance of the model being validated across different settings and populations. This article provides guidance for researchers systematically reviewing and meta-analysing the existing evidence on a specific prediction model, discusses good practice when quantitatively summarising the predictive performance of the model across studies, and provides recommendations for interpreting meta-analysis estimates of model performance. We present key steps of the meta-analysis and illustrate each step in an example review, by summarising the discrimination and calibration performance of the EuroSCORE for predicting operative mortality in patients undergoing coronary artery bypass grafting.

Summary points

Systematic review of the validation studies of a prediction model might help to identify whether its predictions are sufficiently accurate across different settings and populations

Efforts should be made to restore missing information from validation studies and to harmonise the extracted performance statistics

Heterogeneity should be expected when summarising estimates of a model’s predictive performance

Meta-analysis should primarily be used to investigate variation across validation study results

Systematic reviews and meta-analysis are an important—if not the most important—source of information for evidence based medicine. 1 Traditionally, they aim to summarise the results of publications or reports of primary treatment studies and (more recently) of primary diagnostic test accuracy studies. Compared to therapeutic intervention and diagnostic test accuracy studies, there is limited guidance on the conduct of systematic reviews and meta-analysis of primary prognosis studies.

A common aim of primary prognostic studies concerns the development of so-called prognostic prediction models or indices. These models estimate the individualised probability or risk that a certain condition will occur in the future by combining information from multiple prognostic factors from an individual. Unfortunately, there is often conflicting evidence about the predictive performance of developed prognostic prediction models. For this reason, there is a growing demand for evidence synthesis of (external validation) studies assessing a model’s performance in new individuals. 2 A similar issue relates to diagnostic prediction models, where the validation performance of a model for predicting the risk of a disease being already present is of interest across multiple studies.

Previous guidance papers regarding methods for systematic reviews of predictive modelling studies have addressed the searching, 3 4 5 design, 2 data extraction, and critical appraisal 6 7 of primary studies. In this paper, we provide further guidance for systematic review and for meta-analysis of such models. Systematically reviewing the predictive performance of one or more prediction models is crucial to examine a model’s predictive ability across different study populations, settings, or locations, 8 9 10 11 and to evaluate the need for further adjustments or improvements of a model.

Although systematic reviews of prediction modelling studies are increasingly common, 12 13 14 15 16 17 researchers often refrain from undertaking a quantitative synthesis or meta-analysis of the predictive performance of a specific model. Potential reasons for this pitfall are concerns about the quality of included studies, unavailability of relevant summary statistics due to incomplete reporting, 18 or simply a lack of methodological guidance.

Based on previous publications, we therefore first describe how to define the systematic review question, to identify the relevant prediction modelling studies from the literature 3 5 and to critically appraise the identified studies. 6 7 Additionally, and not yet addressed in previous publications, we provide guidance on which predictive performance measures could be extracted from the primary studies, why they are important, and how to deal with situations when they are missing or poorly reported. The need to extract aggregate results and information from published studies provides unique challenges that are not faced when individual participant data are available, as described recently in The BMJ . 19

We subsequently discuss how to quantitatively summarise the extracted predictive performance estimates and investigate sources of between-study heterogeneity. The different steps are summarised in figure 1, some of which are explained further in different appendices. We illustrate each step of the review using an empirical example study, namely the synthesis of studies validating the predictive performance of the additive European system for cardiac operative risk evaluation (EuroSCORE). From here onwards, we focus on systematic review and meta-analysis of a specific prognostic prediction model. All guidance can, however, similarly be applied to the meta-analysis of diagnostic prediction models. We focus on statistical criteria of good performance (eg, in terms of discrimination and calibration) and highlight other clinically important measures of performance (such as net benefit) in the discussion.

Fig 1 Flowchart for systematically reviewing and, if considered appropriate, meta-analysis of the validation studies of a prediction model. CHARMS=checklist for critical appraisal and data extraction for systematic reviews of prediction modelling studies; PROBAST=prediction model risk of bias assessment tool; PICOTS=population, intervention, comparator, outcome(s), timing, setting; GRADE=grades of recommendation, assessment, development, and evaluation; PRISMA=preferred reporting items for systematic reviews and meta-analyses; TRIPOD=transparent reporting of a multivariable prediction model for individual prognosis or diagnosis

Empirical example

As mentioned earlier, we illustrate our guidance using a published review of studies validating EuroSCORE. 13 This prognostic model aims to predict 30 day mortality in patients undergoing any type of cardiac surgery (appendix 1). It was developed by a European steering group in 1999 using logistic regression in a dataset from 13 302 adult patients undergoing cardiac surgery under cardiopulmonary bypass. The previous review identified 67 articles assessing the performance of the EuroSCORE in patients that were not used for the development of the model (external validation studies). 13 It is important to evaluate whether the predictive performance of EuroSCORE is adequate, because poor performance could eventually lead to poor decision making and thereby affect patient health.

In this paper, we focus on the validation studies that examined the predictive performance of the so-called additive EuroSCORE system in patients undergoing (only) coronary artery bypass grafting (CABG). We included a total of 22 validations, including more than 100 000 patients from 20 external validation studies and from the original development study (appendix 2).

Steps of the systematic review

Formulating the review question and protocol

As for any other type of biomedical research, it is strongly recommended to start with a study protocol describing the rationale, objectives, design, methodology, and statistical considerations of the systematic review. 20 Guidance for formulating a review question for systematic review of prediction models has recently been provided by the CHARMS checklist (checklist for critical appraisal and data extraction for systematic reviews of prediction modelling studies). 6 This checklist addresses a modification (PICOTS) of the PICO system (population, intervention, comparison, and outcome) used in therapeutic studies, and additionally considers timing (that is, at which time point and over what time period the outcome is predicted) and setting (that is, the role or setting of the prognostic model). More information on the different items is provided in box 1 and appendix 3.

Box 1: PICOTS system

The PICOTS system, as presented in the CHARMS checklist, 6 describes key items for framing the review aim, search strategy, and study inclusion and exclusion criteria. The items are explained below in brief, and applied to our case study:

Population—define the target population in which the prediction model will be used. In our case study, the population of interest comprises patients undergoing coronary artery bypass grafting.

Intervention (model)—define the prediction model(s) under review. In the case study, the focus is on the prognostic additive EuroSCORE model.

Comparator—if applicable, one can address competing models for the prognostic model under review. The existence of alternative models was not considered in our case study.

Outcome(s)—define the outcome(s) of interest for which the model is validated. In our case study, the outcome was defined as all cause mortality. Papers validating the EuroSCORE model to predict other outcomes such as cardiovascular mortality were excluded.

Timing—specifically for prognostic models, it is important to define when and over what time period the outcome is predicted. Here, we focus on all cause mortality at 30 days, predicted using preoperative conditions.

Setting—define the intended role or setting of the prognostic model. In the case study, the intended use of the EuroSCORE model was to perform risk stratification in the assessment of cardiac surgical results, such that operative mortality could be used as a valid measure of quality of care.

The formal review question was as follows: to what extent is the additive EuroSCORE able to predict all cause mortality at 30 days in patients undergoing CABG? The question is primarily interested in the predictive performance of the original EuroSCORE, and not how it performs after it has been recalibrated or adjusted in new data.

Formulating the search strategy

When reviewing studies that evaluate the predictive performance of a specific prognostic model, it is important to ensure that the search strategy identifies all publications that validated the model for the target population, setting, or outcomes of interest. To this end, the search strategy should be formulated according to the aforementioned PICOTS items of interest. Often, the yield of search strategies can be improved further by making use of existing filters for identifying prediction modelling studies 3 4 5 or by adding the name or acronym of the model under review. Finally, it might help to inspect studies that cite the original publication in which the model was developed. 15

We used a generic search strategy including the terms “EuroSCORE” and “Euro SCORE” in the title and abstract. The search resulted in 686 articles. Finally, we performed a cross reference check in the retrieved articles, and identified one additional validation study of the additive EuroSCORE.

Critical appraisal

The quality of any meta-analysis of a systematic review strongly depends on the relevance and methodological quality of included studies. For this reason, it is important to evaluate their congruence with the review question, and to assess flaws in the design, conduct, and analysis of each validation study. This practice is also recommended by Cochrane, and can be implemented using the CHARMS checklist, 6 and, in the near future, using the prediction model risk of bias assessment tool (PROBAST). 7

Using the CHARMS checklist and a preliminary version of the PROBAST tool, we critically appraised the risk of bias of each retrieved validation study of the EuroSCORE, as well as of the model development study. Most (n=14) of the 22 validation studies were of low or unclear risk of bias (fig 2). Unfortunately, several validation studies did not report how missing data were handled (n=13) or performed complete case analysis (n=5). We planned a sensitivity analysis that excluded all validation studies with high risk of bias for at least one domain (n=8). 21

Fig 2 Overall judgment for risk of bias of included articles in the case study (predictive performance of the EuroSCORE for all cause mortality at 30 days in patients undergoing coronary artery bypass grafting). Study references listed in appendix 2. Study participants domain=design of the included validation study, and inclusion and exclusion of its participants; predictors domain=definition, timing, and measurement of predictors in the validation study (it also assesses whether predictors have not been measured and were therefore omitted from the model in the validation study); outcome domain=definition, timing, and measurement of predicted outcomes; sample size and missing data domain=number of participants in the validation study and exclusions owing to missing data; statistical analysis domain=validation methods (eg, whether the model was recalibrated before validation). Note that there are two validations presented in Nashef 2002; the same scores apply to both model validations. *Original development study (split sample validation)

Quantitative data extraction and preparation

To allow for quantitative synthesis of the predictive performance of the prediction model under study, the necessary results or performance measures and their precision need to be extracted from each model validation study report. The CHARMS checklist can be used for this guidance. We briefly highlight the two most common statistical measures of predictive performance, discrimination and calibration, and discuss how to deal with unreported or inconsistent reporting of these performance measures.

Discrimination

Discrimination refers to a prediction model’s ability to distinguish between patients developing and not developing the outcome, and is often quantified by the concordance (C) statistic. The C statistic ranges from 0.5 (no discriminative ability) to 1 (perfect discriminative ability). Concordance is most familiar from logistic regression models, where it is also known as the area under the receiver operating characteristic (ROC) curve. Although C statistics are the most commonly reported estimates of prediction model performance, they are sometimes missing, in which case they can be estimated from other reported quantities. Formulas for doing this are presented in appendix 7 (along with their standard errors), and implement the transformations that are needed for conducting the meta-analysis (see meta-analysis section below).
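As an illustration of what the C statistic measures for a binary outcome, the sketch below counts concordant pairs directly from hypothetical predicted risks and outcomes. The logit transformation shown afterwards uses the standard delta method for the standard error; it is offered as one plausible reading of the transformations mentioned above, not as a reproduction of the formulas in appendix 7, which are not shown here. Function names and data are illustrative.

```python
import math

def c_statistic(risks, outcomes):
    """Proportion of (event, non-event) pairs in which the patient with
    the event has the higher predicted risk; ties count as one half."""
    events = [r for r, y in zip(risks, outcomes) if y == 1]
    non_events = [r for r, y in zip(risks, outcomes) if y == 0]
    if not events or not non_events:
        raise ValueError("need at least one event and one non-event")
    concordant = 0.0
    for e in events:
        for n in non_events:
            if e > n:
                concordant += 1.0
            elif e == n:
                concordant += 0.5
    return concordant / (len(events) * len(non_events))

def logit_c(c, se_c):
    """Logit-transform a C statistic. The standard error on the logit
    scale follows from the delta method: se / (c * (1 - c))."""
    return math.log(c / (1 - c)), se_c / (c * (1 - c))

# Hypothetical predicted risks and observed outcomes (1 = died).
risks = [0.02, 0.05, 0.10, 0.20, 0.40]
died = [0, 0, 1, 0, 1]
c = c_statistic(risks, died)
print(f"C statistic: {c:.3f}")
print("logit scale:", logit_c(0.80, 0.02))
```

The pairwise loop is quadratic in the number of patients, which is acceptable for a demonstration but not for large datasets.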

The C statistic of a prediction model can vary substantially across different validation studies. A common cause for heterogeneity in reported C statistics relates to differences between studied populations or study designs. 8 22 In particular, it has been demonstrated that the distribution of patient characteristics (so-called case mix variation) could substantially affect the discrimination of the prediction model, even when the effects of all predictors (that is, regression coefficients) remain correct in the validation study. 22 The more similarity that exists between participants of a validation study (that is, a more homogeneous or narrower case mix), the less discrimination can be achieved by the prediction model.

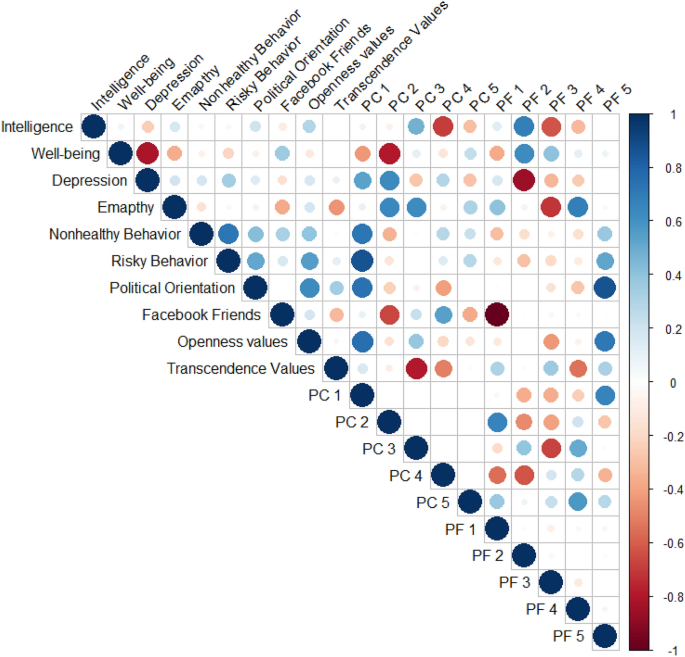

Therefore, it is important to extract information on the case mix variation between patients for each included validation study, 8 such as the standard deviation of the key characteristics of patients, or of the linear predictor (fig 3). The linear predictor is the weighted sum of the values of the predictors in the validation study, where the weights are the regression coefficients of the prediction model under investigation. 23 Heterogeneity in reported C statistics might also appear when predictor effects differ across studies (eg, due to different measurement methods of predictors), or when different definitions (or different derivations) of the C statistic have been used. Recently, several concordance measures have been proposed that make it possible to disentangle different sources of heterogeneity. 22 24 Unfortunately, these measures are currently rarely reported.
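The definition of the linear predictor as a weighted sum can be made concrete with a small sketch. The predictor names and weights below are hypothetical and are not the EuroSCORE coefficients; the point is only to show how the standard deviation of the linear predictor summarises case mix variation within one study.

```python
import statistics

# Hypothetical model weights (illustrative only, not the EuroSCORE).
WEIGHTS = {"age_points": 1.0, "diabetes": 1.5, "prior_surgery": 3.0}

def linear_predictor(patient, weights=WEIGHTS):
    """Weighted sum of a patient's predictor values."""
    return sum(weights[name] * patient[name] for name in weights)

# Hypothetical patients from a single validation study.
patients = [
    {"age_points": 2, "diabetes": 0, "prior_surgery": 0},
    {"age_points": 0, "diabetes": 1, "prior_surgery": 0},
    {"age_points": 3, "diabetes": 1, "prior_surgery": 1},
    {"age_points": 1, "diabetes": 0, "prior_surgery": 0},
]

lp = [linear_predictor(p) for p in patients]
# A larger standard deviation indicates a wider (more heterogeneous) case mix.
sd_lp = statistics.stdev(lp)
print("linear predictors:", lp)
print(f"SD of linear predictor: {sd_lp:.2f}")
```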

Fig 3 Estimation of the standard deviation of the linear predictor as a way to quantify case mix variation within a study

We found that the C statistic of the EuroSCORE was reported in 20 validations (table 1). When measures of uncertainty were not reported, we approximated the standard error of the C statistic (seven studies) using the equations provided in appendix 7 (fig 4). Furthermore, for each validation, we extracted the standard deviation of the age distribution and of the linear predictor of the additive EuroSCORE to help quantify the case mix variation in each study. When such information could not be retrieved, we estimated the standard deviation from reported ranges or histograms (fig 3). 26

Table 1 Details of the 22 validations of the additive EuroSCORE to predict overall mortality at 30 days

Fig 4 Forest plots of extracted performance statistics of the additive EuroSCORE in the case study (to predict all cause mortality at 30 days in patients undergoing coronary artery bypass grafting). Part A shows forest plot of study specific C statistics (all 95% confidence intervals estimated on the logit scale); part B shows forest plot of study specific total O:E ratios (where O=total number of observed deaths and E=total number of expected deaths as predicted by the model; when missing, 95% confidence intervals were approximated on the log scale using the equations from appendix 7). *Performance in the original development study (split sample validation)

Calibration

Calibration refers to a model’s accuracy of predicted risk probabilities, and indicates the extent to which expected outcomes (predicted from the model) and observed outcomes agree. It is preferably reported graphically with expected outcome probabilities plotted against observed outcome frequencies (so-called calibration plots, see appendix 4), often across tenths of predicted risk. 23 Also for calibration, reported performance estimates might vary across different validation studies. Common causes for this are differences in overall prognosis (outcome incidence). These differences might appear because of differences in healthcare quality and delivery, for example, with screening programmes in some countries identifying disease at an earlier stage, and thus apparently improving prognosis in early years compared to other countries. This again emphasises the need to identify studies and participants relevant to the target population, so that a meta-analysis of calibration performance is relevant.
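The numbers behind a calibration plot can be sketched as follows: patients are sorted by predicted risk, split into equally sized groups (tenths by default), and the mean predicted risk in each group is set against the observed proportion of events. This is a minimal illustration with hypothetical data and a hypothetical function name, not the procedure of appendix 4.

```python
def calibration_by_group(risks, outcomes, n_groups=10):
    """Return one (mean predicted risk, observed event proportion, size)
    tuple per group, with groups formed by ranking on predicted risk."""
    pairs = sorted(zip(risks, outcomes))
    n = len(pairs)
    rows = []
    for g in range(n_groups):
        # Integer arithmetic gives groups whose sizes differ by at most one.
        chunk = pairs[g * n // n_groups:(g + 1) * n // n_groups]
        if not chunk:
            continue  # fewer patients than groups
        expected = sum(r for r, _ in chunk) / len(chunk)
        observed = sum(y for _, y in chunk) / len(chunk)
        rows.append((expected, observed, len(chunk)))
    return rows

# Hypothetical predicted risks and outcomes, split into two groups.
rows = calibration_by_group([0.1, 0.3, 0.1, 0.3], [0, 1, 0, 0], n_groups=2)
for expected, observed, size in rows:
    print(f"expected {expected:.2f}  observed {observed:.2f}  n={size}")
```

Points lying close to the diagonal (expected equal to observed) indicate good calibration in that risk group.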

Summarising estimates of calibration performance is challenging because calibration plots are most often not presented, and because studies tend to report different types of summary statistics in calibration. 12 27 Therefore, we propose to extract information on the total number of observed (O) and expected (E) events, which are statistics most likely to be reported or derivable (appendix 7). The total O:E ratio provides a rough indication of the overall model calibration (across the entire range of predicted risks). The total O:E ratio is strongly related to the calibration in the large (appendix 5), but that is rarely reported. The O:E ratio might also be available in subgroups, for example, defined by tenths of predicted risk or by particular groups of interest (eg, ethnic groups, or regions). These O:E ratios could also be extracted, although it is unlikely that all studies will report the same subgroups. Finally, it would be helpful to also extract and summarise estimates of the calibration slope.
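A total O:E ratio and an approximate confidence interval can be computed from three numbers that studies often report: the number of observed events, the number of expected events, and the sample size. The sketch below works on the log scale and uses a delta-method approximation that treats O as binomial and E as fixed; this is an assumption made for illustration and may differ from the equations in appendix 7. The input values are hypothetical.

```python
import math

def total_oe(observed, expected, n):
    """Total O:E ratio, its approximate standard error on the log scale,
    and a 95% confidence interval back-transformed to the ratio scale.

    Assumes O ~ Binomial(n, p) and E fixed, so that
    var(ln O:E) is approximately (1 - O/n) / O.
    """
    oe = observed / expected
    se_log = math.sqrt((1 - observed / n) / observed)
    low = math.exp(math.log(oe) - 1.96 * se_log)
    high = math.exp(math.log(oe) + 1.96 * se_log)
    return oe, se_log, (low, high)

# Hypothetical study: 30 deaths observed, 40 expected, 1000 patients.
oe, se_log, (low, high) = total_oe(30, 40.0, 1000)
print(f"O:E = {oe:.2f} (95% CI {low:.2f} to {high:.2f})")
```

A ratio below 1 means fewer events were observed than the model predicted, that is, the model overestimates risk on average.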

Calibration of the additive EuroSCORE was visually assessed in seven validation studies. Although the total O:E ratio was typically not reported, it could be calculated from other information for 19 of the 22 included validations. For nine of these validation studies, it was also possible to extract the proportion of observed outcomes across different risk strata of the additive EuroSCORE (appendix 8). Measures of uncertainty were often not reported (table 1). We therefore approximated the standard error of the total O:E ratio (19 validation studies) using the equations provided in appendix 7. The forest plot displaying the study specific results is presented in figure 4. The calibration slope was not reported for any validation study and could not be derived using other information.
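The appendix equations are not reproduced here, so the following is only a minimal sketch of one common way to obtain a total O:E ratio and an approximate standard error when a study reports the number of observed events, the number of expected events, and the sample size. It assumes a delta method approximation on the log scale that treats the expected count as fixed; this may differ from the equations the authors used. The function names and the numbers in the usage lines are hypothetical.

```python
import math


def total_oe(observed: int, expected: float, n: int):
    """Total O:E ratio, its natural log, and an approximate SE of the log.

    Assumption: Var(ln(O:E)) ~ (1 - O/n) / O, from the delta method with
    the expected number of events treated as a fixed quantity.
    """
    oe = observed / expected
    p_obs = observed / n
    se_log_oe = math.sqrt((1 - p_obs) / observed)
    return oe, math.log(oe), se_log_oe


def oe_confidence_interval(observed: int, expected: float, n: int, z: float = 1.96):
    """Approximate 95% interval, computed on the log scale and back-transformed."""
    _, log_oe, se = total_oe(observed, expected, n)
    return math.exp(log_oe - z * se), math.exp(log_oe + z * se)


# Hypothetical study: 30 observed deaths, 60 expected, 1000 patients.
print(total_oe(30, 60.0, 1000))
print(oe_confidence_interval(30, 60.0, 1000))
```

Working on the log scale keeps the interval within the positive range and makes it asymmetric around the ratio, which is why the log O:E ratio (rather than the raw ratio) is the natural quantity to carry into a meta-analysis.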

Performance of survival models

Although we focus on discrimination and calibration measures of prediction models with a binary outcome, similar performance measures exist for prediction models with a survival (time to event) outcome. Caution is, however, warranted when extracting reported C statistics because different adaptations have been proposed for use with time to event outcomes. 9 28 29 We therefore recommend carefully evaluating the type of reported C statistic and considering additional measures of model discrimination.

For instance, the D statistic gives the log hazard ratio of a model’s predicted risks dichotomised at the median value, and can be estimated from Harrell’s C statistic when missing. 30 Finally, when summarising the calibration performance of survival models, it is recommended to extract or calculate O:E ratios for particular (same) time points because they are likely to differ across time. When some events remain unobserved, owing to censoring, the total number of events and the observed outcome risk at particular time points should be derived (or approximated) using Kaplan-Meier estimates or Kaplan-Meier curves.
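To make the last step concrete, the sketch below estimates the observed outcome risk at a chosen time point with a basic Kaplan-Meier (product limit) estimator and divides it by the mean predicted risk at that time point to give an O:E ratio. The helper names `km_risk` and `oe_at_time`, and the five-patient dataset, are assumptions for illustration only; real analyses would normally rely on established survival software.

```python
from typing import List


def km_risk(times: List[float], events: List[int], t: float) -> float:
    """Kaplan-Meier estimate of the cumulative event risk by time t.

    events[i] is 1 if the event was observed at times[i], 0 if censored.
    """
    surv = 1.0
    event_times = sorted({ti for ti, ei in zip(times, events) if ei == 1 and ti <= t})
    for u in event_times:
        at_risk = sum(1 for ti in times if ti >= u)  # still under follow-up at u
        d = sum(1 for ti, ei in zip(times, events) if ti == u and ei == 1)
        surv *= 1 - d / at_risk
    return 1 - surv


def oe_at_time(times: List[float], events: List[int],
               predicted_risk: List[float], t: float) -> float:
    """O:E ratio at time t: Kaplan-Meier risk over mean predicted risk."""
    expected = sum(predicted_risk) / len(predicted_risk)
    return km_risk(times, events, t) / expected


# Hypothetical follow-up data (years) with two censored patients.
toy_times = [1, 2, 3, 4, 5]
toy_events = [1, 0, 1, 0, 1]
print(km_risk(toy_times, toy_events, 3))
print(oe_at_time(toy_times, toy_events, [0.5] * 5, 3))
```

Multiplying the Kaplan-Meier risk by the number of participants gives an approximate total number of events at that time point, which can then be used wherever a count of observed events is needed.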

Meta-analysis

Once all relevant studies have been identified and corresponding results have been extracted, the retrieved estimates of model discrimination and calibration can be summarised into a weighted average. Because validation studies typically differ in design, execution, and thus case-mix, variation between their results is unlikely to occur by chance only. 8 22 For this reason, the meta-analysis should usually allow for (rather than ignore) the presence of heterogeneity and aim to produce a summary result (with its 95% confidence interval) that quantifies the average performance across studies. This can be achieved by implementing a random (rather than a fixed) effects meta-analysis model (appendix 9). The meta-analysis then also yields an estimate of the between-study standard deviation, which directly quantifies the extent of heterogeneity across studies. 19 Other meta-analysis models have also been proposed, such as by Pennells and colleagues, who suggest weighting by the number of events in each study because this is the principal determinant of study precision. 31 However, we recommend using traditional random effects models where the weights are based on the within-study error variance. Although it is common to summarise estimates of model discrimination and calibration separately, they can also jointly be synthesised using multivariate meta-analysis. 9 This might help to increase precision of summary estimates, and to avoid exclusion of studies for which relevant estimates are missing (eg, discrimination is reported but not calibration).
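The idea of a random effects weighted average can be sketched in a few lines. The example below uses the DerSimonian-Laird moment estimator for the between-study variance purely because it has a closed form; it is a stand-in chosen for brevity, not the estimation method recommended in this article. The function name `random_effects_dl` and the input values are hypothetical, and the inputs are assumed to be on a suitable transformed scale with known within-study variances.

```python
import math
from typing import List, Tuple


def random_effects_dl(y: List[float], v: List[float]) -> Tuple[float, float, float]:
    """Random effects summary (mean, SE, tau^2) via DerSimonian-Laird.

    y: study estimates; v: their within-study variances.
    """
    k = len(y)
    w = [1 / vi for vi in v]  # fixed effect (inverse variance) weights
    mu_fixed = sum(wi * yi for wi, yi in zip(w, y)) / sum(w)
    q = sum(wi * (yi - mu_fixed) ** 2 for wi, yi in zip(w, y))  # Cochran's Q
    c = sum(w) - sum(wi ** 2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - (k - 1)) / c)  # between-study variance, truncated at 0
    w_star = [1 / (vi + tau2) for vi in v]  # random effects weights
    mu = sum(wi * yi for wi, yi in zip(w_star, y)) / sum(w_star)
    se = math.sqrt(1 / sum(w_star))
    return mu, se, tau2


# Two hypothetical studies with equal precision but different results.
print(random_effects_dl([0.0, 1.0], [0.1, 0.1]))
```

Each study is weighted by the inverse of its within-study variance plus the between-study variance, so large studies dominate less as heterogeneity grows.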

To further interpret the relevance of any between-study heterogeneity, it is also helpful to calculate an approximate 95% prediction interval (appendix 9). This interval provides a range for the potential model performance in a new validation study, although it will usually be very wide if there are fewer than 10 studies. 32 It is also possible to estimate the probability of good performance when the model is applied in practice. 9 This probability can, for instance, indicate the likelihood of achieving a certain C statistic in a new population. In case of multivariate meta-analysis, it is even possible to define multiple criteria of good performance. Unfortunately, when performance estimates substantially vary across studies, summary estimates might not be very informative. Of course, it is also desirable to understand the cause of between-study heterogeneity in model performance, and we return to this issue in the next section.
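A minimal sketch of both quantities follows, assuming the widely used approximation in which performance in a new study follows a t distribution with k - 2 degrees of freedom, centred on the summary estimate, with variance equal to the between-study variance plus the squared standard error of the summary estimate. Whether this matches appendix 9 exactly is an assumption. The helper names are hypothetical, the t distribution is evaluated numerically so that only the standard library is needed, and the inputs in the usage lines are invented values on the logit scale.

```python
import math


def t_cdf(x: float, df: int, steps: int = 2000) -> float:
    """Student t CDF via Simpson's rule (adequate for small df, as in meta-analysis)."""
    const = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))
    pdf = lambda u: const * (1 + u * u / df) ** (-(df + 1) / 2)
    h = abs(x) / steps
    area = pdf(0.0) + pdf(abs(x))
    for i in range(1, steps):
        area += (4 if i % 2 else 2) * pdf(i * h)
    area *= h / 3
    return 0.5 + area if x >= 0 else 0.5 - area


def t_quantile(p: float, df: int) -> float:
    """Upper quantile (p >= 0.5) by bisection on t_cdf."""
    lo, hi = 0.0, 100.0
    for _ in range(60):
        mid = (lo + hi) / 2
        if t_cdf(mid, df) < p:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def prediction_interval(mu: float, se: float, tau2: float, k: int, level: float = 0.95):
    """Approximate prediction interval for performance in a new study."""
    if k < 3:
        raise ValueError("at least three studies are needed")
    half = t_quantile(1 - (1 - level) / 2, k - 2) * math.sqrt(tau2 + se ** 2)
    return mu - half, mu + half


def prob_above(mu: float, se: float, tau2: float, k: int, threshold: float) -> float:
    """Approximate probability that performance in a new study exceeds threshold."""
    z = (threshold - mu) / math.sqrt(tau2 + se ** 2)
    return 1 - t_cdf(z, k - 2)


def inv_logit(x: float) -> float:
    return 1 / (1 + math.exp(-x))


# Hypothetical summary of logit C statistics from 19 studies.
low, high = prediction_interval(mu=1.3, se=0.06, tau2=0.02, k=19)
print(inv_logit(low), inv_logit(high))
print(prob_above(1.3, 0.06, 0.02, 19, threshold=math.log(0.75 / 0.25)))
```

Because the t quantile grows quickly as the number of studies falls, the same heterogeneity produces a much wider interval with few studies, which is consistent with the caution given above.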

Some caution is warranted when summarising estimates of model discrimination and calibration. Previous studies have demonstrated that extracted C statistics 33 34 35 and total O:E ratios 33 should be rescaled before meta-analysis to improve the validity of its underlying assumptions. Suggestions for the necessary transformations are provided in appendix 7. Furthermore, in line with previous recommendations, we propose to adopt restricted maximum likelihood (REML) estimation and to use the Hartung-Knapp-Sidik-Jonkman (HKSJ) method when calculating 95% confidence intervals for the average performance, to better account for the uncertainty in the estimated between-study heterogeneity. 36 37 The HKSJ method is implemented in several meta-analysis software packages, including the metareg module in Stata (StataCorp) and the metafor package in R (R Foundation for Statistical Computing).
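For readers who want to see what these two steps involve, the sketch below estimates the between-study variance with a simple fixed point iteration for REML and then derives a Hartung-Knapp-Sidik-Jonkman style confidence interval. It is an illustrative standard library implementation under stated assumptions (study estimates already transformed, within-study variances known, no ad hoc truncation of the HKSJ scaling factor); it is not the code used by the packages named above, and the function names and toy inputs are hypothetical.

```python
import math
from typing import List, Tuple


def t_cdf(x: float, df: int, steps: int = 2000) -> float:
    """Student t CDF via Simpson's rule (adequate for small df)."""
    const = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))
    pdf = lambda u: const * (1 + u * u / df) ** (-(df + 1) / 2)
    h = abs(x) / steps
    area = pdf(0.0) + pdf(abs(x))
    for i in range(1, steps):
        area += (4 if i % 2 else 2) * pdf(i * h)
    area *= h / 3
    return 0.5 + area if x >= 0 else 0.5 - area


def t_quantile(p: float, df: int) -> float:
    """Upper quantile (p >= 0.5) by bisection on t_cdf."""
    lo, hi = 0.0, 100.0
    for _ in range(60):
        mid = (lo + hi) / 2
        lo, hi = (mid, hi) if t_cdf(mid, df) < p else (lo, mid)
    return (lo + hi) / 2


def reml_tau2(y: List[float], v: List[float], tol: float = 1e-12) -> float:
    """Between-study variance by REML, using a fixed point iteration."""
    tau2 = 0.0
    for _ in range(500):
        w = [1 / (vi + tau2) for vi in v]
        mu = sum(wi * yi for wi, yi in zip(w, y)) / sum(w)
        num = sum(wi ** 2 * ((yi - mu) ** 2 - vi) for wi, yi, vi in zip(w, y, v))
        new = max(0.0, num / sum(wi ** 2 for wi in w) + 1 / sum(w))
        if abs(new - tau2) < tol:
            return new
        tau2 = new
    return tau2


def hksj_ci(y: List[float], v: List[float], tau2: float,
            level: float = 0.95) -> Tuple[float, float, float]:
    """Summary estimate with an HKSJ confidence interval (t with k - 1 df)."""
    k = len(y)
    w = [1 / (vi + tau2) for vi in v]
    mu = sum(wi * yi for wi, yi in zip(w, y)) / sum(w)
    q = sum(wi * (yi - mu) ** 2 for wi, yi in zip(w, y)) / (k - 1)
    half = t_quantile(1 - (1 - level) / 2, k - 1) * math.sqrt(q / sum(w))
    return mu, mu - half, mu + half


# Hypothetical transformed estimates from three validation studies.
toy_y, toy_v = [1.1, 1.4, 1.6], [0.02, 0.03, 0.05]
print(hksj_ci(toy_y, toy_v, reml_tau2(toy_y, toy_v)))
```

The HKSJ interval replaces the usual normal quantile with a t quantile and rescales the variance by the weighted residual spread, which generally widens the interval when only a few studies are available.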

To summarise the performance of the EuroSCORE, we performed random effects meta-analyses with REML estimation and HKSJ confidence interval derivation. For model discrimination, we found a summary C statistic of 0.79 (95% confidence interval 0.77 to 0.81; approximate 95% prediction interval 0.72 to 0.84). The probability of so-called good discrimination (defined as a C statistic >0.75) was 89%. For model calibration, we found a summary O:E ratio of 0.53. This implies that, on average, the additive EuroSCORE substantially overestimates the risk of all cause mortality at 30 days. The weighted average of the total O:E ratio is, however, not very informative because 95% prediction intervals are rather wide (0.19 to 1.46). This problem is also illustrated by the estimated probability of so-called good calibration (defined as an O:E ratio between 0.8 and 1.2), which was only 15%. When jointly meta-analysing discrimination and calibration performance, we found similar summary estimates for the C statistic and total O:E ratio. The joint probability of good performance (defined as C statistic >0.75 and O:E ratio between 0.8 and 1.2), however, decreased to 13% owing to the large extent of miscalibration. Therefore, it is important to investigate potential sources of heterogeneity in the calibration performance of the additive EuroSCORE model.

Investigating heterogeneity across studies

When the discrimination or calibration performance of a prediction model is heterogeneous across validation studies, it is important to investigate potential sources of heterogeneity. This may help to understand under what circumstances the model performance remains adequate, and when the model might require further improvements. As mentioned earlier, the discrimination and calibration of a prediction model can be affected by differences in the design 38 and in populations across the validation studies, for example, owing to changes in case mix variation or baseline risk. 8 22

In general, sources of heterogeneity can be explored by performing a meta-regression analysis where the dependent variable is the (transformed) estimate of the model performance measure. 39 Study level or summarised patient level characteristics (eg, mean age) are then used as explanatory or independent variables. Alternatively, it is possible to summarise model performance across different clinically relevant subgroups. This approach is also known as subgroup analysis and is most sensible when there are clearly definable subgroups. This is often only practical if individual participant data are available. 19

Key issues that could be considered as modifiers of model performance are differences in the heterogeneity between patients across the included validation studies (ie, differences in case mix variation), 8 differences in study characteristics (eg, in terms of design, follow-up time, or outcome definition), and differences in the statistical analysis or characteristics related to selective reporting and publication (eg, risk of bias, study size). The regression coefficient obtained from a meta-regression analysis describes how the dependent variable (here, the logit C statistic or log O:E ratio) changes between subgroups of studies in case of a categorical explanatory variable or with one unit increase in a continuous explanatory variable. The statistical significance measure of the regression coefficient is a test of whether there is a (linear) relation between the model’s performance and the explanatory variable. However, unless the number of studies is reasonably large (>10), the power to detect a genuine association with these tests will usually be low. In addition, it is well known that meta-regression and subgroup analysis are prone to ecological bias when investigating summarised patient level covariates as modifiers of model performance. 40
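The mechanics of such a meta-regression can be sketched as a weighted least squares fit with one study level covariate. The example below first moves a C statistic to the logit scale (with a delta method standard error) and then regresses the transformed estimates on the covariate, weighting each study by the inverse of its within-study variance plus a between-study variance that is assumed to have been estimated elsewhere. All names and numbers are hypothetical, and the standard error returned is the simple model based one, without any small sample adjustment.

```python
import math
from typing import List, Tuple


def logit_c(c: float, se_c: float) -> Tuple[float, float]:
    """Logit of a C statistic and its delta method standard error."""
    return math.log(c / (1 - c)), se_c / (c * (1 - c))


def meta_regression(x: List[float], y: List[float], v: List[float],
                    tau2: float) -> Tuple[float, float, float]:
    """Weighted least squares of y on x; returns (intercept, slope, SE of slope).

    Weights are 1 / (within-study variance + tau2); tau2 is supplied by the caller.
    """
    w = [1 / (vi + tau2) for vi in v]
    sw = sum(w)
    x_bar = sum(wi * xi for wi, xi in zip(w, x)) / sw
    y_bar = sum(wi * yi for wi, yi in zip(w, y)) / sw
    sxx = sum(wi * (xi - x_bar) ** 2 for wi, xi in zip(w, x))
    sxy = sum(wi * (xi - x_bar) * (yi - y_bar) for wi, xi, yi in zip(w, x, y))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return intercept, slope, math.sqrt(1 / sxx)


# Hypothetical studies: C statistic, its SE, and the spread (SD) of the risk score.
studies = [(0.76, 0.02, 2.1), (0.79, 0.015, 2.6), (0.81, 0.03, 3.0), (0.78, 0.02, 2.4)]
transformed = [logit_c(c, se) for c, se, _ in studies]
y = [t[0] for t in transformed]
v = [t[1] ** 2 for t in transformed]
x = [sd for _, _, sd in studies]
print(meta_regression(x, y, v, tau2=0.01))
```

The slope is read as the change in the logit C statistic per one unit increase in the covariate; with as few studies as in this toy example, its standard error would be far too large to support any conclusion.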

To investigate whether population differences generated heterogeneity across the included validation studies, we performed several meta-regression analyses (fig 5 and appendix 10). We first evaluated whether the summary C statistic was related to the case mix variation, as quantified by the spread of the EuroSCORE in each validation study, or related to the spread of patient age. We then evaluated whether the summarised O:E ratio was related to the mean EuroSCORE values, year of study recruitment, or continent. Although the power was limited to detect any association, results suggest that the EuroSCORE tends to overestimate the risk of early mortality in low risk populations (with a mean EuroSCORE value <6). Similar results were found when we investigated the total O:E ratio across different subgroups, using the reported calibration tables and histograms within the included validation studies (appendix 8). Although year of study recruitment and continent did not significantly influence the calibration, we found that miscalibration was more problematic in (developed) countries with low mortality rates (appendix 10). The C statistic did not appear to differ importantly as the standard deviation of the EuroSCORE or age distribution increased.

Fig 5 Results from random effects meta-regression models in the case study (predictive performance of the EuroSCORE for all cause mortality at 30 days in patients undergoing coronary artery bypass grafting). Solid lines=regression lines; dashed lines=95% confidence intervals; dots=included validation studies

Overall, we can conclude that the additive EuroSCORE fairly discriminates between mortality and survival in patients undergoing CABG. Its overall calibration, however, is quite poor because predicted risks appear too high in low risk patients, and the extent of miscalibration substantially varies across populations. Not enough information is available to draw conclusions on the performance of EuroSCORE in high risk patients. Although it has been suggested that overprediction likely occurs due to improvements in cardiac surgery, we could not confirm this effect in the present analyses.

Sensitivity analysis

As for any meta-analysis, it is important to show that results are not distorted by low quality validation studies. For this reason, key analyses should be repeated for the studies at lower and higher risk of bias.

We performed a subgroup analysis by excluding those studies at high risk of bias, to ascertain their effect (fig 2). Results in table 2 indicate that this approach yielded similar summary estimates of discrimination and calibration as those in the full analysis of all studies.

Table 2 Results from the case study (predictive performance of the EuroSCORE for all cause mortality at 30 days in patients undergoing coronary artery bypass grafting) after excluding studies with high risk of bias

Reporting and presentation

As for any other type of systematic review and meta-analysis, it is important to report the conducted research in sufficient detail. The PRISMA statement (preferred reporting items for systematic reviews and meta-analyses) 41 highlights the key issues for reporting of meta-analysis of intervention studies, which are also generally relevant for meta-analysis of model validation studies. If meta-analysis of individual participant data (IPD) has been used, then PRISMA-IPD will also be helpful. 42 Furthermore, the TRIPOD statement (transparent reporting of a multivariable prediction model for individual prognosis or diagnosis) 23 43 provides several recommendations for the reporting of studies developing, validating, or updating a prediction model, and can be considered here as well. Finally, use of the GRADE approach (grades of recommendation, assessment, development, and evaluation) might help to interpret the results of the systematic review and to present the evidence. 21

As illustrated in this article, researchers should clearly describe the review question, search strategy, tools used for critical appraisal and risk of bias assessment, quality of the included studies, methods used for data extraction and meta-analysis, data used for meta-analysis, and corresponding results and their uncertainty. Furthermore, we recommend reporting details on the relevant study populations (eg, using the mean and standard deviation of the linear predictor) and presenting summary estimates with confidence intervals and, if appropriate, prediction intervals. Finally, it might be helpful to report probabilities of good performance separately for each performance measure, because researchers can then decide which criteria are most relevant for their situation.

Concluding remarks

In this article, we provide guidance on how to systematically review and quantitatively synthesise the predictive performance of a prediction model. Although we focused on systematic review and meta-analysis of a prognostic model, all guidance can similarly be applied to the meta-analysis of a diagnostic prediction model. We discussed how to define the systematic review question, identify the relevant prediction model studies from the literature, critically appraise the identified studies, extract relevant summary statistics, quantitatively summarise the extracted estimates, and investigate sources of between-study heterogeneity.

Meta-analysis of a prediction model’s predictive performance bears many similarities to other types of meta-analysis. However, in contrast to synthesis of randomised trials, heterogeneity is much more likely in meta-analysis of studies assessing the predictive performance of a prediction model, owing to the increased variation of eligible study designs, increased inclusion of studies with different populations, and increased complexity of required statistical methods. When substantial heterogeneity occurs, summary estimates of model performance can be of limited value. For this reason, it is paramount to identify relevant studies through a systematic review, assess the presence of important subgroups, and evaluate the performance the model is likely to yield in new studies.

Although several concerns can be resolved by the aforementioned strategies, it is possible that substantial between-study heterogeneity remains and can only be addressed by harmonising and analysing the individual participant data of the studies. 19 Previous studies have demonstrated that access to individual participant data might also help to retrieve unreported performance measures (eg, calibration slope), estimate the within-study correlation between performance measures, 9 avoid continuity corrections and data transformations, further interpret model generalisability, 8 19 22 31 and tailor the model to populations at hand. 44

Often, multiple models exist for predicting the same condition in similar populations. In such situations, it could be desirable to investigate their relative performance. Although this strategy has already been adopted by several authors, caution is warranted in the absence of individual participant data. In particular, the lack of head-to-head comparisons between competing models and the increased likelihood of heterogeneity across validation studies render comparative analyses highly prone to bias. Further, it is well known that performance measures such as the C statistic are relatively insensitive to improvements in predictive performance. We therefore believe that summary performance estimates might often be of limited value, and that a meta-analysis should rather focus on assessing their variability across relevant settings and populations. Formal comparisons between competing models are possible (eg, by adopting network meta-analysis methods) but appear most useful for exploratory purposes.

Finally, the following limitations need to be considered in order to fully appreciate this guidance. Firstly, our empirical example demonstrates that the level of reporting in validation studies is often poor. Although the quality of reporting has been steadily improving over the past few years, it will often be necessary to restore missing information from other quantities. This strategy might not always be reliable, such that sensitivity analyses remain paramount in any meta-analysis. Secondly, the statistical methods we discussed in this article are most applicable when meta-analysing the performance results from prediction models developed with logistic regression. Although the same principles apply to survival models, the level of reporting tends to be even less consistent because many more statistical choices and multiple time points need to be considered. Thirdly, we focused on frequentist methods for summarising model performance and calculating corresponding prediction intervals. Bayesian methods have, however, been recommended when predicting the likely performance in a future validation study. 45 Lastly, we mainly focused on statistical measures of model performance, and did not discuss how to meta-analyse clinical measures of performance such as net benefit. 46 Because these performance measures are not frequently reported and typically require subjective thresholds, summarising them appears difficult without access to individual participant data. Nevertheless, further research on how to meta-analyse net benefit estimates would be welcome.

In summary, systematic review and meta-analysis of prediction model performance could help to interpret the potential applicability and generalisability of a prediction model. When the meta-analysis shows promising results, it may be worthwhile to obtain individual participant data to investigate in more detail how the model performs across different populations and subgroups. 19 44

Contributors: KGMM, TPAD, JBR, and RDR conceived the paper objectives. TPAD prepared a first draft of this article, which was subsequently reviewed in multiple rounds by JAAGD, JE, KIES, LH, RDR, JBR, and KGMM. TPAD and JAAGD undertook the data extraction and statistical analyses. TPAD, JAAGD, RDR, and KGMM contributed equally to the paper. All authors approved the final version of the submitted manuscript. TPAD is guarantor. All authors had full access to all of the data (including statistical reports and tables) in the study and can take responsibility for the integrity of the data and the accuracy of the data analysis.

Funding: Financial support received from the Cochrane Methods Innovation Funds Round 2 (MTH001F) and the Netherlands Organization for Scientific Research (91617050 and 91810615). This work was also supported by the UK Medical Research Council Network of Hubs for Trials Methodology Research (MR/L004933/1- R20). RDR was supported by an MRC partnership grant for the PROGnosis RESearch Strategy (PROGRESS) group (grant G0902393). None of the funding sources had a role in the design, conduct, analyses, or reporting of the study or in the decision to submit the manuscript for publication.

Competing interests: All authors have completed the ICMJE uniform disclosure form at www.icmje.org/coi_disclosure.pdf and declare: support from the Cochrane Methods Innovation Funds Round 2, Netherlands Organization for Scientific Research, and the UK Medical Research Council for the submitted work; no financial relationships with any organisations that might have an interest in the submitted work in the previous three years; no other relationships or activities that could appear to have influenced the submitted work.

We thank The BMJ editors and reviewers for their helpful feedback on this manuscript.

- Khan K, Kunz R, Kleijnen J, et al. Systematic reviews to support evidence-based medicine: how to review and apply findings of healthcare research. CRC Press, 2nd ed, 2011.

- Steyerberg EW, Moons KGM, van der Windt DA, et al. PROGRESS Group. Prognosis Research Strategy (PROGRESS) 3: prognostic model research. PLoS Med 2013;10:e1001381. doi:10.1371/journal.pmed.1001381 pmid:23393430.

- Geersing GJ, Bouwmeester W, Zuithoff P, Spijker R, Leeflang M, Moons KG. Search filters for finding prognostic and diagnostic prediction studies in Medline to enhance systematic reviews [correction in: PLoS One 2012;7(7): doi/10.1371/annotation/96bdb520-d704-45f0-a143-43a48552952e]. PLoS One 2012;7:e32844. doi:10.1371/journal.pone.0032844 pmid:22393453.

- Wong SS, Wilczynski NL, Haynes RB, Ramkissoonsingh R. Hedges Team. Developing optimal search strategies for detecting sound clinical prediction studies in MEDLINE. AMIA Annu Symp Proc 2003:728-32. pmid:14728269.

- Ingui BJ, Rogers MA. Searching for clinical prediction rules in MEDLINE. J Am Med Inform Assoc 2001;8:391-7. doi:10.1136/jamia.2001.0080391 pmid:11418546.

- Moons KGM, de Groot JAH, Bouwmeester W, et al. Critical appraisal and data extraction for systematic reviews of prediction modelling studies: the CHARMS checklist. PLoS Med 2014;11:e1001744. doi:10.1371/journal.pmed.1001744 pmid:25314315.

- Wolff R, Whiting P, Mallett S, et al. PROBAST: a risk of bias tool for prediction modelling studies. Cochrane Colloquium Vienna. 2015.

- Debray TPA, Vergouwe Y, Koffijberg H, Nieboer D, Steyerberg EW, Moons KG. A new framework to enhance the interpretation of external validation studies of clinical prediction models. J Clin Epidemiol 2015;68:279-89. doi:10.1016/j.jclinepi.2014.06.018 pmid:25179855.

- Snell KI, Hua H, Debray TP, et al. Multivariate meta-analysis of individual participant data helped externally validate the performance and implementation of a prediction model. J Clin Epidemiol 2016;69:40-50. doi:10.1016/j.jclinepi.2015.05.009 pmid:26142114.

- Altman DG, Royston P. What do we mean by validating a prognostic model? Stat Med 2000;19:453-73. doi:10.1002/(SICI)1097-0258(20000229)19:4<453::AID-SIM350>3.0.CO;2-5 pmid:10694730.

- Justice AC, Covinsky KE, Berlin JA. Assessing the generalizability of prognostic information. Ann Intern Med 1999;130:515-24. doi:10.7326/0003-4819-130-6-199903160-00016 pmid:10075620.

- Collins GS, Omar O, Shanyinde M, Yu LM. A systematic review finds prediction models for chronic kidney disease were poorly reported and often developed using inappropriate methods. J Clin Epidemiol 2013;66:268-77. doi:10.1016/j.jclinepi.2012.06.020 pmid:23116690.

- Siregar S, Groenwold RHH, de Heer F, Bots ML, van der Graaf Y, van Herwerden LA. Performance of the original EuroSCORE. Eur J Cardiothorac Surg 2012;41:746-54. doi:10.1093/ejcts/ezr285 pmid:22290922.

- Echouffo-Tcheugui JB, Batty GD, Kivimäki M, Kengne AP. Risk models to predict hypertension: a systematic review. PLoS One 2013;8:e67370. doi:10.1371/journal.pone.0067370 pmid:23861760.

- Tzoulaki I, Liberopoulos G, Ioannidis JPA. Assessment of claims of improved prediction beyond the Framingham risk score. JAMA 2009;302:2345-52. doi:10.1001/jama.2009.1757 pmid:19952321.

- Eichler K, Puhan MA, Steurer J, Bachmann LM. Prediction of first coronary events with the Framingham score: a systematic review. Am Heart J 2007;153:722-31, 731.e1-8. doi:10.1016/j.ahj.2007.02.027 pmid:17452145.

- Perel P, Edwards P, Wentz R, Roberts I. Systematic review of prognostic models in traumatic brain injury. BMC Med Inform Decis Mak 2006;6:38. doi:10.1186/1472-6947-6-38 pmid:17105661.

- Collins GS, de Groot JA, Dutton S, et al. External validation of multivariable prediction models: a systematic review of methodological conduct and reporting. BMC Med Res Methodol 2014;14:40. doi:10.1186/1471-2288-14-40 pmid:24645774.

- Riley RD, Ensor J, Snell KI, et al. External validation of clinical prediction models using big datasets from e-health records or IPD meta-analysis: opportunities and challenges. BMJ 2016;353:i3140. doi:10.1136/bmj.i3140 pmid:27334381.

- Peat G, Riley RD, Croft P, et al. PROGRESS Group. Improving the transparency of prognosis research: the role of reporting, data sharing, registration, and protocols. PLoS Med 2014;11:e1001671. doi:10.1371/journal.pmed.1001671 pmid:25003600.

- Iorio A, Spencer FA, Falavigna M, et al. Use of GRADE for assessment of evidence about prognosis: rating confidence in estimates of event rates in broad categories of patients. BMJ 2015;350:h870. doi:10.1136/bmj.h870 pmid:25775931.

- Vergouwe Y, Moons KGM, Steyerberg EW. External validity of risk models: Use of benchmark values to disentangle a case-mix effect from incorrect coefficients. Am J Epidemiol 2010;172:971-80. doi:10.1093/aje/kwq223 pmid:20807737.

- Moons KGM, Altman DG, Reitsma JB, et al. Transparent Reporting of a multivariable prediction model for Individual Prognosis or Diagnosis (TRIPOD): explanation and elaboration. Ann Intern Med 2015;162:W1-73. doi:10.7326/M14-0698 pmid:25560730.

- van Klaveren D, Gönen M, Steyerberg EW, Vergouwe Y. A new concordance measure for risk prediction models in external validation settings. Stat Med 2016;35:4136-52. doi:10.1002/sim.6997 pmid:27251001.

- Higgins JPT, Green S. Combining Groups. http://handbook.cochrane.org/chapter_7/7_7_3_8_combining_groups.htm, 2011.

- Hozo SP, Djulbegovic B, Hozo I. Estimating the mean and variance from the median, range, and the size of a sample. BMC Med Res Methodol 2005;5:13. doi:10.1186/1471-2288-5-13 pmid:15840177.

- Bouwmeester W, Zuithoff NPA, Mallett S, et al. Reporting and methods in clinical prediction research: a systematic review. PLoS Med 2012;9:1-12. doi:10.1371/journal.pmed.1001221 pmid:22629234.

- Austin PC, Pencina MJ, Steyerberg EW. Predictive accuracy of novel risk factors and markers: A simulation study of the sensitivity of different performance measures for the Cox proportional hazards regression model. Stat Methods Med Res 2015;0962280214567141. pmid:25656552.

- Blanche P, Dartigues JF, Jacqmin-Gadda H. Review and comparison of ROC curve estimators for a time-dependent outcome with marker-dependent censoring. Biom J 2013;55:687-704. doi:10.1002/bimj.201200045 pmid:23794418.

- Jinks RC, Royston P, Parmar MKB. Discrimination-based sample size calculations for multivariable prognostic models for time-to-event data. BMC Med Res Methodol 2015;15:82. doi:10.1186/s12874-015-0078-y pmid:26459415.

- Pennells L, Kaptoge S, White IR, Thompson SG, Wood AM. Emerging Risk Factors Collaboration. Assessing risk prediction models using individual participant data from multiple studies. Am J Epidemiol 2014;179:621-32. doi:10.1093/aje/kwt298 pmid:24366051.

- Riley RD, Higgins JPT, Deeks JJ. Interpretation of random effects meta-analyses. BMJ 2011;342:d549. doi:10.1136/bmj.d549 pmid:21310794.

- Snell KIE. Development and application of statistical methods for prognosis research. PhD thesis, School of Health and Population Sciences, Birmingham, UK, 2015.

- van Klaveren D, Steyerberg EW, Perel P, Vergouwe Y. Assessing discriminative ability of risk models in clustered data. BMC Med Res Methodol 2014;14:5. doi:10.1186/1471-2288-14-5 pmid:24423445.

- Qin G, Hotilovac L. Comparison of non-parametric confidence intervals for the area under the ROC curve of a continuous-scale diagnostic test. Stat Methods Med Res 2008;17:207-21. doi:10.1177/0962280207087173 pmid:18426855.

- IntHout J, Ioannidis JP, Borm GF. The Hartung-Knapp-Sidik-Jonkman method for random effects meta-analysis is straightforward and considerably outperforms the standard DerSimonian-Laird method. BMC Med Res Methodol 2014;14:25. doi:10.1186/1471-2288-14-25 pmid:24548571.

- Cornell JE, Mulrow CD, Localio R, et al. Random-effects meta-analysis of inconsistent effects: a time for change. Ann Intern Med 2014;160:267-70. doi:10.7326/M13-2886 pmid:24727843.

- Ban JW, Emparanza JI, Urreta I, Burls A. Design Characteristics Influence Performance of Clinical Prediction Rules in Validation: A Meta-Epidemiological Study. PLoS One 2016;11:e0145779. doi:10.1371/journal.pone.0145779 pmid:26730980.

- Deeks JJ, Higgins JPT, Altman DG. Chapter 9. Analysing data and undertaking meta-analyses. Cochrane Collaboration, 2011.

- Berlin JA, Santanna J, Schmid CH, Szczech LA, Feldman HI. Anti-Lymphocyte Antibody Induction Therapy Study Group. Individual patient- versus group-level data meta-regressions for the investigation of treatment effect modifiers: ecological bias rears its ugly head. Stat Med 2002;21:371-87. doi:10.1002/sim.1023 pmid:11813224.

- Liberati A, Altman DG, Tetzlaff J, et al. The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate healthcare interventions: explanation and elaboration. BMJ 2009;339:b2700. doi:10.1136/bmj.b2700 pmid:19622552.

- Stewart LA, Clarke M, Rovers M, et al. PRISMA-IPD Development Group. Preferred reporting items for a systematic review and meta-analysis of individual participant data: The PRISMA-IPD Statement. JAMA 2015;313:1657-65. doi:10.1001/jama.2015.3656 pmid:25919529.

- Collins GS, Reitsma JB, Altman DG, Moons KG. Transparent Reporting of a multivariable prediction model for Individual Prognosis or Diagnosis (TRIPOD): the TRIPOD statement. Ann Intern Med 2015;162:55-63. doi:10.7326/M14-0697 pmid:25560714.

- Debray TPA, Riley RD, Rovers MM, Reitsma JB, Moons KG. Cochrane IPD Meta-analysis Methods group. Individual participant data (IPD) meta-analyses of diagnostic and prognostic modeling studies: guidance on their use. PLoS Med 2015;12:e1001886. doi:10.1371/journal.pmed.1001886 pmid:26461078.

- Sutton AJ, Abrams KR. Bayesian methods in meta-analysis and evidence synthesis. Stat Methods Med Res 2001;10:277-303. doi:10.1191/096228001678227794 pmid:11491414.

- Vickers AJ, Van Calster B, Steyerberg EW. Net benefit approaches to the evaluation of prediction models, molecular markers, and diagnostic tests. BMJ 2016;352:i6. doi:10.1136/bmj.i6 pmid:26810254.

Deep learning for time series prediction and decision making over time

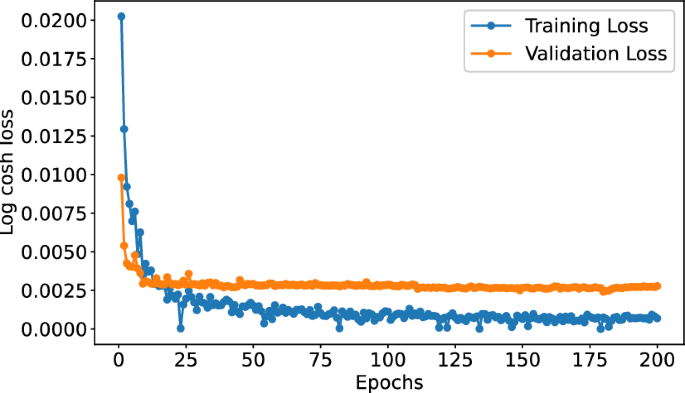

In this thesis, we develop a collection of state-of-the-art deep learning models for time series forecasting. Focusing primarily on closer alignment with traditional methods in time series modelling, we pursue three main research directions: 1) novel architectures, 2) hybrid models, and 3) feature extraction. First, we propose two new architectures for general one-step-ahead and multi-horizon forecasting. With the Recurrent Neural Filter (RNF), we take a closer look at the relation...

Review of Water Quality Prediction Methods

- Conference paper

- First Online: 31 May 2023

- Zhen Chen, Limin Liu, Yongsheng Wang & Jing Gao

Part of the book series: Lecture Notes in Civil Engineering (LNCE, volume 341)

Included in the following conference series:

- International Conference on Water Resource and Environment

Water quality prediction plays a crucial role in environmental monitoring, ecosystem sustainability, and aquaculture, with both economic and ecological benefits. Researchers have worked for many years to improve its accuracy. However, growing uncertainty from climate change, external noise, precipitation, and other factors means that prediction accuracy remains insufficient. This paper reviews recent domestic and international research on water quality prediction and groups the methods into two classes: mechanistic and non-mechanistic. First, it introduces mechanistic methods, which predict water quality from hydrological data such as initial water head, bottom slope, and hydraulic radius. Second, it introduces non-mechanistic methods, which analyze and mine historical water quality index data to predict water quality. Finally, having surveyed the existing prediction methods, it analyzes and summarizes the development direction of water quality prediction.
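The data-driven approach described above, which predicts water quality from historical index data alone, can be illustrated with a minimal sketch. This is not the method of any study covered by the review: it assumes a first-order autoregressive model fitted by ordinary least squares, the function names `fit_ar1` and `predict_next` are hypothetical, and the dissolved-oxygen readings are invented for illustration.

```python
def fit_ar1(series):
    """Least-squares fit of x[t+1] = a * x[t] + b to a historical series."""
    if len(series) < 3:
        raise ValueError("need at least three observations")
    x = series[:-1]          # values at time t
    y = series[1:]           # values at time t + 1
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("series is constant; slope is undefined")
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    a = sxy / sxx
    b = mean_y - a * mean_x
    return a, b


def predict_next(last_value, a, b):
    """One-step-ahead prediction from the most recent observation."""
    return a * last_value + b


if __name__ == "__main__":
    # Invented daily dissolved-oxygen readings in mg/L (illustrative only).
    history = [7.9, 7.6, 7.4, 7.5, 7.2, 7.0, 7.1]
    a, b = fit_ar1(history)
    print("next-day estimate:", round(predict_next(history[-1], a, b), 2))
```

A model this simple ignores seasonality, noise, and external drivers such as precipitation, which is one reason more expressive data-driven methods are used in practice.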
