
14 Quantitative analysis: Descriptive statistics

Numeric data collected in a research project can be analysed quantitatively using statistical tools in two different ways. Descriptive analysis refers to statistically describing, aggregating, and presenting the constructs of interest or associations between these constructs. Inferential analysis refers to the statistical testing of hypotheses (theory testing). In this chapter, we will examine statistical techniques used for descriptive analysis, and the next chapter will examine statistical techniques for inferential analysis. Much of today’s quantitative data analysis is conducted using software programs such as SPSS or SAS. Readers are advised to familiarise themselves with one of these programs for understanding the concepts described in this chapter.

Data preparation

In research projects, data may be collected from a variety of sources: postal surveys, interviews, pretest or posttest experimental data, observational data, and so forth. These data must be converted into a machine-readable, numeric format, such as a spreadsheet or a text file, so that they can be analysed by computer programs like SPSS or SAS. Data preparation usually involves the following steps:

Data coding. Coding is the process of converting data into numeric format. A codebook should be created to guide the coding process. A codebook is a comprehensive document containing a detailed description of each variable in a research study, items or measures for that variable, the format of each item (numeric, text, etc.), the response scale for each item (i.e., whether it is measured on a nominal, ordinal, interval, or ratio scale, and whether this scale is a five-point, seven-point scale, etc.), and how to code each value into a numeric format. For instance, if we have a measurement item on a seven-point Likert scale with anchors ranging from ‘strongly disagree’ to ‘strongly agree’, we may code that item as 1 for strongly disagree, 4 for neutral, and 7 for strongly agree, with the intermediate anchors in between. Nominal data such as industry type can be coded in numeric form using a coding scheme such as: 1 for manufacturing, 2 for retailing, 3 for financial, 4 for healthcare, and so forth (of course, these codes are only labels, so arithmetic on them, such as computing a mean industry type, is not meaningful). Ratio scale data such as age, income, or test scores can be coded as entered by the respondent. Sometimes, data may need to be aggregated into a different form than the format used for data collection. For instance, if a survey measuring a construct such as ‘benefits of computers’ provided respondents with a checklist of benefits that they could select from, and respondents were encouraged to choose as many of those benefits as they wanted, then the total number of checked items could be used as an aggregate measure of benefits. Note that many other forms of data, such as interview transcripts, cannot be converted into a numeric format for statistical analysis.
Codebooks are especially important for large complex studies involving many variables and measurement items, where the coding process is conducted by different people, to help the coding team code data in a consistent manner, and also to help others understand and interpret the coded data.
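The coding rules described above can be sketched as a small lookup table. This is only an illustration: the labels for the intermediate Likert anchors, the checklist items, and the function names are assumptions, since the text specifies only the codes 1, 4, and 7 and the industry scheme.

```python
# Hypothetical codebook entries: each maps a response label to its numeric code.
# Only 1, 4 and 7 come from the text; the intermediate labels are assumed.
LIKERT_7 = {
    "strongly disagree": 1,
    "disagree": 2,
    "somewhat disagree": 3,
    "neutral": 4,
    "somewhat agree": 5,
    "agree": 6,
    "strongly agree": 7,
}
INDUSTRY = {"manufacturing": 1, "retailing": 2, "financial": 3, "healthcare": 4}


def code_response(label, scheme):
    """Return the numeric code for a label, or None if it is not in the codebook."""
    return scheme.get(label.strip().lower())


def count_checked(checklist):
    """Aggregate a checklist item into the number of boxes ticked."""
    return sum(1 for ticked in checklist.values() if ticked)


likert_code = code_response("Strongly Agree", LIKERT_7)
industry_code = code_response("Retailing", INDUSTRY)
# Illustrative 'benefits of computers' checklist for one respondent.
benefits = count_checked({"saves time": True, "fewer errors": False, "lower cost": True})
print(likert_code, industry_code, benefits)
```

Returning `None` for an unknown label, rather than guessing a code, makes uncodable responses easy to find during spot checks.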

Data entry. Coded data can be entered into a spreadsheet, database, text file, or directly into a statistical program like SPSS. Most statistical programs provide a data editor for entering data. However, these programs store data in their own native format (e.g., SPSS stores data as .sav files), which makes it difficult to share that data with other statistical programs. Hence, it is often better to enter data into a spreadsheet or database where it can be reorganised as needed, shared across programs, and subsets of data can be extracted for analysis. Smaller data sets can be stored in a spreadsheet created using a program such as Microsoft Excel (the legacy .xls format is limited to 65,536 rows and 256 columns, while the current .xlsx format allows 1,048,576 rows and 16,384 columns), while larger datasets with millions of observations will require a database. Each observation can be entered as one row in the spreadsheet, and each measurement item can be represented as one column. Data should be checked for accuracy during and after entry via occasional spot checks on a set of items or observations. Furthermore, while entering data, the coder should watch out for obvious evidence of bad data, such as the respondent selecting the ‘strongly agree’ response to all items irrespective of content, including reverse-coded items. Such data can be entered but should be excluded from subsequent analysis.

Data transformation. Sometimes, it is necessary to transform data values before they can be meaningfully interpreted. For instance, reverse coded items—where items convey the opposite meaning of that of their underlying construct—should be reversed (e.g., in a 1-7 interval scale, 8 minus the observed value will reverse the value) before they can be compared or combined with items that are not reverse coded. Other kinds of transformations may include creating scale measures by adding individual scale items, creating a weighted index from a set of observed measures, and collapsing multiple values into fewer categories (e.g., collapsing incomes into income ranges).
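A minimal sketch of these transformations follows. The item names, the example scores, and the income brackets are hypothetical; only the "8 minus the observed value" rule for a 1-7 scale comes from the text.

```python
def reverse_code(value, scale_min=1, scale_max=7):
    """Reverse a score on a bounded scale; on a 1-7 scale this is 8 - value."""
    return scale_min + scale_max - value


def scale_score(item_scores, reversed_items=()):
    """Sum item scores into one scale measure, reversing the flagged items first."""
    total = 0
    for name, score in item_scores.items():
        total += reverse_code(score) if name in reversed_items else score
    return total


def income_range(income):
    """Collapse a raw income into one of three hypothetical brackets."""
    if income < 30000:
        return "low"
    if income < 80000:
        return "middle"
    return "high"


# One respondent's answers; q2 is assumed to be the reverse-coded item.
respondent = {"q1": 6, "q2": 2, "q3": 7}
total = scale_score(respondent, reversed_items={"q2"})
print(reverse_code(2), total, income_range(45000))
```

Writing the reversal as `scale_min + scale_max - value` keeps the same function usable for a five-point scale, where it reduces to 6 minus the observed value.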

Univariate analysis

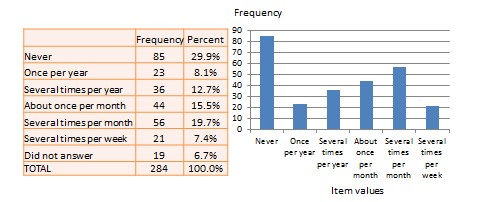

Univariate analysis—or analysis of a single variable—refers to a set of statistical techniques that can describe the general properties of one variable. Univariate statistics include: frequency distribution, central tendency, and dispersion. The frequency distribution of a variable is a summary of the frequency—or percentages—of individual values or ranges of values for that variable. For instance, we can measure how many times a sample of respondents attend religious services—as a gauge of their ‘religiosity’—using a categorical scale: never, once per year, several times per year, about once a month, several times per month, several times per week, and an optional category for ‘did not answer’. If we count the number or percentage of observations within each category—except ‘did not answer’ which is really a missing value rather than a category—and display it in the form of a table, as shown in Figure 14.1, what we have is a frequency distribution. This distribution can also be depicted in the form of a bar chart, as shown on the right panel of Figure 14.1, with the horizontal axis representing each category of that variable and the vertical axis representing the frequency or percentage of observations within each category.
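As a rough illustration, a frequency distribution like the one described can be tabulated with a counter. The responses below are made up for the sketch and are not the data behind Figure 14.1; `None` stands in for ‘did not answer’ and is treated as a missing value.

```python
from collections import Counter

CATEGORIES = [
    "never",
    "once per year",
    "several times per year",
    "about once a month",
    "several times per month",
    "several times per week",
]


def frequency_distribution(responses, categories):
    """Return (category, count, percent) rows, skipping missing answers (None)."""
    valid = [r for r in responses if r is not None]
    counts = Counter(valid)
    n = len(valid)
    if n == 0:
        return [(c, 0, 0.0) for c in categories]
    return [(c, counts[c], round(100 * counts[c] / n, 1)) for c in categories]


# Hypothetical sample of ten respondents, two of whom did not answer.
responses = [
    "never", "once per year", "never", None, "about once a month",
    "several times per week", "never", "once per year", None,
    "several times per year",
]
table = frequency_distribution(responses, CATEGORIES)
for category, count, percent in table:
    # The run of '#' characters is a crude text version of the bar chart.
    print(f"{category:<26}{count:>3}{percent:>7}%  {'#' * count}")
```

Percentages are computed over the eight valid answers rather than all ten respondents, which matches the treatment of ‘did not answer’ as missing.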

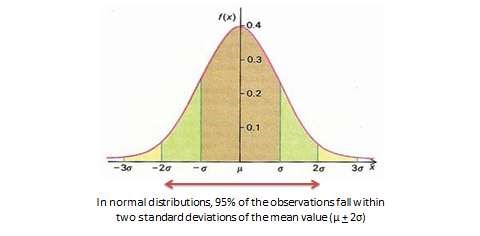

With very large samples, where observations are independent and random, the frequency distribution tends to follow a plot that looks like a bell-shaped curve (a smoothed bar chart of the frequency distribution), similar to that shown in Figure 14.2. Here most observations are clustered toward the centre of the range of values, with fewer and fewer observations clustered toward the extreme ends of the range. Such a curve is called a normal distribution.

Lastly, the mode is the most frequently occurring value in a distribution of values. In the previous example, the most frequently occurring value is 15, which is the mode of the above set of test scores. Note that any value that is estimated from a sample, such as mean, median, mode, or any of the later estimates, is called a statistic.
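The three measures of central tendency can be computed with Python's standard `statistics` module. The set of test scores the text refers to is not reproduced in this section, so the values below are illustrative only, chosen so that the mode is 15 as stated.

```python
import statistics

# Illustrative test scores (an assumption, not the chapter's own data).
scores = [15, 20, 21, 20, 36, 15, 25, 15]

mean = statistics.mean(scores)      # arithmetic average of all values
median = statistics.median(scores)  # middle value of the sorted scores
mode = statistics.mode(scores)      # most frequently occurring value

print(mean, median, mode)
```

With an even number of observations, `statistics.median` averages the two middle values of the sorted list.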

Bivariate analysis

Bivariate analysis examines how two variables are related to one another. The most common bivariate statistic is the bivariate correlation (often simply called ‘correlation’), which is a number between -1 and +1 denoting the strength of the relationship between two variables. Say that we wish to study how age is related to self-esteem in a sample of 20 respondents, i.e., as age increases, does self-esteem increase, decrease, or remain unchanged? If self-esteem increases, then we have a positive correlation between the two variables; if self-esteem decreases, then we have a negative correlation; and if it remains the same, we have a zero correlation. To calculate the value of this correlation, consider the hypothetical dataset shown in Table 14.1.
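One common way to compute such a correlation is the Pearson product-moment formula, sketched below. Table 14.1 is not reproduced in this section, so the age and self-esteem values here are invented for illustration and cover six respondents rather than 20; the function name is likewise an assumption.

```python
import math


def pearson_r(x, y):
    """Pearson correlation: covariation of x and y scaled by their spreads."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined when a variable is constant")
    return sxy / math.sqrt(sxx * syy)


# Hypothetical data: self-esteem tends to rise with age in this sample.
age = [21, 25, 30, 35, 40, 45]
self_esteem = [3.2, 3.5, 3.4, 3.9, 4.1, 4.0]
r = pearson_r(age, self_esteem)
print(round(r, 2))
```

A result close to +1 or -1 indicates a strong linear relationship, while a result near 0 indicates little or no linear relationship.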

After computing bivariate correlation, researchers are often interested in knowing whether the correlation is significant (i.e., a real one) or caused by mere chance. Answering such a question would require testing the following hypothesis:

Social Science Research: Principles, Methods and Practices (Revised edition) Copyright © 2019 by Anol Bhattacherjee is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License, except where otherwise noted.


Descriptive Research: Definition, Characteristics, Methods + Examples

Suppose an apparel brand wants to understand the fashion purchasing trends among New York’s buyers. It must conduct a demographic survey of the specific region, gather population data, and then conduct descriptive research on this demographic segment.

The study will then uncover details on “what is the purchasing pattern of New York buyers,” but will not cover any investigative information about “why” the patterns exist, because for an apparel brand trying to break into this market, understanding the nature of the market is the study’s main goal. Let’s talk about it.

What is descriptive research?

Descriptive research is a research method describing the characteristics of the population or phenomenon studied. This descriptive methodology focuses more on the “what” of the research subject than the “why” of the research subject.

The method primarily focuses on describing the nature of a demographic segment without focusing on “why” a particular phenomenon occurs. In other words, it “describes” the research subject without covering “why” it happens.

Characteristics of descriptive research

The term descriptive research then refers to research questions, the design of the study, and data analysis conducted on that topic. We call it an observational research method because none of the research study variables are influenced in any capacity.

Some distinctive characteristics of descriptive research are:

- Quantitative research: It is a quantitative research method that attempts to collect quantifiable information for statistical analysis of the population sample. It is a popular market research tool that allows us to collect and describe the demographic segment’s nature.

- Uncontrolled variables: In it, none of the variables are influenced in any way. This uses observational methods to conduct the research. Hence, the nature of the variables or their behavior is not in the hands of the researcher.

- Cross-sectional studies: It is generally a cross-sectional study where different sections belonging to the same group are studied.

- The basis for further research: Researchers can investigate the data collected and analyzed in descriptive research further using different research techniques. The data can also help point towards the types of research methods to use for subsequent research.

Applications of descriptive research with examples

A descriptive research method can be used in multiple ways and for various reasons. Before getting into any survey, though, the survey goals and survey design are crucial. Even when these steps are followed, there is no guarantee that the research outcome will be met. Below are some ways organizations currently use descriptive research:

- Define respondent characteristics: The aim of using close-ended questions is to draw concrete conclusions about the respondents. This could be the need to derive patterns, traits, and behaviors of the respondents. It could also be to understand respondents’ attitudes or opinions about the phenomenon. For example, a study might ask how many hours per week millennials spend browsing the internet. All this information helps the organization conducting the research to make informed business decisions.

- Measure data trends: Researchers measure data trends over time with a descriptive research design’s statistical capabilities. Suppose an apparel company surveys different demographics, such as the 24-35 and 36-45 age groups, about the launch of a new autumn wear range. If one of those groups doesn’t take well to the new launch, that provides insight into which clothes are liked and which are not. The brand can then drop the clothes and apparel that customers don’t like.

- Conduct comparisons: Organizations also use a descriptive research design to understand how different groups respond to a specific product or service. For example, an apparel brand creates a survey asking general questions that measure the brand’s image. The same study also asks demographic questions like age, income, gender, geographical location, geographic segmentation, etc. This consumer research helps the organization understand what aspects of the brand appeal to the population and what aspects do not. It also helps make product or marketing fixes or even create a new product line to cater to high-growth potential groups.

- Validate existing conditions: Researchers widely use descriptive research to help ascertain the research object’s prevailing conditions and underlying patterns. Due to the non-invasive research method and the use of quantitative observation and some aspects of qualitative observation, researchers observe each variable and conduct an in-depth analysis. Researchers also use it to validate any existing conditions that may be prevalent in a population.

- Conduct research at different times: The analysis can be conducted at different periods to ascertain any similarities or differences. This also allows any number of variables to be evaluated. For verification, studies on prevailing conditions can also be repeated to draw trends.

Advantages of descriptive research

Some of the significant advantages of descriptive research are:

- Data collection: A researcher can conduct descriptive research using specific methods like observational method, case study method, and survey method. Between these three, all primary data collection methods are covered, which provides a lot of information. This can be used for future research or even for developing a hypothesis for your research object.

- Varied: Since the data collected is qualitative and quantitative, it gives a holistic understanding of a research topic. The information is varied, diverse, and thorough.

- Natural environment: Descriptive research allows for the research to be conducted in the respondent’s natural environment, which ensures that high-quality and honest data is collected.

- Quick to perform and cheap: As the sample size is generally large in descriptive research, the data collection is quick to conduct and is inexpensive.

Descriptive research methods

There are three distinctive methods to conduct descriptive research. They are:

Observational method

The observational method is the most effective method to conduct this research, and researchers make use of both quantitative and qualitative observations.

A quantitative observation is the objective collection of data primarily focused on numbers and values. The term suggests “associated with, of or depicted in terms of a quantity.” Results of quantitative observation are derived using statistical and numerical analysis methods. It implies observation of any entity associated with a numeric value such as age, shape, weight, volume, scale, etc. For example, the researcher can track if current customers will refer the brand using a simple Net Promoter Score question.

Qualitative observation doesn’t involve measurements or numbers but instead just monitoring characteristics. In this case, the researcher observes the respondents from a distance. Since the respondents are in a comfortable environment, the characteristics observed are natural and effective. In a descriptive research design, the researcher can choose to be either a complete observer, an observer as a participant, a participant as an observer, or a full participant. For example, in a supermarket, a researcher can from afar monitor and track the customers’ selection and purchasing trends. This offers a more in-depth insight into the purchasing experience of the customer.

Case study method

Case studies involve in-depth research and study of individuals or groups. Case studies lead to a hypothesis and widen a further scope of studying a phenomenon. However, case studies should not be used to determine cause and effect as they can’t make accurate predictions because there could be a bias on the researcher’s part. The other reason why case studies are not a reliable way of conducting descriptive research is that there could be an atypical respondent in the survey. Describing them leads to weak generalizations and moving away from external validity.

Survey research

In survey research, respondents answer through surveys, questionnaires, or polls. They are a popular market research tool to collect feedback from respondents. A study to gather useful data should have the right survey questions. It should be a balanced mix of open-ended questions and close-ended questions. The survey method can be conducted online or offline, making it the go-to option for descriptive research where the sample size is enormous.

Examples of descriptive research

Some examples of descriptive research are:

- A specialty food group launching a new range of barbecue rubs would like to understand what flavors of rubs are favored by different people. To understand the preferred flavor palette, they conduct this type of research study using various methods like observational methods in supermarkets. Surveying customers while collecting in-depth demographic information also offers insights into the preferences of different markets. This can also help tailor the rubs and spreads to the meats preferred in each demographic. Conducting this type of research helps the organization tweak its business model and amplify marketing in core markets.

- Another example of where this research can be used is if a school district wishes to evaluate teachers’ attitudes about using technology in the classroom. By conducting surveys and observing teachers’ comfort with technology through observational methods, the researcher can gauge whether a full-fledged implementation is likely to face issues. This also helps in understanding if the students are impacted in any way by this change.

Some other research problems and research questions that can lead to descriptive research are:

- Market researchers want to observe the habits of consumers.

- A company wants to evaluate the morale of its staff.

- A school district wants to understand if students will access online lessons rather than textbooks.

- An organization wants to understand if its wellness questionnaire programs enhance the overall health of its employees.


Likert scale interpretation: How to analyze the data with examples

- January 10, 2022


What are Likert scale and Likert scale questionnaires?

- Likert scale examples: The types and uses of satisfaction scale questions

- Likert scale interpretation: Analyzing Likert scale/type data

- How to use filtering and cross tabulation for your Likert scale analysis

- 1. Compare new and old information to ensure a better understanding of progress

- 2. Compare information with other types of data and objective indicators

- 3. Make a visual representation: Help the audience understand the data better

- 4. Focus on insights instead of just the numbers

- How to analyze Likert scale data

- Likert scale interpretation example overview

- Interpreting Likert scale results

- Explore useful SurveyPlanet features for data analyzing

Likert scaling consists of questions that are answerable with a statement that is scaled with 5 or 7 options that the respondent can choose from.

Have you ever answered a survey question that asks to what extent you agree with a statement? The answers were probably: strongly disagree, disagree, neither disagree nor agree, agree, or strongly agree. Well, that’s a Likert question.

Regardless of the name—a satisfaction scale, an agree-disagree scale, or a strongly agree scale—the format is pretty powerful and a widely used means of survey measurement, primarily used in customer experience and employee satisfaction surveys.

In this article, we’ll answer some common questions about Likert scales and how they are used, though most importantly Likert scale scoring and interpretation. Learn our advice about how to benefit from conclusions drawn from satisfaction surveys and how to use them to implement changes that will improve your business!

A Likert scale usually contains 5 or 7 response options—ranging from strongly agree to strongly disagree—with differing nuances between these and a mandatory mid-point of neither agree nor disagree (for those who hold no opinion). The Likert-type scale got its name from psychologist Rensis Likert, who developed it in 1932.

Likert scales are a type of closed-ended question. Like common yes-or-no questions, they allow participants to choose from a predefined set of answers, as opposed to being able to phrase their opinions in their own words. But unlike yes-or-no questions, satisfaction-scale questions allow for the measurement of people’s views on a specific topic with a greater degree of nuance.

Since these questions are predefined, it’s essential to include questions that are as specific and understandable as possible.

Answer presets can be numerical, descriptive, or a combination of both numbers and words. Responses range from one extreme attitude to the other, while always including a neutral opinion in the middle.

A Likert scale question is one of the most commonly used in surveys to measure how satisfied a customer or employee is. The most common example of their use is in customer satisfaction surveys, which are an integral part of market research.

Are satisfaction-scale questions the best survey questions?

Maybe you’ve answered one too many customer satisfaction surveys with Likert scales in your lifetime and now consider them way too generic and bland. But, the fact is they are one of the most popular types of survey questions.

First of all, they are pretty appealing to respondents because they are easy to understand and do not require too much thinking to answer.

And, while binary (yes-or-no) questions offer only two response options (i.e., if a customer is satisfied with your products and services or not), satisfaction-scale questions provide a clearer understanding of customers’ thoughts and opinions.

By using well-prepared additional questions, questions about particular products or service segments can be asked. That way, getting to the bottom of customer dissatisfaction is possible, making it easier to find a way to address their complaints and improve their experience.

Such surveys enable figuring out why customers are satisfied with one product but not another. This empowers the recognition of products and service areas that customers are confident in while helping to find ways to improve others.

When it comes to analyzing and interpreting survey scale results, Likert questions are helpful because they provide quantitative data that is easy to code and interpret. Results can also be analyzed through cross-tabulation analysis (we’ll get back to that later).

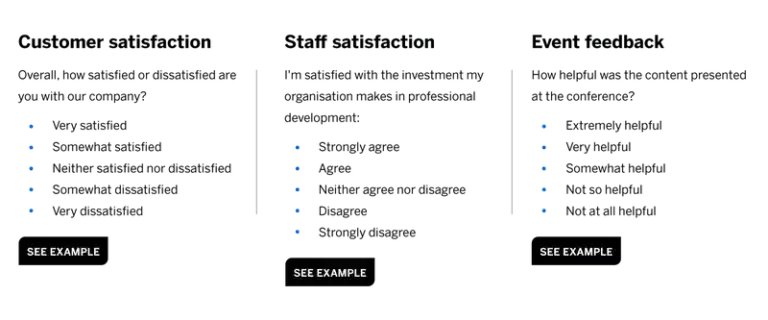

Likert questions can be used for many kinds of research. For example, determine the level of customer satisfaction with the latest product, assess employee satisfaction, or get post-event feedback from attendees after a specific event.

Questions can take different forms, but the most common is the 5-point or 7-point Likert scale question. There are 4-point and even 10-point Likert scale questions as well.

How to choose from these options?

The most common is the 5-point question. Most researchers advise the use of at least five response options (if not more). This ensures that respondents have enough choices to express their opinion as accurately as possible.

Some researchers suggest always using an even number of responses so respondents are not presented with a neutral answer, therefore having to “choose a side.” This is to avoid a tepid response even when respondents have an opinion, which is one of the most common types of errors in surveying.

Likert scale interpretation involves analyzing the responses to understand the participants’ attitudes toward the statements.

It’s important to note that Likert scales provide a quantitative representation of attitudes but do not necessarily capture underlying reasoning or motivations. Qualitative methods, such as interviews or open-ended questions, are often used in conjunction with Likert scales to gain a deeper understanding of participants’ perspectives.

Overall, Likert scale interpretation of data involves analyzing the numerical ratings, considering the directionality of the scale, examining central tendency and variability, identifying response patterns, and conducting comparative analyses to draw meaningful conclusions about people’s attitudes or opinions.

How to analyze satisfaction survey scale questions

For a survey to be its best, how gathered information is analyzed is as important as the gathering itself. That’s why we’ll now turn to the most effective ways of analyzing responses from satisfaction survey scales.

When using Likert scale questions, the analysis tools used are mean, median, and mode. These help better understand the information collected.

The mean (or average) is calculated by adding all the response values and dividing this sum by the total number of responses. The median is the middle value of a data set when the values are sorted, while the mode is the value that occurs most often.

Some other useful ways of analyzing information are filtering and cross tabulation.

Using a filter, the responses of one particular group of respondents are focused upon and the rest filtered out. For example, how female customers rate a product can be determined by filtering out male respondents, while the ratings of customers aged 20 to 30 can be isolated by filtering out respondents outside that age range.

Cross tabulation, on the other hand, is a method to compare two sets of information in one chart and analyze the relationship between multiple variables. In other words, it can show the responses of a particular subgroup while it can also be combined with other subgroups.

Say you want to look at the responses of unemployed female respondents aged 20 to 30. By using cross tabulation, all three parameters (gender, age, and employment status) can be combined and the responses of that subgroup examined.
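The sketch below shows one way filtering and cross tabulation might be done on exported survey rows using only the Python standard library. The respondent records, field names, and helper functions are all hypothetical and are not part of any survey tool's API.

```python
from collections import Counter

# Hypothetical exported survey rows; 'rating' is a 1-5 Likert response.
respondents = [
    {"gender": "female", "age": 24, "employed": False, "rating": 4},
    {"gender": "female", "age": 28, "employed": True, "rating": 5},
    {"gender": "male", "age": 35, "employed": True, "rating": 3},
    {"gender": "female", "age": 22, "employed": False, "rating": 2},
    {"gender": "male", "age": 27, "employed": False, "rating": 4},
    {"gender": "female", "age": 41, "employed": True, "rating": 5},
]


def keep(rows, predicate):
    """Filtering: keep only the rows for which the predicate is true."""
    return [row for row in rows if predicate(row)]


def crosstab(rows, row_key, col_key):
    """Cross tabulation: count rows for each (row_key, col_key) value pair."""
    return Counter((row[row_key], row[col_key]) for row in rows)


# Unemployed female respondents aged 20 to 30.
subgroup = keep(
    respondents,
    lambda r: r["gender"] == "female" and 20 <= r["age"] <= 30 and not r["employed"],
)
subgroup_ratings = [r["rating"] for r in subgroup]

# Gender by rating for the full sample.
gender_by_rating = crosstab(respondents, "gender", "rating")
print(subgroup_ratings, gender_by_rating[("female", 5)])
```

With larger exports, a data-analysis library would normally replace these helpers, but the logic of selecting a subgroup and counting value pairs stays the same.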

If this all sounds confusing, SurveyPlanet luckily doesn’t just offer great examples of surveys and the ability to create custom themes, but also the power to export survey results into several different formats, such as Microsoft Excel and Word, CSV, PDF, and JSON files.

How to interpret Likert scale data?

When information has been gathered and analyzed, it’s time to present it to stakeholders. This is the final stage of research. Analyzing the results of Likert scale questionnaires is a vital way to improve services and grow a business. Presenting the results correctly is a key step.

Here’s how to develop a clear goal and present it understandably and engagingly.

Compare the newly obtained information with data gathered from previous surveys. Sure, information gathered from the latest research is valuable on its own, but not helpful enough. For example, it tells you if customers are currently satisfied with products or services, but not whether things are better or worse than last year.

The key to improving customer service—and thus developing a business—is comparing current responses with previous ones. This is called longitudinal analysis. It can provide valuable insights about how a business is developing, if things are improving or declining, and what issues need to be solved.

If there is no previous data, then start collecting feedback immediately in order to compare results with future surveys. This is called benchmarking. It helps keep track of progress and how products, services, and overall customer satisfaction change over time.

The most crucial information to compare new findings with is previous surveys. But it is highly recommended to constantly compare findings with other types of information, such as Google Analytics, sales data, and other objective indicators.

Another good practice is comparing qualitative with quantitative data. The more information, the more accurate the research results, which will help better convey findings to stakeholders. This will also improve business decision-making, strengthening the experiences of customers and employees.

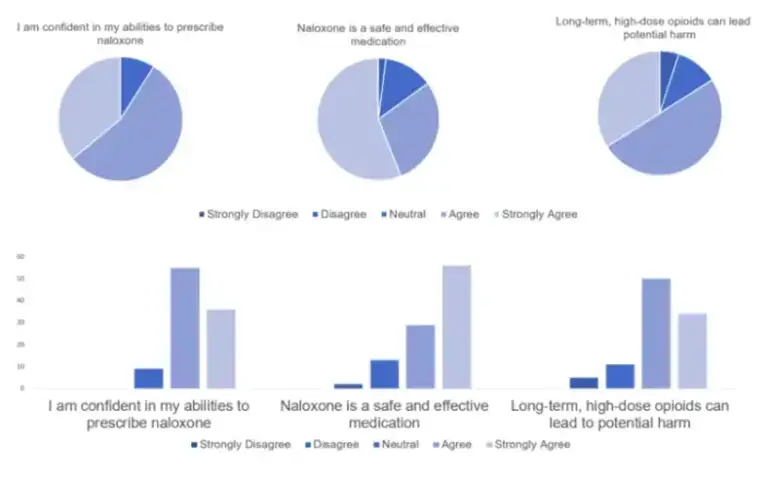

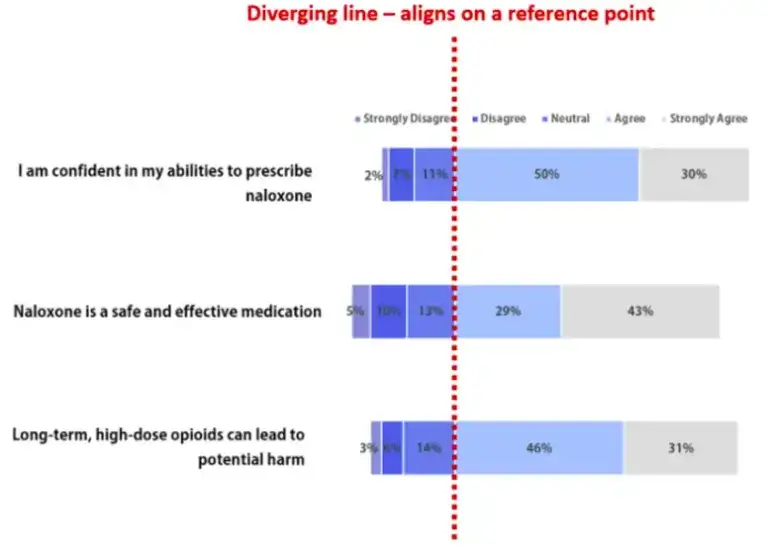

Numbers are easier to understand when suitable visual representation is provided. However, it is essential to use a medium that adequately highlights key findings.

Line graphs, pie charts, bar charts, histograms, scatterplots, infographics, and many more techniques can be used.

But don’t forget good old tables. Even if they’re not so visually dynamic and a little harder on the eyes, some information is simply best presented in tables, especially numerical data.

Working with all of these options, more satisfactory presentations can be created.

When presenting findings to stakeholders, don’t just focus on the numbers. Instead, highlight the conclusions about customer or employee satisfaction drawn from the research. That way, everyone present at the meeting will gain a deeper understanding of what you’re trying to convey.

A valuable and exciting piece of advice is to focus on the story the numbers tell. Don’t simply list the numbers collected. Instead, use relevant examples and connect all the information, building on each dataset to make a meaningful whole.

Define and describe problems that need to be solved in engaging and easy-to-understand terms so that listeners don’t have a hard time understanding what is being shared. Include suggestions that could improve, for example, customer experience outcomes. It is also important to share findings with the relevant teams, listen to their perspectives, and find solutions together.

An example of Likert scale data analysis and interpretation

Let’s consider an example scenario and go through the steps of analyzing and interpreting Likert scale data.

Scenario: A company conducts an employee satisfaction survey using a Likert scale to measure employees’ attitudes toward various aspects of their work environment. The scale ranges from 1 (Strongly Disagree) to 5 (Strongly Agree).

Item 1: “I feel valued and appreciated at work.”

Item 2: “My workload is manageable.”

Item 3: “I receive adequate training and support.”

Item 4: “I have opportunities for growth and advancement.”

Item 5: “My supervisor provides constructive feedback.”

Step 1: Calculate mean scores by summing up the responses and dividing by the number of respondents.

Item 1: Mean score = (4+5+5+4+3)/5 = 4.2

Item 2: Mean score = (3+4+3+3+4)/5 = 3.4

Item 3: Mean score = (4+4+5+4+3)/5 = 4.0

Item 4: Mean score = (3+4+3+2+4)/5 = 3.2

Item 5: Mean score = (4+3+4+3+5)/5 = 3.8
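The Step 1 arithmetic can be reproduced in a few lines of Python. This is a minimal sketch: the scores are the ones shown in the sums above, while the names `responses` and `item_means` are illustrative assumptions rather than part of any survey tool.

```python
from statistics import mean

# Scores copied from the sums in Step 1 (five respondents per item).
responses = {
    "Item 1": [4, 5, 5, 4, 3],
    "Item 2": [3, 4, 3, 3, 4],
    "Item 3": [4, 4, 5, 4, 3],
    "Item 4": [3, 4, 3, 2, 4],
    "Item 5": [4, 3, 4, 3, 5],
}


def item_means(data):
    """Return the mean score per item, rounded to one decimal place."""
    return {item: round(mean(scores), 1) for item, scores in data.items()}


for item, score in item_means(responses).items():
    print(f"{item}: mean score = {score:.1f}")
```

Keeping the raw scores in one dictionary means the same data can be reused for the later steps instead of being retyped.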

Step 2: Assess central tendency by looking at the distribution of responses to identify the most frequent response or central point.

Item 1: 4 (Agree) and 5 (Strongly Agree) are tied as the most frequent responses (two each).

Item 2: 3 (Neutral) is the most frequent response.

Item 3: 4 (Agree) is the most frequent response.

Item 4: 3 (Neutral) and 4 (Agree) are tied as the most frequent responses (two each).

Item 5: 3 (Neutral) and 4 (Agree) are tied as the most frequent responses (two each).
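With only five respondents per item, ties for the most frequent response are common, so it helps to report every mode rather than a single value. The sketch below uses `statistics.multimode` (available from Python 3.8) on the Step 1 scores; the helper name `item_modes` is an assumption for illustration. For these scores, Items 1, 4, and 5 each have two tied modes.

```python
from statistics import multimode

# Scores copied from the sums in Step 1 (five respondents per item).
responses = {
    "Item 1": [4, 5, 5, 4, 3],
    "Item 2": [3, 4, 3, 3, 4],
    "Item 3": [4, 4, 5, 4, 3],
    "Item 4": [3, 4, 3, 2, 4],
    "Item 5": [4, 3, 4, 3, 5],
}


def item_modes(data):
    """Return every most-frequent score per item, sorted, so ties stay visible."""
    return {item: sorted(multimode(scores)) for item, scores in data.items()}


for item, modes in item_modes(responses).items():
    note = " (tie)" if len(modes) > 1 else ""
    print(f"{item}: most frequent = {modes}{note}")
```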

Step 3: Consider variability by assessing the range or spread of responses to understand the diversity of opinions.

Item 1: Range = 5-3 = 2 (relatively low variability)

Item 2: Range = 4-3 = 1 (low variability)

Item 3: Range = 5-3 = 2 (relatively low variability)

Item 4: Range = 4-2 = 2 (relatively low variability)

Item 5: Range = 5-3 = 2 (relatively low variability)
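The ranges in Step 3 are simply the highest score minus the lowest score for each item. A minimal sketch, assuming the same scores as Step 1 and an illustrative helper named `item_ranges`:

```python
# Scores copied from the sums in Step 1 (five respondents per item).
responses = {
    "Item 1": [4, 5, 5, 4, 3],
    "Item 2": [3, 4, 3, 3, 4],
    "Item 3": [4, 4, 5, 4, 3],
    "Item 4": [3, 4, 3, 2, 4],
    "Item 5": [4, 3, 4, 3, 5],
}


def item_ranges(data):
    """Return max minus min per item as a simple measure of spread."""
    return {item: max(scores) - min(scores) for item, scores in data.items()}


for item, spread in item_ranges(responses).items():
    print(f"{item}: range = {spread}")
```

The range depends only on the two most extreme answers, so on a five-point scale it is a coarse indicator; it is best read alongside the full distribution of responses.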

Step 4: Identify response patterns by looking for consistent agreement or disagreement across items or patterns of response clusters.

Step 5: Comparative analysis of responses among different groups, such as other departments or job positions, to identify attitude variations.

In this example, there is a pattern of agreement on items related to feeling valued at work (Item 1), receiving training and support (Item 3), and receiving constructive feedback (Item 5). However, there is a relatively neutral response pattern for workload manageability (Item 2) and growth opportunities (Item 4).

For example, you could compare responses between different departments to see if there are significant differences in employee satisfaction levels.
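A group comparison of this kind can be sketched as follows. The department names and scores here are hypothetical, invented purely for illustration (the example survey above does not break responses down by department), and `median_by_group` is an assumed helper name.

```python
from collections import defaultdict
from statistics import median

# Hypothetical (department, score) pairs for a single item.
# These values are NOT taken from the survey example above.
records = [
    ("Sales", 4), ("Sales", 5), ("Sales", 4),
    ("Engineering", 3), ("Engineering", 2), ("Engineering", 3),
]


def median_by_group(rows):
    """Group scores by department and return the median score of each group."""
    groups = defaultdict(list)
    for group, score in rows:
        groups[group].append(score)
    return {group: median(scores) for group, scores in groups.items()}


for department, value in median_by_group(records).items():
    print(f"{department}: median score = {value}")
```

This only describes the groups. Deciding whether a difference is statistically significant is a separate step; for ordinal data a rank-based test such as the Mann-Whitney U test is a common choice.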

Based on the analysis, employees feel valued and appreciated at work (Item 1) and perceive adequate training and support (Item 3). However, there may be room for improvement regarding workload manageability (Item 2), opportunities for growth (Item 4), and the provision of constructive feedback (Item 5).

The relatively low variability across items suggests moderate agreement within the group. However, the neutral response pattern for workload manageability and opportunities for growth may indicate areas that require attention to enhance employee satisfaction.

Likert scales are a highly effective way of collecting data on attitudes and opinions. They help you gain a deeper understanding of customers’ or employees’ opinions and needs.


Likert Scale

- Reference work entry

- First Online: 01 January 2022

- pp 2938–2941


- Takashi Yamashita 3 &

- Roberto J. Millar 4


Likert-type scale; Rating scale

Likert scaling is one of the most fundamental and frequently used assessment strategies in social science research (Joshi et al. 2015). A social psychologist, Rensis Likert (1932), developed the Likert scale to measure attitudes. Although attitudes and opinions had been popular research topics in the social sciences, the measurement of these concepts was not established until this time. In a groundbreaking study, Likert (1932) introduced this new approach of measuring attitudes toward internationalism with a 5-point scale – (1) strongly approve, (2) approve, (3) undecided, (4) disapprove, and (5) strongly disapprove. For example, one of nine internationalism scale items measured attitudes toward statements like, “All men who have the opportunity should enlist in the Citizen’s Military Training Camps.” Based on the survey of 100 male students from one university, Likert showed the sound psychometric properties (i.e., validity and...


Baker TA, Buchanan NT, Small BJ, Hines RD, Whitfield KE (2011) Identifying the relationship between chronic pain, depression, and life satisfaction in older African Americans. Res Aging 33(4):426–443


Bishop PA, Herron RL (2015) Use and misuse of the Likert item responses and other ordinal measures. Int J Exerc Sci 8(3):297

Carifio J, Perla R (2008) Resolving the 50-year debate around using and misusing Likert scales. Med Educ 42(12):1150–1152

DeMaris A (2004) Regression with social data: modeling continuous and limited response variables. Wiley, Hoboken

Femia EE, Zarit SH, Johansson B (1997) Predicting change in activities of daily living: a longitudinal study of the oldest old in Sweden. J Gerontol 52B(6):P294–P302. https://doi.org/10.1093/geronb/52B.6.P294


Gomez RG, Madey SF (2001) Coping-with-hearing-loss model for older adults. J Gerontol 56(4):P223–P225. https://doi.org/10.1093/geronb/56.4.P223

Joshi A, Kale S, Chandel S, Pal D (2015) Likert scale: explored and explained. Br J Appl Sci Technol 7(4):396

Kong J (2017) Childhood maltreatment and psychological well-being in later life: the mediating effect of contemporary relationships with the abusive parent. J Gerontol 73(5):e39–e48. https://doi.org/10.1093/geronb/gbx039

Kuzon W, Urbanchek M, McCabe S (1996) The seven deadly sins of statistical analysis. Ann Plast Surg 37:265–272

Likert R (1932) A technique for the measurement of attitudes. Arch Psychol 22(140):1–55

Pruchno RA, McKenney D (2002) Psychological well-being of black and white grandmothers raising grandchildren: examination of a two-factor model. J Gerontol 57(5):P444–P452. https://doi.org/10.1093/geronb/57.5.P444

Sullivan GM, Artino AR Jr (2013) Analyzing and interpreting data from Likert-type scales. J Grad Med Educ 5(4):541–542

Trochim WM, Donnelly JP, Arora K (2016) Research methods: the essential knowledge base. Cengage Learning, Boston


Author information

Authors and affiliations.

Department of Sociology, Anthropology, and Health Administration and Policy, University of Maryland Baltimore County, Baltimore, MD, USA

Takashi Yamashita

Gerontology Doctoral Program, University of Maryland Baltimore, Baltimore, MD, USA

Roberto J. Millar


Corresponding author

Correspondence to Takashi Yamashita.

Editor information

Editors and affiliations.

Population Division, Department of Economics and Social Affairs, United Nations, New York, NY, USA

Department of Population Health Sciences, Department of Sociology, Duke University, Durham, NC, USA

Matthew E. Dupre

Section Editor information

Department of Sociology and Center for Population Health and Aging, Duke University, Durham, NC, USA

Kenneth C. Land

Department of Sociology, University of Kentucky, Lexington, KY, USA

Anthony Bardo


© 2021 Springer Nature Switzerland AG

About this entry

Cite this entry.

Yamashita, T., Millar, R.J. (2021). Likert Scale. In: Gu, D., Dupre, M.E. (eds) Encyclopedia of Gerontology and Population Aging. Springer, Cham. https://doi.org/10.1007/978-3-030-22009-9_559


DOI: https://doi.org/10.1007/978-3-030-22009-9_559

Published: 24 May 2022

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-22008-2

Online ISBN: 978-3-030-22009-9

eBook Packages: Social Sciences Reference Module Humanities and Social Sciences Reference Module Business, Economics and Social Sciences


Likert Scale Questionnaire: Examples & Analysis

Saul Mcleod, PhD

Editor-in-Chief for Simply Psychology

BSc (Hons) Psychology, MRes, PhD, University of Manchester

Saul Mcleod, PhD., is a qualified psychology teacher with over 18 years of experience in further and higher education. He has been published in peer-reviewed journals, including the Journal of Clinical Psychology.


Olivia Guy-Evans, MSc

Associate Editor for Simply Psychology

BSc (Hons) Psychology, MSc Psychology of Education

Olivia Guy-Evans is a writer and associate editor for Simply Psychology. She has previously worked in healthcare and educational sectors.


Various kinds of rating scales have been developed to measure attitudes directly (i.e., the person knows their attitude is being studied). The most widely used is the Likert scale (1932).

In its final form, the Likert scale is a five (or seven) point scale that is used to allow an individual to express how much they agree or disagree with a particular statement.

The Likert scale (typically) provides five possible answers to a statement or question that allows respondents to indicate their positive-to-negative strength of agreement or strength of feeling regarding the question or statement.

Example statement: “I believe that ecological questions are the most important issues facing human beings today.”

A Likert scale assumes that the strength/intensity of an attitude is linear, i.e., on a continuum from strongly agree to strongly disagree, and makes the assumption that attitudes can be measured.

For example, each of the five (or seven) responses would have a numerical value that would be used to measure the attitude under investigation.
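Assigning a numerical value to each response can be sketched as a simple lookup. The label wording and the 1 to 5 coding below are assumptions for illustration (a common convention, not something this text prescribes), as are the names `AGREEMENT_CODES` and `code_responses`.

```python
# Assumed five-point agreement coding; the exact labels and the direction
# of the coding are a design choice and should be fixed before data entry.
AGREEMENT_CODES = {
    "Strongly disagree": 1,
    "Disagree": 2,
    "Neutral": 3,
    "Agree": 4,
    "Strongly agree": 5,
}


def code_responses(answers, codes=AGREEMENT_CODES):
    """Convert verbal responses to their numeric codes.

    Raises KeyError on an unknown label, so typos are caught early
    instead of being silently coded as something else.
    """
    return [codes[answer] for answer in answers]


print(code_responses(["Agree", "Neutral", "Strongly agree"]))
```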

Examples of Items for Surveys

In addition to measuring statements of agreement, Likert scales can measure other variations such as frequency, quality, importance, and likelihood.

Analyzing Data

The response categories in the Likert scales have a rank order, but the intervals between values cannot be presumed equal. Therefore, the mean (and standard deviation) are inappropriate for ordinal data (Jamieson, 2004).

Statistics you can use are:

- Summarize using a median or a mode (not a mean as it is ordinal scale data); the mode is probably the most suitable for easy interpretation.

- Display the distribution of observations in a bar chart (it can’t be a histogram because the data is not continuous).
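The two recommendations above can be sketched together: summarise an item with its median and mode(s), and tabulate how many respondents chose each category as the basis for a bar chart. The scores are hypothetical, and `summarize` and `text_bar_chart` are illustrative names; the text chart stands in for a plotted bar chart.

```python
from collections import Counter
from statistics import median, multimode

# Hypothetical responses to one five-point item (1 = lowest, 5 = highest).
scores = [4, 4, 5, 4, 3]


def summarize(values, points=5):
    """Return the median, all modes, and a count for every response category."""
    counts = Counter(values)
    return {
        "median": median(values),
        "modes": sorted(multimode(values)),
        # Include categories nobody chose so the chart shows the full scale.
        "counts": {category: counts.get(category, 0) for category in range(1, points + 1)},
    }


def text_bar_chart(counts):
    """Render one bar per response category using '#' characters."""
    return "\n".join(f"{category}: {'#' * n} ({n})" for category, n in counts.items())


summary = summarize(scores)
print("median:", summary["median"], "modes:", summary["modes"])
print(text_bar_chart(summary["counts"]))
```

Each bar corresponds to one discrete response category, which is why a bar chart rather than a histogram fits this kind of data.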

Critical Evaluation

Likert Scales have the advantage that they do not expect a simple yes / no answer from the respondent but rather allow for degrees of opinion and even no opinion at all.

Therefore, quantitative data is obtained, which means that the data can be analyzed relatively easily.

Offering anonymity on self-administered questionnaires should further reduce social pressure and thus may likewise reduce social desirability bias.

Paulhus (1984) found that more desirable personality characteristics were reported when people were asked to write their names, addresses, and telephone numbers on their questionnaire than when they were told not to put identifying information on the questionnaire.

Limitations

However, like all surveys, the validity of the Likert scale attitude measurement can be compromised due to social desirability.

This means that individuals may lie to put themselves in a positive light. For example, if a Likert scale was measuring discrimination, who would admit to being racist?

Bowling, A. (1997). Research Methods in Health. Buckingham: Open University Press.

Burns, N., & Grove, S. K. (1997). The Practice of Nursing Research Conduct, Critique, & Utilization. Philadelphia: W.B. Saunders and Co.

Jamieson, S. (2004). Likert scales: how to (ab)use them. Medical Education, 38(12), 1217-1218.

Likert, R. (1932). A Technique for the Measurement of Attitudes. Archives of Psychology, 140, 1–55.

Paulhus, D. L. (1984). Two-component models of socially desirable responding. Journal of Personality and Social Psychology, 46(3), 598.


Likert Scale Research: A Comprehensive Guide

The Likert scale is a versatile research tool for gathering quantitative data about people’s attitudes and opinions. This comprehensive guide provides a thorough overview of the use of the Likert scale in research, from its historical origins to modern applications. We begin with an exploration of the history and development of this powerful tool, followed by discussions on how it can be applied in different types of studies. Next, we will explore the advantages and disadvantages associated with using a Likert-type survey as well as best practices for designing one. Finally, readers are provided examples demonstrating appropriate analysis techniques that can be used when analyzing data collected through these surveys. With this detailed guide at their disposal, researchers have all they need to successfully employ and leverage the full potential offered by conducting robust scientific inquiry based upon responses gathered via Likert scales.

- I. Introduction to Likert Scale Research

- II. History of the Likert Scale

- III. Designing a Likert Survey

- IV. Benefits and Limitations of Using the Likert Scale for Research Purposes

- V. Analysing Data Collected from a Likert Survey

- VI. Improving Response Rates When Administering a Likert Survey

- VII. Conclusion: Summary of Key Points

Likert Scale: Likert scale research is a powerful tool for understanding the attitudes and beliefs of individuals in various areas. The scale measures responses on a five-point, seven-point, or nine-point numerical continuum that ranges from “Strongly Agree” to “Strongly Disagree.” It allows researchers to accurately gauge people’s feelings about an issue or topic by asking them to choose one option out of many.

By using this type of survey instrument, researchers can explore differences between groups in terms of opinions on certain topics. This information can be used for academic research papers as well as marketing initiatives. For instance, a researcher might use the Likert scale when studying how different types of customers respond differently towards advertising campaigns.

- The data obtained through such surveys can help businesses better tailor their strategies so they are more effective at reaching potential customers.

- In academia it may also provide useful insight into why some student populations have stronger feelings than others concerning particular issues facing education today.

Exploring the Use of Likert Scales

The use of a survey instrument to measure attitudes dates back as far as 1932, when social scientist Rensis Likert published his landmark paper “A Technique for the Measurement of Attitudes”, which introduced what we now refer to as the Likert scale. The most widely known version asks respondents to rate their position on a given statement along a continuum from strongly agree to strongly disagree.

This technique has become popular among researchers over time due to its accuracy at measuring sentiment about specific issues. It can be used in both structured and unstructured surveys: respondents provide either quantitative answers, such as numerical ratings (1-5), or qualitative answers, such as descriptive statements that the researcher then scores using predetermined criteria. The scale also works well with large sample sizes, so it is often used in studies with many participants, where results are more accurate than they would be with smaller samples. Finally, these scales are easy to answer even for people unfamiliar with statistics or research methods, which makes them well suited to reaching broad populations who may have no previous experience of participating in studies on topics such as psychology or sociology.

Choosing the Right Survey Format

When designing a survey, it is important to choose the right format. Likert scale surveys are one of the most popular methods for measuring people’s attitudes and opinions on a particular topic. A Likert survey typically involves providing respondents with several statements related to a specific area or subject and asking them to indicate their level of agreement or disagreement with each statement using an anchored rating scale. This type of survey can be used in many research contexts, such as market research studies and academic papers.

To create an effective Likert-scale survey, there are certain key elements that should be considered. The questions should cover all points within your study’s scope; they must also be worded clearly so that respondents will understand what is being asked of them. Furthermore, when constructing a five-point (or seven-point) rating scale for each question – which provides gradations between strong agreement/disagreement – make sure you use clear labels at either end (e.g., Strongly Agree / Strongly Disagree). As demonstrated by Yeung et al. (2017), this step allows researchers to measure nuances in opinion more accurately than if only two ratings were offered.

- Ensure all questions relate back to your original goal.

- Make sure wording of each question is precise yet concise.

Advantages of the Likert Scale

The Likert scale is a well-established tool used to collect survey data in research. It has long been valued for its simplicity and ease of use, as well as its ability to effectively measure responses across multiple variables or questions. The structure of the scale – ranging from ‘strongly agree’ to ‘strongly disagree’ – allows researchers to quickly and reliably capture attitudes towards any particular topic or issue under investigation. Moreover, recent studies suggest that this type of measurement can be advantageous when compared with other approaches such as semantic differential scales (Smith & Johnson, 2020).

Another advantage is its flexibility: it can be used in both quantitative and qualitative research settings without significant adjustment or reconfiguration (Matzler et al., 2018). In addition, if the study design calls for it, a traditional 5-point Likert item can easily be extended to a 7-point item to suit the investigator’s own criteria and objectives. This makes the scale well suited to large surveys that must collect data from many respondents within a short time frame while maintaining accuracy throughout the process (Matzler et al., 2018).

Limitations

Despite these advantages, the technique has certain limitations compared with other established methods such as interviews and focus groups. Self-reported measures like Likert scales rely heavily on respondent honesty, so results may be artificially inflated by social desirability bias: respondents may answer positively because they want to be perceived favourably, or give the response they believe is expected regardless of whether they agree with the statement, rather than saying what they actually think (Mavridis & Zafra‐Gómez, 2017). Additionally, some individuals do not hold strong opinions on specific topics, which makes their views difficult to score accurately with a conventional rating system (Aluya, 2017).

Furthermore, because all information gathered must conform to the rigid, standardised format of a Likert scale instrument, investigators are often unable to capture detailed contextual factors behind individual responses, particularly in high-volume studies involving large sample sizes (Babenko & Babenko, 2016).

Analyzing Survey Results

Quantitative data collected from a Likert survey can provide valuable insight into customer preferences and behavior. The use of a scale with which to measure responses gives us the ability to compare and contrast answers across different groups, allowing for more precise analysis than if respondents were simply asked open-ended questions.

In order to analyze the results of a Likert survey effectively, it is important that researchers understand how ratings are assigned and interpreted. A traditional five-point rating scale may be used in which respondents rate items or statements on an increasing level of agreement (e.g., 1 = strongly disagree; 5 = strongly agree). As outlined by Krosnick & Alwin (1989) in their research paper titled “The Reliability of Trend Estimates From Surveys: The Role Of Question Context”, when using this kind of rating system it is beneficial for researchers to present response options that include both verbal labels and their numerical equivalents.

- This helps eliminate confusion among respondents who may not have experience taking surveys.

- It also increases consistency in scoring between participants as they should all assign similar meanings to each number provided.

Additionally, conducting focus groups before deploying any survey can be useful when trying to identify what types of feedback will best help answer research objectives.

- These types of qualitative methods give investigators the opportunity to gain further context on the topics being discussed within the study.

Practical Strategies

- Offer incentives: Providing incentives such as vouchers or gift cards has been proven to be an effective way of encouraging respondents to complete surveys. This was demonstrated in a recent research paper that found offering participants monetary rewards increased response rates for Likert scale surveys.

- Timely reminders: Follow-up emails sent at regular intervals can help remind people who haven’t responded yet and encourage them to take part. Several studies have shown this is an efficient way of increasing completion rates, especially when the reminder messages are personalised.

Survey Design Tips

Using best practices during survey design helps increase engagement from the respondent and maximises response rate potential.

- Keep it concise: Long questionnaires tend to lead to disengagement and frustration which will negatively impact responses. Consider how essential each section is before adding extra questions.

- Layout matters: Make sure your layout follows good design principles. Use white space, visual cues (such as arrows), and font size changes so that the survey is easy on the eye and instructions do not go unnoticed by your audience.

Summary of Findings

The research conducted using a Likert scale revealed several key insights. Firstly, there is a clear trend among respondents towards favouring the new product launch. Over 70% of survey participants indicated that they would be interested in purchasing it when available. Additionally, there was strong interest in features such as customisation and personalised recommendations, with almost all participants indicating this to some degree or another.

Another finding from the survey results shows that customers are willing to pay more for enhanced services and experiences associated with the products offered by the company; however cost remains an important factor influencing their decision-making process. Furthermore, customer service expectations remain high – satisfaction ratings were generally positive but could still be improved upon.

This article on Likert Scale research is an invaluable resource for anyone looking to conduct their own studies or gain a better understanding of the topic. It provides a comprehensive overview, along with insightful tips and advice from experts in the field. We hope this guide has been helpful in demystifying what can be an intimidating subject and will serve as a useful reference point as researchers delve deeper into conducting surveys using the scale. With continued innovation, we believe that Likert Scale Research will remain at the forefront of survey methodology well into future decades, providing valuable data sets for organizations across all industries.


- Front Psychol

A Review of Key Likert Scale Development Advances: 1995–2019

Andrew T. Jebb

1 Department of Psychological Sciences, Purdue University, West Lafayette, IN, United States

2 Department of Psychology, University of Houston, Houston, TX, United States


Developing self-report Likert scales is an essential part of modern psychology. However, it is hard for psychologists to remain apprised of best practices as methodological developments accumulate. To address this, this current paper offers a selective review of advances in Likert scale development that have occurred over the past 25 years. We reviewed six major measurement journals (e.g., Psychological Methods, Educational and Psychological Measurement) between the years 1995–2019 and identified key advances, ultimately including 40 papers and offering written summaries of each. We supplemented this review with an in-depth discussion of five particular advances: (1) conceptions of construct validity, (2) creating better construct definitions, (3) readability tests for generating items, (4) alternative measures of precision [e.g., coefficient omega and item response theory (IRT) information], and (5) ant colony optimization (ACO) for creating short forms. The Supplementary Material provides further technical details on these advances and offers guidance on software implementation. This paper is intended to be a resource for psychological researchers to be informed about more recent psychometric progress in Likert scale creation.

Introduction

Psychological data are diverse and range from observations of behavior to face-to-face interviews. However, in modern times, one of the most common measurement methods is the self-report Likert scale (Baumeister et al., 2007; Clark and Watson, 2019). Likert scales provide a convenient way to measure unobservable constructs, and published tutorials detailing the process of their development have been highly influential, such as Clark and Watson (1995) and Hinkin (1998) (being cited over 6,500 and 3,000 times, respectively, according to Google Scholar).

Notably, however, it has been roughly 25 years since these seminal papers were published, and specific best-practices have changed or evolved since then. Recently, Clark and Watson (2019) gave an update to their 1995 article, integrating some newer topics into a general tutorial of Likert scale creation. However, scale creation, from defining the construct to testing nomological relationships, is such an extensive process that it is challenging for any paper to give full coverage to each of its stages. The authors were quick to note this themselves several times, e.g., “[w]e have space only to raise briefly some key issues” and “unfortunately we do not have the space to do justice to these developments here” (p. 5). Therefore, a contribution to psychology would be a paper that provides a review of advances in Likert scale development since classic tutorials were published. This paper would not be a general tutorial in scale development like Clark and Watson (1995, 2019), Hinkin (1998), or others. Instead, it would focus on more recent advances and serve as a complement to these broader tutorials.

The present paper seeks to serve as such a resource by reviewing developments in Likert scale creation from the past 25 years. However, given that scale development is such an extensive topic, the limitations of this review should be made very explicit. The first limitations are with regard to scope. This is not a review of psychometrics, which would be impossibly broad, or advances in self-report in general, which would also be unwieldy (e.g., including measurement techniques like implicit measures and forced choice scales). This is a review of the initial development and validation of self-report Likert scales. Therefore, we also excluded measurement topics related to the use of self-report scales, like identifying and controlling for response biases. 1 Although this scope obviously omits many important aspects of measurement, it was necessary to do the review.

Importantly, like Clark and Watson (1995, 2019) and Hinkin (1998), this paper was written at the level of the general psychologist, not methodologists, in order to benefit the field of psychology most broadly. This also meant that our scope was to find articles that were broad enough to apply to most cases of Likert scale development. As a result, we omitted articles, for example, that only discussed measuring certain types of constructs [e.g., Haynes and Lench’s (2003) paper on the incremental validation of new clinical measures].

The second major limitation concerns its objectivity. Performing any review of what is “significant” requires, at a point, making subjective judgment calls. The majority of the papers we reviewed were fairly easy to decide on. For example, we included Simms et al. (2019) because they tackled a major Likert scale issue: the ideal number of response options (as well as the comparative performance of visual analog scales). By contrast, we excluded Permut et al. (2019) because their advance was about monitoring the attention of subjects taking surveys online, not about scale development, per se. However, other papers were more difficult to decide on. Our method of handling this ambiguity is described below, but we do not claim that subjectivity did not play a part in the review process in some way.

Additionally, (a) we did not survey every single journal where advances may have been published 2 and (b) articles published after 2019 were not included. Despite all these limitations, this review was still worth performing. Self-report Likert scales are an incredibly dominant source of data in psychology and the social sciences in general. The divide between methodological and substantive literatures, and between methodologists and substantive researchers (Sharpe, 2013), can increase over time, but it can also be reduced by good communication and dissemination (Sharpe, 2013). The current review is our attempt to bridge, in part, that gap.

To conduct this review, we examined every issue of six major journals related to psychological measurement from January 1995 to December 2019 (inclusive), screening out articles by either title and/or abstract. The full text of any potentially relevant article was reviewed by either the first or second author, and any borderline cases were discussed until a consensus was reached. A PRISMA flowchart of the process is shown in Figure 1 . The journals we surveyed were: Applied Psychological Measurement , Psychological Assessment , Educational and Psychological Measurement , Psychological Methods , Advances in Methods and Practices in Psychological Science , and Organizational Research Methods . For inclusion, our criteria were that the advance had to be: (a) related to the creation of self-report Likert scales (seven excluded), (b) broad and significant enough for a general psychological audience (23 excluded), and (c) not superseded or encapsulated by newer developments (11 excluded). The advances we included are shown in Table 1 , along with a short descriptive summary of each. Scale developers should not feel compelled to use all of these techniques, just those that contribute to better measurement in their context. More specific contexts (e.g., measuring socially sensitive constructs) can utilize additional resources.

Figure 1. PRISMA flowchart of review process.

Table 1. Summary of Likert scale creation developments from 1995–2019.

To supplement this literature review, the remainder of the paper provides a more in-depth discussion of five of these advances that span a range of topics. These were chosen for their importance, uniqueness, or ease of use, and for their lack of general coverage in classic scale creation papers. These are: (1) conceptualizations of construct validity, (2) approaches for creating more precise construct definitions, (3) readability tests for generating items, (4) alternative measures of precision (e.g., coefficient omega), and (5) ant colony optimization (ACO) for creating short forms. These developments are presented in roughly the order in which they occur in the process of scale creation, a schematic diagram of which is shown in Figure 2.

Figure 2. Schematic diagram of Likert scale development (with advances in current paper, bolded).

Conceptualizing Construct Validity

Two views of validity.

Psychologists recognize validity as the fundamental concept of psychometrics and one of the most critical aspects of psychological science (Hood, 2009; Cizek, 2012). However, what is “validity?” Despite the widespread agreement about its importance, there is disagreement about how validity should be defined (Newton and Shaw, 2013). In particular, there are two divergent perspectives on the definition. The first major perspective defines validity not as a property of tests but as a property of the interpretations of test scores (Messick, 1989; Kane, 1992). This view can therefore be called the interpretation camp (Hood, 2009) or validity as construct validity (Cronbach and Meehl, 1955), which is the perspective endorsed by Clark and Watson (1995, 2019) and by the standards set forth by governing agencies for the North American educational and psychological measurement supracommunity (Newton and Shaw, 2013). Construct validity is based on a synthesis and analysis of the evidence that supports a certain interpretation of test scores, so validity is a property of interpretive inferences about test scores (Messick, 1989, p. 13), especially interpreting score meaning (Messick, 1989, p. 17). Because the context of measurement affects test scores (Messick, 1989, pp. 14–15), the results of any validation effort are conditional upon the context and the group characteristics of the sample in which the studies were done, as are claims of validity drawn from these empirical results (Newton, 2012; Newton and Shaw, 2013).

The other major perspective ( Borsboom et al., 2004 ) revivifies one of the oldest and most intuitive definitions of validity: “…whether or not a test measures what it purports to measure” ( Kelley, 1927 , p. 14). In other words, on this view, validity is a property of tests rather than interpretations. Validity is simply whether or not the statement, “test X measures attribute Y,” is true. To be true, it requires (a) that Y exists and (b) that variations in Y cause variations in X ( Borsboom et al., 2004 ). This definition can be called the test validity view and finds ample precedent in psychometric texts ( Hood, 2009 ). However, Clark and Watson (2019) , citing the Standards for Educational and Psychological Testing ( American Educational Research Association et al., 2014 ), reject this conception of validity.

Ultimately, this disagreement does not show any signs of resolving, and interested readers can consult papers that have attempted to integrate or adjudicate on the two views ( Lissitz and Samuelson, 2007 ; Hood, 2009 ; Cizek, 2012 ).

There Aren’t “Types” of Validity; Validity Is “One”

Even though there are stark differences between these two definitions of validity, one thing they do agree on is that there are not different “types” of validity ( Newton and Shaw, 2013 ). Language like “content validity” and “criterion-related validity” is misleading because it implies that their typical analytic procedures produce empirical evidence that does not bear on the central inference of interpreting the score’s meaning (i.e., construct validity; Messick, 1989 , pp. 13–14, 17, 19–21). Rather, there is only (construct) validity, and different validation procedures and types of evidence all contribute to making inferences about score meaning ( Messick, 1980 ; Binning and Barrett, 1989 ; Borsboom et al., 2004 ).

Despite the agreement that validity is a unitary concept, psychologists seem to disagree in practice; as of 2013, there were 122 distinct subtypes of validity (Newton and Shaw, 2013), many of them coined after the fourth edition of the Standards stated that validity-type language was inappropriate (American Educational Research Association et al., 1985). A consequence of speaking this way is that it perpetuates the view (a) that there are independent “types” of validity (b) that entail different analytic procedures to (c) produce corresponding types of evidence that (d) themselves correspond to different categories of inference (Messick, 1989). This is why even speaking of content, construct, and criterion-related “analyses” (e.g., Lawshe, 1985; Landy, 1986; Binning and Barrett, 1989) can be problematic: it misleads researchers into thinking that these produce distinct kinds of empirical evidence that have a direct, one-to-one correspondence to the three broad categories of inferences with which they are typically associated (Messick, 1989).

However, an analytic procedure traditionally associated with a certain “type” of validity can be used to produce empirical evidence for another “type” of validity not typically associated with it. For instance, showing that the focal construct is empirically discriminable from similar constructs would constitute strong evidence for the inference of discriminability ( Messick, 1989 ). However, the researcher could use analyses typically associated with “criterion and incremental validity” ( Sechrest, 1963 ) to investigate discriminability as well (e.g., Credé et al., 2017 ). Thus, the key takeaway is to think not of “discriminant validity” or distinct “types” of validity, but to use a wide variety of research designs and statistical analyses to potentially provide evidence that may or may not support a given inference under investigation (e.g., discriminability). This demonstrates that thinking about validity “types” can be unnecessarily restrictive because it misleads researchers into thinking about validity as a fragmented concept ( Newton and Shaw, 2013 ), leading to negative downstream consequences in validation practice.

Creating Clearer Construct Definitions

Ensuring concept clarity.

Defining the construct one is interested in measuring is a foundational part of scale development; failing to do so properly undermines every scientific activity that follows (E. L. Thorndike, 1904; Kelley, 1927; Mackenzie, 2003; Podsakoff et al., 2016). However, there are lingering issues with conceptual clarity in the social sciences. Locke (2012) noted that “As someone who has been reviewing journal articles for more than 30 years, I estimate that about 90% of the submissions I get suffer from problems of conceptual clarity” (p. 146), and Podsakoff et al. (2016) stated that, “it is…obvious that the problem of inadequate conceptual definitions remains an issue for scholars in the organizational, behavioral, and social sciences” (p. 160). To help address this problem, we surveyed key papers on construct clarity and integrated their recommendations into Table 2, adding our own comments where appropriate. We cluster this advice into three “aspects” of formulating a construct definition, each of which contains several specific strategies.

Table 2. Integrative summary of advice for defining constructs.

Specifying the Latent Continuum

In addition to clearly articulating the concept, there are other parts to defining a psychological construct for empirical measurement. Another recent development demonstrates the importance of incorporating the latent continuum in measurement (Tay and Jebb, 2018). Briefly, many psychological concepts like emotion and self-esteem are conceived as having degrees of magnitude (e.g., “low,” “moderate,” and “high”), and these degrees can be represented by a construct continuum. The continuum was a primary focus in early psychological measurement, but the advent of convenient Likert(-type) scaling (Likert, 1932) pushed it into the background.

However, defining the characteristics of this continuum is needed for proper measurement. For instance, what do the poles (i.e., endpoints) of the construct represent? Is the lower pole its absence , or is it the presence of an opposing construct (i.e., a unipolar or bipolar continuum)? And, what do the different continuum degrees actually represent? If the construct is a positive emotion, do they represent the intensity of experience or the frequency of experience? Quite often, scale developers do not define these aspects but leave them implicit. Tay and Jebb (2018) discuss different problems that can arise from this.

In addition to defining the continuum, there is also the practical issue of fully operationalizing the continuum (Tay and Jebb, 2018). This involves ensuring that the whole continuum is well-represented when creating items. It also means being mindful when including reverse-worded items in the scale. These items may measure an opposite construct, which is desirable if the construct is bipolar (e.g., positive emotions as including happy and sad), but contaminates measurement if the construct is unipolar (e.g., positive emotions as only including feeling happy). Finally, developers should choose a response format that aligns with whether the continuum has been specified as unipolar or bipolar. For example, a numerical rating of 0–4 typically implies a unipolar scale to the respondent, whereas a −3-to-3 response scale implies a bipolar scale. Verbal labels like “Not at all” to “Extremely” imply unipolarity, whereas formats like “Strongly disagree” to “Strongly agree” imply bipolarity. Tay and Jebb (2018) also discuss operationalizing the continuum with regard to two other issues: assessing the dimensionality of the scale and assuming the correct response process.
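As a rough illustration of aligning the response format with the specified continuum, the following Python sketch pairs a numeric response range with the polarity it signals to respondents and shows the usual arithmetic for reverse-scoring a response. The class name `ResponseFormat`, its fields, and the two example formats are hypothetical names introduced here for illustration; they are not taken from Tay and Jebb (2018). Note that reverse-scoring is only substantively meaningful when the construct has been specified as bipolar.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ResponseFormat:
    """Hypothetical container: a numeric response range plus the polarity it implies."""

    low: int
    high: int
    polarity: str  # "unipolar" or "bipolar"

    def matches(self, construct_polarity: str) -> bool:
        # True when the format signals the same polarity as the construct definition.
        return self.polarity == construct_polarity

    def reverse_score(self, response: int) -> int:
        # Mirror a response around the midpoint of the range: low + high - response.
        if not self.low <= response <= self.high:
            raise ValueError(f"response {response} outside {self.low}..{self.high}")
        return self.low + self.high - response


# The two numeric formats mentioned in the text.
UNIPOLAR_0_TO_4 = ResponseFormat(low=0, high=4, polarity="unipolar")
BIPOLAR_MINUS3_TO_3 = ResponseFormat(low=-3, high=3, polarity="bipolar")

if __name__ == "__main__":
    # A construct defined as bipolar should be paired with the bipolar format.
    print(BIPOLAR_MINUS3_TO_3.matches("bipolar"))
    print(UNIPOLAR_0_TO_4.matches("bipolar"))
    # Reverse-scoring a response of 2 on the -3..3 format.
    print(BIPOLAR_MINUS3_TO_3.reverse_score(2))
```

A check like `matches` does not replace the conceptual work of defining the poles; it only makes an implicit design decision explicit so that a mismatch is noticed before data collection.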

Readability Tests for Items

The current psychometric practice is to keep item statements short and simple with language that is familiar to the target respondents (Hinkin, 1998). Instructions like these alleviate readability problems because psychologists are usually good at identifying and revising difficult items. However, professional psychologists also have a much higher degree of education compared to the rest of the population. In the United States, fewer than 2% of adults have doctorates, and a majority do not have a degree past high school (U.S. Census Bureau, 2014). The average United States adult has an estimated 8th-grade reading level, with 20% of adults falling below a 5th-grade level (Doak et al., 1998). Researchers can probably catch and remove scale items that use obviously difficult vocabulary (e.g., “I am garrulous”), but items that are less obviously hard for target respondents to understand may slip through the item creation process. Social science samples frequently consist of university students (Henrich et al., 2010), but this subpopulation has a higher reading level than the general population (Baer et al., 2006), and issues that would manifest for other respondents might not be evident when using such samples.

In addition to asking respondents directly (see Parrigon et al., 2017 for an example), another way to assess readability is to use readability tests, which have already been used by scale developers in psychology (e.g., Lubin et al., 1990; Ravens-Sieberer et al., 2014). Readability tests are formulas that score the readability of a piece of writing, often as a function of the number of words per sentence and the number of syllables per word. These tests take only seconds to implement and can serve as an additional way to check item language beyond the intuitions of scale developers. When these tests are used, scale items should only be analyzed individually, as testing the readability of the whole scale together can hide one or more difficult items. If an item receives a low readability score, the developer can revise the item.
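To make the idea concrete, the sketch below scores each item separately with one widely used formula, the Flesch Reading Ease score: 206.835 − 1.015 × (words per sentence) − 84.6 × (syllables per word), where higher scores indicate easier text. Several details are assumptions made for illustration rather than part of any cited procedure: the syllable counter is a crude vowel-group heuristic (dedicated readability software uses better rules), the function names are hypothetical, and the cutoff of 60 is an arbitrary example threshold.

```python
import re


def count_syllables(word: str) -> int:
    """Crude heuristic (an assumption): count runs of consecutive vowels."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    # Treat a trailing "e" as silent (e.g., "time"), except in "-le" or "-ee" endings.
    if word.endswith("e") and not word.endswith(("le", "ee")) and count > 1:
        count -= 1
    return max(count, 1)


def flesch_reading_ease(text: str) -> float:
    """Flesch Reading Ease for one piece of text; higher means easier to read."""
    words = re.findall(r"[A-Za-z']+", text)
    if not words:
        raise ValueError("text contains no words")
    # Count sentence-ending punctuation; assume at least one sentence.
    sentences = max(len(re.findall(r"[.!?]+", text)), 1)
    syllables = sum(count_syllables(w) for w in words)
    return 206.835 - 1.015 * (len(words) / sentences) - 84.6 * (syllables / len(words))


def score_items(items: list[str]) -> list[tuple[str, float]]:
    """Score every item on its own, never the scale as a single block of text."""
    return [(item, flesch_reading_ease(item)) for item in items]


if __name__ == "__main__":
    CUTOFF = 60.0  # illustrative threshold only, not a recommended standard
    for item, score in score_items(["I talk a lot.", "I am garrulous."]):
        flag = "revise?" if score < CUTOFF else "ok"
        print(f"{score:7.1f}  {flag:8s} {item}")
```

Because very short items give the formula little to work with, scores for single sentences are best treated as a prompt for a closer look at the wording, not as a verdict.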