11 Tips For Writing a Dissertation Data Analysis

The fourth industrial revolution, the digital world, has surrounded us with data. Terabytes of it sit around us or in data centers, waiting to be processed and used. That data must be analyzed appropriately before it is useful, and in a dissertation, data analysis forms the basis of the findings. If the analysis is valid and free from errors, the research outcomes will be reliable and will support a successful dissertation.

Given the complexity of many data analysis projects, it is challenging to get precise results if analysts are not properly familiar with data analysis tools and tests. Analysis is a time-consuming process that starts with collecting valid, relevant data and ends with presenting error-free results.

So, in today’s topic, we will cover the need to analyze data, dissertation data analysis, and mainly the tips for writing an outstanding data analysis dissertation. If you are a doctoral student and plan to perform dissertation data analysis on your data, make sure that you give this article a thorough read for the best tips!

What is Data Analysis in Dissertation?

Dissertation data analysis is the process of gathering, compiling, understanding, and processing a large amount of data, then identifying common patterns in responses and critically examining facts and figures to find the rationale behind those outcomes.

Data Analysis Tools

Plenty of statistical tests are used to analyze data and infer relevant results for the discussion part. The following are some of the tests used to analyze data and reach a scientific conclusion:

- Hypothesis testing

- Regression and correlation analysis

- T-test

- Z-test

- Mann-Whitney test

- Time series and index numbers

- Chi-square test

- ANOVA (or sometimes MANOVA)

11 Most Useful Tips for Dissertation Data Analysis

Doctoral students need to perform dissertation data analysis and then write it up in their dissertation to receive their degree. Many Ph.D. students find this hard because they have not been trained in it.

1. Dissertation Data Analysis Services

The first tip applies to students who can afford to seek help with their dissertation data analysis work. It’s a viable option, and it can help with time management and with building the other elements of the dissertation in more detail.

Dissertation analysis services are professional services that help doctoral students with all the basics of their dissertation work, from planning, research and clarification, and methodology to dissertation data analysis and review, the literature review, and the final PowerPoint presentation.

One reference for professional dissertation data analysis services is Statistics Solutions; they have been helping students succeed in their dissertation work for over 22 years.

For a proper dissertation data analysis, the student should have a clear understanding and statistical knowledge. Through this knowledge and experience, a student can perform dissertation analysis on their own.

Following are some helpful tips for writing a splendid dissertation data analysis:

2. Relevance of Collected Data

3. data analysis.

For analysis, it is crucial to use methods that best fit the types of data collected and the research objectives. Explain these methods thoroughly, along with the reasoning that justifies your data collection methods. Make sure the reader can see that you did not choose your method at random but arrived at it after critical analysis and prolonged research.

The overall objective of data analysis is to detect patterns and tendencies in the data and then present the outcomes clearly. It provides a solid foundation for critical conclusions and assists the researcher in completing the dissertation proposal.

4. Qualitative Data Analysis

Qualitative data refers to data that does not involve numbers. You are required to analyze the data collected through experiments, focus groups, and interviews. This can be a time-consuming process because it requires iterative examination and sometimes demands the application of hermeneutics. Note that the aim of a qualitative technique is not only to generate good outcomes but also to unveil deeper knowledge that can be transferable.

Presenting qualitative data analysis in a dissertation can also be challenging, because the data contains longer and more detailed responses. Placing such comprehensive data coherently in one chapter can be difficult for two reasons. First, it is not always clear which data to include and which to exclude. Second, unlike quantitative data, qualitative data is hard to present in figures and tables, and condensing it into a visual representation is often not possible. As a writer, it is essential to address both challenges.

This method involves analyzing qualitative data based on an argument that a researcher already defines. It’s a comparatively easy approach to analyze data. It is suitable for the researcher with a fair idea about the responses they are likely to receive from the questionnaires.

5. Quantitative Data Analysis

Quantitative data contains facts and figures obtained from scientific research and requires extensive statistical analysis. After collection and analysis, you will be able to draw conclusions. Outcomes can be generalized beyond the sample to a larger group only by assuming that the sample is representative, which is one of the preliminary checks to carry out in your analysis. This method is also referred to as the “scientific method”, and it has its roots in the natural sciences.

The presentation of quantitative data depends on the domain in which it is being presented. It is beneficial to consider your audience while writing up your findings. Quantitative data for hard sciences might require numeric inputs and statistics. As for natural sciences, such comprehensive analysis is not required.

6. Data Presentation Tools

Since large volumes of data need to be represented, it becomes a difficult task to present such an amount of data in coherent ways. To resolve this issue, consider all the available choices you have, such as tables, charts, diagrams, and graphs.

Tables help in presenting both qualitative and quantitative data concisely. While presenting data, always keep your reader in mind. Anything clear to you may not be apparent to your reader. So, keep asking whether your data presentation method is understandable to someone less conversant with your research and findings. If the answer is “No”, you may need to rethink your presentation.

7. Include Appendix or Addendum

After presenting a large amount of data, your dissertation analysis section might get messy and look disorganized. At the same time, you will not want to cut or exclude data you spent days and months collecting. To resolve this, include an appendix.

Put the data you find hard to arrange within the text in the appendix of the dissertation, along with questionnaires, copies of focus group and interview transcripts, and data sheets. The statistical analysis and quotations from interviewees, on the other hand, belong within the dissertation itself.

8. Thoroughness of Data

Thoroughly demonstrate the ideas and critically analyze each perspective, taking care over the points where errors can occur. Always discuss the anomalies and strengths of your data to add credibility to your research.

9. Discussing Data

Discussion of data involves elaborating the dimensions used to classify patterns, themes, and trends in the presented data. For balance, also take theoretical interpretations into account. Discuss the reliability of your data by assessing their effect and significance. Do not hide the anomalies. When using interviews to discuss the data, make sure you use relevant quotes to develop a strong rationale.

10. Findings and Results

Findings refer to the facts derived from the analysis of collected data. These outcomes should be stated clearly; the statements should tightly support your objective and provide logical reasoning and scientific backing for your point. This part makes up the majority of the dissertation.

11. Connection with Literature Review


Wrapping up.

Writing the data analysis in a dissertation takes dedication, and carrying it out demands sound knowledge and proper planning. Choosing your topic, gathering relevant data, analyzing it, presenting your data and findings correctly, discussing the results, and connecting them with the literature and conclusions are its milestones. Among these checkpoints, the data analysis stage is the most important and requires great care.



How to Write a Results Section | Tips & Examples

Published on August 30, 2022 by Tegan George. Revised on July 18, 2023.

A results section is where you report the main findings of the data collection and analysis you conducted for your thesis or dissertation. You should report all relevant results concisely and objectively, in a logical order. Don’t include subjective interpretations of why you found these results or what they mean; any evaluation should be saved for the discussion section.


How to write a results section

When conducting research, it’s important to report the results of your study prior to discussing your interpretations of it. This gives your reader a clear idea of exactly what you found and keeps the data itself separate from your subjective analysis.

Here are a few best practices:

- Your results should always be written in the past tense.

- While the length of this section depends on how much data you collected and analyzed, it should be written as concisely as possible.

- Only include results that are directly relevant to answering your research questions. Avoid speculative or interpretative words like “appears” or “implies.”

- If you have other results you’d like to include, consider adding them to an appendix or footnotes.

- Always start out with your broadest results first, and then flow into your more granular (but still relevant) ones. Think of it like a shoe store: first discuss the shoes as a whole, then the sneakers, boots, sandals, etc.


Reporting quantitative research results

If you conducted quantitative research, you’ll likely be working with the results of some sort of statistical analysis.

Your results section should report the results of any statistical tests you used to compare groups or assess relationships between variables. It should also state whether or not each hypothesis was supported.

The most logical way to structure quantitative results is to frame them around your research questions or hypotheses. For each question or hypothesis, share:

- A reminder of the type of analysis you used (e.g., a two-sample t test or simple linear regression). A more detailed description of your analysis should go in your methodology section.

- A concise summary of each relevant result, both positive and negative. This can include any relevant descriptive statistics (e.g., means and standard deviations) as well as inferential statistics (e.g., t scores, degrees of freedom, and p values). Remember, these numbers are often placed in parentheses.

- A brief statement of how each result relates to the question, or whether the hypothesis was supported. You can briefly mention any results that didn’t fit with your expectations and assumptions, but save any speculation on their meaning or consequences for your discussion and conclusion.
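As a rough illustration of the second point, the Python sketch below formats descriptive statistics the way they might appear in parentheses in a results sentence. The scores and the `describe` helper are hypothetical; they are not taken from any study mentioned here, and the exact reporting style should follow your institution's guidelines.

```python
from statistics import mean, stdev

def describe(scores):
    """Format the sample mean and sample standard deviation for in-text reporting."""
    return f"M = {mean(scores):.2f}, SD = {stdev(scores):.2f}"

# Hypothetical donation-intention scores on a 1 to 10 scale (illustrative only)
scores = [6, 7, 5, 8, 6, 7]
summary = describe(scores)

# The descriptive statistics go in parentheses after the plain-language statement
print(f"Donation intention was moderate ({summary}).")
```

Note that `stdev` computes the sample standard deviation (dividing by n - 1), which is usually what is reported for sample data.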

A note on tables and figures

In quantitative research, it’s often helpful to include visual elements such as graphs, charts, and tables, but only if they are directly relevant to your results. Give these elements clear, descriptive titles and labels so that your reader can easily understand what is being shown. If you want to include any other visual elements that are more tangential in nature, consider adding a figure and table list.

As a rule of thumb:

- Tables are used to communicate exact values, giving a concise overview of various results

- Graphs and charts are used to visualize trends and relationships, giving an at-a-glance illustration of key findings

Don’t forget to also mention any tables and figures you used within the text of your results section. Summarize or elaborate on specific aspects you think your reader should know about rather than merely restating the same numbers already shown.

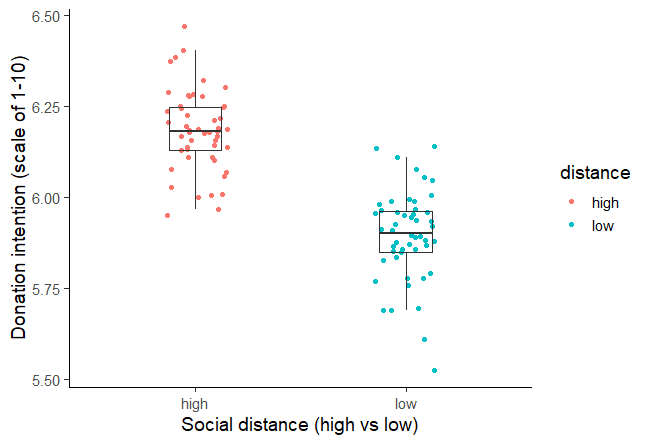

A two-sample t test was used to test the hypothesis that higher social distance from environmental problems would reduce the intent to donate to environmental organizations, with donation intention (recorded as a score from 1 to 10) as the outcome variable and social distance (categorized as either a low or high level of social distance) as the predictor variable. Social distance was found to be positively correlated with donation intention, t(98) = 12.19, p < .001, with the donation intention of the high social distance group 0.28 points higher, on average, than the low social distance group (see figure 1). This contradicts the initial hypothesis that social distance would decrease donation intention, and in fact suggests a small effect in the opposite direction.

Figure 1: Intention to donate to environmental organizations based on social distance from impact of environmental damage.
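To make the reported statistic more concrete, here is a minimal Python sketch of how a two-sample t statistic and its degrees of freedom can be computed. It assumes Student's pooled-variance version of the test (equal variances in both groups), and the two score lists are hypothetical, not the data behind the example above. It does not compute a p value; in practice a statistics package would report that.

```python
from math import sqrt
from statistics import mean, variance

def two_sample_t(group_a, group_b):
    """Student's two-sample t test with pooled variance (assumes equal variances)."""
    n_a, n_b = len(group_a), len(group_b)
    df = n_a + n_b - 2
    # Pool the two sample variances, weighting each by its degrees of freedom
    pooled_var = ((n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)) / df
    std_err = sqrt(pooled_var * (1 / n_a + 1 / n_b))
    t_stat = (mean(group_a) - mean(group_b)) / std_err
    return t_stat, df

# Hypothetical donation-intention scores (1 to 10), illustrative only
high_distance = [6, 7, 5, 8, 6, 7]
low_distance = [5, 6, 4, 6, 5, 5]

t_stat, df = two_sample_t(high_distance, low_distance)
print(f"t({df}) = {t_stat:.2f}")  # prints: t(10) = 2.53
```

The degrees of freedom in parentheses are the two group sizes added together minus two, which is why the example above, reporting t(98), implies 100 participants under this version of the test.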

Reporting qualitative research results

In qualitative research, your results might not all be directly related to specific hypotheses. In this case, you can structure your results section around key themes or topics that emerged from your analysis of the data.

For each theme, start with general observations about what the data showed. You can mention:

- Recurring points of agreement or disagreement

- Patterns and trends

- Particularly significant snippets from individual responses

Next, clarify and support these points with direct quotations. Be sure to report any relevant demographic information about participants. Further information (such as full transcripts, if appropriate) can be included in an appendix.

When asked about video games as a form of art, the respondents tended to believe that video games themselves are not an art form, but agreed that creativity is involved in their production. The criteria used to identify artistic video games included design, story, music, and creative teams. One respondent (male, 24) noted a difference in creativity between popular video game genres:

“I think that in role-playing games, there’s more attention to character design, to world design, because the whole story is important and more attention is paid to certain game elements […] so that perhaps you do need bigger teams of creative experts than in an average shooter or something.”

Responses suggest that video game consumers consider some types of games to have more artistic potential than others.

Results vs. discussion vs. conclusion

Your results section should objectively report your findings, presenting only brief observations in relation to each question, hypothesis, or theme.

It should not speculate about the meaning of the results or attempt to answer your main research question. Detailed interpretation of your results is more suitable for your discussion section, while synthesis of your results into an overall answer to your main research question is best left for your conclusion.


Checklist: Research results

- I have completed my data collection and analyzed the results.

- I have included all results that are relevant to my research questions.

- I have concisely and objectively reported each result, including relevant descriptive statistics and inferential statistics.

- I have stated whether each hypothesis was supported or refuted.

- I have used tables and figures to illustrate my results where appropriate.

- All tables and figures are correctly labelled and referred to in the text.

- There is no subjective interpretation or speculation on the meaning of the results.



Frequently asked questions about results sections

The results chapter of a thesis or dissertation presents your research results concisely and objectively.

In quantitative research, for each question or hypothesis, state:

- The type of analysis used

- Relevant results in the form of descriptive and inferential statistics

- Whether or not the alternative hypothesis was supported

In qualitative research, for each question or theme, describe:

- Recurring patterns

- Significant or representative individual responses

- Relevant quotations from the data

Don’t interpret or speculate in the results chapter.

Results are usually written in the past tense, because they are describing the outcome of completed actions.

The results chapter or section simply and objectively reports what you found, without speculating on why you found these results. The discussion interprets the meaning of the results, puts them in context, and explains why they matter.

In qualitative research, results and discussion are sometimes combined. But in quantitative research, it’s considered important to separate the objective results from your interpretation of them.


George, T. (2023, July 18). How to Write a Results Section | Tips & Examples. Scribbr. Retrieved June 24, 2024, from https://www.scribbr.com/dissertation/results/


CONSIDERATION ONE

The data analysis process.

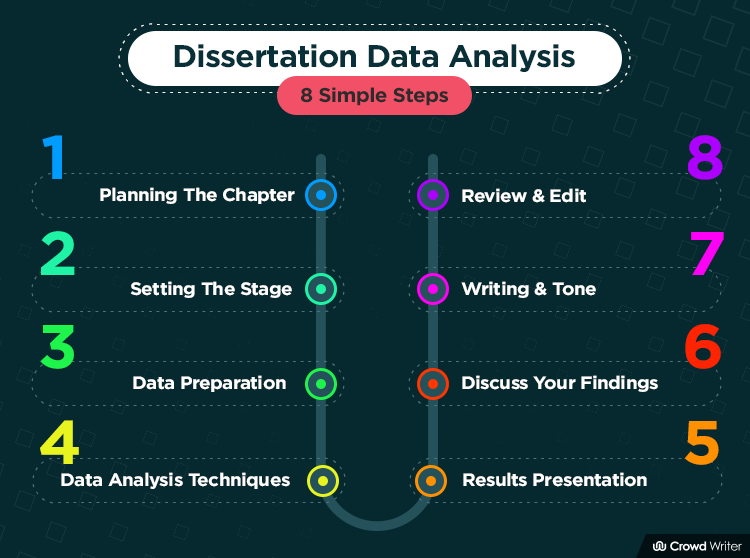

The data analysis process involves three steps: (STEP ONE) select the correct statistical tests to run on your data; (STEP TWO) prepare and analyse the data you have collected using a relevant statistics package; and (STEP THREE) interpret the findings properly so that you can write up your results (i.e., usually in Chapter Four: Results). The basic idea behind each of these steps is relatively straightforward, but the act of analysing your data (i.e., by selecting statistical tests, preparing your data and analysing it, and interpreting the findings from these tests) can be time consuming and challenging. We have tried to make this process as easy as possible by providing comprehensive, step-by-step guides in the Data Analysis part of Lærd Dissertation, but you should leave at least one week to analyse your data.

STEP ONE Select the correct statistical tests to run on your data

It is common for dissertation students to collect good data but then report the wrong findings because they selected the incorrect statistical tests in the first place. Selecting the correct statistical tests to perform on the data that you have collected will depend on (a) the research questions/hypotheses you have set, together with the research design you have adopted, and (b) the type and nature of your data:

The research questions/hypotheses you have set, together with the research design you have adopted

Your research questions/hypotheses and research design explain what variables you are measuring and how you plan to measure these variables. These highlight whether you want to (a) predict a score or a membership of a group, (b) find out differences between groups or treatments, or (c) explore associations/relationships between variables. These different aims determine the statistical tests that may be appropriate to run on your data. We highlight the word may because the most appropriate test that is identified based on your research questions/hypotheses and research design can change depending on the type and nature of the data you collect; something we discuss next.

The type and nature of the data you collected

Data is not all the same. As you will have identified by now, not all variables are measured in the same way; variables can be dichotomous, ordinal, or continuous. In addition, not all data is normal, a term we explain in the Data Analysis section, nor is the data you have collected when comparing groups necessarily equal for each group. As a result, you might think that running a particular statistical test is correct (e.g., an independent t-test), based on the research questions/hypotheses you have set, but the data you have collected fails certain assumptions that are important to this statistical test (i.e., normality and homogeneity of variance). As a result, you have to run another statistical test (e.g., a Mann-Whitney U instead of an independent t-test).
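For readers curious about what the Mann-Whitney U alternative actually computes, the following Python sketch derives the U statistic directly from its definition: it counts, across every pair of observations from two independent groups, how often a value in one group exceeds a value in the other, with ties counted as one half. The function name and the scores are hypothetical, and the sketch stops at the statistic itself; judging significance still requires critical-value tables or a statistics package.

```python
def mann_whitney_u(group_a, group_b):
    """Return (U_a, U_b) for two independent samples, counting ties as 0.5."""
    u_a = 0.0
    for x in group_a:
        for y in group_b:
            if x > y:
                u_a += 1.0   # observation from group A outranks one from group B
            elif x == y:
                u_a += 0.5   # a tie contributes half a point to each group
    # The two U values always sum to the number of pairs
    u_b = len(group_a) * len(group_b) - u_a
    return u_a, u_b

# Hypothetical ordinal ratings from two independent groups (illustrative only)
group_a = [3, 5, 4, 5, 2]
group_b = [1, 2, 3, 2, 4]

u_a, u_b = mann_whitney_u(group_a, group_b)
print(f"U_a = {u_a}, U_b = {u_b}, reported U = {min(u_a, u_b)}")
```

Because the statistic depends only on the ordering of the values, it does not rely on the normality assumption that the t-test makes.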

To select the correct statistical tests to run on the data in your dissertation, we have created a Statistical Test Selector to help guide you through the various options.

STEP TWO Prepare and analyse your data using a relevant statistics package

The preparation and analysis of your data is actually a much more practical step than many students realise. Most of the time required to get the results that you will present in your write up (i.e., usually in Chapter Four: Results) comes from knowing (a) how to enter data into a statistics package (e.g., SPSS) so that it can be analysed correctly, and (b) what buttons to press in the statistics package to correctly run the statistical tests you need:

Entering data is not just about knowing what buttons to press, but: (a) how to code your data correctly to recognise the types of variables that you have, as well as issues such as reverse coding; (b) how to filter your dataset to take into account missing data and outliers; (c) how to split files (i.e., in SPSS) when analysing the data for separate subgroups (e.g., males and females) using the same statistical tests; (d) how to weight and unweight data you have collected; and (e) other things you need to consider when entering data. What you have to do when it comes to entering data (i.e., in terms of coding, filtering, splitting files, and weighting/unweighting data) will depend on the statistical tests you plan to run. Therefore, entering data starts with using the Statistical Test Selector to help guide you through the various options. In the Data Analysis section, we help you to understand what you need to know about entering data in the context of your dissertation.
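Two of the data-entry issues mentioned above, reverse coding and filtering out missing data, can be sketched in a few lines of Python. This is only an illustration of the ideas and not how SPSS implements them. The 1 to 5 scale, the field names, and the choice to drop incomplete responses entirely (listwise deletion) are all assumptions made for the example.

```python
def reverse_code(score, scale_min=1, scale_max=5):
    """Flip a score on a scale so that, e.g., 1 becomes 5 and 4 becomes 2."""
    return scale_max + scale_min - score

# Hypothetical survey responses; None marks a missing answer
responses = [
    {"id": 1, "q1": 4, "q2_negative": 2},
    {"id": 2, "q1": None, "q2_negative": 5},
    {"id": 3, "q1": 5, "q2_negative": 1},
]

# Keep only complete responses (listwise deletion, one of several possible strategies)
complete = [r for r in responses if all(value is not None for value in r.values())]

# Reverse-code the negatively worded item so that higher always means more agreement
coded = [{**r, "q2_negative": reverse_code(r["q2_negative"])} for r in complete]

for row in coded:
    print(row)
```

Whether to drop, impute, or otherwise handle missing values depends on the statistical tests you plan to run, so treat the filtering step here as one option and not a recommendation.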

Running statistical tests

Statistics packages do the hard work of statistically analysing your data, but they rely on you making a number of choices. This is not simply about selecting the correct statistical test, but knowing, when you have selected a given test to run on your data, what buttons to press to: (a) test for the assumptions underlying the statistical test; (b) test whether corrections can be made when assumptions are violated; (c) take into account outliers and missing data; (d) choose between the different numerical and graphical ways to approach your analysis; and (e) other standard and more advanced tips. In the Data Analysis section, we explain what these considerations are (i.e., assumptions, corrections, outliers and missing data, numerical and graphical analysis) so that you can apply them to your own dissertation. We also provide comprehensive, step-by-step instructions with screenshots that show you how to enter data and run a wide range of statistical tests using the statistics package, SPSS. We do this on the basis that you probably have little or no knowledge of SPSS.

STEP THREE Interpret the findings properly

SPSS produces many tables of output for the typical tests you will run. In addition, SPSS has many new methods of presenting data using its Model viewer. You need to know which of these tables is important for your analysis and what the different figures/numbers mean. Interpreting these findings properly and communicating your results is one of the most important aspects of your dissertation. In the Data Analysis section, we show you how to understand these tables of output, what part of this output you need to look at, and how to write up the results in an appropriate format (i.e., so that you can answer your research hypotheses).

Ace Your Data Analysis

Get hands-on help analysing your data from a friendly Grad Coach. It’s like having a professor in your pocket.

How we help you

Whether you’ve just started collecting your data, are in the thick of analysing it, or you’ve already written a draft chapter – we’re here to help.

Make sense of the data

If you’ve collected your data, but are feeling confused about what to do and how to make sense of it all, we can help. One of our friendly coaches will hold your hand through each step and help you interpret your dataset.

Alternatively, if you’re still planning your data collection and analysis strategy, we can help you craft a rock-solid methodology that sets you up for success.

Get your thinking onto paper

If you’ve analysed your data, but are struggling to get your thoughts onto paper, one of our friendly Grad Coaches can help you structure your results and/or discussion chapter to kickstart your writing.

Refine your writing

If you’ve already written up your results but need a second set of eyes, our popular Content Review service can help you identify and address key issues within your writing, before you submit it for grading.

Why Grad Coach?

It's all about you

We take the time to understand your unique challenges and work with you to achieve your specific academic goals. Whether you're aiming to earn top marks or just need to cross the finish line, we're here to help.

An insider advantage

Our award-winning Dissertation Coaches all hold doctoral-level degrees and share 100+ years of combined academic experience. Having worked on "the inside", we know exactly what markers want.

Any time, anywhere

Getting help from your dedicated Dissertation Coach is simple. Book a live video/voice call, chat via email or send your document to us for an in-depth review and critique. We're here when you need us.

A track record you can trust

Over 10 million students have enjoyed our online lessons and courses, while 3000+ students have benefited from 1:1 Private Coaching. The plethora of glowing reviews reflects our commitment.


Have a question?

Below we address some of the most popular questions we receive regarding our data analysis support, but feel free to get in touch if you have any other questions.

I have no idea where to start. Can you help?

Absolutely. We regularly work with students who are completely new to data analysis (both qualitative and quantitative) and need step-by-step guidance to understand and interpret their data.

Can you analyse my data for me?

The short answer – no.

The longer answer:

If you’re undertaking qualitative research, we can fast-track your project with our Qualitative Coding Service. With this service, we take care of the initial coding of your dataset (e.g., interview transcripts), providing a firm foundation on which you can build your qualitative analysis (e.g., thematic analysis, content analysis, etc.).

If you’re undertaking quantitative research, we can fast-track your project with our Statistical Testing Service. With this service, we run the relevant statistical tests using SPSS or R, and provide you with the raw outputs. You can then use these outputs/reports to interpret your results and develop your analysis.

Importantly, in both cases, we are not analysing the data for you or providing an interpretation or write-up for you. If you’d like coaching-based support with that aspect of the project, we can certainly assist you with this (i.e., provide guidance and feedback, review your writing, etc.). But it’s important to understand that you, as the researcher, need to engage with the data and write up your own findings.

Can you help me choose the right data analysis methods?

Yes, we can assist you in selecting appropriate data analysis methods, based on your research aims and research questions, as well as the characteristics of your data.

Which data analysis methods can you assist with?

We can assist with most qualitative and quantitative analysis methods that are commonplace within the social sciences.

Qualitative methods:

- Qualitative content analysis

- Thematic analysis

- Discourse analysis

- Narrative analysis

- Grounded theory

Quantitative methods:

- Descriptive statistics

- Inferential statistics

Can you provide data sets for me to analyse?

If you are undertaking secondary research, we can potentially assist you in finding suitable data sets for your analysis.

If you are undertaking primary research, we can help you plan and develop data collection instruments (e.g., surveys, questionnaires, etc.), but we cannot source the data on your behalf.

Can you write the analysis/results/discussion chapter/section for me?

No. We can provide you with hands-on guidance through each step of the analysis process, but the writing needs to be your own. Writing anything for you would constitute academic misconduct.

Can you help me organise and structure my results/discussion chapter/section?

Yes, we can assist in structuring your chapter to ensure that you have a clear, logical structure and flow that delivers a clear and convincing narrative.

Can you review my writing and give me feedback?

Absolutely. Our Content Review service is designed exactly for this purpose and is one of the most popular services here at Grad Coach. In a Content Review, we carefully read through your research methodology chapter (or any other chapter) and provide detailed comments regarding the key issues/problem areas, why they’re problematic and what you can do to resolve the issues. You can learn more about Content Review here.

Do you provide software support (e.g., SPSS, R, etc.)?

It depends on the software package you’re planning to use, as well as the analysis techniques/tests you plan to undertake. We can typically provide support for the more popular analysis packages, but it’s best to discuss this in an initial consultation.

Can you help me with other aspects of my research project?

Yes. Data analysis support is only one aspect of our offering at Grad Coach, and we typically assist students throughout their entire dissertation/thesis/research project. You can learn more about our full service offering here.

Can I get a coach that specialises in my topic area?

It’s important to clarify that our expertise lies in the research process itself, rather than specific research areas/topics (e.g., psychology, management, etc.).

In other words, the support we provide is topic-agnostic, which allows us to support students across a very broad range of research topics. That said, if there is a coach on our team who has experience in your area of research, as well as your chosen methodology, we can allocate them to your project (dependent on their availability, of course).

If you’re unsure about whether we’re the right fit, feel free to drop us an email or book a free initial consultation.

What qualifications do your coaches have?

All of our coaches hold a doctoral-level degree (for example, a PhD, DBA, etc.). Moreover, they all have experience working within academia, in many cases as dissertation/thesis supervisors. In other words, they understand what markers are looking for when reviewing a student’s work.

Is my data/topic/study kept confidential?

Yes, we prioritise confidentiality and data security. Your written work and personal information are treated as strictly confidential. We can also sign a non-disclosure agreement, should you wish.

I still have questions…

No problem. Feel free to email us or book an initial consultation to discuss.

What our clients say

We've worked 1:1 with 3000+ students. Here's what some of them have to say:

David's depth of knowledge in research methodology was truly impressive. He demonstrated a profound understanding of the nuances and complexities of my research area, offering insights that I hadn't even considered. His ability to synthesize information, identify key research gaps, and suggest research topics was truly inspiring. I felt like I had a true expert by my side, guiding me through the complexities of the proposal.

Cyntia Sacani (US)

I had been struggling with the first 3 chapters of my dissertation for over a year. I finally decided to give GradCoach a try and it made a huge difference. Alexandra provided helpful suggestions along with edits that transformed my paper. My advisor was very impressed.

Tracy Shelton (US)

Working with Kerryn has been brilliant. She has guided me through that pesky academic language that makes us all scratch our heads. I can't recommend Grad Coach highly enough; they are very professional, humble, and fun to work with. If like me, you know your subject matter but you're getting lost in the academic language, look no further, give them a go.

Tony Fogarty (UK)

So helpful! Amy assisted me with an outline for my literature review and with organizing the results for my MBA applied research project. Having a road map helped enormously and saved a lot of time. Definitely worth it.

Jennifer Hagedorn (Canada)

Everything about my experience was great, from Dr. Shaeffer’s expertise, to her patience and flexibility. I reached out to GradCoach after receiving a 78 on a midterm paper. Not only did I get a 100 on my final paper in the same class, but I haven’t received a mark less than A+ since. I recommend GradCoach for everyone who needs help with academic research.

Antonia Singleton (Qatar)

I started using Grad Coach for my dissertation and I can honestly say that if it wasn’t for them, I would have really struggled. I would strongly recommend them – worth every penny!

Richard Egenreider (South Africa)

Raw Data to Excellence: Master Dissertation Analysis

Discover the secrets of successful dissertation data analysis. Get practical advice and useful insights from experienced experts now!

Have you ever found yourself knee-deep in a dissertation, desperately seeking answers from the data you’ve collected? Or felt lost among all that data, unsure where to start? Fear not: this article discusses the process that gets you out of that situation, dissertation data analysis.

Dissertation data analysis is like uncovering hidden treasures within your research findings. It’s where you roll up your sleeves and explore the data you’ve collected, searching for patterns, connections, and those “a-ha!” moments. Whether you’re crunching numbers, dissecting narratives, or diving into qualitative interviews, data analysis is the key that unlocks the potential of your research.

Dissertation Data Analysis

Dissertation data analysis plays a crucial role in conducting rigorous research and drawing meaningful conclusions. It involves the systematic examination, interpretation, and organization of data collected during the research process. The aim is to identify patterns, trends, and relationships that can provide valuable insights into the research topic.

The first step in dissertation data analysis is to carefully prepare and clean the collected data. This may involve removing any irrelevant or incomplete information, addressing missing data, and ensuring data integrity. Once the data is ready, various statistical and analytical techniques can be applied to extract meaningful information.

Descriptive statistics are commonly used to summarize and describe the main characteristics of the data, such as measures of central tendency (e.g., mean, median) and measures of dispersion (e.g., standard deviation, range). These statistics help researchers gain an initial understanding of the data and identify any outliers or anomalies.
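To make these measures concrete, here is a minimal sketch using Python's standard `statistics` module. The `scores` list is made-up illustrative data, not taken from any real study.

```python
import statistics

# Hypothetical sample: survey scores on a 1-10 scale (illustrative values only)
scores = [4, 7, 6, 5, 8, 6, 9, 5, 6, 4]

summary = {
    "mean": statistics.mean(scores),        # central tendency
    "median": statistics.median(scores),    # central tendency, robust to outliers
    "std_dev": statistics.stdev(scores),    # dispersion: sample standard deviation (n - 1)
    "range": max(scores) - min(scores),     # dispersion: spread between extremes
}

for name, value in summary.items():
    print(f"{name}: {value:.2f}")
```

A large gap between the mean and the median, or a range far wider than the standard deviation suggests, is often a first hint that outliers deserve a closer look.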

Furthermore, qualitative data analysis techniques can be employed when dealing with non-numerical data, such as textual data or interviews. This involves systematically organizing, coding, and categorizing qualitative data to identify themes and patterns.

Types of Research

When considering research types in the context of dissertation data analysis, several approaches can be employed:

1. Quantitative Research

This type of research involves the collection and analysis of numerical data. It focuses on generating statistical information and making objective interpretations. Quantitative research often utilizes surveys, experiments, or structured observations to gather data that can be quantified and analyzed using statistical techniques.

2. Qualitative Research

In contrast to quantitative research, qualitative research focuses on exploring and understanding complex phenomena in depth. It involves collecting non-numerical data such as interviews, observations, or textual materials. Qualitative data analysis involves identifying themes, patterns, and interpretations, often using techniques like content analysis or thematic analysis.

3. Mixed-Methods Research

This approach combines both quantitative and qualitative research methods. Researchers employing mixed-methods research collect and analyze both numerical and non-numerical data to gain a comprehensive understanding of the research topic. The integration of quantitative and qualitative data can provide a more nuanced and comprehensive analysis, allowing for triangulation and validation of findings.

Primary vs. Secondary Research

Primary Research

Primary research involves the collection of original data specifically for the purpose of the dissertation. This data is directly obtained from the source, often through surveys, interviews, experiments, or observations. Researchers design and implement their data collection methods to gather information that is relevant to their research questions and objectives. Data analysis in primary research typically involves processing and analyzing the raw data collected.

Secondary Research

Secondary research involves the analysis of existing data that has been previously collected by other researchers or organizations. This data can be obtained from various sources such as academic journals, books, reports, government databases, or online repositories. Secondary data can be either quantitative or qualitative, depending on the nature of the source material. Data analysis in secondary research involves reviewing, organizing, and synthesizing the available data.

If you want to go deeper into methodology in research, also read: What is Methodology in Research and How Can We Write it?

Types of Analysis

Various types of analysis techniques can be employed to examine and interpret the collected data. The most important and widely used are:

- Descriptive Analysis: Descriptive analysis focuses on summarizing and describing the main characteristics of the data. It involves calculating measures of central tendency (e.g., mean, median) and measures of dispersion (e.g., standard deviation, range). Descriptive analysis provides an overview of the data, allowing researchers to understand its distribution, variability, and general patterns.

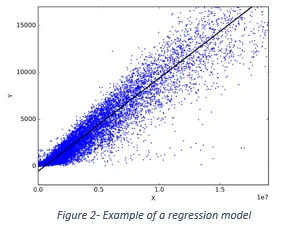

- Inferential Analysis: Inferential analysis aims to draw conclusions or make inferences about a larger population based on the collected sample data. This type of analysis involves applying statistical techniques, such as hypothesis testing, confidence intervals, and regression analysis, to analyze the data and assess the significance of the findings. Inferential analysis helps researchers make generalizations and draw meaningful conclusions beyond the specific sample under investigation.

- Qualitative Analysis: Qualitative analysis is used to interpret non-numerical data, such as interviews, focus groups, or textual materials. It involves coding, categorizing, and analyzing the data to identify themes, patterns, and relationships. Techniques like content analysis, thematic analysis, or discourse analysis are commonly employed to derive meaningful insights from qualitative data.

- Correlation Analysis: Correlation analysis is used to examine the relationship between two or more variables. It determines the strength and direction of the association between variables. Common correlation techniques include Pearson’s correlation coefficient, Spearman’s rank correlation, or point-biserial correlation, depending on the nature of the variables being analyzed.
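As an illustration of the correlation techniques listed above, the following sketch computes Pearson's coefficient and Spearman's rank correlation in plain Python. The function names (`pearson`, `ranks`, `spearman`) and the two small data lists are hypothetical, chosen only for this example; in practice a statistics package would normally do this for you.

```python
import math

def pearson(x, y):
    """Pearson's correlation coefficient for two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)

def ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = avg_rank
        i = j + 1
    return result

def spearman(x, y):
    """Spearman's rank correlation: Pearson's r applied to the ranks."""
    return pearson(ranks(x), ranks(y))

# Hypothetical data: hours studied vs. exam score (illustrative values only)
hours_studied = [2, 4, 6, 8, 10]
exam_score = [50, 55, 65, 70, 90]

r = pearson(hours_studied, exam_score)
rho = spearman(hours_studied, exam_score)
print(f"Pearson r = {r:.3f}, Spearman rho = {rho:.3f}")
```

Pearson measures how close the relationship is to a straight line, while Spearman only asks whether one variable consistently rises with the other, which is why it suits ordinal or skewed data.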

Basic Statistical Analysis

When conducting dissertation data analysis, researchers often utilize basic statistical analysis techniques to gain insights and draw conclusions from their data. These techniques involve the application of statistical measures to summarize and examine the data. Here are some common types of basic statistical analysis used in dissertation research:

- Descriptive Statistics

- Frequency Analysis

- Cross-tabulation

- Chi-Square Test

- Correlation Analysis
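To show how two of these techniques fit together, the sketch below builds a cross-tabulation from hypothetical survey responses and computes the chi-square statistic by hand. The group labels and counts are invented for illustration; the critical value 3.841 is the standard 5% threshold for one degree of freedom.

```python
from collections import Counter

# Hypothetical survey responses as (group, answer) pairs (illustrative only)
responses = (
    [("undergraduate", "yes")] * 30 + [("undergraduate", "no")] * 20
    + [("postgraduate", "yes")] * 15 + [("postgraduate", "no")] * 35
)

# Cross-tabulation: observed count for every (group, answer) cell
observed = Counter(responses)
rows = sorted({group for group, _ in responses})
cols = sorted({answer for _, answer in responses})
row_totals = {r: sum(observed[(r, c)] for c in cols) for r in rows}
col_totals = {c: sum(observed[(r, c)] for r in rows) for c in cols}
n = len(responses)

# Chi-square statistic: sum of (observed - expected)^2 / expected over all cells
chi_square = 0.0
for r in rows:
    for c in cols:
        expected = row_totals[r] * col_totals[c] / n
        chi_square += (observed[(r, c)] - expected) ** 2 / expected

dof = (len(rows) - 1) * (len(cols) - 1)
CRITICAL_VALUE_DF1_ALPHA_05 = 3.841  # chi-square critical value, df = 1, alpha = 0.05

print(f"chi-square = {chi_square:.3f}, df = {dof}")
print("association is significant at 5%:", chi_square > CRITICAL_VALUE_DF1_ALPHA_05)
```

The hard-coded critical value only applies to a 2x2 table like this one; for larger tables the threshold depends on the degrees of freedom and should come from a statistics package or table.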

Advanced Statistical Analysis

In dissertation data analysis, researchers may employ advanced statistical analysis techniques to gain deeper insights and address complex research questions. These techniques go beyond basic statistical measures and involve more sophisticated methods. Here are some examples of advanced statistical analysis commonly used in dissertation research:

- Regression Analysis

- Analysis of Variance (ANOVA)

- Factor Analysis

- Cluster Analysis

- Structural Equation Modeling (SEM)

- Time Series Analysis

Examples of Methods of Analysis

Regression Analysis

Regression analysis is a powerful tool for examining relationships between variables and making predictions. It allows researchers to assess the impact of one or more independent variables on a dependent variable. Different types of regression analysis, such as linear regression, logistic regression, or multiple regression, can be used based on the nature of the variables and research objectives.
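As a rough illustration of the simplest case, the sketch below fits a one-predictor linear regression by ordinary least squares in plain Python. The function name `fit_line` and the data are hypothetical; logistic or multiple regression would normally be run in a statistics package rather than by hand.

```python
def fit_line(x, y):
    """Ordinary least squares for y = intercept + slope * x; returns (slope, intercept, r_squared)."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    # R-squared: share of the variance in y explained by the fitted line
    ss_res = sum((b - (intercept + slope * a)) ** 2 for a, b in zip(x, y))
    ss_tot = sum((b - mean_y) ** 2 for b in y)
    return slope, intercept, 1 - ss_res / ss_tot

# Hypothetical data: independent variable x, dependent variable y (illustrative only)
x = [1, 2, 3, 4, 5]
y = [2.1, 3.9, 6.2, 7.8, 10.1]

slope, intercept, r_squared = fit_line(x, y)
print(f"y = {intercept:.2f} + {slope:.2f} * x, R^2 = {r_squared:.4f}")
```

The slope estimates how much the dependent variable changes per unit of the independent variable, and R-squared indicates how much of the variation the model accounts for.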

Event Study

An event study is a statistical technique that aims to assess the impact of a specific event or intervention on a particular variable of interest. This method is commonly employed in finance, economics, or management to analyze the effects of events such as policy changes, corporate announcements, or market shocks.
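One simple way to make the idea concrete is the constant-mean-return approach, sketched below: the expected return is the average over an estimation window before the event, and abnormal returns are the deviations from it during the event window. All return figures are invented for illustration, and real event studies often use a market model instead of a constant mean.

```python
# Hypothetical daily returns before the event (estimation window), illustrative only
estimation_returns = [0.01, -0.005, 0.002, 0.004, -0.001, 0.003, 0.0, 0.002]

# Hypothetical daily returns around the event (event window), illustrative only
event_returns = [0.03, 0.015, -0.002]

# Expected ("normal") return under the constant-mean-return assumption
expected_return = sum(estimation_returns) / len(estimation_returns)

# Abnormal return = actual return minus expected return, per day
abnormal_returns = [r - expected_return for r in event_returns]

# Cumulative abnormal return (CAR) over the event window
car = sum(abnormal_returns)

print(f"expected daily return: {expected_return:.6f}")
print(f"cumulative abnormal return: {car:.6f}")
```

A CAR that is large relative to the usual day-to-day variation suggests the event moved the variable of interest; a formal study would add a significance test on top of this calculation.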

Vector Autoregression

Vector Autoregression is a statistical modeling technique used to analyze the dynamic relationships and interactions among multiple time series variables. It is commonly employed in fields such as economics, finance, and social sciences to understand the interdependencies between variables over time.

Preparing Data for Analysis

1. Become Acquainted with the Data

It is crucial to become acquainted with the data to gain a comprehensive understanding of its characteristics, limitations, and potential insights. This step involves thoroughly exploring and familiarizing oneself with the dataset before conducting any formal analysis by reviewing the dataset to understand its structure and content. Identify the variables included, their definitions, and the overall organization of the data. Gain an understanding of the data collection methods, sampling techniques, and any potential biases or limitations associated with the dataset.

2. Review Research Objectives

This step involves assessing the alignment between the research objectives and the data at hand to ensure that the analysis can effectively address the research questions. Evaluate how well the research objectives and questions align with the variables and data collected. Determine if the available data provides the necessary information to answer the research questions adequately. Identify any gaps or limitations in the data that may hinder the achievement of the research objectives.

3. Creating a Data Structure

This step involves organizing the data into a well-defined structure that aligns with the research objectives and analysis techniques. Organize the data in a tabular format where each row represents an individual case or observation, and each column represents a variable. Ensure that each case has complete and accurate data for all relevant variables. Use consistent units of measurement across variables to facilitate meaningful comparisons.
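The tabular layout described here can be sketched with Python's standard `csv` module. The column names and values below are hypothetical; the point is one row per case, one column per variable, and a quick check for incomplete cases.

```python
import csv
import io

# Hypothetical dataset: one row per participant, one column per variable
raw = """participant_id,age,group,score
P01,24,control,67
P02,31,treatment,72
P03,,control,58
"""

# Each row becomes a dict keyed by variable name
rows = list(csv.DictReader(io.StringIO(raw)))

# Flag cases that are missing a value for any variable
incomplete = [
    row["participant_id"]
    for row in rows
    if any(value == "" for value in row.values())
]

print(f"{len(rows)} cases loaded; incomplete cases: {incomplete}")
```

In a real project the text would come from a file rather than an inline string, but the same check applies before any analysis begins.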

4. Discover Patterns and Connections

In preparing data for dissertation data analysis, one of the key objectives is to discover patterns and connections within the data. This step involves exploring the dataset to identify relationships, trends, and associations that can provide valuable insights. Visual representations can often reveal patterns that are not immediately apparent in tabular data.

Qualitative Data Analysis

Qualitative data analysis methods are employed to analyze and interpret non-numerical or textual data. These methods are particularly useful in fields such as social sciences, humanities, and qualitative research studies where the focus is on understanding meaning, context, and subjective experiences. Here are some common qualitative data analysis methods:

Thematic Analysis

Thematic analysis involves identifying and analyzing recurring themes, patterns, or concepts within the qualitative data. Researchers immerse themselves in the data, categorize information into meaningful themes, and explore the relationships between them. This method helps in capturing the underlying meanings and interpretations within the data.

Content Analysis

Content analysis involves systematically coding and categorizing qualitative data based on predefined categories or emerging themes. Researchers examine the content of the data, identify relevant codes, and analyze their frequency or distribution. This method allows for a quantitative summary of qualitative data and helps in identifying patterns or trends across different sources.
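The frequency step of content analysis can be sketched as a simple tally. The coded segments below are hypothetical, and the coding itself (deciding which code applies to which passage) remains a manual, interpretive task that this snippet does not replace.

```python
from collections import Counter

# Hypothetical coded segments as (source, code) pairs (illustrative only)
coded_segments = [
    ("interview_1", "workload"),
    ("interview_1", "supervision"),
    ("interview_2", "workload"),
    ("interview_2", "funding"),
    ("interview_3", "workload"),
    ("interview_3", "supervision"),
]

# How often each code was applied across the whole dataset
code_counts = Counter(code for _, code in coded_segments)

# In how many distinct sources each code appears
sources_per_code = {
    code: len({source for source, c in coded_segments if c == code})
    for code in code_counts
}

for code, count in code_counts.most_common():
    print(f"{code}: {count} segments across {sources_per_code[code]} sources")
```

Counting sources as well as segments helps distinguish a theme that runs through many interviews from one that a single participant mentioned repeatedly.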

Grounded Theory

Grounded theory is an inductive approach to qualitative data analysis that aims to generate theories or concepts from the data itself. Researchers iteratively analyze the data, identify concepts, and develop theoretical explanations based on emerging patterns or relationships. This method focuses on building theory from the ground up and is particularly useful when exploring new or understudied phenomena.

Discourse Analysis

Discourse analysis examines how language and communication shape social interactions, power dynamics, and meaning construction. Researchers analyze the structure, content, and context of language in qualitative data to uncover underlying ideologies, social representations, or discursive practices. This method helps in understanding how individuals or groups make sense of the world through language.

Narrative Analysis

Narrative analysis focuses on the study of stories, personal narratives, or accounts shared by individuals. Researchers analyze the structure, content, and themes within the narratives to identify recurring patterns, plot arcs, or narrative devices. This method provides insights into individuals’ lived experiences, identity construction, or sense-making processes.

Applying Data Analysis to Your Dissertation

Applying data analysis to your dissertation is a critical step in deriving meaningful insights and drawing valid conclusions from your research. It involves employing appropriate data analysis techniques to explore, interpret, and present your findings. Here are some key considerations when applying data analysis to your dissertation:

Selecting Analysis Techniques

Choose analysis techniques that align with your research questions, objectives, and the nature of your data. Whether quantitative or qualitative, identify the most suitable statistical tests, modeling approaches, or qualitative analysis methods that can effectively address your research goals. Consider factors such as data type, sample size, measurement scales, and the assumptions associated with the chosen techniques.

Data Preparation

Ensure that your data is properly prepared for analysis. Cleanse and validate your dataset, addressing any missing values, outliers, or data inconsistencies. Code variables, transform data if necessary, and format it appropriately to facilitate accurate and efficient analysis. Pay attention to ethical considerations, data privacy, and confidentiality throughout the data preparation process.
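A minimal sketch of two of these cleaning steps follows: dropping missing values and flagging outliers with the common 1.5 x IQR rule. The measurements are hypothetical, and both choices (dropping rather than imputing, and the 1.5 multiplier) are assumptions that should be justified for your own data.

```python
import statistics

# Hypothetical measurements; None marks a missing value (illustrative only)
raw_values = [12, 15, 14, None, 13, 16, 15, 98, 14, None, 13]

# Step 1: remove missing values (imputation is an alternative not shown here)
cleaned = [v for v in raw_values if v is not None]

# Step 2: flag outliers using the 1.5 * IQR rule
q1, _, q3 = statistics.quantiles(cleaned, n=4)
iqr = q3 - q1
lower, upper = q1 - 1.5 * iqr, q3 + 1.5 * iqr
outliers = [v for v in cleaned if v < lower or v > upper]

print(f"kept {len(cleaned)} of {len(raw_values)} values")
print(f"outlier bounds: [{lower}, {upper}]; flagged: {outliers}")
```

Flagged values should be investigated rather than deleted automatically, since an extreme value may be a data-entry error or a genuine observation.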

Execution of Analysis

Execute the selected analysis techniques systematically and accurately. Utilize statistical software, programming languages, or qualitative analysis tools to carry out the required computations, calculations, or interpretations. Adhere to established guidelines, protocols, or best practices specific to your chosen analysis techniques to ensure reliability and validity.

Interpretation of Results

Thoroughly interpret the results derived from your analysis. Examine statistical outputs, visual representations, or qualitative findings to understand the implications and significance of the results. Relate the outcomes back to your research questions, objectives, and existing literature. Identify key patterns, relationships, or trends that support or challenge your hypotheses.

Drawing Conclusions

Based on your analysis and interpretation, draw well-supported conclusions that directly address your research objectives. Present the key findings in a clear, concise, and logical manner, emphasizing their relevance and contributions to the research field. Discuss any limitations, potential biases, or alternative explanations that may impact the validity of your conclusions.

Validation and Reliability

Evaluate the validity and reliability of your data analysis by considering the rigor of your methods, the consistency of results, and the triangulation of multiple data sources or perspectives if applicable. Engage in critical self-reflection and seek feedback from peers, mentors, or experts to ensure the robustness of your data analysis and conclusions.

In conclusion, dissertation data analysis is an essential component of the research process, allowing researchers to extract meaningful insights and draw valid conclusions from their data. By employing a range of analysis techniques, researchers can explore relationships, identify patterns, and uncover valuable information to address their research objectives.

Turn Your Data Into Easy-To-Understand And Dynamic Stories

Decoding data is daunting and you might end up in confusion. Here’s where infographics come into the picture. With visuals, you can turn your data into easy-to-understand and dynamic stories that your audience can relate to. Mind the Graph is one such platform that helps scientists to explore a library of visuals and use them to amplify their research work. Sign up now to make your presentation simpler.

About Sowjanya Pedada

Sowjanya is a passionate writer and an avid reader. She holds an MBA in Agribusiness Management and now works as a content writer. She loves to play with words and hopes to make a difference in the world through her writing. Apart from writing, she is interested in reading fiction and doing craftwork. She also loves to travel, explore different cuisines, and spend time with her family and friends.

A Step-by-Step Guide to Dissertation Data Analysis

A data analysis dissertation is a complex and challenging project requiring significant time, effort, and expertise. Fortunately, it is possible to successfully complete a data analysis dissertation with careful planning and execution.

As a student, you must know how important it is to have a strong and well-written dissertation, especially regarding data analysis. Proper data analysis is crucial to the success of your research and can often make or break your dissertation.

To get a better understanding, you may review the data analysis dissertation examples listed below:

- Impact of Leadership Style on the Job Satisfaction of Nurses

- Effect of Brand Love on Consumer Buying Behaviour in Dietary Supplement Sector

- An Insight Into Alternative Dispute Resolution

- An Investigation of Cyberbullying and its Impact on Adolescent Mental Health in UK

Types of Data Analysis for a Dissertation

The various types of data analysis in a dissertation are as follows:

1. Qualitative Data Analysis

Qualitative data analysis is a type of data analysis that involves analyzing data that cannot be measured numerically. This data type includes interviews, focus groups, and open-ended surveys. Qualitative data analysis can be used to identify patterns and themes in the data.

2. Quantitative Data Analysis

Quantitative data analysis is a type of data analysis that involves analyzing data that can be measured numerically. This data type includes test scores, income levels, and crime rates. Quantitative data analysis can be used to test hypotheses and to look for relationships between variables.

3. Descriptive Data Analysis

Descriptive data analysis is a type of data analysis that involves describing the characteristics of a dataset. This type of data analysis summarizes the main features of a dataset.

4. Inferential Data Analysis

Inferential data analysis is a type of data analysis that involves drawing conclusions about a larger population from a sample of data. This type of data analysis can be used to test hypotheses, estimate population parameters, and, with an appropriate model, make predictions.
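As one example of a hypothesis test, the sketch below computes Welch's t statistic for the difference between two group means, along with its approximate degrees of freedom. The two groups of scores are hypothetical, and the p-value lookup is left to a statistics package or a t-distribution table.

```python
import math
import statistics

# Hypothetical test scores for two independent groups (illustrative only)
group_a = [72, 75, 78, 71, 74]
group_b = [68, 70, 65, 69, 68]

mean_a, mean_b = statistics.mean(group_a), statistics.mean(group_b)
var_a, var_b = statistics.variance(group_a), statistics.variance(group_b)  # sample variances
n_a, n_b = len(group_a), len(group_b)

# Welch's t statistic: difference in means divided by its standard error
se_a, se_b = var_a / n_a, var_b / n_b
t_stat = (mean_a - mean_b) / math.sqrt(se_a + se_b)

# Welch-Satterthwaite approximation for the degrees of freedom
dof = (se_a + se_b) ** 2 / (se_a ** 2 / (n_a - 1) + se_b ** 2 / (n_b - 1))

print(f"t = {t_stat:.3f}, df = {dof:.2f}")
```

The larger the absolute t value relative to the critical value for the computed degrees of freedom, the stronger the evidence that the two population means differ.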

5. Exploratory Data Analysis

Exploratory data analysis is a type of data analysis that involves exploring a data set to understand it better. This type of data analysis can identify patterns and relationships in the data.

Time Period to Plan and Complete a Data Analysis Dissertation?

When planning dissertation data analysis, it is important to consider the structure of your dissertation methodology, as it will give you an understanding of how long each stage will take. For example, if you use a qualitative research method, your data analysis will involve coding and categorizing your data.

This can be time-consuming, so allowing enough time in your schedule is important. Once you have coded and categorized your data, you will need to write up your findings. Again, this can take some time, so factor this into your schedule.

Finally, you will need to proofread and edit your dissertation before submitting it. All told, a data analysis dissertation can take anywhere from several weeks to several months to complete, depending on the project’s complexity. Therefore, it is important to start planning early and allow enough time in your schedule to complete the task.

Essential Strategies for Data Analysis Dissertation

A. Planning

The first step in any dissertation is planning. You must decide what you want to write about and how you want to structure your argument. This planning will involve deciding what data you want to analyze and what methods you will use for a data analysis dissertation.

B. Prototyping

Once you have a plan for your dissertation, it’s time to start writing. However, creating a prototype is important before diving head-first into writing your dissertation. A prototype is a rough draft of your argument that allows you to get feedback from your advisor and committee members. This feedback will help you fine-tune your argument before you start writing the final version of your dissertation.

C. Executing

After you have created a plan and prototype for your data analysis dissertation, it’s time to start writing the final version. This process will involve collecting and analyzing data and writing up your results. You will also need to create a conclusion section that ties everything together.

D. Presenting

The final step in acing your data analysis dissertation is presenting it to your committee. This presentation should be well-organized and professionally presented. During the presentation, you’ll also need to be ready to respond to questions concerning your dissertation.

Data Analysis Tools

Numerous tools are used to analyze the data and derive relevant findings for the discussion section. The tools commonly used to analyze data and reach a scientific conclusion are as follows:

a. Excel

Excel is a spreadsheet program that is part of the Microsoft Office productivity software suite. Excel is a powerful tool that can be used for various data analysis tasks, such as creating charts and graphs, performing mathematical calculations, and sorting and filtering data.

b. Google Sheets

Google Sheets is a free online spreadsheet application that is part of the Google Drive suite of productivity software. Google Sheets is similar to Excel in terms of functionality, but it also has some unique features, such as the ability to collaborate with other users in real-time.

c. SPSS

SPSS is a statistical analysis software program commonly used in the social sciences. SPSS can be used for various data analysis tasks, such as hypothesis testing, factor analysis, and regression analysis.

d. STATA

STATA is a statistical analysis software program commonly used in the sciences and economics. STATA can be used for data management, statistical modelling, descriptive statistics analysis, and data visualization tasks.

e. SAS

SAS is a commercial statistical analysis software program used by businesses and organizations worldwide. SAS can be used for predictive modelling, market research, and fraud detection.

f. R

R is a free, open-source statistical programming language popular among statisticians and data scientists. R can be used for tasks such as data wrangling, machine learning, and creating complex visualizations.

g. Python

Python is a general-purpose programming language used for a variety of applications, including web development, scientific computing, and artificial intelligence. Python also has a number of modules and libraries that can be used for data analysis tasks, such as numerical computing, statistical modelling, and data visualization.

Tips to Compose a Successful Data Analysis Dissertation

a. Choose a Topic You’re Passionate About

The first step to writing a successful data analysis dissertation is to choose a topic you’re passionate about. Not only will this make the research and writing process more enjoyable, but it will also ensure that you produce a high-quality paper.

Choose a topic that is specific enough to be covered within your paper’s scope but not so narrow that it will be challenging to obtain enough evidence to substantiate your arguments.

b. Do Your Research

Data analysis in research is an important part of academic writing. Once you’ve selected a topic, it’s time to begin your research. Be sure to consult with your advisor or supervisor frequently during this stage to ensure that you are on the right track. In addition to secondary sources such as books, journal articles, and reports, you should also consider conducting primary research through surveys or interviews. This will give you first-hand insights into your topic that can be invaluable when writing your paper.

c. Develop a Strong Thesis Statement

After you’ve done your research, it’s time to start developing your thesis statement. It is arguably the most crucial part of your entire paper, so take care to craft a clear and concise statement that encapsulates the main argument of your paper.

Remember that your thesis statement should be arguable—that is, it should be capable of being disputed by someone who disagrees with your point of view. If your thesis statement is not arguable, it will be difficult to write a convincing paper.

d. Write a Detailed Outline

Once you have developed a strong thesis statement, the next step is to write a detailed outline of your paper. This will give your writing a clear direction and help ensure that your paper makes sense from beginning to end.

Your outline should include an introduction, in which you state your thesis statement; several body paragraphs, each devoted to a different aspect of your argument; and a conclusion, in which you restate your thesis and summarize the main points of your paper.

e. Write Your First Draft

With your outline in hand, it’s finally time to start writing your first draft. At this stage, don’t worry about perfecting your grammar or making sure every sentence is exactly right—focus on getting all of your ideas down on paper (or onto the screen). Once you have completed your first draft, you can revise it for style and clarity.

And there you have it! Following these simple tips can increase your chances of success when writing your data analysis dissertation. Just remember to start early, give yourself plenty of time to research and revise, and consult with your supervisor frequently throughout the process.

Studying the above examples gives you valuable insight into the structure and content that should be included in your own data analysis dissertation. You can also learn how to effectively analyze and present your data and make a lasting impact on your readers.

In addition to being a useful resource for completing your dissertation, these examples can also serve as a valuable reference for future academic writing projects. By following these examples and understanding their principles, you can improve your data analysis skills and increase your chances of success in your academic career.

You may also contact Premier Dissertations to develop your data analysis dissertation.

Statistical Analysis in a Dissertation: 4 Expert Tips for Academic Success

Are you looking to conduct statistical analysis in a dissertation? In this article, we offer top tips, tricks, and triumphs for success. Master the art of data analysis and take your dissertation to the next level with our expert guidance at Houston Essays.

Statistical analysis is an integral part of conducting a dissertation, playing a crucial role in deriving meaningful insights from research data. Whether you are studying social sciences, business, or any other field, understanding how to effectively conduct statistical analysis can greatly enhance the credibility of your research findings. In this article, we will explore the process of conducting statistical analysis in a dissertation, providing you with pro tips and tricks along the way.

Statistical analysis involves the application of various mathematical and computational techniques to analyze and interpret data. In the context of a dissertation, statistical analysis helps researchers draw conclusions, validate research hypotheses, and make informed decisions based on empirical evidence. It allows for a deeper understanding of the relationships and patterns within the data, enabling researchers to uncover valuable insights.

The examination of trends, structures, and correlations using quantitative data is known as statistical analysis. It is a crucial research instrument utilized by academics, government, corporations, and other groups.

Statistical Analysis in a Dissertation assists doctorate students with all the fundamental components of their dissertation work, including planning, researching and clarifying, methodology, dissertation data statistical analysis or evaluation, review of literature, and the final PowerPoint presentation. One of a dissertation’s most crucial elements, the statistical analysis section, is where you showcase your original research skills.

It can be challenging at first to analyze data and deal with statistics while writing your dissertation. However, there are several guidelines you can follow to conduct statistical analysis in a dissertation.

In this article, we will discuss how to conduct statistical analysis in a dissertation.

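Here’s what we’ll cover:

- Selecting the appropriate statistical test
- Preparing your data for statistical analysis
- Conducting the statistical analysis
- Validity and reliability of statistical analysis

How to Conduct Statistical Analysis in a Dissertation

1. Selecting the Appropriate Statistical Test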

Depending on the variables being examined and the nature of the questions that need to be answered, we assist in choosing the most suitable statistical test. Whether categorical or numerical data is being evaluated also makes a difference. When deciding on a statistical test to use for an SPSS analysis, the following factors are frequently taken into account:

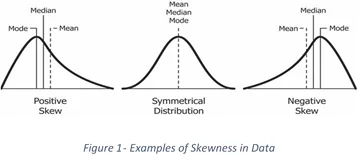

- Type and distribution of the information that was gathered: Checking the distribution of the data is crucial before choosing the type of test to apply to it. When data is normally distributed, parametric tests are conducted; however, if the data has a non-normal distribution, non-parametric testing is selected. Using tools like histograms, Q-Q plots, and other graphical representations of the values, we test the normality of the data distribution. Non-parametric testing is also employed on nominal, ordinal, and discrete types of data. Continuous data can be analyzed using both parametric and non-parametric tests.

- Study goals and objectives: The kind of statistical tests to use depends on the goal and objectives of a study. Depending on what the student wishes to accomplish before submitting the dissertation, our data analysis services may employ statistical tests, including regression analysis, chi-squared, t-tests, ANOVA, as well as correlations.
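The two factors above can be sketched as a small lookup. This is a deliberately simplified illustration for comparing independent groups on a single outcome, not a complete decision procedure; the function name `suggest_test` and its arguments are hypothetical.

```python
def suggest_test(outcome_type, groups, normal=True):
    """Suggest a common test for comparing independent groups on one outcome.

    outcome_type: "continuous", "ordinal", or "nominal"
    groups: number of independent groups being compared (2 or more)
    normal: whether a continuous outcome looks roughly normally distributed
    """
    if groups < 2:
        raise ValueError("need at least two groups to compare")
    if outcome_type == "nominal":
        return "chi-squared test"
    # Parametric tests assume a continuous, roughly normal outcome.
    parametric = outcome_type == "continuous" and normal
    if groups == 2:
        return "independent-samples t-test" if parametric else "Mann-Whitney U test"
    return "one-way ANOVA" if parametric else "Kruskal-Wallis test"


print(suggest_test("continuous", 2, normal=True))
print(suggest_test("continuous", 3, normal=False))
print(suggest_test("nominal", 2))
```

A real study may need paired or repeated-measures variants, so treat the returned name as a starting point to discuss with your supervisor.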

2. Preparing Your Data for Statistical Analysis

Before you start statistically analyzing your data, there are a few things you need to do, regardless of whether they are on paper or in a computer file (or both).

- First, ensure they don’t contain any information that might be used to identify specific participants.

- Secondly, make sure they are stored in a secure location. Unless the information is extremely sensitive, a secured space or computer with a password is generally sufficient. Make copies of your data or create backup files, and store them in another safe place, at least until the work is finished.

- The next step is to verify that your raw data are complete and seem to have been recorded appropriately. You might discover at this point that there are unreadable, missing, or clearly misunderstood responses. You must determine whether these issues are severe enough to render a participant’s data useless. You might need to remove that participant’s data from the analysis if details about the key independent or dependent variable are absent or if numerous responses are missing or questionable. Don’t throw away or erase any data that you decide to exclude, because you or another researcher could need to access them in the future. Set them aside, and make notes on why you chose to do so, because you will need to report these exclusions.
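The checks in this list can be sketched in a few lines of Python. The records, field names, and the `screen` helper below are hypothetical; the sketch assumes that `None` marks a missing or unreadable response and that `group` and `score` are the key independent and dependent variables.

```python
# Hypothetical raw records; None marks a missing or unreadable response.
raw = [
    {"name": "A. Smith", "group": "control", "score": 14, "age": 23},
    {"name": "B. Jones", "group": "treatment", "score": None, "age": 31},
    {"name": "C. Lee", "group": "treatment", "score": 19, "age": None},
]
KEY_VARIABLES = ("group", "score")  # assumed independent and dependent variable


def screen(records):
    """Split records into analyzable and excluded sets, with names removed."""
    kept, excluded = [], []
    for number, record in enumerate(records, start=1):
        # Replace the identifying field with an arbitrary participant ID.
        clean = {key: value for key, value in record.items() if key != "name"}
        clean["participant_id"] = f"P{number:03d}"
        missing = [key for key in KEY_VARIABLES if clean[key] is None]
        if missing:
            # Keep the record and the reason so the exclusion can be reported.
            excluded.append({**clean, "reason": "missing " + ", ".join(missing)})
        else:
            kept.append(clean)
    return kept, excluded


kept, excluded = screen(raw)
print(len(kept), "kept;", len(excluded), "excluded")
for row in excluded:
    print(row["participant_id"], row["reason"])
```

Excluded records are set aside with a reason rather than deleted, which matches the advice above about reporting exclusions later.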

You can now prepare your data for statistical analysis if it already exists in a computer database or enter it in a spreadsheet program if it isn’t. Prepare your data for analysis or create your data file using the appropriate software, such as SPSS, SAS, or R.

3. Conducting the Statistical Analysis

You have reached the stage of statistical analysis where all of the data gathered up to this point will be interpreted. Presenting large quantities of data in a cohesive way is challenging. To address this problem, think about all your options, including tables, graphs, diagrams, and charts.

Tables aid in the concise presentation of both quantitative and qualitative information. Keep your reader in mind at all times when presenting data. Anything that is obvious to you might not be to your reader. Therefore, ask yourself whether someone who is unfamiliar with your research and conclusions would be able to understand your data presentation style. If “No” is the response, you might want to reconsider your presentation.

Your dissertation’s analysis section could become unorganized and messy after providing a lot of data. If it does, you should add an appendix to prevent this. Include any information that is difficult for you to incorporate inside the main body text in the dissertation’s appendix section. Additionally, add data sheets, questions, and records of interviews and focus groups to the appendix. The statistical analysis and quotes from interviews, on the other hand, must be included in the dissertation.

4. Validity and Reliability Of Statistical Analysis

The idea that the information provided is self-explanatory is a prevalent one. The majority of students who use statistics and citations believe that this is sufficient for explaining everything. It is not enough. Instead of just repeating everything, you should study the information and decide whether the facts support or contradict your viewpoints. Indicate whether the data you use are valid and reliable.

Explain the concepts in full detail and critically evaluate each viewpoint, taking care to address any potential weak points. If you want to give your research more credibility, you should always talk about the weaknesses and strengths of your data.

Importance of Statistical Analysis in a Dissertation

Statistical analysis in a dissertation holds immense importance for several reasons. Firstly, it enhances the credibility of research findings by providing empirical evidence to support or refute research hypotheses. By applying statistical techniques, researchers can quantify the strength and direction of relationships, allowing for objective interpretations of the data.

Secondly, statistical analysis validates the research hypotheses by testing them against the collected data. It helps researchers determine whether the observed differences or relationships are statistically significant, thereby providing a basis for making valid inferences about the population under study.
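To make the idea of statistical significance concrete, here is a minimal sketch of an exact permutation test for a difference in means, using only the standard library. The scores are invented, and the permutation test is just one possible technique chosen for illustration; a dissertation would normally use the test selected for its own design.

```python
from itertools import combinations
from statistics import mean

control = [12, 14, 11, 13, 15]     # hypothetical scores
treatment = [16, 18, 17, 15, 19]   # hypothetical scores


def permutation_p_value(a, b):
    """Exact two-sided permutation test for a difference in group means."""
    pooled = a + b
    total = sum(pooled)
    observed = abs(mean(a) - mean(b))
    extreme = count = 0
    # Try every way of relabeling the pooled scores into two groups.
    for indices in combinations(range(len(pooled)), len(a)):
        sum_a = sum(pooled[i] for i in indices)
        difference = abs(sum_a / len(a) - (total - sum_a) / len(b))
        count += 1
        # Small tolerance guards against floating-point rounding.
        if difference >= observed - 1e-12:
            extreme += 1
    return extreme / count


p = permutation_p_value(control, treatment)
print(f"p = {p:.4f}")
```

The p-value is the share of relabelings that produce a difference at least as large as the observed one. A small value suggests the observed difference is unlikely to arise from random group assignment alone.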

Choosing the appropriate statistical methods

Before delving into the analysis, it is crucial to choose the appropriate statistical methods based on the research objectives and the type of data being collected. There are various types of statistical methods, each serving a specific purpose. Some commonly used methods in dissertations include:

Descriptive statistics

Descriptive statistics involve summarizing and presenting data in a meaningful way. They include measures such as the mean, median, mode, standard deviation, and frequency distributions. Descriptive statistics provide an overview of the data, facilitating an initial understanding of its characteristics.
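As a minimal sketch, the measures named above can be computed with Python's built-in `statistics` module. The `scores` list is a made-up sample used only for illustration.

```python
import statistics
from collections import Counter

scores = [12, 15, 15, 18, 20, 15, 22, 18]  # hypothetical sample

summary = {
    "mean": statistics.mean(scores),
    "median": statistics.median(scores),
    "mode": statistics.mode(scores),
    "sd": statistics.stdev(scores),  # sample standard deviation (n - 1)
}
frequencies = Counter(scores)  # frequency distribution of each value

for name, value in summary.items():
    print(f"{name}: {value:.2f}")
for value, count in sorted(frequencies.items()):
    print(value, "#" * count)
```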

Inferential statistics

Inferential statistics aim to make inferences about a population based on sample data. They involve hypothesis testing, confidence intervals, and regression analysis. Inferential statistics allow researchers to draw conclusions beyond the immediate sample and generalize their findings to the larger population.
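As one illustration of inference, the sketch below computes a confidence interval for a population mean from a sample. It assumes a normal approximation (a z critical value), which is a simplification; for a sample this small, a t-based interval would be somewhat wider. The data and the helper name `mean_confidence_interval` are hypothetical.

```python
import math
import statistics

sample = [72, 68, 75, 71, 69, 74, 70, 73, 77, 66]  # hypothetical scores


def mean_confidence_interval(values, confidence=0.95):
    """Normal-approximation confidence interval for the population mean."""
    mean = statistics.mean(values)
    standard_error = statistics.stdev(values) / math.sqrt(len(values))
    # Two-sided critical value, about 1.96 for 95% confidence.
    z = statistics.NormalDist().inv_cdf(0.5 + confidence / 2)
    return mean - z * standard_error, mean + z * standard_error


low, high = mean_confidence_interval(sample)
print(f"mean = {statistics.mean(sample):.2f}, 95% CI = ({low:.2f}, {high:.2f})")
```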

Multivariate analysis

Multivariate analysis deals with analyzing data that involves multiple variables simultaneously. Techniques such as multiple regression, factor analysis, and cluster analysis fall under this category. Multivariate analysis helps researchers uncover complex relationships and patterns within the data.

Collecting and organizing data

Once the appropriate statistical methods have been determined, the next step is to collect and organize the data for analysis. Data collection methods can vary depending on the nature of the research and can include surveys, interviews, observations, or secondary data sources. It is important to ensure the data collection process is rigorous and reliable to yield accurate results.

After collecting the data, it is crucial to clean and validate it. This involves checking for missing values, outliers, and inconsistencies. By addressing these issues, researchers can ensure the quality and integrity of the data, minimizing the potential for biased or misleading results.
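A minimal sketch of these checks, assuming missing values are recorded as `None` and using Tukey's 1.5 × IQR rule as one common (but not the only) way to flag outliers. The `responses` list is invented for illustration.

```python
import statistics

responses = [34, 29, None, 31, 30, 120, 28, 33, None, 32]  # hypothetical ages

# Count and set aside missing values before computing anything else.
present = [value for value in responses if value is not None]
missing_count = len(responses) - len(present)

# Tukey's rule: flag values more than 1.5 * IQR outside the quartiles.
q1, _, q3 = statistics.quantiles(present, n=4)
iqr = q3 - q1
lower, upper = q1 - 1.5 * iqr, q3 + 1.5 * iqr
outliers = [value for value in present if value < lower or value > upper]

print("missing:", missing_count)
print("flagged as possible outliers:", outliers)
```

A flagged value is a prompt to check the original record, not an automatic deletion; it may be a data entry error or a genuine extreme response.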

Preparing data for analysis

Before conducting statistical analysis, the data often requires preparation to ensure compatibility with the chosen statistical software and methods. This preparation phase involves data coding and entry, as well as data transformation and normalization.

Data coding and entry involve assigning numerical codes or categories to the collected data to facilitate analysis. This step ensures that the data is in a format that can be readily processed by statistical software.
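For example, text answers to a Likert-type question can be converted to numeric codes with a codebook. The codebook and the helper `code_responses` below are hypothetical; the sketch assumes unrecognized answers should be left as missing (`None`) rather than guessed.

```python
# Hypothetical codebook for a five-point Likert item.
LIKERT_CODES = {
    "strongly disagree": 1,
    "disagree": 2,
    "neutral": 3,
    "agree": 4,
    "strongly agree": 5,
}


def code_responses(responses, codebook):
    """Convert text responses to numeric codes; unknown answers become None."""
    return [codebook.get(answer.strip().lower()) for answer in responses]


raw_answers = ["Agree", "neutral ", "Strongly Agree", "n/a"]
print(code_responses(raw_answers, LIKERT_CODES))
```

Keeping the codebook in one place also documents the coding scheme, which is useful when describing your methodology.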

Data transformation and normalization are performed to standardize the data and make it suitable for analysis. This may include logarithmic transformations, scaling variables, or normalizing distributions. By transforming and normalizing the data, researchers can address issues such as nonlinearity or heteroscedasticity.
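The sketch below shows two of the steps mentioned above, a logarithmic transformation followed by rescaling to z-scores. The `incomes` data and the `standardize` helper are hypothetical, and the log transformation assumes all values are positive.

```python
import math
import statistics

incomes = [21000, 25000, 32000, 48000, 150000]  # hypothetical, right-skewed

# Log transformation compresses large values and reduces right skew.
log_incomes = [math.log(value) for value in incomes]


def standardize(values):
    """Rescale values to z-scores (mean 0, sample standard deviation 1)."""
    mean = statistics.mean(values)
    sd = statistics.stdev(values)
    return [(value - mean) / sd for value in values]


z_scores = standardize(log_incomes)
print([round(z, 2) for z in z_scores])
```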

Conducting statistical analysis

Once the data is prepared, researchers can proceed with conducting the actual statistical analysis. It is essential to choose the right statistical software that aligns with the chosen methods and is suitable for handling the dataset.

Before diving into complex analyses, it is advisable to perform exploratory data analysis (EDA). EDA involves examining the data visually and descriptively to gain insights, detect patterns, and identify potential outliers. This step helps researchers understand the data better and make informed decisions about subsequent analyses.
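Even without a plotting package, a quick text histogram gives a first look at the shape of a variable. This is a minimal sketch with invented scores; `text_histogram` is a hypothetical helper, and in practice you would likely use the charting tools in your statistical software.

```python
from collections import Counter

scores = [52, 57, 61, 63, 64, 68, 70, 71, 73, 75, 78, 79, 84, 98]  # hypothetical


def text_histogram(values, bin_width=10):
    """Return one line per bin, labeled with the bin's integer range."""
    bins = Counter((value // bin_width) * bin_width for value in values)
    lines = []
    # Walk every bin from the lowest to the highest so empty bins still show.
    for start in range(min(bins), max(bins) + bin_width, bin_width):
        label = f"{start:>3}-{start + bin_width - 1:<3}"
        lines.append(f"{label} {'#' * bins[start]}")
    return lines


print("\n".join(text_histogram(scores)))
```

A display like this makes it easy to spot where most values cluster and whether any value sits far from the rest.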